copy files from SuLvXiangXin


- .gitignore +15 -0

- LICENSE +202 -0

- README.md +252 -3

- configs/360.gin +15 -0

- configs/360_glo.gin +15 -0

- configs/blender.gin +15 -0

- configs/blender_refnerf.gin +41 -0

- configs/llff_256.gin +19 -0

- configs/llff_512.gin +19 -0

- configs/llff_raw.gin +73 -0

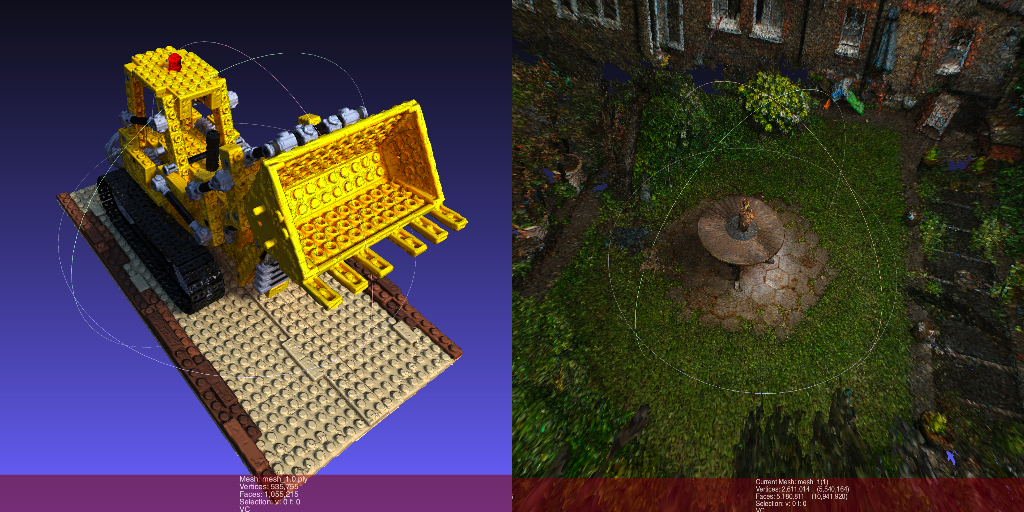

- configs/multi360.gin +5 -0

- eval.py +307 -0

- extract.py +638 -0

- gridencoder/__init__.py +1 -0

- gridencoder/backend.py +40 -0

- gridencoder/grid.py +198 -0

- gridencoder/setup.py +50 -0

- gridencoder/src/bindings.cpp +9 -0

- gridencoder/src/gridencoder.cu +645 -0

- gridencoder/src/gridencoder.h +17 -0

- internal/camera_utils.py +673 -0

- internal/checkpoints.py +38 -0

- internal/configs.py +177 -0

- internal/coord.py +225 -0

- internal/datasets.py +1016 -0

- internal/geopoly.py +108 -0

- internal/image.py +126 -0

- internal/math.py +133 -0

- internal/models.py +740 -0

- internal/pycolmap/.gitignore +2 -0

- internal/pycolmap/LICENSE.txt +21 -0

- internal/pycolmap/README.md +4 -0

- internal/pycolmap/pycolmap/__init__.py +5 -0

- internal/pycolmap/pycolmap/camera.py +259 -0

- internal/pycolmap/pycolmap/database.py +340 -0

- internal/pycolmap/pycolmap/image.py +35 -0

- internal/pycolmap/pycolmap/rotation.py +324 -0

- internal/pycolmap/pycolmap/scene_manager.py +670 -0

- internal/pycolmap/tools/colmap_to_nvm.py +69 -0

- internal/pycolmap/tools/delete_images.py +36 -0

- internal/pycolmap/tools/impute_missing_cameras.py +180 -0

- internal/pycolmap/tools/save_cameras_as_ply.py +92 -0

- internal/pycolmap/tools/transform_model.py +48 -0

- internal/pycolmap/tools/write_camera_track_to_bundler.py +60 -0

- internal/pycolmap/tools/write_depthmap_to_ply.py +139 -0

- internal/raw_utils.py +360 -0

- internal/ref_utils.py +174 -0

- internal/render.py +242 -0

- internal/stepfun.py +403 -0

- internal/train_utils.py +263 -0

.gitignore

ADDED

|

@@ -0,0 +1,15 @@

|


| 1 |

+

__pycache__/

|

| 2 |

+

internal/__pycache__/

|

| 3 |

+

tests/__pycache__/

|

| 4 |

+

.DS_Store

|

| 5 |

+

.vscode/

|

| 6 |

+

.idea/

|

| 7 |

+

__MACOSX/

|

| 8 |

+

exp/

|

| 9 |

+

data/

|

| 10 |

+

assets/

|

| 11 |

+

test.py

|

| 12 |

+

test2.py

|

| 13 |

+

*.mp4

|

| 14 |

+

*.ply

|

| 15 |

+

scripts/train_360_debug.sh

|

LICENSE

ADDED

|

@@ -0,0 +1,202 @@

|


| 1 |

+

|

| 2 |

+

Apache License

|

| 3 |

+

Version 2.0, January 2004

|

| 4 |

+

http://www.apache.org/licenses/

|

| 5 |

+

|

| 6 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 7 |

+

|

| 8 |

+

1. Definitions.

|

| 9 |

+

|

| 10 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 11 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 12 |

+

|

| 13 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 14 |

+

the copyright owner that is granting the License.

|

| 15 |

+

|

| 16 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 17 |

+

other entities that control, are controlled by, or are under common

|

| 18 |

+

control with that entity. For the purposes of this definition,

|

| 19 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 20 |

+

direction or management of such entity, whether by contract or

|

| 21 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 22 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 23 |

+

|

| 24 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 25 |

+

exercising permissions granted by this License.

|

| 26 |

+

|

| 27 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 28 |

+

including but not limited to software source code, documentation

|

| 29 |

+

source, and configuration files.

|

| 30 |

+

|

| 31 |

+

"Object" form shall mean any form resulting from mechanical

|

| 32 |

+

transformation or translation of a Source form, including but

|

| 33 |

+

not limited to compiled object code, generated documentation,

|

| 34 |

+

and conversions to other media types.

|

| 35 |

+

|

| 36 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 37 |

+

Object form, made available under the License, as indicated by a

|

| 38 |

+

copyright notice that is included in or attached to the work

|

| 39 |

+

(an example is provided in the Appendix below).

|

| 40 |

+

|

| 41 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 42 |

+

form, that is based on (or derived from) the Work and for which the

|

| 43 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 44 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 45 |

+

of this License, Derivative Works shall not include works that remain

|

| 46 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 47 |

+

the Work and Derivative Works thereof.

|

| 48 |

+

|

| 49 |

+

"Contribution" shall mean any work of authorship, including

|

| 50 |

+

the original version of the Work and any modifications or additions

|

| 51 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 52 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 53 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 54 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 55 |

+

means any form of electronic, verbal, or written communication sent

|

| 56 |

+

to the Licensor or its representatives, including but not limited to

|

| 57 |

+

communication on electronic mailing lists, source code control systems,

|

| 58 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 59 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 60 |

+

excluding communication that is conspicuously marked or otherwise

|

| 61 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 62 |

+

|

| 63 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 64 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 65 |

+

subsequently incorporated within the Work.

|

| 66 |

+

|

| 67 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 68 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 69 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 70 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 71 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 72 |

+

Work and such Derivative Works in Source or Object form.

|

| 73 |

+

|

| 74 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 75 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 76 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 77 |

+

(except as stated in this section) patent license to make, have made,

|

| 78 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 79 |

+

where such license applies only to those patent claims licensable

|

| 80 |

+

by such Contributor that are necessarily infringed by their

|

| 81 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 82 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 83 |

+

institute patent litigation against any entity (including a

|

| 84 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 85 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 86 |

+

or contributory patent infringement, then any patent licenses

|

| 87 |

+

granted to You under this License for that Work shall terminate

|

| 88 |

+

as of the date such litigation is filed.

|

| 89 |

+

|

| 90 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 91 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 92 |

+

modifications, and in Source or Object form, provided that You

|

| 93 |

+

meet the following conditions:

|

| 94 |

+

|

| 95 |

+

(a) You must give any other recipients of the Work or

|

| 96 |

+

Derivative Works a copy of this License; and

|

| 97 |

+

|

| 98 |

+

(b) You must cause any modified files to carry prominent notices

|

| 99 |

+

stating that You changed the files; and

|

| 100 |

+

|

| 101 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 102 |

+

that You distribute, all copyright, patent, trademark, and

|

| 103 |

+

attribution notices from the Source form of the Work,

|

| 104 |

+

excluding those notices that do not pertain to any part of

|

| 105 |

+

the Derivative Works; and

|

| 106 |

+

|

| 107 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 108 |

+

distribution, then any Derivative Works that You distribute must

|

| 109 |

+

include a readable copy of the attribution notices contained

|

| 110 |

+

within such NOTICE file, excluding those notices that do not

|

| 111 |

+

pertain to any part of the Derivative Works, in at least one

|

| 112 |

+

of the following places: within a NOTICE text file distributed

|

| 113 |

+

as part of the Derivative Works; within the Source form or

|

| 114 |

+

documentation, if provided along with the Derivative Works; or,

|

| 115 |

+

within a display generated by the Derivative Works, if and

|

| 116 |

+

wherever such third-party notices normally appear. The contents

|

| 117 |

+

of the NOTICE file are for informational purposes only and

|

| 118 |

+

do not modify the License. You may add Your own attribution

|

| 119 |

+

notices within Derivative Works that You distribute, alongside

|

| 120 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 121 |

+

that such additional attribution notices cannot be construed

|

| 122 |

+

as modifying the License.

|

| 123 |

+

|

| 124 |

+

You may add Your own copyright statement to Your modifications and

|

| 125 |

+

may provide additional or different license terms and conditions

|

| 126 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 127 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 128 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 129 |

+

the conditions stated in this License.

|

| 130 |

+

|

| 131 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 132 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 133 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 134 |

+

this License, without any additional terms or conditions.

|

| 135 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 136 |

+

the terms of any separate license agreement you may have executed

|

| 137 |

+

with Licensor regarding such Contributions.

|

| 138 |

+

|

| 139 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 140 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 141 |

+

except as required for reasonable and customary use in describing the

|

| 142 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 143 |

+

|

| 144 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 145 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 146 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 147 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 148 |

+

implied, including, without limitation, any warranties or conditions

|

| 149 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 150 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 151 |

+

appropriateness of using or redistributing the Work and assume any

|

| 152 |

+

risks associated with Your exercise of permissions under this License.

|

| 153 |

+

|

| 154 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 155 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 156 |

+

unless required by applicable law (such as deliberate and grossly

|

| 157 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 158 |

+

liable to You for damages, including any direct, indirect, special,

|

| 159 |

+

incidental, or consequential damages of any character arising as a

|

| 160 |

+

result of this License or out of the use or inability to use the

|

| 161 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 162 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 163 |

+

other commercial damages or losses), even if such Contributor

|

| 164 |

+

has been advised of the possibility of such damages.

|

| 165 |

+

|

| 166 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 167 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 168 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 169 |

+

or other liability obligations and/or rights consistent with this

|

| 170 |

+

License. However, in accepting such obligations, You may act only

|

| 171 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 172 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 173 |

+

defend, and hold each Contributor harmless for any liability

|

| 174 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 175 |

+

of your accepting any such warranty or additional liability.

|

| 176 |

+

|

| 177 |

+

END OF TERMS AND CONDITIONS

|

| 178 |

+

|

| 179 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 180 |

+

|

| 181 |

+

To apply the Apache License to your work, attach the following

|

| 182 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 183 |

+

replaced with your own identifying information. (Don't include

|

| 184 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 185 |

+

comment syntax for the file format. We also recommend that a

|

| 186 |

+

file or class name and description of purpose be included on the

|

| 187 |

+

same "printed page" as the copyright notice for easier

|

| 188 |

+

identification within third-party archives.

|

| 189 |

+

|

| 190 |

+

Copyright [yyyy] [name of copyright owner]

|

| 191 |

+

|

| 192 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 193 |

+

you may not use this file except in compliance with the License.

|

| 194 |

+

You may obtain a copy of the License at

|

| 195 |

+

|

| 196 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 197 |

+

|

| 198 |

+

Unless required by applicable law or agreed to in writing, software

|

| 199 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 200 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 201 |

+

See the License for the specific language governing permissions and

|

| 202 |

+

limitations under the License.

|

README.md

CHANGED

|

@@ -1,3 +1,252 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|


| 1 |

+

# ZipNeRF

|

| 2 |

+

|

| 3 |

+

An unofficial PyTorch implementation of

|

| 4 |

+

"Zip-NeRF: Anti-Aliased Grid-Based Neural Radiance Fields"

|

| 5 |

+

[https://arxiv.org/abs/2304.06706](https://arxiv.org/abs/2304.06706).

|

| 6 |

+

This work is based on [multinerf](https://github.com/google-research/multinerf), so the features of Ref-NeRF, RawNeRF, and Mip-NeRF 360 are also available.

|

| 7 |

+

|

| 8 |

+

## News

|

| 9 |

+

- (6.22) Add mesh extraction via TSDF; add [gradient scaling](https://gradient-scaling.github.io/) to reduce near-plane floaters.

|

| 10 |

+

- (5.26) Implement the latest version of ZipNeRF [https://arxiv.org/abs/2304.06706](https://arxiv.org/abs/2304.06706).

|

| 11 |

+

- (5.22) Add mesh extraction; add a logging and checkpointing system.

|

| 12 |

+

|

| 13 |

+

## Results

|

| 14 |

+

New results (5.27):

|

| 15 |

+

|

| 16 |

+

360_v2:

|

| 17 |

+

|

| 18 |

+

https://github.com/SuLvXiangXin/zipnerf-pytorch/assets/83005605/2b276e48-2dc4-4508-8441-e90ec963f7d9

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

360_v2_glo (fewer floaters, but worse metrics):

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

https://github.com/SuLvXiangXin/zipnerf-pytorch/assets/83005605/bddb5610-2a4f-4981-8e17-71326a24d291

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

Mesh results (5.27):

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

Mip-NeRF 360 (PSNR):

|

| 38 |

+

|

| 39 |

+

| | bicycle | garden | stump | room | counter | kitchen | bonsai |

|

| 40 |

+

|:---------:|:-------:|:------:|:-----:|:-----:|:-------:|:-------:|:------:|

|

| 41 |

+

| Paper | 25.80 | 28.20 | 27.55 | 32.65 | 29.38 | 32.50 | 34.46 |

|

| 42 |

+

| This repo | 25.44 | 27.98 | 26.75 | 32.13 | 29.10 | 32.63 | 34.20 |

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

Blender (PSNR):

|

| 46 |

+

|

| 47 |

+

| | chair | drums | ficus | hotdog | lego | materials | mic | ship |

|

| 48 |

+

|:---------:|:-----:|:-----:|:-----:|:------:|:-----:|:---------:|:-----:|:-----:|

|

| 49 |

+

| Paper | 34.84 | 25.84 | 33.90 | 37.14 | 34.84 | 31.66 | 35.15 | 31.38 |

|

| 50 |

+

| This repo | 35.26 | 25.51 | 32.66 | 36.56 | 35.04 | 29.43 | 34.93 | 31.38 |

|
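As a rough summary of the two tables above, the per-scene PSNR values can be averaged to see how far this repo is from the paper on each dataset. The snippet below is a minimal sketch that only reuses the numbers listed in the tables; the variable and function names are illustrative and not part of the repository.

```python
# Per-scene PSNR values copied from the tables above.
mipnerf360 = {
    "paper": [25.80, 28.20, 27.55, 32.65, 29.38, 32.50, 34.46],
    "repo": [25.44, 27.98, 26.75, 32.13, 29.10, 32.63, 34.20],
}
blender = {
    "paper": [34.84, 25.84, 33.90, 37.14, 34.84, 31.66, 35.15, 31.38],
    "repo": [35.26, 25.51, 32.66, 36.56, 35.04, 29.43, 34.93, 31.38],
}


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


def mean_gap(table):
    """Average PSNR of the paper minus average PSNR of this repo, in dB."""
    return mean(table["paper"]) - mean(table["repo"])


for name, table in [("Mip-NeRF 360", mipnerf360), ("Blender", blender)]:
    print(
        f"{name}: paper {mean(table['paper']):.2f}, "
        f"repo {mean(table['repo']):.2f}, gap {mean_gap(table):.2f} dB"
    )
```

A positive gap means the paper's average is higher; per-scene differences (for example `materials` in Blender) can be much larger than the mean suggests.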

| 51 |

+

|

| 52 |

+

For the Mip-NeRF 360 dataset, the model is trained with a downsample factor of 4 for outdoor scenes and 2 for indoor scenes (same as in the paper).

|

| 53 |

+

Training is about 1.5x slower than in the paper (1.5 hours on 8 A6000 GPUs).

|

| 54 |

+

|

| 55 |

+

The hash decay loss seems to have little effect (?), as many floaters can still be found in the final results of both experiments (especially in Blender).

|

| 56 |

+

|

| 57 |

+

## Install

|

| 58 |

+

|

| 59 |

+

```

|

| 60 |

+

# Clone the repo.

|

| 61 |

+

git clone https://github.com/SuLvXiangXin/zipnerf-pytorch.git

|

| 62 |

+

cd zipnerf-pytorch

|

| 63 |

+

|

| 64 |

+

# Make a conda environment.

|

| 65 |

+

conda create --name zipnerf python=3.9

|

| 66 |

+

conda activate zipnerf

|

| 67 |

+

|

| 68 |

+

# Install requirements.

|

| 69 |

+

pip install -r requirements.txt

|

| 70 |

+

|

| 71 |

+

# Install other extensions

|

| 72 |

+

pip install ./gridencoder

|

| 73 |

+

|

| 74 |

+

# Install nvdiffrast (optional, for textured mesh)

|

| 75 |

+

git clone https://github.com/NVlabs/nvdiffrast

|

| 76 |

+

pip install ./nvdiffrast

|

| 77 |

+

|

| 78 |

+

# Install a specific cuda version of torch_scatter

|

| 79 |

+

# see more detail at https://github.com/rusty1s/pytorch_scatter

|

| 80 |

+

CUDA=cu117

|

| 81 |

+

pip install torch-scatter -f https://data.pyg.org/whl/torch-2.0.0+${CUDA}.html

|

| 82 |

+

```

|

| 83 |

+

|

| 84 |

+

## Dataset

|

| 85 |

+

[mipnerf360](http://storage.googleapis.com/gresearch/refraw360/360_v2.zip)

|

| 86 |

+

|

| 87 |

+

[refnerf](https://storage.googleapis.com/gresearch/refraw360/ref.zip)

|

| 88 |

+

|

| 89 |

+

[nerf_synthetic](https://drive.google.com/drive/folders/128yBriW1IG_3NJ5Rp7APSTZsJqdJdfc1)

|

| 90 |

+

|

| 91 |

+

[nerf_llff_data](https://drive.google.com/drive/folders/128yBriW1IG_3NJ5Rp7APSTZsJqdJdfc1)

|

| 92 |

+

|

| 93 |

+

```

|

| 94 |

+

mkdir data

|

| 95 |

+

cd data

|

| 96 |

+

|

| 97 |

+

# e.g. mipnerf360 data

|

| 98 |

+

wget http://storage.googleapis.com/gresearch/refraw360/360_v2.zip

|

| 99 |

+

unzip 360_v2.zip

|

| 100 |

+

```

|

| 101 |

+

|

| 102 |

+

## Train

|

| 103 |

+

```

|

| 104 |

+

# Configure your training (DDP? fp16? ...)

|

| 105 |

+

# see https://huggingface.co/docs/accelerate/index for details

|

| 106 |

+

accelerate config

|

| 107 |

+

|

| 108 |

+

# Where your data is

|

| 109 |

+

DATA_DIR=data/360_v2/bicycle

|

| 110 |

+

EXP_NAME=360_v2/bicycle

|

| 111 |

+

|

| 112 |

+

# Experiment will be conducted under "exp/${EXP_NAME}" folder

|

| 113 |

+

# "--gin_configs=configs/360.gin" can be seen as a default config

|

| 114 |

+

# and you can add specific settings using --gin_bindings="..."

|

| 115 |

+

accelerate launch train.py \

|

| 116 |

+

--gin_configs=configs/360.gin \

|

| 117 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 118 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 119 |

+

--gin_bindings="Config.factor = 4"

|

| 120 |

+

|

| 121 |

+

# or you can also run without accelerate (without DDP)

|

| 122 |

+

CUDA_VISIBLE_DEVICES=0 python train.py \

|

| 123 |

+

--gin_configs=configs/360.gin \

|

| 124 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 125 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 126 |

+

--gin_bindings="Config.factor = 4"

|

| 127 |

+

|

| 128 |

+

# alternatively you can use an example training script

|

| 129 |

+

bash scripts/train_360.sh

|

| 130 |

+

|

| 131 |

+

# blender dataset

|

| 132 |

+

bash scripts/train_blender.sh

|

| 133 |

+

|

| 134 |

+

# metrics, rendered images, etc. can be viewed through TensorBoard

|

| 135 |

+

tensorboard --logdir "exp/${EXP_NAME}"

|

| 136 |

+

|

| 137 |

+

```

|
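The training command above can also be assembled programmatically, which is handy when sweeping over scenes. The sketch below is an illustration under stated assumptions: `build_train_command` and `SCENE_FACTORS` are hypothetical helpers that do not exist in this repo; the flags and `Config.*` binding names are the ones used in the commands above, and the outdoor/indoor factors follow the note in the Results section.

```python
import shlex

# Downsample factors per scene: 4 for outdoor, 2 for indoor (see Results).
# The scene grouping is an assumption based on the usual 360_v2 split.
SCENE_FACTORS = {
    "bicycle": 4, "garden": 4, "stump": 4,
    "room": 2, "counter": 2, "kitchen": 2, "bonsai": 2,
}


def build_train_command(scene, data_root="data/360_v2", config="configs/360.gin"):
    """Return the argv list for training one scene (hypothetical helper)."""
    bindings = {
        "Config.data_dir": f"'{data_root}/{scene}'",
        "Config.exp_name": f"'360_v2/{scene}'",
        "Config.factor": str(SCENE_FACTORS[scene]),
    }
    cmd = ["accelerate", "launch", "train.py", f"--gin_configs={config}"]
    cmd += [f"--gin_bindings={key} = {value}" for key, value in bindings.items()]
    return cmd


if __name__ == "__main__":
    for scene in SCENE_FACTORS:
        # shlex.join quotes each argument so the line can be pasted into a shell.
        print(shlex.join(build_train_command(scene)))
```

Returning a list rather than a single string keeps the arguments unambiguous; the list could be passed to `subprocess.run` directly instead of being printed.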

| 138 |

+

|

| 139 |

+

## Render

|

| 140 |

+

Rendering results can be found in the directory `exp/${EXP_NAME}/render`

|

| 141 |

+

```

|

| 142 |

+

accelerate launch render.py \

|

| 143 |

+

--gin_configs=configs/360.gin \

|

| 144 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 145 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 146 |

+

--gin_bindings="Config.render_path = True" \

|

| 147 |

+

--gin_bindings="Config.render_path_frames = 480" \

|

| 148 |

+

--gin_bindings="Config.render_video_fps = 60" \

|

| 149 |

+

--gin_bindings="Config.factor = 4"

|

| 150 |

+

|

| 151 |

+

# alternatively you can use an example rendering script

|

| 152 |

+

bash scripts/render_360.sh

|

| 153 |

+

```

|

| 154 |

+

## Evaluate

|

| 155 |

+

Evaluation results can be found in the directory `exp/${EXP_NAME}/test_preds`

|

| 156 |

+

```

|

| 157 |

+

# using the same exp_name as in training

|

| 158 |

+

accelerate launch eval.py \

|

| 159 |

+

--gin_configs=configs/360.gin \

|

| 160 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 161 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 162 |

+

--gin_bindings="Config.factor = 4"

|

| 163 |

+

|

| 164 |

+

|

| 165 |

+

# alternatively you can use an example evaluating script

|

| 166 |

+

bash scripts/eval_360.sh

|

| 167 |

+

```

|

| 168 |

+

|

| 169 |

+

## Extract mesh

|

| 170 |

+

Mesh results can be found in the directory `exp/${EXP_NAME}/mesh`

|

| 171 |

+

```

|

| 172 |

+

# more configuration can be found in internal/configs.py

|

| 173 |

+

accelerate launch extract.py \

|

| 174 |

+

--gin_configs=configs/360.gin \

|

| 175 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 176 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 177 |

+

--gin_bindings="Config.factor = 4"

|

| 178 |

+

# --gin_bindings="Config.mesh_radius = 1" # (optional) smaller for more details e.g. 0.2 in bicycle scene

|

| 179 |

+

# --gin_bindings="Config.isosurface_threshold = 20" # (optional) empirical value

|

| 180 |

+

# --gin_bindings="Config.mesh_voxels=134217728" # (optional) number of voxels used to extract the mesh, e.g. 134217728 equals 512**3. Smaller values may avoid out-of-memory errors

|

| 181 |

+

# --gin_bindings="Config.vertex_color = True" # (optional) save the mesh with vertex colors instead of a texture atlas (the atlas is much slower but has more detail)

|

| 182 |

+

# --gin_bindings="Config.vertex_projection = True" # (optional) use projection for vertex color

|

| 183 |

+

|

| 184 |

+

# or extract the mesh using the TSDF method

|

| 185 |

+

accelerate launch extract.py \

|

| 186 |

+

--gin_configs=configs/360.gin \

|

| 187 |

+

--gin_bindings="Config.data_dir = '${DATA_DIR}'" \

|

| 188 |

+

--gin_bindings="Config.exp_name = '${EXP_NAME}'" \

|

| 189 |

+

--gin_bindings="Config.factor = 4"

|

| 190 |

+

|

| 191 |

+

# alternatively you can use an example script

|

| 192 |

+

bash scripts/extract_360.sh

|

| 193 |

+

```

|
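`Config.mesh_voxels` is given as a total voxel count, so it can help to derive it from a per-axis resolution when lowering it to save memory. A small sketch of that arithmetic follows; `voxels_for_resolution` and `mesh_voxels_binding` are hypothetical helpers, and the assumption that the grid is cubic comes only from the `512**3` example in the comment above.

```python
def voxels_for_resolution(resolution: int) -> int:
    """Total voxel count of a cubic grid with `resolution` cells per axis."""
    return resolution ** 3


def mesh_voxels_binding(resolution: int) -> str:
    """Format the count as a gin binding (flag and field names from the README)."""
    count = voxels_for_resolution(resolution)
    return f'--gin_bindings="Config.mesh_voxels = {count}"'


for res in (512, 384, 256):
    print(res, voxels_for_resolution(res), mesh_voxels_binding(res))
```

Halving the per-axis resolution cuts the voxel count by a factor of eight, which is why a modest reduction in resolution can be enough to fit in memory.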

| 194 |

+

|

| 195 |

+

## OutOfMemory

|

| 196 |

+

You can decrease the total batch size by

|

| 197 |

+

adding e.g. `--gin_bindings="Config.batch_size = 8192"`,

|

| 198 |

+

or decrease the test chunk size by adding e.g. `--gin_bindings="Config.render_chunk_size = 8192"`,

|

| 199 |

+

or use more GPUs by running `accelerate config`.

|

| 200 |

+

|

| 201 |

+

|

| 202 |

+

## Preparing custom data

|

| 203 |

+

More details can be found at https://github.com/google-research/multinerf

|

| 204 |

+

```

|

| 205 |

+

DATA_DIR=my_dataset_dir

|

| 206 |

+

bash scripts/local_colmap_and_resize.sh ${DATA_DIR}

|

| 207 |

+

```

|

| 208 |

+

|

| 209 |

+

## TODO

|

| 210 |

+

- [x] Add MultiScale training and testing

|

| 211 |

+

|

| 212 |

+

## Citation

|

| 213 |

+

```

|

| 214 |

+

@misc{barron2023zipnerf,

|

| 215 |

+

title={Zip-NeRF: Anti-Aliased Grid-Based Neural Radiance Fields},

|

| 216 |

+

author={Jonathan T. Barron and Ben Mildenhall and Dor Verbin and Pratul P. Srinivasan and Peter Hedman},

|

| 217 |

+

year={2023},

|

| 218 |

+

eprint={2304.06706},

|

| 219 |

+

archivePrefix={arXiv},

|

| 220 |

+

primaryClass={cs.CV}

|

| 221 |

+

}

|

| 222 |

+

|

| 223 |

+

@misc{multinerf2022,

|

| 224 |

+

title={{MultiNeRF}: {A} {Code} {Release} for {Mip-NeRF} 360, {Ref-NeRF}, and {RawNeRF}},

|

| 225 |

+

author={Ben Mildenhall and Dor Verbin and Pratul P. Srinivasan and Peter Hedman and Ricardo Martin-Brualla and Jonathan T. Barron},

|

| 226 |

+

year={2022},

|

| 227 |

+

url={https://github.com/google-research/multinerf},

|

| 228 |

+

}

|

| 229 |

+

|

| 230 |

+

@Misc{accelerate,

|

| 231 |

+

title = {Accelerate: Training and inference at scale made simple, efficient and adaptable.},

|

| 232 |

+

author = {Sylvain Gugger, Lysandre Debut, Thomas Wolf, Philipp Schmid, Zachary Mueller, Sourab Mangrulkar},

|

| 233 |

+

howpublished = {\url{https://github.com/huggingface/accelerate}},

|

| 234 |

+

year = {2022}

|

| 235 |

+

}

|

| 236 |

+

|

| 237 |

+

@misc{torch-ngp,

|

| 238 |

+

Author = {Jiaxiang Tang},

|

| 239 |

+

Year = {2022},

|

| 240 |

+

Note = {https://github.com/ashawkey/torch-ngp},

|

| 241 |

+

Title = {Torch-ngp: a PyTorch implementation of instant-ngp}

|

| 242 |

+

}

|

| 243 |

+

```

|

| 244 |

+

|

| 245 |

+

## Acknowledgements

|

| 246 |

+

This work is based on another repo of mine, https://github.com/SuLvXiangXin/multinerf-pytorch,

|

| 247 |

+

which is basically a PyTorch translation of [multinerf](https://github.com/google-research/multinerf).

|

| 248 |

+

|

| 249 |

+

- Thanks to [multinerf](https://github.com/google-research/multinerf) for amazing multinerf(MipNeRF360,RefNeRF,RawNeRF) implementation

|

| 250 |

+

- Thanks to [accelerate](https://github.com/huggingface/accelerate) for distributed training

|

| 251 |

+

- Thanks to [torch-ngp](https://github.com/ashawkey/torch-ngp) for super useful hashencoder

|

| 252 |

+

- Thanks to [Yurui Chen](https://github.com/519401113) for discussing the details of the paper.

|

configs/360.gin

ADDED

@@ -0,0 +1,15 @@

Config.exp_name = 'test'
Config.dataset_loader = 'llff'
Config.near = 0.2
Config.far = 1e6
Config.factor = 4

Model.raydist_fn = 'power_transformation'
Model.opaque_background = True

PropMLP.disable_density_normals = True
PropMLP.disable_rgb = True
PropMLP.grid_level_dim = 1

NerfMLP.disable_density_normals = True
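The setting `Model.raydist_fn = 'power_transformation'` names a ray-distance warp. The sketch below assumes it refers to the power transformation described in the Zip-NeRF paper, P(x, λ) = (|λ − 1| / λ) · ((x / |λ − 1| + 1)^λ − 1), applied as s = P(2t, λ). The value λ = −1.5 is taken from the paper, not from this config, and the function names are hypothetical rather than the ones in `internal/`.

```python
def power_transformation(x: float, lam: float) -> float:
    """P(x, lam) = |lam - 1| / lam * ((x / |lam - 1| + 1) ** lam - 1).

    Valid for lam not in {0, 1}; those limits need separate handling.
    """
    a = abs(lam - 1.0)
    return a / lam * ((x / a + 1.0) ** lam - 1.0)


def inv_power_transformation(y: float, lam: float) -> float:
    """Inverse of power_transformation for the same lam."""
    a = abs(lam - 1.0)
    return a * ((lam * y / a + 1.0) ** (1.0 / lam) - 1.0)


LAM = -1.5  # assumed value, taken from the Zip-NeRF paper


def metric_to_ray_distance(t: float) -> float:
    """Map metric distance t to the warped distance s = P(2t, LAM)."""
    return power_transformation(2.0 * t, LAM)


def ray_distance_to_metric(s: float) -> float:
    """Map warped distance s back to metric distance t."""
    return inv_power_transformation(s, LAM) / 2.0


if __name__ == "__main__":
    for t in (0.2, 1.0, 10.0):
        s = metric_to_ray_distance(t)
        print(t, s, ray_distance_to_metric(s))
```

With λ < 0 the warp is bounded as t grows, which is one plausible reason a very large `Config.far` such as `1e6` can still be sampled sensibly.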

configs/360_glo.gin

ADDED

@@ -0,0 +1,15 @@

Config.dataset_loader = 'llff'
Config.near = 0.2
Config.far = 1e6
Config.factor = 4

Model.raydist_fn = 'power_transformation'
Model.num_glo_features = 128
Model.opaque_background = True

PropMLP.disable_density_normals = True
PropMLP.disable_rgb = True
PropMLP.grid_level_dim = 1


NerfMLP.disable_density_normals = True

configs/blender.gin

ADDED

@@ -0,0 +1,15 @@

Config.exp_name = 'test'
Config.dataset_loader = 'blender'
Config.near = 2
Config.far = 6
Config.factor = 0
Config.hash_decay_mults = 10

Model.raydist_fn = None

PropMLP.disable_density_normals = True
PropMLP.disable_rgb = True
PropMLP.grid_level_dim = 1

NerfMLP.disable_density_normals = True

configs/blender_refnerf.gin

ADDED

@@ -0,0 +1,41 @@

Config.dataset_loader = 'blender'
Config.batching = 'single_image'
Config.near = 2
Config.far = 6

Config.eval_render_interval = 5
Config.compute_normal_metrics = True
Config.data_loss_type = 'mse'
Config.distortion_loss_mult = 0.0
Config.orientation_loss_mult = 0.1
Config.orientation_loss_target = 'normals_pred'
Config.predicted_normal_loss_mult = 3e-4
Config.orientation_coarse_loss_mult = 0.01
Config.predicted_normal_coarse_loss_mult = 3e-5
Config.interlevel_loss_mult = 0.0
Config.data_coarse_loss_mult = 0.1
Config.adam_eps = 1e-8

Model.num_levels = 2
Model.single_mlp = True
Model.num_prop_samples = 128 # This needs to be set despite single_mlp = True.
Model.num_nerf_samples = 128
Model.anneal_slope = 0.
Model.dilation_multiplier = 0.
Model.dilation_bias = 0.
Model.single_jitter = False
Model.resample_padding = 0.01
Model.distinct_prop = False

NerfMLP.disable_density_normals = False
NerfMLP.enable_pred_normals = True
NerfMLP.use_directional_enc = True
NerfMLP.use_reflections = True
NerfMLP.deg_view = 5
NerfMLP.enable_pred_roughness = True
NerfMLP.use_diffuse_color = True
NerfMLP.use_specular_tint = True
NerfMLP.use_n_dot_v = True
NerfMLP.bottleneck_width = 128
NerfMLP.density_bias = 0.5
NerfMLP.max_deg_point = 16
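The flags `NerfMLP.use_reflections` and `NerfMLP.use_n_dot_v` suggest that view directions are reflected about the surface normal before being encoded, as in the Ref-NeRF paper. The following is a minimal sketch of that reflection, ω_r = 2(ω_o · n)n − ω_o, assuming unit-length inputs and a view direction that points from the surface toward the camera. The helper names are hypothetical and the repository's `internal/ref_utils.py` may differ in detail.

```python
import math


def dot(a, b):
    """Dot product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def reflect(view_dir, normal):
    """Reflect view_dir about normal: 2 (view_dir . normal) normal - view_dir.

    Both inputs are assumed to be unit vectors, with view_dir pointing
    away from the surface (toward the camera).
    """
    d = dot(view_dir, normal)
    return tuple(2.0 * d * n - v for v, n in zip(view_dir, normal))


if __name__ == "__main__":
    inv_sqrt2 = 1.0 / math.sqrt(2.0)
    view = (inv_sqrt2, 0.0, inv_sqrt2)
    normal = (0.0, 0.0, 1.0)
    print(reflect(view, normal))  # mirrored across the normal
    print(dot(view, normal))      # the n . v term that use_n_dot_v presumably feeds to the MLP
```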

configs/llff_256.gin

ADDED

@@ -0,0 +1,19 @@

Config.dataset_loader = 'llff'
Config.near = 0.
Config.far = 1.
Config.factor = 4
Config.forward_facing = True
Config.adam_eps = 1e-8

Model.opaque_background = True
Model.num_levels = 2
Model.num_prop_samples = 128
Model.num_nerf_samples = 32

PropMLP.disable_density_normals = True
PropMLP.disable_rgb = True

NerfMLP.disable_density_normals = True

NerfMLP.max_deg_point = 16
PropMLP.max_deg_point = 16

configs/llff_512.gin

ADDED

@@ -0,0 +1,19 @@

Config.dataset_loader = 'llff'
Config.near = 0.
Config.far = 1.
Config.factor = 4
Config.forward_facing = True
Config.adam_eps = 1e-8

Model.opaque_background = True
Model.num_levels = 2
Model.num_prop_samples = 128
Model.num_nerf_samples = 32

PropMLP.disable_density_normals = True
PropMLP.disable_rgb = True

NerfMLP.disable_density_normals = True

NerfMLP.max_deg_point = 16
PropMLP.max_deg_point = 16

configs/llff_raw.gin

ADDED

@@ -0,0 +1,73 @@

# General LLFF settings

Config.dataset_loader = 'llff'
Config.near = 0.
Config.far = 1.
Config.factor = 4
Config.forward_facing = True

PropMLP.disable_density_normals = True # Turn this off if using orientation loss.
PropMLP.disable_rgb = True

NerfMLP.disable_density_normals = True # Turn this off if using orientation loss.

NerfMLP.max_deg_point = 16
PropMLP.max_deg_point = 16

Config.train_render_every = 5000


########################## RawNeRF specific settings ##########################

Config.rawnerf_mode = True
Config.data_loss_type = 'rawnerf'
Config.apply_bayer_mask = True
Model.learned_exposure_scaling = True

Model.num_levels = 2
Model.num_prop_samples = 128 # Using extra samples for now because of noise instability.
Model.num_nerf_samples = 128
Model.opaque_background = True
Model.distinct_prop = False

# RGB activation we use for linear color outputs is exp(x - 5).
NerfMLP.rgb_padding = 0.
NerfMLP.rgb_activation = @math.safe_exp
NerfMLP.rgb_bias = -5.
PropMLP.rgb_padding = 0.
PropMLP.rgb_activation = @math.safe_exp
PropMLP.rgb_bias = -5.

## Experimenting with the various regularizers and losses:
Config.interlevel_loss_mult = .0 # Turning off interlevel for now (default = 1.).
Config.distortion_loss_mult = .01 # Distortion loss helps with floaters (default = .01).
Config.orientation_loss_mult = 0. # Orientation loss also not great (try .01).
Config.data_coarse_loss_mult = 0.1 # Setting this to match old MipNeRF.

## Density noise used in original NeRF:
NerfMLP.density_noise = 1.
PropMLP.density_noise = 1.

## Use a single MLP for all rounds of sampling:
Model.single_mlp = True

## Some algorithmic settings to match the paper:
Model.anneal_slope = 0.
Model.dilation_multiplier = 0.
Model.dilation_bias = 0.
Model.single_jitter = False
NerfMLP.weight_init = 'glorot_uniform'
PropMLP.weight_init = 'glorot_uniform'

## Training hyperparameters used in the paper:
Config.batch_size = 16384
Config.render_chunk_size = 16384
Config.lr_init = 1e-3
Config.lr_final = 1e-5
Config.max_steps = 500000
Config.checkpoint_every = 25000
Config.lr_delay_steps = 2500
Config.lr_delay_mult = 0.01
Config.grad_max_norm = 0.1
Config.grad_max_val = 0.1
Config.adam_eps = 1e-8
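The training hyperparameters `lr_init`, `lr_final`, `max_steps`, `lr_delay_steps`, and `lr_delay_mult` together describe a learning-rate schedule. The sketch below assumes the schedule matches the one used in the original multinerf code: log-linear interpolation from `lr_init` to `lr_final`, multiplied by a sinusoidal warm-up over the first `lr_delay_steps` steps. The function names here are illustrative; this diff does not show how the repository itself computes the rate.

```python
import math


def log_lerp(t: float, v0: float, v1: float) -> float:
    """Interpolate from v0 to v1 in log space, with t clamped to [0, 1]."""
    t = min(max(t, 0.0), 1.0)
    return math.exp(t * (math.log(v1) - math.log(v0)) + math.log(v0))


def learning_rate(step: int,
                  lr_init: float = 1e-3,
                  lr_final: float = 1e-5,
                  max_steps: int = 500000,
                  lr_delay_steps: int = 2500,
                  lr_delay_mult: float = 0.01) -> float:
    """Assumed schedule: log-linear decay with a sinusoidal warm-up."""
    if lr_delay_steps > 0:
        # Ramp the multiplier smoothly from lr_delay_mult up to 1.
        ramp = min(max(step / lr_delay_steps, 0.0), 1.0)
        delay_rate = lr_delay_mult + (1.0 - lr_delay_mult) * math.sin(0.5 * math.pi * ramp)
    else:
        delay_rate = 1.0
    return delay_rate * log_lerp(step / max_steps, lr_init, lr_final)


if __name__ == "__main__":
    for step in (0, 2500, 250000, 500000):
        print(step, learning_rate(step))
```

Under these assumptions the rate starts at `lr_delay_mult * lr_init`, reaches roughly `lr_init` once the warm-up ends, and decays geometrically to `lr_final` at `max_steps`.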

configs/multi360.gin

ADDED

@@ -0,0 +1,5 @@

include 'configs/360.gin'
Config.multiscale = True
Config.multiscale_levels = 4
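`Config.multiscale = True` with `Config.multiscale_levels = 4` suggests that each training image is used at four resolutions. How this repository builds those levels is not visible in this diff, so the sketch below only illustrates one common choice: a pyramid in which each level halves the resolution by averaging 2x2 pixel blocks. `downsample_2x` and `build_pyramid` are hypothetical helpers operating on plain nested lists.

```python
def downsample_2x(image):
    """Average each non-overlapping 2x2 block of a 2-D list of floats.

    A trailing odd row or column is dropped.
    """
    h, w = len(image), len(image[0])
    return [
        [
            (image[y][x] + image[y][x + 1] + image[y + 1][x] + image[y + 1][x + 1]) / 4.0
            for x in range(0, w - 1, 2)
        ]
        for y in range(0, h - 1, 2)
    ]


def build_pyramid(image, levels):
    """Return [full-res, 1/2, 1/4, ...] with `levels` entries in total."""
    pyramid = [image]
    for _ in range(levels - 1):
        pyramid.append(downsample_2x(pyramid[-1]))
    return pyramid


if __name__ == "__main__":
    image = [[float(y * 8 + x) for x in range(8)] for y in range(8)]
    for level in build_pyramid(image, levels=4):
        print(len(level), "x", len(level[0]))
```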

eval.py

ADDED

@@ -0,0 +1,307 @@

import logging
import os
import sys
import time
import accelerate
from absl import app
import gin
from internal import configs
from internal import datasets
from internal import image
from internal import models
from internal import raw_utils
from internal import ref_utils
from internal import train_utils
from internal import checkpoints
from internal import utils
from internal import vis
import numpy as np
import torch
import tensorboardX
from torch.utils._pytree import tree_map

configs.define_common_flags()


def summarize_results(folder, scene_names, num_buckets):

| 27 |

+