Create README.md

Browse files

README.md

ADDED

|

@@ -0,0 +1,161 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

tags:

|

| 4 |

+

- not-for-all-audiences

|

| 5 |

+

---

|

| 6 |

+

# CalderaAI/Hexoteric-7B

|

| 7 |

+

Full model card soon. Early release;

|

| 8 |

+

Spherical Hexa-Merge of hand-picked Mistrel-7B models.

|

| 9 |

+

This is the successor to Naberius-7B, building on its findings.

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

More to come soon...

|

| 13 |

+

|

| 14 |

+

---

|

| 15 |

+

# AshhLimaRP-Mistral-7B (Alpaca, v1)

|

| 16 |

+

|

| 17 |

+

This is a version of LimaRP with 2000 training samples _up to_ about 9k tokens length

|

| 18 |

+

finetuned on [Ashhwriter-Mistral-7B](https://huggingface.co/lemonilia/Ashhwriter-Mistral-7B).

|

| 19 |

+

|

| 20 |

+

LimaRP is a longform-oriented, novel-style roleplaying chat model intended to replicate the experience

|

| 21 |

+

of 1-on-1 roleplay on Internet forums. Short-form, IRC/Discord-style RP (aka "Markdown format")

|

| 22 |

+

is not supported. The model does not include instruction tuning, only manually picked and

|

| 23 |

+

slightly edited RP conversations with persona and scenario data.

|

| 24 |

+

|

| 25 |

+

Ashhwriter, the base, is a model entirely finetuned on human-written lewd stories.

|

| 26 |

+

|

| 27 |

+

## Available versions

|

| 28 |

+

- Float16 HF weights

|

| 29 |

+

- LoRA Adapter ([adapter_config.json](https://huggingface.co/lemonilia/AshhLimaRP-Mistral-7B/resolve/main/adapter_config.json) and [adapter_model.bin](https://huggingface.co/lemonilia/AshhLimaRP-Mistral-7B/resolve/main/adapter_model.bin))

|

| 30 |

+

- [4bit AWQ](https://huggingface.co/lemonilia/AshhLimaRP-Mistral-7B/tree/main/AWQ)

|

| 31 |

+

- [Q4_K_M GGUF](https://huggingface.co/lemonilia/AshhLimaRP-Mistral-7B/resolve/main/AshhLimaRP-Mistral-7B.Q4_K_M.gguf)

|

| 32 |

+

- [Q6_K GGUF](https://huggingface.co/lemonilia/AshhLimaRP-Mistral-7B/resolve/main/AshhLimaRP-Mistral-7B.Q6_K.gguf)

|

| 33 |

+

|

| 34 |

+

## Prompt format

|

| 35 |

+

[Extended Alpaca format](https://github.com/tatsu-lab/stanford_alpaca),

|

| 36 |

+

with `### Instruction:`, `### Input:` immediately preceding user inputs and `### Response:`

|

| 37 |

+

immediately preceding model outputs. While Alpaca wasn't originally intended for multi-turn

|

| 38 |

+

responses, in practice this is not a problem; the format follows a pattern already used by

|

| 39 |

+

other models.

|

| 40 |

+

|

| 41 |

+

```

|

| 42 |

+

### Instruction:

|

| 43 |

+

Character's Persona: {bot character description}

|

| 44 |

+

|

| 45 |

+

User's Persona: {user character description}

|

| 46 |

+

|

| 47 |

+

Scenario: {what happens in the story}

|

| 48 |

+

|

| 49 |

+

Play the role of Character. You must engage in a roleplaying chat with User below this line. Do not write dialogues and narration for User.

|

| 50 |

+

|

| 51 |

+

### Input:

|

| 52 |

+

User: {utterance}

|

| 53 |

+

|

| 54 |

+

### Response:

|

| 55 |

+

Character: {utterance}

|

| 56 |

+

|

| 57 |

+

### Input

|

| 58 |

+

User: {utterance}

|

| 59 |

+

|

| 60 |

+

### Response:

|

| 61 |

+

Character: {utterance}

|

| 62 |

+

|

| 63 |

+

(etc.)

|

| 64 |

+

```

|

| 65 |

+

|

| 66 |

+

You should:

|

| 67 |

+

- Replace all text in curly braces (curly braces included) with your own text.

|

| 68 |

+

- Replace `User` and `Character` with appropriate names.

|

| 69 |

+

|

| 70 |

+

|

| 71 |

+

### Message length control

|

| 72 |

+

Inspired by the previously named "Roleplay" preset in SillyTavern, with this

|

| 73 |

+

version of LimaRP it is possible to append a length modifier to the response instruction

|

| 74 |

+

sequence, like this:

|

| 75 |

+

|

| 76 |

+

```

|

| 77 |

+

### Input

|

| 78 |

+

User: {utterance}

|

| 79 |

+

|

| 80 |

+

### Response: (length = medium)

|

| 81 |

+

Character: {utterance}

|

| 82 |

+

```

|

| 83 |

+

|

| 84 |

+

This has an immediately noticeable effect on bot responses. The lengths using during training are:

|

| 85 |

+

`micro`, `tiny`, `short`, `medium`, `long`, `massive`, `huge`, `enormous`, `humongous`, `unlimited`.

|

| 86 |

+

**The recommended starting length is medium**. Keep in mind that the AI can ramble or impersonate

|

| 87 |

+

the user with very long messages.

|

| 88 |

+

|

| 89 |

+

The length control effect is reproducible, but the messages will not necessarily follow

|

| 90 |

+

lengths very precisely, rather follow certain ranges on average, as seen in this table

|

| 91 |

+

with data from tests made with one reply at the beginning of the conversation:

|

| 92 |

+

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

Response length control appears to work well also deep into the conversation. **By omitting

|

| 96 |

+

the modifier, the model will choose the most appropriate response length** (although it might

|

| 97 |

+

not necessarily be what the user desires).

|

| 98 |

+

|

| 99 |

+

## Suggested settings

|

| 100 |

+

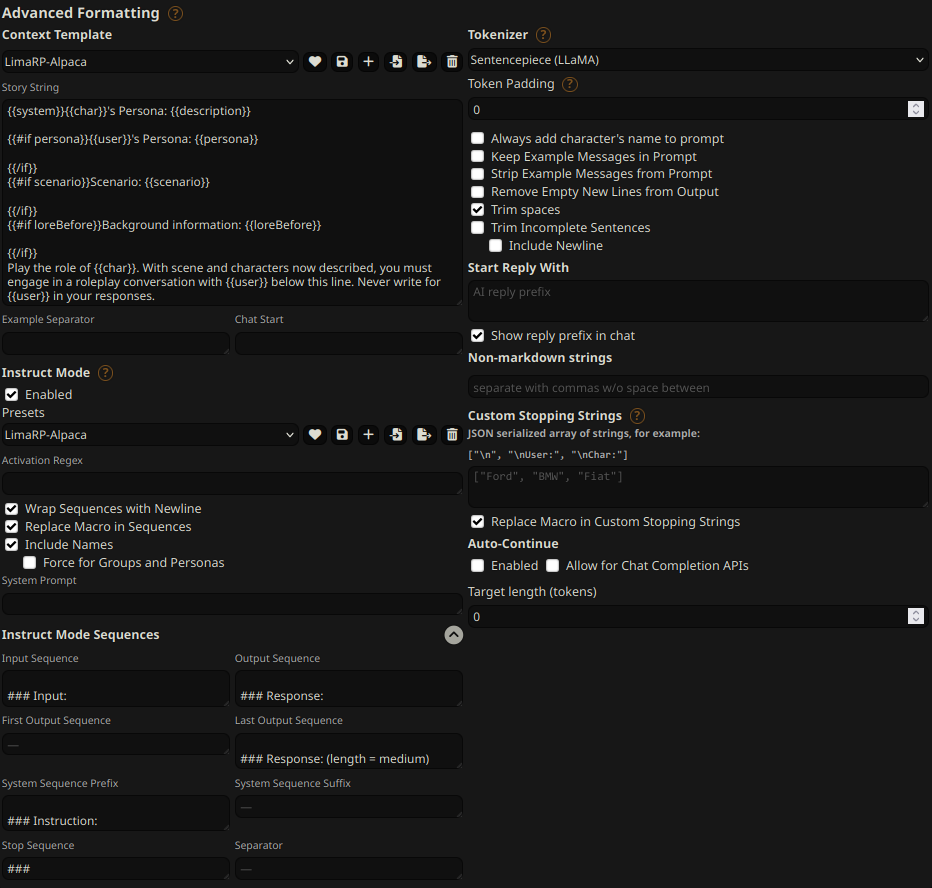

You can follow these instruction format settings in SillyTavern. Replace `medium` with

|

| 101 |

+

your desired response length:

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

## Text generation settings

|

| 106 |

+

These settings could be a good general starting point:

|

| 107 |

+

|

| 108 |

+

- TFS = 0.90

|

| 109 |

+

- Temperature = 0.70

|

| 110 |

+

- Repetition penalty = ~1.11

|

| 111 |

+

- Repetition penalty range = ~2048

|

| 112 |

+

- top-k = 0 (disabled)

|

| 113 |

+

- top-p = 1 (disabled)

|

| 114 |

+

|

| 115 |

+

## Training procedure

|

| 116 |

+

[Axolotl](https://github.com/OpenAccess-AI-Collective/axolotl) was used for training

|

| 117 |

+

on 2x NVidia A40 GPUs.

|

| 118 |

+

|

| 119 |

+

The A40 GPUs have been graciously provided by [Arc Compute](https://www.arccompute.io/).

|

| 120 |

+

|

| 121 |

+

### Training hyperparameters

|

| 122 |

+

A lower learning rate than usual was employed. Due to an unforeseen issue the training

|

| 123 |

+

was cut short and as a result 3 epochs were trained instead of the planned 4. Using 2 GPUs,

|

| 124 |

+

the effective global batch size would have been 16.

|

| 125 |

+

|

| 126 |

+

Training was continued from the most recent LoRA adapter from Ashhwriter, using the same

|

| 127 |

+

LoRA R and LoRA alpha.

|

| 128 |

+

|

| 129 |

+

- lora_model_dir: /home/anon/bin/axolotl/OUT_mistral-stories/checkpoint-6000/

|

| 130 |

+

- learning_rate: 0.00005

|

| 131 |

+

- lr_scheduler: cosine

|

| 132 |

+

- noisy_embedding_alpha: 3.5

|

| 133 |

+

- num_epochs: 4

|

| 134 |

+

- sequence_len: 8750

|

| 135 |

+

- lora_r: 256

|

| 136 |

+

- lora_alpha: 16

|

| 137 |

+

- lora_dropout: 0.05

|

| 138 |

+

- lora_target_linear: True

|

| 139 |

+

- bf16: True

|

| 140 |

+

- fp16: false

|

| 141 |

+

- tf32: True

|

| 142 |

+

- load_in_8bit: True

|

| 143 |

+

- adapter: lora

|

| 144 |

+

- micro_batch_size: 2

|

| 145 |

+

- optimizer: adamw_bnb_8bit

|

| 146 |

+

- warmup_steps: 10

|

| 147 |

+

- optimizer: adamw_torch

|

| 148 |

+

- flash_attention: true

|

| 149 |

+

- sample_packing: true

|

| 150 |

+

- pad_to_sequence_len: true

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

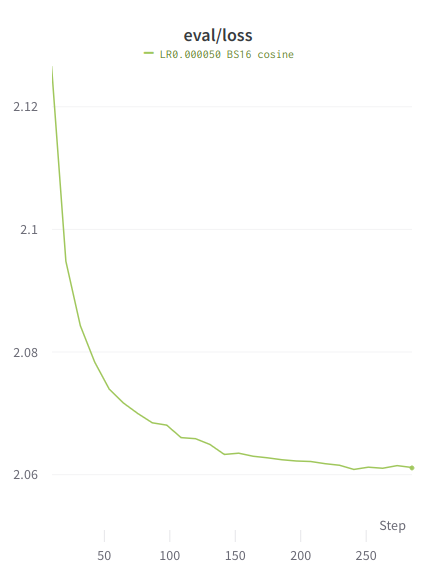

### Loss graphs

|

| 154 |

+

Values are higher than typical because the training is performed on the entire

|

| 155 |

+

sample, similar to unsupervised finetuning.

|

| 156 |

+

|

| 157 |

+

#### Train loss

|

| 158 |

+

|

| 159 |

+

|

| 160 |

+

#### Eval loss

|

| 161 |

+

|