Create README.md

Browse files

README.md

ADDED

|

@@ -0,0 +1,119 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

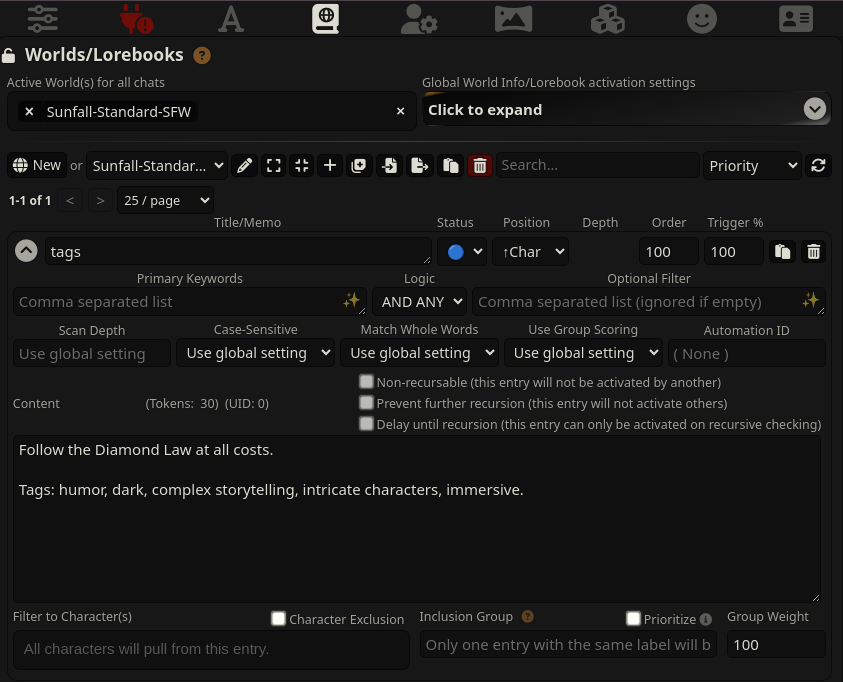

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

---
license: llama3
license_name: llama3
license_link: LICENSE
library_name: transformers
tags:
- not-for-all-audiences
datasets:
- crestf411/LimaRP-DS
- AI-MO/NuminaMath-CoT
---

```
e88 88e d8
d888 888b 8888 8888 ,"Y88b 888 8e d88
C8888 8888D 8888 8888 "8" 888 888 88b d88888
Y888 888P Y888 888P ,ee 888 888 888 888
"88 88" "88 88" "88 888 888 888 888
b
8b,

e88'Y88 d8 888
d888 'Y ,"Y88b 888,8, d88 ,e e, 888
C8888 "8" 888 888 " d88888 d88 88b 888
Y888 ,d ,ee 888 888 888 888 , 888
"88,d88 "88 888 888 888 "YeeP" 888

PROUDLY PRESENTS
```

# L3.1-70B-sunfall-v0.6.1-exl2-longcal

Quantized using 115 rows of 8192 tokens from the default ExLlamaV2 calibration dataset.

Branches:
- `main` -- `measurement.json`
- `6b8h` -- 6bpw, 8-bit lm_head
- `4.65b6h` -- 4.65bpw, 6-bit lm_head
- `4.5b6h` -- 4.5bpw, 6-bit lm_head
- `2.25b6h` -- 2.25bpw, 6-bit lm_head
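
As a rough guide to which of the branches listed above fits your hardware, weight storage can be estimated as parameters × bits per weight ÷ 8. The sketch below assumes roughly 70.6 billion parameters for Llama 3.1 70B and applies each branch's bpw uniformly; it ignores the separately quantized `lm_head`, quantization metadata, and the KV cache, so real VRAM use will be higher. `BRANCHES` and `estimate_weight_gb` are hypothetical names for illustration.

```python
# Rough weight-storage estimate for each EXL2 branch.
# Assumption: ~70.6e9 parameters, all stored at the branch's average bpw.
# Not modelled: the lm_head's own bit width, quantization scales, KV cache.

PARAMS = 70.6e9  # approximate parameter count of Llama 3.1 70B (assumption)

# Hypothetical mapping of branch name -> average bits per weight.
BRANCHES = {
    "6b8h": 6.0,
    "4.65b6h": 4.65,
    "4.5b6h": 4.5,
    "2.25b6h": 2.25,
}


def estimate_weight_gb(bpw: float, params: float = PARAMS) -> float:
    """Return approximate weight size in gigabytes (1 GB = 1e9 bytes)."""
    return params * bpw / 8 / 1e9


if __name__ == "__main__":
    for branch, bpw in BRANCHES.items():
        print(f"{branch:>8}: ~{estimate_weight_gb(bpw):.1f} GB of weights")
```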

Original model link: [crestf411/L3.1-70B-sunfall-v0.6.1](https://huggingface.co/crestf411/L3.1-70B-sunfall-v0.6.1)

Original model README below.

-----

Sunfall (2024-07-31) v0.6.1 on top of Meta's Llama 3.1 70B Instruct.

**NOTE: This model requires a slightly lower temperature than usual. Recommended starting settings in SillyTavern are:**

* Temperature: **1.2**
* MinP: **0.06**
* Optional DRY: **0.8 1.75 2 0** (multiplier, base, allowed length, penalty range)
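
For readers unfamiliar with these samplers, the sketch below shows the general idea in plain Python. It assumes temperature is applied before Min-P (backends differ in the order they apply samplers, and some let you change it), and it computes the DRY penalty as multiplier × base^(n − allowed length), where n is the length of the repeated sequence a candidate token would extend. It is an illustration, not the code any particular backend runs; the function names are hypothetical.

```python
import math
from typing import List


def softmax(logits: List[float], temperature: float) -> List[float]:
    """Temperature-scaled softmax; higher temperature flattens the distribution."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def min_p_filter(probs: List[float], min_p: float) -> List[float]:
    """Zero out tokens below min_p * (top probability), then renormalize."""
    threshold = min_p * max(probs)
    kept = [p if p >= threshold else 0.0 for p in probs]
    total = sum(kept)
    return [p / total for p in kept]


def dry_penalty(match_len: int, multiplier: float = 0.8, base: float = 1.75,
                allowed_len: int = 2) -> float:
    """Amount subtracted from the logit of a token that would extend a
    repeated sequence of length match_len; repeats shorter than
    allowed_len are not penalized."""
    if match_len < allowed_len:
        return 0.0
    return multiplier * base ** (match_len - allowed_len)


if __name__ == "__main__":
    logits = [5.0, 4.0, 2.0, -1.0]
    probs = min_p_filter(softmax(logits, temperature=1.2), min_p=0.06)
    print([round(p, 3) for p in probs])  # the least likely token is removed
    print(dry_penalty(2), dry_penalty(4))
```

With these example logits, Min-P 0.06 removes only the last token; the DRY penalty grows exponentially once a repeat exceeds the allowed length, which is what makes long verbatim loops unattractive.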

General heuristic:

* Lots of slop: temperature is too low. Raise it.
* Model is making mistakes about subtle or obvious details in the scene: temperature is too high. Lower it.

*Mergers/fine-tuners: [there is a LoRA of this model](https://huggingface.co/crestf411/sunfall-peft/tree/main/l3.1-70b). Consider merging that instead of merging this model.*

To use lore book tags ([example](https://files.catbox.moe/w5otyq.json)), make sure you use **Status: Blue (constant)** and write, for example:

```
Follow the Diamond Law at all costs.

Tags: humor, dark, complex storytelling, intricate characters, immersive.
```

This model has been trained on context that mimics SillyTavern's Llama 3 Instruct preset, with the following settings:

**System Prompt:**

```
You are an expert actor that can fully immerse yourself into any role given. You do not break character for any reason. Currently your role is {{char}}, which is described in detail below. As {{char}}, continue the exchange with {{user}}.
```
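
The Llama 3 Instruct preset wraps each turn in Llama 3's header and end-of-turn special tokens. The sketch below assembles a raw prompt from this system prompt with the `{{char}}` and `{{user}}` macros filled in. It assumes the standard Llama 3 chat tokens (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) and leaves out everything else SillyTavern adds, such as the character description that follows the system prompt. `fill_macros`, `build_prompt`, and the sample names are hypothetical.

```python
from typing import List, Tuple

SYSTEM_TEMPLATE = (
    "You are an expert actor that can fully immerse yourself into any role given. "
    "You do not break character for any reason. Currently your role is {{char}}, "
    "which is described in detail below. As {{char}}, continue the exchange with {{user}}."
)


def fill_macros(text: str, char: str, user: str) -> str:
    """Replace SillyTavern-style {{char}} / {{user}} macros with names."""
    return text.replace("{{char}}", char).replace("{{user}}", user)


def build_prompt(system: str, turns: List[Tuple[str, str]]) -> str:
    """Assemble a Llama 3 Instruct prompt, left open for the assistant.

    turns is a list of (role, content) pairs, role being "user" or "assistant".
    """
    parts = ["<|begin_of_text|>"]
    for role, content in [("system", system)] + turns:
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>")
    # Open an assistant header so the model writes the next reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


if __name__ == "__main__":
    system = fill_macros(SYSTEM_TEMPLATE, char="Seraphina", user="Alex")
    print(build_prompt(system, [("user", "Hello there.")]))
```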

Training also included content with a narrator card, which was used when the content did not mainly revolve around two characters. Future versions will expand on this idea, so forgive the vagueness for now.

(The Diamond Law is defined here, although new rules have since been added: https://files.catbox.moe/d15m3g.txt. So far the results are unclear, but training was done with this phrase included, and the training data adheres to the law.)

The model has also been trained for story writing. The system message ends up looking something like this:

```
You are an expert storyteller, who can roleplay or write compelling stories. Follow the Diamond Law at all costs. Below is a scenario with character descriptions and content tags. Write a story based on this scenario.

Scenario: The story is about James, blabla.

James is an overweight 63 year old blabla.

Lucy: James's 62 year old wife.

Tags: tag1, tag2, tag3, ...
```
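
The storywriting system message follows a simple layout: a fixed instruction, a scenario line, one paragraph per character, and a comma-separated tag line, all separated by blank lines. A minimal sketch of assembling it, assuming that layout is read correctly from the example (the helper name and its arguments are hypothetical):

```python
from typing import List

STORY_INSTRUCTION = (
    "You are an expert storyteller, who can roleplay or write compelling stories. "
    "Follow the Diamond Law at all costs. Below is a scenario with character "
    "descriptions and content tags. Write a story based on this scenario."
)


def build_story_system_message(scenario: str, characters: List[str], tags: List[str]) -> str:
    """Join instruction, scenario, character paragraphs and tags with blank lines."""
    sections = [STORY_INSTRUCTION, f"Scenario: {scenario}"]
    sections.extend(characters)  # each entry is one character paragraph
    sections.append("Tags: " + ", ".join(tags))
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_story_system_message(
        "The story is about James, blabla.",
        ["James is an overweight 63 year old blabla.", "Lucy: James's 62 year old wife."],
        ["tag1", "tag2", "tag3"],
    ))
```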

MMLU-Pro benchmark: overall, the model scores higher than the Instruct base (60.73 vs. 58.64), but it loses in some categories (chemistry, law, and other).

Llama 3.1 70B Instruct base:

| metric | overall | biology | business | chemistry | computer science | economics | engineering | health | history | law | math | philosophy | physics | psychology | other |
| ------ | ------- | ------- | -------- | --------- | ---------------- | --------- | ----------- | ------ | ------- | --- | ---- | ---------- | ------- | ---------- | ----- |
| accuracy (%) | 58.64 | 73.91 | 60.00 | 61.11 | 69.23 | 70.37 | 51.61 | 57.69 | 66.67 | 51.43 | 55.81 | 68.75 | 51.22 | 48.00 | 58.62 |
| correct | 224 | 17 | 15 | 22 | 9 | 19 | 16 | 15 | 8 | 18 | 24 | 11 | 21 | 12 | 17 |
| total | 382 | 23 | 25 | 36 | 13 | 27 | 31 | 26 | 12 | 35 | 43 | 16 | 41 | 25 | 29 |

Sunfall v0.6.1:

| metric | overall | biology | business | chemistry | computer science | economics | engineering | health | history | law | math | philosophy | physics | psychology | other |
| ------ | ------- | ------- | -------- | --------- | ---------------- | --------- | ----------- | ------ | ------- | --- | ---- | ---------- | ------- | ---------- | ----- |
| accuracy (%) | 60.73 | 78.26 | 60.00 | 55.56 | 69.23 | 70.37 | 64.52 | 65.38 | 75.00 | 42.86 | 62.79 | 68.75 | 56.10 | 56.00 | 51.72 |
| correct | 232 | 18 | 15 | 20 | 9 | 19 | 20 | 17 | 9 | 15 | 27 | 11 | 23 | 14 | 15 |
| total | 382 | 23 | 25 | 36 | 13 | 27 | 31 | 26 | 12 | 35 | 43 | 16 | 41 | 25 | 29 |

The benchmark results above were obtained at temperature 0 with no other helper samplers. On its own, the model is strong, but it gets confused more easily than the base Instruct model.

Probably because I traumatized it with my vile dataset. Who knows.
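
In both MMLU-Pro tables, the second and third rows are the number of correctly answered questions and the number of questions per category, so each accuracy is 100 × correct ÷ total (for example, 224 / 382 ≈ 58.64%). The sketch below recomputes the overall scores and lists the categories where Sunfall scores below the base model, using only the counts from the tables; the variable and function names are illustrative.

```python
CATEGORIES = ["biology", "business", "chemistry", "computer science", "economics",
              "engineering", "health", "history", "law", "math", "philosophy",
              "physics", "psychology", "other"]

# Questions per category (identical for both runs).
TOTAL = [23, 25, 36, 13, 27, 31, 26, 12, 35, 43, 16, 41, 25, 29]
# Correct answers per category, copied from the tables.
BASE_CORRECT = [17, 15, 22, 9, 19, 16, 15, 8, 18, 24, 11, 21, 12, 17]
SUNFALL_CORRECT = [18, 15, 20, 9, 19, 20, 17, 9, 15, 27, 11, 23, 14, 15]


def accuracy(correct: int, total: int) -> float:
    """Accuracy as a percentage rounded to two decimals, matching the tables."""
    return round(100 * correct / total, 2)


if __name__ == "__main__":
    print("base overall:", accuracy(sum(BASE_CORRECT), sum(TOTAL)))
    print("sunfall overall:", accuracy(sum(SUNFALL_CORRECT), sum(TOTAL)))
    worse = [c for c, b, s in zip(CATEGORIES, BASE_CORRECT, SUNFALL_CORRECT) if s < b]
    print("sunfall below base in:", worse)
```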
