Chiyu Zhang, Muhammad Abdul-Mageed, Ganesh Jawahar

Published in Findings of ACL 2023

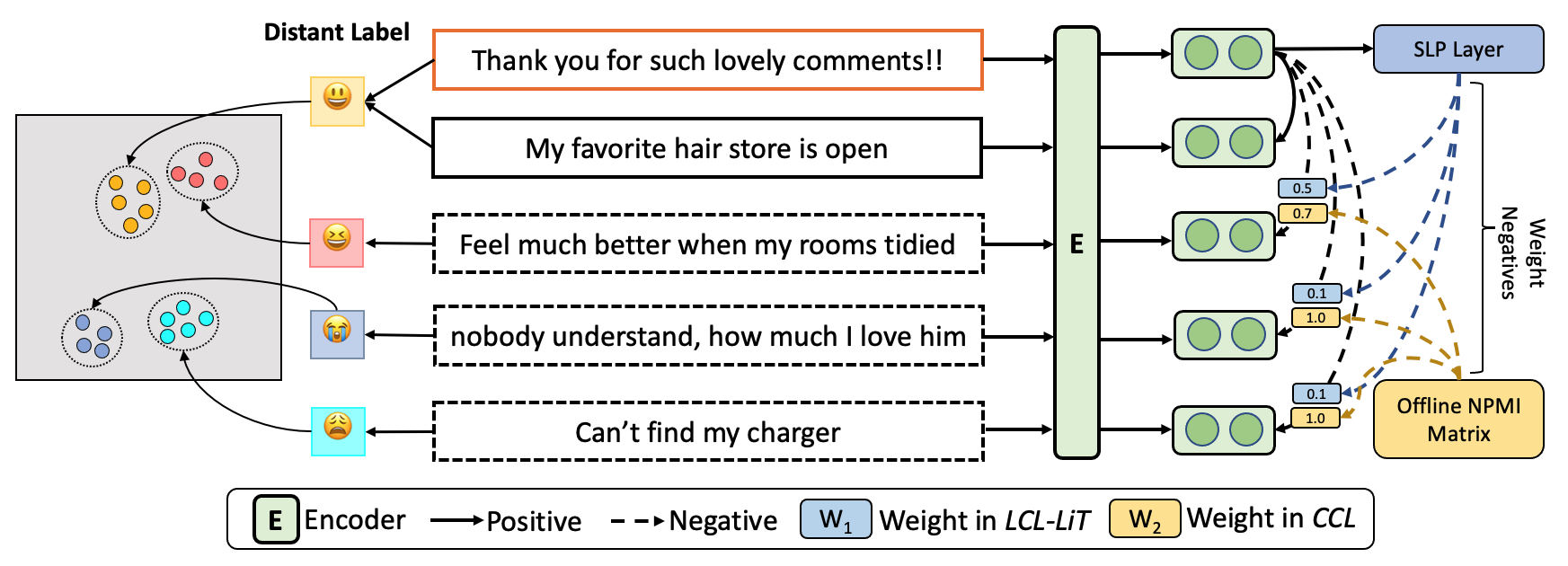

Illustration of our proposed InfoDCL framework. We exploit distant/surrogate labels (i.e., emojis) to supervise two contrastive losses: corpus-aware contrastive loss (CCL) and Light label-aware contrastive loss (LCL-LiT). Sequence representations from our model should keep the cluster of each class distinguishable and preserve semantic relationships between classes.

## Checkpoints of Models Pre-Trained with InfoDCL

English Models:

* InfoDCL-RoBERTa trained with TweetEmoji-EN: https://huggingface.co/UBC-NLP/InfoDCL-emoji
* InfoDCL-RoBERTa trained with TweetHashtag-EN: https://huggingface.co/UBC-NLP/InfoDCL-hashtag

Multilingual Model:

* InfoDCL-XLMR trained with multilingual TweetEmoji-multi: UBC-NLP/InfoDCL-Emoji-XLMR-Base

## Model Performance

Fine-tuning results on our 24 Socio-pragmatic Meaning datasets (average macro-F1 over five runs).
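For readers unfamiliar with the metric, the following is a minimal sketch of how macro-F1 averaged over several runs can be computed. The function names `macro_f1` and `average_macro_f1` and the toy labels are illustrative assumptions and are not taken from the InfoDCL codebase; the sketch assumes every run is scored against the same gold labels.

```python
from statistics import mean


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 over all labels seen in y_true or y_pred."""
    labels = sorted(set(y_true) | set(y_pred))
    f1_scores = []
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        # F1 = 2*TP / (2*TP + FP + FN); defined as 0 when the denominator is 0.
        f1_scores.append(2 * tp / denom if denom else 0.0)
    return mean(f1_scores)


def average_macro_f1(y_true, runs):
    """Mean macro-F1 across runs, where each run is a list of predictions."""
    return mean(macro_f1(y_true, y_pred) for y_pred in runs)


if __name__ == "__main__":
    gold = [0, 0, 1, 1]                       # toy gold labels (hypothetical)
    runs = [[0, 1, 1, 1], [0, 0, 1, 1]]       # toy predictions from two runs
    print(average_macro_f1(gold, runs))
```

Because macro-F1 weights every class equally, a rare class affects the score as much as a frequent one, which matters for imbalanced classification datasets.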