Commit

•

9dd04b6

1

Parent(s):

a09fc71

Update README.md


README.md

CHANGED

|

@@ -1,3 +1,63 @@

|

|

| 1 |

---

|

| 2 |

license: apache-2.0


| 3 |

---


| 1 |

---

|

| 2 |

license: apache-2.0

|

| 3 |

+

language:

|

| 4 |

+

- hy

|

| 5 |

+

pipeline_tag: text-generation

|

| 6 |

+

tags:

|

| 7 |

+

- multilingual

|

| 8 |

+

- PyTorch

|

| 9 |

+

- Transformers

|

| 10 |

+

- gpt3

|

| 11 |

+

- gpt2

|

| 12 |

+

- Deepspeed

|

| 13 |

+

- Megatron

|

| 14 |

+

datasets:

|

| 15 |

+

- mc4

|

| 16 |

+

- wikipedia

|

| 17 |

+

thumbnail: "https://github.com/sberbank-ai/mgpt"

|

| 18 |

---

|

| 19 |

+

|

| 20 |

+

# Multilingual GPT model, Armenian language finetune

|

| 21 |

+

|

| 22 |

+

We introduce a monolingual GPT-3-based model for the Armenian language.

|

| 23 |

+

|

| 24 |

+

The model is based on [mGPT](https://huggingface.co/sberbank-ai/mGPT/), a family of autoregressive GPT-like models with 1.3 billion parameters trained on 60 languages from 25 language families using Wikipedia and Colossal Clean Crawled Corpus.

|

| 25 |

+

|

| 26 |

+

We reproduce the GPT-3 architecture using GPT-2 sources and the sparse attention mechanism. The [Deepspeed](https://github.com/microsoft/DeepSpeed) and [Megatron](https://github.com/NVIDIA/Megatron-LM) frameworks allow us to effectively parallelize the training and inference steps. The resulting models show performance on par with the recently released [XGLM](https://arxiv.org/pdf/2112.10668.pdf) models while covering more languages and enhancing NLP possibilities for low-resource languages.
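As a minimal sketch of how such a checkpoint might be used, the snippet below loads a model through the GPT-2 classes in the `transformers` library and samples a continuation. The repository ID is an assumption: it is the base mGPT model linked above, since this README does not state the ID of the Armenian checkpoint, and the sampling settings are illustrative only.

```python
# Assumption: the base mGPT repository ID from the link above; replace it with
# the Armenian checkpoint's ID, which this README does not state.
MODEL_ID = "sberbank-ai/mGPT"


def generate(prompt, model_id=MODEL_ID, max_new_tokens=30):
    """Continue `prompt` with a GPT-2-style checkpoint loaded from the Hub."""
    # Imported lazily so the helper can be defined without transformers installed.
    from transformers import GPT2LMHeadModel, GPT2Tokenizer

    tokenizer = GPT2Tokenizer.from_pretrained(model_id)
    model = GPT2LMHeadModel.from_pretrained(model_id)
    model.eval()

    # Tokenize the prompt into PyTorch tensors and sample a continuation.
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(
        **inputs,
        max_new_tokens=max_new_tokens,
        do_sample=True,  # illustrative sampling settings, not tuned values
        top_p=0.95,
    )
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

Calling `generate("Հայաստանի մայրաքաղաքը")` downloads the weights on first use and returns the prompt followed by the sampled continuation.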

|

| 27 |

+

|

| 28 |

+

## Code

|

| 29 |

+

The source code for the mGPT XL model is available on [GitHub](https://github.com/sberbank-ai/mgpt).

|

| 30 |

+

|

| 31 |

+

## Paper

|

| 32 |

+

mGPT: Few-Shot Learners Go Multilingual

|

| 33 |

+

|

| 34 |

+

[Abstract](https://arxiv.org/abs/2204.07580) [PDF](https://arxiv.org/pdf/2204.07580.pdf)

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

```

|

| 39 |

+

@misc{https://doi.org/10.48550/arxiv.2204.07580,

|

| 40 |

+

doi = {10.48550/ARXIV.2204.07580},

|

| 41 |

+

url = {https://arxiv.org/abs/2204.07580},

|

| 42 |

+

author = {Shliazhko, Oleh and Fenogenova, Alena and Tikhonova, Maria and Mikhailov, Vladislav and Kozlova, Anastasia and Shavrina, Tatiana},

|

| 43 |

+

keywords = {Computation and Language (cs.CL), Artificial Intelligence (cs.AI), FOS: Computer and information sciences, FOS: Computer and information sciences, I.2; I.2.7, 68-06, 68-04, 68T50, 68T01},

|

| 44 |

+

title = {mGPT: Few-Shot Learners Go Multilingual},

|

| 45 |

+

publisher = {arXiv},

|

| 46 |

+

year = {2022},

|

| 47 |

+

copyright = {Creative Commons Attribution 4.0 International}

|

| 48 |

+

}

|

| 49 |

+

|

| 50 |

+

```

|

| 51 |

+

|

| 52 |

+

## Training

|

| 53 |

+

|

| 54 |

+

The model was fine-tuned on 170GB of Armenian texts, including MC4, Archive.org fiction, EANC public data, OpenSubtitles, OSCAR corpus and blog texts.

|

| 55 |

+

|

| 56 |

+

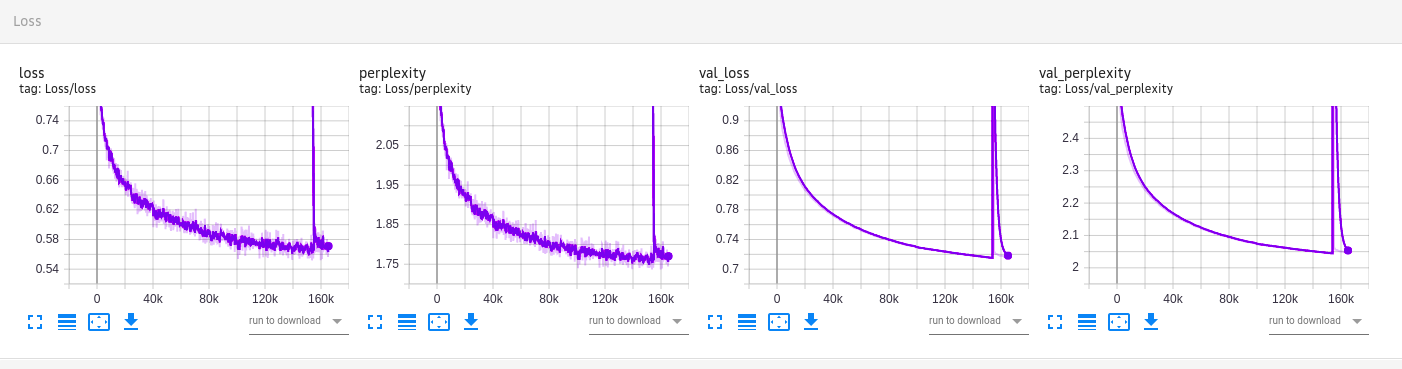

Validation perplexity is 2.046.
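To relate this figure to a loss value, the sketch below converts between perplexity and mean cross-entropy. It assumes the usual convention that perplexity is the exponential of the mean per-token negative log-likelihood in nats; the README does not say how the reported number was computed, so treat the result as indicative.

```python
import math


def perplexity_from_loss(mean_nll):
    """Perplexity for a mean per-token negative log-likelihood in nats."""
    return math.exp(mean_nll)


def loss_from_perplexity(perplexity):
    """Inverse of perplexity_from_loss."""
    return math.log(perplexity)


# Reported validation perplexity from the README.
val_loss = loss_from_perplexity(2.046)
print(round(val_loss, 3))  # 0.716
```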

|

| 57 |

+

|

| 58 |

+

The mGPT model was pre-trained for 12 days on 256 GPUs (NVIDIA Tesla V100) for 4 epochs, then for 9 days on 64 GPUs for 1 epoch.

|

| 59 |

+

|

| 60 |

+

The Armenian fine-tuning took around 7 days on 4 NVIDIA Tesla V100 GPUs and ran for 160k steps.
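The compute figures above can be put on a common scale with a little arithmetic. This is a rough sketch that assumes every GPU was busy for the full stated duration and treats "around 7 days" as exactly 7 days; the helper name `gpu_days` is introduced here for illustration.

```python
def gpu_days(days, gpus):
    """Total GPU-days, assuming all GPUs run for the whole period."""
    return days * gpus


# Pre-training: 12 days on 256 GPUs, then 9 days on 64 GPUs.
pretrain_gpu_days = gpu_days(12, 256) + gpu_days(9, 64)
# Armenian fine-tuning: about 7 days on 4 GPUs.
finetune_gpu_days = gpu_days(7, 4)
# Average wall-clock time per fine-tuning step over 160k steps.
seconds_per_step = 7 * 24 * 3600 / 160_000

print(pretrain_gpu_days)           # 3648
print(finetune_gpu_days)           # 28
print(round(seconds_per_step, 2))  # 3.78
```

Under these assumptions the fine-tuning used under 1% of the GPU-days spent on pre-training.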

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

What happens in this image? The model is originally trained with sparse attention masks, then fine-tuned without sparsity during the last steps (hence the peak in perplexity and loss). Removing the sparsity at the end of training makes it easier to integrate the model into the Hugging Face GPT-2 class.
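The difference between sparse and dense attention can be illustrated with boolean masks. The sketch below is not the layout used to train mGPT, which this README does not describe; it assumes a simple local-window pattern purely to show how a sparse causal mask allows fewer positions than the dense causal mask that a standard GPT-2 implementation expects.

```python
def dense_causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def local_causal_mask(n, window):
    """Illustrative sparse pattern: attend only to the last `window` positions."""
    return [[i - window < j <= i for j in range(n)] for i in range(n)]


def density(mask):
    """Fraction of (query, key) pairs that are allowed by the mask."""
    cells = [allowed for row in mask for allowed in row]
    return sum(cells) / len(cells)


dense = dense_causal_mask(8)
sparse = local_causal_mask(8, window=3)

print(density(dense))   # 0.5625
print(density(sparse))  # 0.328125
```

Every pair allowed by the sparse mask is also allowed by the dense one, so switching to dense attention exposes the model to context it never saw during sparse training, which is consistent with a temporary rise in loss.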

|