cyber-meow

committed on

Commit

•

8522c78

1

Parent(s):

97b8469

onimai

- grasswonder-umamusume/README.md +2 -2

- onimai/README.md +67 -0

- onimai/samples/00026-4010692159.png +0 -0

- onimai/samples/00030-286171376.png +0 -0

- onimai/samples/00034-2431887953.png +0 -0

- onimai/samples/grid-00010-492069042.png +0 -0

- onimai/samples/grid-00017-492069042.png +0 -0

- suremio-nozomizo-eilanya-maplesally/.README.md.swp +0 -0

- suremio-nozomizo-eilanya-maplesally/README.md +5 -2

grasswonder-umamusume/README.md

CHANGED

|

@@ -35,7 +35,7 @@ Trained with [Kohya trainer](https://github.com/Linaqruf/kohya-trainer)

|

|

| 35 |

|

| 36 |

|

| 37 |

|

| 38 |

-

### LoRA

|

| 39 |

|

| 40 |

Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

| 41 |

|

|

@@ -44,7 +44,7 @@ Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

|

| 44 |

- learning rate 1e-4

|

| 45 |

- batch size 6

|

| 46 |

- clip skip 2

|

| 47 |

-

- number of training steps 7520 (20 epochs)

|

| 48 |

|

| 49 |

*Examples*

|

| 50 |

|

|

|

|

| 35 |

|

| 36 |

|

| 37 |

|

| 38 |

+

### LoRA

|

| 39 |

|

| 40 |

Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

| 41 |

|

|

|

|

| 44 |

- learning rate 1e-4

|

| 45 |

- batch size 6

|

| 46 |

- clip skip 2

|

| 47 |

+

- number of training steps 7520/6 (20 epochs)
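One reading of the change from `7520` to `7520/6` is that 7520 counts individual training samples seen over the whole run, so dividing by the batch size of 6 gives the number of batched updates. The sketch below works through that arithmetic; the function name is hypothetical, and rounding up the last partial batch is an assumption, since the README does not say how the trainer handles it.

```python
import math


def optimizer_steps(samples_seen: int, batch_size: int) -> int:
    """Batched updates needed to consume `samples_seen` samples.

    Assumption: a final partial batch still counts as one update.
    """
    return math.ceil(samples_seen / batch_size)


samples_seen = 7520  # figure quoted in the README
batch_size = 6       # batch size quoted in the README
epochs = 20          # epochs quoted in the README

steps = optimizer_steps(samples_seen, batch_size)
samples_per_epoch = samples_seen // epochs

print(f"updates over the run: {steps}")
print(f"samples per epoch:    {samples_per_epoch}")
```

Under these assumptions the run is roughly 1254 updates, with 376 samples seen per epoch.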

|

| 48 |

|

| 49 |

*Examples*

|

| 50 |

|

onimai/README.md

ADDED

|

@@ -0,0 +1,67 @@


| 1 |

+

This folder contains models trained for the two characters Oyama Mahiro and Oyama Mihari.

|

| 2 |

+

|

| 3 |

+

Trigger words are

|

| 4 |

+

- oyama mahiro

|

| 5 |

+

- oyama mihari

|

| 6 |

+

|

| 7 |

+

To get an anime style, add `aniscreen` to the prompt.

|

| 8 |

+

|

| 9 |

+

At this point I feel that having `oyama` in both trigger words is probably a bad idea, because it seems to cause more character blending.
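As a small illustration of how the trigger words and the `aniscreen` tag might be combined, here is a minimal sketch. It assumes a comma-separated tag prompt, which is common for anime-style models but is not stated in this README; `build_prompt` and its parameters are hypothetical names.

```python
def build_prompt(characters, anime_style=False, extra_tags=()):
    """Join trigger words and optional tags into one comma-separated prompt.

    Assumption: the model is prompted with comma-separated tags.
    """
    tags = list(characters)
    if anime_style:
        tags.append("aniscreen")  # style tag mentioned in the README
    tags.extend(extra_tags)
    return ", ".join(tags)


# One character with the anime style tag.
print(build_prompt(["oyama mahiro"], anime_style=True))

# Both characters in the same image, no style tag.
print(build_prompt(["oyama mahiro", "oyama mihari"]))
```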

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

### Dataset

|

| 13 |

+

|

| 14 |

+

Total size 338

|

| 15 |

+

|

| 16 |

+

screenshots 127

|

| 17 |

+

- Mahiro: 51

|

| 18 |

+

- Mihari: 46

|

| 19 |

+

- Mahiro + Mihari: 30

|

| 20 |

+

|

| 21 |

+

fanart 92

|

| 22 |

+

- Mahiro: 68

|

| 23 |

+

- Mihari: 8

|

| 24 |

+

- Mahiro + Mihari: 16

|

| 25 |

+

|

| 26 |

+

Regularization 119

|

| 27 |

+

|

| 28 |

+

For training, the following repeats are used:

|

| 29 |

+

- 1 for Mahiro and reg

|

| 30 |

+

- 2 for Mihari

|

| 31 |

+

- 4 for Mahiro + Mihari
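To see what these repeats do to the balance of the dataset, the sketch below multiplies each group's image count (screenshots plus fanart) by its repeat value. The counts and repeats come from the lists above; treating regularization images as ordinary samples with repeat 1 is a simplifying assumption, since the README does not describe how the trainer mixes them in.

```python
# Image counts per group: screenshots + fanart, as listed in the README.
counts = {
    "mahiro": 51 + 68,
    "mihari": 46 + 8,
    "mahiro+mihari": 30 + 16,
    "reg": 119,
}

# Repeat values from the README ("1 for Mahiro and reg", and so on).
repeats = {"mahiro": 1, "mihari": 2, "mahiro+mihari": 4, "reg": 1}

total_images = sum(counts.values())
weighted = {name: counts[name] * repeats[name] for name in counts}
samples_per_epoch = sum(weighted.values())

print(f"total images: {total_images}")
for name, value in weighted.items():
    print(f"{name:>14}: {counts[name]:3d} images x {repeats[name]} = {value}")
print(f"samples per epoch (assumed): {samples_per_epoch}")
```

With these numbers the repeats bring Mihari (54 images, 108 samples) close to Mahiro (119), and give the two-character images the largest share (184).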

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

### Base model

|

| 35 |

+

|

| 36 |

+

[NMFSAN](https://huggingface.co/Crosstyan/BPModel/blob/main/NMFSAN/README.md)

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

### LoRA

|

| 40 |

+

|

| 41 |

+

Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

| 42 |

+

|

| 43 |

+

- training of text encoder turned on

|

| 44 |

+

- network dimension 64

|

| 45 |

+

- learning rate scheduler constant

|

| 46 |

+

- learning rate 1e-4 and 1e-5 (two separate runs)

|

| 47 |

+

- batch size 7

|

| 48 |

+

- clip skip 2

|

| 49 |

+

- number of training epochs 45
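The settings above can be written down as a small configuration object, which makes it explicit that the two runs differ only in learning rate. This is a sketch for bookkeeping only: `LoraRun` and its field names are hypothetical and are not the option names of the Kohya trainer.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LoraRun:
    """Hyperparameters listed in the README (field names are illustrative)."""
    train_text_encoder: bool = True
    network_dim: int = 64
    lr_scheduler: str = "constant"
    learning_rate: float = 1e-4
    batch_size: int = 7
    clip_skip: int = 2
    epochs: int = 45


# Two separate runs, identical except for the learning rate.
base = LoraRun()
runs = [base, replace(base, learning_rate=1e-5)]

for run in runs:
    print(run)
```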

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

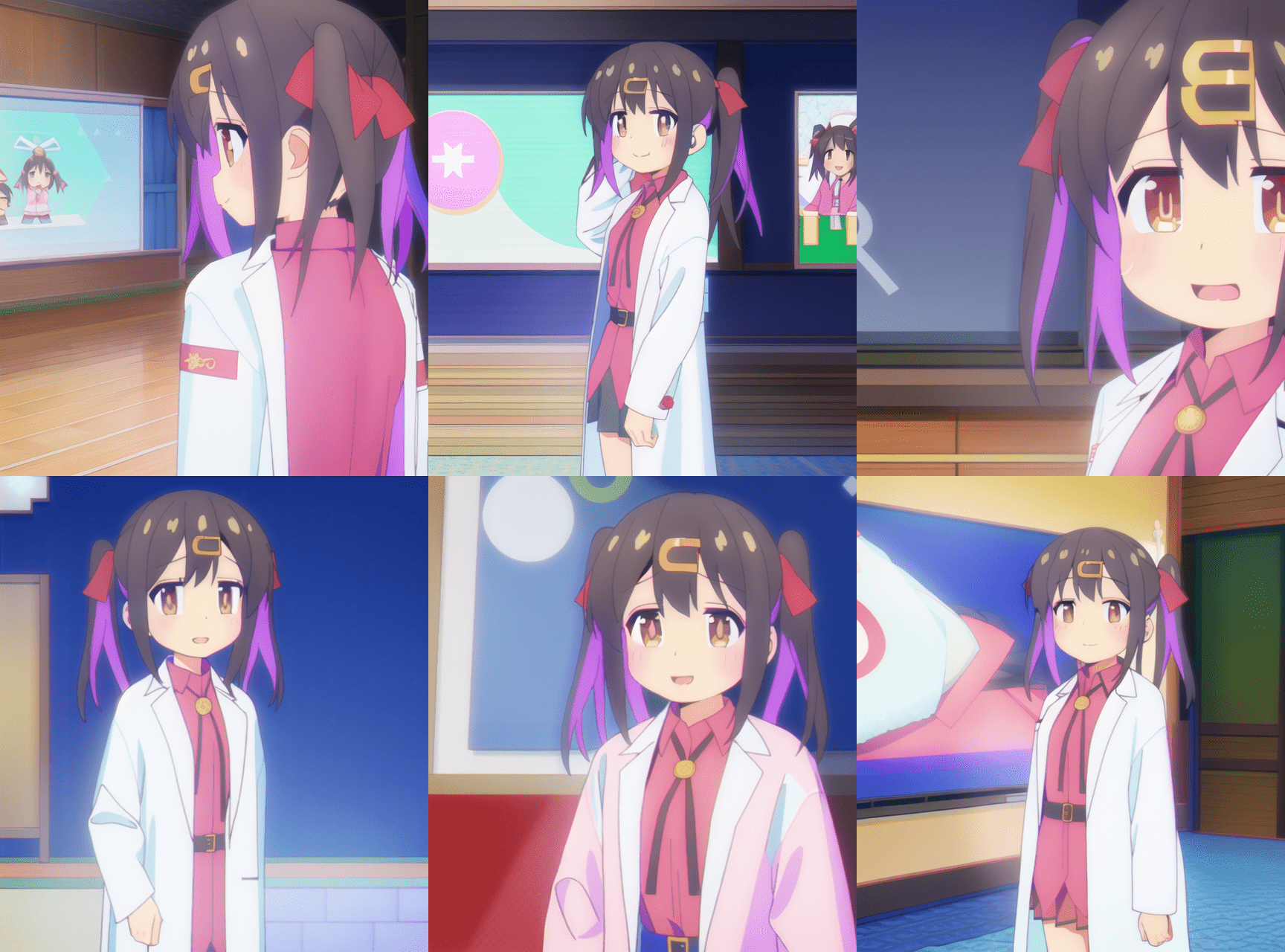

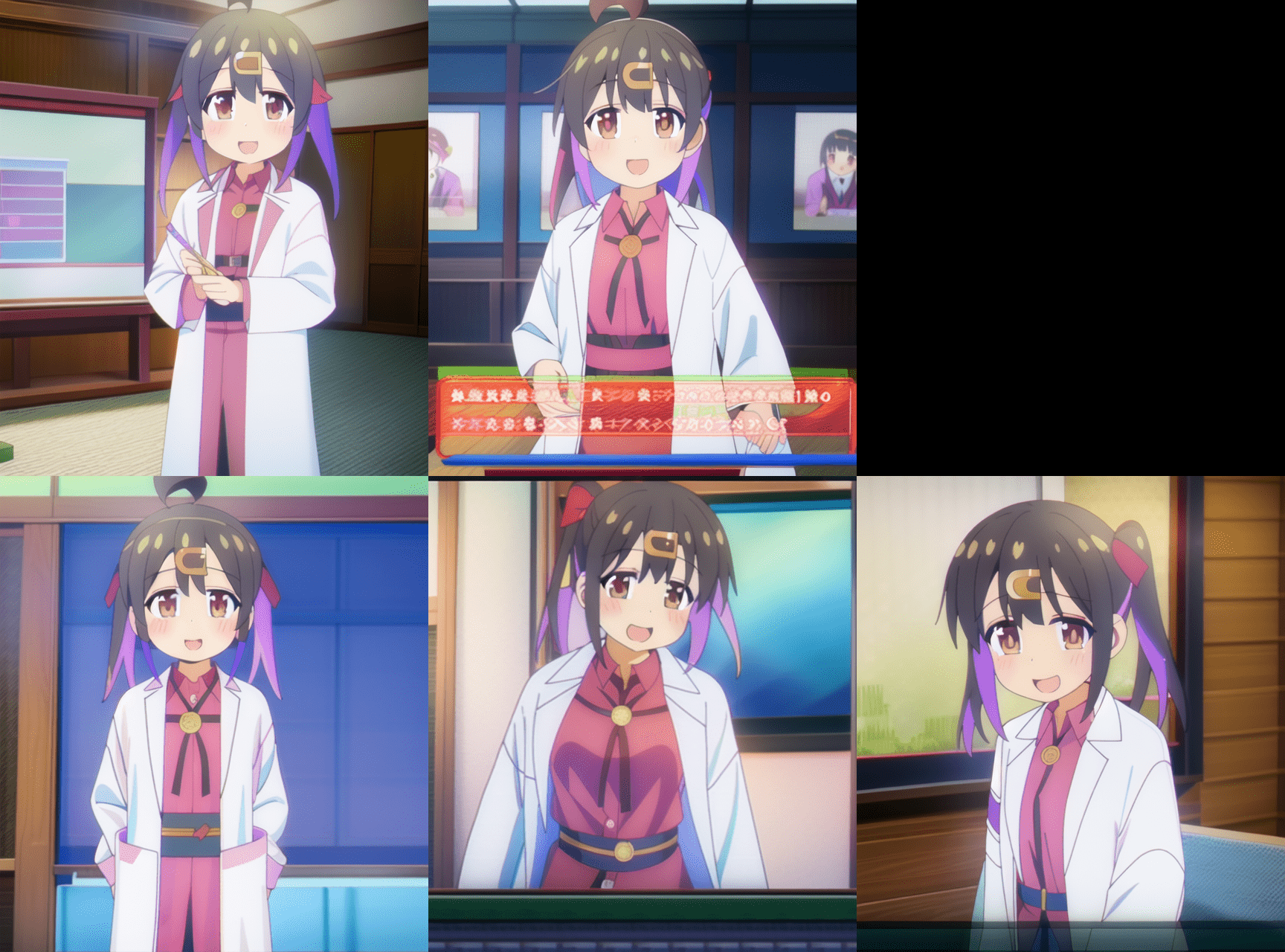

### Comparison

|

| 53 |

+

|

| 54 |

+

learning rate 1e-4

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

learning rate 1e-5

|

| 59 |

+

|

| 60 |

+

|

| 61 |

+

With 2 repeats and 45 epochs, DreamBooth (typically at lr=1e-6) would normally have learned the character thoroughly, but here lr=1e-5 does not seem to work very well. lr=1e-4 produces fairly accurate results, but there is a risk of overfitting.

|

| 62 |

+

|

| 63 |

+

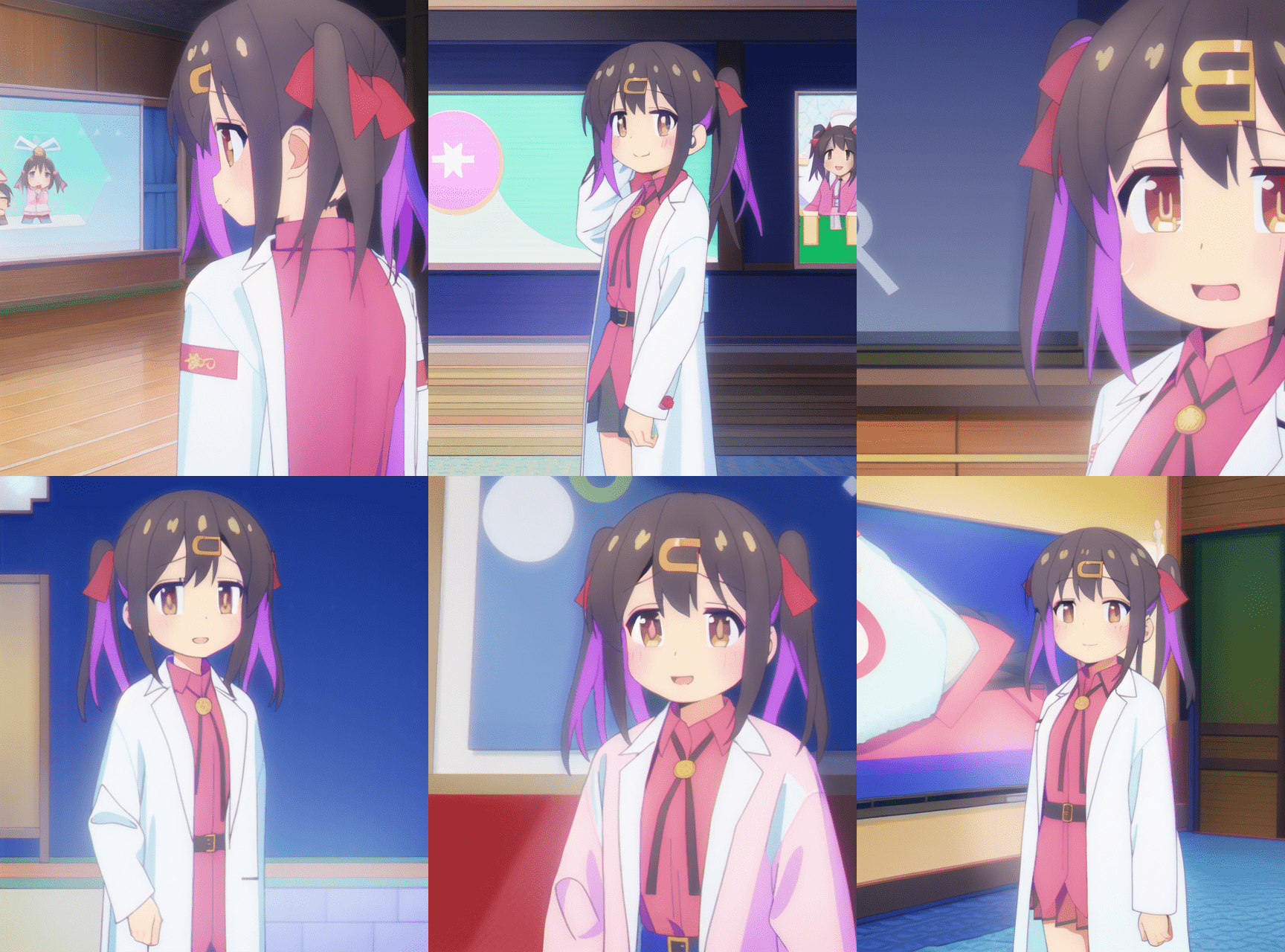

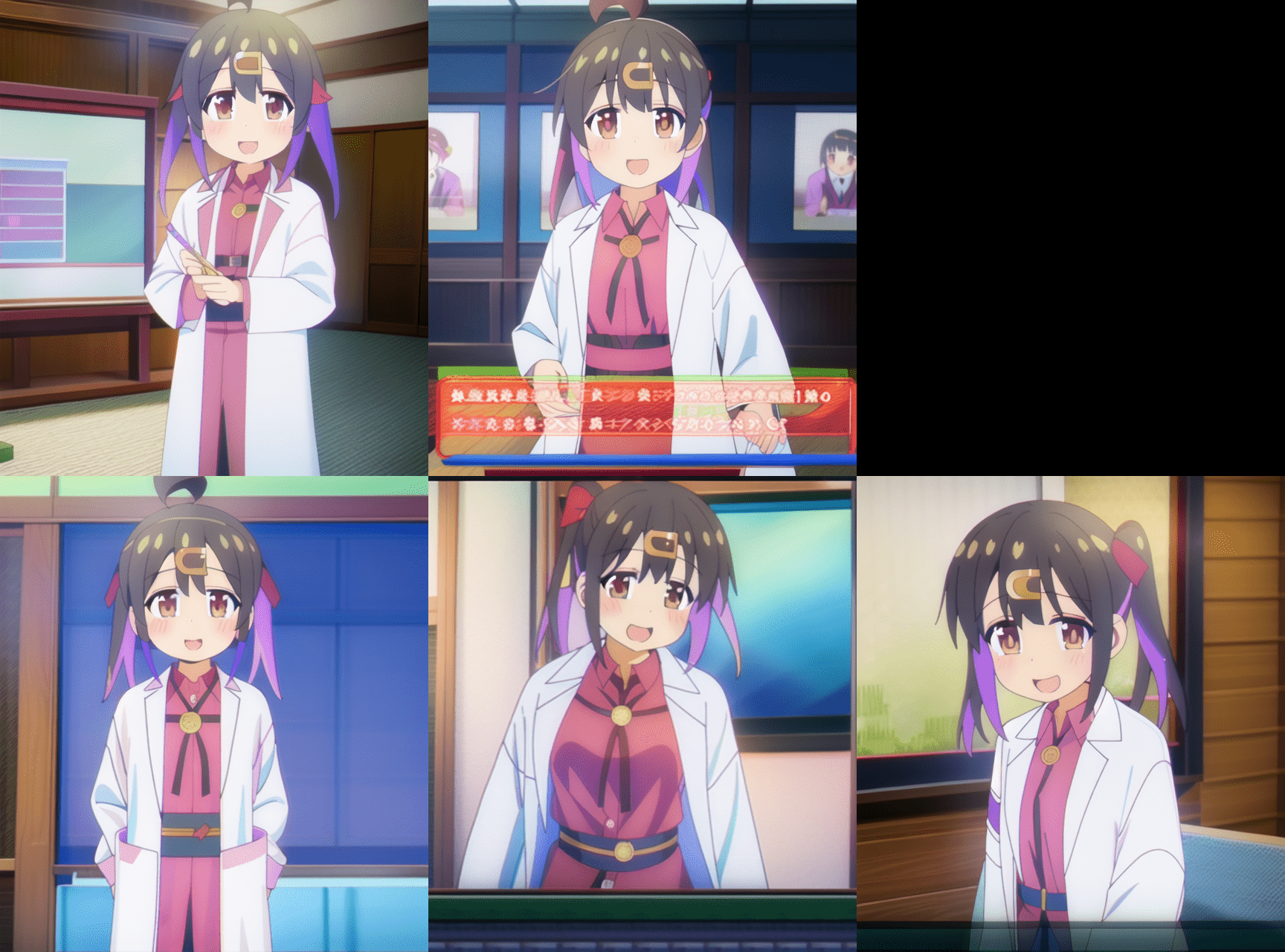

### Examples

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

|

onimai/samples/00026-4010692159.png

ADDED

|

onimai/samples/00030-286171376.png

ADDED

|

onimai/samples/00034-2431887953.png

ADDED

|

onimai/samples/grid-00010-492069042.png

ADDED

|

onimai/samples/grid-00017-492069042.png

ADDED

|

suremio-nozomizo-eilanya-maplesally/.README.md.swp

DELETED

|

Binary file (12.3 kB)

|

|

|

suremio-nozomizo-eilanya-maplesally/README.md

CHANGED

|

@@ -44,6 +44,7 @@ Regularization 276

|

|

| 44 |

|

| 45 |

[NMFSAN](https://huggingface.co/Crosstyan/BPModel/blob/main/NMFSAN/README.md) so you can have different styles

|

| 46 |

|

|

|

|

| 47 |

### Native training

|

| 48 |

|

| 49 |

Trained with [Kohya trainer](https://github.com/Linaqruf/kohya-trainer)

|

|

@@ -60,7 +61,9 @@ Trained with [Kohya trainer](https://github.com/Linaqruf/kohya-trainer)

|

|

| 60 |

|

| 61 |

|

| 62 |

|

| 63 |

-

|

|

|

|

|

|

|

| 64 |

|

| 65 |

Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

| 66 |

|

|

@@ -69,7 +72,7 @@ Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

|

| 69 |

- learning rate 1e-4

|

| 70 |

- batch size 6

|

| 71 |

- clip skip 2

|

| 72 |

-

- number of training steps 69700 (50 epochs)

|

| 73 |

|

| 74 |

*Examples*

|

| 75 |

|

|

|

|

| 44 |

|

| 45 |

[NMFSAN](https://huggingface.co/Crosstyan/BPModel/blob/main/NMFSAN/README.md) so you can have different styles

|

| 46 |

|

| 47 |

+

|

| 48 |

### Native training

|

| 49 |

|

| 50 |

Trained with [Kohya trainer](https://github.com/Linaqruf/kohya-trainer)

|

|

|

|

| 61 |

|

| 62 |

|

| 63 |

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

### LoRA

|

| 67 |

|

| 68 |

Please refer to [LoRA Training Guide](https://rentry.org/lora_train)

|

| 69 |

|

|

|

|

| 72 |

- learning rate 1e-4

|

| 73 |

- batch size 6

|

| 74 |

- clip skip 2

|

| 75 |

+

- number of training steps 69700/6 (50 epochs)

|

| 76 |

|

| 77 |

*Examples*

|

| 78 |

|