---

language:

- id

- ms

license: apache-2.0

tags:

- g2p

- fill-mask

inference: false

---

# ID G2P BERT

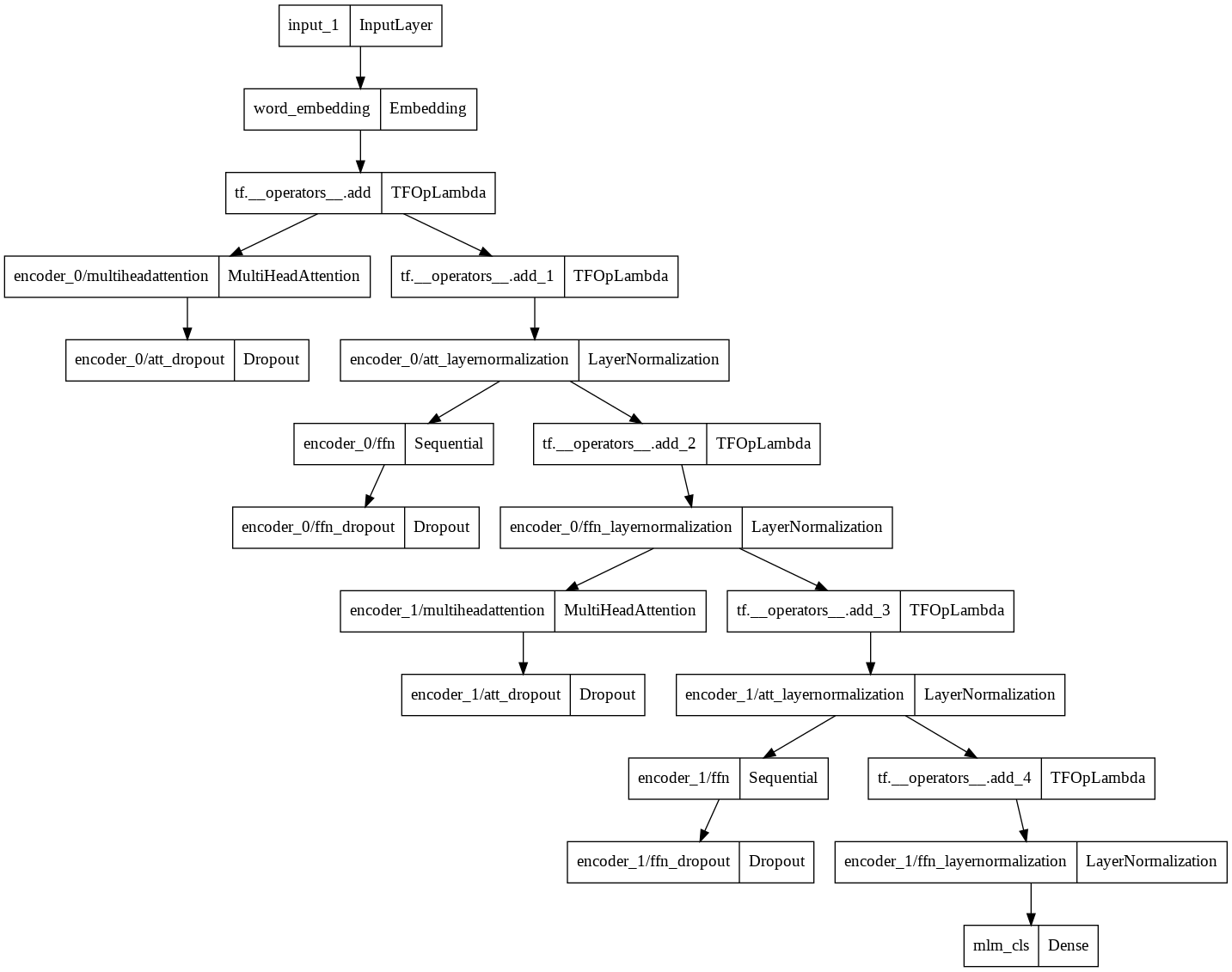

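ID G2P BERT is a phoneme de-masking model based on the [BERT](https://arxiv.org/abs/1810.04805) architecture, trained from scratch on a modified [Malay/Indonesian lexicon](https://huggingface.co/datasets/bookbot/id_word2phoneme). Given a word in which every letter `e` has been replaced by a `[mask]` token, the model predicts the phoneme at each masked position, for example distinguishing `e` from the schwa `ə`.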

This model was trained with the [Keras](https://keras.io/) framework, and all training was done on Google Colaboratory. We adapted the [BERT masked language modeling training script](https://keras.io/examples/nlp/masked_language_modeling) from the official Keras code examples.

## Model

| Model         | #params | Arch. | Training/Validation data |
| ------------- | ------- | ----- | ------------------------ |
| `id-g2p-bert` | 200K    | BERT  | Malay/Indonesian Lexicon |

## Training Procedure

### Model Config

```yaml
vocab_size: 32
max_len: 32
embed_dim: 128
num_attention_head: 2
feed_forward_dim: 128
num_layers: 2
```

### Training Setting

```yaml
batch_size: 32
optimizer: "adam"
learning_rate: 0.001
epochs: 100
```
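As a rough check on the ~200K figure in the table, the parameter count can be tallied from the config. This is a sketch that assumes the adapted script keeps the layer layout of the Keras MLM example: token and position `Embedding` layers, Keras `MultiHeadAttention` with `key_dim = embed_dim // num_heads`, two `LayerNormalization` layers and a two-`Dense` feed-forward block per encoder layer, and a `Dense(vocab_size)` softmax output head. Dropout layers add no weights. If the actual training script differs, the total will differ too.

```python
# Hyperparameters from the model config above.
VOCAB_SIZE = 32
MAX_LEN = 32
EMBED_DIM = 128
NUM_HEADS = 2
FF_DIM = 128
NUM_LAYERS = 2


def dense(n_in, n_out):
    """Kernel plus bias of a fully connected layer."""
    return n_in * n_out + n_out


def encoder_layer():
    """Parameters of one encoder block, assuming the Keras MLM example layout."""
    key_dim = EMBED_DIM // NUM_HEADS
    # Query, key and value projections: (EMBED_DIM, NUM_HEADS, key_dim) kernels plus biases.
    qkv = 3 * (EMBED_DIM * NUM_HEADS * key_dim + NUM_HEADS * key_dim)
    # Output projection from the concatenated heads back to EMBED_DIM.
    attn_out = NUM_HEADS * key_dim * EMBED_DIM + EMBED_DIM
    # Two LayerNormalization layers, each with gamma and beta vectors.
    layer_norms = 2 * (2 * EMBED_DIM)
    # Feed-forward block: Dense(FF_DIM) followed by Dense(EMBED_DIM).
    ffn = dense(EMBED_DIM, FF_DIM) + dense(FF_DIM, EMBED_DIM)
    return qkv + attn_out + layer_norms + ffn


def total_params():
    embeddings = VOCAB_SIZE * EMBED_DIM + MAX_LEN * EMBED_DIM  # token + position
    mlm_head = dense(EMBED_DIM, VOCAB_SIZE)  # softmax output over the vocabulary
    return embeddings + NUM_LAYERS * encoder_layer() + mlm_head


print(total_params())  # 211488
```

Under those assumptions the model has 211,488 weights, consistent with the rounded figure in the table.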

## How to Use

### Tokenizer

The model works on single characters. Id `0` is the padding token, `1` is `[UNK]`, and `30` is `[mask]`; `token2id` is the inverse of `id2token`.

```py
id2token = {
    0: '',
    1: '[UNK]',
    2: 'a',
    3: 'n',
    4: 'ə',
    5: 'i',
    6: 'r',
    7: 'k',
    8: 'm',
    9: 't',
    10: 'u',
    11: 'g',
    12: 's',
    13: 'b',
    14: 'p',
    15: 'l',
    16: 'd',
    17: 'o',
    18: 'e',
    19: 'h',
    20: 'c',
    21: 'y',
    22: 'j',
    23: 'w',
    24: 'f',
    25: 'v',
    26: '-',
    27: 'z',
    28: "'",
    29: 'q',
    30: '[mask]',
}

token2id = {
    '': 0,
    "'": 28,
    '-': 26,
    '[UNK]': 1,
    '[mask]': 30,
    'a': 2,
    'b': 13,
    'c': 20,
    'd': 16,
    'e': 18,
    'f': 24,
    'g': 11,
    'h': 19,
    'i': 5,
    'j': 22,
    'k': 7,
    'l': 15,
    'm': 8,
    'n': 3,
    'o': 17,
    'p': 14,
    'q': 29,
    'r': 6,
    's': 12,
    't': 9,
    'u': 10,
    'v': 25,
    'w': 23,
    'y': 21,
    'z': 27,
    'ə': 4,
}
```
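The token table can be exercised without loading the model. The sketch below rebuilds both mappings from a single list in id order, then encodes a word the same way the `inference` function does (every `e` becomes `[mask]`, and the result is padded to 32 positions). The helper names `encode` and `decode`, the lowercasing, the `[UNK]` fallback for characters outside the table, and the truncation of long words are all illustrative assumptions, not part of the original card. Note that the table lists 31 tokens while the config states `vocab_size: 32`; the sketch only uses the 31 listed tokens.

```python
# Vocabulary in id order, copied from the token table above.
VOCAB = ["", "[UNK]", "a", "n", "ə", "i", "r", "k", "m", "t", "u", "g", "s", "b",
         "p", "l", "d", "o", "e", "h", "c", "y", "j", "w", "f", "v", "-", "z", "'",
         "q", "[mask]"]
MAX_LEN = 32

id2token = dict(enumerate(VOCAB))
token2id = {tok: idx for idx, tok in id2token.items()}
PAD_ID = token2id[""]
UNK_ID = token2id["[UNK]"]


def encode(word, max_len=MAX_LEN):
    """Hypothetical helper: mask every 'e', map characters to ids, pad or truncate."""
    # Assumption: the vocabulary is lowercase, so the input is lowercased first.
    chars = ["[mask]" if c == "e" else c for c in word.lower()]
    ids = [token2id.get(c, UNK_ID) for c in chars][:max_len]
    return ids + [PAD_ID] * (max_len - len(ids))


def decode(ids):
    """Hypothetical helper: map ids back to characters, dropping padding."""
    return "".join(id2token[i] for i in ids if i != PAD_ID)


ids = encode("bel")
print(ids[:4])      # [13, 30, 15, 0]
print(decode(ids))  # b[mask]l
```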

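```py
import keras
import numpy as np


# Stand-in for the `MaskedLanguageModel` class defined in the Keras MLM training
# script. Its custom train_step is not needed for inference, so an empty subclass
# is assumed to be enough for Keras to deserialize the saved model.
class MaskedLanguageModel(keras.Model):
    pass


mlm_model = keras.models.load_model(
    "bert_mlm.h5",
    custom_objects={"MaskedLanguageModel": MaskedLanguageModel},
    compile=False,  # inference only, so skip restoring the training configuration
)

MAX_LEN = 32
mask_token_id = token2id["[mask]"]


def inference(sequence):
    # Mask every "e": the model predicts how each one is pronounced.
    chars = [c if c != "e" else "[mask]" for c in sequence]
    # Encode characters (unknown ones map to [UNK]), truncate, and right-pad to MAX_LEN.
    tokens = [token2id.get(c, token2id["[UNK]"]) for c in chars][:MAX_LEN]
    tokens += [token2id[""]] * (MAX_LEN - len(tokens))

    input_ids = np.array([tokens])
    prediction = mlm_model.predict(input_ids)

    # Positions of the [mask] tokens in the single input sequence.
    masked_index = np.where(input_ids[0] == mask_token_id)[0]

    # Most likely token at each masked position only.
    predicted_ids = np.argmax(prediction[0][masked_index], axis=1)

    # Replace each mask with its predicted token, then drop padding.
    for idx, pred in zip(masked_index, predicted_ids):
        tokens[idx] = int(pred)
    return "".join(id2token[t] for t in tokens if t != token2id[""])


print(inference("mengembangkannya"))
```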
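The `inference` function takes an unconstrained argmax over the whole vocabulary at each masked position, so in principle it can return any token. Because the only masked letter is `e`, one possible variation (not part of the original card) is to restrict the choice at each mask to `e` (id 18) or `ə` (id 4). The sketch below shows that restriction with NumPy on made-up probabilities rather than real model output; `fill_masks_constrained` is a hypothetical helper name, and `probs` stands in for one row of `mlm_model.predict(...)`.

```python
import numpy as np

# Ids from the token table above.
MASK_ID = 30
CANDIDATE_IDS = np.array([18, 4])  # "e" and "ə"


def fill_masks_constrained(input_ids, probs):
    """Replace each [mask] with whichever of "e"/"ə" scores higher at that position.

    input_ids: int array of shape (max_len,)
    probs: float array of shape (max_len, vocab_size)
    """
    filled = input_ids.copy()
    masked_positions = np.flatnonzero(input_ids == MASK_ID)
    # Keep only the two candidate columns for each masked row, then pick the larger.
    candidate_scores = probs[masked_positions][:, CANDIDATE_IDS]
    filled[masked_positions] = CANDIDATE_IDS[candidate_scores.argmax(axis=-1)]
    return filled


# Toy input "b[mask]l" padded to length 5, with made-up probabilities.
input_ids = np.array([13, 30, 15, 0, 0])
probs = np.full((5, 31), 0.01)
probs[1, 2] = 0.5   # "a" would win an unconstrained argmax...
probs[1, 4] = 0.3   # ...but "ə" beats "e" among the two candidates
probs[1, 18] = 0.1
print(fill_masks_constrained(input_ids, probs).tolist())  # [13, 4, 15, 0, 0]
```

The restriction guarantees that every masked `e` comes back as either `e` or `ə`, at the cost of ignoring whatever else the model might have predicted.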

## Authors

ID G2P BERT was trained and evaluated by [Ananto Joyoadikusumo](https://anantoj.github.io/), [Steven Limcorn](https://stevenlimcorn.github.io/), and [Wilson Wongso](https://w11wo.github.io/). All computation and development were done on Google Colaboratory.

## Framework versions

- Keras 2.8.0

- TensorFlow 2.8.0