Commit 38057e4

1 Parent(s): e34adac

Upload 1671 files

- .gitattributes +6 -0

- CircumSpect/object_detection/__pycache__/detect_objects.cpython-39.pyc +0 -0

- CircumSpect/object_detection/detect_objects.py +1 -1

- SoundScribe/transcribe.py +1 -3

- api_host.py +63 -110

- crystal.py +5 -4

- database/current_frame.jpg +0 -0

- database/input.txt +1 -1

- database/notes.txt +0 -0

- database/recording.wav +0 -0

- requirements.txt +1 -1

- utils.py +19 -7

.gitattributes

ADDED

|

@@ -0,0 +1,6 @@

|

| 1 |

+

CircumSpect/object_detection/weights/groundingdino_swint_ogc.pth filter=lfs diff=lfs merge=lfs -text

|

| 2 |

+

Perceptrix/finetune/scripts/eval/local_data/reading_comprehension/coqa.jsonl filter=lfs diff=lfs merge=lfs -text

|

| 3 |

+

Perceptrix/finetune/scripts/eval/local_data/reading_comprehension/narrative_qa.jsonl filter=lfs diff=lfs merge=lfs -text

|

| 4 |

+

Perceptrix/finetune/scripts/eval/local_data/symbolic_problem_solving/bigbench_elementary_math_qa.jsonl filter=lfs diff=lfs merge=lfs -text

|

| 5 |

+

SoundScribe/SpeakerID/tools/speech_data_explorer/screenshot.png filter=lfs diff=lfs merge=lfs -text

|

| 6 |

+

SoundScribe/voices/Vatsal.wav filter=lfs diff=lfs merge=lfs -text

|
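All six added `.gitattributes` entries carry `filter=lfs`, which routes those files through Git LFS. As a rough illustration of how such entries can be read, the sketch below lists the path patterns marked `filter=lfs`. The function name `lfs_tracked_paths` and the `README.md` sample line are hypothetical, and the parser deliberately ignores quoting and glob semantics.

```python
def lfs_tracked_paths(gitattributes_text):
    """Return the path patterns whose attributes include filter=lfs."""
    tracked = []
    for line in gitattributes_text.splitlines():
        line = line.strip()
        # Skip blank lines and comments.
        if not line or line.startswith("#"):
            continue
        # First token is the pattern, the rest are attributes.
        pattern, *attrs = line.split()
        if "filter=lfs" in attrs:
            tracked.append(pattern)
    return tracked


# One entry from the diff plus a hypothetical non-LFS entry for contrast.
sample = """\
SoundScribe/voices/Vatsal.wav filter=lfs diff=lfs merge=lfs -text
README.md text
"""
print(lfs_tracked_paths(sample))
```

This splits on whitespace only, so it would misread patterns that contain quoted spaces.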

CircumSpect/object_detection/__pycache__/detect_objects.cpython-39.pyc

CHANGED

|

Binary files a/CircumSpect/object_detection/__pycache__/detect_objects.cpython-39.pyc and b/CircumSpect/object_detection/__pycache__/detect_objects.cpython-39.pyc differ

|

|

|

CircumSpect/object_detection/detect_objects.py

CHANGED

|

@@ -83,5 +83,5 @@ def locate_object(objects, image):

|

|

| 83 |

|

| 84 |

if __name__ == "__main__":

|

| 85 |

frame, coord = locate_object(

|

| 86 |

-

"https://images.nationalgeographic.org/image/upload/v1638890052/EducationHub/photos/robots-3d-landing-page.jpg"

|

| 87 |

print(coord)

|

|

|

|

| 83 |

|

| 84 |

if __name__ == "__main__":

|

| 85 |

frame, coord = locate_object(

|

| 86 |

+

"drawer", "https://images.nationalgeographic.org/image/upload/v1638890052/EducationHub/photos/robots-3d-landing-page.jpg")

|

| 87 |

print(coord)

|

SoundScribe/transcribe.py

CHANGED

|

@@ -26,7 +26,6 @@ queued = False

|

|

| 26 |

def transcribe(audio):

|

| 27 |

result = model.transcribe(audio)

|

| 28 |

transcription = result['text']

|

| 29 |

-

print(transcription)

|

| 30 |

# user = find_user("database/recording.wav")

|

| 31 |

user = "Vatsal"

|

| 32 |

if user != "Crystal":

|

|

@@ -73,8 +72,7 @@ def listen(model, stream):

|

|

| 73 |

if queued:

|

| 74 |

transcription()

|

| 75 |

queued = False

|

| 76 |

-

|

| 77 |

-

print("No audio found")

|

| 78 |

silence_duration = 0

|

| 79 |

output_file.close()

|

| 80 |

audio_data = None

|

|

|

|

| 26 |

def transcribe(audio):

|

| 27 |

result = model.transcribe(audio)

|

| 28 |

transcription = result['text']

|

|

|

|

| 29 |

# user = find_user("database/recording.wav")

|

| 30 |

user = "Vatsal"

|

| 31 |

if user != "Crystal":

|

|

|

|

| 72 |

if queued:

|

| 73 |

transcription()

|

| 74 |

queued = False

|

| 75 |

+

|

|

|

|

| 76 |

silence_duration = 0

|

| 77 |

output_file.close()

|

| 78 |

audio_data = None

|

api_host.py

CHANGED

|

@@ -9,134 +9,87 @@ import os

|

|

| 9 |

|

| 10 |

model = whisper.load_model("base")

|

| 11 |

|

| 12 |

-

|

| 13 |

def transcribe(audio):

|

| 14 |

result = model.transcribe(audio)

|

| 15 |

transcription = result['text']

|

| 16 |

print(transcription)

|

| 17 |

return transcription

|

| 18 |

|

| 19 |

-

|

| 20 |

app = Flask(__name__)

|

| 21 |

|

| 22 |

|

| 23 |

-

|

| 24 |

-

def display_image():

|

| 25 |

try:

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

image_data = np.array(image_data, dtype=np.uint8)

|

| 30 |

-

image = cv2.imdecode(image_data, cv2.IMREAD_COLOR)

|

| 31 |

-

|

| 32 |

-

cv2.imwrite('API.jpg', image)

|

| 33 |

-

answer, annotated_image = locate_object(prompt, "API.jpg")

|

| 34 |

-

|

| 35 |

-

return jsonify({'message': answer, 'annotated_image': annotated_image})

|

| 36 |

-

|

| 37 |

except Exception as e:

|

|

|

|

| 38 |

return jsonify({'error': str(e)})

|

| 39 |

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

|

| 43 |

try:

|

| 44 |

-

|

| 45 |

-

|

| 46 |

-

|

| 47 |

-

|

| 48 |

-

|

| 49 |

-

|

| 50 |

-

cv2.imwrite('API.jpg', image)

|

| 51 |

-

answer = answer_question(prompt, "API.jpg")

|

| 52 |

-

|

| 53 |

-

return jsonify({'message': answer})

|

| 54 |

-

|

| 55 |

except Exception as e:

|

| 56 |

-

|

| 57 |

-

|

| 58 |

-

|

| 59 |

-

@app.route('/object_description', methods=['POST'])

|

| 60 |

-

def display_image():

|

| 61 |

-

try:

|

| 62 |

-

image_data = request.json['image_data']

|

| 63 |

-

|

| 64 |

-

image_data = np.array(image_data, dtype=np.uint8)

|

| 65 |

-

image = cv2.imdecode(image_data, cv2.IMREAD_COLOR)

|

| 66 |

-

|

| 67 |

-

cv2.imwrite('API.jpg', image)

|

| 68 |

-

answer = find_object_description("API.jpg")

|

| 69 |

-

|

| 70 |

-

return jsonify({'message': answer})

|

| 71 |

-

|

| 72 |

-

except Exception as e:

|

| 73 |

-

return jsonify({'error': str(e)})

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

@app.route('/perceptrix', methods=['POST'])

|

| 77 |

-

def display_image():

|

| 78 |

-

try:

|

| 79 |

-

prompt = request.json['prompt']

|

| 80 |

-

|

| 81 |

-

answer = perceptrix(prompt)

|

| 82 |

-

|

| 83 |

-

return jsonify({'message': answer})

|

| 84 |

-

|

| 85 |

-

except Exception as e:

|

| 86 |

-

return jsonify({'error': str(e)})

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

@app.route('/robotix', methods=['POST'])

|

| 90 |

-

def display_image():

|

| 91 |

-

try:

|

| 92 |

-

prompt = request.json['prompt']

|

| 93 |

-

|

| 94 |

-

answer = robotix(prompt)

|

| 95 |

-

|

| 96 |

-

return jsonify({'message': answer})

|

| 97 |

-

|

| 98 |

-

except Exception as e:

|

| 99 |

-

return jsonify({'error': str(e)})

|

| 100 |

-

|

| 101 |

-

|

| 102 |

-

@app.route('/search_keyword', methods=['POST'])

|

| 103 |

-

def display_image():

|

| 104 |

-

try:

|

| 105 |

-

prompt = request.json['prompt']

|

| 106 |

-

|

| 107 |

-

answer = search_keyword(prompt)

|

| 108 |

-

|

| 109 |

-

return jsonify({'message': answer})

|

| 110 |

-

|

| 111 |

-

except Exception as e:

|

| 112 |

-

return jsonify({'error': str(e)})

|

| 113 |

-

|

| 114 |

-

|

| 115 |

-

@app.route('/identify_objects_from_text', methods=['POST'])

|

| 116 |

-

def display_image():

|

| 117 |

-

try:

|

| 118 |

-

prompt = request.json['prompt']

|

| 119 |

-

|

| 120 |

-

answer = identify_objects_from_text(prompt)

|

| 121 |

-

|

| 122 |

-

return jsonify({'message': answer})

|

| 123 |

-

|

| 124 |

-

except Exception as e:

|

| 125 |

-

return jsonify({'error': str(e)})

|

| 126 |

-

|

| 127 |

-

|

| 128 |

-

@app.route('/transcribe', methods=['POST'])

|

| 129 |

-

def upload_audio():

|

| 130 |

-

audio_file = request.files['audio']

|

| 131 |

-

|

| 132 |

-

filename = os.path.join("database", audio_file.filename)

|

| 133 |

-

audio_file.save(filename)

|

| 134 |

-

return jsonify({'message': transcribe(filename)})

|

| 135 |

-

|

| 136 |

|

| 137 |

def run_app():

|

| 138 |

app.run(port=7777)

|

| 139 |

|

| 140 |

if __name__ == "__main__":

|

| 141 |

runner = threading.Thread(target=run_app)

|

| 142 |

-

runner.start()

|

|

|

|

| 9 |

|

| 10 |

model = whisper.load_model("base")

|

| 11 |

|

|

|

|

| 12 |

def transcribe(audio):

|

| 13 |

result = model.transcribe(audio)

|

| 14 |

transcription = result['text']

|

| 15 |

print(transcription)

|

| 16 |

return transcription

|

| 17 |

|

|

|

|

| 18 |

app = Flask(__name__)

|

| 19 |

|

| 20 |

+

@app.route('/', methods=['POST', 'GET'])

|

| 21 |

+

def home():

|

| 22 |

+

return jsonify({'message': 'WORKING'})

|

| 23 |

|

| 24 |

+

def handle_request(func, *args):

|

|

|

|

| 25 |

try:

|

| 26 |

+

result = func(*args)

|

| 27 |

+

return jsonify({'message': result})

|

| 28 |

except Exception as e:

|

| 29 |

+

print(e)

|

| 30 |

return jsonify({'error': str(e)})

|

| 31 |

|

| 32 |

+

@app.route('/locate_object', methods=['POST', 'GET'])

|

| 33 |

+

def _locate_object():

|

| 34 |

+

image_data = request.json['image']

|

| 35 |

+

prompt = request.json['prompt']

|

| 36 |

+

image_data = np.array(image_data, dtype=np.uint8)

|

| 37 |

+

image = cv2.imdecode(image_data, cv2.IMREAD_COLOR)

|

| 38 |

+

cv2.imwrite('API.jpg', image)

|

| 39 |

+

return handle_request(locate_object, prompt, "API.jpg")

|

| 40 |

+

|

| 41 |

+

@app.route('/vqa', methods=['POST', 'GET'])

|

| 42 |

+

def _vqa():

|

| 43 |

+

image_data = request.json['image']

|

| 44 |

+

prompt = request.json['prompt']

|

| 45 |

+

image_data = np.array(image_data, dtype=np.uint8)

|

| 46 |

+

image = cv2.imdecode(image_data, cv2.IMREAD_COLOR)

|

| 47 |

+

cv2.imwrite('API.jpg', image)

|

| 48 |

+

return handle_request(answer_question, prompt, "API.jpg")

|

| 49 |

+

|

| 50 |

+

@app.route('/object_description', methods=['POST', 'GET'])

|

| 51 |

+

def _object_description():

|

| 52 |

+

image_data = request.json['image']

|

| 53 |

+

image_data = np.array(image_data, dtype=np.uint8)

|

| 54 |

+

image = cv2.imdecode(image_data, cv2.IMREAD_COLOR)

|

| 55 |

+

cv2.imwrite('API.jpg', image)

|

| 56 |

+

return handle_request(find_object_description, "API.jpg")

|

| 57 |

+

|

| 58 |

+

@app.route('/perceptrix', methods=['POST', 'GET'])

|

| 59 |

+

def _perceptrix():

|

| 60 |

+

prompt = request.json['prompt']

|

| 61 |

+

return handle_request(perceptrix, prompt)

|

| 62 |

+

|

| 63 |

+

@app.route('/robotix', methods=['POST', 'GET'])

|

| 64 |

+

def _robotix():

|

| 65 |

+

prompt = request.json['prompt']

|

| 66 |

+

return handle_request(robotix, prompt)

|

| 67 |

+

|

| 68 |

+

@app.route('/search_keyword', methods=['POST', 'GET'])

|

| 69 |

+

def _search_keyword():

|

| 70 |

+

prompt = request.json['prompt']

|

| 71 |

+

return handle_request(search_keyword, prompt)

|

| 72 |

+

|

| 73 |

+

@app.route('/identify_objects_from_text', methods=['POST', 'GET'])

|

| 74 |

+

def _identify_objects_from_text():

|

| 75 |

+

prompt = request.json['prompt']

|

| 76 |

+

return handle_request(identify_objects_from_text, prompt)

|

| 77 |

+

|

| 78 |

+

@app.route('/transcribe', methods=['POST', 'GET'])

|

| 79 |

+

def _upload_audio():

|

| 80 |

try:

|

| 81 |

+

audio_file = request.files['audio']

|

| 82 |

+

filename = os.path.join("./", audio_file.filename)

|

| 83 |

+

audio_file.save(filename)

|

| 84 |

+

print("RECEIVED")

|

| 85 |

+

return jsonify({'message': transcribe(filename)})

|

| 86 |

except Exception as e:

|

| 87 |

+

print(e)

|

| 88 |

+

return jsonify({'message': "Error"})

|

| 89 |

|

| 90 |

def run_app():

|

| 91 |

app.run(port=7777)

|

| 92 |

|

| 93 |

if __name__ == "__main__":

|

| 94 |

runner = threading.Thread(target=run_app)

|

| 95 |

+

runner.start()

|
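The new `handle_request` helper in `api_host.py` replaces the try/except body that was previously repeated in every route. The sketch below reproduces only its control flow, assuming plain dicts in place of Flask's `jsonify` so that it runs without a web server. `echo_upper` and `always_fails` are hypothetical stand-ins for backends such as `perceptrix` or `robotix`.

```python
def handle_request(func, *args):
    """Call func(*args) and wrap the result or the error in a response dict."""
    try:
        return {"message": func(*args)}
    except Exception as e:  # same broad catch as in the diff
        print(e)
        return {"error": str(e)}


def echo_upper(prompt):
    # Hypothetical backend that succeeds.
    return prompt.upper()


def always_fails(prompt):
    # Hypothetical backend that raises.
    raise ValueError("model not loaded")


print(handle_request(echo_upper, "hello"))
print(handle_request(always_fails, "hello"))
```

In the diff, the `request.json[...]` lookups and the image decoding run before `handle_request` is called, so errors raised there are not covered by its try/except.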

crystal.py

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

from utils import setup_device, check_api_usage, record_chat, load_chat, get_time

|

| 2 |

-

from SoundScribe.speakerID import find_user

|

| 3 |

from SoundScribe import speak, live_listen

|

| 4 |

from internet import get_weather_data

|

| 5 |

import threading

|

|

@@ -30,7 +30,7 @@ AUTOMATION_COMAND = "Home Automation"

|

|

| 30 |

weather = None

|

| 31 |

|

| 32 |

device = setup_device()

|

| 33 |

-

print("INITIALIZING CRYSTAL -

|

| 34 |

"Acceleration" if str(device) != "cpu" else "")

|

| 35 |

|

| 36 |

|

|

@@ -51,7 +51,6 @@ def understand_surroundings():

|

|

| 51 |

while True:

|

| 52 |

current_events = answer_question(

|

| 53 |

"Describe your surroundings", "./database/current_frame.jpg")

|

| 54 |

-

|

| 55 |

time.sleep(10)

|

| 56 |

|

| 57 |

|

|

@@ -134,7 +133,8 @@ visual_processing.start()

|

|

| 134 |

|

| 135 |

while True:

|

| 136 |

full_history = load_chat()

|

| 137 |

-

username = find_user("recording.wav")

|

|

|

|

| 138 |

with open("database/notes.txt", "r") as notes:

|

| 139 |

notes = notes.read()

|

| 140 |

input_text = f"Time- {get_time()}\nWeather- {weather}\nSurroundings- {current_events}"+(f"\nNotes- {notes}" if notes else "")

|

|

@@ -171,6 +171,7 @@ while True:

|

|

| 171 |

relevant_history = f"{relevant_history}\n{username}: " + \

|

| 172 |

"\n" + input_text + "\nCRYSTAL: "

|

| 173 |

response = str(perceptrix(relevant_history))

|

|

|

|

| 174 |

with open("./database/input.txt", 'w') as clearfile:

|

| 175 |

clearfile.write("")

|

| 176 |

|

|

|

|

| 1 |

from utils import setup_device, check_api_usage, record_chat, load_chat, get_time

|

| 2 |

+

# from SoundScribe.speakerID import find_user

|

| 3 |

from SoundScribe import speak, live_listen

|

| 4 |

from internet import get_weather_data

|

| 5 |

import threading

|

|

|

|

| 30 |

weather = None

|

| 31 |

|

| 32 |

device = setup_device()

|

| 33 |

+

print("INITIALIZING CRYSTAL - DETECTED DEVICE:", str(device).upper(),

|

| 34 |

"Acceleration" if str(device) != "cpu" else "")

|

| 35 |

|

| 36 |

|

|

|

|

| 51 |

while True:

|

| 52 |

current_events = answer_question(

|

| 53 |

"Describe your surroundings", "./database/current_frame.jpg")

|

|

|

|

| 54 |

time.sleep(10)

|

| 55 |

|

| 56 |

|

|

|

|

| 133 |

|

| 134 |

while True:

|

| 135 |

full_history = load_chat()

|

| 136 |

+

# username = find_user("recording.wav")

|

| 137 |

+

username = "Vatsal"

|

| 138 |

with open("database/notes.txt", "r") as notes:

|

| 139 |

notes = notes.read()

|

| 140 |

input_text = f"Time- {get_time()}\nWeather- {weather}\nSurroundings- {current_events}"+(f"\nNotes- {notes}" if notes else "")

|

|

|

|

| 171 |

relevant_history = f"{relevant_history}\n{username}: " + \

|

| 172 |

"\n" + input_text + "\nCRYSTAL: "

|

| 173 |

response = str(perceptrix(relevant_history))

|

| 174 |

+

response = "<###CRYSTAL-INTERNAL###> Speech\n"+response

|

| 175 |

with open("./database/input.txt", 'w') as clearfile:

|

| 176 |

clearfile.write("")

|

| 177 |

|

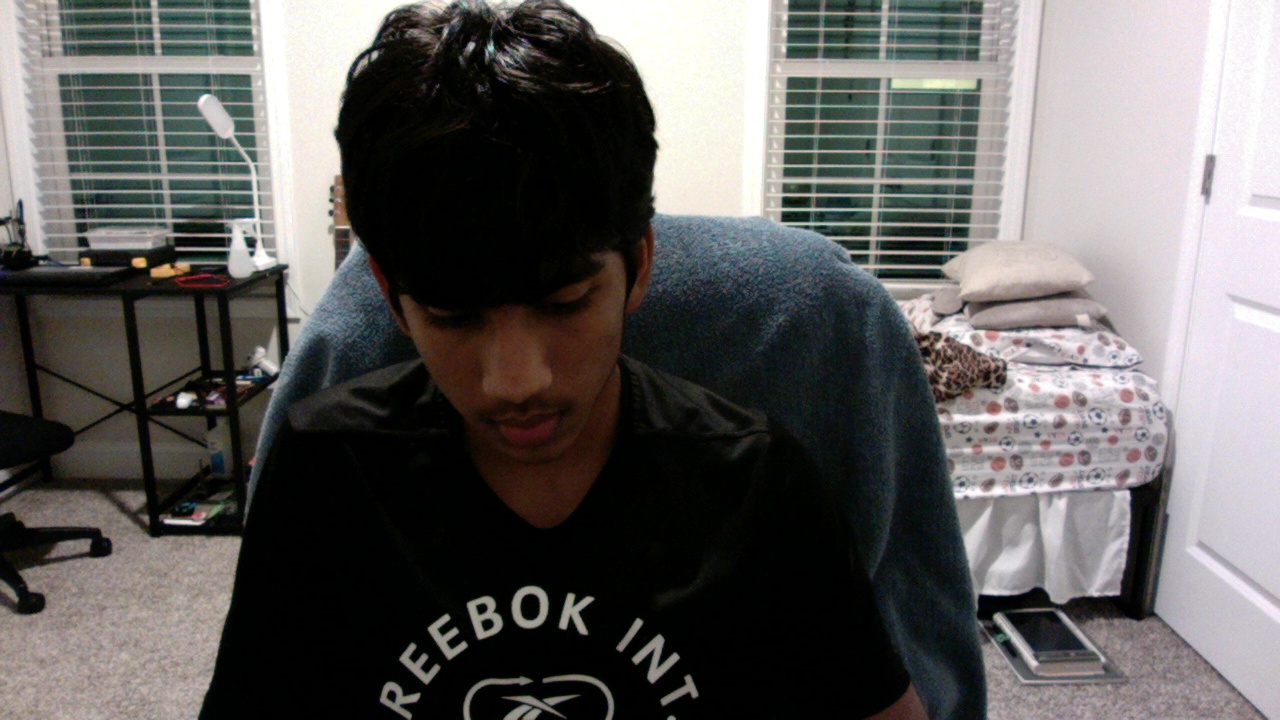

database/current_frame.jpg

CHANGED

|

|

database/input.txt

CHANGED

|

@@ -1 +1 @@

|

|

| 1 |

-

|

|

|

|

| 1 |

+

Hello. Can you hear me?

|

database/notes.txt

ADDED

|

File without changes

|

database/recording.wav

CHANGED

|

Binary files a/database/recording.wav and b/database/recording.wav differ

|

|

|

requirements.txt

CHANGED

|

@@ -1,3 +1,4 @@

|

|

|

|

|

| 1 |

absl-py

|

| 2 |

accelerate

|

| 3 |

addict

|

|

@@ -109,7 +110,6 @@ gql

|

|

| 109 |

graphql-core

|

| 110 |

greenlet

|

| 111 |

grpcio

|

| 112 |

-

gTTS

|

| 113 |

gunicorn

|

| 114 |

h11

|

| 115 |

h2

|

|

|

|

| 1 |

+

git+https://github.com/coqui-ai/TTS

|

| 2 |

absl-py

|

| 3 |

accelerate

|

| 4 |

addict

|

|

|

|

| 110 |

graphql-core

|

| 111 |

greenlet

|

| 112 |

grpcio

|

|

|

|

| 113 |

gunicorn

|

| 114 |

h11

|

| 115 |

h2

|

utils.py

CHANGED

|

@@ -58,6 +58,11 @@ def check_api_usage():

|

|

| 58 |

"\t2. Use On-Device Calculations\n"

|

| 59 |

"Enter your choice (1/2): ")

|

| 60 |

USE_CLOUD_API = choice == "1"

|

| 61 |

else:

|

| 62 |

print("CRYSTAL Cloud API not reachable.")

|

| 63 |

else:

|

|

@@ -68,7 +73,7 @@ def check_api_usage():

|

|

| 68 |

|

| 69 |

|

| 70 |

def perceptrix(prompt):

|

| 71 |

-

url = API_URL+"perceptrix

|

| 72 |

|

| 73 |

payload = {'prompt': prompt}

|

| 74 |

headers = {'Content-Type': 'application/json'}

|

|

@@ -78,7 +83,7 @@ def perceptrix(prompt):

|

|

| 78 |

|

| 79 |

|

| 80 |

def robotix(prompt):

|

| 81 |

-

url = API_URL+"robotix

|

| 82 |

|

| 83 |

payload = {'prompt': prompt}

|

| 84 |

headers = {'Content-Type': 'application/json'}

|

|

@@ -88,7 +93,7 @@ def robotix(prompt):

|

|

| 88 |

|

| 89 |

|

| 90 |

def identify_objects_from_text(prompt):

|

| 91 |

-

url = API_URL+"identify_objects_from_text

|

| 92 |

|

| 93 |

payload = {'prompt': prompt}

|

| 94 |

headers = {'Content-Type': 'application/json'}

|

|

@@ -98,7 +103,7 @@ def identify_objects_from_text(prompt):

|

|

| 98 |

|

| 99 |

|

| 100 |

def search_keyword(prompt):

|

| 101 |

-

url = API_URL+"search_keyword

|

| 102 |

|

| 103 |

payload = {'prompt': prompt}

|

| 104 |

headers = {'Content-Type': 'application/json'}

|

|

@@ -108,7 +113,10 @@ def search_keyword(prompt):

|

|

| 108 |

|

| 109 |

|

| 110 |

def answer_question(prompt, frame):

|

| 111 |

-

url = API_URL+"vqa

|

| 112 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 113 |

image = image_data.tolist()

|

| 114 |

|

|

@@ -121,7 +129,9 @@ def answer_question(prompt, frame):

|

|

| 121 |

|

| 122 |

|

| 123 |

def find_object_description(prompt, frame):

|

| 124 |

-

url = API_URL+"object_description

|

| 125 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 126 |

image = image_data.tolist()

|

| 127 |

|

|

@@ -134,7 +144,9 @@ def find_object_description(prompt, frame):

|

|

| 134 |

|

| 135 |

|

| 136 |

def locate_object(prompt, frame):

|

| 137 |

-

url = API_URL+"locate_object

|

| 138 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 139 |

image = image_data.tolist()

|

| 140 |

|

|

|

|

| 58 |

"\t2. Use On-Device Calculations\n"

|

| 59 |

"Enter your choice (1/2): ")

|

| 60 |

USE_CLOUD_API = choice == "1"

|

| 61 |

+

if USE_CLOUD_API:

|

| 62 |

+

print("RUNNING ON CLOUD")

|

| 63 |

+

else:

|

| 64 |

+

print("RUNNING LOCALLY")

|

| 65 |

+

|

| 66 |

else:

|

| 67 |

print("CRYSTAL Cloud API not reachable.")

|

| 68 |

else:

|

|

|

|

| 73 |

|

| 74 |

|

| 75 |

def perceptrix(prompt):

|

| 76 |

+

url = API_URL+"perceptrix"

|

| 77 |

|

| 78 |

payload = {'prompt': prompt}

|

| 79 |

headers = {'Content-Type': 'application/json'}

|

|

|

|

| 83 |

|

| 84 |

|

| 85 |

def robotix(prompt):

|

| 86 |

+

url = API_URL+"robotix"

|

| 87 |

|

| 88 |

payload = {'prompt': prompt}

|

| 89 |

headers = {'Content-Type': 'application/json'}

|

|

|

|

| 93 |

|

| 94 |

|

| 95 |

def identify_objects_from_text(prompt):

|

| 96 |

+

url = API_URL+"identify_objects_from_text"

|

| 97 |

|

| 98 |

payload = {'prompt': prompt}

|

| 99 |

headers = {'Content-Type': 'application/json'}

|

|

|

|

| 103 |

|

| 104 |

|

| 105 |

def search_keyword(prompt):

|

| 106 |

+

url = API_URL+"search_keyword"

|

| 107 |

|

| 108 |

payload = {'prompt': prompt}

|

| 109 |

headers = {'Content-Type': 'application/json'}

|

|

|

|

| 113 |

|

| 114 |

|

| 115 |

def answer_question(prompt, frame):

|

| 116 |

+

url = API_URL+"vqa"

|

| 117 |

+

if type(frame) == str:

|

| 118 |

+

frame = cv2.imread(frame)

|

| 119 |

+

|

| 120 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 121 |

image = image_data.tolist()

|

| 122 |

|

|

|

|

| 129 |

|

| 130 |

|

| 131 |

def find_object_description(prompt, frame):

|

| 132 |

+

url = API_URL+"object_description"

|

| 133 |

+

if type(frame) == str:

|

| 134 |

+

frame = cv2.imread(frame)

|

| 135 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 136 |

image = image_data.tolist()

|

| 137 |

|

|

|

|

| 144 |

|

| 145 |

|

| 146 |

def locate_object(prompt, frame):

|

| 147 |

+

url = API_URL+"locate_object"

|

| 148 |

+

if type(frame) == str:

|

| 149 |

+

frame = cv2.imread(frame)

|

| 150 |

_, image_data = cv2.imencode('.jpg', frame)

|

| 151 |

image = image_data.tolist()

|

| 152 |

|
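The `if type(frame) == str` branches added to `answer_question`, `find_object_description`, and `locate_object` let callers pass either a file path or an already decoded image. A minimal sketch of the same idea follows, assuming an injected loader so that it runs without OpenCV. `ensure_frame` and `fake_imread` are hypothetical names, and `isinstance` is used here as a common alternative to the `type(...) ==` comparison in the diff.

```python
def ensure_frame(frame, loader):
    """Return a decoded frame, loading it first when given a path string."""
    if isinstance(frame, str):
        return loader(frame)
    return frame


def fake_imread(path):
    # Hypothetical stand-in for cv2.imread: returns a tiny 1x1 "image".
    return [[(0, 0, 0)]]


already_decoded = [[(255, 255, 255)]]
print(ensure_frame("database/current_frame.jpg", fake_imread))
print(ensure_frame(already_decoded, fake_imread))
```

With the real `cv2.imread`, an unreadable path yields `None` rather than an exception, so a `None` check before `cv2.imencode` may be worth adding.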