Upload extensions using SD-Hub extension

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +144 -0

- extensions/1-sd-dynamic-thresholding/.github/FUNDING.yml +1 -0

- extensions/1-sd-dynamic-thresholding/.github/workflows/publish.yml +21 -0

- extensions/1-sd-dynamic-thresholding/.gitignore +1 -0

- extensions/1-sd-dynamic-thresholding/LICENSE.txt +21 -0

- extensions/1-sd-dynamic-thresholding/README.md +120 -0

- extensions/1-sd-dynamic-thresholding/__init__.py +6 -0

- extensions/1-sd-dynamic-thresholding/__pycache__/dynthres_core.cpython-310.pyc +0 -0

- extensions/1-sd-dynamic-thresholding/__pycache__/dynthres_unipc.cpython-310.pyc +0 -0

- extensions/1-sd-dynamic-thresholding/dynthres_comfyui.py +86 -0

- extensions/1-sd-dynamic-thresholding/dynthres_core.py +167 -0

- extensions/1-sd-dynamic-thresholding/dynthres_unipc.py +111 -0

- extensions/1-sd-dynamic-thresholding/github/cat_demo_1.jpg +0 -0

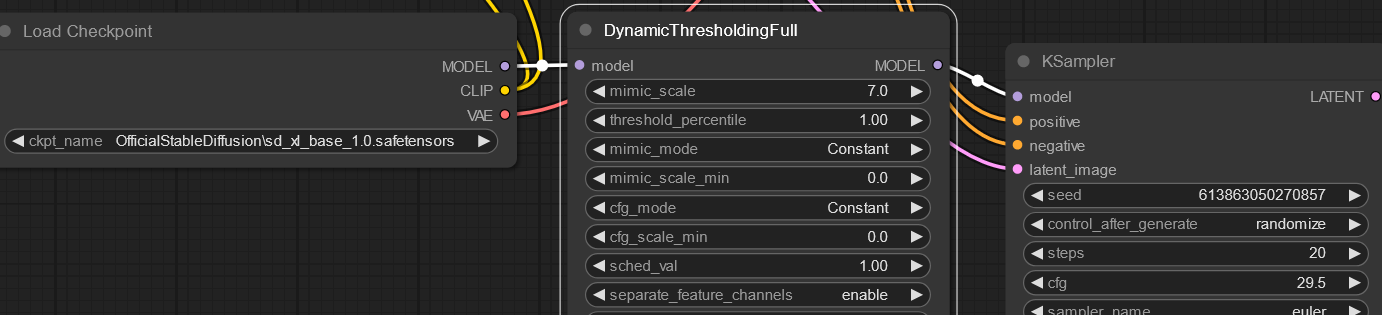

- extensions/1-sd-dynamic-thresholding/github/comfy_node.png +0 -0

- extensions/1-sd-dynamic-thresholding/github/grid_preview.png +0 -0

- extensions/1-sd-dynamic-thresholding/github/ui.png +0 -0

- extensions/1-sd-dynamic-thresholding/javascript/active.js +68 -0

- extensions/1-sd-dynamic-thresholding/pyproject.toml +13 -0

- extensions/1-sd-dynamic-thresholding/scripts/__pycache__/dynamic_thresholding.cpython-310.pyc +0 -0

- extensions/1-sd-dynamic-thresholding/scripts/dynamic_thresholding.py +270 -0

- extensions/ABG_extension/.gitignore +1 -0

- extensions/ABG_extension/README.md +32 -0

- extensions/ABG_extension/install.py +11 -0

- extensions/ABG_extension/scripts/__pycache__/app.cpython-310.pyc +0 -0

- extensions/ABG_extension/scripts/app.py +183 -0

- extensions/Automatic1111-Geeky-Remb/LICENSE +21 -0

- extensions/Automatic1111-Geeky-Remb/README.md +258 -0

- extensions/Automatic1111-Geeky-Remb/__init__.py +4 -0

- extensions/Automatic1111-Geeky-Remb/install.py +7 -0

- extensions/Automatic1111-Geeky-Remb/requirements.txt +6 -0

- extensions/Automatic1111-Geeky-Remb/scripts/__pycache__/geeky-remb.cpython-310.pyc +0 -0

- extensions/Automatic1111-Geeky-Remb/scripts/geeky-remb.py +475 -0

- extensions/CFGRescale_For_Forge/LICENSE +21 -0

- extensions/CFGRescale_For_Forge/README.md +6 -0

- extensions/CFGRescale_For_Forge/extensions-builtin/sd_forge_cfgrescale/scripts/__pycache__/forge_cfgrescale.cpython-310.pyc +0 -0

- extensions/CFGRescale_For_Forge/extensions-builtin/sd_forge_cfgrescale/scripts/forge_cfgrescale.py +45 -0

- extensions/CFGRescale_For_Forge/ldm_patched/contrib/external_cfgrescale.py +44 -0

- extensions/CFG_Rescale_webui/.gitignore +160 -0

- extensions/CFG_Rescale_webui/LICENSE +21 -0

- extensions/CFG_Rescale_webui/README.md +10 -0

- extensions/CFG_Rescale_webui/scripts/CFGRescale.py +225 -0

- extensions/CFG_Rescale_webui/scripts/__pycache__/CFGRescale.cpython-310.pyc +0 -0

- extensions/CharacteristicGuidanceWebUI/CHGextension_pic.PNG +0 -0

- extensions/CharacteristicGuidanceWebUI/LICENSE +201 -0

- extensions/CharacteristicGuidanceWebUI/README.md +184 -0

- extensions/CharacteristicGuidanceWebUI/scripts/CHGextension.py +439 -0

- extensions/CharacteristicGuidanceWebUI/scripts/CharaIte.py +530 -0

- extensions/CharacteristicGuidanceWebUI/scripts/__pycache__/CHGextension.cpython-310.pyc +0 -0

- extensions/CharacteristicGuidanceWebUI/scripts/__pycache__/CharaIte.cpython-310.pyc +0 -0

- extensions/CharacteristicGuidanceWebUI/scripts/__pycache__/forge_CHG.cpython-310.pyc +0 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,147 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

extensions/advanced_euler_sampler_extension/img/xyz_grid-0000-114514.jpg filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

extensions/artjiggler/thesaurus.jsonl filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

extensions/canvas-zoom/dist/templates/frontend/assets/index-0c8f6dbd.js.map filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

extensions/canvas-zoom/dist/v1_1_v1_5_1/templates/frontend/assets/index-2a280c06.js.map filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

extensions/diffusion-noise-alternatives-webui/images/ColorGrade.png filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

extensions/diffusion-noise-alternatives-webui/images/GrainCompare.png filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

extensions/latent-upscale/assets/default.png filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

extensions/latent-upscale/assets/img2img_latent_upscale_process.png filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

extensions/latent-upscale/assets/nearest-exact-normal1.png filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

extensions/latent-upscale/assets/nearest-exact-normal2.png filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

extensions/latent-upscale/assets/nearest-exact-simple1.png filter=lfs diff=lfs merge=lfs -text

|

| 47 |

+

extensions/latent-upscale/assets/nearest-exact-simple2.png filter=lfs diff=lfs merge=lfs -text

|

| 48 |

+

extensions/latent-upscale/assets/nearest-exact-simple8.png filter=lfs diff=lfs merge=lfs -text

|

| 49 |

+

extensions/sd-canvas-editor/doc/images/overall.png filter=lfs diff=lfs merge=lfs -text

|

| 50 |

+

extensions/sd-canvas-editor/doc/images/panels.png filter=lfs diff=lfs merge=lfs -text

|

| 51 |

+

extensions/sd-canvas-editor/doc/images/photos.png filter=lfs diff=lfs merge=lfs -text

|

| 52 |

+

extensions/sd-civitai-browser-plus_fix/aria2/lin/aria2 filter=lfs diff=lfs merge=lfs -text

|

| 53 |

+

extensions/sd-civitai-browser-plus_fix/aria2/win/aria2.exe filter=lfs diff=lfs merge=lfs -text

|

| 54 |

+

extensions/sd-webui-Lora-queue-helper/docs/output_sample.png filter=lfs diff=lfs merge=lfs -text

|

| 55 |

+

extensions/sd-webui-agentattention/samples/xyz_grid-2415-1-desk.png filter=lfs diff=lfs merge=lfs -text

|

| 56 |

+

extensions/sd-webui-agentattention/samples/xyz_grid-2417-1-goldfinch,[[:space:]]Carduelis[[:space:]]carduelis.png filter=lfs diff=lfs merge=lfs -text

|

| 57 |

+

extensions/sd-webui-agentattention/samples/xyz_grid-2418-1-bell[[:space:]]pepper.png filter=lfs diff=lfs merge=lfs -text

|

| 58 |

+

extensions/sd-webui-agentattention/samples/xyz_grid-2419-1-bicycle.png filter=lfs diff=lfs merge=lfs -text

|

| 59 |

+

extensions/sd-webui-agentattention/samples/xyz_grid-2428-1-desk.jpg filter=lfs diff=lfs merge=lfs -text

|

| 60 |

+

extensions/sd-webui-birefnet/sd-webui-birefnet.png filter=lfs diff=lfs merge=lfs -text

|

| 61 |

+

extensions/sd-webui-cads/samples/comparison.png filter=lfs diff=lfs merge=lfs -text

|

| 62 |

+

extensions/sd-webui-cads/samples/grid-7069.png filter=lfs diff=lfs merge=lfs -text

|

| 63 |

+

extensions/sd-webui-cads/samples/grid-7070.png filter=lfs diff=lfs merge=lfs -text

|

| 64 |

+

extensions/sd-webui-cardmaster/docked-detail-view.png filter=lfs diff=lfs merge=lfs -text

|

| 65 |

+

extensions/sd-webui-cardmaster/preview.gif filter=lfs diff=lfs merge=lfs -text

|

| 66 |

+

extensions/sd-webui-cardmaster/toma-chan[[:space:]]alt.png filter=lfs diff=lfs merge=lfs -text

|

| 67 |

+

extensions/sd-webui-cardmaster/toma-chan.png filter=lfs diff=lfs merge=lfs -text

|

| 68 |

+

extensions/sd-webui-cutoff/images/cover.jpg filter=lfs diff=lfs merge=lfs -text

|

| 69 |

+

extensions/sd-webui-cutoff/images/sample-1.png filter=lfs diff=lfs merge=lfs -text

|

| 70 |

+

extensions/sd-webui-cutoff/images/sample-2.png filter=lfs diff=lfs merge=lfs -text

|

| 71 |

+

extensions/sd-webui-cutoff/images/sample-3.png filter=lfs diff=lfs merge=lfs -text

|

| 72 |

+

extensions/sd-webui-cutoff/images/sample-4.png filter=lfs diff=lfs merge=lfs -text

|

| 73 |

+

extensions/sd-webui-cutoff/images/sample-4_small.png filter=lfs diff=lfs merge=lfs -text

|

| 74 |

+

extensions/sd-webui-cutoff/images/sample-5.png filter=lfs diff=lfs merge=lfs -text

|

| 75 |

+

extensions/sd-webui-cutoff/images/sample-5_small.png filter=lfs diff=lfs merge=lfs -text

|

| 76 |

+

extensions/sd-webui-diffusion-cg/examples/xl_off.jpg filter=lfs diff=lfs merge=lfs -text

|

| 77 |

+

extensions/sd-webui-diffusion-cg/examples/xl_on.jpg filter=lfs diff=lfs merge=lfs -text

|

| 78 |

+

extensions/sd-webui-ditail/assets/Intro-a.png filter=lfs diff=lfs merge=lfs -text

|

| 79 |

+

extensions/sd-webui-ditail/assets/Intro-b.png filter=lfs diff=lfs merge=lfs -text

|

| 80 |

+

extensions/sd-webui-ditail/assets/Intro-vertical.png filter=lfs diff=lfs merge=lfs -text

|

| 81 |

+

extensions/sd-webui-ditail/assets/Intro.png filter=lfs diff=lfs merge=lfs -text

|

| 82 |

+

extensions/sd-webui-dycfg/images/05.png filter=lfs diff=lfs merge=lfs -text

|

| 83 |

+

extensions/sd-webui-dycfg/images/09.png filter=lfs diff=lfs merge=lfs -text

|

| 84 |

+

extensions/sd-webui-fabric/static/example_2_default.png filter=lfs diff=lfs merge=lfs -text

|

| 85 |

+

extensions/sd-webui-fabric/static/example_2_feedback.png filter=lfs diff=lfs merge=lfs -text

|

| 86 |

+

extensions/sd-webui-fabric/static/example_3_10.png filter=lfs diff=lfs merge=lfs -text

|

| 87 |

+

extensions/sd-webui-fabric/static/example_3_feedback.png filter=lfs diff=lfs merge=lfs -text

|

| 88 |

+

extensions/sd-webui-fabric/static/fabric_demo.gif filter=lfs diff=lfs merge=lfs -text

|

| 89 |

+

extensions/sd-webui-image-comparison/tab.gif filter=lfs diff=lfs merge=lfs -text

|

| 90 |

+

extensions/sd-webui-img2txt/sd-webui-img2txt.gif filter=lfs diff=lfs merge=lfs -text

|

| 91 |

+

extensions/sd-webui-incantations/images/xyz_grid-0463-3.jpg filter=lfs diff=lfs merge=lfs -text

|

| 92 |

+

extensions/sd-webui-incantations/images/xyz_grid-0469-4.jpg filter=lfs diff=lfs merge=lfs -text

|

| 93 |

+

extensions/sd-webui-incantations/images/xyz_grid-2652-1419902843-cinematic[[:space:]]4K[[:space:]]photo[[:space:]]of[[:space:]]a[[:space:]]dog[[:space:]]riding[[:space:]]a[[:space:]]bus[[:space:]]and[[:space:]]eating[[:space:]]cake[[:space:]]and[[:space:]]wearing[[:space:]]headphones.png filter=lfs diff=lfs merge=lfs -text

|

| 94 |

+

extensions/sd-webui-incantations/images/xyz_grid-2660-1590472902-A[[:space:]]photo[[:space:]]of[[:space:]]a[[:space:]]lion[[:space:]]and[[:space:]]a[[:space:]]grizzly[[:space:]]bear[[:space:]]and[[:space:]]a[[:space:]]tiger[[:space:]]in[[:space:]]the[[:space:]]woods.jpg filter=lfs diff=lfs merge=lfs -text

|

| 95 |

+

extensions/sd-webui-incantations/images/xyz_grid-3040-1-a[[:space:]]puppy[[:space:]]and[[:space:]]a[[:space:]]kitten[[:space:]]on[[:space:]]the[[:space:]]moon.png filter=lfs diff=lfs merge=lfs -text

|

| 96 |

+

extensions/sd-webui-incantations/images/xyz_grid-3348-1590472902-A[[:space:]]photo[[:space:]]of[[:space:]]a[[:space:]]lion[[:space:]]and[[:space:]]a[[:space:]]grizzly[[:space:]]bear[[:space:]]and[[:space:]]a[[:space:]]tiger[[:space:]]in[[:space:]]the[[:space:]]woods.jpg filter=lfs diff=lfs merge=lfs -text

|

| 97 |

+

extensions/sd-webui-llul/images/llul_yuv420p.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 98 |

+

extensions/sd-webui-llul/images/mask_effect.jpg filter=lfs diff=lfs merge=lfs -text

|

| 99 |

+

extensions/sd-webui-lora-masks/images/example.02.png filter=lfs diff=lfs merge=lfs -text

|

| 100 |

+

extensions/sd-webui-lora-masks/images/example.03.png filter=lfs diff=lfs merge=lfs -text

|

| 101 |

+

extensions/sd-webui-matview/images/sample1.jpg filter=lfs diff=lfs merge=lfs -text

|

| 102 |

+

extensions/sd-webui-panorama-tools/images/example_2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 103 |

+

extensions/sd-webui-panorama-tools/images/example_3.jpg filter=lfs diff=lfs merge=lfs -text

|

| 104 |

+

extensions/sd-webui-panorama-tools/images/panorama_tools_ui_screenshot.jpg filter=lfs diff=lfs merge=lfs -text

|

| 105 |

+

extensions/sd-webui-picbatchwork/bin/ebsynth.dll filter=lfs diff=lfs merge=lfs -text

|

| 106 |

+

extensions/sd-webui-picbatchwork/bin/ebsynth.exe filter=lfs diff=lfs merge=lfs -text

|

| 107 |

+

extensions/sd-webui-picbatchwork/img/2.gif filter=lfs diff=lfs merge=lfs -text

|

| 108 |

+

extensions/sd-webui-pixelart/examples/custom_palette_demo.mp4 filter=lfs diff=lfs merge=lfs -text

|

| 109 |

+

extensions/sd-webui-samplers-scheduler/images/example2.png filter=lfs diff=lfs merge=lfs -text

|

| 110 |

+

extensions/sd-webui-samplers-scheduler/images/example3.png filter=lfs diff=lfs merge=lfs -text

|

| 111 |

+

extensions/sd-webui-semantic-guidance/samples/comparison.png filter=lfs diff=lfs merge=lfs -text

|

| 112 |

+

extensions/sd-webui-semantic-guidance/samples/enhance.jpg filter=lfs diff=lfs merge=lfs -text

|

| 113 |

+

extensions/sd-webui-smea/sample.jpg filter=lfs diff=lfs merge=lfs -text

|

| 114 |

+

extensions/sd-webui-smea/sample2.jpg filter=lfs diff=lfs merge=lfs -text

|

| 115 |

+

extensions/sd-webui-timemachine/images/tm_result.png filter=lfs diff=lfs merge=lfs -text

|

| 116 |

+

extensions/sd-webui-vectorscope-cc/samples/XYZ.jpg filter=lfs diff=lfs merge=lfs -text

|

| 117 |

+

extensions/sd-webui-xl_vec/images/crop_top.png filter=lfs diff=lfs merge=lfs -text

|

| 118 |

+

extensions/sd-webui-xl_vec/images/mult.png filter=lfs diff=lfs merge=lfs -text

|

| 119 |

+

extensions/sd-webui-xl_vec/images/original_size.png filter=lfs diff=lfs merge=lfs -text

|

| 120 |

+

extensions/sd-webui-xyz-addon/img/Extra-Network-Weight.png filter=lfs diff=lfs merge=lfs -text

|

| 121 |

+

extensions/sd-webui-xyz-addon/img/Multi-Axis-2.png filter=lfs diff=lfs merge=lfs -text

|

| 122 |

+

extensions/sd-webui-xyz-addon/img/Multi-Axis-3.png filter=lfs diff=lfs merge=lfs -text

|

| 123 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR-Combinations.png filter=lfs diff=lfs merge=lfs -text

|

| 124 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR-P.png filter=lfs diff=lfs merge=lfs -text

|

| 125 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations-1-2.png filter=lfs diff=lfs merge=lfs -text

|

| 126 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations-2.png filter=lfs diff=lfs merge=lfs -text

|

| 127 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR-Permutations.png filter=lfs diff=lfs merge=lfs -text

|

| 128 |

+

extensions/sd-webui-xyz-addon/img/Prompt-SR.png filter=lfs diff=lfs merge=lfs -text

|

| 129 |

+

extensions/sd_extension-prompt_formatter/Twemoji.Mozilla.ttf filter=lfs diff=lfs merge=lfs -text

|

| 130 |

+

extensions/sd_webui_masactrl/resources/img/xyz_grid-0010-1508457017.png filter=lfs diff=lfs merge=lfs -text

|

| 131 |

+

extensions/sd_webui_masactrl-ash/resources/img/xyz_grid-0010-1508457017.png filter=lfs diff=lfs merge=lfs -text

|

| 132 |

+

extensions/sd_webui_realtime_lcm_canvas/preview.png filter=lfs diff=lfs merge=lfs -text

|

| 133 |

+

extensions/sd_webui_realtime_lcm_canvas/scripts/models/models--Lykon--dreamshaper-7/blobs/6d0f6f2e3f7f0133e7684e8dffd7d0dfb1f2b09ddaf327763214449cc7a36574 filter=lfs diff=lfs merge=lfs -text

|

| 134 |

+

extensions/sd_webui_realtime_lcm_canvas/scripts/models/models--Lykon--dreamshaper-7/blobs/79d051410ce59a337cb358d79a3e81b9e0c691c34fcc7625ecfebf6a5db233a4 filter=lfs diff=lfs merge=lfs -text

|

| 135 |

+

extensions/sd_webui_realtime_lcm_canvas/scripts/models/models--Lykon--dreamshaper-7/blobs/a6f6744cfbcfe4fa9d236a231fd67e248389df7187dc15d52f16d9e9872105ff filter=lfs diff=lfs merge=lfs -text

|

| 136 |

+

extensions/sd_webui_sghm/preview.png filter=lfs diff=lfs merge=lfs -text

|

| 137 |

+

extensions/sd_webui_sghm/sghm/models/SGHM filter=lfs diff=lfs merge=lfs -text

|

| 138 |

+

extensions/ssasd/images/sample.png filter=lfs diff=lfs merge=lfs -text

|

| 139 |

+

extensions/ssasd/images/sample2.png filter=lfs diff=lfs merge=lfs -text

|

| 140 |

+

extensions/ssasd/images/sample3.png filter=lfs diff=lfs merge=lfs -text

|

| 141 |

+

extensions/stable-diffusion-webui-composable-lora/readme/changelog_2023-04-08.png filter=lfs diff=lfs merge=lfs -text

|

| 142 |

+

extensions/stable-diffusion-webui-composable-lora/readme/fig11.png filter=lfs diff=lfs merge=lfs -text

|

| 143 |

+

extensions/stable-diffusion-webui-composable-lora/readme/fig12.png filter=lfs diff=lfs merge=lfs -text

|

| 144 |

+

extensions/stable-diffusion-webui-composable-lora/readme/fig13.png filter=lfs diff=lfs merge=lfs -text

|

| 145 |

+

extensions/stable-diffusion-webui-composable-lora/readme/fig8.png filter=lfs diff=lfs merge=lfs -text

|

| 146 |

+

extensions/stable-diffusion-webui-composable-lora/readme/fig9.png filter=lfs diff=lfs merge=lfs -text

|

| 147 |

+

extensions/stable-diffusion-webui-dumpunet/images/IN00.jpg filter=lfs diff=lfs merge=lfs -text

|

| 148 |

+

extensions/stable-diffusion-webui-dumpunet/images/IN05.jpg filter=lfs diff=lfs merge=lfs -text

|

| 149 |

+

extensions/stable-diffusion-webui-dumpunet/images/OUT06.jpg filter=lfs diff=lfs merge=lfs -text

|

| 150 |

+

extensions/stable-diffusion-webui-dumpunet/images/OUT11.jpg filter=lfs diff=lfs merge=lfs -text

|

| 151 |

+

extensions/stable-diffusion-webui-dumpunet/images/README_00_01_color.png filter=lfs diff=lfs merge=lfs -text

|

| 152 |

+

extensions/stable-diffusion-webui-dumpunet/images/README_00_01_gray.png filter=lfs diff=lfs merge=lfs -text

|

| 153 |

+

extensions/stable-diffusion-webui-dumpunet/images/README_02.png filter=lfs diff=lfs merge=lfs -text

|

| 154 |

+

extensions/stable-diffusion-webui-dumpunet/images/attn-IN01.png filter=lfs diff=lfs merge=lfs -text

|

| 155 |

+

extensions/stable-diffusion-webui-dumpunet/images/attn-OUT10.png filter=lfs diff=lfs merge=lfs -text

|

| 156 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/highres.png filter=lfs diff=lfs merge=lfs -text

|

| 157 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_10x12.png filter=lfs diff=lfs merge=lfs -text

|

| 158 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_1x120.png filter=lfs diff=lfs merge=lfs -text

|

| 159 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_2x60.png filter=lfs diff=lfs merge=lfs -text

|

| 160 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_3x40.png filter=lfs diff=lfs merge=lfs -text

|

| 161 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x15.png filter=lfs diff=lfs merge=lfs -text

|

| 162 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x23.png filter=lfs diff=lfs merge=lfs -text

|

| 163 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x3.png filter=lfs diff=lfs merge=lfs -text

|

| 164 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x30.png filter=lfs diff=lfs merge=lfs -text

|

| 165 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x5.png filter=lfs diff=lfs merge=lfs -text

|

| 166 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_4x8.png filter=lfs diff=lfs merge=lfs -text

|

| 167 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_6x20.png filter=lfs diff=lfs merge=lfs -text

|

| 168 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/pg_8x15.png filter=lfs diff=lfs merge=lfs -text

|

| 169 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_10.png filter=lfs diff=lfs merge=lfs -text

|

| 170 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_120.png filter=lfs diff=lfs merge=lfs -text

|

| 171 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_20.png filter=lfs diff=lfs merge=lfs -text

|

| 172 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_30.png filter=lfs diff=lfs merge=lfs -text

|

| 173 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_60.png filter=lfs diff=lfs merge=lfs -text

|

| 174 |

+

extensions/stable-diffusion-webui-hires-fix-progressive/img/std_90.png filter=lfs diff=lfs merge=lfs -text

|

| 175 |

+

extensions/stable-diffusion-webui-intm/images/IMAGE.png filter=lfs diff=lfs merge=lfs -text

|

| 176 |

+

extensions/stable-diffusion-webui-rembg/preview.png filter=lfs diff=lfs merge=lfs -text

|

| 177 |

+

extensions/stable-diffusion-webui-sonar/img/momentum.png filter=lfs diff=lfs merge=lfs -text

|

| 178 |

+

extensions/stable-diffusion-webui-tripclipskip/images/xy_plot.jpg filter=lfs diff=lfs merge=lfs -text

|

| 179 |

+

extensions/stable-diffusion-webui-two-shot/gradio-3.16.2-py3-none-any.whl filter=lfs diff=lfs merge=lfs -text

|

extensions/1-sd-dynamic-thresholding/.github/FUNDING.yml

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

github: mcmonkey4eva

|

extensions/1-sd-dynamic-thresholding/.github/workflows/publish.yml

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: Publish to Comfy registry

|

| 2 |

+

on:

|

| 3 |

+

workflow_dispatch:

|

| 4 |

+

push:

|

| 5 |

+

branches:

|

| 6 |

+

- master

|

| 7 |

+

paths:

|

| 8 |

+

- "pyproject.toml"

|

| 9 |

+

|

| 10 |

+

jobs:

|

| 11 |

+

publish-node:

|

| 12 |

+

name: Publish Custom Node to registry

|

| 13 |

+

runs-on: ubuntu-latest

|

| 14 |

+

steps:

|

| 15 |

+

- name: Check out code

|

| 16 |

+

uses: actions/checkout@v4

|

| 17 |

+

- name: Publish Custom Node

|

| 18 |

+

uses: Comfy-Org/publish-node-action@main

|

| 19 |

+

with:

|

| 20 |

+

## Add your own personal access token to your Github Repository secrets and reference it here.

|

| 21 |

+

personal_access_token: ${{ secrets.REGISTRY_ACCESS_TOKEN }}

|

extensions/1-sd-dynamic-thresholding/.gitignore

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

__pycache__/

|

extensions/1-sd-dynamic-thresholding/LICENSE.txt

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

The MIT License (MIT)

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2023 Alex "mcmonkey" Goodwin

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

extensions/1-sd-dynamic-thresholding/README.md

ADDED

|

@@ -0,0 +1,120 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Stable Diffusion Dynamic Thresholding (CFG Scale Fix)

|

| 2 |

+

|

| 3 |

+

### Concept

|

| 4 |

+

|

| 5 |

+

Extension for [SwarmUI](https://github.com/mcmonkeyprojects/SwarmUI), [ComfyUI](https://github.com/comfyanonymous/ComfyUI), and [AUTOMATIC1111 Stable Diffusion WebUI](https://github.com/AUTOMATIC1111/stable-diffusion-webui) that enables a way to use higher CFG Scales without color issues.

|

| 6 |

+

|

| 7 |

+

This works by clamping latents between steps. You can read more [here](https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/3962) or [here](https://github.com/AUTOMATIC1111/stable-diffusion-webui/issues/3268) or [this tweet](https://twitter.com/Birchlabs/status/1582165379832348672).

|

| 8 |

+

|

| 9 |

+

--------------

|

| 10 |

+

|

| 11 |

+

### Credit

|

| 12 |

+

|

| 13 |

+

The core functionality of this PR was originally developed by [Birch-san](https://github.com/Birch-san) and ported to the WebUI by [dtan3847](https://github.com/dtan3847), then converted to an Auto WebUI extension and given a UI by [mcmonkey4eva](https://github.com/mcmonkey4eva), further development and research done by [mcmonkey4eva](https://github.com/mcmonkey4eva) and JDMLeverton. Ported by ComfyUI by [TwoDukes](https://github.com/TwoDukes) and [mcmonkey4eva](https://github.com/mcmonkey4eva). Ported to SwarmUI by [mcmonkey4eva](https://github.com/mcmonkey4eva).

|

| 14 |

+

|

| 15 |

+

--------------

|

| 16 |

+

|

| 17 |

+

### Examples

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

--------------

|

| 25 |

+

|

| 26 |

+

### Demo Grid

|

| 27 |

+

|

| 28 |

+

View at <https://sd.mcmonkey.org/dynthresh/>.

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

(Was generated via [this YAML config](https://gist.github.com/mcmonkey4eva/fccd29172f44424dfc0217a482c824f6) for the [Infinite Grid Generator](https://github.com/mcmonkeyprojects/sd-infinity-grid-generator-script))

|

| 33 |

+

|

| 34 |

+

--------------

|

| 35 |

+

|

| 36 |

+

### Installation and Usage

|

| 37 |

+

|

| 38 |

+

#### SwarmUI

|

| 39 |

+

|

| 40 |

+

- Supported out-of-the-box on default installations.

|

| 41 |

+

- If using a custom installation, just make sure the backend you use has this repo installed per the instructions specific to the backend as written below.

|

| 42 |

+

- It's under the "Display Advanced Options" parameter checkbox.

|

| 43 |

+

|

| 44 |

+

#### Auto WebUI

|

| 45 |

+

|

| 46 |

+

- You must have the [AUTOMATIC1111 Stable Diffusion WebUI](https://github.com/AUTOMATIC1111/stable-diffusion-webui) already installed and working. Refer to that project's readme for help with that.

|

| 47 |

+

- Open the WebUI, go to the `Extensions` tab

|

| 48 |

+

- -EITHER- Option **A**:

|

| 49 |

+

- go to the `Available` tab with

|

| 50 |

+

- click `Load from` (with the default list)

|

| 51 |

+

- Scroll down to find `Dynamic Thresholding (CFG Scale Fix)`, or use `CTRL+F` to find it

|

| 52 |

+

- -OR- Option **B**:

|

| 53 |

+

- Click on `Install from URL`

|

| 54 |

+

- Copy/paste this project's URL into the `URL for extension's git repository` textbox: `https://github.com/mcmonkeyprojects/sd-dynamic-thresholding`

|

| 55 |

+

- Click `Install`

|

| 56 |

+

- Restart or reload the WebUI

|

| 57 |

+

- Go to txt2img or img2img

|

| 58 |

+

- Check the `Enable Dynamic Thresholding (CFG Scale Fix)` box

|

| 59 |

+

- Read the info on-page and set the sliders where you want em.

|

| 60 |

+

- Click generate.

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

#### ComfyUI

|

| 64 |

+

|

| 65 |

+

- Must have [ComfyUI](https://github.com/comfyanonymous/ComfyUI) already installed and working. Refer to that project's readme for help with that.

|

| 66 |

+

- -EITHER- Option **A**: (TODO: Manager install)

|

| 67 |

+

- -OR- Option **B**:

|

| 68 |

+

- `cd ComfyUI/custom_nodes`

|

| 69 |

+

- `git clone https://github.com/mcmonkeyprojects/sd-dynamic-thresholding`

|

| 70 |

+

- restart ComfyUI

|

| 71 |

+

- Add node `advanced/mcmonkey/DynamicThresholdingSimple` (or `Full`)

|

| 72 |

+

- Link your model to the input, and then link the output model to your KSampler's input

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

--------------

|

| 77 |

+

|

| 78 |

+

### Supported Auto WebUI Extensions

|

| 79 |

+

|

| 80 |

+

- This can be configured within the [Infinity Grid Generator](https://github.com/mcmonkeyprojects/sd-infinity-grid-generator-script#supported-extensions) extension, see the readme of that project for details.

|

| 81 |

+

|

| 82 |

+

### ComfyUI Compatibility

|

| 83 |

+

|

| 84 |

+

- This would work with any variant of the `KSampler` node, including custom ones, so long as they do not totally override the internal sampling function (most don't).

|

| 85 |

+

|

| 86 |

+

----------------------

|

| 87 |

+

|

| 88 |

+

### Licensing pre-note:

|

| 89 |

+

|

| 90 |

+

This is an open source project, provided entirely freely, for everyone to use and contribute to.

|

| 91 |

+

|

| 92 |

+

If you make any changes that could benefit the community as a whole, please contribute upstream.

|

| 93 |

+

|

| 94 |

+

### The short of the license is:

|

| 95 |

+

|

| 96 |

+

You can do basically whatever you want, except you may not hold any developer liable for what you do with the software.

|

| 97 |

+

|

| 98 |

+

### The long version of the license follows:

|

| 99 |

+

|

| 100 |

+

The MIT License (MIT)

|

| 101 |

+

|

| 102 |

+

Copyright (c) 2023 Alex "mcmonkey" Goodwin

|

| 103 |

+

|

| 104 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 105 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 106 |

+

in the Software without restriction, including without limitation the rights

|

| 107 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 108 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 109 |

+

furnished to do so, subject to the following conditions:

|

| 110 |

+

|

| 111 |

+

The above copyright notice and this permission notice shall be included in all

|

| 112 |

+

copies or substantial portions of the Software.

|

| 113 |

+

|

| 114 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 115 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 116 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 117 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 118 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 119 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 120 |

+

SOFTWARE.

|

extensions/1-sd-dynamic-thresholding/__init__.py

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from . import dynthres_comfyui

|

| 2 |

+

|

| 3 |

+

NODE_CLASS_MAPPINGS = {

|

| 4 |

+

"DynamicThresholdingSimple": dynthres_comfyui.DynamicThresholdingSimpleComfyNode,

|

| 5 |

+

"DynamicThresholdingFull": dynthres_comfyui.DynamicThresholdingComfyNode,

|

| 6 |

+

}

|

extensions/1-sd-dynamic-thresholding/__pycache__/dynthres_core.cpython-310.pyc

ADDED

|

Binary file (4.49 kB). View file

|

|

|

extensions/1-sd-dynamic-thresholding/__pycache__/dynthres_unipc.cpython-310.pyc

ADDED

|

Binary file (4.92 kB). View file

|

|

|

extensions/1-sd-dynamic-thresholding/dynthres_comfyui.py

ADDED

|

@@ -0,0 +1,86 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .dynthres_core import DynThresh

|

| 2 |

+

|

| 3 |

+

class DynamicThresholdingComfyNode:

|

| 4 |

+

|

| 5 |

+

@classmethod

|

| 6 |

+

def INPUT_TYPES(s):

|

| 7 |

+

return {

|

| 8 |

+

"required": {

|

| 9 |

+

"model": ("MODEL",),

|

| 10 |

+

"mimic_scale": ("FLOAT", {"default": 7.0, "min": 0.0, "max": 100.0, "step": 0.5}),

|

| 11 |

+

"threshold_percentile": ("FLOAT", {"default": 1.0, "min": 0.0, "max": 1.0, "step": 0.01}),

|

| 12 |

+

"mimic_mode": (DynThresh.Modes, ),

|

| 13 |

+

"mimic_scale_min": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 100.0, "step": 0.5}),

|

| 14 |

+

"cfg_mode": (DynThresh.Modes, ),

|

| 15 |

+

"cfg_scale_min": ("FLOAT", {"default": 0.0, "min": 0.0, "max": 100.0, "step": 0.5}),

|

| 16 |

+

"sched_val": ("FLOAT", {"default": 1.0, "min": 0.0, "max": 100.0, "step": 0.01}),

|

| 17 |

+

"separate_feature_channels": (["enable", "disable"], ),

|

| 18 |

+

"scaling_startpoint": (DynThresh.Startpoints, ),

|

| 19 |

+

"variability_measure": (DynThresh.Variabilities, ),

|

| 20 |

+

"interpolate_phi": ("FLOAT", {"default": 1.0, "min": 0.0, "max": 1.0, "step": 0.01}),

|

| 21 |

+

}

|

| 22 |

+

}

|

| 23 |

+

|

| 24 |

+

RETURN_TYPES = ("MODEL",)

|

| 25 |

+

FUNCTION = "patch"

|

| 26 |

+

CATEGORY = "advanced/mcmonkey"

|

| 27 |

+

|

| 28 |

+

def patch(self, model, mimic_scale, threshold_percentile, mimic_mode, mimic_scale_min, cfg_mode, cfg_scale_min, sched_val, separate_feature_channels, scaling_startpoint, variability_measure, interpolate_phi):

|

| 29 |

+

|

| 30 |

+

dynamic_thresh = DynThresh(mimic_scale, threshold_percentile, mimic_mode, mimic_scale_min, cfg_mode, cfg_scale_min, sched_val, 0, 999, separate_feature_channels == "enable", scaling_startpoint, variability_measure, interpolate_phi)

|

| 31 |

+

|

| 32 |

+

def sampler_dyn_thresh(args):

|

| 33 |

+

input = args["input"]

|

| 34 |

+

cond = input - args["cond"]

|

| 35 |

+

uncond = input - args["uncond"]

|

| 36 |

+

cond_scale = args["cond_scale"]

|

| 37 |

+

time_step = model.model.model_sampling.timestep(args["sigma"])

|

| 38 |

+

time_step = time_step[0].item()

|

| 39 |

+

dynamic_thresh.step = 999 - time_step

|

| 40 |

+

|

| 41 |

+

if cond_scale == mimic_scale:

|

| 42 |

+

return input - (uncond + (cond - uncond) * cond_scale)

|

| 43 |

+

else:

|

| 44 |

+

return input - dynamic_thresh.dynthresh(cond, uncond, cond_scale, None)

|

| 45 |

+

|

| 46 |

+

m = model.clone()

|

| 47 |

+

m.set_model_sampler_cfg_function(sampler_dyn_thresh)

|

| 48 |

+

return (m, )

|

| 49 |

+

|

| 50 |

+

class DynamicThresholdingSimpleComfyNode:

|

| 51 |

+

|

| 52 |

+

@classmethod

|

| 53 |

+

def INPUT_TYPES(s):

|

| 54 |

+

return {

|

| 55 |

+

"required": {

|

| 56 |

+

"model": ("MODEL",),

|

| 57 |

+

"mimic_scale": ("FLOAT", {"default": 7.0, "min": 0.0, "max": 100.0, "step": 0.5}),

|

| 58 |

+

"threshold_percentile": ("FLOAT", {"default": 1.0, "min": 0.0, "max": 1.0, "step": 0.01}),

|

| 59 |

+

}

|

| 60 |

+

}

|

| 61 |

+

|

| 62 |

+

RETURN_TYPES = ("MODEL",)

|

| 63 |

+

FUNCTION = "patch"

|

| 64 |

+

CATEGORY = "advanced/mcmonkey"

|

| 65 |

+

|

| 66 |

+

def patch(self, model, mimic_scale, threshold_percentile):

|

| 67 |

+

|

| 68 |

+

dynamic_thresh = DynThresh(mimic_scale, threshold_percentile, "CONSTANT", 0, "CONSTANT", 0, 0, 0, 999, False, "MEAN", "AD", 1)

|

| 69 |

+

|

| 70 |

+

def sampler_dyn_thresh(args):

|

| 71 |

+

input = args["input"]

|

| 72 |

+

cond = input - args["cond"]

|

| 73 |

+

uncond = input - args["uncond"]

|

| 74 |

+

cond_scale = args["cond_scale"]

|

| 75 |

+

time_step = model.model.model_sampling.timestep(args["sigma"])

|

| 76 |

+

time_step = time_step[0].item()

|

| 77 |

+

dynamic_thresh.step = 999 - time_step

|

| 78 |

+

|

| 79 |

+

if cond_scale == mimic_scale:

|

| 80 |

+

return input - (uncond + (cond - uncond) * cond_scale)

|

| 81 |

+

else:

|

| 82 |

+

return input - dynamic_thresh.dynthresh(cond, uncond, cond_scale, None)

|

| 83 |

+

|

| 84 |

+

m = model.clone()

|

| 85 |

+

m.set_model_sampler_cfg_function(sampler_dyn_thresh)

|

| 86 |

+

return (m, )

|

extensions/1-sd-dynamic-thresholding/dynthres_core.py

ADDED

|

@@ -0,0 +1,167 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch, math

|

| 2 |

+

|

| 3 |

+

######################### DynThresh Core #########################

|

| 4 |

+

|

| 5 |

+

class DynThresh:

|

| 6 |

+

|

| 7 |

+

Modes = ["Constant", "Linear Down", "Cosine Down", "Half Cosine Down", "Linear Up", "Cosine Up", "Half Cosine Up", "Power Up", "Power Down", "Linear Repeating", "Cosine Repeating", "Sawtooth"]

|

| 8 |

+

Startpoints = ["MEAN", "ZERO"]

|

| 9 |

+

Variabilities = ["AD", "STD"]

|

| 10 |

+

|

| 11 |

+

def __init__(self, mimic_scale, threshold_percentile, mimic_mode, mimic_scale_min, cfg_mode, cfg_scale_min, sched_val, experiment_mode, max_steps, separate_feature_channels, scaling_startpoint, variability_measure, interpolate_phi):

|

| 12 |

+

self.mimic_scale = mimic_scale

|

| 13 |

+

self.threshold_percentile = threshold_percentile

|

| 14 |

+

self.mimic_mode = mimic_mode

|

| 15 |

+

self.cfg_mode = cfg_mode

|

| 16 |

+

self.max_steps = max_steps

|

| 17 |

+

self.cfg_scale_min = cfg_scale_min

|

| 18 |

+

self.mimic_scale_min = mimic_scale_min

|

| 19 |

+

self.experiment_mode = experiment_mode

|

| 20 |

+

self.sched_val = sched_val

|

| 21 |

+

self.sep_feat_channels = separate_feature_channels

|

| 22 |

+

self.scaling_startpoint = scaling_startpoint

|

| 23 |

+

self.variability_measure = variability_measure

|

| 24 |

+

self.interpolate_phi = interpolate_phi

|

| 25 |

+

|

| 26 |

+

def interpret_scale(self, scale, mode, min):

|

| 27 |

+

scale -= min

|

| 28 |

+

max = self.max_steps - 1

|

| 29 |

+

frac = self.step / max

|

| 30 |

+

if mode == "Constant":

|

| 31 |

+

pass

|

| 32 |

+

elif mode == "Linear Down":

|

| 33 |

+

scale *= 1.0 - frac

|

| 34 |

+

elif mode == "Half Cosine Down":

|

| 35 |

+

scale *= math.cos(frac)

|

| 36 |

+

elif mode == "Cosine Down":

|

| 37 |

+

scale *= math.cos(frac * 1.5707)

|

| 38 |

+

elif mode == "Linear Up":

|

| 39 |

+

scale *= frac

|

| 40 |

+

elif mode == "Half Cosine Up":

|

| 41 |

+

scale *= 1.0 - math.cos(frac)

|

| 42 |

+

elif mode == "Cosine Up":

|

| 43 |

+

scale *= 1.0 - math.cos(frac * 1.5707)

|

| 44 |

+

elif mode == "Power Up":

|

| 45 |

+

scale *= math.pow(frac, self.sched_val)

|

| 46 |

+

elif mode == "Power Down":

|

| 47 |

+

scale *= 1.0 - math.pow(frac, self.sched_val)

|

| 48 |

+

elif mode == "Linear Repeating":

|

| 49 |

+

portion = (frac * self.sched_val) % 1.0

|

| 50 |

+

scale *= (0.5 - portion) * 2 if portion < 0.5 else (portion - 0.5) * 2

|

| 51 |

+

elif mode == "Cosine Repeating":

|

| 52 |

+

scale *= math.cos(frac * 6.28318 * self.sched_val) * 0.5 + 0.5

|

| 53 |

+

elif mode == "Sawtooth":

|

| 54 |

+

scale *= (frac * self.sched_val) % 1.0

|

| 55 |

+

scale += min

|

| 56 |

+

return scale

|

| 57 |

+

|

| 58 |

+

def dynthresh(self, cond, uncond, cfg_scale, weights):

|

| 59 |

+

mimic_scale = self.interpret_scale(self.mimic_scale, self.mimic_mode, self.mimic_scale_min)

|

| 60 |

+

cfg_scale = self.interpret_scale(cfg_scale, self.cfg_mode, self.cfg_scale_min)

|

| 61 |

+

# uncond shape is (batch, 4, height, width)

|

| 62 |

+

conds_per_batch = cond.shape[0] / uncond.shape[0]

|

| 63 |

+

assert conds_per_batch == int(conds_per_batch), "Expected # of conds per batch to be constant across batches"

|

| 64 |

+

cond_stacked = cond.reshape((-1, int(conds_per_batch)) + uncond.shape[1:])

|

| 65 |

+

|

| 66 |

+

### Normal first part of the CFG Scale logic, basically

|

| 67 |

+

diff = cond_stacked - uncond.unsqueeze(1)

|

| 68 |

+

if weights is not None:

|

| 69 |

+

diff = diff * weights

|

| 70 |

+

relative = diff.sum(1)

|

| 71 |

+

|

| 72 |

+

### Get the normal result for both mimic and normal scale

|

| 73 |

+

mim_target = uncond + relative * mimic_scale

|

| 74 |

+

cfg_target = uncond + relative * cfg_scale

|

| 75 |

+

### If we weren't doing mimic scale, we'd just return cfg_target here

|

| 76 |

+

|

| 77 |

+

### Now recenter the values relative to their average rather than absolute, to allow scaling from average

|

| 78 |

+

mim_flattened = mim_target.flatten(2)

|

| 79 |

+

cfg_flattened = cfg_target.flatten(2)

|

| 80 |

+

mim_means = mim_flattened.mean(dim=2).unsqueeze(2)

|

| 81 |

+

cfg_means = cfg_flattened.mean(dim=2).unsqueeze(2)

|

| 82 |

+

mim_centered = mim_flattened - mim_means

|

| 83 |

+

cfg_centered = cfg_flattened - cfg_means

|

| 84 |

+

|

| 85 |

+

if self.sep_feat_channels:

|

| 86 |

+

if self.variability_measure == 'STD':

|

| 87 |

+

mim_scaleref = mim_centered.std(dim=2).unsqueeze(2)

|

| 88 |

+

cfg_scaleref = cfg_centered.std(dim=2).unsqueeze(2)

|

| 89 |

+

else: # 'AD'

|

| 90 |

+

mim_scaleref = mim_centered.abs().max(dim=2).values.unsqueeze(2)

|

| 91 |

+

cfg_scaleref = torch.quantile(cfg_centered.abs(), self.threshold_percentile, dim=2).unsqueeze(2)

|

| 92 |

+

|

| 93 |

+

else:

|

| 94 |

+

if self.variability_measure == 'STD':

|

| 95 |

+

mim_scaleref = mim_centered.std()

|

| 96 |

+

cfg_scaleref = cfg_centered.std()

|

| 97 |

+

else: # 'AD'

|

| 98 |

+

mim_scaleref = mim_centered.abs().max()

|

| 99 |

+

cfg_scaleref = torch.quantile(cfg_centered.abs(), self.threshold_percentile)

|

| 100 |

+

|

| 101 |

+

if self.scaling_startpoint == 'ZERO':

|

| 102 |

+

scaling_factor = mim_scaleref / cfg_scaleref

|

| 103 |

+

result = cfg_flattened * scaling_factor

|

| 104 |

+

|

| 105 |

+

else: # 'MEAN'

|

| 106 |

+

if self.variability_measure == 'STD':

|

| 107 |

+

cfg_renormalized = (cfg_centered / cfg_scaleref) * mim_scaleref

|

| 108 |

+

else: # 'AD'

|

| 109 |

+

### Get the maximum value of all datapoints (with an optional threshold percentile on the uncond)

|

| 110 |

+

max_scaleref = torch.maximum(mim_scaleref, cfg_scaleref)

|

| 111 |

+

### Clamp to the max

|

| 112 |

+

cfg_clamped = cfg_centered.clamp(-max_scaleref, max_scaleref)

|

| 113 |

+

### Now shrink from the max to normalize and grow to the mimic scale (instead of the CFG scale)

|

| 114 |

+

cfg_renormalized = (cfg_clamped / max_scaleref) * mim_scaleref

|

| 115 |

+

|

| 116 |

+

### Now add it back onto the averages to get into real scale again and return

|

| 117 |

+

result = cfg_renormalized + cfg_means

|

| 118 |

+

|

| 119 |

+

actual_res = result.unflatten(2, mim_target.shape[2:])

|

| 120 |

+

|

| 121 |

+

if self.interpolate_phi != 1.0:

|

| 122 |

+

actual_res = actual_res * self.interpolate_phi + cfg_target * (1.0 - self.interpolate_phi)

|

| 123 |

+

|

| 124 |

+

if self.experiment_mode == 1:

|

| 125 |

+

num = actual_res.cpu().numpy()

|

| 126 |

+

for y in range(0, 64):

|

| 127 |

+

for x in range (0, 64):

|

| 128 |

+

if num[0][0][y][x] > 1.0:

|

| 129 |

+

num[0][1][y][x] *= 0.5

|

| 130 |

+

if num[0][1][y][x] > 1.0:

|

| 131 |

+

num[0][1][y][x] *= 0.5

|

| 132 |

+

if num[0][2][y][x] > 1.5:

|

| 133 |

+

num[0][2][y][x] *= 0.5

|

| 134 |

+

actual_res = torch.from_numpy(num).to(device=uncond.device)

|

| 135 |

+

elif self.experiment_mode == 2:

|

| 136 |

+

num = actual_res.cpu().numpy()

|

| 137 |

+

for y in range(0, 64):

|

| 138 |

+

for x in range (0, 64):

|

| 139 |

+

over_scale = False

|

| 140 |

+

for z in range(0, 4):

|

| 141 |

+

if abs(num[0][z][y][x]) > 1.5:

|

| 142 |

+

over_scale = True

|

| 143 |

+

if over_scale:

|

| 144 |

+

for z in range(0, 4):

|

| 145 |

+

num[0][z][y][x] *= 0.7

|

| 146 |

+

actual_res = torch.from_numpy(num).to(device=uncond.device)

|

| 147 |

+

elif self.experiment_mode == 3:

|

| 148 |

+

coefs = torch.tensor([

|

| 149 |

+

# R G B W

|

| 150 |

+

[0.298, 0.207, 0.208, 0.0], # L1

|

| 151 |

+

[0.187, 0.286, 0.173, 0.0], # L2

|

| 152 |

+

[-0.158, 0.189, 0.264, 0.0], # L3

|

| 153 |

+

[-0.184, -0.271, -0.473, 1.0], # L4

|

| 154 |

+

], device=uncond.device)

|

| 155 |

+

res_rgb = torch.einsum("laxy,ab -> lbxy", actual_res, coefs)

|

| 156 |

+

max_r, max_g, max_b, max_w = res_rgb[0][0].max(), res_rgb[0][1].max(), res_rgb[0][2].max(), res_rgb[0][3].max()

|

| 157 |

+

max_rgb = max(max_r, max_g, max_b)

|

| 158 |

+

print(f"test max = r={max_r}, g={max_g}, b={max_b}, w={max_w}, rgb={max_rgb}")

|

| 159 |

+

if self.step / (self.max_steps - 1) > 0.2:

|

| 160 |

+

if max_rgb < 2.0 and max_w < 3.0:

|

| 161 |

+

res_rgb /= max_rgb / 2.4

|

| 162 |

+

else:

|

| 163 |

+

if max_rgb > 2.4 and max_w > 3.0:

|

| 164 |

+

res_rgb /= max_rgb / 2.4

|

| 165 |

+

actual_res = torch.einsum("laxy,ab -> lbxy", res_rgb, coefs.inverse())

|

| 166 |

+

|

| 167 |

+

return actual_res

|

extensions/1-sd-dynamic-thresholding/dynthres_unipc.py

ADDED

|

@@ -0,0 +1,111 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import torch

|

| 3 |

+

import math

|

| 4 |

+

import traceback

|

| 5 |

+

from modules import shared

|

| 6 |

+

try:

|

| 7 |

+

from modules.models.diffusion import uni_pc

|

| 8 |

+

except Exception as e:

|

| 9 |

+

from modules import unipc as uni_pc

|

| 10 |

+

|

| 11 |

+

######################### UniPC Implementation logic #########################

|

| 12 |

+

|

| 13 |

+

# The majority of this is straight from modules.models/diffusion/uni_pc/sampler.py

|

| 14 |

+

# Unfortunately that's not an easy middle-injection point, so, just copypasta'd it all

|

| 15 |

+

# It's like they designed it to intentionally be as difficult to inject into as possible :(

|

| 16 |

+

# (It has hooks but not in useful locations)

|

| 17 |

+

# I stripped the original comments for brevity.

|

| 18 |

+

# Some never-used code (scheduler modes, noise modes, guidance modes) have been removed as well for brevity.

|

| 19 |

+

# The actual impl comes down to just the last line in particular, and the `before_sample` insert to track step count.

|

| 20 |

+

|

| 21 |

+

class CustomUniPCSampler(uni_pc.sampler.UniPCSampler):

|

| 22 |

+

def __init__(self, model, **kwargs):

|

| 23 |

+

super().__init__(model, *kwargs)

|

| 24 |

+

@torch.no_grad()

|

| 25 |

+

def sample(self, S, batch_size, shape, conditioning=None, callback=None, normals_sequence=None, img_callback=None,

|

| 26 |

+

quantize_x0=False, eta=0., mask=None, x0=None, temperature=1., noise_dropout=0., score_corrector=None,

|

| 27 |

+

corrector_kwargs=None, verbose=True, x_T=None, log_every_t=100, unconditional_guidance_scale=1.,

|

| 28 |

+

unconditional_conditioning=None, **kwargs):

|

| 29 |

+

if conditioning is not None:

|

| 30 |

+

if isinstance(conditioning, dict):

|

| 31 |

+

ctmp = conditioning[list(conditioning.keys())[0]]

|

| 32 |

+

while isinstance(ctmp, list): ctmp = ctmp[0]

|

| 33 |

+

cbs = ctmp.shape[0]

|

| 34 |

+

if cbs != batch_size:

|

| 35 |

+

print(f"Warning: Got {cbs} conditionings but batch-size is {batch_size}")

|

| 36 |

+

|

| 37 |

+

elif isinstance(conditioning, list):

|

| 38 |

+

for ctmp in conditioning:

|

| 39 |

+

if ctmp.shape[0] != batch_size:

|

| 40 |

+

print(f"Warning: Got {cbs} conditionings but batch-size is {batch_size}")

|

| 41 |

+

else:

|

| 42 |

+

if conditioning.shape[0] != batch_size:

|

| 43 |

+

print(f"Warning: Got {conditioning.shape[0]} conditionings but batch-size is {batch_size}")

|

| 44 |

+

C, H, W = shape

|

| 45 |

+

size = (batch_size, C, H, W)

|

| 46 |

+

device = self.model.betas.device

|

| 47 |

+

if x_T is None:

|

| 48 |

+

img = torch.randn(size, device=device)

|

| 49 |

+

else:

|

| 50 |

+

img = x_T

|

| 51 |

+

ns = uni_pc.uni_pc.NoiseScheduleVP('discrete', alphas_cumprod=self.alphas_cumprod)

|

| 52 |

+