Commit 6b2f29a

Parent(s): a426b60

up

README.md

CHANGED

@@ -1,15 +1,22 @@
 ---
 tags:
--
 ---

-# Denoising Diffusion

-**Paper**: [Denoising Diffusion

 **Abstract**:

-*

 ## Usage

@@ -19,13 +26,13 @@ from diffusers import DiffusionPipeline
 import PIL.Image
 import numpy as np

-model_id = "fusing/

 # load model and scheduler
 ddpm = DiffusionPipeline.from_pretrained(model_id)

 # run pipeline in inference (sample random noise and denoise)
-image = ddpm()

 # process image to PIL
 image_processed = image.cpu().permute(0, 2, 3, 1)

@@ -39,7 +46,7 @@ image_pil.save("test.png")

 ## Samples

-1.

|

| 7 |

|

| 8 |

+

**Paper**: [Denoising Diffusion Implicit Models](https://arxiv.org/abs/2010.02502)

|

| 9 |

|

| 10 |

**Abstract**:

|

| 11 |

|

| 12 |

+

*Denoising diffusion probabilistic models (DDPMs) have achieved high quality image generation without adversarial training, yet they require simulating a Markov chain for many steps to produce a sample. To accelerate sampling, we present denoising diffusion implicit models (DDIMs), a more efficient class of iterative implicit probabilistic models with the same training procedure as DDPMs. In DDPMs, the generative process is defined as the reverse of a Markovian diffusion process. We construct a class of non-Markovian diffusion processes that lead to the same training objective, but whose reverse process can be much faster to sample from. We empirically demonstrate that DDIMs can produce high quality samples 10× to 50× faster in terms of wall-clock time compared to DDPMs, allow us to trade off computation for sample quality, and can perform semantically meaningful image interpolation directly in the latent space.*

|

| 13 |

+

|

| 14 |

+

**Explanation on `eta` and `num_inference_steps`**

|

| 15 |

+

|

| 16 |

+

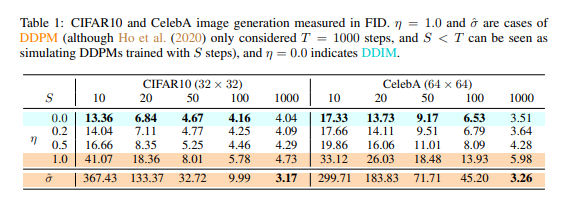

- `num_inference_steps` is called *S* in the following table

|

| 17 |

+

- `eta` is called *η* in the following table

|

| 18 |

+

|

| 19 |

+

|

| 20 |

|

| 21 |

## Usage

|

| 22 |

|

|

|

|
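As a rough illustration of what the two parameters named in the added explanation control, the sketch below picks *S* evenly spaced timesteps and computes the per-step noise scale σ from η, following the σ formula in the DDIM paper. The linear beta schedule, the 1000 training steps, and the helper names `ddim_timesteps` and `ddim_sigmas` are assumptions made for this example; this is not the `diffusers` implementation.

```python
import math


def ddim_timesteps(num_train_steps, num_inference_steps):
    """Return S evenly spaced training timesteps, highest first (hypothetical helper)."""
    step = num_train_steps // num_inference_steps
    return [i * step for i in reversed(range(num_inference_steps))]


def ddim_sigmas(alphas_cumprod, timesteps, eta):
    """Noise scale sigma_t(eta) for each sampling step (hypothetical helper).

    eta = 0 gives deterministic DDIM sampling; eta = 1 gives DDPM-style
    stochastic sampling.
    """
    sigmas = []
    for i, t in enumerate(timesteps):
        a_t = alphas_cumprod[t]
        # Cumulative alpha of the next (less noisy) step in the subsequence;
        # the final step targets the clean image, where it equals 1.
        a_prev = alphas_cumprod[timesteps[i + 1]] if i + 1 < len(timesteps) else 1.0
        sigma = eta * math.sqrt((1.0 - a_prev) / (1.0 - a_t)) * math.sqrt(1.0 - a_t / a_prev)
        sigmas.append(sigma)
    return sigmas


# Assumed linear beta schedule over 1000 training steps (illustrative values).
num_train_steps = 1000
betas = [1e-4 + (2e-2 - 1e-4) * i / (num_train_steps - 1) for i in range(num_train_steps)]
alphas_cumprod = []
running = 1.0
for beta in betas:
    running *= 1.0 - beta
    alphas_cumprod.append(running)

timesteps = ddim_timesteps(num_train_steps, 50)              # S = 50
sigmas_ddim = ddim_sigmas(alphas_cumprod, timesteps, eta=0.0)  # all zeros
sigmas_ddpm = ddim_sigmas(alphas_cumprod, timesteps, eta=1.0)  # noise injected at each step
```

With η = 0 every σ is zero, so the same starting noise always yields the same image; raising *S* trades more computation for sample quality, as the abstract describes.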

 import PIL.Image
 import numpy as np

+model_id = "fusing/ddim-celeba-hq"

 # load model and scheduler
 ddpm = DiffusionPipeline.from_pretrained(model_id)

 # run pipeline in inference (sample random noise and denoise)
+image = ddpm(eta=0.0, num_inference_steps=50)

 # process image to PIL
 image_processed = image.cpu().permute(0, 2, 3, 1)
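The hunk stops at the `permute` call, and the lines between it and `image_pil.save("test.png")` are not shown in this diff. A minimal sketch of what such a post-processing step could look like is given below, using NumPy only. It assumes the pipeline output is a `(batch, channels, height, width)` array with values in `[-1, 1]`, which the diff does not confirm; `to_uint8_images` is a hypothetical helper name.

```python
import numpy as np


def to_uint8_images(batch: np.ndarray) -> np.ndarray:
    """Convert a (batch, channels, height, width) float array in [-1, 1]
    to a (batch, height, width, channels) uint8 array in [0, 255]."""
    hwc = np.transpose(batch, (0, 2, 3, 1))  # same axis order as permute(0, 2, 3, 1)
    scaled = (hwc + 1.0) * 127.5             # map [-1, 1] to [0, 255]
    return np.clip(scaled, 0, 255).round().astype(np.uint8)


# Random stand-in for a model output: one 3-channel 4x4 image (assumed value range).
fake_batch = np.random.default_rng(0).uniform(-1.0, 1.0, size=(1, 3, 4, 4))
pixels = to_uint8_images(fake_batch)
```

With Pillow installed, `PIL.Image.fromarray(pixels[0]).save("test.png")` would then write the first image of the batch to disk.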


 ## Samples

+1.
+2.
+3.
+4.