File size: 1,773 Bytes

22e24fc 96b4655 6641744 96b4655 674c987 6641744 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 |

---

license: mit

language:

- es

metrics:

- accuracy

pipeline_tag: fill-mask

widget:

- text: Vamos a comer unos [MASK]

example_title: "Vamos a comer unos tacos"

tags:

- code

- nlp

- custom

- bilma

tokenizer:

- yes

---

# BILMA (Bert In Latin aMericA)

Bilma is a BERT implementation in tensorflow and trained on the Masked Language Model task under the https://sadit.github.io/regional-spanish-models-talk-2022/ datasets.

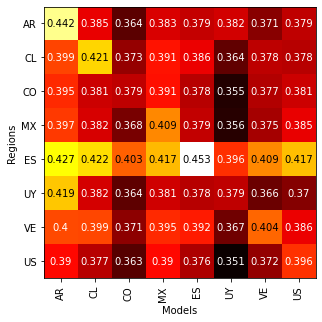

The accuracy of the models trained on the MLM task for different regions are:

# Pre-requisites

You will need TensorFlow 2.4 or newer.

# Quick guide

You can see the demo notebooks for a quick guide on how to use the models.

Clone this repository and then run

```

bash download-emoji15-bilma.sh

```

to download the MX model. Then to load the model you can use the code:

```

from bilma import bilma_model

vocab_file = "vocab_file_All.txt"

model_file = "bilma_small_MX_epoch-1_classification_epochs-13.h5"

model = bilma_model.load(model_file)

tokenizer = bilma_model.tokenizer(vocab_file=vocab_file,

max_length=280)

```

Now you will need some text:

```

texts = ["Tenemos tres dias sin internet ni senal de celular en el pueblo.",

"Incomunicados en el siglo XXI tampoco hay servicio de telefonia fija",

"Vamos a comer unos tacos",

"Los del banco no dejan de llamarme"]

toks = tokenizer.tokenize(texts)

```

With this, you are ready to use the model

```

p = model.predict(toks)

tokenizer.decode_emo(p[1])

```

which produces the output:

each emoji correspond to each entry in `texts`. |