---
license: apache-2.0
tags:
- alpaca
- gpt4
- gpt-j
- instruction
- finetuning
- lora
- peft
datasets:
- vicgalle/alpaca-gpt4
pipeline_tag: conversational
base_model: EleutherAI/gpt-j-6b
---

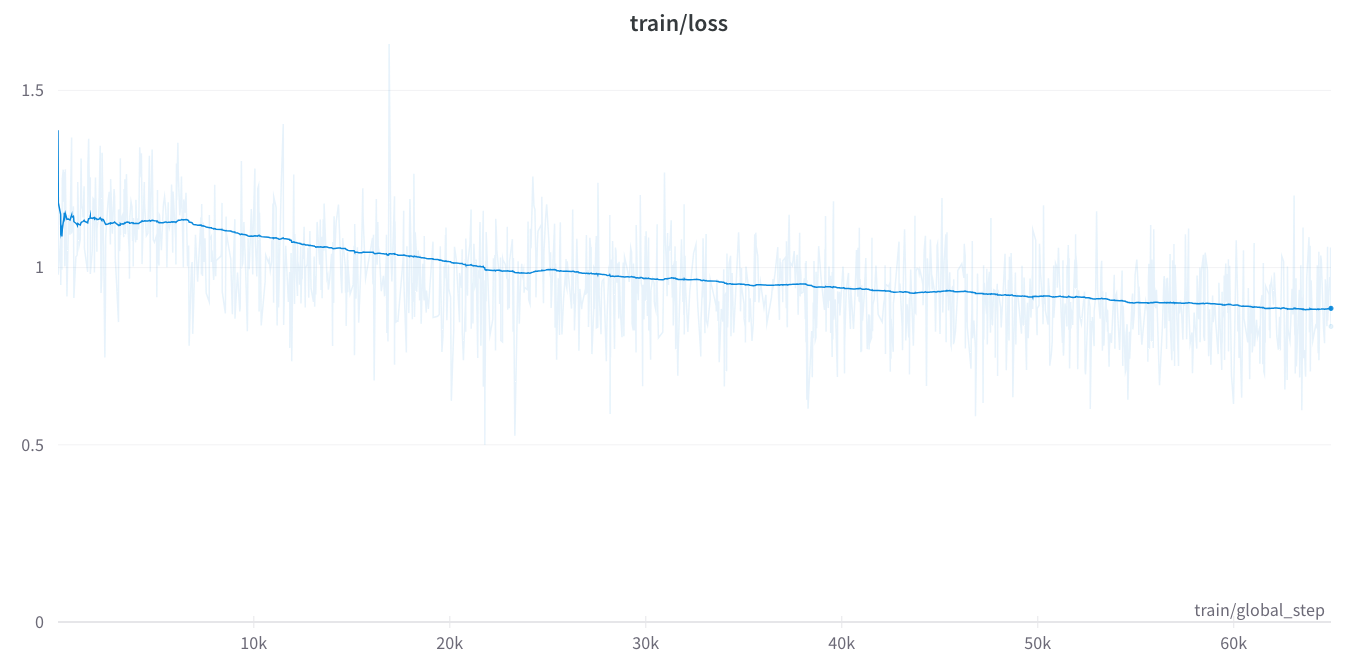

The GPT-J 6B model was fine-tuned with LoRA for ~65,000 steps on GPT-4 generations of the Alpaca prompts, using MonsterAPI's no-code LLM finetuner. The finetuner automatically optimised the run to fit on a single NVIDIA A6000 GPU without out-of-memory issues, so I did not need to write any code or set up a GPU server with the required libraries to run this experiment. The finetuner handles all of this by itself.
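LoRA freezes the base model's weights and trains only a pair of small low-rank matrices per adapted layer, which is what makes a 6B-parameter fine-tune fit on one GPU. The pure-Python sketch below illustrates that idea for a single linear layer. It is not MonsterAPI's implementation: the helper names (`lora_forward`, `lora_param_counts`) are hypothetical, and the rank `r = 8` used in the demo is an assumption, since the rank and scaling actually used for this model are not stated here.

```python
# Minimal LoRA sketch (illustrative, not MonsterAPI's code).
# A frozen weight W (d_out x d_in) is augmented with a low-rank update B @ A,
# scaled by alpha / r. Only A (r x d_in) and B (d_out x r) would be trained.

def matmul(X, Y):
    """Multiply two matrices stored as lists of rows."""
    cols = list(zip(*Y))
    return [[sum(a * b for a, b in zip(row, col)) for col in cols] for row in X]

def transpose(M):
    """Return the transpose of a matrix stored as a list of rows."""
    return [list(c) for c in zip(*M)]

def lora_forward(x, W, A, B, alpha, r):
    """Compute y = x W^T + (alpha / r) * x A^T B^T for a batch of row vectors x."""
    base = matmul(x, transpose(W))                       # frozen path
    delta = matmul(matmul(x, transpose(A)), transpose(B))  # low-rank path
    scale = alpha / r
    return [[b + scale * d for b, d in zip(br, dr)] for br, dr in zip(base, delta)]

def lora_param_counts(d_in, d_out, r):
    """Return (full fine-tuning params, LoRA trainable params) for one linear layer."""
    return d_in * d_out, r * (d_in + d_out)

if __name__ == "__main__":
    # Hypothetical example: a 4096 x 4096 projection (GPT-J's hidden size)
    # with an ASSUMED rank r = 8; the rank used for this model is not stated.
    full, lora = lora_param_counts(4096, 4096, 8)
    print(f"full: {full:,}  LoRA: {lora:,}  ratio: {lora / full:.2%}")
```

Under these assumptions, one 4096 x 4096 projection has 16,777,216 weights, while its LoRA adapter trains only 65,536 (about 0.39%). In the original LoRA formulation `B` is initialised to zero, so the adapted layer starts out identical to the frozen base layer.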

Documentation for the no-code LLM finetuner: https://docs.monsterapi.ai/fine-tune-a-large-language-model-llm