Upload folder using huggingface_hub

- .gitattributes +2 -0

- .ipynb_checkpoints/config-checkpoint.json +28 -0

- checkpoint-1840/config.json +28 -0

- checkpoint-1840/model.safetensors +3 -0

- checkpoint-1840/optimizer.pt +3 -0

- checkpoint-1840/rng_state.pth +3 -0

- checkpoint-1840/scheduler.pt +3 -0

- checkpoint-1840/trainer_state.json +37 -0

- checkpoint-1840/training_args.bin +3 -0

- classification_report.json +1 -0

- config.json +28 -0

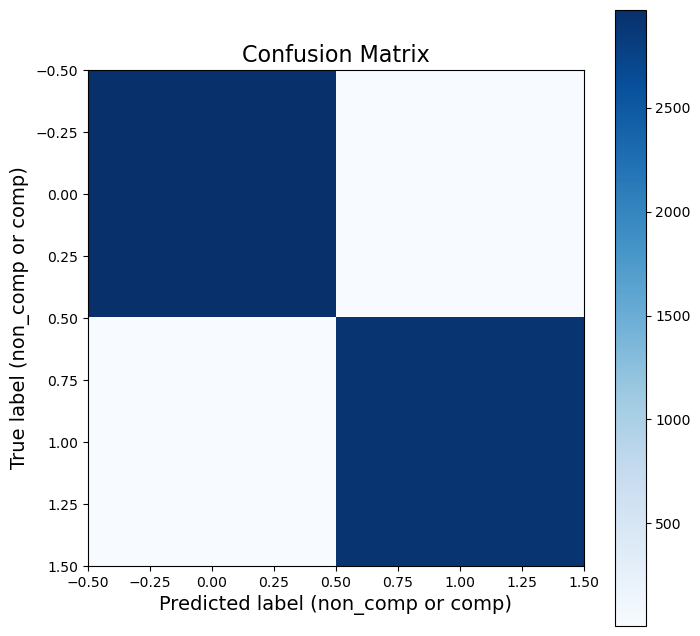

- confusion_matrix.png +0 -0

- detailed_confusion_matrix.png +0 -0

- fold_results.json +67 -0

- metrics.json +1 -0

- metrics_all_fold.json +44 -0

- metrics_ci_bounds.json +26 -0

- metrics_mean.json +8 -0

- metrics_std.json +8 -0

- metrics_visualisation.png +0 -0

- model.safetensors +3 -0

- precision_recall_curve.png +0 -0

- reduced_main_data.csv +3 -0

- roc_curve.png +0 -0

- test_data_for_future_evaluation.csv +3 -0

- test_top_repo_data.csv +0 -0

- top_repo_data.csv +0 -0

- tracker_carbon_statistics.json +33 -0

- training_args.bin +3 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+reduced_main_data.csv filter=lfs diff=lfs merge=lfs -text
+test_data_for_future_evaluation.csv filter=lfs diff=lfs merge=lfs -text
```
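Git applies a pattern without a slash, such as `*.zip`, to a file's basename. The two new lines therefore put exactly those two CSV files under LFS. Other CSVs in this upload (for example top_repo_data.csv) stay regular Git blobs unless an earlier line, not shown in this hunk, matches them. The sketch below approximates that matching with `fnmatch`, using only the patterns visible above. It is an approximation: it ignores negation, `**` and directory-anchored patterns such as `saved_model/**/*`, and `tracked_by_lfs` is an illustrative name.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Only the patterns visible in this hunk; lines 1-32 of .gitattributes are not shown.
LFS_PATTERNS = [
    "*.zip",
    "*.zst",
    "*tfevents*",
    "reduced_main_data.csv",
    "test_data_for_future_evaluation.csv",
]


def tracked_by_lfs(path: str) -> bool:
    """Approximate gitattributes matching for slash-free patterns (basename match)."""
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in LFS_PATTERNS)


for path in ["reduced_main_data.csv", "top_repo_data.csv", "runs/events.out.tfevents.1712"]:
    print(path, tracked_by_lfs(path))
```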

.ipynb_checkpoints/config-checkpoint.json

ADDED

```diff
@@ -0,0 +1,28 @@
+{
+  "_name_or_path": "distilroberta-base",
+  "architectures": [
+    "RobertaForSequenceClassification"
+  ],
+  "attention_probs_dropout_prob": 0.1,
+  "bos_token_id": 0,
+  "classifier_dropout": null,
+  "eos_token_id": 2,
+  "hidden_act": "gelu",
+  "hidden_dropout_prob": 0.1,
+  "hidden_size": 768,
+  "initializer_range": 0.02,
+  "intermediate_size": 3072,
+  "layer_norm_eps": 1e-05,
+  "max_position_embeddings": 514,
+  "model_type": "roberta",
+  "num_attention_heads": 12,
+  "num_hidden_layers": 6,
+  "pad_token_id": 1,
+  "position_embedding_type": "absolute",
+  "problem_type": "single_label_classification",
+  "torch_dtype": "float32",
+  "transformers_version": "4.35.0",
+  "type_vocab_size": 1,
+  "use_cache": true,
+  "vocab_size": 50265
+}
```

checkpoint-1840/config.json

ADDED

```diff
@@ -0,0 +1,28 @@
+{
+  "_name_or_path": "distilroberta-base",
+  "architectures": [
+    "RobertaForSequenceClassification"
+  ],
+  "attention_probs_dropout_prob": 0.1,
+  "bos_token_id": 0,
+  "classifier_dropout": null,
+  "eos_token_id": 2,
+  "hidden_act": "gelu",
+  "hidden_dropout_prob": 0.1,
+  "hidden_size": 768,
+  "initializer_range": 0.02,
+  "intermediate_size": 3072,
+  "layer_norm_eps": 1e-05,
+  "max_position_embeddings": 514,
+  "model_type": "roberta",
+  "num_attention_heads": 12,
+  "num_hidden_layers": 6,
+  "pad_token_id": 1,
+  "position_embedding_type": "absolute",
+  "problem_type": "single_label_classification",
+  "torch_dtype": "float32",
+  "transformers_version": "4.35.0",
+  "type_vocab_size": 1,
+  "use_cache": true,
+  "vocab_size": 50265
+}
```
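The size fields here (also shared by the other two config files in this commit) fix the shape of the network. As a rough cross-check, the sketch below derives three quantities from them:

- The per-head dimension.
- The usable sequence length. RoBERTa position ids start at `pad_token_id + 1`, so two of the 514 position slots never hold tokens.
- The parameter count of a `RobertaForSequenceClassification` model.

The layer breakdown assumes the standard RoBERTa layout in `transformers`: the classification variant has no pooler but adds a dense + `out_proj` head. `NUM_LABELS = 2` is also an assumption, because the config lists no `id2label`.

```python
# Size fields copied from the config above.
config = {
    "hidden_size": 768,
    "intermediate_size": 3072,
    "max_position_embeddings": 514,
    "num_attention_heads": 12,
    "num_hidden_layers": 6,
    "pad_token_id": 1,
    "type_vocab_size": 1,
    "vocab_size": 50265,
}
NUM_LABELS = 2  # assumption: no id2label in the config, so the default of 2 labels applies


def linear(n_in: int, n_out: int) -> int:
    """Parameters of a dense layer with bias."""
    return n_in * n_out + n_out


def roberta_classifier_params(c: dict, num_labels: int) -> int:
    """Assumed parameter layout of RobertaForSequenceClassification (no pooler)."""
    h, ff = c["hidden_size"], c["intermediate_size"]
    layer_norm = 2 * h  # weight + bias
    embeddings = (
        c["vocab_size"] * h                 # word embeddings
        + c["max_position_embeddings"] * h  # position embeddings
        + c["type_vocab_size"] * h          # token-type embeddings
        + layer_norm
    )
    per_layer = (
        3 * linear(h, h)   # query, key, value projections
        + linear(h, h)     # attention output projection
        + layer_norm
        + linear(h, ff)    # feed-forward up-projection
        + linear(ff, h)    # feed-forward down-projection
        + layer_norm
    )
    head = linear(h, h) + linear(h, num_labels)  # classifier.dense + classifier.out_proj
    return embeddings + c["num_hidden_layers"] * per_layer + head


head_dim = config["hidden_size"] // config["num_attention_heads"]
max_tokens = config["max_position_embeddings"] - (config["pad_token_id"] + 1)
n_params = roberta_classifier_params(config, NUM_LABELS)
print(head_dim, max_tokens, n_params)  # 64 512 82119938
```

At 4 bytes per float32 value, that is 328,479,752 bytes, slightly under the 328,492,280-byte checkpoint-1840/model.safetensors. The gap of about 12.5 KB is plausibly the safetensors JSON header.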

checkpoint-1840/model.safetensors

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:65991d02e936acfa38384baa6366ba8a943dce353cb716b29a18650cfc29a590
+size 328492280
```
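Because the weights are stored as safetensors, their tensor inventory can be inspected without PyTorch. In the safetensors format, a file starts with an 8-byte little-endian header length, followed by a JSON header that maps tensor names to dtype, shape and byte offsets. The sketch below reads that header with the standard library only. On this repository it needs the real file pulled from LFS; the three-line pointer shown here is not enough. Listing names that start with `classifier.` is one way to check whether a given weights file carries the classification head. What each file in this commit actually contains has not been verified here, and the function names are illustrative.

```python
import io
import json
import math
import struct
from typing import BinaryIO


def read_safetensors_header(f: BinaryIO) -> dict:
    """Return {tensor_name: {"dtype", "shape", "data_offsets"}} from a .safetensors stream."""
    (header_len,) = struct.unpack("<Q", f.read(8))  # unsigned 64-bit, little-endian
    header = json.loads(f.read(header_len).decode("utf-8"))
    header.pop("__metadata__", None)  # optional string metadata, not a tensor
    return header


def summarize(header: dict) -> tuple:
    """Total element count and total data bytes across all tensors."""
    n_elements = sum(math.prod(t["shape"]) for t in header.values())
    n_bytes = sum(t["data_offsets"][1] - t["data_offsets"][0] for t in header.values())
    return n_elements, n_bytes


# Self-contained demo on a tiny in-memory file holding one 2x3 float32 tensor.
demo_header = {
    "__metadata__": {"format": "pt"},
    "classifier.out_proj.weight": {"dtype": "F32", "shape": [2, 3], "data_offsets": [0, 24]},
}
raw = json.dumps(demo_header).encode("utf-8")
demo = io.BytesIO(struct.pack("<Q", len(raw)) + raw + bytes(24))
header = read_safetensors_header(demo)
print(sorted(header), summarize(header))  # ['classifier.out_proj.weight'] (6, 24)

# On the real file (after `git lfs pull`):
# with open("checkpoint-1840/model.safetensors", "rb") as f:
#     names = read_safetensors_header(f)
#     print([n for n in names if n.startswith("classifier.")])
```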

checkpoint-1840/optimizer.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8b3b333c16839fa82647f5686e93d13f32b010d46765bab63ed8166643d38154
+size 657047610
```
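Large binaries such as optimizer.pt appear in the diff only as Git LFS pointer files. Each pointer holds the spec version, the SHA-256 of the content and the size in bytes. The sketch below checks a downloaded file against such a pointer using only the standard library. It hashes in chunks so that a 657 MB file does not have to fit in memory, and the function names are illustrative.

```python
import hashlib
import os
import tempfile


def parse_lfs_pointer(text: str) -> dict:
    """Split the 'key value' lines of a Git LFS pointer into a dict."""
    fields = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def file_matches_pointer(path: str, pointer_text: str, chunk_size: int = 1 << 20) -> bool:
    """True if the file's size and SHA-256 match the pointer's 'size' and 'oid'."""
    fields = parse_lfs_pointer(pointer_text)
    algo, _, expected_hex = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    if os.path.getsize(path) != int(fields["size"]):
        return False  # cheap size check before hashing hundreds of MB
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest() == expected_hex


# Demo: build a pointer for a small temporary file, then verify the file against it.
payload = b"example payload"
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(payload)
pointer = (
    "version https://git-lfs.github.com/spec/v1\n"
    f"oid sha256:{hashlib.sha256(payload).hexdigest()}\n"
    f"size {len(payload)}\n"
)
ok = file_matches_pointer(tmp.name, pointer)
os.remove(tmp.name)
print(ok)  # True
```

Comparing pointers alone, without downloading anything, also shows something about this commit: the two training_args.bin pointers (root and checkpoint-1840) carry the same oid and size, so they refer to identical content.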

checkpoint-1840/rng_state.pth

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:7d167b935a74aa253d1aa8950b2f24089232dac154efb8ac3f4c8a615e8446dd
+size 14244
```

checkpoint-1840/scheduler.pt

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1b6f5d79dc65499bbc5ce5338f192aaac4cabe3d9de7659ebb3455dfecb8c648
+size 1064
```

checkpoint-1840/trainer_state.json

ADDED

```diff
@@ -0,0 +1,37 @@
+{
+  "best_metric": null,
+  "best_model_checkpoint": null,
+  "epoch": 4.993215739484397,
+  "eval_steps": 500,
+  "global_step": 1840,
+  "is_hyper_param_search": false,
+  "is_local_process_zero": true,
+  "is_world_process_zero": true,
+  "log_history": [
+    {
+      "epoch": 1.36,
+      "learning_rate": 4.9800000000000004e-05,
+      "loss": 0.0281,
+      "step": 500
+    },
+    {
+      "epoch": 2.71,
+      "learning_rate": 3.4810224965023004e-05,
+      "loss": 0.0472,
+      "step": 1000
+    },
+    {
+      "epoch": 4.07,
+      "learning_rate": 7.614811590589446e-06,
+      "loss": 0.0231,
+      "step": 1500
+    }
+  ],
+  "logging_steps": 500,
+  "max_steps": 1840,
+  "num_train_epochs": 5,
+  "save_steps": 500,
+  "total_flos": 1.5591942691405824e+16,
+  "trial_name": null,
+  "trial_params": null
+}
```
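The `log_history` entries can be read back with plain `json`. The sketch below uses a trimmed copy of the values above (only the fields it needs) to tabulate loss and learning rate. It also divides `global_step` by the final `epoch` to get the number of optimizer steps per epoch.

```python
import json

# Trimmed copy of checkpoint-1840/trainer_state.json (values copied from the diff above).
state = json.loads("""
{
  "epoch": 4.993215739484397,
  "global_step": 1840,
  "log_history": [
    {"epoch": 1.36, "learning_rate": 4.9800000000000004e-05, "loss": 0.0281, "step": 500},
    {"epoch": 2.71, "learning_rate": 3.4810224965023004e-05, "loss": 0.0472, "step": 1000},
    {"epoch": 4.07, "learning_rate": 7.614811590589446e-06, "loss": 0.0231, "step": 1500}
  ]
}
""")

for entry in state["log_history"]:
    # Training-loss entries carry "loss"; evaluation entries, if present, carry "eval_*" keys instead.
    if "loss" in entry:
        print(f"step {entry['step']:>5}  epoch {entry['epoch']:.2f}  "
              f"loss {entry['loss']:.4f}  lr {entry['learning_rate']:.2e}")

steps_per_epoch = state["global_step"] / state["epoch"]
print(f"{steps_per_epoch:.1f} optimizer steps per epoch")  # 368.5 optimizer steps per epoch
```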

checkpoint-1840/training_args.bin

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8482030ab2426c1370f4f8a5abc57fb9e3570c2d3f610e3261bf2a9bfa841aa0
+size 4664
```

classification_report.json

ADDED

```diff
@@ -0,0 +1 @@
+{"0": {"precision": 0.9986559139784946, "recall": 0.9983204568357407, "f1-score": 0.9984881572316479, "support": 2977}, "1": {"precision": 0.9982853223593965, "recall": 0.9986277873070326, "f1-score": 0.9984565254673299, "support": 2915}, "accuracy": 0.9984725050916496, "macro avg": {"precision": 0.9984706181689456, "recall": 0.9984741220713866, "f1-score": 0.9984723413494889, "support": 5892}, "weighted avg": {"precision": 0.9984725679890731, "recall": 0.9984725050916496, "f1-score": 0.9984725077759473, "support": 5892}}
```
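The report gives per-class support and recall, which is enough to recover the underlying confusion matrix. Multiplying recall by support gives 2972 of 2977 class-0 samples and 2911 of 2915 class-1 samples predicted correctly. The sketch below takes those counts (inferred here, not stored in the upload) and recomputes the reported figures, including the MCC that appears in metrics.json.

```python
import math

# Confusion-matrix counts inferred from classification_report.json (support x recall).
TN, FP = 2972, 5   # true class 0: 2977 samples
FN, TP = 4, 2911   # true class 1: 2915 samples

precision_1 = TP / (TP + FP)
recall_1 = TP / (TP + FN)
f1_1 = 2 * precision_1 * recall_1 / (precision_1 + recall_1)
precision_0 = TN / (TN + FN)
recall_0 = TN / (TN + FP)
accuracy = (TP + TN) / (TP + TN + FP + FN)
# Matthews correlation coefficient for a binary confusion matrix.
mcc = (TP * TN - FP * FN) / math.sqrt((TP + FP) * (TP + FN) * (TN + FP) * (TN + FN))

print(f"class 1: P={precision_1:.4f} R={recall_1:.4f} F1={f1_1:.4f}")
print(f"class 0: P={precision_0:.4f} R={recall_0:.4f}")
print(f"accuracy={accuracy:.4f} mcc={mcc:.4f}")
```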

config.json

ADDED

```diff
@@ -0,0 +1,28 @@
+{
+  "_name_or_path": "binary_classification_train_comp",
+  "architectures": [
+    "RobertaModel"
+  ],
+  "attention_probs_dropout_prob": 0.1,
+  "bos_token_id": 0,
+  "classifier_dropout": null,
+  "eos_token_id": 2,
+  "hidden_act": "gelu",
+  "hidden_dropout_prob": 0.1,
+  "hidden_size": 768,
+  "initializer_range": 0.02,
+  "intermediate_size": 3072,
+  "layer_norm_eps": 1e-05,
+  "max_position_embeddings": 514,
+  "model_type": "roberta",
+  "num_attention_heads": 12,
+  "num_hidden_layers": 6,
+  "pad_token_id": 1,
+  "position_embedding_type": "absolute",
+  "problem_type": "single_label_classification",
+  "torch_dtype": "float32",
+  "transformers_version": "4.35.0",
+  "type_vocab_size": 1,
+  "use_cache": true,
+  "vocab_size": 50265
+}
```

confusion_matrix.png

ADDED

detailed_confusion_matrix.png

ADDED

fold_results.json

ADDED

```diff
@@ -0,0 +1,67 @@
+{
+  "0": {
+    "eval_loss": 0.49251872301101685,
+    "eval_precision": 0.8383937316356513,
+    "eval_recall": 0.8806584362139918,
+    "eval_acc": 0.8569489224503648,
+    "eval_mcc": 0.7149044085568931,
+    "eval_f1": 0.8590065228299046,
+    "eval_auc": 0.9368403646060125,
+    "eval_runtime": 43.2643,
+    "eval_samples_per_second": 136.209,
+    "eval_steps_per_second": 2.15,
+    "epoch": 4.99
+  },
+  "1": {
+    "eval_loss": 0.1094377413392067,
+    "eval_precision": 0.9633649932157394,
+    "eval_recall": 0.9742710120068611,
+    "eval_acc": 0.96894620736467,
+    "eval_mcc": 0.9379515794159662,
+    "eval_f1": 0.9687873102507248,
+    "eval_auc": 0.9945646576898399,
+    "eval_runtime": 44.6925,
+    "eval_samples_per_second": 131.857,
+    "eval_steps_per_second": 2.081,
+    "epoch": 4.99
+  },
+  "2": {
+    "eval_loss": 0.03799332678318024,
+    "eval_precision": 0.9870616275110657,
+    "eval_recall": 0.9945111492281303,
+    "eval_acc": 0.9908350305498982,
+    "eval_mcc": 0.9816968472799382,
+    "eval_f1": 0.9907723855092277,
+    "eval_auc": 0.9989923317187055,
+    "eval_runtime": 43.1075,
+    "eval_samples_per_second": 136.682,
+    "eval_steps_per_second": 2.157,
+    "epoch": 4.99
+  },
+  "3": {
+    "eval_loss": 0.01312282308936119,
+    "eval_precision": 0.9958918178705922,
+    "eval_recall": 0.9979416809605489,
+    "eval_acc": 0.9969450101832994,
+    "eval_mcc": 0.9938915364089936,
+    "eval_f1": 0.9969156956819739,
+    "eval_auc": 0.9997945368465266,
+    "eval_runtime": 45.5654,
+    "eval_samples_per_second": 129.309,
+    "eval_steps_per_second": 2.041,
+    "epoch": 4.99
+  },
+  "4": {
+    "eval_loss": 0.005135194398462772,
+    "eval_precision": 0.9982853223593965,
+    "eval_recall": 0.9986277873070326,
+    "eval_acc": 0.9984725050916496,
+    "eval_mcc": 0.9969447402341746,
+    "eval_f1": 0.9984565254673299,
+    "eval_auc": 0.9999125369974838,
+    "eval_runtime": 49.4472,
+    "eval_samples_per_second": 119.157,
+    "eval_steps_per_second": 1.881,
+    "epoch": 4.99
+  }
+}
```
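metrics_all_fold.json holds the same numbers as fold_results.json, regrouped per metric with the `eval_` prefix dropped and the loss and timing fields omitted. metrics.json and classification_report.json match fold "4". The sketch below shows that regrouping. The helper name and the metric list are assumptions read off the two files, not the project's own code.

```python
# Hypothetical helper: regroup {fold: {"eval_<metric>": value}} into {metric: [value per fold]}.
METRICS = ["precision", "recall", "f1", "auc", "acc", "mcc"]  # key order of metrics_all_fold.json


def per_metric(fold_results: dict) -> dict:
    folds = sorted(fold_results, key=int)  # "0", "1", ... in numeric order
    return {m: [fold_results[k][f"eval_{m}"] for k in folds] for m in METRICS}


# Two folds copied from fold_results.json; loss, runtime and epoch fields are ignored.
sample = {
    "0": {"eval_loss": 0.49251872301101685, "eval_precision": 0.8383937316356513,
          "eval_recall": 0.8806584362139918, "eval_acc": 0.8569489224503648,
          "eval_mcc": 0.7149044085568931, "eval_f1": 0.8590065228299046,
          "eval_auc": 0.9368403646060125, "eval_runtime": 43.2643, "epoch": 4.99},
    "1": {"eval_loss": 0.1094377413392067, "eval_precision": 0.9633649932157394,
          "eval_recall": 0.9742710120068611, "eval_acc": 0.96894620736467,
          "eval_mcc": 0.9379515794159662, "eval_f1": 0.9687873102507248,
          "eval_auc": 0.9945646576898399, "eval_runtime": 44.6925, "epoch": 4.99},
}
print(per_metric(sample)["mcc"])  # [0.7149044085568931, 0.9379515794159662]
```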

metrics.json

ADDED

```diff
@@ -0,0 +1 @@
+{"precision": 0.9982853223593965, "recall": 0.9986277873070326, "acc": 0.9984725050916496, "mcc": 0.9969447402341746, "f1": 0.9984565254673299, "auc": 0.9999125369974838}
```

metrics_all_fold.json

ADDED

```diff
@@ -0,0 +1,44 @@
+{
+  "precision": [
+    0.8383937316356513,
+    0.9633649932157394,
+    0.9870616275110657,
+    0.9958918178705922,
+    0.9982853223593965
+  ],
+  "recall": [
+    0.8806584362139918,
+    0.9742710120068611,
+    0.9945111492281303,
+    0.9979416809605489,
+    0.9986277873070326
+  ],
+  "f1": [
+    0.8590065228299046,
+    0.9687873102507248,
+    0.9907723855092277,
+    0.9969156956819739,
+    0.9984565254673299
+  ],
+  "auc": [
+    0.9368403646060125,
+    0.9945646576898399,
+    0.9989923317187055,
+    0.9997945368465266,
+    0.9999125369974838
+  ],
+  "acc": [
+    0.8569489224503648,
+    0.96894620736467,
+    0.9908350305498982,
+    0.9969450101832994,
+    0.9984725050916496
+  ],
+  "mcc": [
+    0.7149044085568931,
+    0.9379515794159662,
+    0.9816968472799382,
+    0.9938915364089936,
+    0.9969447402341746
+  ]
+}
```

metrics_ci_bounds.json

ADDED

```diff
@@ -0,0 +1,26 @@
+{
+  "precision": {
+    "ci_lower": 0.872780485843686,
+    "ci_upper": 1.0404185111932922
+  },
+  "recall": {
+    "ci_lower": 0.9065070275143534,
+    "ci_upper": 1.0318969987722724
+  },
+  "f1": {
+    "ci_lower": 0.8892599568883727,
+    "ci_upper": 1.0363154190072918
+  },
+  "auc": {
+    "ci_lower": 0.9517755451801476,
+    "ci_upper": 1.0202662259632802
+  },
+  "acc": {
+    "ci_lower": 0.8877593873405121,
+    "ci_upper": 1.0370996829154409
+  },
+  "mcc": {
+    "ci_lower": 0.7762794118041381,
+    "ci_upper": 1.0738762329542482
+  }
+}
```
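The summary files are consistent with a Student-t interval over the five fold scores:

- metrics_mean.json holds the mean.
- metrics_std.json holds the sample standard deviation, with n - 1 in the denominator.
- metrics_ci_bounds.json holds mean ± t(0.975, 4) · s / √5.

The sketch below reproduces the precision entries under that assumption. The critical value is hard-coded so that only the standard library is needed.

```python
import math
import statistics

# Per-fold precision from metrics_all_fold.json.
precision = [0.8383937316356513, 0.9633649932157394, 0.9870616275110657,
             0.9958918178705922, 0.9982853223593965]

T_975_DF4 = 2.7764451051977987  # two-sided 95% Student-t critical value, 4 degrees of freedom


def t_interval(values: list) -> tuple:
    """Mean, sample SD and a 95% t-interval for the mean of five fold scores."""
    n = len(values)
    if n != 5:
        raise ValueError("the hard-coded critical value assumes exactly five folds")
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample standard deviation (n - 1 denominator)
    half_width = T_975_DF4 * sd / math.sqrt(n)
    return mean, sd, mean - half_width, mean + half_width


mean, sd, lower, upper = t_interval(precision)
print(f"mean={mean:.4f} sd={sd:.4f} CI=[{lower:.4f}, {upper:.4f}]")  # mean=0.9566 sd=0.0675 CI=[0.8728, 1.0404]
print(f"upper bound clipped to the metric's range: {min(upper, 1.0):.4f}")  # 1.0000
```

Every upper bound in metrics_ci_bounds.json exceeds 1.0, a value these metrics cannot reach. With only five widely spread fold scores, the symmetric interval overshoots. Clipping at 1.0, or using a bootstrap interval, avoids quoting an impossible value.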

metrics_mean.json

ADDED

```diff
@@ -0,0 +1,8 @@
+{
+  "precision": 0.956599498518489,
+  "recall": 0.9692020131433129,
+  "f1": 0.9627876879478322,
+  "auc": 0.9860208855717139,
+  "acc": 0.9624295351279765,
+  "mcc": 0.9250778223791931
+}
```

metrics_std.json

ADDED

```diff
@@ -0,0 +1,8 @@
+{
+  "precision": 0.06750539018289854,
+  "recall": 0.050492714389445276,
+  "f1": 0.05921709187495754,
+  "auc": 0.027580199185211717,
+  "acc": 0.060137161015769824,
+  "mcc": 0.11983790364407107
+}
```

metrics_visualisation.png

ADDED

model.safetensors

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ba78e30ea5990c11835a6c6a7b03ba0c39607b0f8c9d6e8cc10948f6eac8f978
+size 328485128
```

precision_recall_curve.png

ADDED

reduced_main_data.csv

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:41d8e1e3865f3312ed20c352a03a598802f2f71c7016e8ef21522680dbdb45b9
+size 134634243
```

roc_curve.png

ADDED

test_data_for_future_evaluation.csv

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:dc736021d43f2a6b45129f7f04e264c2650030eae35f3b441399d7295bbf0c30
+size 27483092
```

test_top_repo_data.csv

ADDED

The diff for this file is too large to render.

top_repo_data.csv

ADDED

The diff for this file is too large to render.

tracker_carbon_statistics.json

ADDED

```diff
@@ -0,0 +1,33 @@
+{
+  "cloud_provider": "",
+  "cloud_region": "",
+  "codecarbon_version": "2.3.4",
+  "country_iso_code": "NOR",
+  "country_name": "Norway",
+  "cpu_count": 192,
+  "cpu_energy": 0.13407618624252984,
+  "cpu_model": "AMD EPYC 7642 48-Core Processor",
+  "cpu_power": 115.58872123619882,
+  "duration": 4398.068885803223,
+  "emissions": 0.04812536324960169,
+  "emissions_rate": 1.0942385055620274e-05,
+  "energy_consumed": 1.7468371415463408,
+  "gpu_count": 4,
+  "gpu_energy": 1.1546827076343176,
+  "gpu_model": "4 x NVIDIA GeForce RTX 3090",
+  "gpu_power": 930.33821452628,
+  "latitude": 59.9016,
+  "longitude": 10.7343,
+  "on_cloud": "N",
+  "os": "Linux-4.18.0-513.18.1.el8_9.x86_64-x86_64-with-glibc2.28",
+  "project_name": "codecarbon",
+  "pue": 1.0,
+  "python_version": "3.10.8",
+  "ram_energy": 0.4580782476694937,
+  "ram_power": 377.6938190460205,
+  "ram_total_size": 1007.1835174560547,
+  "region": "oslo county",
+  "run_id": "e316b659-b759-4762-8600-b944027dd60d",
+  "timestamp": "2024-04-05T15:17:11",
+  "tracking_mode": "machine"
+}
```
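CodeCarbon reports energy in kWh, emissions in kg CO2-equivalent and duration in seconds. The fields above are internally consistent in three ways:

- `energy_consumed` is the sum of the CPU, GPU and RAM components.
- `emissions_rate` is `emissions` divided by `duration`.
- `emissions` divided by `energy_consumed` gives the grid carbon intensity that was applied.

A short check using values copied from the file:

```python
import math

# Values copied from tracker_carbon_statistics.json.
stats = {
    "cpu_energy": 0.13407618624252984,         # kWh
    "gpu_energy": 1.1546827076343176,          # kWh
    "ram_energy": 0.4580782476694937,          # kWh
    "energy_consumed": 1.7468371415463408,     # kWh
    "emissions": 0.04812536324960169,          # kg CO2eq
    "emissions_rate": 1.0942385055620274e-05,  # kg CO2eq per second
    "duration": 4398.068885803223,             # seconds
}

component_sum = stats["cpu_energy"] + stats["gpu_energy"] + stats["ram_energy"]
rate = stats["emissions"] / stats["duration"]
intensity_g_per_kwh = 1000 * stats["emissions"] / stats["energy_consumed"]

print(math.isclose(component_sum, stats["energy_consumed"], rel_tol=1e-9))  # True
print(math.isclose(rate, stats["emissions_rate"], rel_tol=1e-6))            # True
print(f"{stats['duration'] / 3600:.2f} h, {intensity_g_per_kwh:.2f} g CO2eq/kWh")
```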

training_args.bin

ADDED

```diff
@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8482030ab2426c1370f4f8a5abc57fb9e3570c2d3f610e3261bf2a9bfa841aa0
+size 4664
```