add examples in readme

Browse files

README.md

CHANGED

|

@@ -53,7 +53,6 @@ pipe.to("cuda")

|

|

| 53 |

pipe.load_lora_weights(adapter_id)

|

| 54 |

pipe.fuse_lora()

|

| 55 |

|

| 56 |

-

|

| 57 |

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

|

| 58 |

|

| 59 |

# disable guidance_scale by passing 0

|

|

@@ -62,21 +61,206 @@ image = pipe(prompt=prompt, num_inference_steps=4, guidance_scale=0).images[0]

|

|

| 62 |

|

| 63 |

|

| 64 |

|

| 65 |

-

###

|

| 66 |

|

| 67 |

-

|

| 68 |

|

| 69 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 70 |

|

| 71 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 72 |

|

| 73 |

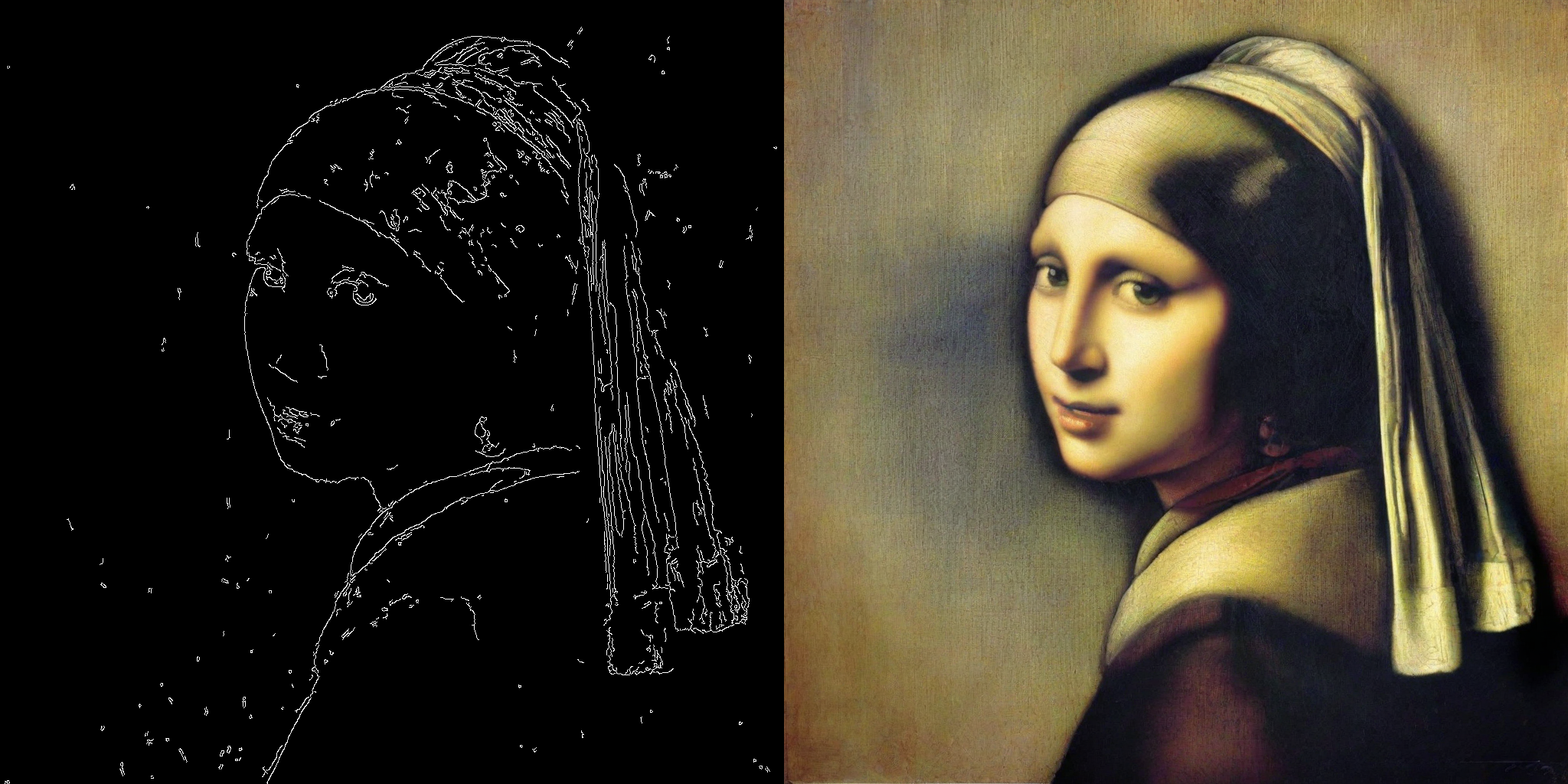

### ControlNet

|

| 74 |

|

| 75 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 76 |

|

| 77 |

### T2I Adapter

|

| 78 |

|

| 79 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 80 |

|

| 81 |

## Speed Benchmark

|

| 82 |

|

|

|

|

| 53 |

pipe.load_lora_weights(adapter_id)

|

| 54 |

pipe.fuse_lora()

|

| 55 |

|

|

|

|

| 56 |

prompt = "Self-portrait oil painting, a beautiful cyborg with golden hair, 8k"

|

| 57 |

|

| 58 |

# disable guidance_scale by passing 0

|

|

|

|

| 61 |

|

| 62 |

|

| 63 |

|

| 64 |

+

### Inpainting

|

| 65 |

|

| 66 |

+

LCM-LoRA can be used for inpainting as well.

|

| 67 |

|

| 68 |

+

```python

|

| 69 |

+

import torch

|

| 70 |

+

from diffusers import AutoPipelineForInpainting, LCMScheduler

|

| 71 |

+

from diffusers.utils import load_image, make_image_grid

|

| 72 |

+

|

| 73 |

+

pipe = AutoPipelineForInpainting.from_pretrained(

|

| 74 |

+

"diffusers/stable-diffusion-xl-1.0-inpainting-0.1",

|

| 75 |

+

torch_dtype=torch.float16,

|

| 76 |

+

variant="fp16",

|

| 77 |

+

).to("cuda")

|

| 78 |

+

|

| 79 |

+

# set scheduler

|

| 80 |

+

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

| 81 |

+

|

| 82 |

+

# load LCM-LoRA

|

| 83 |

+

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

|

| 84 |

+

pipe.fuse_lora()

|

| 85 |

+

|

| 86 |

+

# load base and mask image

|

| 87 |

+

init_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint.png").resize((1024, 1024))

|

| 88 |

+

mask_image = load_image("https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/diffusers/inpaint_mask.png").resize((1024, 1024))

|

| 89 |

+

|

| 90 |

+

prompt = "a castle on top of a mountain, highly detailed, 8k"

|

| 91 |

+

generator = torch.manual_seed(42)

|

| 92 |

+

image = pipe(

|

| 93 |

+

prompt=prompt,

|

| 94 |

+

image=init_image,

|

| 95 |

+

mask_image=mask_image,

|

| 96 |

+

generator=generator,

|

| 97 |

+

num_inference_steps=5,

|

| 98 |

+

guidance_scale=4,

|

| 99 |

+

).images[0]

|

| 100 |

+

make_image_grid([init_image, mask_image, image], rows=1, cols=3)

|

| 101 |

+

```

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

## Combine with styled LoRAs

|

| 107 |

|

| 108 |

+

LCM-LoRA can be combined with other LoRAs to generate styled-images in very few steps (4-8). In the following example, we'll use the LCM-LoRA with the [papercut LoRA](TheLastBen/Papercut_SDXL).

|

| 109 |

+

To learn more about how to combine LoRAs, refer to [this guide](https://huggingface.co/docs/diffusers/tutorials/using_peft_for_inference#combine-multiple-adapters).

|

| 110 |

+

|

| 111 |

+

```python

|

| 112 |

+

import torch

|

| 113 |

+

from diffusers import DiffusionPipeline, LCMScheduler

|

| 114 |

+

|

| 115 |

+

pipe = DiffusionPipeline.from_pretrained(

|

| 116 |

+

"stabilityai/stable-diffusion-xl-base-1.0",

|

| 117 |

+

variant="fp16",

|

| 118 |

+

torch_dtype=torch.float16

|

| 119 |

+

).to("cuda")

|

| 120 |

+

|

| 121 |

+

# set scheduler

|

| 122 |

+

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

| 123 |

+

|

| 124 |

+

# load LoRAs

|

| 125 |

+

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl", adapter_name="lcm")

|

| 126 |

+

pipe.load_lora_weights("TheLastBen/Papercut_SDXL", weight_name="papercut.safetensors", adapter_name="papercut")

|

| 127 |

+

|

| 128 |

+

# Combine LoRAs

|

| 129 |

+

pipe.set_adapters(["lcm", "papercut"], adapter_weights=[1.0, 0.8])

|

| 130 |

+

|

| 131 |

+

prompt = "papercut, a cute fox"

|

| 132 |

+

generator = torch.manual_seed(0)

|

| 133 |

+

image = pipe(prompt, num_inference_steps=4, guidance_scale=1, generator=generator).images[0]

|

| 134 |

+

image

|

| 135 |

+

```

|

| 136 |

+

|

| 137 |

+

|

| 138 |

|

| 139 |

### ControlNet

|

| 140 |

|

| 141 |

+

```python

|

| 142 |

+

import torch

|

| 143 |

+

import cv2

|

| 144 |

+

import numpy as np

|

| 145 |

+

from PIL import Image

|

| 146 |

+

|

| 147 |

+

from diffusers import StableDiffusionXLControlNetPipeline, ControlNetModel, LCMScheduler

|

| 148 |

+

from diffusers.utils import load_image

|

| 149 |

+

|

| 150 |

+

image = load_image(

|

| 151 |

+

"https://hf.co/datasets/huggingface/documentation-images/resolve/main/diffusers/input_image_vermeer.png"

|

| 152 |

+

).resize((1024, 1024))

|

| 153 |

+

|

| 154 |

+

image = np.array(image)

|

| 155 |

+

|

| 156 |

+

low_threshold = 100

|

| 157 |

+

high_threshold = 200

|

| 158 |

+

|

| 159 |

+

image = cv2.Canny(image, low_threshold, high_threshold)

|

| 160 |

+

image = image[:, :, None]

|

| 161 |

+

image = np.concatenate([image, image, image], axis=2)

|

| 162 |

+

canny_image = Image.fromarray(image)

|

| 163 |

+

|

| 164 |

+

controlnet = ControlNetModel.from_pretrained("diffusers/controlnet-canny-sdxl-1.0-small", torch_dtype=torch.float16, variant="fp16")

|

| 165 |

+

pipe = StableDiffusionXLControlNetPipeline.from_pretrained(

|

| 166 |

+

"stabilityai/stable-diffusion-xl-base-1.0",

|

| 167 |

+

controlnet=controlnet,

|

| 168 |

+

torch_dtype=torch.float16,

|

| 169 |

+

safety_checker=None,

|

| 170 |

+

variant="fp16"

|

| 171 |

+

).to("cuda")

|

| 172 |

+

|

| 173 |

+

# set scheduler

|

| 174 |

+

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

| 175 |

+

|

| 176 |

+

# load LCM-LoRA

|

| 177 |

+

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

|

| 178 |

+

pipe.fuse_lora()

|

| 179 |

+

|

| 180 |

+

generator = torch.manual_seed(0)

|

| 181 |

+

image = pipe(

|

| 182 |

+

"picture of the mona lisa",

|

| 183 |

+

image=canny_image,

|

| 184 |

+

num_inference_steps=5,

|

| 185 |

+

guidance_scale=1.5,

|

| 186 |

+

controlnet_conditioning_scale=0.5,

|

| 187 |

+

cross_attention_kwargs={"scale": 1},

|

| 188 |

+

generator=generator,

|

| 189 |

+

).images[0]

|

| 190 |

+

make_image_grid([canny_image, image], rows=1, cols=2)

|

| 191 |

+

```

|

| 192 |

+

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

|

| 196 |

+

<Tip>

|

| 197 |

+

The inference parameters in this example might not work for all examples, so we recommend you to try different values for `num_inference_steps`, `guidance_scale`, `controlnet_conditioning_scale` and `cross_attention_kwargs` parameters and choose the best one.

|

| 198 |

+

</Tip>

|

| 199 |

|

| 200 |

### T2I Adapter

|

| 201 |

|

| 202 |

+

This example shows how to use the LCM-LoRA with the [Canny T2I-Adapter](TencentARC/t2i-adapter-canny-sdxl-1.0) and SDXL.

|

| 203 |

+

|

| 204 |

+

```python

|

| 205 |

+

import torch

|

| 206 |

+

import cv2

|

| 207 |

+

import numpy as np

|

| 208 |

+

from PIL import Image

|

| 209 |

+

|

| 210 |

+

from diffusers import StableDiffusionXLAdapterPipeline, T2IAdapter, LCMScheduler

|

| 211 |

+

from diffusers.utils import load_image, make_image_grid

|

| 212 |

+

|

| 213 |

+

# Prepare image

|

| 214 |

+

# Detect the canny map in low resolution to avoid high-frequency details

|

| 215 |

+

image = load_image(

|

| 216 |

+

"https://huggingface.co/Adapter/t2iadapter/resolve/main/figs_SDXLV1.0/org_canny.jpg"

|

| 217 |

+

).resize((384, 384))

|

| 218 |

+

|

| 219 |

+

image = np.array(image)

|

| 220 |

+

|

| 221 |

+

low_threshold = 100

|

| 222 |

+

high_threshold = 200

|

| 223 |

+

|

| 224 |

+

image = cv2.Canny(image, low_threshold, high_threshold)

|

| 225 |

+

image = image[:, :, None]

|

| 226 |

+

image = np.concatenate([image, image, image], axis=2)

|

| 227 |

+

canny_image = Image.fromarray(image).resize((1024, 1024))

|

| 228 |

+

|

| 229 |

+

# load adapter

|

| 230 |

+

adapter = T2IAdapter.from_pretrained("TencentARC/t2i-adapter-canny-sdxl-1.0", torch_dtype=torch.float16, varient="fp16").to("cuda")

|

| 231 |

+

|

| 232 |

+

pipe = StableDiffusionXLAdapterPipeline.from_pretrained(

|

| 233 |

+

"stabilityai/stable-diffusion-xl-base-1.0",

|

| 234 |

+

adapter=adapter,

|

| 235 |

+

torch_dtype=torch.float16,

|

| 236 |

+

variant="fp16",

|

| 237 |

+

).to("cuda")

|

| 238 |

+

|

| 239 |

+

# set scheduler

|

| 240 |

+

pipe.scheduler = LCMScheduler.from_config(pipe.scheduler.config)

|

| 241 |

+

|

| 242 |

+

# load LCM-LoRA

|

| 243 |

+

pipe.load_lora_weights("latent-consistency/lcm-lora-sdxl")

|

| 244 |

+

|

| 245 |

+

prompt = "Mystical fairy in real, magic, 4k picture, high quality"

|

| 246 |

+

negative_prompt = "extra digit, fewer digits, cropped, worst quality, low quality, glitch, deformed, mutated, ugly, disfigured"

|

| 247 |

+

|

| 248 |

+

generator = torch.manual_seed(0)

|

| 249 |

+

image = pipe(

|

| 250 |

+

prompt=prompt,

|

| 251 |

+

negative_prompt=negative_prompt,

|

| 252 |

+

image=canny_image,

|

| 253 |

+

num_inference_steps=4,

|

| 254 |

+

guidance_scale=1.5,

|

| 255 |

+

adapter_conditioning_scale=0.8,

|

| 256 |

+

adapter_conditioning_factor=1,

|

| 257 |

+

generator=generator,

|

| 258 |

+

).images[0]

|

| 259 |

+

make_image_grid([canny_image, image], rows=1, cols=2)

|

| 260 |

+

```

|

| 261 |

+

|

| 262 |

+

|

| 263 |

+

|

| 264 |

|

| 265 |

## Speed Benchmark

|

| 266 |

|