Commit 84fdf13

Parent(s): 1f047e6

more epochs

- .ipynb_checkpoints/train-checkpoint.ipynb +296 -0

- confusion_matrix_normalized.png +0 -0

- results.png +0 -0

- train.ipynb +0 -0

.ipynb_checkpoints/train-checkpoint.ipynb

ADDED

@@ -0,0 +1,296 @@

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "6a013a36-e156-4212-8ade-5fee79e33680",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"Install dependencies"

|

| 9 |

+

]

|

| 10 |

+

},

|

| 11 |

+

{

|

| 12 |

+

"cell_type": "code",

|

| 13 |

+

"execution_count": null,

|

| 14 |

+

"id": "acabbaee-35be-452b-8573-4d0974fa6340",

|

| 15 |

+

"metadata": {},

|

| 16 |

+

"outputs": [],

|

| 17 |

+

"source": [

|

| 18 |

+

"!pip3 install torch torchvision torchaudio\n",

|

| 19 |

+

"!pip3 install matplotlib\n",

|

| 20 |

+

"!pip3 install ultralytics roboflow"

|

| 21 |

+

]

|

| 22 |

+

},

|

| 23 |

+

{

|

| 24 |

+

"cell_type": "code",

|

| 25 |

+

"execution_count": null,

|

| 26 |

+

"id": "fb8218b5-61c9-4fe3-b5c6-1643beb39e28",

|

| 27 |

+

"metadata": {},

|

| 28 |

+

"outputs": [],

|

| 29 |

+

"source": [

|

| 30 |

+

"import torch\n",

|

| 31 |

+

"from ultralytics import YOLO\n",

|

| 32 |

+

"from pathlib import Path\n",

|

| 33 |

+

"import os\n",

|

| 34 |

+

"import json\n",

|

| 35 |

+

"import yaml\n",

|

| 36 |

+

"import pandas as pd\n",

|

| 37 |

+

"import matplotlib.pyplot as plt\n",

|

| 38 |

+

"import matplotlib.image as mpimg"

|

| 39 |

+

]

|

| 40 |

+

},

|

| 41 |

+

{

|

| 42 |

+

"cell_type": "code",

|

| 43 |

+

"execution_count": null,

|

| 44 |

+

"id": "4bccbb25",

|

| 45 |

+

"metadata": {},

|

| 46 |

+

"outputs": [],

|

| 47 |

+

"source": [

|

| 48 |

+

"\n",

|

| 49 |

+

"device = \"cuda:0\" if torch.cuda.is_available() else \"cpu\"\n",

|

| 50 |

+

"\n",

|

| 51 |

+

"print(f\"Using device: {device} ({'GPU' if device != 'cpu' else 'CPU'})\")\n"

|

| 52 |

+

]

|

| 53 |

+

},

|

| 54 |

+

{

|

| 55 |

+

"cell_type": "code",

|

| 56 |

+

"execution_count": null,

|

| 57 |

+

"metadata": {},

|

| 58 |

+

"outputs": [],

|

| 59 |

+

"source": [

|

| 60 |

+

"\n",

|

| 61 |

+

"CONFIG = {\n",

|

| 62 |

+

" 'model': 'yolo11m.pt', # Choose model size: n, s, m, l, x\n",

|

| 63 |

+

" 'data': 'datasets/Hardhat-or-Hat.v1-without-hat.yolov11/data.yaml', \n",

|

| 64 |

+

" 'epochs': 35,\n",

|

| 65 |

+

" 'batch': 2 if device != 'cpu' else 4, # Adjust batch \n",

|

| 66 |

+

" 'imgsz': 640,\n",

|

| 67 |

+

" 'patience': 5,\n",

|

| 68 |

+

" 'device': device, \n",

|

| 69 |

+

"}\n",

|

| 70 |

+

"os.environ[\"PYTORCH_CUDA_ALLOC_CONF\"] = \"expandable_segments:True\"\n"

|

| 71 |

+

]

|

| 72 |

+

},

|

| 73 |

+

{

|

| 74 |

+

"cell_type": "code",

|

| 75 |

+

"execution_count": null,

|

| 76 |

+

"id": "d349b982",

|

| 77 |

+

"metadata": {},

|

| 78 |

+

"outputs": [],

|

| 79 |

+

"source": [

|

| 80 |

+

"\n",

|

| 81 |

+

"save_dir = Path('runs/detect')\n",

|

| 82 |

+

"save_dir.mkdir(parents=True, exist_ok=True)\n",

|

| 83 |

+

"\n",

|

| 84 |

+

"this_path = os.getcwd()\n",

|

| 85 |

+

"\n",

|

| 86 |

+

"os.environ['ULTRALYTICS_CONFIG_DIR'] = this_path\n",

|

| 87 |

+

"\n",

|

| 88 |

+

"data_file = f\"{this_path}/{CONFIG['data']}\"\n",

|

| 89 |

+

"with open(data_file, 'r') as f:\n",

|

| 90 |

+

" data = yaml.safe_load(f)\n",

|

| 91 |

+

" \n",

|

| 92 |

+

"\n",

|

| 93 |

+

"data['train'] = f\"{this_path}/{CONFIG['data'].rsplit('/', 1)[0]}/train/images\"\n",

|

| 94 |

+

"data['val'] = f\"{this_path}/{CONFIG['data'].rsplit('/', 1)[0]}/valid/images\"\n",

|

| 95 |

+

"data['test'] = f\"{this_path}/{CONFIG['data'].rsplit('/', 1)[0]}/test/images\"\n",

|

| 96 |

+

"\n",

|

| 97 |

+

"with open(data_file, 'w') as f:\n",

|

| 98 |

+

" yaml.safe_dump(data, f)\n"

|

| 99 |

+

]

|

| 100 |

+

},

|

| 101 |

+

{

|

| 102 |

+

"cell_type": "code",

|

| 103 |

+

"execution_count": null,

|

| 104 |

+

"id": "4f831042",

|

| 105 |

+

"metadata": {},

|

| 106 |

+

"outputs": [],

|

| 107 |

+

"source": [

|

| 108 |

+

"\n",

|

| 109 |

+

"model = YOLO(CONFIG['model'])"

|

| 110 |

+

]

|

| 111 |

+

},

|

| 112 |

+

{

|

| 113 |

+

"cell_type": "code",

|

| 114 |

+

"execution_count": null,

|

| 115 |

+

"id": "20208cb5",

|

| 116 |

+

"metadata": {},

|

| 117 |

+

"outputs": [],

|

| 118 |

+

"source": [

|

| 119 |

+

"\n",

|

| 120 |

+

"results = model.train(\n",

|

| 121 |

+

" data=CONFIG['data'],\n",

|

| 122 |

+

" epochs=CONFIG['epochs'],\n",

|

| 123 |

+

" batch=CONFIG['batch'],\n",

|

| 124 |

+

" imgsz=CONFIG['imgsz'],\n",

|

| 125 |

+

" patience=CONFIG['patience'],\n",

|

| 126 |

+

" device=CONFIG['device'],\n",

|

| 127 |

+

" \n",

|

| 128 |

+

" verbose=True,\n",

|

| 129 |

+

" \n",

|

| 130 |

+

" optimizer='SGD',\n",

|

| 131 |

+

" lr0=0.001,\n",

|

| 132 |

+

" lrf=0.01,\n",

|

| 133 |

+

" momentum=0.9,\n",

|

| 134 |

+

" weight_decay=0.0005,\n",

|

| 135 |

+

" warmup_epochs=3,\n",

|

| 136 |

+

" warmup_bias_lr=0.01,\n",

|

| 137 |

+

" warmup_momentum=0.8,\n",

|

| 138 |

+

" amp=False,\n",

|

| 139 |

+

" \n",

|

| 140 |

+

" # Augmentations\n",

|

| 141 |

+

" augment=True,\n",

|

| 142 |

+

" hsv_h=0.015, # Image HSV-Hue augmentation\n",

|

| 143 |

+

" hsv_s=0.7, # Image HSV-Saturation augmentation\n",

|

| 144 |

+

" hsv_v=0.4, # Image HSV-Value augmentation\n",

|

| 145 |

+

" degrees=10, # Image rotation (+/- deg)\n",

|

| 146 |

+

" translate=0.1, # Image translation (+/- fraction)\n",

|

| 147 |

+

" scale=0.3, # Image scale (+/- gain)\n",

|

| 148 |

+

" shear=0.0, # Image shear (+/- deg)\n",

|

| 149 |

+

" perspective=0.0, # Image perspective\n",

|

| 150 |

+

" flipud=0.1, # Image flip up-down\n",

|

| 151 |

+

" fliplr=0.1, # Image flip left-right\n",

|

| 152 |

+

" mosaic=1.0, # Image mosaic\n",

|

| 153 |

+

" mixup=0.0, # Image mixup\n",

|

| 154 |

+

" \n",

|

| 155 |

+

")\n"

|

| 156 |

+

]

|

| 157 |

+

},

|

| 158 |

+

{

|

| 159 |

+

"cell_type": "code",

|

| 160 |

+

"execution_count": null,

|

| 161 |

+

"id": "06211243",

|

| 162 |

+

"metadata": {},

|

| 163 |

+

"outputs": [],

|

| 164 |

+

"source": [

|

| 165 |

+

"\n",

|

| 166 |

+

"file_path = f\"{str(results.save_dir)}\" \n",

|

| 167 |

+

"results_csv_path = f\"{file_path}/results.csv\" "

|

| 168 |

+

]

|

| 169 |

+

},

|

| 170 |

+

{

|

| 171 |

+

"cell_type": "code",

|

| 172 |

+

"execution_count": null,

|

| 173 |

+

"id": "e67532ea",

|

| 174 |

+

"metadata": {},

|

| 175 |

+

"outputs": [],

|

| 176 |

+

"source": [

|

| 177 |

+

"\n",

|

| 178 |

+

"try:\n",

|

| 179 |

+

" result_metrics = pd.read_csv(results_csv_path)\n",

|

| 180 |

+

"except FileNotFoundError:\n",

|

| 181 |

+

" print(f\"File not found: {results_csv_path}\")\n",

|

| 182 |

+

" raise\n",

|

| 183 |

+

"\n",

|

| 184 |

+

"\n",

|

| 185 |

+

"metrics = {\n",

|

| 186 |

+

" \"Train Box Loss\": \"train/box_loss\",\n",

|

| 187 |

+

" \"Train Class Loss\": \"train/cls_loss\",\n",

|

| 188 |

+

" \"Train DFL Loss\": \"train/dfl_loss\",\n",

|

| 189 |

+

" \"Validation Box Loss\": \"val/box_loss\",\n",

|

| 190 |

+

" \"Validation Class Loss\": \"val/cls_loss\",\n",

|

| 191 |

+

" \"Validation DFL Loss\": \"val/dfl_loss\",\n",

|

| 192 |

+

" \"Precision (B)\": \"metrics/precision(B)\",\n",

|

| 193 |

+

" \"Recall (B)\": \"metrics/recall(B)\",\n",

|

| 194 |

+

" \"mAP@0.5 (B)\": \"metrics/mAP50(B)\",\n",

|

| 195 |

+

" \"mAP@0.5:0.95 (B)\": \"metrics/mAP50-95(B)\",\n",

|

| 196 |

+

"}\n",

|

| 197 |

+

"\n",

|

| 198 |

+

"%matplotlib inline\n",

|

| 199 |

+

"\n",

|

| 200 |

+

"available_metrics = {name: col for name, col in metrics.items() if col in result_metrics.columns}\n",

|

| 201 |

+

"missing_metrics = [name for name in metrics if name not in available_metrics]\n",

|

| 202 |

+

"\n",

|

| 203 |

+

"if missing_metrics:\n",

|

| 204 |

+

" print(f\"Missing metrics: {', '.join(missing_metrics)}\")\n",

|

| 205 |

+

"else:\n",

|

| 206 |

+

" print(\"All expected metrics are present.\")\n",

|

| 207 |

+

"\n",

|

| 208 |

+

"for metric_name, col in available_metrics.items():\n",

|

| 209 |

+

" plt.figure()\n",

|

| 210 |

+

" plt.plot(result_metrics[\"epoch\"], result_metrics[col], label=metric_name)\n",

|

| 211 |

+

" plt.title(metric_name)\n",

|

| 212 |

+

" plt.xlabel(\"Epoch\")\n",

|

| 213 |

+

" plt.ylabel(metric_name)\n",

|

| 214 |

+

" plt.legend()\n",

|

| 215 |

+

" plt.grid()\n",

|

| 216 |

+

" plt.show()\n",

|

| 217 |

+

"\n",

|

| 218 |

+

"final_epoch = result_metrics.iloc[-1]\n",

|

| 219 |

+

"final_metrics = {name: final_epoch[col] for name, col in available_metrics.items()}\n",

|

| 220 |

+

"\n",

|

| 221 |

+

"print(\"\\nFinal Metrics Summary (Last Epoch):\")\n",

|

| 222 |

+

"for name, value in final_metrics.items():\n",

|

| 223 |

+

" print(f\"{name}: {value:.4f}\")\n",

|

| 224 |

+

"\n",

|

| 225 |

+

"print(\"\\nImprovement Trends:\")\n",

|

| 226 |

+

"for metric_name, col in available_metrics.items():\n",

|

| 227 |

+

" initial = result_metrics[col].iloc[0]\n",

|

| 228 |

+

" final = result_metrics[col].iloc[-1]\n",

|

| 229 |

+

" trend = \"improved\" if (final < initial if \"loss\" in col else final > initial) else \"worsened\"\n",

|

| 230 |

+

" print(f\"{metric_name}: {trend} (Initial: {initial:.4f}, Final: {final:.4f})\")\n"

|

| 231 |

+

]

|

| 232 |

+

},

|

| 233 |

+

{

|

| 234 |

+

"cell_type": "code",

|

| 235 |

+

"execution_count": null,

|

| 236 |

+

"id": "cd2fb43f",

|

| 237 |

+

"metadata": {},

|

| 238 |

+

"outputs": [],

|

| 239 |

+

"source": [

|

| 240 |

+

"\n",

|

| 241 |

+

"\n",

|

| 242 |

+

"img = mpimg.imread(f\"{file_path}/confusion_matrix_normalized.png\") \n",

|

| 243 |

+

"plt.imshow(img)\n",

|

| 244 |

+

"plt.axis('off') \n",

|

| 245 |

+

"plt.show()\n",

|

| 246 |

+

"\n",

|

| 247 |

+

"img = mpimg.imread(f\"{file_path}/F1_curve.png\") \n",

|

| 248 |

+

"plt.imshow(img)\n",

|

| 249 |

+

"plt.axis('off') \n",

|

| 250 |

+

"plt.show()\n",

|

| 251 |

+

"\n",

|

| 252 |

+

"img = mpimg.imread(f\"{file_path}/P_curve.png\") \n",

|

| 253 |

+

"plt.imshow(img)\n",

|

| 254 |

+

"plt.axis('off') \n",

|

| 255 |

+

"plt.show()\n",

|

| 256 |

+

"\n",

|

| 257 |

+

"img = mpimg.imread(f\"{file_path}/R_curve.png\") \n",

|

| 258 |

+

"plt.imshow(img)\n",

|

| 259 |

+

"plt.axis('off') \n",

|

| 260 |

+

"plt.show()\n",

|

| 261 |

+

"\n",

|

| 262 |

+

"img = mpimg.imread(f\"{file_path}/PR_curve.png\") \n",

|

| 263 |

+

"plt.imshow(img)\n",

|

| 264 |

+

"plt.axis('off') \n",

|

| 265 |

+

"plt.show()\n",

|

| 266 |

+

"\n",

|

| 267 |

+

"img = mpimg.imread(f\"{file_path}/results.png\") \n",

|

| 268 |

+

"plt.imshow(img)\n",

|

| 269 |

+

"plt.axis('off') \n",

|

| 270 |

+

"plt.show()\n",

|

| 271 |

+

"\n"

|

| 272 |

+

]

|

| 273 |

+

}

|

| 274 |

+

],

|

| 275 |

+

"metadata": {

|

| 276 |

+

"kernelspec": {

|

| 277 |

+

"display_name": "Python 3",

|

| 278 |

+

"language": "python",

|

| 279 |

+

"name": "python3"

|

| 280 |

+

},

|

| 281 |

+

"language_info": {

|

| 282 |

+

"codemirror_mode": {

|

| 283 |

+

"name": "ipython",

|

| 284 |

+

"version": 3

|

| 285 |

+

},

|

| 286 |

+

"file_extension": ".py",

|

| 287 |

+

"mimetype": "text/x-python",

|

| 288 |

+

"name": "python",

|

| 289 |

+

"nbconvert_exporter": "python",

|

| 290 |

+

"pygments_lexer": "ipython3",

|

| 291 |

+

"version": "3.12.7"

|

| 292 |

+

}

|

| 293 |

+

},

|

| 294 |

+

"nbformat": 4,

|

| 295 |

+

"nbformat_minor": 5

|

| 296 |

+

}

|
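The last analysis cell in the notebook compares each metric's first-epoch and last-epoch values. Loss columns get better as they fall, while precision, recall and mAP get better as they rise, so the comparison depends on the column. Below is a minimal sketch of a direction-aware comparison. It assumes the `results.csv` column names used in the notebook; `metric_trend` is a hypothetical helper that is not part of the commit, and the sample values are illustrative, not results from this run.

```python
def metric_trend(column: str, initial: float, final: float) -> str:
    """Classify a metric change, treating '*_loss' columns as lower-is-better.

    `column` is a results.csv column name such as 'train/box_loss' or
    'metrics/mAP50(B)' (names taken from the notebook; the helper itself
    is an assumption made for illustration).
    """
    if final == initial:
        return "unchanged"
    lower_is_better = column.endswith("_loss")
    got_better = final < initial if lower_is_better else final > initial
    return "improved" if got_better else "worsened"


# Illustrative values only.
print(metric_trend("train/box_loss", 1.20, 0.80))    # loss went down
print(metric_trend("metrics/mAP50(B)", 0.40, 0.75))  # mAP went up
```

In the notebook this could be called inside the trend loop with `col`, `initial` and `final` in place of the hard-coded arguments.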

confusion_matrix_normalized.png

CHANGED

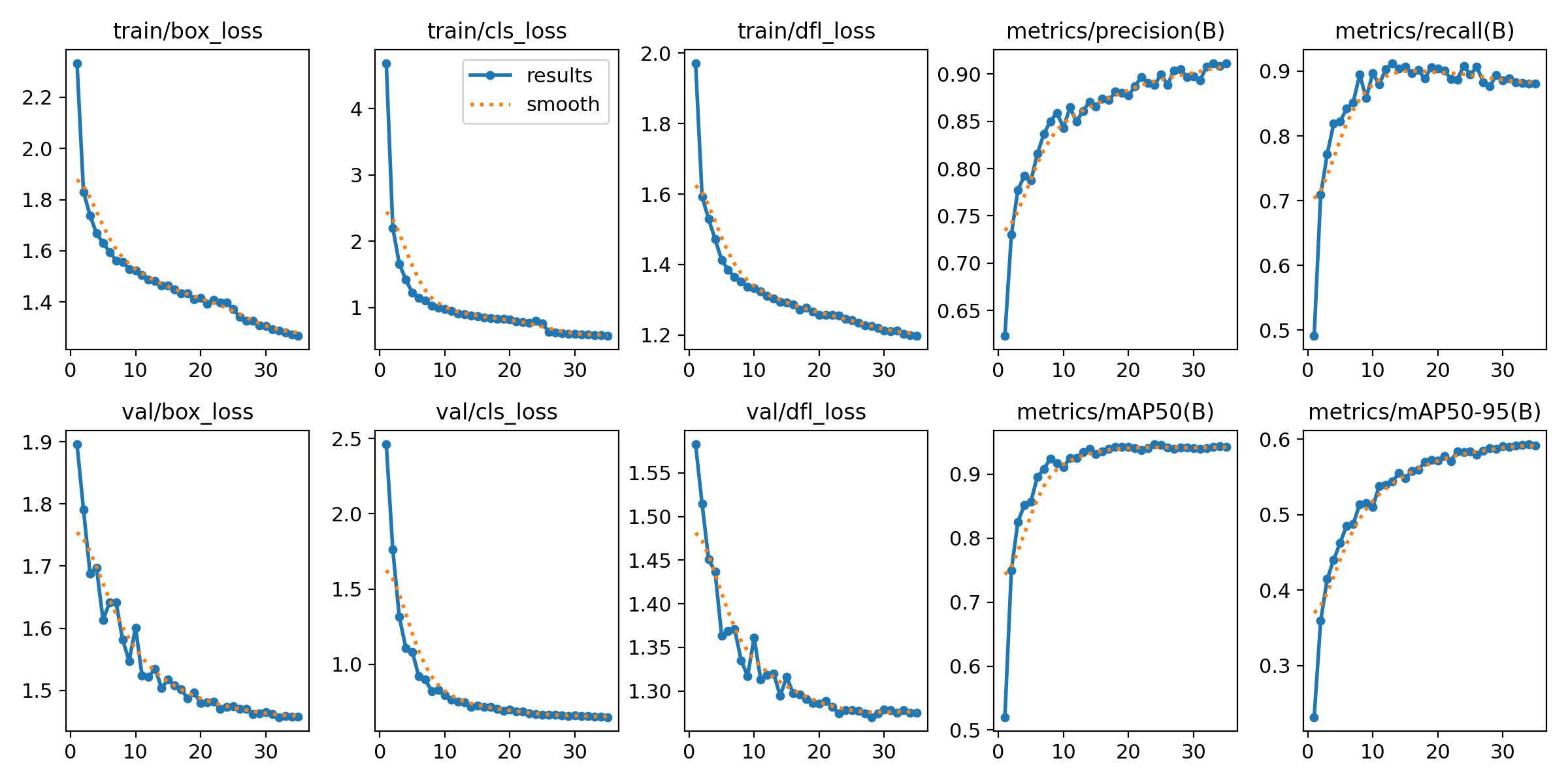

results.png

CHANGED

train.ipynb

CHANGED

The diff for this file is too large to render.
