---
language:
- en
datasets:
- common_voice
tags:
- speech
license: apache-2.0
---

# UniSpeech-Large

[Microsoft's UniSpeech](https://www.microsoft.com/en-us/research/publication/unispeech-unified-speech-representation-learning-with-labeled-and-unlabeled-data/)

This is the large model, pretrained on 16 kHz sampled speech audio and phonetic labels. When using the model, make sure that your speech input is also sampled at 16 kHz and that your text is converted into a sequence of phonemes. Note that this model should be fine-tuned on a downstream task, such as Automatic Speech Recognition, before use. Check out [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for a more in-depth explanation of how to fine-tune the model.

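Audio recorded at another rate (for example 44.1 kHz or 48 kHz) therefore has to be resampled before it is fed to the model. The following is a minimal, dependency-free sketch of that step using linear interpolation. The function name `resample_linear` is hypothetical. The sketch applies no anti-aliasing filter, so for real recordings a proper resampler such as `torchaudio.functional.resample` or `librosa.resample` is the safer choice.

```python
from typing import List, Sequence

TARGET_RATE = 16_000  # UniSpeech expects 16 kHz input


def resample_linear(
    samples: Sequence[float], orig_rate: int, target_rate: int = TARGET_RATE
) -> List[float]:
    """Resample a mono signal by linear interpolation (illustrative only, no anti-aliasing)."""
    if orig_rate <= 0 or target_rate <= 0:
        raise ValueError("sample rates must be positive")
    if orig_rate == target_rate or len(samples) == 0:
        return list(samples)

    n_out = int(round(len(samples) * target_rate / orig_rate))
    step = orig_rate / target_rate  # input samples advanced per output sample
    last = len(samples) - 1
    out: List[float] = []
    for i in range(n_out):
        pos = i * step
        left = min(int(pos), last)   # clamp so we never index past the end
        right = min(left + 1, last)
        frac = pos - left
        # Weighted average of the two neighbouring input samples.
        out.append(samples[left] * (1.0 - frac) + samples[right] * frac)
    return out
```

For example, `resample_linear([0.0] * 48_000, orig_rate=48_000)` returns 16,000 samples, which is one second of 16 kHz audio.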

[Paper: UniSpeech: Unified Speech Representation Learning with Labeled and Unlabeled Data](https://arxiv.org/abs/2101.07597)

|

| 19 |

+

|

| 20 |

+

Authors: Chengyi Wang, Yu Wu, Yao Qian, Kenichi Kumatani, Shujie Liu, Furu Wei, Michael Zeng, Xuedong Huang

|

| 21 |

+

|

| 22 |

+

**Abstract**

|

| 23 |

+

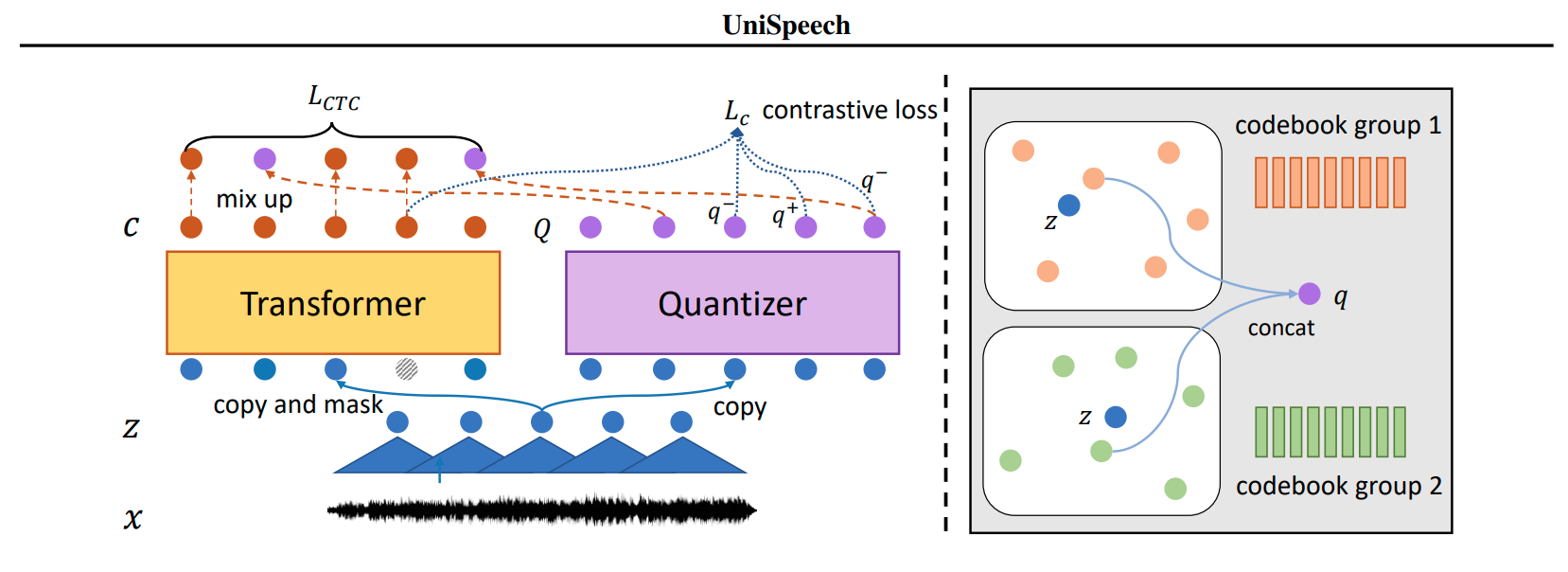

*In this paper, we propose a unified pre-training approach called UniSpeech to learn speech representations with both unlabeled and labeled data, in which supervised phonetic CTC learning and phonetically-aware contrastive self-supervised learning are conducted in a multi-task learning manner. The resultant representations can capture information more correlated with phonetic structures and improve the generalization across languages and domains. We evaluate the effectiveness of UniSpeech for cross-lingual representation learning on public CommonVoice corpus. The results show that UniSpeech outperforms self-supervised pretraining and supervised transfer learning for speech recognition by a maximum of 13.4% and 17.8% relative phone error rate reductions respectively (averaged over all testing languages). The transferability of UniSpeech is also demonstrated on a domain-shift speech recognition task, i.e., a relative word error rate reduction of 6% against the previous approach.*

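The abstract reports its gains as *relative* phone error rate (PER) reductions. The sketch below makes those two quantities concrete:

- **PER** is the Levenshtein edit distance between the reference and hypothesis phoneme sequences, divided by the reference length.
- **Relative reduction** is `(baseline - new) / baseline`.

The function names and example values are illustrative only. They are not results or code from the paper.

```python
from typing import Sequence


def edit_distance(ref: Sequence[str], hyp: Sequence[str]) -> int:
    """Minimum number of substitutions, insertions and deletions turning ref into hyp."""
    prev = list(range(len(hyp) + 1))  # distances from an empty ref prefix
    for i, r in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, h in enumerate(hyp, start=1):
            curr[j] = min(
                prev[j] + 1,                 # deletion of r
                curr[j - 1] + 1,             # insertion of h
                prev[j - 1] + int(r != h),   # substitution (free if equal)
            )
        prev = curr
    return prev[-1]


def phone_error_rate(ref: Sequence[str], hyp: Sequence[str]) -> float:
    """Edit distance normalised by the number of reference phonemes."""
    if not ref:
        raise ValueError("reference must be non-empty")
    return edit_distance(ref, hyp) / len(ref)


def relative_reduction(baseline: float, new: float) -> float:
    """Fractional improvement of `new` over `baseline` (0.2 means 20% relative)."""
    return (baseline - new) / baseline
```

For instance, the reference `HH AH L OW` against the hypothesis `HH EH L` needs one substitution and one deletion. That gives a PER of 2 / 4 = 0.5. Dropping a hypothetical PER from 10.0 to 8.0 is a 20% relative reduction.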

The original model can be found at https://github.com/microsoft/UniSpeech/tree/main/UniSpeech.

# Usage

This is an English pre-trained speech model that has to be fine-tuned on a downstream task, such as speech recognition or audio classification, before it can be used for inference. Because the model was pre-trained on English only, it can be expected to perform well only on English speech.

**Note**: The model was pre-trained on phonemes rather than characters, so make sure that the input text is converted to a sequence of phonemes before fine-tuning.

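Fine-tuning targets must be phoneme sequences, which involves two steps:

1. Each transcript passes through a grapheme-to-phoneme (G2P) step.
2. A CTC tokenizer needs a vocabulary over the resulting phonemes.

The sketch below uses a tiny, hypothetical ARPAbet-style lexicon (`LEXICON`) purely for illustration. A real setup would use a full pronunciation dictionary or a G2P tool such as phonemizer. The vocabulary layout mirrors the character vocabulary built in the blog post linked above: a `|` word delimiter plus `[UNK]` and `[PAD]` tokens. Applying that layout to phonemes is an assumption.

```python
from typing import Dict, List

# Hypothetical toy lexicon (ARPAbet-style, stress markers dropped); not a real resource.
LEXICON: Dict[str, List[str]] = {
    "hello": ["HH", "AH", "L", "OW"],
    "world": ["W", "ER", "L", "D"],
    "speech": ["S", "P", "IY", "CH"],
}

WORD_DELIMITER = "|"


def text_to_phonemes(text: str, lexicon: Dict[str, List[str]] = LEXICON) -> List[str]:
    """Map each word to its phonemes, separating words with WORD_DELIMITER."""
    phonemes: List[str] = []
    for k, word in enumerate(text.lower().split()):
        if k > 0:
            phonemes.append(WORD_DELIMITER)
        # Words missing from the lexicon become a single [UNK] token.
        phonemes.extend(lexicon.get(word, ["[UNK]"]))
    return phonemes


def build_vocab(transcripts: List[str]) -> Dict[str, int]:
    """Assign an integer id to every phoneme seen, then append the special tokens."""
    symbols = sorted({p for t in transcripts for p in text_to_phonemes(t)} - {"[UNK]"})
    vocab = {sym: idx for idx, sym in enumerate(symbols)}
    vocab["[UNK]"] = len(vocab)
    vocab["[PAD]"] = len(vocab)  # the blog's CTC setup reuses the pad token as the CTC blank
    return vocab
```

The resulting dictionary can be saved as JSON and passed to a CTC tokenizer such as `Wav2Vec2CTCTokenizer`, as the blog post does for characters.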

## Speech Recognition

To fine-tune the model for speech recognition, see [the official speech recognition example](https://github.com/huggingface/transformers/tree/master/examples/pytorch/speech-recognition).

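Once fine-tuned with a CTC head, the model emits one phoneme prediction per audio frame. The sketch below shows standard greedy (best-path) CTC decoding in three steps:

1. Take the most likely id per frame.
2. Merge consecutive repeats.
3. Drop the blank id.

The per-frame scores are assumed to be plain Python lists, and `blank_id=0` in the example is an arbitrary choice. In a transformers-style CTC setup, the blank is typically the pad token id.

```python
from typing import Dict, List, Optional, Sequence


def greedy_ctc_decode(frame_scores: Sequence[Sequence[float]], blank_id: int) -> List[int]:
    """Best-path CTC decoding: argmax per frame, collapse repeats, then remove blanks."""
    best = [max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores]
    decoded: List[int] = []
    prev: Optional[int] = None
    for idx in best:
        # A repeat is only merged if nothing (not even a blank) separates it
        # from the previous occurrence; blanks themselves are never emitted.
        if idx != prev and idx != blank_id:
            decoded.append(idx)
        prev = idx
    return decoded


def ids_to_phonemes(ids: Sequence[int], id_to_token: Dict[int, str]) -> List[str]:
    """Look up the phoneme symbol for each decoded id."""
    return [id_to_token[i] for i in ids]
```

Repeats are collapsed before blanks are removed, so a blank between two identical ids preserves a genuinely doubled phoneme. In practice, the tokenizer's decoding (for example via `Wav2Vec2Processor.batch_decode`) is expected to handle this step for you.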

## Speech Classification

To fine-tune the model for speech classification, see [the official audio classification example](https://github.com/huggingface/transformers/tree/master/examples/pytorch/audio-classification).

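For audio classification, the frame-level representations must first be reduced to a single utterance-level vector that a classifier can score. A common approach is to average the non-padding frames and apply a linear layer. To our understanding, this is roughly what the transformers sequence-classification head for this model family does, apart from an extra projection layer it applies first. The pure-Python sketch below only illustrates the idea. The hidden states, mask, weights and function names are all hypothetical stand-ins for real tensors.

```python
from typing import List, Sequence


def masked_mean_pool(frames: Sequence[Sequence[float]], mask: Sequence[int]) -> List[float]:
    """Average the feature vectors of frames whose mask value is 1 (i.e. not padding)."""
    kept = [f for f, m in zip(frames, mask) if m]
    if not kept:
        raise ValueError("mask selects no frames")
    dim = len(kept[0])
    return [sum(f[d] for f in kept) / len(kept) for d in range(dim)]


def linear(x: Sequence[float], weights: Sequence[Sequence[float]], bias: Sequence[float]) -> List[float]:
    """One logit per class; weights has shape [num_classes][hidden_dim]."""
    return [sum(w_i * x_i for w_i, x_i in zip(row, x)) + b for row, b in zip(weights, bias)]


def predict_class(
    frames: Sequence[Sequence[float]],
    mask: Sequence[int],
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> int:
    """Pool the utterance, score every class, and return the index of the best one."""
    logits = linear(masked_mean_pool(frames, mask), weights, bias)
    return max(range(len(logits)), key=logits.__getitem__)
```

Masking matters here: padded frames added to batch utterances of different lengths would otherwise skew the average.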

# License

The official license can be found in the [UniSpeech repository's LICENSE file](https://github.com/microsoft/UniSpeech/blob/main/LICENSE).