End of training

- README.md +5 -5

- all_results.json +19 -0

- egy_training_log.txt +2 -0

- eval_results.json +13 -0

- train_results.json +9 -0

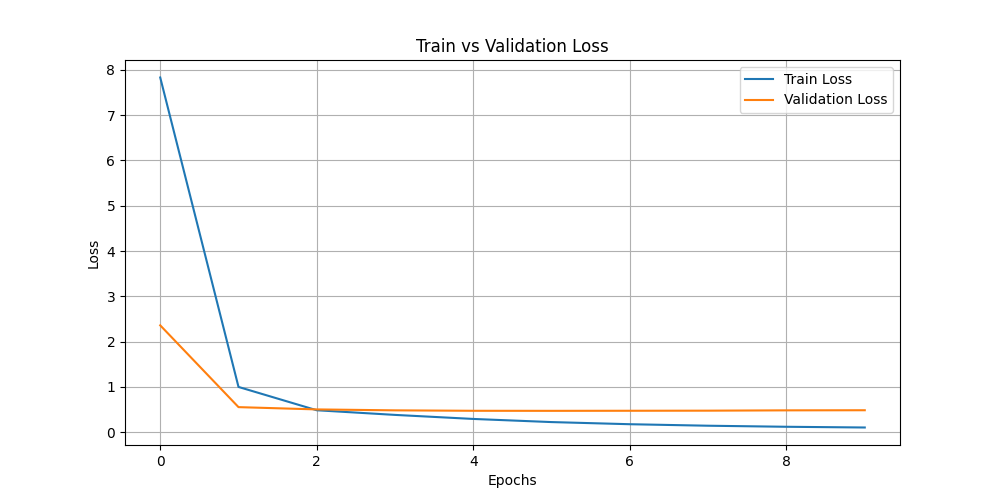

- train_vs_val_loss.png +0 -0

- trainer_state.json +260 -0

README.md

CHANGED

@@ -18,11 +18,11 @@ should probably proofread and complete it, then remove this comment. -->

This model is a fine-tuned version of [riotu-lab/ArabianGPT-01B](https://huggingface.co/riotu-lab/ArabianGPT-01B) on an unknown dataset.
It achieves the following results on the evaluation set:
-- Loss: 0.
-- Bleu: 0.
-- Rouge1: 0.
-- Rouge2: 0.
-- Rougel: 0.
+- Loss: 0.4720
+- Bleu: 0.2297
+- Rouge1: 0.5777
+- Rouge2: 0.3341
+- Rougel: 0.5758

## Model description

all_results.json

ADDED

@@ -0,0 +1,19 @@

{
    "epoch": 11.0,
    "eval_bleu": 0.22970619705356748,
    "eval_loss": 0.47202983498573303,
    "eval_rouge1": 0.5777164933812552,
    "eval_rouge2": 0.33405816844574837,
    "eval_rougeL": 0.5758449342460217,
    "eval_runtime": 1.2669,
    "eval_samples": 304,
    "eval_samples_per_second": 239.949,
    "eval_steps_per_second": 29.994,
    "perplexity": 1.603245215811176,
    "total_flos": 876634767360000.0,
    "train_loss": 0.9867972013005457,
    "train_runtime": 2886.3238,
    "train_samples": 1220,
    "train_samples_per_second": 8.454,
    "train_steps_per_second": 1.06
}
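As a quick consistency check, the `perplexity` value in `all_results.json` equals the exponential of `eval_loss`, which is how the Transformers `run_clm.py` example script derives it (that this run's training script follows the same convention is an assumption). A minimal Python sketch using only values copied from the file:

```python
import json
import math

# Values copied verbatim from all_results.json in this commit.
all_results = json.loads("""
{
    "eval_loss": 0.47202983498573303,
    "perplexity": 1.603245215811176
}
""")

# Perplexity of a causal LM is exp(mean cross-entropy loss), loss in nats.
recomputed = math.exp(all_results["eval_loss"])
print(f"exp(eval_loss) = {recomputed:.6f}, reported perplexity = {all_results['perplexity']:.6f}")
```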

egy_training_log.txt

CHANGED

@@ -170,3 +170,5 @@ INFO:root:Epoch 11.0: Train Loss = 0.1042, Eval Loss = 0.4852388799190521

INFO:absl:Using default tokenizer.

WARNING:huggingface_hub.utils._http:'(MaxRetryError("HTTPSConnectionPool(host='hf-hub-lfs-us-east-1.s3-accelerate.amazonaws.com', port=443): Max retries exceeded with url: /repos/4e/c5/4ec5c12bd4b31fb218515ee480d86e26419d948a9a596a06a6ad09ce77d37e3a/7e7cfbb2940a8097a9e6e8e5bc5cd9f274cf2ba28fd92151cbe4dd0e72ae5712?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Content-Sha256=UNSIGNED-PAYLOAD&X-Amz-Credential=AKIA2JU7TKAQLC2QXPN7%2F20240831%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240831T191048Z&X-Amz-Expires=86400&X-Amz-Signature=d59b69eb9175411687ff7810cd33c6c85c44bf981f49b4f8ae534408ec3562e2&X-Amz-SignedHeaders=host&partNumber=2&uploadId=xNqFvN721XV0SFXx42EP0sJDJW.W2QqIOgUvk5YQWI9SYvlnvF8kCxC_2mjzHt0k5t51hFB.oftAU6tGWfNhGNpUPE9HMn_UnOlRe.JGXG5J64HYNr3rofly6kQEo7ZO&x-id=UploadPart (Caused by SSLError(SSLEOFError(8, 'EOF occurred in violation of protocol (_ssl.c:2406)')))"), '(Request ID: 784209eb-439a-4fa9-b9f8-072965b6bd5d)')' thrown while requesting PUT https://hf-hub-lfs-us-east-1.s3-accelerate.amazonaws.com/repos/4e/c5/4ec5c12bd4b31fb218515ee480d86e26419d948a9a596a06a6ad09ce77d37e3a/7e7cfbb2940a8097a9e6e8e5bc5cd9f274cf2ba28fd92151cbe4dd0e72ae5712?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Content-Sha256=UNSIGNED-PAYLOAD&X-Amz-Credential=AKIA2JU7TKAQLC2QXPN7%2F20240831%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20240831T191048Z&X-Amz-Expires=86400&X-Amz-Signature=d59b69eb9175411687ff7810cd33c6c85c44bf981f49b4f8ae534408ec3562e2&X-Amz-SignedHeaders=host&partNumber=2&uploadId=xNqFvN721XV0SFXx42EP0sJDJW.W2QqIOgUvk5YQWI9SYvlnvF8kCxC_2mjzHt0k5t51hFB.oftAU6tGWfNhGNpUPE9HMn_UnOlRe.JGXG5J64HYNr3rofly6kQEo7ZO&x-id=UploadPart

WARNING:huggingface_hub.utils._http:Retrying in 1s [Retry 1/5].

+INFO:__main__:*** Evaluate ***

+INFO:absl:Using default tokenizer.

eval_results.json

ADDED

@@ -0,0 +1,13 @@

{
    "epoch": 11.0,
    "eval_bleu": 0.22970619705356748,
    "eval_loss": 0.47202983498573303,
    "eval_rouge1": 0.5777164933812552,
    "eval_rouge2": 0.33405816844574837,
    "eval_rougeL": 0.5758449342460217,
    "eval_runtime": 1.2669,
    "eval_samples": 304,
    "eval_samples_per_second": 239.949,
    "eval_steps_per_second": 29.994,
    "perplexity": 1.603245215811176
}

train_results.json

ADDED

@@ -0,0 +1,9 @@

{
    "epoch": 11.0,
    "total_flos": 876634767360000.0,
    "train_loss": 0.9867972013005457,
    "train_runtime": 2886.3238,
    "train_samples": 1220,
    "train_samples_per_second": 8.454,
    "train_steps_per_second": 1.06
}
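The throughput figures in `train_results.json` line up with the run that was *planned* (`num_train_epochs` 20 and `max_steps` 3060 in `trainer_state.json`) rather than the 11 epochs that actually ran before early stopping: 1220 × 20 / 2886.3238 ≈ 8.454 samples/s and 3060 / 2886.3238 ≈ 1.06 steps/s. The sketch below reproduces that arithmetic; it assumes a single device and no gradient accumulation, so that one optimizer step consumes one batch of `train_batch_size` 8.

```python
import math

# Values copied from train_results.json and trainer_state.json in this commit.
train_samples = 1220
train_runtime = 2886.3238   # seconds, measured wall-clock training time
train_batch_size = 8        # per-device batch size
num_train_epochs = 20       # planned epochs
max_steps = 3060            # planned optimizer steps
global_step = 1683          # steps actually run before early stopping

# Assumption: one device, no gradient accumulation -> one step per batch.
steps_per_epoch = math.ceil(train_samples / train_batch_size)

# Throughput over the *planned* run, rounded to 3 decimals like the logged values.
planned_samples_per_s = round(train_samples * num_train_epochs / train_runtime, 3)
planned_steps_per_s = round(max_steps / train_runtime, 3)

# Throughput over the epochs that were actually executed.
actual_epochs = global_step / steps_per_epoch
actual_samples_per_s = train_samples * actual_epochs / train_runtime

print(f"steps/epoch={steps_per_epoch}, planned steps={steps_per_epoch * num_train_epochs}, "
      f"epochs run={actual_epochs}")
print(f"planned: {planned_samples_per_s} samples/s, {planned_steps_per_s} steps/s")
print(f"actual: {actual_samples_per_s:.2f} samples/s")
```

By the same arithmetic the run actually processed about 4.65 samples/s (11 × 1220 samples in 2886 s), so the logged throughput overstates it by the ratio 20/11.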

train_vs_val_loss.png

ADDED


trainer_state.json

ADDED

@@ -0,0 +1,260 @@

{
  "best_metric": 0.47202983498573303,
  "best_model_checkpoint": "/home/iais_marenpielka/Bouthaina/res_nw_yem/checkpoint-918",
  "epoch": 11.0,
  "eval_steps": 500,
  "global_step": 1683,
  "is_hyper_param_search": false,
  "is_local_process_zero": true,
  "is_world_process_zero": true,
  "log_history": [
    {
      "epoch": 1.0,
      "grad_norm": 43.000205993652344,
      "learning_rate": 1.53e-05,
      "loss": 7.8314,
      "step": 153
    },
    {
      "epoch": 1.0,
      "eval_bleu": 0.010356123410675664,
      "eval_loss": 2.359743595123291,
      "eval_rouge1": 0.09828786255795381,
      "eval_rouge2": 0.0018177296310952823,
      "eval_rougeL": 0.09639405964210626,
      "eval_runtime": 1.1837,
      "eval_samples_per_second": 256.818,
      "eval_steps_per_second": 32.102,
      "step": 153
    },
    {
      "epoch": 2.0,
      "grad_norm": 1.3453600406646729,
      "learning_rate": 3.06e-05,
      "loss": 0.9995,
      "step": 306
    },
    {
      "epoch": 2.0,
      "eval_bleu": 0.11609954154539694,
      "eval_loss": 0.5537932515144348,
      "eval_rouge1": 0.47363801342590006,
      "eval_rouge2": 0.18538347478382655,
      "eval_rougeL": 0.4727595492611425,
      "eval_runtime": 9.2767,
      "eval_samples_per_second": 32.77,
      "eval_steps_per_second": 4.096,
      "step": 306
    },
    {
      "epoch": 3.0,
      "grad_norm": 1.2846729755401611,
      "learning_rate": 4.5900000000000004e-05,
      "loss": 0.4848,
      "step": 459
    },
    {
      "epoch": 3.0,
      "eval_bleu": 0.14721751205352876,
      "eval_loss": 0.5034472942352295,
      "eval_rouge1": 0.500839694175635,
      "eval_rouge2": 0.21735831386751384,
      "eval_rougeL": 0.5001625449149274,
      "eval_runtime": 10.2581,
      "eval_samples_per_second": 29.635,
      "eval_steps_per_second": 3.704,
      "step": 459
    },
    {
      "epoch": 4.0,
      "grad_norm": 1.2576143741607666,
      "learning_rate": 4.7812500000000003e-05,
      "loss": 0.3823,
      "step": 612
    },
    {
      "epoch": 4.0,
      "eval_bleu": 0.1911807573581778,
      "eval_loss": 0.4827471375465393,
      "eval_rouge1": 0.5330693017126298,
      "eval_rouge2": 0.27439720992270494,
      "eval_rougeL": 0.531424425624079,
      "eval_runtime": 5.4191,
      "eval_samples_per_second": 56.098,
      "eval_steps_per_second": 7.012,
      "step": 612
    },
    {
      "epoch": 5.0,
      "grad_norm": 1.7599295377731323,
      "learning_rate": 4.482421875e-05,
      "loss": 0.293,
      "step": 765
    },
    {
      "epoch": 5.0,
      "eval_bleu": 0.20959557277319146,
      "eval_loss": 0.4732062816619873,
      "eval_rouge1": 0.5619100572084703,
      "eval_rouge2": 0.30991934212678557,
      "eval_rougeL": 0.5587085347101918,
      "eval_runtime": 2.8763,
      "eval_samples_per_second": 105.69,
      "eval_steps_per_second": 13.211,
      "step": 765
    },
    {
      "epoch": 6.0,
      "grad_norm": 1.4758834838867188,
      "learning_rate": 4.18359375e-05,
      "loss": 0.2239,
      "step": 918
    },
    {
      "epoch": 6.0,
      "eval_bleu": 0.22970619705356748,
      "eval_loss": 0.47202983498573303,
      "eval_rouge1": 0.5777164933812552,
      "eval_rouge2": 0.33405816844574837,
      "eval_rougeL": 0.5758449342460217,
      "eval_runtime": 1.7018,
      "eval_samples_per_second": 178.629,
      "eval_steps_per_second": 22.329,
      "step": 918
    },
    {
      "epoch": 7.0,
      "grad_norm": 1.1557574272155762,
      "learning_rate": 3.884765625e-05,
      "loss": 0.1766,
      "step": 1071
    },
    {
      "epoch": 7.0,
      "eval_bleu": 0.23015149161791434,
      "eval_loss": 0.47373390197753906,
      "eval_rouge1": 0.588487328464713,
      "eval_rouge2": 0.3467647604638442,
      "eval_rougeL": 0.5872191002290259,
      "eval_runtime": 19.3271,
      "eval_samples_per_second": 15.729,
      "eval_steps_per_second": 1.966,
      "step": 1071
    },
    {
      "epoch": 8.0,
      "grad_norm": 1.236377477645874,
      "learning_rate": 3.5859375e-05,
      "loss": 0.1434,
      "step": 1224
    },
    {
      "epoch": 8.0,
      "eval_bleu": 0.24506232565608202,
      "eval_loss": 0.47583380341529846,
      "eval_rouge1": 0.5938395263674476,
      "eval_rouge2": 0.3647516376509473,
      "eval_rougeL": 0.5903133777624114,
      "eval_runtime": 12.6597,
      "eval_samples_per_second": 24.013,
      "eval_steps_per_second": 3.002,
      "step": 1224
    },
    {
      "epoch": 9.0,
      "grad_norm": 1.2275965213775635,
      "learning_rate": 3.287109375e-05,
      "loss": 0.1202,
      "step": 1377
    },
    {
      "epoch": 9.0,
      "eval_bleu": 0.2508515461433435,
      "eval_loss": 0.4827924072742462,
      "eval_rouge1": 0.6046956358537867,
      "eval_rouge2": 0.3682856780453533,
      "eval_rougeL": 0.6015063720236549,
      "eval_runtime": 30.4628,
      "eval_samples_per_second": 9.979,
      "eval_steps_per_second": 1.247,
      "step": 1377
    },
    {
      "epoch": 10.0,
      "grad_norm": 0.9903411865234375,
      "learning_rate": 2.9882812500000002e-05,
      "loss": 0.1042,
      "step": 1530
    },
    {
      "epoch": 10.0,
      "eval_bleu": 0.24386094085391116,
      "eval_loss": 0.4852388799190521,
      "eval_rouge1": 0.5980129828644593,
      "eval_rouge2": 0.3703776134768108,
      "eval_rougeL": 0.5951273362785527,
      "eval_runtime": 23.4553,
      "eval_samples_per_second": 12.961,
      "eval_steps_per_second": 1.62,
      "step": 1530
    },
    {
      "epoch": 11.0,
      "grad_norm": 1.104041337966919,
      "learning_rate": 2.689453125e-05,
      "loss": 0.0955,
      "step": 1683
    },
    {
      "epoch": 11.0,
      "eval_bleu": 0.25597221159625966,
      "eval_loss": 0.4884573221206665,
      "eval_rouge1": 0.6115922153776887,
      "eval_rouge2": 0.37952408975600893,
      "eval_rougeL": 0.6085537919616412,
      "eval_runtime": 1.297,
      "eval_samples_per_second": 234.387,
      "eval_steps_per_second": 29.298,
      "step": 1683
    },
    {
      "epoch": 11.0,
      "step": 1683,
      "total_flos": 876634767360000.0,
      "train_loss": 0.9867972013005457,
      "train_runtime": 2886.3238,
      "train_samples_per_second": 8.454,
      "train_steps_per_second": 1.06
    }
  ],
  "logging_steps": 500,
  "max_steps": 3060,
  "num_input_tokens_seen": 0,
  "num_train_epochs": 20,
  "save_steps": 500,
  "stateful_callbacks": {
    "EarlyStoppingCallback": {
      "args": {
        "early_stopping_patience": 5,
        "early_stopping_threshold": 0.0
      },
      "attributes": {
        "early_stopping_patience_counter": 0
      }
    },
    "TrainerControl": {
      "args": {
        "should_epoch_stop": false,
        "should_evaluate": false,
        "should_log": false,
        "should_save": true,
        "should_training_stop": true
      },
      "attributes": {}
    }
  },
  "total_flos": 876634767360000.0,
  "train_batch_size": 8,
  "trial_name": null,
  "trial_params": null
}
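The stopping point and the logged learning rates can both be checked against `log_history`. The sketch below replays a patience-based early-stopping rule modelled on Transformers' `EarlyStoppingCallback` (monitoring `eval_loss`, lower is better; this is an assumption, consistent with `best_metric` equalling the epoch-6 `eval_loss`), then compares the logged learning rates with a linear warmup followed by linear decay to zero at `max_steps`. The peak rate of 5e-05 and the 500 warmup steps are inferred from the logged values; they are not recorded in this commit.

```python
# Values copied from log_history in trainer_state.json (keyed by global step).
eval_loss = {
    153: 2.359743595123291, 306: 0.5537932515144348, 459: 0.5034472942352295,
    612: 0.4827471375465393, 765: 0.4732062816619873, 918: 0.47202983498573303,
    1071: 0.47373390197753906, 1224: 0.47583380341529846, 1377: 0.4827924072742462,
    1530: 0.4852388799190521, 1683: 0.4884573221206665,
}
logged_lr = {
    153: 1.53e-05, 306: 3.06e-05, 459: 4.5900000000000004e-05,
    612: 4.7812500000000003e-05, 765: 4.482421875e-05, 918: 4.18359375e-05,
    1071: 3.884765625e-05, 1224: 3.5859375e-05, 1377: 3.287109375e-05,
    1530: 2.9882812500000002e-05, 1683: 2.689453125e-05,
}

PATIENCE, THRESHOLD = 5, 0.0  # EarlyStoppingCallback args from trainer_state.json
MAX_STEPS = 3060


def replay_early_stopping(losses, patience, threshold):
    """Return (best_step, stop_step) for a lower-is-better metric."""
    best_step, best, counter = None, None, 0
    for step in sorted(losses):
        value = losses[step]
        if best is None or (value < best and abs(value - best) > threshold):
            best_step, best, counter = step, value, 0  # improvement resets patience
        else:
            counter += 1  # one more evaluation without improvement
        if counter >= patience:
            return best_step, step
    return best_step, None


# Assumption (inferred, hypothetical constants): linear warmup to PEAK_LR over
# WARMUP_STEPS, then linear decay to 0 at MAX_STEPS.
PEAK_LR, WARMUP_STEPS = 5e-05, 500


def linear_warmup_decay(step):
    if step < WARMUP_STEPS:
        return PEAK_LR * step / WARMUP_STEPS
    return PEAK_LR * max(0.0, (MAX_STEPS - step) / (MAX_STEPS - WARMUP_STEPS))


best_step, stop_step = replay_early_stopping(eval_loss, PATIENCE, THRESHOLD)
max_lr_error = max(abs(linear_warmup_decay(s) - lr) for s, lr in logged_lr.items())
print(f"best checkpoint step: {best_step}, stopped at step: {stop_step}")
print(f"max |predicted - logged| learning rate: {max_lr_error:.2e}")
```

Under these assumptions the best evaluation loss falls at step 918 (epoch 6, matching `best_model_checkpoint`), and five further evaluations without improvement end training at step 1683, epoch 11 of the planned 20. The metrics in `eval_results.json` and the README equal the epoch-6 values, which is consistent with the best checkpoint being reloaded before the final evaluation.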