---

language: en

license: mit

tags:

- vision

- image-segmentation

model_name: openmmlab/upernet-swin-base

---

# UperNet, Swin Transformer base-sized backbone

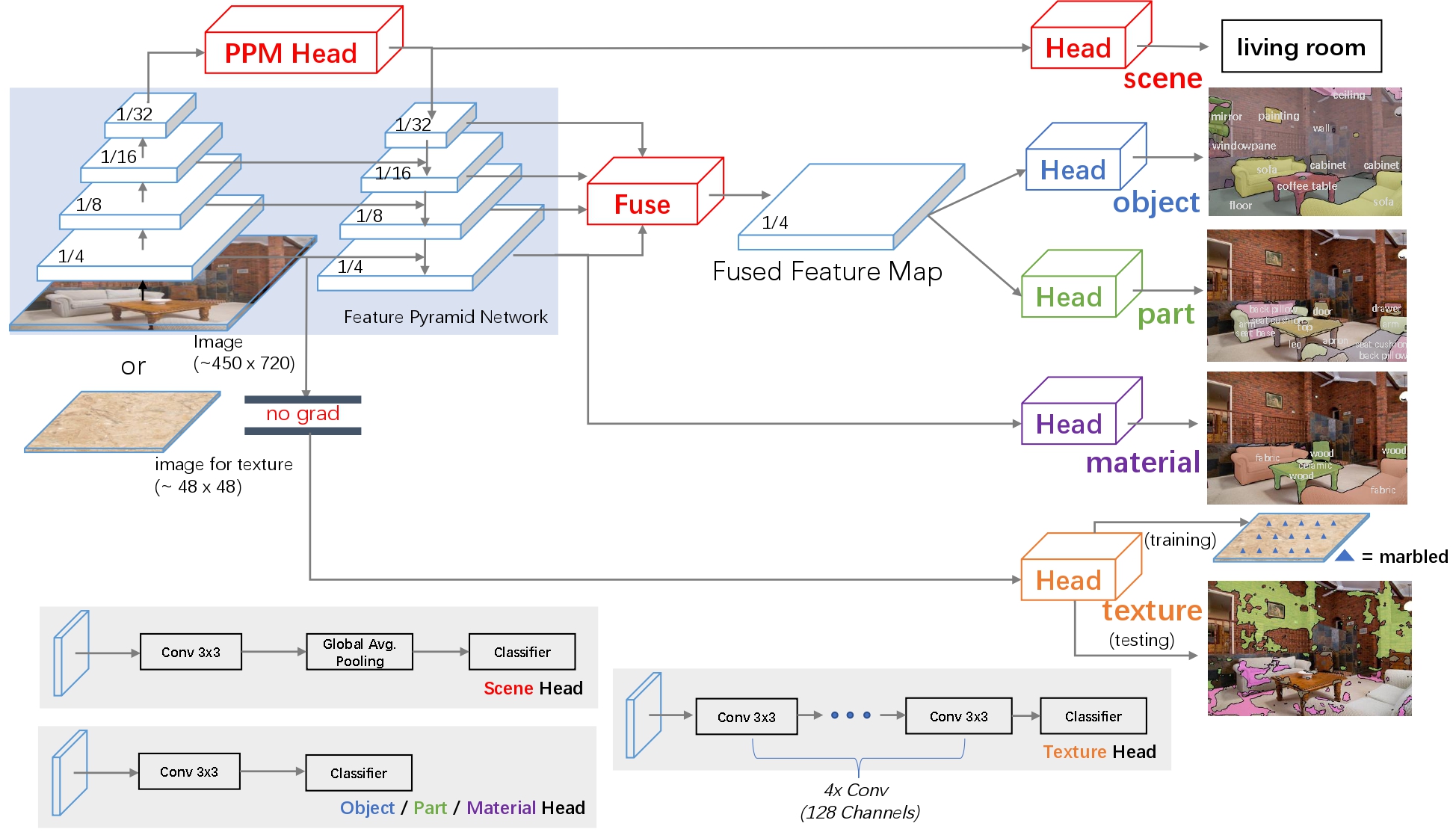

This model is the UperNet framework for semantic segmentation, using a Swin Transformer backbone. UperNet was introduced in the paper [Unified Perceptual Parsing for Scene Understanding](https://arxiv.org/abs/1807.10221) by Xiao et al.

The combination of UperNet with a Swin Transformer backbone was introduced in the paper [Swin Transformer: Hierarchical Vision Transformer using Shifted Windows](https://arxiv.org/abs/2103.14030).

Disclaimer: The team releasing UperNet + Swin Transformer did not write a model card for this model, so this model card has been written by the Hugging Face team.

## Model description

UperNet is a framework for semantic segmentation. It consists of several components, including a backbone, a Feature Pyramid Network (FPN) and a Pyramid Pooling Module (PPM).

Any visual backbone can be plugged into the UperNet framework. The framework predicts a semantic label per pixel.
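To make "a semantic label per pixel" concrete, here is a minimal sketch in plain Python. It assumes the segmentation head emits one score (logit) per class for every pixel and that the predicted label is the class with the highest score. The function name `labels_from_logits` and the toy values are hypothetical and used only for illustration; they are not part of any library.

```python
from typing import List


def labels_from_logits(logits: List[List[List[float]]]) -> List[List[int]]:
    """Turn per-class score maps into a label map.

    `logits[c][y][x]` is the (assumed) score of class `c` at pixel (y, x).
    The result `label_map[y][x]` is the index of the highest-scoring class.
    """
    num_classes = len(logits)
    height = len(logits[0])
    width = len(logits[0][0])

    label_map = []
    for y in range(height):
        row = []
        for x in range(width):
            # Argmax over the class dimension for this single pixel.
            best_class = max(range(num_classes), key=lambda c: logits[c][y][x])
            row.append(best_class)
        label_map.append(row)
    return label_map


# Toy input: 3 classes on a 2x2 image (values are made up).
toy_logits = [
    [[2.0, 0.1], [0.3, 0.0]],  # scores for class 0
    [[0.5, 1.5], [0.2, 0.1]],  # scores for class 1
    [[0.1, 0.2], [0.9, 3.0]],  # scores for class 2
]

label_map = labels_from_logits(toy_logits)
print(label_map)  # [[0, 1], [2, 2]]
```

In practice the same argmax is usually applied to a tensor of scores in one vectorized call rather than with Python loops; the loop form is used here only to show the per-pixel decision explicitly.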

## Intended uses & limitations

You can use the raw model for semantic segmentation. See the [model hub](https://huggingface.co/models?search=openmmlab/upernet) to look for fine-tuned versions (with various backbones) on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/docs/transformers/main/en/model_doc/upernet#transformers.UperNetForSemanticSegmentation).
