Merve Noyan

committed on

Commit • ad382c8

1 Parent(s): 5af142a

update

- app.py +85 -86

- example_images/art_critic.png +0 -0

- example_images/chicken_on_money.png +0 -0

- example_images/dragons_playing.png +0 -0

- example_images/dummy_pdf.png +0 -0

- example_images/example_images_ai2d_example_2.jpeg +0 -0

- example_images/example_images_meme_french.jpg +0 -0

- example_images/example_images_surfing_dog.jpg +0 -0

- example_images/example_images_tree_fortress.jpg +0 -0

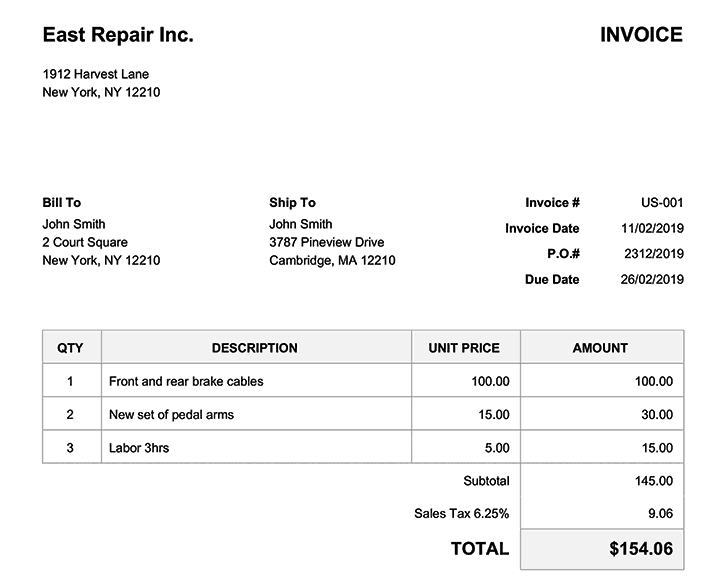

- example_images/examples_invoice.png +0 -0

- example_images/examples_wat_arun.jpg +0 -0

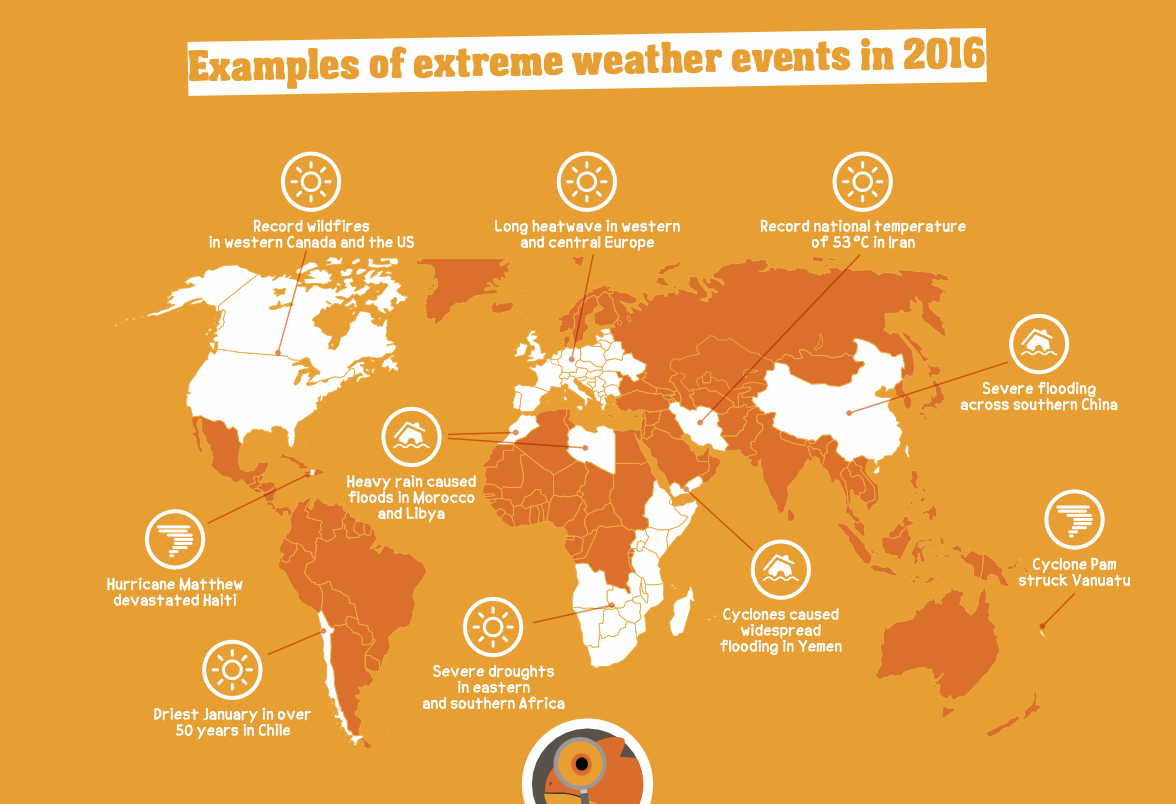

- example_images/examples_weather_events.png +0 -0

- example_images/gaulois.png +0 -3

- example_images/mmmu_example.jpeg +0 -0

- example_images/mmmu_example_2.png +0 -0

- example_images/paper_with_text.png +0 -0

- example_images/polar_bear_coke.png +0 -0

- example_images/rococo_1.jpg +0 -0

- example_images/travel_tips.jpg +0 -0

app.py

CHANGED

|

@@ -1,5 +1,5 @@

|

|

| 1 |

import gradio as gr

|

| 2 |

-

from transformers import AutoProcessor,

|

| 3 |

import re

|

| 4 |

import time

|

| 5 |

from PIL import Image

|

|

@@ -11,10 +11,10 @@ import subprocess

|

|

| 11 |

|

| 12 |

processor = AutoProcessor.from_pretrained("HuggingFaceTB/SmolVLM-Instruct")

|

| 13 |

|

| 14 |

-

model =

|

| 15 |

torch_dtype=torch.bfloat16,

|

| 16 |

#_attn_implementation="flash_attention_2"

|

| 17 |

-

|

| 18 |

|

| 19 |

@spaces.GPU

|

| 20 |

def model_inference(

|

|

@@ -74,8 +74,8 @@ def model_inference(

|

|

| 74 |

return generated_texts[0]

|

| 75 |

|

| 76 |

|

| 77 |

-

with gr.Blocks(

|

| 78 |

-

gr.Markdown("## SmolVLM")

|

| 79 |

gr.Markdown("Play with [HuggingFaceTB/SmolVLM-Instruct](https://huggingface.co/HuggingFaceTB/SmolVLM-Instruct) in this demo. To get started, upload an image and text or try one of the examples.")

|

| 80 |

with gr.Column():

|

| 81 |

image_input = gr.Image(label="Upload your Image", type="pil", scale=1)

|

|

@@ -85,88 +85,86 @@ with gr.Blocks(fill_height=True) as demo:

|

|

| 85 |

submit_btn = gr.Button("Submit")

|

| 86 |

output = gr.Textbox(label="Output")

|

| 87 |

|

| 88 |

-

with gr.Accordion(label="Example Inputs and Advanced Generation Parameters"):

|

| 89 |

examples=[

|

| 90 |

-

["example_images/

|

| 91 |

-

["example_images/

|

| 92 |

-

["example_images/

|

| 93 |

-

["example_images/

|

| 94 |

-

["example_images/

|

| 95-168 |

- (74 removed lines; content not captured in this extract)

|

| 169 |

-

)

|

| 170 |

gr.Examples(

|

| 171 |

examples = examples,

|

| 172 |

inputs=[image_input, query_input, assistant_prefix, decoding_strategy, temperature,

|

|

@@ -174,6 +172,7 @@ with gr.Blocks(fill_height=True) as demo:

|

|

| 174 |

outputs=output,

|

| 175 |

fn=model_inference

|

| 176 |

)

|

|

|

|

| 177 |

|

| 178 |

submit_btn.click(model_inference, inputs = [image_input, query_input, assistant_prefix, decoding_strategy, temperature,

|

| 179 |

max_new_tokens, repetition_penalty, top_p], outputs=output)

|

|

|

|

| 1 |

import gradio as gr

|

| 2 |

+

from transformers import AutoProcessor, AutoModelForVision2Seq

|

| 3 |

import re

|

| 4 |

import time

|

| 5 |

from PIL import Image

|

|

|

|

| 11 |

|

| 12 |

processor = AutoProcessor.from_pretrained("HuggingFaceTB/SmolVLM-Instruct")

|

| 13 |

|

| 14 |

+

model = AutoModelForVision2Seq.from_pretrained("HuggingFaceTB/SmolVLM-Instruct",

|

| 15 |

torch_dtype=torch.bfloat16,

|

| 16 |

#_attn_implementation="flash_attention_2"

|

| 17 |

+

).to("cuda")

|

| 18 |

|

| 19 |

@spaces.GPU

|

| 20 |

def model_inference(

|

|

|

|

| 74 |

return generated_texts[0]

|

| 75 |

|

| 76 |

|

| 77 |

+

with gr.Blocks() as demo:

|

| 78 |

+

gr.Markdown("## SmolVLM: Small yet Mighty 💫")

|

| 79 |

gr.Markdown("Play with [HuggingFaceTB/SmolVLM-Instruct](https://huggingface.co/HuggingFaceTB/SmolVLM-Instruct) in this demo. To get started, upload an image and text or try one of the examples.")

|

| 80 |

with gr.Column():

|

| 81 |

image_input = gr.Image(label="Upload your Image", type="pil", scale=1)

|

|

|

|

| 85 |

submit_btn = gr.Button("Submit")

|

| 86 |

output = gr.Textbox(label="Output")

|

| 87 |

|

|

|

|

| 88 |

examples=[

|

| 89 |

+

["example_images/rococo.jpg", "What art era is this?", None, "Greedy", 0.4, 512, 1.2, 0.8],

|

| 90 |

+

["example_images/examples_wat_arun.jpg", "Give me travel tips for the area around this monument.", None, "Greedy", 0.4, 512, 1.2, 0.8],

|

| 91 |

+

["example_images/examples_invoice.png", "What is the due date and the invoice date?", None, "Greedy", 0.4, 512, 1.2, 0.8],

|

| 92 |

+

["example_images/s2w_example.png", "What is this UI about?", None, "Greedy", 0.4, 512, 1.2, 0.8],

|

| 93 |

+

["example_images/examples_weather_events.png", "Where do the severe droughts happen according to this diagram?", None, "Greedy", 0.4, 512, 1.2, 0.8],

|

| 94 |

+

]

|

| 95 |

+

|

| 96 |

+

with gr.Accordion(label="Advanced Generation Parameters", open=False):

|

| 97 |

+

|

| 98 |

+

# Hyper-parameters for generation

|

| 99 |

+

max_new_tokens = gr.Slider(

|

| 100 |

+

minimum=8,

|

| 101 |

+

maximum=1024,

|

| 102 |

+

value=512,

|

| 103 |

+

step=1,

|

| 104 |

+

interactive=True,

|

| 105 |

+

label="Maximum number of new tokens to generate",

|

| 106 |

+

)

|

| 107 |

+

repetition_penalty = gr.Slider(

|

| 108 |

+

minimum=0.01,

|

| 109 |

+

maximum=5.0,

|

| 110 |

+

value=1.2,

|

| 111 |

+

step=0.01,

|

| 112 |

+

interactive=True,

|

| 113 |

+

label="Repetition penalty",

|

| 114 |

+

info="1.0 is equivalent to no penalty",

|

| 115 |

+

)

|

| 116 |

+

temperature = gr.Slider(

|

| 117 |

+

minimum=0.0,

|

| 118 |

+

maximum=5.0,

|

| 119 |

+

value=0.4,

|

| 120 |

+

step=0.1,

|

| 121 |

+

interactive=True,

|

| 122 |

+

label="Sampling temperature",

|

| 123 |

+

info="Higher values will produce more diverse outputs.",

|

| 124 |

+

)

|

| 125 |

+

top_p = gr.Slider(

|

| 126 |

+

minimum=0.01,

|

| 127 |

+

maximum=0.99,

|

| 128 |

+

value=0.8,

|

| 129 |

+

step=0.01,

|

| 130 |

+

interactive=True,

|

| 131 |

+

label="Top P",

|

| 132 |

+

info="Higher values is equivalent to sampling more low-probability tokens.",

|

| 133 |

+

)

|

| 134 |

+

decoding_strategy = gr.Radio(

|

| 135 |

+

[

|

| 136 |

+

"Greedy",

|

| 137 |

+

"Top P Sampling",

|

| 138 |

+

],

|

| 139 |

+

value="Greedy",

|

| 140 |

+

label="Decoding strategy",

|

| 141 |

+

interactive=True,

|

| 142 |

+

info="Higher values is equivalent to sampling more low-probability tokens.",

|

| 143 |

+

)

|

| 144 |

+

decoding_strategy.change(

|

| 145 |

+

fn=lambda selection: gr.Slider(

|

| 146 |

+

visible=(

|

| 147 |

+

selection in ["contrastive_sampling", "beam_sampling", "Top P Sampling", "sampling_top_k"]

|

| 148 |

+

)

|

| 149 |

+

),

|

| 150 |

+

inputs=decoding_strategy,

|

| 151 |

+

outputs=temperature,

|

| 152 |

+

)

|

| 153 |

+

|

| 154 |

+

decoding_strategy.change(

|

| 155 |

+

fn=lambda selection: gr.Slider(

|

| 156 |

+

visible=(

|

| 157 |

+

selection in ["contrastive_sampling", "beam_sampling", "Top P Sampling", "sampling_top_k"]

|

| 158 |

+

)

|

| 159 |

+

),

|

| 160 |

+

inputs=decoding_strategy,

|

| 161 |

+

outputs=repetition_penalty,

|

| 162 |

+

)

|

| 163 |

+

decoding_strategy.change(

|

| 164 |

+

fn=lambda selection: gr.Slider(visible=(selection in ["Top P Sampling"])),

|

| 165 |

+

inputs=decoding_strategy,

|

| 166 |

+

outputs=top_p,

|

| 167 |

+

)

|

|

|

|

| 168 |

gr.Examples(

|

| 169 |

examples = examples,

|

| 170 |

inputs=[image_input, query_input, assistant_prefix, decoding_strategy, temperature,

|

|

|

|

| 172 |

outputs=output,

|

| 173 |

fn=model_inference

|

| 174 |

)

|

| 175 |

+

|

| 176 |

|

| 177 |

submit_btn.click(model_inference, inputs = [image_input, query_input, assistant_prefix, decoding_strategy, temperature,

|

| 178 |

max_new_tokens, repetition_penalty, top_p], outputs=output)
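
Each row in `examples` supplies one value per component, in the order of the `inputs` list on the `submit_btn.click` call. As a rough illustration, the sketch below pairs the first example row with those component names. It assumes `gr.Examples` receives the same eight-item order (its `inputs` list is cut off in this diff), and `INPUT_NAMES`, `EXAMPLE_ROW`, and `label_example` are hypothetical names introduced here, not part of `app.py`.

```python
# Component order taken from the submit_btn.click inputs list in the diff.
INPUT_NAMES = [
    "image_input", "query_input", "assistant_prefix", "decoding_strategy",
    "temperature", "max_new_tokens", "repetition_penalty", "top_p",
]

# First example row, copied from the new app.py.
EXAMPLE_ROW = ["example_images/rococo.jpg", "What art era is this?", None, "Greedy", 0.4, 512, 1.2, 0.8]


def label_example(row: list) -> dict:
    """Pair each value in an example row with the component it would fill."""
    if len(row) != len(INPUT_NAMES):
        raise ValueError(f"expected {len(INPUT_NAMES)} values, got {len(row)}")
    return dict(zip(INPUT_NAMES, row))


if __name__ == "__main__":
    for name, value in label_example(EXAMPLE_ROW).items():
        print(f"{name}: {value!r}")
```

Read this way, the numeric values in every example row (0.4, 512, 1.2, 0.8) line up with the default `value` of the `temperature`, `max_new_tokens`, `repetition_penalty`, and `top_p` sliders added in this commit.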

|
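
The three `decoding_strategy.change` handlers added to `app.py` decide which sliders are shown for a given strategy. A minimal sketch of that rule as a plain function, without Gradio, might look like the following. The strategy names are copied from the membership lists in the diff; `SAMPLING_STRATEGIES`, `TOP_P_STRATEGIES`, and `slider_visibility` are hypothetical names used only for illustration.

```python
# Strategy names copied from the membership lists in the change handlers.
SAMPLING_STRATEGIES = ["contrastive_sampling", "beam_sampling", "Top P Sampling", "sampling_top_k"]
TOP_P_STRATEGIES = ["Top P Sampling"]


def slider_visibility(selection: str) -> dict[str, bool]:
    """Return which sliders the change handlers would show for a selection."""
    sampling = selection in SAMPLING_STRATEGIES
    return {
        "temperature": sampling,
        "repetition_penalty": sampling,
        "top_p": selection in TOP_P_STRATEGIES,
    }


if __name__ == "__main__":
    for choice in ("Greedy", "Top P Sampling"):
        print(choice, slider_visibility(choice))
```

Since the `gr.Radio` in this commit offers only "Greedy" and "Top P Sampling", the other three names in the membership list can never be selected from the UI; under this rule, switching to "Greedy" hides all three sliders and switching to "Top P Sampling" shows them.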

example_images/art_critic.png

DELETED

|

Binary file (87.1 kB)

|

|

|

example_images/chicken_on_money.png

DELETED

|

Binary file (420 kB)

|

|

|

example_images/dragons_playing.png

DELETED

|

Binary file (626 kB)

|

|

|

example_images/dummy_pdf.png

DELETED

|

Binary file (76.9 kB)

|

|

|

example_images/example_images_ai2d_example_2.jpeg

DELETED

|

Binary file (89.4 kB)

|

|

|

example_images/example_images_meme_french.jpg

DELETED

|

Binary file (70.7 kB)

|

|

|

example_images/example_images_surfing_dog.jpg

DELETED

|

Binary file (283 kB)

|

|

|

example_images/example_images_tree_fortress.jpg

DELETED

|

Binary file (154 kB)

|

|

|

example_images/examples_invoice.png

ADDED

|

example_images/examples_wat_arun.jpg

ADDED

|

example_images/examples_weather_events.png

ADDED

|

example_images/gaulois.png

DELETED

Git LFS Details

|

example_images/mmmu_example.jpeg

DELETED

|

Binary file (17.4 kB)

|

|

|

example_images/mmmu_example_2.png

DELETED

|

Binary file (54.8 kB)

|

|

|

example_images/paper_with_text.png

DELETED

|

Binary file (975 kB)

|

|

|

example_images/polar_bear_coke.png

DELETED

|

Binary file (440 kB)

|

|

|

example_images/rococo_1.jpg

DELETED

|

Binary file (849 kB)

|

|

|

example_images/travel_tips.jpg

DELETED

|

Binary file (209 kB)

|

|

|