Spaces:

Running

on

Zero

Running

on

Zero

SingleZombie

commited on

Commit

•

ff715ca

1

Parent(s):

21b374f

upload files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE.md +14 -0

- README.md +208 -0

- config/config_boxer.yaml +27 -0

- config/config_carturn.yaml +30 -0

- config/config_dog.yaml +27 -0

- config/config_music.yaml +27 -0

- install.py +95 -0

- requirements.txt +11 -0

- run_fresco.ipynb +0 -0

- run_fresco.py +318 -0

- src/ControlNet/annotator/canny/__init__.py +6 -0

- src/ControlNet/annotator/ckpts/ckpts.txt +1 -0

- src/ControlNet/annotator/hed/__init__.py +96 -0

- src/ControlNet/annotator/midas/LICENSE +21 -0

- src/ControlNet/annotator/midas/__init__.py +42 -0

- src/ControlNet/annotator/midas/api.py +169 -0

- src/ControlNet/annotator/midas/midas/__init__.py +0 -0

- src/ControlNet/annotator/midas/midas/base_model.py +16 -0

- src/ControlNet/annotator/midas/midas/blocks.py +342 -0

- src/ControlNet/annotator/midas/midas/dpt_depth.py +109 -0

- src/ControlNet/annotator/midas/midas/midas_net.py +76 -0

- src/ControlNet/annotator/midas/midas/midas_net_custom.py +128 -0

- src/ControlNet/annotator/midas/midas/transforms.py +234 -0

- src/ControlNet/annotator/midas/midas/vit.py +491 -0

- src/ControlNet/annotator/midas/utils.py +189 -0

- src/ControlNet/annotator/mlsd/LICENSE +201 -0

- src/ControlNet/annotator/mlsd/__init__.py +43 -0

- src/ControlNet/annotator/mlsd/models/mbv2_mlsd_large.py +292 -0

- src/ControlNet/annotator/mlsd/models/mbv2_mlsd_tiny.py +275 -0

- src/ControlNet/annotator/mlsd/utils.py +580 -0

- src/ControlNet/annotator/openpose/LICENSE +108 -0

- src/ControlNet/annotator/openpose/__init__.py +49 -0

- src/ControlNet/annotator/openpose/body.py +219 -0

- src/ControlNet/annotator/openpose/hand.py +86 -0

- src/ControlNet/annotator/openpose/model.py +219 -0

- src/ControlNet/annotator/openpose/util.py +164 -0

- src/ControlNet/annotator/util.py +38 -0

- src/EGNet/README.md +49 -0

- src/EGNet/dataset.py +283 -0

- src/EGNet/model.py +208 -0

- src/EGNet/resnet.py +301 -0

- src/EGNet/run.py +68 -0

- src/EGNet/sal2edge.m +34 -0

- src/EGNet/solver.py +230 -0

- src/EGNet/vgg.py +273 -0

- src/diffusion_hacked.py +957 -0

- src/ebsynth/blender/guide.py +104 -0

- src/ebsynth/blender/histogram_blend.py +50 -0

- src/ebsynth/blender/poisson_fusion.py +93 -0

- src/ebsynth/blender/video_sequence.py +187 -0

LICENSE.md

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

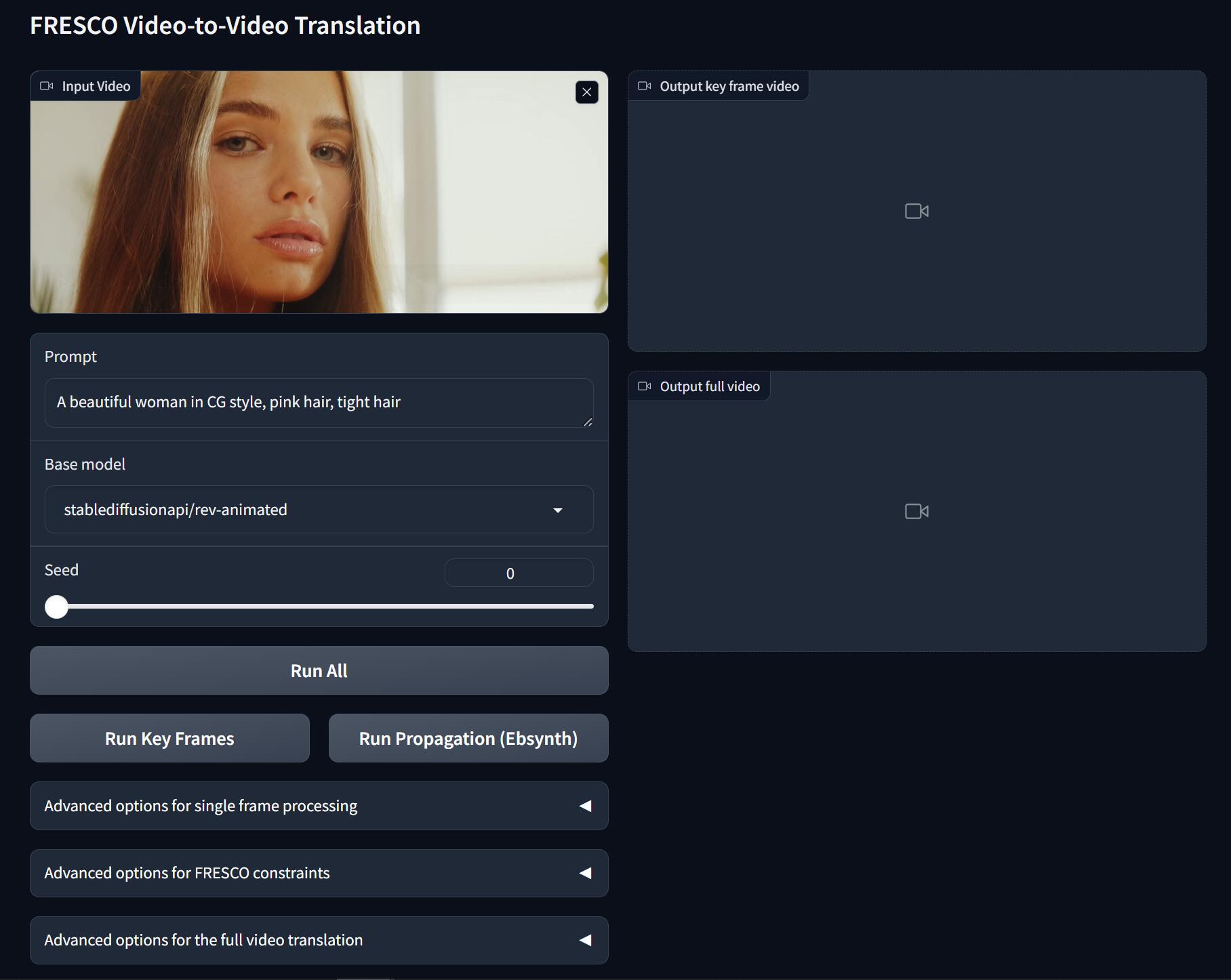

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

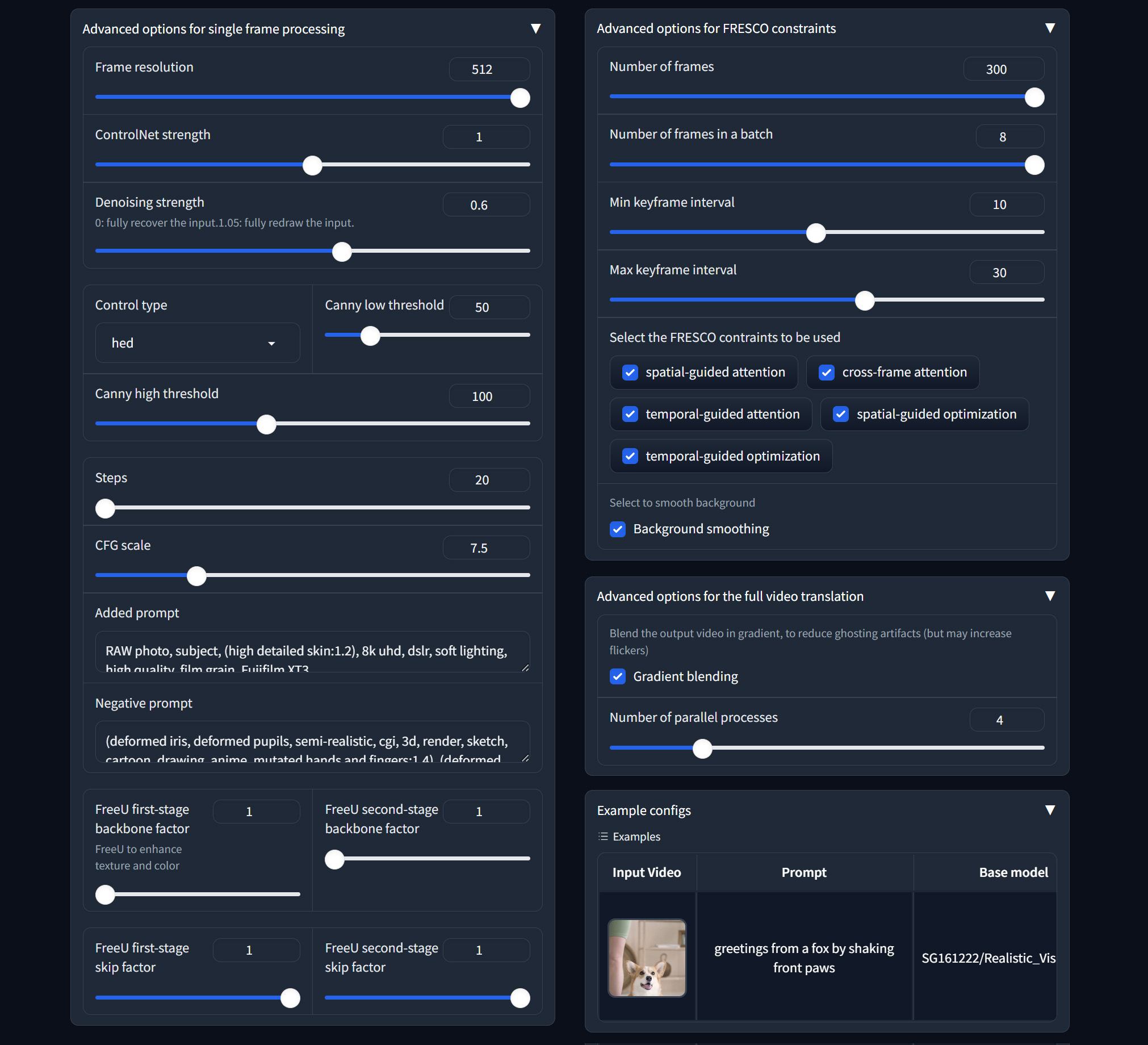

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# S-Lab License 1.0

|

| 2 |

+

|

| 3 |

+

Copyright 2024 S-Lab

|

| 4 |

+

|

| 5 |

+

Redistribution and use for non-commercial purpose in source and binary forms, with or without modification, are permitted provided that the following conditions are met:

|

| 6 |

+

1. Redistributions of source code must retain the above copyright notice, this list of conditions and the following disclaimer.

|

| 7 |

+

2. Redistributions in binary form must reproduce the above copyright notice, this list of conditions and the following disclaimer in the documentation and/or other materials provided with the distribution.

|

| 8 |

+

3. Neither the name of the copyright holder nor the names of its contributors may be used to endorse or promote products derived from this software without specific prior written permission.\

|

| 9 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS" AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

|

| 10 |

+

4. In the event that redistribution and/or use for commercial purpose in source or binary forms, with or without modification is required, please contact the contributor(s) of the work.

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

---

|

| 14 |

+

For the commercial use of the code, please consult Prof. Chen Change Loy (ccloy@ntu.edu.sg)

|

README.md

ADDED

|

@@ -0,0 +1,208 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# FRESCO - Official PyTorch Implementation

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

**FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation**<br>

|

| 5 |

+

[Shuai Yang](https://williamyang1991.github.io/), [Yifan Zhou](https://zhouyifan.net/), [Ziwei Liu](https://liuziwei7.github.io/) and [Chen Change Loy](https://www.mmlab-ntu.com/person/ccloy/)<br>

|

| 6 |

+

in CVPR 2024 <br>

|

| 7 |

+

[**Project Page**](https://www.mmlab-ntu.com/project/fresco/) | [**Paper**](https://arxiv.org/abs/2403.12962) | [**Supplementary Video**](https://youtu.be/jLnGx5H-wLw) | [**Input Data and Video Results**](https://drive.google.com/file/d/12BFx3hp8_jp9m0EmKpw-cus2SABPQx2Q/view?usp=sharing) <br>

|

| 8 |

+

|

| 9 |

+

**Abstract:** *The remarkable efficacy of text-to-image diffusion models has motivated extensive exploration of their potential application in video domains.

|

| 10 |

+

Zero-shot methods seek to extend image diffusion models to videos without necessitating model training.

|

| 11 |

+

Recent methods mainly focus on incorporating inter-frame correspondence into attention mechanisms. However, the soft constraint imposed on determining where to attend to valid features can sometimes be insufficient, resulting in temporal inconsistency.

|

| 12 |

+

In this paper, we introduce FRESCO, intra-frame correspondence alongside inter-frame correspondence to establish a more robust spatial-temporal constraint. This enhancement ensures a more consistent transformation of semantically similar content across frames. Beyond mere attention guidance, our approach involves an explicit update of features to achieve high spatial-temporal consistency with the input video, significantly improving the visual coherence of the resulting translated videos.

|

| 13 |

+

Extensive experiments demonstrate the effectiveness of our proposed framework in producing high-quality, coherent videos, marking a notable improvement over existing zero-shot methods.*

|

| 14 |

+

|

| 15 |

+

**Features**:<br>

|

| 16 |

+

- **Temporal consistency**: use intra-and inter-frame constraint with better consistency and coverage than optical flow alone.

|

| 17 |

+

- Compared with our previous work [Rerender-A-Video](https://github.com/williamyang1991/Rerender_A_Video), FRESCO is more robust to large and quick motion.

|

| 18 |

+

- **Zero-shot**: no training or fine-tuning required.

|

| 19 |

+

- **Flexibility**: compatible with off-the-shelf models (e.g., [ControlNet](https://github.com/lllyasviel/ControlNet), [LoRA](https://civitai.com/)) for customized translation.

|

| 20 |

+

|

| 21 |

+

https://github.com/williamyang1991/FRESCO/assets/18130694/aad358af-4d27-4f18-b069-89a1abd94d38

|

| 22 |

+

|

| 23 |

+

|

| 24 |

+

## Updates

|

| 25 |

+

- [03/2023] Paper is released.

|

| 26 |

+

- [03/2023] Code is released.

|

| 27 |

+

- [03/2024] This website is created.

|

| 28 |

+

|

| 29 |

+

### TODO

|

| 30 |

+

- [ ] Integrate into Diffusers

|

| 31 |

+

- [ ] Add Huggingface web demo

|

| 32 |

+

- [x] ~~Add webUI.~~

|

| 33 |

+

- [x] ~~Update readme~~

|

| 34 |

+

- [x] ~~Upload paper to arXiv, release related material~~

|

| 35 |

+

|

| 36 |

+

## Installation

|

| 37 |

+

|

| 38 |

+

1. Clone the repository.

|

| 39 |

+

|

| 40 |

+

```shell

|

| 41 |

+

git clone https://github.com/williamyang1991/FRESCO.git

|

| 42 |

+

cd FRESCO

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

2. You can simply set up the environment with pip based on [requirements.txt](https://github.com/williamyang1991/FRESCO/blob/main/requirements.txt)

|

| 46 |

+

- Create a conda environment and install torch >= 2.0.0. Here is an example script to install torch 2.0.0 + CUDA 11.8 :

|

| 47 |

+

```

|

| 48 |

+

conda create --name diffusers python==3.8.5

|

| 49 |

+

conda activate diffusers

|

| 50 |

+

pip install torch==2.0.0 torchvision==0.15.1 --index-url https://download.pytorch.org/whl/cu118

|

| 51 |

+

```

|

| 52 |

+

- Run `pip install -r requirements.txt` in an environment where torch is installed.

|

| 53 |

+

- We have tested on torch 2.0.0/2.1.0 and diffusers 0.19.3

|

| 54 |

+

- If you use new versions of diffusers, you need to modify [my_forward()](https://github.com/williamyang1991/FRESCO/blob/fb991262615665de88f7a8f2cc903d9539e1b234/src/diffusion_hacked.py#L496)

|

| 55 |

+

|

| 56 |

+

3. Run the installation script. The required models will be downloaded in `./model`, `./src/ControlNet/annotator` and `./src/ebsynth/deps/ebsynth/bin`.

|

| 57 |

+

- Requires access to huggingface.co

|

| 58 |

+

|

| 59 |

+

```shell

|

| 60 |

+

python install.py

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

4. You can run the demo with `run_fresco.py`

|

| 64 |

+

|

| 65 |

+

```shell

|

| 66 |

+

python run_fresco.py ./config/config_music.yaml

|

| 67 |

+

```

|

| 68 |

+

|

| 69 |

+

5. For issues with Ebsynth, please refer to [issues](https://github.com/williamyang1991/Rerender_A_Video#issues)

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

## (1) Inference

|

| 73 |

+

|

| 74 |

+

### WebUI (recommended)

|

| 75 |

+

|

| 76 |

+

```

|

| 77 |

+

python webUI.py

|

| 78 |

+

```

|

| 79 |

+

The Gradio app also allows you to flexibly change the inference options. Just try it for more details.

|

| 80 |

+

|

| 81 |

+

Upload your video, input the prompt, select the model and seed, and hit:

|

| 82 |

+

- **Run Key Frames**: detect keyframes, translate all keyframes.

|

| 83 |

+

- **Run Propagation**: propagate the keyframes to other frames for full video translation

|

| 84 |

+

- **Run All**: **Run Key Frames** and **Run Propagation**

|

| 85 |

+

|

| 86 |

+

Select the model:

|

| 87 |

+

- **Base model**: base Stable Diffusion model (SD 1.5)

|

| 88 |

+

- Stable Diffusion 1.5: official model

|

| 89 |

+

- [rev-Animated](https://huggingface.co/stablediffusionapi/rev-animated): a semi-realistic (2.5D) model

|

| 90 |

+

- [realistic-Vision](https://huggingface.co/SG161222/Realistic_Vision_V2.0): a photo-realistic model

|

| 91 |

+

- [flat2d-animerge](https://huggingface.co/stablediffusionapi/flat-2d-animerge): a cartoon model

|

| 92 |

+

- You can add other models on huggingface.co by modifying this [line](https://github.com/williamyang1991/FRESCO/blob/1afcca9c7b1bc1ac68254f900be9bd768fbb6988/webUI.py#L362)

|

| 93 |

+

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

We provide abundant advanced options to play with

|

| 97 |

+

|

| 98 |

+

</details>

|

| 99 |

+

|

| 100 |

+

<details id="option1">

|

| 101 |

+

<summary> <b>Advanced options for single frame processing</b></summary>

|

| 102 |

+

|

| 103 |

+

1. **Frame resolution**: resize the short side of the video to 512.

|

| 104 |

+

2. ControlNet related:

|

| 105 |

+

- **ControlNet strength**: how well the output matches the input control edges

|

| 106 |

+

- **Control type**: HED edge, Canny edge, Depth map

|

| 107 |

+

- **Canny low/high threshold**: low values for more edge details

|

| 108 |

+

3. SDEdit related:

|

| 109 |

+

- **Denoising strength**: repaint degree (low value to make the output look more like the original video)

|

| 110 |

+

- **Preserve color**: preserve the color of the original video

|

| 111 |

+

4. SD related:

|

| 112 |

+

- **Steps**: denoising step

|

| 113 |

+

- **CFG scale**: how well the output matches the prompt

|

| 114 |

+

- **Added prompt/Negative prompt**: supplementary prompts

|

| 115 |

+

5. FreeU related:

|

| 116 |

+

- **FreeU first/second-stage backbone factor**: =1 do nothing; >1 enhance output color and details

|

| 117 |

+

- **FreeU first/second-stage skip factor**: =1 do nothing; <1 enhance output color and details

|

| 118 |

+

|

| 119 |

+

</details>

|

| 120 |

+

|

| 121 |

+

<details id="option2">

|

| 122 |

+

<summary> <b>Advanced options for FRESCO constraints</b></summary>

|

| 123 |

+

|

| 124 |

+

1. Keyframe related

|

| 125 |

+

- **Number of frames**: Total frames to be translated

|

| 126 |

+

- **Number of frames in a batch**: To avoid out-of-memory, use small batch size

|

| 127 |

+

- **Min keyframe interval (s_min)**: The keyframes will be detected at least every s_min frames

|

| 128 |

+

- **Max keyframe interval (s_max)**: The keyframes will be detected at most every s_max frames

|

| 129 |

+

2. FRESCO constraints

|

| 130 |

+

- FRESCO-guided Attention:

|

| 131 |

+

- **spatial-guided attention**: Check to enable spatial-guided attention

|

| 132 |

+

- **cross-frame attention**: Check to enable efficient cross-frame attention

|

| 133 |

+

- **temporal-guided attention**: Check to enable temporal-guided attention

|

| 134 |

+

- FRESCO-guided optimization:

|

| 135 |

+

- **spatial-guided optimization**: Check to enable spatial-guided optimization

|

| 136 |

+

- **temporal-guided optimization**: Check to enable temporal-guided optimization

|

| 137 |

+

3. **Background smoothing**: Check to enable background smoothing (best for static background)

|

| 138 |

+

|

| 139 |

+

</details>

|

| 140 |

+

|

| 141 |

+

<details id="option3">

|

| 142 |

+

<summary> <b>Advanced options for the full video translation</b></summary>

|

| 143 |

+

|

| 144 |

+

1. **Gradient blending**: apply Poisson Blending to reduce ghosting artifacts. May slow the process and increase flickers.

|

| 145 |

+

2. **Number of parallel processes**: multiprocessing to speed up the process. Large value (4) is recommended.

|

| 146 |

+

</details>

|

| 147 |

+

|

| 148 |

+

|

| 149 |

+

|

| 150 |

+

### Command Line

|

| 151 |

+

|

| 152 |

+

We provide a flexible script `run_fresco.py` to run our method.

|

| 153 |

+

|

| 154 |

+

Set the options via a config file. For example,

|

| 155 |

+

```shell

|

| 156 |

+

python run_fresco.py ./config/config_music.yaml

|

| 157 |

+

```

|

| 158 |

+

We provide some examples of the config in `config` directory.

|

| 159 |

+

Most options in the config is the same as those in WebUI.

|

| 160 |

+

Please check the explanations in the WebUI section.

|

| 161 |

+

|

| 162 |

+

We provide a separate Ebsynth python script `video_blend.py` with the temporal blending algorithm introduced in

|

| 163 |

+

[Stylizing Video by Example](https://dcgi.fel.cvut.cz/home/sykorad/ebsynth.html) for interpolating style between key frames.

|

| 164 |

+

It can work on your own stylized key frames independently of our FRESCO algorithm.

|

| 165 |

+

For the details, please refer to our previous work [Rerender-A-Video](https://github.com/williamyang1991/Rerender_A_Video/tree/main?tab=readme-ov-file#our-ebsynth-implementation)

|

| 166 |

+

|

| 167 |

+

## (2) Results

|

| 168 |

+

|

| 169 |

+

### Key frame translation

|

| 170 |

+

|

| 171 |

+

<table class="center">

|

| 172 |

+

<tr>

|

| 173 |

+

<td><img src="https://github.com/williamyang1991/FRESCO/assets/18130694/e8d5776a-37c5-49ae-8ab4-15669df6f572" raw=true></td>

|

| 174 |

+

<td><img src="https://github.com/williamyang1991/FRESCO/assets/18130694/8a792af6-555c-4e82-ac1e-5c2e1ee35fdb" raw=true></td>

|

| 175 |

+

<td><img src="https://github.com/williamyang1991/FRESCO/assets/18130694/10f9a964-85ac-4433-84c5-1611a6c2c434" raw=true></td>

|

| 176 |

+

<td><img src="https://github.com/williamyang1991/FRESCO/assets/18130694/0ec0fbf9-90dd-4d8b-964d-945b5f6687c2" raw=true></td>

|

| 177 |

+

</tr>

|

| 178 |

+

<tr>

|

| 179 |

+

<td width=26.5% align="center">a red car turns in the winter</td>

|

| 180 |

+

<td width=26.5% align="center">an African American boxer wearing black boxing gloves punches towards the camera, cartoon style</td>

|

| 181 |

+

<td width=26.5% align="center">a cartoon spiderman in black suit, black shoes and white gloves is dancing</td>

|

| 182 |

+

<td width=20.5% align="center">a beautiful woman holding her glasses in CG style</td>

|

| 183 |

+

</tr>

|

| 184 |

+

</table>

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

### Full video translation

|

| 188 |

+

|

| 189 |

+

https://github.com/williamyang1991/FRESCO/assets/18130694/bf8bfb82-5cb7-4b2f-8169-cf8dbf408b54

|

| 190 |

+

|

| 191 |

+

## Citation

|

| 192 |

+

|

| 193 |

+

If you find this work useful for your research, please consider citing our paper:

|

| 194 |

+

|

| 195 |

+

```bibtex

|

| 196 |

+

@inproceedings{yang2024fresco,

|

| 197 |

+

title = {FRESCO: Spatial-Temporal Correspondence for Zero-Shot Video Translation},

|

| 198 |

+

author = {Yang, Shuai and Zhou, Yifan and Liu, Ziwei and and Loy, Chen Change},

|

| 199 |

+

booktitle = {CVPR},

|

| 200 |

+

year = {2024},

|

| 201 |

+

}

|

| 202 |

+

```

|

| 203 |

+

|

| 204 |

+

## Acknowledgments

|

| 205 |

+

|

| 206 |

+

The code is mainly developed based on [Rerender-A-Video](https://github.com/williamyang1991/Rerender_A_Video), [ControlNet](https://github.com/lllyasviel/ControlNet), [Stable Diffusion](https://github.com/Stability-AI/stablediffusion), [GMFlow](https://github.com/haofeixu/gmflow) and [Ebsynth](https://github.com/jamriska/ebsynth).

|

| 207 |

+

|

| 208 |

+

|

config/config_boxer.yaml

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# data

|

| 2 |

+

file_path: './data/boxer-punching-towards-camera.mp4'

|

| 3 |

+

save_path: './output/boxer-punching-towards-camera/'

|

| 4 |

+

mininterv: 2 # for keyframe selection

|

| 5 |

+

maxinterv: 2 # for keyframe selection

|

| 6 |

+

|

| 7 |

+

# diffusion

|

| 8 |

+

seed: 0

|

| 9 |

+

prompt: 'An African American boxer wearing black boxing gloves punches towards the camera, cartoon style'

|

| 10 |

+

sd_path: 'stablediffusionapi/flat-2d-animerge'

|

| 11 |

+

use_controlnet: True

|

| 12 |

+

controlnet_type: 'depth' # 'hed', 'canny'

|

| 13 |

+

cond_scale: 0.7

|

| 14 |

+

use_freeu: False

|

| 15 |

+

|

| 16 |

+

# video-to-video translation

|

| 17 |

+

batch_size: 8

|

| 18 |

+

num_inference_steps: 20

|

| 19 |

+

num_warmup_steps: 5

|

| 20 |

+

end_opt_step: 15

|

| 21 |

+

run_ebsynth: False

|

| 22 |

+

max_process: 4

|

| 23 |

+

|

| 24 |

+

# supporting model

|

| 25 |

+

gmflow_path: './model/gmflow_sintel-0c07dcb3.pth'

|

| 26 |

+

sod_path: './model/epoch_resnet.pth'

|

| 27 |

+

use_salinecy: True

|

config/config_carturn.yaml

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# data

|

| 2 |

+

file_path: './data/car-turn.mp4'

|

| 3 |

+

save_path: './output/car-turn/'

|

| 4 |

+

mininterv: 5 # for keyframe selection

|

| 5 |

+

maxinterv: 5 # for keyframe selection

|

| 6 |

+

|

| 7 |

+

# diffusion

|

| 8 |

+

seed: 0

|

| 9 |

+

prompt: 'a red car turns in the winter'

|

| 10 |

+

# sd_path: 'runwayml/stable-diffusion-v1-5'

|

| 11 |

+

# sd_path: 'stablediffusionapi/rev-animated'

|

| 12 |

+

# sd_path: 'stablediffusionapi/flat-2d-animerge'

|

| 13 |

+

sd_path: 'SG161222/Realistic_Vision_V2.0'

|

| 14 |

+

use_controlnet: True

|

| 15 |

+

controlnet_type: 'hed' # 'depth', 'canny'

|

| 16 |

+

cond_scale: 0.7

|

| 17 |

+

use_freeu: False

|

| 18 |

+

|

| 19 |

+

# video-to-video translation

|

| 20 |

+

batch_size: 8

|

| 21 |

+

num_inference_steps: 20

|

| 22 |

+

num_warmup_steps: 5

|

| 23 |

+

end_opt_step: 15

|

| 24 |

+

run_ebsynth: False

|

| 25 |

+

max_process: 4

|

| 26 |

+

|

| 27 |

+

# supporting model

|

| 28 |

+

gmflow_path: './model/gmflow_sintel-0c07dcb3.pth'

|

| 29 |

+

sod_path: './model/epoch_resnet.pth'

|

| 30 |

+

use_salinecy: True

|

config/config_dog.yaml

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# data

|

| 2 |

+

file_path: './data/dog.mp4'

|

| 3 |

+

save_path: './output/dog/'

|

| 4 |

+

mininterv: 10 # for keyframe selection

|

| 5 |

+

maxinterv: 30 # for keyframe selection

|

| 6 |

+

|

| 7 |

+

# diffusion

|

| 8 |

+

seed: 0

|

| 9 |

+

prompt: 'greetings from a fox by shaking front paws'

|

| 10 |

+

sd_path: 'SG161222/Realistic_Vision_V2.0'

|

| 11 |

+

use_controlnet: True

|

| 12 |

+

controlnet_type: 'hed' # 'depth', 'canny'

|

| 13 |

+

cond_scale: 1.0

|

| 14 |

+

use_freeu: False

|

| 15 |

+

|

| 16 |

+

# video-to-video translation

|

| 17 |

+

batch_size: 8

|

| 18 |

+

num_inference_steps: 20

|

| 19 |

+

num_warmup_steps: 8

|

| 20 |

+

end_opt_step: 15

|

| 21 |

+

run_ebsynth: False

|

| 22 |

+

max_process: 4

|

| 23 |

+

|

| 24 |

+

# supporting model

|

| 25 |

+

gmflow_path: './model/gmflow_sintel-0c07dcb3.pth'

|

| 26 |

+

sod_path: './model/epoch_resnet.pth'

|

| 27 |

+

use_salinecy: True

|

config/config_music.yaml

ADDED

|

@@ -0,0 +1,27 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# data

|

| 2 |

+

file_path: './data/music.mp4'

|

| 3 |

+

save_path: './output/music/'

|

| 4 |

+

mininterv: 10 # for keyframe selection

|

| 5 |

+

maxinterv: 30 # for keyframe selection

|

| 6 |

+

|

| 7 |

+

# diffusion

|

| 8 |

+

seed: 0

|

| 9 |

+

prompt: 'A beautiful woman with headphones listening to music in CG cyberpunk style, neon, closed eyes, colorful'

|

| 10 |

+

sd_path: 'stablediffusionapi/rev-animated'

|

| 11 |

+

use_controlnet: True

|

| 12 |

+

controlnet_type: 'hed' # 'depth', 'canny'

|

| 13 |

+

cond_scale: 1.0

|

| 14 |

+

use_freeu: False

|

| 15 |

+

|

| 16 |

+

# video-to-video translation

|

| 17 |

+

batch_size: 8

|

| 18 |

+

num_inference_steps: 20

|

| 19 |

+

num_warmup_steps: 3

|

| 20 |

+

end_opt_step: 15

|

| 21 |

+

run_ebsynth: False

|

| 22 |

+

max_process: 4

|

| 23 |

+

|

| 24 |

+

# supporting model

|

| 25 |

+

gmflow_path: './model/gmflow_sintel-0c07dcb3.pth'

|

| 26 |

+

sod_path: './model/epoch_resnet.pth'

|

| 27 |

+

use_salinecy: True

|

install.py

ADDED

|

@@ -0,0 +1,95 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

import platform

|

| 3 |

+

|

| 4 |

+

import requests

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

def build_ebsynth():

|

| 8 |

+

if os.path.exists('src/ebsynth/deps/ebsynth/bin/ebsynth'):

|

| 9 |

+

print('Ebsynth has been built.')

|

| 10 |

+

return

|

| 11 |

+

|

| 12 |

+

os_str = platform.system()

|

| 13 |

+

|

| 14 |

+

if os_str == 'Windows':

|

| 15 |

+

print('Build Ebsynth Windows 64 bit.',

|

| 16 |

+

'If you want to build for 32 bit, please modify install.py.')

|

| 17 |

+

cmd = '.\\build-win64-cpu+cuda.bat'

|

| 18 |

+

exe_file = 'src/ebsynth/deps/ebsynth/bin/ebsynth.exe'

|

| 19 |

+

elif os_str == 'Linux':

|

| 20 |

+

cmd = 'bash build-linux-cpu+cuda.sh'

|

| 21 |

+

exe_file = 'src/ebsynth/deps/ebsynth/bin/ebsynth'

|

| 22 |

+

elif os_str == 'Darwin':

|

| 23 |

+

cmd = 'sh build-macos-cpu_only.sh'

|

| 24 |

+

exe_file = 'src/ebsynth/deps/ebsynth/bin/ebsynth.app'

|

| 25 |

+

else:

|

| 26 |

+

print('Cannot recognize OS. Ebsynth installation stopped.')

|

| 27 |

+

return

|

| 28 |

+

|

| 29 |

+

os.chdir('src/ebsynth/deps/ebsynth')

|

| 30 |

+

print(cmd)

|

| 31 |

+

os.system(cmd)

|

| 32 |

+

os.chdir('../../../..')

|

| 33 |

+

if os.path.exists(exe_file):

|

| 34 |

+

print('Ebsynth installed successfully.')

|

| 35 |

+

else:

|

| 36 |

+

print('Failed to install Ebsynth.')

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

def download(url, dir, name=None):

|

| 40 |

+

os.makedirs(dir, exist_ok=True)

|

| 41 |

+

if name is None:

|

| 42 |

+

name = url.split('/')[-1]

|

| 43 |

+

path = os.path.join(dir, name)

|

| 44 |

+

if not os.path.exists(path):

|

| 45 |

+

print(f'Install {name} ...')

|

| 46 |

+

open(path, 'wb').write(requests.get(url).content)

|

| 47 |

+

print('Install successfully.')

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

def download_gmflow_ckpt():

|

| 51 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 52 |

+

'resolve/main/models/gmflow_sintel-0c07dcb3.pth')

|

| 53 |

+

download(url, 'model')

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

def download_egnet_ckpt():

|

| 57 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 58 |

+

'resolve/main/models/epoch_resnet.pth')

|

| 59 |

+

download(url, 'model')

|

| 60 |

+

|

| 61 |

+

def download_hed_ckpt():

|

| 62 |

+

url = ('https://huggingface.co/lllyasviel/Annotators/'

|

| 63 |

+

'resolve/main/ControlNetHED.pth')

|

| 64 |

+

download(url, 'src/ControlNet/annotator/ckpts')

|

| 65 |

+

|

| 66 |

+

def download_depth_ckpt():

|

| 67 |

+

url = ('https://huggingface.co/lllyasviel/ControlNet/'

|

| 68 |

+

'resolve/main/annotator/ckpts/dpt_hybrid-midas-501f0c75.pt')

|

| 69 |

+

download(url, 'src/ControlNet/annotator/ckpts')

|

| 70 |

+

|

| 71 |

+

def download_ebsynth_ckpt():

|

| 72 |

+

os_str = platform.system()

|

| 73 |

+

if os_str == 'Linux':

|

| 74 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 75 |

+

'resolve/main/models/ebsynth')

|

| 76 |

+

download(url, 'src/ebsynth/deps/ebsynth/bin')

|

| 77 |

+

elif os_str == 'Windows':

|

| 78 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 79 |

+

'resolve/main/models/ebsynth.exe')

|

| 80 |

+

download(url, 'src/ebsynth/deps/ebsynth/bin')

|

| 81 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 82 |

+

'resolve/main/models/ebsynth_cpu.dll')

|

| 83 |

+

download(url, 'src/ebsynth/deps/ebsynth/bin')

|

| 84 |

+

url = ('https://huggingface.co/PKUWilliamYang/Rerender/'

|

| 85 |

+

'resolve/main/models/ebsynth_cpu.exe')

|

| 86 |

+

download(url, 'src/ebsynth/deps/ebsynth/bin')

|

| 87 |

+

else:

|

| 88 |

+

print('No available compiled Ebsynth.')

|

| 89 |

+

|

| 90 |

+

#build_ebsynth()

|

| 91 |

+

download_ebsynth_ckpt()

|

| 92 |

+

download_gmflow_ckpt()

|

| 93 |

+

download_egnet_ckpt()

|

| 94 |

+

download_hed_ckpt()

|

| 95 |

+

download_depth_ckpt()

|

requirements.txt

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

diffusers[torch]==0.19.3

|

| 2 |

+

transformers

|

| 3 |

+

opencv-python

|

| 4 |

+

einops

|

| 5 |

+

matplotlib

|

| 6 |

+

timm

|

| 7 |

+

av

|

| 8 |

+

basicsr==1.4.2

|

| 9 |

+

numba==0.57.0

|

| 10 |

+

imageio-ffmpeg

|

| 11 |

+

gradio==3.44.4

|

run_fresco.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

run_fresco.py

ADDED

|

@@ -0,0 +1,318 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import os

|

| 2 |

+

#os.environ['CUDA_VISIBLE_DEVICES'] = "6"

|

| 3 |

+

|

| 4 |

+

# In China, set this to use huggingface

|

| 5 |

+

# os.environ['HF_ENDPOINT'] = 'https://hf-mirror.com'

|

| 6 |

+

|

| 7 |

+

import cv2

|

| 8 |

+

import io

|

| 9 |

+

import gc

|

| 10 |

+

import yaml

|

| 11 |

+

import argparse

|

| 12 |

+

import torch

|

| 13 |

+

import torchvision

|

| 14 |

+

import diffusers

|

| 15 |

+

from diffusers import StableDiffusionPipeline, AutoencoderKL, DDPMScheduler, ControlNetModel

|

| 16 |

+

|

| 17 |

+

from src.utils import *

|

| 18 |

+

from src.keyframe_selection import get_keyframe_ind

|

| 19 |

+

from src.diffusion_hacked import apply_FRESCO_attn, apply_FRESCO_opt, disable_FRESCO_opt

|

| 20 |

+

from src.diffusion_hacked import get_flow_and_interframe_paras, get_intraframe_paras

|

| 21 |

+

from src.pipe_FRESCO import inference

|

| 22 |

+

|

| 23 |

+

def get_models(config):

|

| 24 |

+

print('\n' + '=' * 100)

|

| 25 |

+

print('creating models...')

|

| 26 |

+

import sys

|

| 27 |

+

sys.path.append("./src/ebsynth/deps/gmflow/")

|

| 28 |

+

sys.path.append("./src/EGNet/")

|

| 29 |

+

sys.path.append("./src/ControlNet/")

|

| 30 |

+

|

| 31 |

+

from gmflow.gmflow import GMFlow

|

| 32 |

+

from model import build_model

|

| 33 |

+

from annotator.hed import HEDdetector

|

| 34 |

+

from annotator.canny import CannyDetector

|

| 35 |

+

from annotator.midas import MidasDetector

|

| 36 |

+

|

| 37 |

+

# optical flow

|

| 38 |

+

flow_model = GMFlow(feature_channels=128,

|

| 39 |

+

num_scales=1,

|

| 40 |

+

upsample_factor=8,

|

| 41 |

+

num_head=1,

|

| 42 |

+

attention_type='swin',

|

| 43 |

+

ffn_dim_expansion=4,

|

| 44 |

+

num_transformer_layers=6,

|

| 45 |

+

).to('cuda')

|

| 46 |

+

|

| 47 |

+

checkpoint = torch.load(config['gmflow_path'], map_location=lambda storage, loc: storage)

|

| 48 |

+

weights = checkpoint['model'] if 'model' in checkpoint else checkpoint

|

| 49 |

+

flow_model.load_state_dict(weights, strict=False)

|

| 50 |

+

flow_model.eval()

|

| 51 |

+

print('create optical flow estimation model successfully!')

|

| 52 |

+

|

| 53 |

+

# saliency detection

|

| 54 |

+

sod_model = build_model('resnet')

|

| 55 |

+

sod_model.load_state_dict(torch.load(config['sod_path']))

|

| 56 |

+

sod_model.to("cuda").eval()

|

| 57 |

+

print('create saliency detection model successfully!')

|

| 58 |

+

|

| 59 |

+

# controlnet

|

| 60 |

+

if config['controlnet_type'] not in ['hed', 'depth', 'canny']:

|

| 61 |

+

print('unsupported control type, set to hed')

|

| 62 |

+

config['controlnet_type'] = 'hed'

|

| 63 |

+

controlnet = ControlNetModel.from_pretrained("lllyasviel/sd-controlnet-"+config['controlnet_type'],

|

| 64 |

+

torch_dtype=torch.float16)

|

| 65 |

+

controlnet.to("cuda")

|

| 66 |

+

if config['controlnet_type'] == 'depth':

|

| 67 |

+

detector = MidasDetector()

|

| 68 |

+

elif config['controlnet_type'] == 'canny':

|

| 69 |

+

detector = CannyDetector()

|

| 70 |

+

else:

|

| 71 |

+

detector = HEDdetector()

|

| 72 |

+

print('create controlnet model-' + config['controlnet_type'] + ' successfully!')

|

| 73 |

+

|

| 74 |

+

# diffusion model

|

| 75 |

+

vae = AutoencoderKL.from_pretrained("stabilityai/sd-vae-ft-mse", torch_dtype=torch.float16)

|

| 76 |

+

pipe = StableDiffusionPipeline.from_pretrained(config['sd_path'], vae=vae, torch_dtype=torch.float16)

|

| 77 |

+

pipe.scheduler = DDPMScheduler.from_config(pipe.scheduler.config)

|

| 78 |

+

#noise_scheduler = DDPMScheduler.from_pretrained("runwayml/stable-diffusion-v1-5", subfolder="scheduler")

|

| 79 |

+

pipe.to("cuda")

|

| 80 |

+

pipe.scheduler.set_timesteps(config['num_inference_steps'], device=pipe._execution_device)

|

| 81 |

+

|

| 82 |

+

if config['use_freeu']:

|

| 83 |

+

from src.free_lunch_utils import apply_freeu

|

| 84 |

+

apply_freeu(pipe, b1=1.2, b2=1.5, s1=1.0, s2=1.0)

|

| 85 |

+

|

| 86 |

+

frescoProc = apply_FRESCO_attn(pipe)

|

| 87 |

+

frescoProc.controller.disable_controller()

|

| 88 |

+

apply_FRESCO_opt(pipe)

|

| 89 |

+

print('create diffusion model ' + config['sd_path'] + ' successfully!')

|

| 90 |

+

|

| 91 |

+

for param in flow_model.parameters():

|

| 92 |

+

param.requires_grad = False

|

| 93 |

+

for param in sod_model.parameters():

|

| 94 |

+

param.requires_grad = False

|

| 95 |

+

for param in controlnet.parameters():

|

| 96 |

+

param.requires_grad = False

|

| 97 |

+

for param in pipe.unet.parameters():

|

| 98 |

+

param.requires_grad = False

|

| 99 |

+

|

| 100 |

+

return pipe, frescoProc, controlnet, detector, flow_model, sod_model

|

| 101 |

+

|

| 102 |

+

def apply_control(x, detector, config):

|

| 103 |

+

if config['controlnet_type'] == 'depth':

|

| 104 |

+

detected_map, _ = detector(x)

|

| 105 |

+

elif config['controlnet_type'] == 'canny':

|

| 106 |

+

detected_map = detector(x, 50, 100)

|

| 107 |

+

else:

|

| 108 |

+

detected_map = detector(x)

|

| 109 |

+

return detected_map

|

| 110 |

+

|

| 111 |

+

def run_keyframe_translation(config):

|

| 112 |

+

pipe, frescoProc, controlnet, detector, flow_model, sod_model = get_models(config)

|

| 113 |

+

device = pipe._execution_device

|

| 114 |

+

guidance_scale = 7.5

|

| 115 |

+

do_classifier_free_guidance = guidance_scale > 1

|

| 116 |

+

assert(do_classifier_free_guidance)

|

| 117 |

+

timesteps = pipe.scheduler.timesteps

|

| 118 |

+

cond_scale = [config['cond_scale']] * config['num_inference_steps']

|

| 119 |

+

dilate = Dilate(device=device)

|

| 120 |

+

|

| 121 |

+

base_prompt = config['prompt']

|

| 122 |

+

if 'Realistic' in config['sd_path'] or 'realistic' in config['sd_path']:

|

| 123 |

+

a_prompt = ', RAW photo, subject, (high detailed skin:1.2), 8k uhd, dslr, soft lighting, high quality, film grain, Fujifilm XT3, '

|

| 124 |

+

n_prompt = '(deformed iris, deformed pupils, semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime, mutated hands and fingers:1.4), (deformed, distorted, disfigured:1.3), poorly drawn, bad anatomy, wrong anatomy, extra limb, missing limb, floating limbs, disconnected limbs, mutation, mutated, ugly, disgusting, amputation'

|

| 125 |

+

else:

|

| 126 |

+

a_prompt = ', best quality, extremely detailed, '

|

| 127 |

+

n_prompt = 'longbody, lowres, bad anatomy, bad hands, missing finger, extra digit, fewer digits, cropped, worst quality, low quality'

|

| 128 |

+

|

| 129 |

+

print('\n' + '=' * 100)

|

| 130 |

+

print('key frame selection for \"%s\"...'%(config['file_path']))

|

| 131 |

+

|

| 132 |

+

video_cap = cv2.VideoCapture(config['file_path'])

|

| 133 |

+

frame_num = int(video_cap.get(cv2.CAP_PROP_FRAME_COUNT))

|

| 134 |

+

|

| 135 |

+

# you can set extra_prompts for individual keyframe

|

| 136 |

+

# for example, extra_prompts[38] = ', closed eyes' to specify the person frame38 closes the eyes

|

| 137 |

+

extra_prompts = [''] * frame_num

|

| 138 |

+

|

| 139 |

+

keys = get_keyframe_ind(config['file_path'], frame_num, config['mininterv'], config['maxinterv'])

|

| 140 |

+

|

| 141 |

+

os.makedirs(config['save_path'], exist_ok=True)

|

| 142 |

+

os.makedirs(config['save_path']+'keys', exist_ok=True)

|

| 143 |

+

os.makedirs(config['save_path']+'video', exist_ok=True)

|

| 144 |

+

|

| 145 |

+

sublists = [keys[i:i+config['batch_size']-2] for i in range(2, len(keys), config['batch_size']-2)]

|

| 146 |

+

sublists[0].insert(0, keys[0])

|

| 147 |

+

sublists[0].insert(1, keys[1])

|

| 148 |

+

if len(sublists) > 1 and len(sublists[-1]) < 3:

|

| 149 |

+

add_num = 3 - len(sublists[-1])

|

| 150 |

+

sublists[-1] = sublists[-2][-add_num:] + sublists[-1]

|

| 151 |

+

sublists[-2] = sublists[-2][:-add_num]

|

| 152 |

+

|

| 153 |

+

if not sublists[-2]:

|

| 154 |

+

del sublists[-2]

|

| 155 |

+

|

| 156 |

+

print('processing %d batches:\nkeyframe indexes'%(len(sublists)), sublists)

|

| 157 |

+

|

| 158 |

+

print('\n' + '=' * 100)

|

| 159 |

+

print('video to video translation...')

|

| 160 |

+

|

| 161 |

+

batch_ind = 0

|

| 162 |

+

propagation_mode = batch_ind > 0

|

| 163 |

+

imgs = []

|

| 164 |

+

record_latents = []

|

| 165 |

+

video_cap = cv2.VideoCapture(config['file_path'])

|

| 166 |

+

for i in range(frame_num):

|

| 167 |

+

# prepare a batch of frame based on sublists

|

| 168 |

+

success, frame = video_cap.read()

|

| 169 |

+

frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

|

| 170 |

+

img = resize_image(frame, 512)

|

| 171 |

+

H, W, C = img.shape

|

| 172 |

+

Image.fromarray(img).save(os.path.join(config['save_path'], 'video/%04d.png'%(i)))

|

| 173 |

+

if i not in sublists[batch_ind]:

|

| 174 |

+

continue

|

| 175 |

+

|

| 176 |

+

imgs += [img]

|

| 177 |

+

if i != sublists[batch_ind][-1]:

|

| 178 |

+

continue

|

| 179 |

+

|

| 180 |

+

print('processing batch [%d/%d] with %d frames'%(batch_ind+1, len(sublists), len(sublists[batch_ind])))

|

| 181 |

+

|

| 182 |

+

# prepare input

|

| 183 |

+

batch_size = len(imgs)

|

| 184 |

+

n_prompts = [n_prompt] * len(imgs)

|

| 185 |

+

prompts = [base_prompt + a_prompt + extra_prompts[ind] for ind in sublists[batch_ind]]

|

| 186 |

+

if propagation_mode: # restore the extra_prompts from previous batch

|

| 187 |

+

assert len(imgs) == len(sublists[batch_ind]) + 2

|

| 188 |

+

prompts = ref_prompt + prompts

|

| 189 |

+

|

| 190 |

+

prompt_embeds = pipe._encode_prompt(

|

| 191 |

+

prompts,

|

| 192 |

+

device,

|

| 193 |

+

1,

|

| 194 |

+

do_classifier_free_guidance,

|

| 195 |

+

n_prompts,

|

| 196 |

+

)

|

| 197 |

+

|

| 198 |

+

imgs_torch = torch.cat([numpy2tensor(img) for img in imgs], dim=0)

|

| 199 |

+

edges = torch.cat([numpy2tensor(apply_control(img, detector, config)[:, :, None]) for img in imgs], dim=0)

|

| 200 |

+

edges = edges.repeat(1,3,1,1).cuda() * 0.5 + 0.5

|

| 201 |

+

if do_classifier_free_guidance:

|

| 202 |

+

edges = torch.cat([edges.to(pipe.unet.dtype)] * 2)

|

| 203 |

+

|

| 204 |

+

if config['use_salinecy']:

|

| 205 |

+

saliency = get_saliency(imgs, sod_model, dilate)

|

| 206 |

+

else:

|

| 207 |

+

saliency = None

|

| 208 |

+

|

| 209 |

+

# prepare parameters for inter-frame and intra-frame consistency

|

| 210 |

+

flows, occs, attn_mask, interattn_paras = get_flow_and_interframe_paras(flow_model, imgs)

|

| 211 |

+

correlation_matrix = get_intraframe_paras(pipe, imgs_torch, frescoProc,

|

| 212 |

+

prompt_embeds, seed = config['seed'])

|

| 213 |

+

|

| 214 |

+

'''

|

| 215 |

+

Flexible settings for attention:

|

| 216 |

+

* Turn off FRESCO-guided attention: frescoProc.controller.disable_controller()

|

| 217 |

+

Then you can turn on one specific attention submodule

|

| 218 |

+

* Turn on Cross-frame attention: frescoProc.controller.enable_cfattn(attn_mask)

|

| 219 |

+

* Turn on Spatial-guided attention: frescoProc.controller.enable_intraattn()

|

| 220 |

+

* Turn on Temporal-guided attention: frescoProc.controller.enable_interattn(interattn_paras)

|

| 221 |

+

|

| 222 |

+

Flexible settings for optimization:

|

| 223 |

+

* Turn off Spatial-guided optimization: set optimize_temporal = False in apply_FRESCO_opt()

|

| 224 |

+

* Turn off Temporal-guided optimization: set correlation_matrix = [] in apply_FRESCO_opt()

|

| 225 |

+

* Turn off FRESCO-guided optimization: disable_FRESCO_opt(pipe)

|

| 226 |

+

|

| 227 |

+

Flexible settings for background smoothing:

|

| 228 |

+

* Turn off background smoothing: set saliency = None in apply_FRESCO_opt()

|

| 229 |

+

'''

|

| 230 |

+

# Turn on all FRESCO support

|

| 231 |

+

frescoProc.controller.enable_controller(interattn_paras=interattn_paras, attn_mask=attn_mask)

|

| 232 |

+

apply_FRESCO_opt(pipe, steps = timesteps[:config['end_opt_step']],

|

| 233 |

+

flows = flows, occs = occs, correlation_matrix=correlation_matrix,

|

| 234 |

+

saliency=saliency, optimize_temporal = True)

|

| 235 |

+

|

| 236 |

+

gc.collect()

|

| 237 |

+

torch.cuda.empty_cache()

|

| 238 |

+

|

| 239 |

+

# run!

|

| 240 |

+

latents = inference(pipe, controlnet, frescoProc,

|

| 241 |

+

imgs_torch, prompt_embeds, edges, timesteps,

|

| 242 |

+

cond_scale, config['num_inference_steps'], config['num_warmup_steps'],

|

| 243 |

+

do_classifier_free_guidance, config['seed'], guidance_scale, config['use_controlnet'],

|

| 244 |

+

record_latents, propagation_mode,

|

| 245 |

+

flows = flows, occs = occs, saliency=saliency, repeat_noise=True)

|

| 246 |

+

|

| 247 |

+

gc.collect()

|

| 248 |

+

torch.cuda.empty_cache()

|

| 249 |

+

|

| 250 |

+

with torch.no_grad():

|

| 251 |

+

image = pipe.vae.decode(latents / pipe.vae.config.scaling_factor, return_dict=False)[0]

|

| 252 |

+

image = torch.clamp(image, -1 , 1)

|

| 253 |

+

save_imgs = tensor2numpy(image)

|

| 254 |

+

bias = 2 if propagation_mode else 0

|

| 255 |

+

for ind, num in enumerate(sublists[batch_ind]):

|

| 256 |

+

Image.fromarray(save_imgs[ind+bias]).save(os.path.join(config['save_path'], 'keys/%04d.png'%(num)))

|

| 257 |

+

|

| 258 |

+

gc.collect()

|

| 259 |

+

torch.cuda.empty_cache()

|

| 260 |

+

|

| 261 |

+

batch_ind += 1

|

| 262 |

+

# current batch uses the last frame of the previous batch as ref

|

| 263 |

+

ref_prompt= [prompts[0], prompts[-1]]

|

| 264 |

+

imgs = [imgs[0], imgs[-1]]

|

| 265 |

+

propagation_mode = batch_ind > 0

|

| 266 |

+

if batch_ind == len(sublists):

|

| 267 |

+

gc.collect()

|

| 268 |

+

torch.cuda.empty_cache()

|

| 269 |

+

break

|

| 270 |

+

return keys

|

| 271 |

+

|

| 272 |

+

def run_full_video_translation(config, keys):

|

| 273 |

+

print('\n' + '=' * 100)

|

| 274 |

+

if not config['run_ebsynth']:

|

| 275 |

+

print('to translate full video with ebsynth, install ebsynth and run:')

|

| 276 |

+

else:

|

| 277 |

+

print('translating full video with:')

|

| 278 |

+

|

| 279 |

+

video_cap = cv2.VideoCapture(config['file_path'])

|

| 280 |

+

fps = int(video_cap.get(cv2.CAP_PROP_FPS))

|

| 281 |

+

o_video = os.path.join(config['save_path'], 'blend.mp4')

|

| 282 |

+

max_process = config['max_process']

|

| 283 |

+

save_path = config['save_path']

|

| 284 |

+

key_ind = io.StringIO()

|

| 285 |

+

for k in keys:

|

| 286 |

+

print('%d'%(k), end=' ', file=key_ind)

|

| 287 |

+

cmd = (

|

| 288 |

+

f'python video_blend.py {save_path} --key keys '

|

| 289 |

+

f'--key_ind {key_ind.getvalue()} --output {o_video} --fps {fps} '

|

| 290 |

+

f'--n_proc {max_process} -ps')

|

| 291 |

+

|

| 292 |

+

print('\n```')

|

| 293 |

+

print(cmd)

|

| 294 |

+

print('```')

|

| 295 |

+

|

| 296 |

+

if config['run_ebsynth']:

|

| 297 |

+

os.system(cmd)

|

| 298 |

+

|

| 299 |

+

print('\n' + '=' * 100)

|

| 300 |

+

print('Done')

|

| 301 |

+

|

| 302 |

+

if __name__ == '__main__':

|

| 303 |

+

parser = argparse.ArgumentParser()

|

| 304 |

+

parser.add_argument('config_path', type=str,

|

| 305 |

+

default='./config/config_carturn.yaml',

|

| 306 |

+

help='The configuration file.')

|

| 307 |

+

opt = parser.parse_args()

|