Upload 243 files

This view is limited to 50 files because it contains too many changes.

- .gitattributes +3 -0

- img/docker_logs.png +0 -0

- img/langchain+chatglm.png +3 -0

- img/langchain+chatglm2.png +0 -0

- img/qr_code_36.jpg +0 -0

- img/qr_code_37.jpg +0 -0

- img/qr_code_38.jpg +0 -0

- img/qr_code_39.jpg +0 -0

- img/vue_0521_0.png +0 -0

- img/vue_0521_1.png +3 -0

- img/vue_0521_2.png +3 -0

- img/webui_0419.png +0 -0

- img/webui_0510_0.png +0 -0

- img/webui_0510_1.png +0 -0

- img/webui_0510_2.png +0 -0

- img/webui_0521_0.png +0 -0

- loader/RSS_loader.py +54 -0

- loader/__init__.py +14 -0

- loader/__pycache__/__init__.cpython-310.pyc +0 -0

- loader/__pycache__/__init__.cpython-311.pyc +0 -0

- loader/__pycache__/dialogue.cpython-310.pyc +0 -0

- loader/__pycache__/image_loader.cpython-310.pyc +0 -0

- loader/__pycache__/image_loader.cpython-311.pyc +0 -0

- loader/__pycache__/pdf_loader.cpython-310.pyc +0 -0

- loader/dialogue.py +131 -0

- loader/image_loader.py +42 -0

- loader/pdf_loader.py +58 -0

- models/__init__.py +4 -0

- models/__pycache__/__init__.cpython-310.pyc +0 -0

- models/__pycache__/chatglm_llm.cpython-310.pyc +0 -0

- models/__pycache__/fastchat_openai_llm.cpython-310.pyc +0 -0

- models/__pycache__/llama_llm.cpython-310.pyc +0 -0

- models/__pycache__/moss_llm.cpython-310.pyc +0 -0

- models/__pycache__/shared.cpython-310.pyc +0 -0

- models/base/__init__.py +13 -0

- models/base/__pycache__/__init__.cpython-310.pyc +0 -0

- models/base/__pycache__/base.cpython-310.pyc +0 -0

- models/base/__pycache__/remote_rpc_model.cpython-310.pyc +0 -0

- models/base/base.py +41 -0

- models/base/lavis_blip2_multimodel.py +26 -0

- models/base/remote_rpc_model.py +33 -0

- models/chatglm_llm.py +83 -0

- models/fastchat_openai_llm.py +137 -0

- models/llama_llm.py +185 -0

- models/loader/__init__.py +2 -0

- models/loader/__pycache__/__init__.cpython-310.pyc +0 -0

- models/loader/__pycache__/args.cpython-310.pyc +0 -0

- models/loader/__pycache__/loader.cpython-310.pyc +0 -0

- models/loader/args.py +55 -0

- models/loader/loader.py +447 -0

.gitattributes

CHANGED

```diff
@@ -33,3 +33,6 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+img/langchain+chatglm.png filter=lfs diff=lfs merge=lfs -text
+img/vue_0521_1.png filter=lfs diff=lfs merge=lfs -text
+img/vue_0521_2.png filter=lfs diff=lfs merge=lfs -text
```

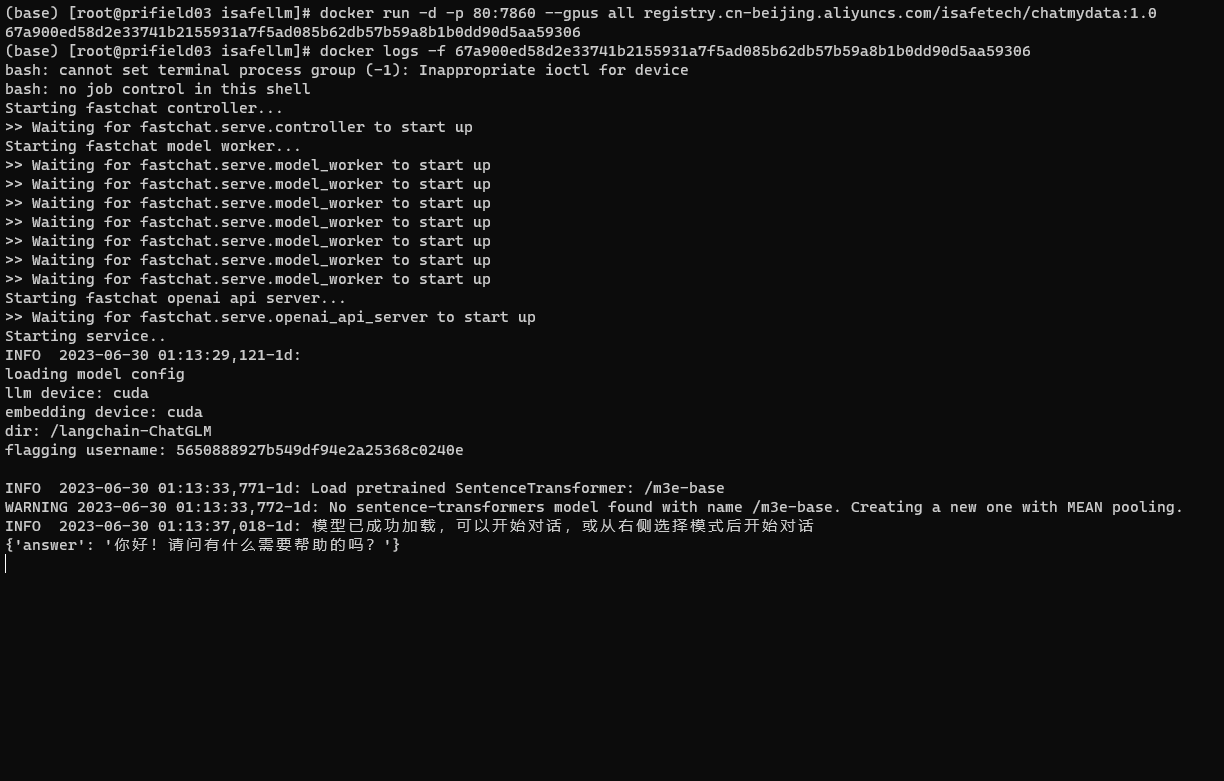

img/docker_logs.png

ADDED

img/langchain+chatglm.png

ADDED (stored with Git LFS)

img/langchain+chatglm2.png

ADDED

img/qr_code_36.jpg

ADDED

img/qr_code_37.jpg

ADDED

img/qr_code_38.jpg

ADDED

img/qr_code_39.jpg

ADDED

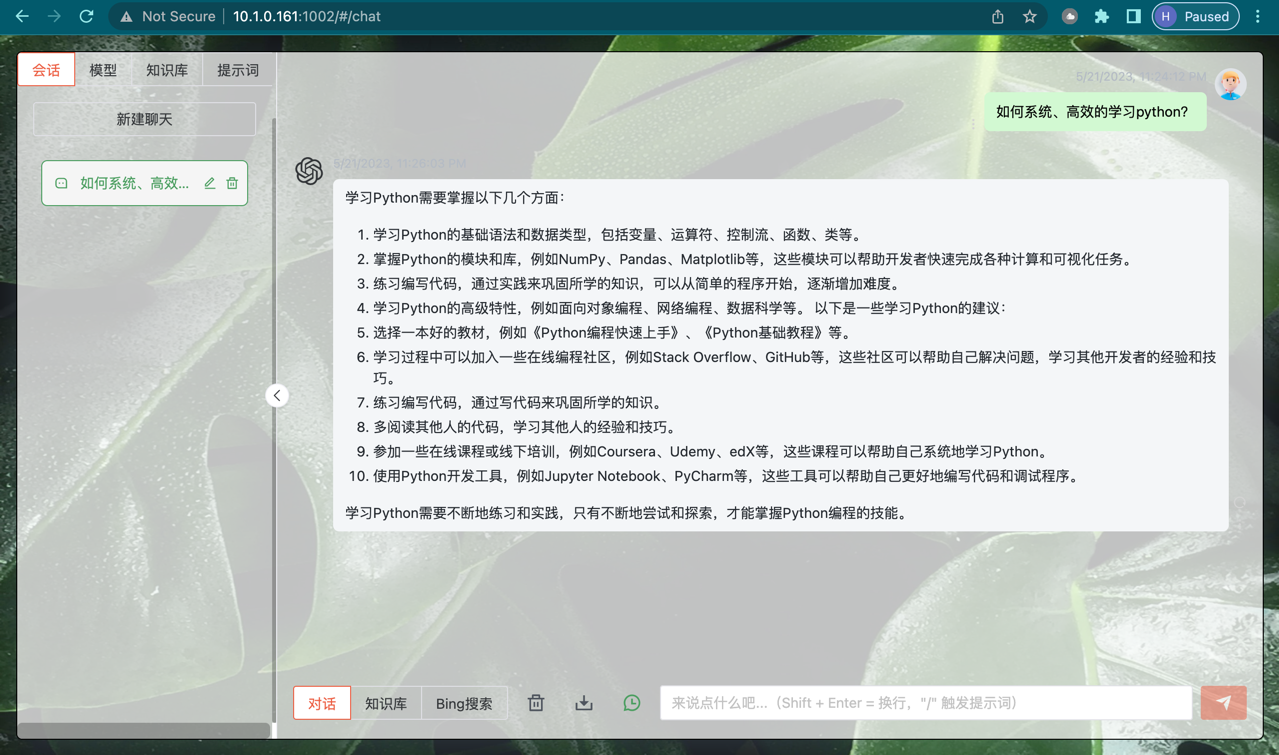

img/vue_0521_0.png

ADDED

img/vue_0521_1.png

ADDED (stored with Git LFS)

img/vue_0521_2.png

ADDED (stored with Git LFS)

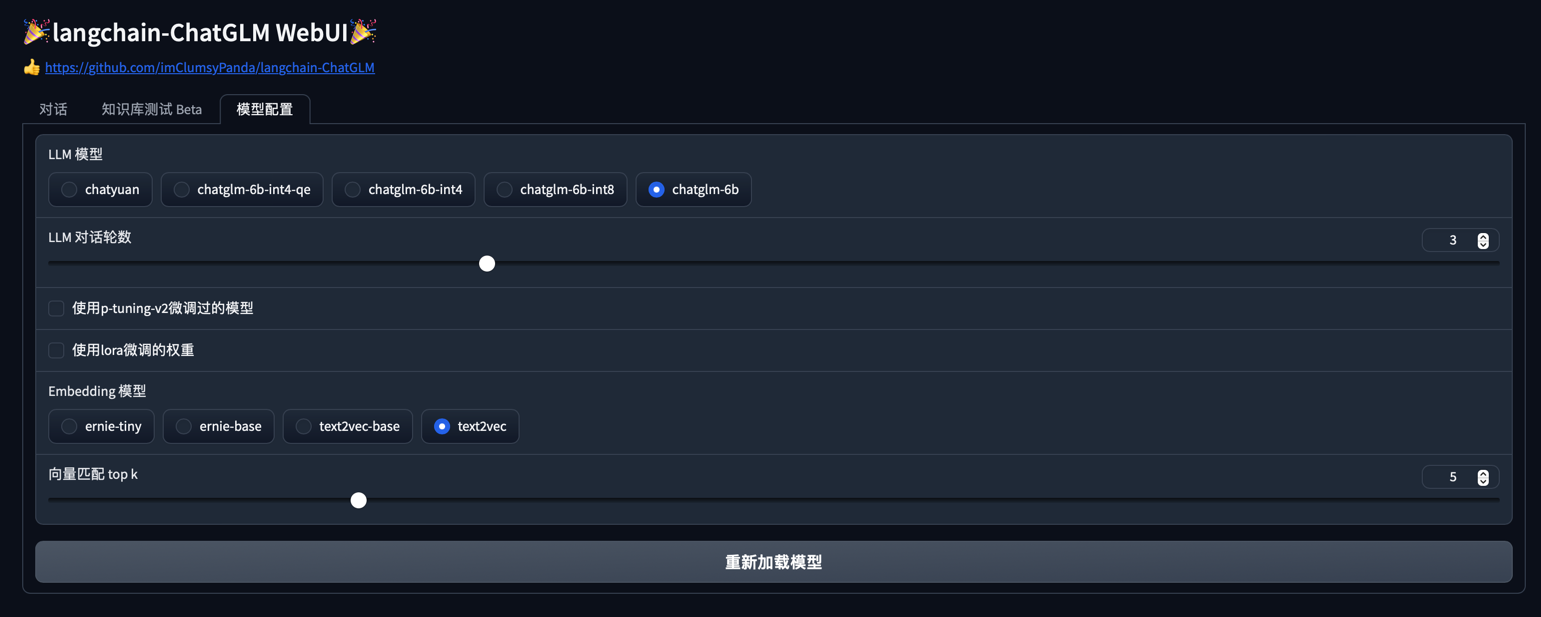

img/webui_0419.png

ADDED

img/webui_0510_0.png

ADDED

img/webui_0510_1.png

ADDED

img/webui_0510_2.png

ADDED

img/webui_0521_0.png

ADDED

loader/RSS_loader.py

ADDED

@@ -0,0 +1,54 @@

```python
from langchain.docstore.document import Document
import feedparser
import html2text
import ssl
import time
import warnings


class RSS_Url_loader:
    def __init__(self, urls=None, interval=60):
        '''Accepts `urls` either as a list of URLs or as a single URL string.'''
        self.urls = []
        self.interval = interval
        if urls is not None:
            try:
                if isinstance(urls, str):
                    urls = [urls]
                elif isinstance(urls, list):
                    pass
                else:
                    raise TypeError('urls must be a list or a string.')
                self.urls = urls
            except TypeError:
                # Creating a Warning object alone does nothing; emit it so the caller actually sees it.
                warnings.warn('urls must be a list or a string.')

    # The scheduling code may still need other classes, so it is not exposed publicly for now.
    def scheduled_execution(self):
        while True:
            docs = self.load()
            # yield instead of return, otherwise the loop exits on the first pass and never sleeps
            yield docs
            time.sleep(self.interval)

    def load(self):
        # Note: this turns off HTTPS certificate verification for the whole process.
        if hasattr(ssl, '_create_unverified_context'):
            ssl._create_default_https_context = ssl._create_unverified_context
        documents = []
        for url in self.urls:
            parsed = feedparser.parse(url)
            for entry in parsed.entries:
                if "content" in entry:
                    data = entry.content[0].value
                else:
                    # .get() avoids an AttributeError when an entry has no description/summary
                    data = entry.get("description") or entry.get("summary", "")
                data = html2text.html2text(data)
                metadata = {"title": entry.title, "link": entry.link}
                documents.append(Document(page_content=data, metadata=metadata))
        return documents


if __name__ == "__main__":
    # The urls should eventually come from the config file or be set in the user interface.
    urls = ["https://www.zhihu.com/rss", "https://www.36kr.com/feed"]
    loader = RSS_Url_loader(urls)
    docs = loader.load()
    for doc in docs:
        print(doc)
```
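`load()` takes each entry's body from `content[0].value` when present and otherwise falls back to the description or summary. The sketch below reproduces only that selection step, using plain dicts as stand-ins for feedparser entries (an assumption made so it runs offline without feedparser); the `html2text` conversion is left out, and `pick_entry_body` / `entries_to_records` are hypothetical helper names.

```python
from typing import Any, Dict, List


def pick_entry_body(entry: Dict[str, Any]) -> str:
    """Return the raw HTML body of one feed entry, following RSS_Url_loader.load()."""
    # Prefer full content; the loader uses the first content block.
    # (The emptiness check is an extra guard not present in the loader.)
    if "content" in entry and entry["content"]:
        return entry["content"][0]["value"]
    # Otherwise fall back to description, then summary, then an empty string.
    return entry.get("description") or entry.get("summary") or ""


def entries_to_records(entries: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Pair each body with the same title/link metadata the loader attaches."""
    return [
        {"page_content": pick_entry_body(e),
         "metadata": {"title": e.get("title"), "link": e.get("link")}}
        for e in entries
    ]


if __name__ == "__main__":
    sample = [
        {"title": "A", "link": "https://example.com/a",
         "content": [{"value": "<p>full text</p>"}], "summary": "short"},
        {"title": "B", "link": "https://example.com/b", "summary": "<p>only a summary</p>"},
    ]
    for record in entries_to_records(sample):
        print(record)
```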

loader/__init__.py

ADDED

@@ -0,0 +1,14 @@

```python
from .image_loader import UnstructuredPaddleImageLoader
from .pdf_loader import UnstructuredPaddlePDFLoader
from .dialogue import (
    Person,
    Dialogue,
    Turn,
    DialogueLoader
)

__all__ = [
    "UnstructuredPaddleImageLoader",
    "UnstructuredPaddlePDFLoader",
    "DialogueLoader",
]
```

loader/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (414 Bytes).

loader/__pycache__/__init__.cpython-311.pyc

ADDED

Binary file (531 Bytes).

loader/__pycache__/dialogue.cpython-310.pyc

ADDED

Binary file (4.95 kB).

loader/__pycache__/image_loader.cpython-310.pyc

ADDED

Binary file (2.23 kB).

loader/__pycache__/image_loader.cpython-311.pyc

ADDED

Binary file (3.94 kB).

loader/__pycache__/pdf_loader.cpython-310.pyc

ADDED

Binary file (2.57 kB).

loader/dialogue.py

ADDED

@@ -0,0 +1,131 @@

```python
import json
from abc import ABC
from typing import List
from langchain.docstore.document import Document
from langchain.document_loaders.base import BaseLoader


class Person:
    def __init__(self, name, age):
        self.name = name
        self.age = age


class Dialogue:
    """
    Build an abstract dialogue model using classes and methods to represent different dialogue elements.
    This class serves as a fundamental framework for constructing dialogue models.
    """

    def __init__(self, file_path: str):
        self.file_path = file_path
        self.turns = []

    def add_turn(self, turn):
        """
        Append a turn (one speaker's message) to the dialogue.
        :param turn: a Turn instance
        :return: None
        """
        self.turns.append(turn)

    def parse_dialogue(self):
        """
        The parse_dialogue function reads the specified dialogue file and parses each dialogue turn line by line.
        For each turn, the function extracts the name of the speaker and the message content from the text,
        creating a Turn instance. If the speaker is not already present in the participants dictionary,
        a new Person instance is created. Finally, the parsed Turn instance is added to the Dialogue object.

        Please note that this sample code assumes each turn in the file spans two lines,
        "<speaker>:" followed by "<message>", with turns separated by a blank line.
        If your file has a different format or includes other metadata,
        you may need to adjust the parsing logic accordingly.
        """
        participants = {}
        speaker_name = None
        message = None

        with open(self.file_path, encoding='utf-8') as file:
            lines = file.readlines()
            for i, line in enumerate(lines):
                line = line.strip()
                if not line:
                    continue

                if speaker_name is None:
                    speaker_name, _ = line.split(':', 1)
                elif message is None:
                    message = line
                    if speaker_name not in participants:
                        participants[speaker_name] = Person(speaker_name, None)

                    speaker = participants[speaker_name]
                    turn = Turn(speaker, message)
                    self.add_turn(turn)

                    # Reset speaker_name and message for the next turn
                    speaker_name = None
                    message = None

    def display(self):
        for turn in self.turns:
            print(f"{turn.speaker.name}: {turn.message}")

    def export_to_file(self, file_path):
        with open(file_path, 'w', encoding='utf-8') as file:
            for turn in self.turns:
                file.write(f"{turn.speaker.name}: {turn.message}\n")

    def to_dict(self):
        dialogue_dict = {"turns": []}
        for turn in self.turns:
            turn_dict = {
                "speaker": turn.speaker.name,
                "message": turn.message
            }
            dialogue_dict["turns"].append(turn_dict)
        return dialogue_dict

    def to_json(self):
        dialogue_dict = self.to_dict()
        return json.dumps(dialogue_dict, ensure_ascii=False, indent=2)

    def participants_to_export(self):
        """
        Return the names of all speakers in the dialogue as a comma-separated string.
        :return: str
        """
        participants = set()
        for turn in self.turns:
            participants.add(turn.speaker.name)
        return ', '.join(participants)


class Turn:
    def __init__(self, speaker, message):
        self.speaker = speaker
        self.message = message


class DialogueLoader(BaseLoader, ABC):
    """Load dialogue."""

    def __init__(self, file_path: str):
        """Initialize with dialogue."""
        self.file_path = file_path
        dialogue = Dialogue(file_path=file_path)
        dialogue.parse_dialogue()
        self.dialogue = dialogue

    def load(self) -> List[Document]:
        """Load from dialogue."""
        documents = []
        participants = self.dialogue.participants_to_export()

        for turn in self.dialogue.turns:
            metadata = {"source": f"Dialogue File:{self.dialogue.file_path},"
                                  f"speaker:{turn.speaker.name},"
                                  f"participant:{participants}"}
            turn_document = Document(page_content=turn.message, metadata=metadata.copy())
            documents.append(turn_document)

        return documents
```
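`parse_dialogue()` alternates between reading a `<speaker>:` line and a `<message>` line, skipping blank lines. The stdlib-only sketch below replays that same state machine on an in-memory string so the resulting turns can be inspected without LangChain or a file on disk; `parse_turns` and the sample text are hypothetical.

```python
from typing import List, Optional, Tuple


def parse_turns(text: str) -> List[Tuple[str, str]]:
    """Follow Dialogue.parse_dialogue(): return (speaker, message) pairs."""
    turns: List[Tuple[str, str]] = []
    speaker: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # blank separator lines are skipped
        if speaker is None:
            # Everything after the first ':' on the speaker line is discarded.
            speaker, _ = line.split(":", 1)
        else:
            # The next non-empty line is the whole message; then reset for the next turn.
            turns.append((speaker, line))
            speaker = None
    return turns


sample = "Alice:\r\nHi, Bob.\r\n\r\nBob:\r\nHello!\r\n\r\nAlice:\r\nBye.\r\n"
turns = parse_turns(sample)
for name, message in turns:
    print(f"{name}: {message}")
print(sorted({name for name, _ in turns}))
```

A message that wraps onto a second line would break this scheme: the second line is read as the next speaker line and, if it contains no colon, `split` fails to unpack. `sorted` is used for the participant list because `participants_to_export` joins a `set`, whose iteration order for strings can differ between runs.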

loader/image_loader.py

ADDED

@@ -0,0 +1,42 @@

```python
"""Loader that loads image files."""
from typing import List

from langchain.document_loaders.unstructured import UnstructuredFileLoader
from paddleocr import PaddleOCR
import os
import nltk
from configs.model_config import NLTK_DATA_PATH

nltk.data.path = [NLTK_DATA_PATH] + nltk.data.path


class UnstructuredPaddleImageLoader(UnstructuredFileLoader):
    """Loader that uses unstructured to load image files, such as PNGs and JPGs."""

    def _get_elements(self) -> List:
        def image_ocr_txt(filepath, dir_path="tmp_files"):
            full_dir_path = os.path.join(os.path.dirname(filepath), dir_path)
            if not os.path.exists(full_dir_path):
                os.makedirs(full_dir_path)
            filename = os.path.split(filepath)[-1]
            ocr = PaddleOCR(use_angle_cls=True, lang="ch", use_gpu=False, show_log=False)
            result = ocr.ocr(img=filepath)

            # Skip empty/None entries (no text detected) before collecting the recognized strings.
            ocr_result = [i[1][0] for line in result if line for i in line]
            txt_file_path = os.path.join(full_dir_path, "%s.txt" % (filename))
            with open(txt_file_path, 'w', encoding='utf-8') as fout:
                fout.write("\n".join(ocr_result))
            return txt_file_path

        txt_file_path = image_ocr_txt(self.file_path)
        from unstructured.partition.text import partition_text
        return partition_text(filename=txt_file_path, **self.unstructured_kwargs)


if __name__ == "__main__":
    import sys
    sys.path.append(os.path.dirname(os.path.dirname(__file__)))
    filepath = os.path.join(os.path.dirname(os.path.dirname(__file__)), "knowledge_base", "samples", "content", "test.jpg")
    loader = UnstructuredPaddleImageLoader(filepath, mode="elements")
    docs = loader.load()
    for doc in docs:
        print(doc)
```
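Both Paddle loaders flatten the `ocr.ocr(...)` output with a comprehension of the form `[i[1][0] for line in result for i in line]`. That expression assumes a nested shape: one list per image, each holding `[box, (text, confidence)]` items. The sketch below runs the same flattening on a hand-written sample of that assumed shape (illustrative values, not real OCR output) and guards against a `None` page entry, which is assumed here to occur when nothing is detected; `flatten_ocr` is a hypothetical name.

```python
from typing import Any, List, Optional, Sequence


def flatten_ocr(result: Optional[Sequence[Any]]) -> List[str]:
    """Collect recognized text strings, in order, from a PaddleOCR-style result."""
    texts: List[str] = []
    for page in result or []:
        if not page:  # guard: a page entry may be None/empty when nothing was detected
            continue
        for _box, (text, _confidence) in page:
            texts.append(text)
    return texts


# Hand-written sample in the assumed shape: [[ [box, (text, score)], ... ]]
sample = [[
    [[[0, 0], [10, 0], [10, 5], [0, 5]], ("first line", 0.98)],
    [[[0, 6], [10, 6], [10, 11], [0, 11]], ("second line", 0.95)],
]]
print("\n".join(flatten_ocr(sample)))
print(flatten_ocr([None]))
```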

loader/pdf_loader.py

ADDED

@@ -0,0 +1,58 @@

```python
"""Loader that loads PDF files."""
from typing import List

from langchain.document_loaders.unstructured import UnstructuredFileLoader
from paddleocr import PaddleOCR
import os
import fitz
import nltk
from configs.model_config import NLTK_DATA_PATH

nltk.data.path = [NLTK_DATA_PATH] + nltk.data.path


class UnstructuredPaddlePDFLoader(UnstructuredFileLoader):
    """Loader that uses unstructured to load PDF files, OCR-ing images embedded in each page with PaddleOCR."""

    def _get_elements(self) -> List:
        def pdf_ocr_txt(filepath, dir_path="tmp_files"):
            full_dir_path = os.path.join(os.path.dirname(filepath), dir_path)
            if not os.path.exists(full_dir_path):
                os.makedirs(full_dir_path)
            ocr = PaddleOCR(use_angle_cls=True, lang="ch", use_gpu=False, show_log=False)
            doc = fitz.open(filepath)
            txt_file_path = os.path.join(full_dir_path, f"{os.path.split(filepath)[-1]}.txt")
            img_name = os.path.join(full_dir_path, 'tmp.png')
            with open(txt_file_path, 'w', encoding='utf-8') as fout:
                for i in range(doc.page_count):
                    page = doc[i]
                    # Plain-text extraction (the default "text" mode)
                    text = page.get_text()
                    fout.write(text)
                    fout.write("\n")

                    img_list = page.get_images()
                    for img in img_list:
                        pix = fitz.Pixmap(doc, img[0])
                        # 4+ colour channels (e.g. CMYK) cannot be saved as PNG; convert to RGB first
                        if pix.n - pix.alpha >= 4:
                            pix = fitz.Pixmap(fitz.csRGB, pix)
                        pix.save(img_name)

                        result = ocr.ocr(img_name)
                        # Skip empty/None entries (no text detected)
                        ocr_result = [i[1][0] for line in result if line for i in line]
                        fout.write("\n".join(ocr_result))
            if os.path.exists(img_name):
                os.remove(img_name)
            return txt_file_path

        txt_file_path = pdf_ocr_txt(self.file_path)
        from unstructured.partition.text import partition_text
        return partition_text(filename=txt_file_path, **self.unstructured_kwargs)


if __name__ == "__main__":
    import sys
    sys.path.append(os.path.dirname(os.path.dirname(__file__)))
    filepath = os.path.join(os.path.dirname(os.path.dirname(__file__)), "knowledge_base", "samples", "content", "test.pdf")
    loader = UnstructuredPaddlePDFLoader(filepath, mode="elements")
    docs = loader.load()
    for doc in docs:
        print(doc)
```
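The PDF loader converts a pixmap to RGB when `pix.n - pix.alpha >= 4`. In PyMuPDF, `pix.n` counts all components including alpha, so the difference is the number of colour channels: 1 for grayscale, 3 for RGB, 4 for CMYK. PNG cannot store CMYK, hence the conversion before `pix.save(...)`. The stdlib-only sketch below tabulates that rule; `needs_rgb_conversion` is a hypothetical helper, not a PyMuPDF API.

```python
def needs_rgb_conversion(n: int, alpha: int) -> bool:
    """Apply the loader's test: True when the pixmap has 4+ colour channels (e.g. CMYK)."""
    colour_channels = n - alpha  # pix.n includes the alpha channel, if any
    return colour_channels >= 4


cases = {
    "gray": (1, 0),
    "gray+alpha": (2, 1),
    "rgb": (3, 0),
    "rgb+alpha": (4, 1),
    "cmyk": (4, 0),
    "cmyk+alpha": (5, 1),
}
for name, (n, alpha) in cases.items():
    print(f"{name:11} n={n} alpha={alpha} -> convert={needs_rgb_conversion(n, alpha)}")
```

Note that `rgb+alpha` has `n == 4` yet is not converted, which is why the test subtracts `pix.alpha` instead of checking `pix.n` alone.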

models/__init__.py

ADDED

@@ -0,0 +1,4 @@

```python
from .chatglm_llm import ChatGLM
from .llama_llm import LLamaLLM
from .moss_llm import MOSSLLM
from .fastchat_openai_llm import FastChatOpenAILLM
```

models/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (338 Bytes).

models/__pycache__/chatglm_llm.cpython-310.pyc

ADDED

Binary file (2.66 kB).

models/__pycache__/fastchat_openai_llm.cpython-310.pyc

ADDED

Binary file (4.45 kB).

models/__pycache__/llama_llm.cpython-310.pyc

ADDED

Binary file (6.45 kB).

models/__pycache__/moss_llm.cpython-310.pyc

ADDED

Binary file (3.88 kB).

models/__pycache__/shared.cpython-310.pyc

ADDED

Binary file (1.48 kB).

models/base/__init__.py

ADDED

@@ -0,0 +1,13 @@

```python
from models.base.base import (
    AnswerResult,
    BaseAnswer
)
from models.base.remote_rpc_model import (
    RemoteRpcModel
)

__all__ = [
    "AnswerResult",
    "BaseAnswer",
    "RemoteRpcModel",
]
```

models/base/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (334 Bytes).

models/base/__pycache__/base.cpython-310.pyc

ADDED

Binary file (1.79 kB).

models/base/__pycache__/remote_rpc_model.cpython-310.pyc

ADDED

Binary file (1.59 kB).

models/base/base.py

ADDED

@@ -0,0 +1,41 @@

```python
from abc import ABC, abstractmethod
from typing import Optional, List
import traceback
from collections import deque
from queue import Queue
from threading import Thread

import torch
import transformers
from models.loader import LoaderCheckPoint


class AnswerResult:
    """
    Message entity
    """
    history: List[List[str]] = []
    llm_output: Optional[dict] = None


class BaseAnswer(ABC):
    """Upper-layer business wrapper that provides a unified API for generating results."""

    @property
    @abstractmethod
    def _check_point(self) -> LoaderCheckPoint:
        """Return _check_point of llm."""

    @property
    @abstractmethod
    def _history_len(self) -> int:
        """Return _history_len of llm."""

    @abstractmethod
    def set_history_len(self, history_len: int) -> None:
        """Set _history_len of llm."""

    def generatorAnswer(self, prompt: str,
                        history: List[List[str]] = [],
                        streaming: bool = False):
        pass
```
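`BaseAnswer.generatorAnswer` declares a list default, `history: List[List[str]] = []`. Python evaluates a default value once, when the function is defined, so an override that keeps this default and extends `history` in place with `history += ...` would keep appending to the same list across calls that omit the argument. The sketch below, with hypothetical function names, shows that effect next to the usual `None`-sentinel alternative.

```python
from typing import List, Optional


def answer_shared_default(prompt: str, history: List[List[str]] = []) -> List[List[str]]:
    # `+=` mutates the single default list object shared by every call.
    history += [[prompt, f"echo: {prompt}"]]
    return history


def answer_fresh_default(prompt: str,
                         history: Optional[List[List[str]]] = None) -> List[List[str]]:
    # A None sentinel gives each call that omits `history` its own new list.
    if history is None:
        history = []
    history += [[prompt, f"echo: {prompt}"]]
    return history


first = answer_shared_default("a")
second = answer_shared_default("b")
print(len(second), first is second)  # prints: 2 True

fresh_first = answer_fresh_default("a")
fresh_second = answer_fresh_default("b")
print(len(fresh_second), fresh_first is fresh_second)  # prints: 1 False
```

When a caller does pass its own list, both versions extend that list in place, so callers that supply history see the same behaviour either way.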

models/base/lavis_blip2_multimodel.py

ADDED

@@ -0,0 +1,26 @@

```python
from abc import ABC, abstractmethod
from typing import Any
import torch

from models.base import (BaseAnswer,
                         AnswerResult)


class MultimodalAnswerResult(AnswerResult):
    image: str = None


class LavisBlip2Multimodal(BaseAnswer, ABC):

    @property
    @abstractmethod
    def _blip2_instruct(self) -> Any:
        """Return _blip2_instruct of blip2."""

    @property
    @abstractmethod
    def _image_blip2_vis_processors(self) -> dict:
        """Return _image_blip2_vis_processors of blip2 image processors."""

    @abstractmethod
    def set_image_path(self, image_path: str):
        """Set the path of the image to process."""
```

models/base/remote_rpc_model.py

ADDED

@@ -0,0 +1,33 @@

```python
from abc import ABC, abstractmethod
import torch

from models.base import (BaseAnswer,
                         AnswerResult)


class MultimodalAnswerResult(AnswerResult):
    image: str = None


class RemoteRpcModel(BaseAnswer, ABC):

    @property
    @abstractmethod
    def _api_key(self) -> str:
        """Return _api_key of client."""

    @property
    @abstractmethod
    def _api_base_url(self) -> str:
        """Return the base URL of the client's API host."""

    @abstractmethod
    def set_api_key(self, api_key: str):
        """Set the API key."""

    @abstractmethod
    def set_api_base_url(self, api_base_url: str):
        """Set the API base URL."""

    @abstractmethod
    def call_model_name(self, model_name):
        """Set the name of the model the client calls."""
```
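`RemoteRpcModel` only declares accessors for an API key, a base URL and a model name; `FastChatOpenAILLM` fills them with defaults such as `http://localhost:8000/v1` and `chatglm-6b`. A client built on these would typically send an OpenAI-style chat-completions request. The sketch below assumes that schema (a `model` name plus a `messages` list of `{"role", "content"}` dicts, the same shape `_build_message_template()` returns) and the `/chat/completions` path. It only builds the URL and JSON body without sending anything, and `build_chat_request` is a hypothetical helper rather than code from this commit.

```python
import json
from typing import Dict, List, Tuple


def build_chat_request(api_base_url: str, model_name: str, prompt: str,
                       history: List[List[str]], history_len: int = 10,
                       temperature: float = 0.01) -> Tuple[str, str]:
    """Return (url, json_body) for an assumed OpenAI-style chat-completions call."""
    messages: List[Dict[str, str]] = []
    # Replay the most recent [user, assistant] pairs as alternating messages.
    recent = history[-history_len:] if history_len > 0 else []
    for user_msg, assistant_msg in recent:
        messages.append({"role": "user", "content": user_msg})
        messages.append({"role": "assistant", "content": assistant_msg})
    messages.append({"role": "user", "content": prompt})
    body = {"model": model_name, "messages": messages, "temperature": temperature}
    url = api_base_url.rstrip("/") + "/chat/completions"
    return url, json.dumps(body, ensure_ascii=False)


url, body = build_chat_request("http://localhost:8000/v1", "chatglm-6b",
                               "What is LangChain?", [["Hi", "Hello!"]])
print(url)
print(body)
```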

models/chatglm_llm.py

ADDED

@@ -0,0 +1,83 @@

```python
from abc import ABC
from langchain.llms.base import LLM
from typing import Optional, List
from models.loader import LoaderCheckPoint
from models.base import (BaseAnswer,
                         AnswerResult)


class ChatGLM(BaseAnswer, LLM, ABC):
    max_token: int = 10000
    temperature: float = 0.01
    top_p = 0.9
    checkPoint: LoaderCheckPoint = None
    # history = []
    history_len: int = 10

    def __init__(self, checkPoint: LoaderCheckPoint = None):
        super().__init__()
        self.checkPoint = checkPoint

    @property
    def _llm_type(self) -> str:
        return "ChatGLM"

    @property
    def _check_point(self) -> LoaderCheckPoint:
        return self.checkPoint

    @property
    def _history_len(self) -> int:
        return self.history_len

    def set_history_len(self, history_len: int = 10) -> None:
        self.history_len = history_len

    def _call(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        print(f"__call:{prompt}")
        response, _ = self.checkPoint.model.chat(
            self.checkPoint.tokenizer,
            prompt,
            history=[],
            max_length=self.max_token,
            temperature=self.temperature
        )
        print(f"response:{response}")
        print("+++++++++++++++++++++++++++++++++++")
        return response

    def generatorAnswer(self, prompt: str,
                        history: Optional[List[List[str]]] = None,
                        streaming: bool = False):
        # Fresh list per call: a shared `[]` default would be extended in place
        # by `history +=` below and leak turns between calls.
        if history is None:
            history = []

        if streaming:
            history += [[]]
            for inum, (stream_resp, _) in enumerate(self.checkPoint.model.stream_chat(
                    self.checkPoint.tokenizer,
                    prompt,
                    history=history[-self.history_len:-1] if self.history_len > 1 else [],
                    max_length=self.max_token,
                    temperature=self.temperature
            )):
                # self.checkPoint.clear_torch_cache()
                history[-1] = [prompt, stream_resp]
                answer_result = AnswerResult()
                answer_result.history = history
                answer_result.llm_output = {"answer": stream_resp}
                yield answer_result
        else:
            response, _ = self.checkPoint.model.chat(
                self.checkPoint.tokenizer,
                prompt,
                history=history[-self.history_len:] if self.history_len > 0 else [],
                max_length=self.max_token,
                temperature=self.temperature
            )
            self.checkPoint.clear_torch_cache()
            history += [[prompt, response]]
            answer_result = AnswerResult()
            answer_result.history = history
            answer_result.llm_output = {"answer": response}
            yield answer_result
```
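`ChatGLM.generatorAnswer` trims the history differently in each mode. Without streaming it sends `history[-history_len:]`. With streaming it first appends an empty placeholder turn and sends `history[-history_len:-1]`, which excludes the placeholder, so at most `history_len - 1` past turns reach the model. The stdlib sketch below (the `window` helper is hypothetical) reproduces both slices.

```python
from typing import List


def window(history: List[List[str]], history_len: int, streaming: bool) -> List[List[str]]:
    """Return the past turns ChatGLM.generatorAnswer would pass to the model."""
    if streaming:
        # generatorAnswer appends an empty placeholder before slicing; do the same on a copy.
        padded = history + [[]]
        return padded[-history_len:-1] if history_len > 1 else []
    return history[-history_len:] if history_len > 0 else []


past = [[f"q{i}", f"a{i}"] for i in range(5)]
print([turn[0] for turn in window(past, 3, streaming=False)])  # last 3 turns
print([turn[0] for turn in window(past, 3, streaming=True)])   # last 2 turns
```

With the default `history_len = 10`, a streaming call therefore sees one turn less context than a non-streaming call.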

models/fastchat_openai_llm.py

ADDED

|

@@ -0,0 +1,137 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

from abc import ABC
import requests
from typing import Optional, List
from langchain.llms.base import LLM

from models.loader import LoaderCheckPoint
from models.base import (RemoteRpcModel,
                         AnswerResult)
from typing import (
    Collection,
    Dict
)


def _build_message_template() -> Dict[str, str]:
    """
    :return: an empty chat-message structure with "role" and "content" keys
    """
    return {
        "role": "",
        "content": "",
    }


class FastChatOpenAILLM(RemoteRpcModel, LLM, ABC):
    api_base_url: str = "http://localhost:8000/v1"
    model_name: str = "chatglm-6b"
    max_token: int = 10000
    temperature: float = 0.01
    top_p = 0.9
    checkPoint: LoaderCheckPoint = None
    history = []
    history_len: int = 10

    def __init__(self, checkPoint: LoaderCheckPoint = None):
        super().__init__()
        self.checkPoint = checkPoint

    @property
    def _llm_type(self) -> str:
        return "FastChat"

    @property
    def _check_point(self) -> LoaderCheckPoint:
        return self.checkPoint

    @property
    def _history_len(self) -> int:
        return self.history_len

    def set_history_len(self, history_len: int = 10) -> None:
        self.history_len = history_len

    @property
    def _api_key(self) -> str:
        pass

    @property
    def _api_base_url(self) -> str:
        return self.api_base_url

    def set_api_key(self, api_key: str):
        pass

    def set_api_base_url(self, api_base_url: str):
        self.api_base_url = api_base_url

    def call_model_name(self, model_name):
        self.model_name = model_name

    def _call(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        print(f"__call:{prompt}")
        try:
            import openai
            # API keys are not supported yet, so a placeholder key is used
            openai.api_key = "EMPTY"
            openai.api_base = self.api_base_url
        except ImportError:
            raise ValueError(
                "Could not import openai python package. "
                "Please install it with `pip install openai`."
            )
        # create a chat completion
        completion = openai.ChatCompletion.create(
            model=self.model_name,
            messages=self.build_message_list(prompt)
        )
        print(f"response:{completion.choices[0].message.content}")
        print(f"+++++++++++++++++++++++++++++++++++")
        return completion.choices[0].message.content

    # Convert the dialogue history array into an OpenAI-style message list.
    # Earlier model replies are sent with the "assistant" role.
    def build_message_list(self, query, history=None) -> Collection[Dict[str, str]]:
        build_message_list: Collection[Dict[str, str]] = []
        if history is None:
            history = self.history
        history = history[-self.history_len:] if self.history_len > 0 else []
        for i, (old_query, response) in enumerate(history):
            user_build_message = _build_message_template()
            user_build_message['role'] = 'user'
            user_build_message['content'] = old_query
            assistant_build_message = _build_message_template()
            assistant_build_message['role'] = 'assistant'
            assistant_build_message['content'] = response
            build_message_list.append(user_build_message)
            build_message_list.append(assistant_build_message)

        user_build_message = _build_message_template()
        user_build_message['role'] = 'user'
        user_build_message['content'] = query
        build_message_list.append(user_build_message)
        return build_message_list

    def generatorAnswer(self, prompt: str,
                        history: Optional[List[List[str]]] = None,
                        streaming: bool = False):
        # Avoid a shared mutable default: `history += ...` below would
        # otherwise grow a single list across calls.
        if history is None:
            history = []

        try:
            import openai
            # API keys are not supported yet, so a placeholder key is used
            openai.api_key = "EMPTY"
            openai.api_base = self.api_base_url
        except ImportError:
            raise ValueError(
                "Could not import openai python package. "
                "Please install it with `pip install openai`."
            )
        # create a chat completion from the caller's history
        completion = openai.ChatCompletion.create(
            model=self.model_name,
            messages=self.build_message_list(prompt, history)
        )

        history += [[prompt, completion.choices[0].message.content]]
        answer_result = AnswerResult()
        answer_result.history = history
        answer_result.llm_output = {"answer": completion.choices[0].message.content}

        yield answer_result
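`build_message_list` turns the stored `[[old_query, response], ...]` pairs into the `messages` list that `_call` and `generatorAnswer` send to `openai.ChatCompletion.create`. The standalone sketch below reproduces that conversion without the class, `openai`, or a running FastChat server, so it can be checked in isolation. It is an illustration under stated assumptions, not the project's code: the function signature is hypothetical, and earlier replies are tagged with the `assistant` role, which is the role the OpenAI chat-completions format uses for model output.

```python
from typing import Dict, List, Sequence


def build_message_list(query: str,
                       history: Sequence[Sequence[str]],
                       history_len: int = 10) -> List[Dict[str, str]]:
    """Convert [[old_query, response], ...] plus a new query into chat messages."""
    messages: List[Dict[str, str]] = []
    # Keep only the most recent `history_len` turns; 0 disables history.
    recent = list(history)[-history_len:] if history_len > 0 else []
    for old_query, response in recent:
        messages.append({"role": "user", "content": old_query})
        # Earlier model replies use the "assistant" role.
        messages.append({"role": "assistant", "content": response})
    # The new question always comes last, as a user message.
    messages.append({"role": "user", "content": query})
    return messages
```

For example, with two stored turns and `history_len=1`, only the second turn plus the new query are sent: three messages in total.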

models/llama_llm.py

ADDED

@@ -0,0 +1,185 @@

from abc import ABC

from langchain.llms.base import LLM
import random
import torch
import transformers
from transformers.generation.logits_process import LogitsProcessor
from transformers.generation.utils import LogitsProcessorList, StoppingCriteriaList
from typing import Optional, List, Dict, Any
from models.loader import LoaderCheckPoint
from models.base import (BaseAnswer,
                         AnswerResult)


class InvalidScoreLogitsProcessor(LogitsProcessor):
    def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor) -> torch.FloatTensor:
        if torch.isnan(scores).any() or torch.isinf(scores).any():
            scores.zero_()
            scores[..., 5] = 5e4
        return scores


class LLamaLLM(BaseAnswer, LLM, ABC):
    checkPoint: LoaderCheckPoint = None
    # history = []
    history_len: int = 3
    max_new_tokens: int = 500
    num_beams: int = 1
    temperature: float = 0.5
    top_p: float = 0.4
    top_k: int = 10
    repetition_penalty: float = 1.2
    encoder_repetition_penalty: int = 1
    min_length: int = 0
    logits_processor: LogitsProcessorList = None
    stopping_criteria: Optional[StoppingCriteriaList] = None
    eos_token_id: Optional[List[int]] = [2]

    state: object = {'max_new_tokens': 50,
                     'seed': 1,
                     'temperature': 0, 'top_p': 0.1,
                     'top_k': 40, 'typical_p': 1,
                     'repetition_penalty': 1.2,
                     'encoder_repetition_penalty': 1,
                     'no_repeat_ngram_size': 0,
                     'min_length': 0,
                     'penalty_alpha': 0,
                     'num_beams': 1,
                     'length_penalty': 1,
                     'early_stopping': False, 'add_bos_token': True, 'ban_eos_token': False,
                     'truncation_length': 2048, 'custom_stopping_strings': '',
                     'cpu_memory': 0, 'auto_devices': False, 'disk': False, 'cpu': False, 'bf16': False,
                     'load_in_8bit': False, 'wbits': 'None', 'groupsize': 'None', 'model_type': 'None',
                     'pre_layer': 0, 'gpu_memory_0': 0}

    def __init__(self, checkPoint: LoaderCheckPoint = None):
        super().__init__()
        self.checkPoint = checkPoint

    @property
    def _llm_type(self) -> str:
        return "LLamaLLM"

    @property
    def _check_point(self) -> LoaderCheckPoint:
        return self.checkPoint

    def encode(self, prompt, add_special_tokens=True, add_bos_token=True, truncation_length=None):
        input_ids = self.checkPoint.tokenizer.encode(str(prompt), return_tensors='pt',
                                                     add_special_tokens=add_special_tokens)
        # This is a hack for making replies more creative.
        if not add_bos_token and input_ids[0][0] == self.checkPoint.tokenizer.bos_token_id:
            input_ids = input_ids[:, 1:]

        # Llama adds this extra token when the first character is '\n', and this
        # compromises the stopping criteria, so we just remove it
        if type(self.checkPoint.tokenizer) is transformers.LlamaTokenizer and input_ids[0][0] == 29871:
            input_ids = input_ids[:, 1:]

        # Handling truncation
        if truncation_length is not None:
            input_ids = input_ids[:, -truncation_length:]

        # Move to the model's device instead of assuming CUDA is available
        return input_ids.to(self.checkPoint.model.device)

    def decode(self, output_ids):
        reply = self.checkPoint.tokenizer.decode(output_ids, skip_special_tokens=True)
        return reply

    # Convert the dialogue history array into text format
    def history_to_text(self, query, history):
        """
        Soft prompt built from the dialogue history.
            This function converts the `history` array (its last `history_len`
            turns) into the required text format and appends the current query.
            The resulting text is then converted to token IDs with self.encode,
            so the history and the current input form one concatenated sequence.
        :return: the formatted prompt string
        """
        formatted_history = ''
        history = history[-self.history_len:] if self.history_len > 0 else []
        if len(history) > 0:
            for i, (old_query, response) in enumerate(history):
                formatted_history += "### Human:{}\n### Assistant:{}\n".format(old_query, response)
        formatted_history += "### Human:{}\n### Assistant:".format(query)
        return formatted_history

    def prepare_inputs_for_generation(self,
                                      input_ids: torch.LongTensor):
        """
        Pre-compute the attention mask and the tensor of position indices
        for each token in the input sequence.
        # TODO: no working approach yet
        :return:
        """

        mask_positions = torch.zeros((1, input_ids.shape[1]), dtype=input_ids.dtype).to(self.checkPoint.model.device)

        attention_mask = self.get_masks(input_ids, input_ids.device)

        position_ids = self.get_position_ids(
            input_ids,
            device=input_ids.device,
            mask_positions=mask_positions
        )

        return input_ids, position_ids, attention_mask

    @property
    def _history_len(self) -> int:
        return self.history_len

    def set_history_len(self, history_len: int = 10) -> None:
        self.history_len = history_len

    def _call(self, prompt: str, stop: Optional[List[str]] = None) -> str:
        print(f"__call:{prompt}")
        if self.logits_processor is None:
            self.logits_processor = LogitsProcessorList()
            # Register the NaN/Inf guard once, not on every call
            self.logits_processor.append(InvalidScoreLogitsProcessor())

        gen_kwargs = {
            "max_new_tokens": self.max_new_tokens,
            "num_beams": self.num_beams,
            "top_p": self.top_p,
            "do_sample": True,
            "top_k": self.top_k,
            "repetition_penalty": self.repetition_penalty,
            "encoder_repetition_penalty": self.encoder_repetition_penalty,
            "min_length": self.min_length,
            "temperature": self.temperature,
            "eos_token_id": self.checkPoint.tokenizer.eos_token_id,
            "logits_processor": self.logits_processor}

        # Tokenize the prompt into input IDs
        input_ids = self.encode(prompt, add_bos_token=self.state['add_bos_token'], truncation_length=self.max_new_tokens)
        # input_ids, position_ids, attention_mask = self.prepare_inputs_for_generation(input_ids=filler_input_ids)
