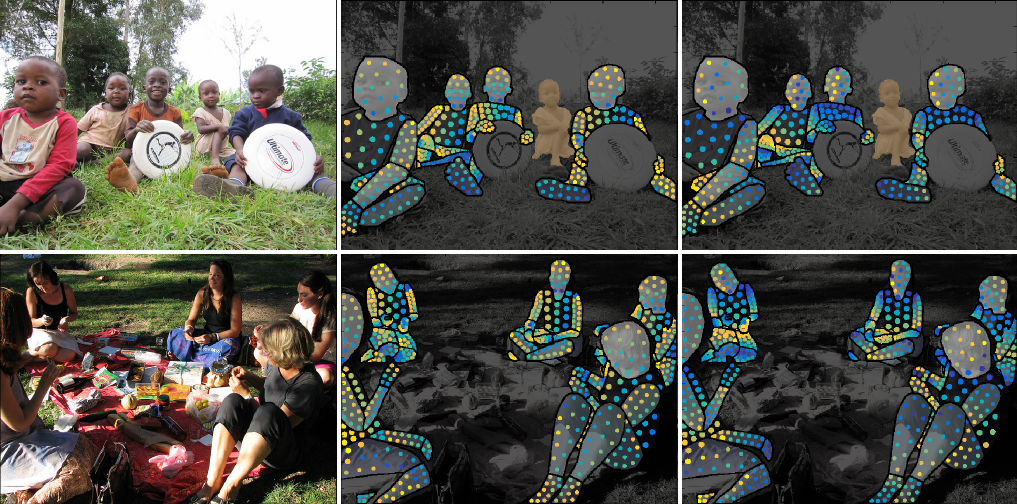

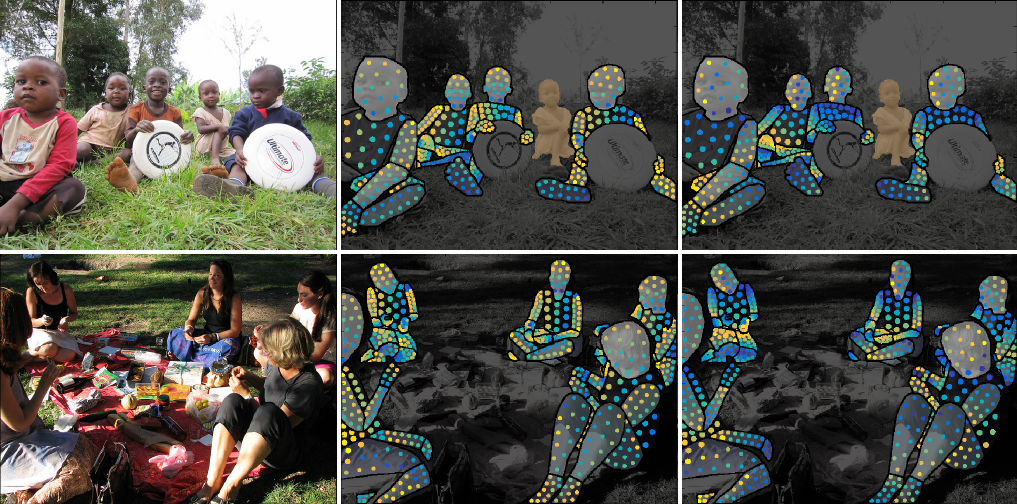

Figure 1. Annotation examples from the DensePose COCO dataset.

The DensePose COCO dataset contains about 50K annotated persons on images from the [COCO dataset](https://cocodataset.org/#home). The images are available for download from the [COCO Dataset download page](https://cocodataset.org/#download): [train2014](http://images.cocodataset.org/zips/train2014.zip), [val2014](http://images.cocodataset.org/zips/val2014.zip). The details on available annotations and their download links are given below.

### Chart-based Annotations

Chart-based DensePose COCO annotations are available for instances of the category `person` and correspond to the model shown in Figure 2. They include the `dp_x`, `dp_y`, `dp_I`, `dp_U` and `dp_V` fields for annotated points (~100 points per annotated instance) and the `dp_masks` field, which encodes a coarse segmentation into 14 parts in the following order: `Torso`, `Right Hand`, `Left Hand`, `Left Foot`, `Right Foot`, `Upper Leg Right`, `Upper Leg Left`, `Lower Leg Right`, `Lower Leg Left`, `Upper Arm Left`, `Upper Arm Right`, `Lower Arm Left`, `Lower Arm Right`, `Head`.

Figure 2. Human body charts (fine segmentation) and the associated 14 body parts depicted with rounded rectangles (coarse segmentation).
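To illustrate how the chart-based fields fit together, the sketch below pairs the `dp_masks` entries with the 14 part names in the order listed above and groups annotated points by their `dp_I` chart index. It assumes that an annotation is a plain dictionary, that `dp_masks` is a list with one entry per part, and that the point fields are parallel lists of equal length. These are assumptions about the file layout, and the helper names `name_part_masks` and `group_points_by_chart` are hypothetical.

```python
from collections import defaultdict

# Coarse part names in the order given for `dp_masks`.
PART_NAMES = [
    "Torso", "Right Hand", "Left Hand", "Left Foot", "Right Foot",
    "Upper Leg Right", "Upper Leg Left", "Lower Leg Right", "Lower Leg Left",
    "Upper Arm Left", "Upper Arm Right", "Lower Arm Left", "Lower Arm Right",
    "Head",
]


def name_part_masks(annotation):
    """Pair each `dp_masks` entry with its part name.

    Assumption: `dp_masks` holds exactly one entry per coarse part.
    """
    masks = annotation["dp_masks"]
    if len(masks) != len(PART_NAMES):
        raise ValueError(f"expected {len(PART_NAMES)} masks, got {len(masks)}")
    return dict(zip(PART_NAMES, masks))


def group_points_by_chart(annotation):
    """Group annotated points by chart index `dp_I`.

    Assumption: the five point fields are parallel lists of equal length.
    """
    groups = defaultdict(list)
    for x, y, i, u, v in zip(
        annotation["dp_x"], annotation["dp_y"], annotation["dp_I"],
        annotation["dp_U"], annotation["dp_V"],
    ):
        groups[int(i)].append({"x": x, "y": y, "u": u, "v": v})
    return dict(groups)


# Synthetic annotation for illustration only (not real dataset values).
example = {
    "dp_masks": [[] for _ in PART_NAMES],
    "dp_x": [10.0, 20.0, 30.0],
    "dp_y": [15.0, 25.0, 35.0],
    "dp_I": [1.0, 1.0, 2.0],
    "dp_U": [0.1, 0.2, 0.3],
    "dp_V": [0.4, 0.5, 0.6],
}

points_by_chart = group_points_by_chart(example)
masks_by_part = name_part_masks(example)
```

Keeping the part names in a single ordered list means the order stated in the text is the only place where the index-to-name mapping is defined.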

The dataset splits used in the training schedules are `train2014`, `valminusminival2014` and `minival2014`. `train2014` and `valminusminival2014` are used for training, and `minival2014` is used for validation. The table below gives the annotation download links and summarizes the number of annotated instances and images for each of the dataset splits:

| Name | # inst | # images | file size | download |
|---|---|---|---|---|
| densepose_train2014 | 39210 | 26437 | 526M | densepose_train2014.json |
| densepose_valminusminival2014 | 7297 | 5984 | 105M | densepose_valminusminival2014.json |
| densepose_minival2014 | 2243 | 1508 | 31M | densepose_minival2014.json |
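A downloaded annotation file can be inspected with a few lines of Python. The sketch below counts the annotation records that carry DensePose point data and the images they belong to. It assumes a COCO-style JSON layout (a top-level `annotations` list whose records have an `image_id`) and treats the presence of `dp_x` as the marker of an annotated instance. Both are assumptions, and the counts in the table were not necessarily derived this way. The function names are hypothetical.

```python
import json


def load_annotations(path):
    """Load an annotation file such as densepose_minival2014.json."""
    with open(path, "r", encoding="utf-8") as f:
        return json.load(f)


def summarize(data):
    """Return (# instances with point annotations, # images containing them).

    Assumption: COCO-style layout with a top-level "annotations" list;
    only records that contain a "dp_x" field are counted.
    """
    annotated = [a for a in data.get("annotations", []) if "dp_x" in a]
    image_ids = {a["image_id"] for a in annotated}
    return len(annotated), len(image_ids)


# Synthetic data for illustration only.
toy = {
    "images": [{"id": 1}, {"id": 2}],
    "annotations": [
        {"image_id": 1, "dp_x": [1.0]},
        {"image_id": 1, "dp_x": [2.0]},
        {"image_id": 2},  # no DensePose points, so it is not counted
    ],
}
num_instances, num_images = summarize(toy)
```

For a real file, `summarize(load_annotations("densepose_minival2014.json"))` would apply the same count to the downloaded data.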

### Continuous Surface Embeddings Annotations

| Name | # inst | # images | file size | download |
|---|---|---|---|---|
| densepose_train2014_cse | 39210 | 26437 | 554M | densepose_train2014_cse.json |
| densepose_valminusminival2014_cse | 7297 | 5984 | 110M | densepose_valminusminival2014_cse.json |
| densepose_minival2014_cse | 2243 | 1508 | 32M | densepose_minival2014_cse.json |

Figure 3. Annotation examples from the PoseTrack dataset.

The DensePose PoseTrack dataset contains annotated image sequences. To download the images for this dataset, please follow the instructions on the [PoseTrack Download Page](https://posetrack.net/users/download.php).

### Chart-based Annotations

Chart-based DensePose PoseTrack annotations are available for instances of the category `person` and correspond to the model shown in Figure 2. They include the `dp_x`, `dp_y`, `dp_I`, `dp_U` and `dp_V` fields for annotated points (~100 points per annotated instance) and the `dp_masks` field, which encodes a coarse segmentation into the same 14 parts as in DensePose COCO.

The dataset splits used in the training schedules are `posetrack_train2017` (train set) and `posetrack_val2017` (validation set). The table below gives the annotation download links and summarizes the number of annotated instances, instance tracks and images for each of the dataset splits:

| Name | # inst | # images | # tracks | file size | download |
|---|---|---|---|---|---|
| densepose_posetrack_train2017 | 8274 | 1680 | 36 | 118M | densepose_posetrack_train2017.json |
| densepose_posetrack_val2017 | 4753 | 782 | 46 | 59M | densepose_posetrack_val2017.json |

Figure 4. Example images from the DensePose Chimps dataset.

The DensePose Chimps dataset contains annotated images of chimpanzees. To download the images for this dataset, please use the URL specified in the `image_url` field in the annotations.

### Chart-based Annotations

Chart-based DensePose Chimps annotations correspond to the human model shown in Figure 2; the instances are therefore annotated as belonging to the `person` category. They include the `dp_x`, `dp_y`, `dp_I`, `dp_U` and `dp_V` fields for annotated points (~3 points per annotated instance) and the `dp_masks` field, which encodes the foreground mask in RLE format.

Chart-based DensePose Chimps annotations are used for validation only. The table below gives the annotation download link and summarizes the number of annotated instances and images:

| Name | # inst | # images | file size | download |
|---|---|---|---|---|
| densepose_chimps | 930 | 654 | 6M | densepose_chimps_full_v2.json |

### Continuous Surface Embeddings Annotations

| Name | # inst | # images | file size | download |
|---|---|---|---|---|
| densepose_chimps_cse_train | 500 | 350 | 3M | densepose_chimps_cse_train.json |
| densepose_chimps_cse_val | 430 | 304 | 3M | densepose_chimps_cse_val.json |
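Since the Chimps images are fetched through the `image_url` field, a small helper can collect the URLs from an annotation file and download them. The sketch below assumes that `image_url` is stored on each record of a COCO-style `images` list and that the last component of the URL path is a usable file name. Both are assumptions, and the function names are hypothetical.

```python
import os
import urllib.request
from urllib.parse import urlparse


def collect_image_urls(dataset):
    """Map image id -> URL for image records that carry `image_url`.

    Assumption: `image_url` lives on the records of a top-level "images" list.
    """
    return {
        img["id"]: img["image_url"]
        for img in dataset.get("images", [])
        if img.get("image_url")
    }


def download_images(urls, out_dir):
    """Download every URL into out_dir, naming files after the URL path."""
    os.makedirs(out_dir, exist_ok=True)
    for image_id, url in urls.items():
        # Fall back to the image id if the URL path has no file name.
        name = os.path.basename(urlparse(url).path) or f"{image_id}.jpg"
        target = os.path.join(out_dir, name)
        if not os.path.exists(target):  # skip files fetched earlier
            urllib.request.urlretrieve(url, target)


# Synthetic records for illustration only; example.com is a placeholder host.
toy_dataset = {
    "images": [
        {"id": 1, "image_url": "https://example.com/images/chimp_0001.jpg"},
        {"id": 2},  # record without a URL is skipped
    ]
}
toy_urls = collect_image_urls(toy_dataset)
```

Separating URL collection from downloading makes it possible to review the list of URLs before any network access takes place.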

Figure 5. Example images from the DensePose LVIS dataset.

The DensePose LVIS dataset contains segmentation and DensePose annotations for animals on images from the [LVIS dataset](https://www.lvisdataset.org/dataset). The images are available for download through the links: [train2017](http://images.cocodataset.org/zips/train2017.zip), [val2017](http://images.cocodataset.org/zips/val2017.zip).

### Continuous Surface Embeddings Annotations

Continuous surface embeddings (CSE) annotations for DensePose LVIS include `dp_x`, `dp_y` and `dp_vertex` point-based annotations (~3 points per annotated instance) and a `ref_model` field, which refers to a 3D model that corresponds to the instance. Instances from 9 animal categories were annotated with CSE DensePose data: bear, cow, cat, dog, elephant, giraffe, horse, sheep and zebra. Foreground masks are available from the instance segmentation annotations (`segmentation` field) in polygon format; they are stored as a 2D list `[[x1 y1 x2 y2...],[x1 y1 ...],...]`.

We used two datasets, each consisting of one training (`train`) and one validation (`val`) subset. The first one (`ds1`) was used in [Neverova et al, 2020](https://arxiv.org/abs/2011.12438). The second one (`ds2`) was used in [Neverova et al, 2021](). The summary of the available datasets is given below; the "all data" columns cover every category in the file, and the "selected" columns cover the 9 annotated animal categories:

| Name | # cat (all data) | # img (all data) | # segm (all data) | # img (selected) | # segm (selected) | # dp (selected) | file size | download |
|---|---|---|---|---|---|---|---|---|
| ds1_train | 556 | 4141 | 23985 | 4141 | 9472 | 5184 | 46M | densepose_lvis_v1_ds1_train_v1.json |
| ds1_val | 251 | 571 | 3281 | 571 | 1537 | 1036 | 5M | densepose_lvis_v1_ds1_val_v1.json |
| ds2_train | 1203 | 99388 | 1270141 | 13746 | 46964 | 18932 | 1051M | densepose_lvis_v1_ds2_train_v1.json |
| ds2_val | 9 | 2690 | 9155 | 2690 | 9155 | 3604 | 24M | densepose_lvis_v1_ds2_val_v1.json |
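The polygon format of the `segmentation` field can be unpacked with plain Python. The sketch below converts each flat `[x1, y1, x2, y2, ...]` list into coordinate pairs, computes a polygon's area with the shoelace formula, and derives a bounding box over all polygons of an instance. The helper names are hypothetical, and the sample coordinates are synthetic rather than taken from the dataset.

```python
def polygon_to_points(flat):
    """Convert a flat [x1, y1, x2, y2, ...] list into [(x1, y1), (x2, y2), ...]."""
    if len(flat) % 2 != 0:
        raise ValueError("polygon must contain an even number of coordinates")
    return list(zip(flat[0::2], flat[1::2]))


def polygon_area(points):
    """Unsigned area of a simple polygon via the shoelace formula."""
    total = 0.0
    n = len(points)
    for k in range(n):
        x0, y0 = points[k]
        x1, y1 = points[(k + 1) % n]  # wrap around to close the polygon
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def segmentation_bbox(segmentation):
    """Bounding box (x_min, y_min, x_max, y_max) over all polygons of an instance."""
    points = [p for flat in segmentation for p in polygon_to_points(flat)]
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# Synthetic instance mask made of a rectangle and a triangle.
segmentation = [
    [0, 0, 4, 0, 4, 3, 0, 3],
    [10, 10, 12, 10, 12, 12],
]
areas = [polygon_area(polygon_to_points(flat)) for flat in segmentation]
bbox = segmentation_bbox(segmentation)
```

An instance may consist of several polygons, so the helpers operate on the outer list and treat each inner list as one closed outline.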