Spaces:

Runtime error

Runtime error

update

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- README.md +0 -14

- __pycache__/model.cpython-37.pyc +0 -0

- __pycache__/model.cpython-38.pyc +0 -0

- api/61.png +0 -0

- app.py +36 -147

- bird.jpeg +0 -0

- model.py +515 -0

- models/VLE/__init__.py +0 -11

- models/VLE/__pycache__/__init__.cpython-39.pyc +0 -0

- models/VLE/__pycache__/configuration_vle.cpython-39.pyc +0 -0

- models/VLE/__pycache__/modeling_vle.cpython-39.pyc +0 -0

- models/VLE/__pycache__/pipeline_vle.cpython-39.pyc +0 -0

- models/VLE/__pycache__/processing_vle.cpython-39.pyc +0 -0

- models/VLE/configuration_vle.py +0 -143

- models/VLE/modeling_vle.py +0 -709

- models/VLE/pipeline_vle.py +0 -166

- models/VLE/processing_vle.py +0 -149

- qa9.jpg +0 -3

- requirements.txt +1 -4

- timm/__init__.py +4 -0

- timm/__pycache__/__init__.cpython-37.pyc +0 -0

- timm/__pycache__/__init__.cpython-38.pyc +0 -0

- timm/__pycache__/version.cpython-37.pyc +0 -0

- timm/__pycache__/version.cpython-38.pyc +0 -0

- timm/data/__init__.py +12 -0

- timm/data/__pycache__/__init__.cpython-37.pyc +0 -0

- timm/data/__pycache__/__init__.cpython-38.pyc +0 -0

- timm/data/__pycache__/auto_augment.cpython-37.pyc +0 -0

- timm/data/__pycache__/auto_augment.cpython-38.pyc +0 -0

- timm/data/__pycache__/config.cpython-37.pyc +0 -0

- timm/data/__pycache__/config.cpython-38.pyc +0 -0

- timm/data/__pycache__/constants.cpython-37.pyc +0 -0

- timm/data/__pycache__/constants.cpython-38.pyc +0 -0

- timm/data/__pycache__/dataset.cpython-37.pyc +0 -0

- timm/data/__pycache__/dataset.cpython-38.pyc +0 -0

- timm/data/__pycache__/dataset_factory.cpython-37.pyc +0 -0

- timm/data/__pycache__/dataset_factory.cpython-38.pyc +0 -0

- timm/data/__pycache__/distributed_sampler.cpython-37.pyc +0 -0

- timm/data/__pycache__/distributed_sampler.cpython-38.pyc +0 -0

- timm/data/__pycache__/loader.cpython-37.pyc +0 -0

- timm/data/__pycache__/loader.cpython-38.pyc +0 -0

- timm/data/__pycache__/mixup.cpython-37.pyc +0 -0

- timm/data/__pycache__/mixup.cpython-38.pyc +0 -0

- timm/data/__pycache__/random_erasing.cpython-37.pyc +0 -0

- timm/data/__pycache__/random_erasing.cpython-38.pyc +0 -0

- timm/data/__pycache__/real_labels.cpython-37.pyc +0 -0

- timm/data/__pycache__/real_labels.cpython-38.pyc +0 -0

- timm/data/__pycache__/transforms.cpython-37.pyc +0 -0

- timm/data/__pycache__/transforms.cpython-38.pyc +0 -0

- timm/data/__pycache__/transforms_factory.cpython-37.pyc +0 -0

README.md

DELETED

|

@@ -1,14 +0,0 @@

|

|

| 1 |

-

---

|

| 2 |

-

title: VQA CAP GPT

|

| 3 |

-

emoji: 😻

|

| 4 |

-

colorFrom: gray

|

| 5 |

-

colorTo: red

|

| 6 |

-

sdk: gradio

|

| 7 |

-

sdk_version: 3.19.1

|

| 8 |

-

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

license: openrail

|

| 11 |

-

duplicated_from: xxx1/VQA_CAP_GPT

|

| 12 |

-

---

|

| 13 |

-

|

| 14 |

-

Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

__pycache__/model.cpython-37.pyc

ADDED

|

Binary file (12.1 kB). View file

|

|

|

__pycache__/model.cpython-38.pyc

ADDED

|

Binary file (12.2 kB). View file

|

|

|

api/61.png

ADDED

|

app.py

CHANGED

|

@@ -2,129 +2,47 @@ import string

|

|

| 2 |

import gradio as gr

|

| 3 |

import requests

|

| 4 |

import torch

|

| 5 |

-

from models.VLE import VLEForVQA, VLEProcessor, VLEForVQAPipeline

|

| 6 |

from PIL import Image

|

| 7 |

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

|

|

|

|

|

|

|

|

|

| 12 |

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

-

|

| 18 |

-

|

| 19 |

-

model_vqa = BlipForQuestionAnswering.from_pretrained("Salesforce/blip-vqa-capfilt-large").to(device)

|

| 20 |

-

|

| 21 |

-

from transformers import BlipProcessor, BlipForConditionalGeneration

|

| 22 |

-

|

| 23 |

-

cap_processor = BlipProcessor.from_pretrained("Salesforce/blip-image-captioning-large")

|

| 24 |

-

cap_model = BlipForConditionalGeneration.from_pretrained("Salesforce/blip-image-captioning-large")

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

def caption(input_image):

|

| 29 |

-

inputs = cap_processor(input_image, return_tensors="pt")

|

| 30 |

-

# inputs["num_beams"] = 1

|

| 31 |

-

# inputs['num_return_sequences'] =1

|

| 32 |

-

out = cap_model.generate(**inputs)

|

| 33 |

-

return "\n".join(cap_processor.batch_decode(out, skip_special_tokens=True))

|

| 34 |

-

import openai

|

| 35 |

-

import os

|

| 36 |

-

openai.api_key= os.getenv('openai_appkey')

|

| 37 |

-

def gpt3_short(question,vqa_answer,caption):

|

| 38 |

-

vqa_answer,vqa_score=vqa_answer

|

| 39 |

-

prompt="This is the caption of a picture: "+caption+". Question: "+question+" VQA model predicts:"+"A: "+vqa_answer[0]+", socre:"+str(vqa_score[0])+\

|

| 40 |

-

"; B: "+vqa_answer[1]+", score:"+str(vqa_score[1])+"; C: "+vqa_answer[2]+", score:"+str(vqa_score[2])+\

|

| 41 |

-

"; D: "+vqa_answer[3]+', score:'+str(vqa_score[3])+\

|

| 42 |

-

". Choose A if it is not in conflict with the description of the picture and A's score is bigger than 0.8; otherwise choose the B, C or D based on the description."

|

| 43 |

-

|

| 44 |

-

# prompt=caption+"\n"+question+"\n"+vqa_answer+"\n Tell me the right answer."

|

| 45 |

-

response = openai.Completion.create(

|

| 46 |

-

engine="text-davinci-003",

|

| 47 |

-

prompt=prompt,

|

| 48 |

-

max_tokens=10,

|

| 49 |

-

n=1,

|

| 50 |

-

stop=None,

|

| 51 |

-

temperature=0.7,

|

| 52 |

-

)

|

| 53 |

-

answer = response.choices[0].text.strip()

|

| 54 |

-

|

| 55 |

-

llm_ans=answer

|

| 56 |

-

choice=set(["A","B","C","D"])

|

| 57 |

-

llm_ans=llm_ans.replace("\n"," ").replace(":"," ").replace("."," " ).replace(","," ")

|

| 58 |

-

sllm_ans=llm_ans.split(" ")

|

| 59 |

-

for cho in sllm_ans:

|

| 60 |

-

if cho in choice:

|

| 61 |

-

llm_ans=cho

|

| 62 |

-

break

|

| 63 |

-

if llm_ans not in choice:

|

| 64 |

-

llm_ans="A"

|

| 65 |

-

llm_ans=vqa_answer[ord(llm_ans)-ord("A")]

|

| 66 |

-

answer=llm_ans

|

| 67 |

|

| 68 |

-

|

| 69 |

-

|

| 70 |

-

|

| 71 |

-

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

|

| 80 |

-

|

| 81 |

-

|

| 82 |

-

|

| 83 |

-

|

| 84 |

-

|

| 85 |

-

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

|

| 90 |

-

temperature=0.7,

|

| 91 |

-

)

|

| 92 |

-

answer = response.choices[0].text.strip()

|

| 93 |

-

return answer

|

| 94 |

-

def gpt3(question,vqa_answer,caption):

|

| 95 |

-

prompt=caption+"\n"+question+"\n"+vqa_answer+"\n Tell me the right answer."

|

| 96 |

-

response = openai.Completion.create(

|

| 97 |

-

engine="text-davinci-003",

|

| 98 |

-

prompt=prompt,

|

| 99 |

-

max_tokens=30,

|

| 100 |

-

n=1,

|

| 101 |

-

stop=None,

|

| 102 |

-

temperature=0.7,

|

| 103 |

-

)

|

| 104 |

-

answer = response.choices[0].text.strip()

|

| 105 |

-

# return "input_text:\n"+prompt+"\n\n output_answer:\n"+answer

|

| 106 |

-

return answer

|

| 107 |

|

| 108 |

-

def vle(input_image,input_text):

|

| 109 |

-

vqa_answers = vqa_pipeline({"image":input_image, "question":input_text}, top_k=4)

|

| 110 |

-

# return [" ".join([str(value) for key,value in vqa.items()] )for vqa in vqa_answers]

|

| 111 |

-

return [vqa['answer'] for vqa in vqa_answers],[vqa['score'] for vqa in vqa_answers]

|

| 112 |

-

def inference_chat(input_image,input_text):

|

| 113 |

-

cap=caption(input_image)

|

| 114 |

-

print(cap)

|

| 115 |

-

# inputs = processor(images=input_image, text=input_text,return_tensors="pt")

|

| 116 |

-

# inputs["max_length"] = 10

|

| 117 |

-

# inputs["num_beams"] = 5

|

| 118 |

-

# inputs['num_return_sequences'] =4

|

| 119 |

-

# out = model_vqa.generate(**inputs)

|

| 120 |

-

# out=processor.batch_decode(out, skip_special_tokens=True)

|

| 121 |

|

| 122 |

-

out=vle(input_image,input_text)

|

| 123 |

-

# vqa="\n".join(out[0])

|

| 124 |

-

# gpt3_out=gpt3(input_text,vqa,cap)

|

| 125 |

-

gpt3_out=gpt3_long(input_text,out,cap)

|

| 126 |

-

gpt3_out1=gpt3_short(input_text,out,cap)

|

| 127 |

-

return out[0][0], gpt3_out,gpt3_out1

|

| 128 |

title = """# VQA with VLE and LLM"""

|

| 129 |

description = """**VLE** (Visual-Language Encoder) is an image-text multimodal understanding model built on the pre-trained text and image encoders. See https://github.com/iflytek/VLE for more details.

|

| 130 |

We demonstrate visual question answering systems built with VLE and LLM."""

|

|

@@ -169,14 +87,6 @@ with gr.Blocks(

|

|

| 169 |

caption_output_v1 = gr.Textbox(lines=0, label="VQA + LLM (short answer)")

|

| 170 |

gpt3_output_v1 = gr.Textbox(lines=0, label="VQA+LLM (long answer)")

|

| 171 |

|

| 172 |

-

|

| 173 |

-

|

| 174 |

-

# image_input.change(

|

| 175 |

-

# lambda: ("", [],"","",""),

|

| 176 |

-

# [],

|

| 177 |

-

# [ caption_output, state,caption_output,gpt3_output_v1,caption_output_v1],

|

| 178 |

-

# queue=False,

|

| 179 |

-

# )

|

| 180 |

chat_input.submit(

|

| 181 |

inference_chat,

|

| 182 |

[

|

|

@@ -199,28 +109,7 @@ with gr.Blocks(

|

|

| 199 |

],

|

| 200 |

[caption_output,gpt3_output_v1,caption_output_v1],

|

| 201 |

)

|

| 202 |

-

|

| 203 |

-

cap_submit_button.click(

|

| 204 |

-

caption,

|

| 205 |

-

[

|

| 206 |

-

image_input,

|

| 207 |

-

|

| 208 |

-

],

|

| 209 |

-

[caption_output_v1],

|

| 210 |

-

)

|

| 211 |

-

gpt3_submit_button.click(

|

| 212 |

-

gpt3,

|

| 213 |

-

[

|

| 214 |

-

chat_input,

|

| 215 |

-

caption_output ,

|

| 216 |

-

caption_output_v1,

|

| 217 |

-

],

|

| 218 |

-

[gpt3_output_v1],

|

| 219 |

-

)

|

| 220 |

-

'''

|

| 221 |

-

examples=[['bird.jpeg',"How many birds are there in the tree?","2","2","2"],

|

| 222 |

-

['qa9.jpg',"What type of vehicle is being pulled by the horses ?",'carriage','sled','Sled'],

|

| 223 |

-

['upload4.jpg',"What is this old man doing?","fishing","fishing","Fishing"]]

|

| 224 |

examples = gr.Examples(

|

| 225 |

examples=examples,inputs=[image_input, chat_input,caption_output,caption_output_v1,gpt3_output_v1],

|

| 226 |

)

|

|

|

|

| 2 |

import gradio as gr

|

| 3 |

import requests

|

| 4 |

import torch

|

|

|

|

| 5 |

from PIL import Image

|

| 6 |

|

| 7 |

+

rationale_model_dir = "cooelf/MM-CoT-UnifiedQA-Base-Rationale-Joint"

|

| 8 |

+

vit_model = timm.create_model("vit_base_patch16_384", pretrained=True, num_classes=0)

|

| 9 |

+

vit_model.eval()

|

| 10 |

+

config = resolve_data_config({}, model=vit_model)

|

| 11 |

+

transform = create_transform(**config)

|

| 12 |

+

tokenizer = T5Tokenizer.from_pretrained(rationale_model_dir)

|

| 13 |

+

r_model = T5ForMultimodalGeneration.from_pretrained(rationale_model_dir, patch_size=(577, 768))

|

| 14 |

|

| 15 |

+

def inference_chat(input_image,input_text):

|

| 16 |

+

with torch.no_grad():

|

| 17 |

+

img = Image.open(input_image).convert("RGB")

|

| 18 |

+

input = transform(img).unsqueeze(0)

|

| 19 |

+

out = vit_model.forward_features(input)

|

| 20 |

+

image_features = out.detach()

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 21 |

|

| 22 |

+

input_ids = tokenizer(input_text, return_tensors='pt', padding=True).input_ids

|

| 23 |

+

source = tokenizer.batch_encode_plus(

|

| 24 |

+

[input_text],

|

| 25 |

+

max_length=512,

|

| 26 |

+

pad_to_max_length=True,

|

| 27 |

+

truncation=True,

|

| 28 |

+

padding="max_length",

|

| 29 |

+

return_tensors="pt",

|

| 30 |

+

)

|

| 31 |

+

source_ids = source["input_ids"]

|

| 32 |

+

source_mask = source["attention_mask"]

|

| 33 |

+

rationale = r_model.generate(

|

| 34 |

+

input_ids=source_ids,

|

| 35 |

+

attention_mask=source_mask,

|

| 36 |

+

image_ids=image_features,

|

| 37 |

+

max_length=512,

|

| 38 |

+

num_beams=1,

|

| 39 |

+

do_sample=False

|

| 40 |

+

)

|

| 41 |

+

gpt3_out = tokenizer.batch_decode(rationale, skip_special_tokens=True)[0]

|

| 42 |

+

gpt3_out1 = gpt3_out

|

| 43 |

+

return out[0][0], gpt3_out,gpt3_out1

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 44 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 45 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 46 |

title = """# VQA with VLE and LLM"""

|

| 47 |

description = """**VLE** (Visual-Language Encoder) is an image-text multimodal understanding model built on the pre-trained text and image encoders. See https://github.com/iflytek/VLE for more details.

|

| 48 |

We demonstrate visual question answering systems built with VLE and LLM."""

|

|

|

|

| 87 |

caption_output_v1 = gr.Textbox(lines=0, label="VQA + LLM (short answer)")

|

| 88 |

gpt3_output_v1 = gr.Textbox(lines=0, label="VQA+LLM (long answer)")

|

| 89 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 90 |

chat_input.submit(

|

| 91 |

inference_chat,

|

| 92 |

[

|

|

|

|

| 109 |

],

|

| 110 |

[caption_output,gpt3_output_v1,caption_output_v1],

|

| 111 |

)

|

| 112 |

+

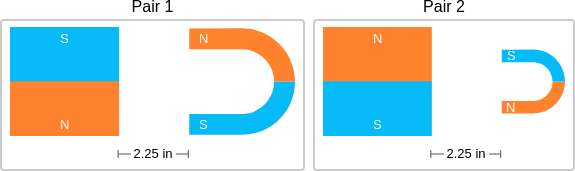

examples=[['api/61.png',"Think about the magnetic force between the magnets in each pair. Which of the following statements is true?","The images below show two pairs of magnets. The magnets in different pairs do not affect each other. All the magnets shown are made of the same material, but some of them are different sizes and shapes.","(A) The magnitude of the magnetic force is the same in both pairs. (B) The magnitude of the magnetic force is smaller in Pair 1. (C) The magnitude of the magnetic force is smaller in Pair 2.","Magnet sizes affect the magnitude of the magnetic force. Imagine magnets that are the same shape and made of the same material. The smaller the magnets, the smaller the magnitude of the magnetic force between them.nMagnet A is the same size in both pairs. But Magnet B is smaller in Pair 2 than in Pair 1. So, the magnitude of the magnetic force is smaller in Pair 2 than in Pair 1."],

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 113 |

examples = gr.Examples(

|

| 114 |

examples=examples,inputs=[image_input, chat_input,caption_output,caption_output_v1,gpt3_output_v1],

|

| 115 |

)

|

bird.jpeg

DELETED

|

Binary file (49.1 kB)

|

|

|

model.py

ADDED

|

@@ -0,0 +1,515 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

'''

|

| 2 |

+

Adapted from https://github.com/huggingface/transformers

|

| 3 |

+

'''

|

| 4 |

+

|

| 5 |

+

from transformers import T5Config, T5ForConditionalGeneration

|

| 6 |

+

from transformers.models.t5.modeling_t5 import T5Stack, __HEAD_MASK_WARNING_MSG, T5Block, T5LayerNorm

|

| 7 |

+

import copy

|

| 8 |

+

from transformers.modeling_outputs import ModelOutput, BaseModelOutput, BaseModelOutputWithPast, BaseModelOutputWithPastAndCrossAttentions, Seq2SeqLMOutput, Seq2SeqModelOutput

|

| 9 |

+

from typing import Any, Callable, Dict, Iterable, List, Optional, Tuple

|

| 10 |

+

import math

|

| 11 |

+

import os

|

| 12 |

+

import warnings

|

| 13 |

+

from typing import Optional, Tuple, Union

|

| 14 |

+

import torch

|

| 15 |

+

from torch import nn

|

| 16 |

+

from torch.nn import CrossEntropyLoss

|

| 17 |

+

from transformers.modeling_outputs import (

|

| 18 |

+

BaseModelOutput,

|

| 19 |

+

Seq2SeqLMOutput,

|

| 20 |

+

)

|

| 21 |

+

from transformers.utils.model_parallel_utils import assert_device_map, get_device_map

|

| 22 |

+

from torch.utils.checkpoint import checkpoint

|

| 23 |

+

|

| 24 |

+

class JointEncoder(T5Stack):

|

| 25 |

+

def __init__(self, config, embed_tokens=None, patch_size=None):

|

| 26 |

+

super().__init__(config)

|

| 27 |

+

|

| 28 |

+

self.embed_tokens = embed_tokens

|

| 29 |

+

self.is_decoder = config.is_decoder

|

| 30 |

+

|

| 31 |

+

self.patch_num, self.patch_dim = patch_size

|

| 32 |

+

self.image_dense = nn.Linear(self.patch_dim, config.d_model)

|

| 33 |

+

self.mha_layer = torch.nn.MultiheadAttention(embed_dim=config.hidden_size, kdim=config.hidden_size, vdim=config.hidden_size, num_heads=1, batch_first=True)

|

| 34 |

+

self.gate_dense = nn.Linear(2*config.hidden_size, config.hidden_size)

|

| 35 |

+

self.sigmoid = nn.Sigmoid()

|

| 36 |

+

|

| 37 |

+

self.block = nn.ModuleList(

|

| 38 |

+

[T5Block(config, has_relative_attention_bias=bool(i == 0)) for i in range(config.num_layers)]

|

| 39 |

+

)

|

| 40 |

+

self.final_layer_norm = T5LayerNorm(config.d_model, eps=config.layer_norm_epsilon)

|

| 41 |

+

self.dropout = nn.Dropout(config.dropout_rate)

|

| 42 |

+

|

| 43 |

+

# Initialize weights and apply final processing

|

| 44 |

+

self.post_init()

|

| 45 |

+

# Model parallel

|

| 46 |

+

self.model_parallel = False

|

| 47 |

+

self.device_map = None

|

| 48 |

+

self.gradient_checkpointing = False

|

| 49 |

+

|

| 50 |

+

def parallelize(self, device_map=None):

|

| 51 |

+

warnings.warn(

|

| 52 |

+

"`T5Stack.parallelize` is deprecated and will be removed in v5 of Transformers, you should load your model"

|

| 53 |

+

" with `device_map='balanced'` in the call to `from_pretrained`. You can also provide your own"

|

| 54 |

+

" `device_map` but it needs to be a dictionary module_name to device, so for instance {'block.0': 0,"

|

| 55 |

+

" 'block.1': 1, ...}",

|

| 56 |

+

FutureWarning,

|

| 57 |

+

)

|

| 58 |

+

# Check validity of device_map

|

| 59 |

+

self.device_map = (

|

| 60 |

+

get_device_map(len(self.block), range(torch.cuda.device_count())) if device_map is None else device_map

|

| 61 |

+

)

|

| 62 |

+

assert_device_map(self.device_map, len(self.block))

|

| 63 |

+

self.model_parallel = True

|

| 64 |

+

self.first_device = "cpu" if "cpu" in self.device_map.keys() else "cuda:" + str(min(self.device_map.keys()))

|

| 65 |

+

self.last_device = "cuda:" + str(max(self.device_map.keys()))

|

| 66 |

+

# Load onto devices

|

| 67 |

+

for k, v in self.device_map.items():

|

| 68 |

+

for layer in v:

|

| 69 |

+

cuda_device = "cuda:" + str(k)

|

| 70 |

+

self.block[layer] = self.block[layer].to(cuda_device)

|

| 71 |

+

|

| 72 |

+

# Set embed_tokens to first layer

|

| 73 |

+

self.embed_tokens = self.embed_tokens.to(self.first_device)

|

| 74 |

+

# Set final layer norm to last device

|

| 75 |

+

self.final_layer_norm = self.final_layer_norm.to(self.last_device)

|

| 76 |

+

|

| 77 |

+

def deparallelize(self):

|

| 78 |

+

warnings.warn(

|

| 79 |

+

"Like `parallelize`, `deparallelize` is deprecated and will be removed in v5 of Transformers.",

|

| 80 |

+

FutureWarning,

|

| 81 |

+

)

|

| 82 |

+

self.model_parallel = False

|

| 83 |

+

self.device_map = None

|

| 84 |

+

self.first_device = "cpu"

|

| 85 |

+

self.last_device = "cpu"

|

| 86 |

+

for i in range(len(self.block)):

|

| 87 |

+

self.block[i] = self.block[i].to("cpu")

|

| 88 |

+

self.embed_tokens = self.embed_tokens.to("cpu")

|

| 89 |

+

self.final_layer_norm = self.final_layer_norm.to("cpu")

|

| 90 |

+

torch.cuda.empty_cache()

|

| 91 |

+

|

| 92 |

+

def get_input_embeddings(self):

|

| 93 |

+

return self.embed_tokens

|

| 94 |

+

|

| 95 |

+

def set_input_embeddings(self, new_embeddings):

|

| 96 |

+

self.embed_tokens = new_embeddings

|

| 97 |

+

|

| 98 |

+

def forward(

|

| 99 |

+

self,

|

| 100 |

+

input_ids=None,

|

| 101 |

+

attention_mask=None,

|

| 102 |

+

encoder_hidden_states=None,

|

| 103 |

+

encoder_attention_mask=None,

|

| 104 |

+

inputs_embeds=None,

|

| 105 |

+

image_ids=None,

|

| 106 |

+

head_mask=None,

|

| 107 |

+

cross_attn_head_mask=None,

|

| 108 |

+

past_key_values=None,

|

| 109 |

+

use_cache=None,

|

| 110 |

+

output_attentions=None,

|

| 111 |

+

output_hidden_states=None,

|

| 112 |

+

return_dict=None,

|

| 113 |

+

):

|

| 114 |

+

# Model parallel

|

| 115 |

+

if self.model_parallel:

|

| 116 |

+

torch.cuda.set_device(self.first_device)

|

| 117 |

+

self.embed_tokens = self.embed_tokens.to(self.first_device)

|

| 118 |

+

use_cache = use_cache if use_cache is not None else self.config.use_cache

|

| 119 |

+

output_attentions = output_attentions if output_attentions is not None else self.config.output_attentions

|

| 120 |

+

output_hidden_states = (

|

| 121 |

+

output_hidden_states if output_hidden_states is not None else self.config.output_hidden_states

|

| 122 |

+

)

|

| 123 |

+

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

| 124 |

+

|

| 125 |

+

if input_ids is not None and inputs_embeds is not None:

|

| 126 |

+

err_msg_prefix = "decoder_" if self.is_decoder else ""

|

| 127 |

+

raise ValueError(

|

| 128 |

+

f"You cannot specify both {err_msg_prefix}input_ids and {err_msg_prefix}inputs_embeds at the same time"

|

| 129 |

+

)

|

| 130 |

+

elif input_ids is not None:

|

| 131 |

+

input_shape = input_ids.size()

|

| 132 |

+

input_ids = input_ids.view(-1, input_shape[-1])

|

| 133 |

+

elif inputs_embeds is not None:

|

| 134 |

+

input_shape = inputs_embeds.size()[:-1]

|

| 135 |

+

else:

|

| 136 |

+

err_msg_prefix = "decoder_" if self.is_decoder else ""

|

| 137 |

+

raise ValueError(f"You have to specify either {err_msg_prefix}input_ids or {err_msg_prefix}inputs_embeds")

|

| 138 |

+

|

| 139 |

+

if inputs_embeds is None:

|

| 140 |

+

assert self.embed_tokens is not None, "You have to initialize the model with valid token embeddings"

|

| 141 |

+

inputs_embeds = self.embed_tokens(input_ids)

|

| 142 |

+

|

| 143 |

+

batch_size, seq_length = input_shape

|

| 144 |

+

|

| 145 |

+

# required mask seq length can be calculated via length of past

|

| 146 |

+

mask_seq_length = past_key_values[0][0].shape[2] + seq_length if past_key_values is not None else seq_length

|

| 147 |

+

|

| 148 |

+

if use_cache is True:

|

| 149 |

+

assert self.is_decoder, f"`use_cache` can only be set to `True` if {self} is used as a decoder"

|

| 150 |

+

|

| 151 |

+

if attention_mask is None:

|

| 152 |

+

attention_mask = torch.ones(batch_size, mask_seq_length, device=inputs_embeds.device)

|

| 153 |

+

if self.is_decoder and encoder_attention_mask is None and encoder_hidden_states is not None:

|

| 154 |

+

encoder_seq_length = encoder_hidden_states.shape[1]

|

| 155 |

+

encoder_attention_mask = torch.ones(

|

| 156 |

+

batch_size, encoder_seq_length, device=inputs_embeds.device, dtype=torch.long

|

| 157 |

+

)

|

| 158 |

+

|

| 159 |

+

# initialize past_key_values with `None` if past does not exist

|

| 160 |

+

if past_key_values is None:

|

| 161 |

+

past_key_values = [None] * len(self.block)

|

| 162 |

+

|

| 163 |

+

# We can provide a self-attention mask of dimensions [batch_size, from_seq_length, to_seq_length]

|

| 164 |

+

# ourselves in which case we just need to make it broadcastable to all heads.

|

| 165 |

+

extended_attention_mask = self.get_extended_attention_mask(attention_mask, input_shape)

|

| 166 |

+

|

| 167 |

+

# If a 2D or 3D attention mask is provided for the cross-attention

|

| 168 |

+

# we need to make broadcastable to [batch_size, num_heads, seq_length, seq_length]

|

| 169 |

+

if self.is_decoder and encoder_hidden_states is not None:

|

| 170 |

+

encoder_batch_size, encoder_sequence_length, _ = encoder_hidden_states.size()

|

| 171 |

+

encoder_hidden_shape = (encoder_batch_size, encoder_sequence_length)

|

| 172 |

+

if encoder_attention_mask is None:

|

| 173 |

+

encoder_attention_mask = torch.ones(encoder_hidden_shape, device=inputs_embeds.device)

|

| 174 |

+

encoder_extended_attention_mask = self.invert_attention_mask(encoder_attention_mask)

|

| 175 |

+

else:

|

| 176 |

+

encoder_extended_attention_mask = None

|

| 177 |

+

|

| 178 |

+

# Prepare head mask if needed

|

| 179 |

+

head_mask = self.get_head_mask(head_mask, self.config.num_layers)

|

| 180 |

+

cross_attn_head_mask = self.get_head_mask(cross_attn_head_mask, self.config.num_layers)

|

| 181 |

+

present_key_value_states = () if use_cache else None

|

| 182 |

+

all_hidden_states = () if output_hidden_states else None

|

| 183 |

+

all_attentions = () if output_attentions else None

|

| 184 |

+

all_cross_attentions = () if (output_attentions and self.is_decoder) else None

|

| 185 |

+

position_bias = None

|

| 186 |

+

encoder_decoder_position_bias = None

|

| 187 |

+

|

| 188 |

+

hidden_states = self.dropout(inputs_embeds)

|

| 189 |

+

|

| 190 |

+

for i, (layer_module, past_key_value) in enumerate(zip(self.block, past_key_values)):

|

| 191 |

+

layer_head_mask = head_mask[i]

|

| 192 |

+

cross_attn_layer_head_mask = cross_attn_head_mask[i]

|

| 193 |

+

# Model parallel

|

| 194 |

+

if self.model_parallel:

|

| 195 |

+

torch.cuda.set_device(hidden_states.device)

|

| 196 |

+

# Ensure that attention_mask is always on the same device as hidden_states

|

| 197 |

+

if attention_mask is not None:

|

| 198 |

+

attention_mask = attention_mask.to(hidden_states.device)

|

| 199 |

+

if position_bias is not None:

|

| 200 |

+

position_bias = position_bias.to(hidden_states.device)

|

| 201 |

+

if encoder_hidden_states is not None:

|

| 202 |

+

encoder_hidden_states = encoder_hidden_states.to(hidden_states.device)

|

| 203 |

+

if encoder_extended_attention_mask is not None:

|

| 204 |

+

encoder_extended_attention_mask = encoder_extended_attention_mask.to(hidden_states.device)

|

| 205 |

+

if encoder_decoder_position_bias is not None:

|

| 206 |

+

encoder_decoder_position_bias = encoder_decoder_position_bias.to(hidden_states.device)

|

| 207 |

+

if layer_head_mask is not None:

|

| 208 |

+

layer_head_mask = layer_head_mask.to(hidden_states.device)

|

| 209 |

+

if cross_attn_layer_head_mask is not None:

|

| 210 |

+

cross_attn_layer_head_mask = cross_attn_layer_head_mask.to(hidden_states.device)

|

| 211 |

+

if output_hidden_states:

|

| 212 |

+

all_hidden_states = all_hidden_states + (hidden_states,)

|

| 213 |

+

|

| 214 |

+

if self.gradient_checkpointing and self.training:

|

| 215 |

+

if use_cache:

|

| 216 |

+

logger.warning_once(

|

| 217 |

+

"`use_cache=True` is incompatible with gradient checkpointing. Setting `use_cache=False`..."

|

| 218 |

+

)

|

| 219 |

+

use_cache = False

|

| 220 |

+

|

| 221 |

+

def create_custom_forward(module):

|

| 222 |

+

def custom_forward(*inputs):

|

| 223 |

+

return tuple(module(*inputs, use_cache, output_attentions))

|

| 224 |

+

|

| 225 |

+

return custom_forward

|

| 226 |

+

|

| 227 |

+

layer_outputs = checkpoint(

|

| 228 |

+

create_custom_forward(layer_module),

|

| 229 |

+

hidden_states,

|

| 230 |

+

extended_attention_mask,

|

| 231 |

+

position_bias,

|

| 232 |

+

encoder_hidden_states,

|

| 233 |

+

encoder_extended_attention_mask,

|

| 234 |

+

encoder_decoder_position_bias,

|

| 235 |

+

layer_head_mask,

|

| 236 |

+

cross_attn_layer_head_mask,

|

| 237 |

+

None, # past_key_value is always None with gradient checkpointing

|

| 238 |

+

)

|

| 239 |

+

else:

|

| 240 |

+

layer_outputs = layer_module(

|

| 241 |

+

hidden_states,

|

| 242 |

+

attention_mask=extended_attention_mask,

|

| 243 |

+

position_bias=position_bias,

|

| 244 |

+

encoder_hidden_states=encoder_hidden_states,

|

| 245 |

+

encoder_attention_mask=encoder_extended_attention_mask,

|

| 246 |

+

encoder_decoder_position_bias=encoder_decoder_position_bias,

|

| 247 |

+

layer_head_mask=layer_head_mask,

|

| 248 |

+

cross_attn_layer_head_mask=cross_attn_layer_head_mask,

|

| 249 |

+

past_key_value=past_key_value,

|

| 250 |

+

use_cache=use_cache,

|

| 251 |

+

output_attentions=output_attentions,

|

| 252 |

+

)

|

| 253 |

+

|

| 254 |

+

# layer_outputs is a tuple with:

|

| 255 |

+

# hidden-states, key-value-states, (self-attention position bias), (self-attention weights), (cross-attention position bias), (cross-attention weights)

|

| 256 |

+

if use_cache is False:

|

| 257 |

+

layer_outputs = layer_outputs[:1] + (None,) + layer_outputs[1:]

|

| 258 |

+

|

| 259 |

+

hidden_states, present_key_value_state = layer_outputs[:2]

|

| 260 |

+

|

| 261 |

+

# We share the position biases between the layers - the first layer store them

|

| 262 |

+

# layer_outputs = hidden-states, key-value-states (self-attention position bias), (self-attention weights),

|

| 263 |

+

# (cross-attention position bias), (cross-attention weights)

|

| 264 |

+

position_bias = layer_outputs[2]

|

| 265 |

+

if self.is_decoder and encoder_hidden_states is not None:

|

| 266 |

+

encoder_decoder_position_bias = layer_outputs[4 if output_attentions else 3]

|

| 267 |

+

# append next layer key value states

|

| 268 |

+

if use_cache:

|

| 269 |

+

present_key_value_states = present_key_value_states + (present_key_value_state,)

|

| 270 |

+

|

| 271 |

+

if output_attentions:

|

| 272 |

+

all_attentions = all_attentions + (layer_outputs[3],)

|

| 273 |

+

if self.is_decoder:

|

| 274 |

+

all_cross_attentions = all_cross_attentions + (layer_outputs[5],)

|

| 275 |

+

|

| 276 |

+

# Model Parallel: If it's the last layer for that device, put things on the next device

|

| 277 |

+

if self.model_parallel:

|

| 278 |

+

for k, v in self.device_map.items():

|

| 279 |

+

if i == v[-1] and "cuda:" + str(k) != self.last_device:

|

| 280 |

+

hidden_states = hidden_states.to("cuda:" + str(k + 1))

|

| 281 |

+

|

| 282 |

+

hidden_states = self.final_layer_norm(hidden_states)

|

| 283 |

+

hidden_states = self.dropout(hidden_states)

|

| 284 |

+

|

| 285 |

+

# Add last layer

|

| 286 |

+

if output_hidden_states:

|

| 287 |

+

all_hidden_states = all_hidden_states + (hidden_states,)

|

| 288 |

+

|

| 289 |

+

image_embedding = self.image_dense(image_ids)

|

| 290 |

+

image_att, _ = self.mha_layer(hidden_states, image_embedding, image_embedding)

|

| 291 |

+

merge = torch.cat([hidden_states, image_att], dim=-1)

|

| 292 |

+

gate = self.sigmoid(self.gate_dense(merge))

|

| 293 |

+

hidden_states = (1 - gate) * hidden_states + gate * image_att

|

| 294 |

+

|

| 295 |

+

if not return_dict:

|

| 296 |

+

return tuple(

|

| 297 |

+

v

|

| 298 |

+

for v in [

|

| 299 |

+

hidden_states,

|

| 300 |

+

present_key_value_states,

|

| 301 |

+

all_hidden_states,

|

| 302 |

+

all_attentions,

|

| 303 |

+

all_cross_attentions,

|

| 304 |

+

]

|

| 305 |

+

if v is not None

|

| 306 |

+

)

|

| 307 |

+

return BaseModelOutputWithPastAndCrossAttentions(

|

| 308 |

+

last_hidden_state=hidden_states,

|

| 309 |

+

past_key_values=present_key_value_states,

|

| 310 |

+

hidden_states=all_hidden_states,

|

| 311 |

+

attentions=all_attentions,

|

| 312 |

+

cross_attentions=all_cross_attentions,

|

| 313 |

+

)

|

| 314 |

+

|

| 315 |

+

|

| 316 |

+

class T5ForMultimodalGeneration(T5ForConditionalGeneration):

|

| 317 |

+

_keys_to_ignore_on_load_missing = [

|

| 318 |

+

r"encoder.embed_tokens.weight",

|

| 319 |

+

r"decoder.embed_tokens.weight",

|

| 320 |

+

r"lm_head.weight",

|

| 321 |

+

]

|

| 322 |

+

_keys_to_ignore_on_load_unexpected = [

|

| 323 |

+

r"decoder.block.0.layer.1.EncDecAttention.relative_attention_bias.weight",

|

| 324 |

+

]

|

| 325 |

+

|

| 326 |

+

def __init__(self, config: T5Config, patch_size):

|

| 327 |

+

super().__init__(config)

|

| 328 |

+

self.model_dim = config.d_model

|

| 329 |

+

|

| 330 |

+

self.shared = nn.Embedding(config.vocab_size, config.d_model)

|

| 331 |

+

|

| 332 |

+

encoder_config = copy.deepcopy(config)

|

| 333 |

+

encoder_config.is_decoder = False

|

| 334 |

+

encoder_config.use_cache = False

|

| 335 |

+

encoder_config.is_encoder_decoder = False

|

| 336 |

+

# self.encoder = T5Stack(encoder_config, self.shared)

|

| 337 |

+

self.encoder = JointEncoder(encoder_config, self.shared, patch_size)

|

| 338 |

+

decoder_config = copy.deepcopy(config)

|

| 339 |

+

decoder_config.is_decoder = True

|

| 340 |

+

decoder_config.is_encoder_decoder = False

|

| 341 |

+

decoder_config.num_layers = config.num_decoder_layers

|

| 342 |

+

self.decoder = T5Stack(decoder_config, self.shared)

|

| 343 |

+

|

| 344 |

+

self.lm_head = nn.Linear(config.d_model, config.vocab_size, bias=False)

|

| 345 |

+

|

| 346 |

+

# Initialize weights and apply final processing

|

| 347 |

+

self.post_init()

|

| 348 |

+

|

| 349 |

+

# Model parallel

|

| 350 |

+

self.model_parallel = False

|

| 351 |

+

self.device_map = None

|

| 352 |

+

|

| 353 |

+

def forward(

|

| 354 |

+

self,

|

| 355 |

+

input_ids: Optional[torch.LongTensor] = None,

|

| 356 |

+

image_ids=None,

|

| 357 |

+

attention_mask: Optional[torch.FloatTensor] = None,

|

| 358 |

+

decoder_input_ids: Optional[torch.LongTensor] = None,

|

| 359 |

+

decoder_attention_mask: Optional[torch.BoolTensor] = None,

|

| 360 |

+

head_mask: Optional[torch.FloatTensor] = None,

|

| 361 |

+

decoder_head_mask: Optional[torch.FloatTensor] = None,

|

| 362 |

+

cross_attn_head_mask: Optional[torch.Tensor] = None,

|

| 363 |

+

encoder_outputs: Optional[Tuple[Tuple[torch.Tensor]]] = None,

|

| 364 |

+

past_key_values: Optional[Tuple[Tuple[torch.Tensor]]] = None,

|

| 365 |

+

inputs_embeds: Optional[torch.FloatTensor] = None,

|

| 366 |

+

decoder_inputs_embeds: Optional[torch.FloatTensor] = None,

|

| 367 |

+

labels: Optional[torch.LongTensor] = None,

|

| 368 |

+

use_cache: Optional[bool] = None,

|

| 369 |

+

output_attentions: Optional[bool] = None,

|

| 370 |

+

output_hidden_states: Optional[bool] = None,

|

| 371 |

+

return_dict: Optional[bool] = None,

|

| 372 |

+

) -> Union[Tuple[torch.FloatTensor], Seq2SeqLMOutput]:

|

| 373 |

+

use_cache = use_cache if use_cache is not None else self.config.use_cache

|

| 374 |

+

return_dict = return_dict if return_dict is not None else self.config.use_return_dict

|

| 375 |

+

|

| 376 |

+

# FutureWarning: head_mask was separated into two input args - head_mask, decoder_head_mask

|

| 377 |

+

if head_mask is not None and decoder_head_mask is None:

|

| 378 |

+

if self.config.num_layers == self.config.num_decoder_layers:

|

| 379 |

+

warnings.warn(__HEAD_MASK_WARNING_MSG, FutureWarning)

|

| 380 |

+

decoder_head_mask = head_mask

|

| 381 |

+

|

| 382 |

+

# Encode if needed (training, first prediction pass)

|

| 383 |

+

if encoder_outputs is None:

|

| 384 |

+

# Convert encoder inputs in embeddings if needed

|

| 385 |

+

encoder_outputs = self.encoder(

|

| 386 |

+

input_ids=input_ids,

|

| 387 |

+

attention_mask=attention_mask,

|

| 388 |

+

inputs_embeds=inputs_embeds,

|

| 389 |

+

image_ids=image_ids,

|

| 390 |

+

head_mask=head_mask,

|

| 391 |

+

output_attentions=output_attentions,

|

| 392 |

+

output_hidden_states=output_hidden_states,

|

| 393 |

+

return_dict=return_dict,

|

| 394 |

+

)

|

| 395 |

+

|

| 396 |

+

elif return_dict and not isinstance(encoder_outputs, BaseModelOutput):

|

| 397 |

+

encoder_outputs = BaseModelOutput(

|

| 398 |

+

last_hidden_state=encoder_outputs[0],

|

| 399 |

+

hidden_states=encoder_outputs[1] if len(encoder_outputs) > 1 else None,

|

| 400 |

+

attentions=encoder_outputs[2] if len(encoder_outputs) > 2 else None,

|

| 401 |

+

)

|

| 402 |

+

|

| 403 |

+

hidden_states = encoder_outputs[0]

|

| 404 |

+

|

| 405 |

+

if self.model_parallel:

|

| 406 |

+

torch.cuda.set_device(self.decoder.first_device)

|

| 407 |

+

|

| 408 |

+

if labels is not None and decoder_input_ids is None and decoder_inputs_embeds is None:

|

| 409 |

+

# get decoder inputs from shifting lm labels to the right

|

| 410 |

+

decoder_input_ids = self._shift_right(labels)

|

| 411 |

+

|

| 412 |

+

# Set device for model parallelism

|

| 413 |

+

if self.model_parallel:

|

| 414 |

+

torch.cuda.set_device(self.decoder.first_device)

|

| 415 |

+

hidden_states = hidden_states.to(self.decoder.first_device)

|

| 416 |

+

if decoder_input_ids is not None:

|

| 417 |

+

decoder_input_ids = decoder_input_ids.to(self.decoder.first_device)

|

| 418 |

+

if attention_mask is not None:

|

| 419 |

+

attention_mask = attention_mask.to(self.decoder.first_device)

|

| 420 |

+

if decoder_attention_mask is not None:

|

| 421 |

+

decoder_attention_mask = decoder_attention_mask.to(self.decoder.first_device)

|

| 422 |

+

|

| 423 |

+

# Decode

|

| 424 |

+

decoder_outputs = self.decoder(

|

| 425 |

+

input_ids=decoder_input_ids,

|

| 426 |

+

attention_mask=decoder_attention_mask,

|

| 427 |

+

inputs_embeds=decoder_inputs_embeds,

|

| 428 |

+

past_key_values=past_key_values,

|

| 429 |

+

encoder_hidden_states=hidden_states,

|

| 430 |

+

encoder_attention_mask=attention_mask,

|

| 431 |

+

head_mask=decoder_head_mask,

|

| 432 |

+

cross_attn_head_mask=cross_attn_head_mask,

|

| 433 |

+

use_cache=use_cache,

|

| 434 |

+

output_attentions=output_attentions,

|

| 435 |

+

output_hidden_states=output_hidden_states,

|

| 436 |

+

return_dict=return_dict,

|

| 437 |

+

)

|

| 438 |

+

|

| 439 |

+

sequence_output = decoder_outputs[0]

|

| 440 |

+

|

| 441 |

+

# Set device for model parallelism

|

| 442 |

+

if self.model_parallel:

|

| 443 |

+

torch.cuda.set_device(self.encoder.first_device)

|

| 444 |

+

self.lm_head = self.lm_head.to(self.encoder.first_device)

|

| 445 |

+

sequence_output = sequence_output.to(self.lm_head.weight.device)

|

| 446 |

+

|

| 447 |

+

if self.config.tie_word_embeddings:

|

| 448 |

+

# Rescale output before projecting on vocab

|

| 449 |

+

# See https://github.com/tensorflow/mesh/blob/fa19d69eafc9a482aff0b59ddd96b025c0cb207d/mesh_tensorflow/transformer/transformer.py#L586

|

| 450 |

+

sequence_output = sequence_output * (self.model_dim**-0.5)

|

| 451 |

+

|

| 452 |

+

lm_logits = self.lm_head(sequence_output)

|

| 453 |

+

|

| 454 |

+

loss = None

|

| 455 |

+

if labels is not None:

|

| 456 |

+

loss_fct = CrossEntropyLoss(ignore_index=-100)

|

| 457 |

+

loss = loss_fct(lm_logits.view(-1, lm_logits.size(-1)), labels.view(-1))

|

| 458 |

+

# TODO(thom): Add z_loss https://github.com/tensorflow/mesh/blob/fa19d69eafc9a482aff0b59ddd96b025c0cb207d/mesh_tensorflow/layers.py#L666

|

| 459 |

+

|

| 460 |

+

if not return_dict:

|

| 461 |

+

output = (lm_logits,) + decoder_outputs[1:] + encoder_outputs

|

| 462 |

+

return ((loss,) + output) if loss is not None else output

|

| 463 |

+

|

| 464 |

+

return Seq2SeqLMOutput(

|

| 465 |

+

loss=loss,

|

| 466 |

+

logits=lm_logits,

|

| 467 |

+

past_key_values=decoder_outputs.past_key_values,

|

| 468 |

+

decoder_hidden_states=decoder_outputs.hidden_states,

|

| 469 |

+

decoder_attentions=decoder_outputs.attentions,

|

| 470 |

+

cross_attentions=decoder_outputs.cross_attentions,

|

| 471 |

+

encoder_last_hidden_state=encoder_outputs.last_hidden_state,

|

| 472 |

+

encoder_hidden_states=encoder_outputs.hidden_states,

|

| 473 |

+

encoder_attentions=encoder_outputs.attentions,

|

| 474 |

+

)

|

| 475 |

+

|

| 476 |

+

def prepare_inputs_for_generation(

|

| 477 |

+

self, decoder_input_ids, past=None, attention_mask=None, use_cache=None, encoder_outputs=None, **kwargs

|

| 478 |

+

):

|

| 479 |

+

# cut decoder_input_ids if past is used

|

| 480 |

+

if past is not None:

|

| 481 |

+

decoder_input_ids = decoder_input_ids[:, -1:]

|

| 482 |

+

|

| 483 |

+

output = {

|

| 484 |

+

"input_ids": None, # encoder_outputs is defined. input_ids not needed

|

| 485 |

+

"encoder_outputs": encoder_outputs,

|

| 486 |

+

"past_key_values": past,

|

| 487 |

+

"decoder_input_ids": decoder_input_ids,

|

| 488 |

+

"attention_mask": attention_mask,

|

| 489 |

+

"use_cache": use_cache, # change this to avoid caching (presumably for debugging)

|

| 490 |

+

}

|

| 491 |

+

|

| 492 |

+

if "image_ids" in kwargs:

|

| 493 |

+

output["image_ids"] = kwargs['image_ids']

|

| 494 |

+

|

| 495 |

+

return output

|

| 496 |

+

|

| 497 |

+

def test_step(self, tokenizer, batch, **kwargs):

|

| 498 |

+

device = next(self.parameters()).device

|

| 499 |

+

input_ids = batch['input_ids'].to(device)

|

| 500 |

+

image_ids = batch['image_ids'].to(device)

|

| 501 |

+

|

| 502 |

+

output = self.generate(

|

| 503 |

+

input_ids=input_ids,

|

| 504 |

+

image_ids=image_ids,

|

| 505 |

+

**kwargs

|

| 506 |

+

)

|

| 507 |

+

|

| 508 |

+

generated_sents = tokenizer.batch_decode(output, skip_special_tokens=True)

|

| 509 |

+

targets = tokenizer.batch_decode(batch['labels'], skip_special_tokens=True)

|

| 510 |

+

|

| 511 |

+

result = {}

|

| 512 |

+

result['preds'] = generated_sents

|

| 513 |

+

result['targets'] = targets

|

| 514 |

+

|

| 515 |

+

return result

|

models/VLE/__init__.py

DELETED

|

@@ -1,11 +0,0 @@

|

|

| 1 |

-

from .modeling_vle import (

|

| 2 |

-

VLEModel,

|

| 3 |

-

VLEForVQA,

|

| 4 |

-

VLEForITM,

|

| 5 |

-

VLEForMLM,

|

| 6 |

-

VLEForPBC

|

| 7 |

-

)

|

| 8 |

-

|

| 9 |

-

from .configuration_vle import VLEConfig

|

| 10 |

-

from .processing_vle import VLEProcessor

|

| 11 |

-

from .pipeline_vle import VLEForVQAPipeline, VLEForITMPipeline, VLEForPBCPipeline

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

models/VLE/__pycache__/__init__.cpython-39.pyc

DELETED

|

Binary file (498 Bytes)

|

|

|

models/VLE/__pycache__/configuration_vle.cpython-39.pyc

DELETED

|

Binary file (4.27 kB)

|

|

|

models/VLE/__pycache__/modeling_vle.cpython-39.pyc

DELETED

|

Binary file (18.5 kB)

|

|

|

models/VLE/__pycache__/pipeline_vle.cpython-39.pyc

DELETED

|

Binary file (6.38 kB)

|

|

|

models/VLE/__pycache__/processing_vle.cpython-39.pyc

DELETED

|

Binary file (6.16 kB)

|

|

|

models/VLE/configuration_vle.py

DELETED

|

@@ -1,143 +0,0 @@

|

|

| 1 |

-

# coding=utf-8

|

| 2 |

-

# Copyright The HuggingFace Inc. team. All rights reserved.

|

| 3 |

-

#

|

| 4 |

-

# Licensed under the Apache License, Version 2.0 (the "License");

|

| 5 |

-

# you may not use this file except in compliance with the License.

|

| 6 |

-

# You may obtain a copy of the License at

|

| 7 |

-

#

|

| 8 |

-

# http://www.apache.org/licenses/LICENSE-2.0

|

| 9 |

-

#

|

| 10 |

-

# Unless required by applicable law or agreed to in writing, software

|

| 11 |

-

# distributed under the License is distributed on an "AS IS" BASIS,

|

| 12 |

-

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 13 |

-

# See the License for the specific language governing permissions and

|

| 14 |

-

# limitations under the License.

|

| 15 |

-

""" VLE model configuration"""

|

| 16 |

-

|

| 17 |

-

import copy

|

| 18 |

-

|

| 19 |

-

from transformers.configuration_utils import PretrainedConfig

|

| 20 |

-

from transformers.utils import logging

|

| 21 |

-

from transformers.models.auto.configuration_auto import AutoConfig

|

| 22 |

-

from transformers.models.clip.configuration_clip import CLIPVisionConfig

|

| 23 |

-

from typing import Union, Dict

|

| 24 |

-

|

| 25 |

-

logger = logging.get_logger(__name__)

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

class VLEConfig(PretrainedConfig):

|

| 29 |

-

r"""

|

| 30 |

-

[`VLEConfig`] is the configuration class to store the configuration of a

|

| 31 |

-

[`VLEModel`]. It is used to instantiate [`VLEModel`] model according to the

|

| 32 |

-

specified arguments, defining the text model and vision model configs.

|

| 33 |

-

|

| 34 |

-

Configuration objects inherit from [`PretrainedConfig`] and can be used to control the model outputs. Read the

|

| 35 |

-

documentation from [`PretrainedConfig`] for more information.