
first commit

- app.py +50 -366

- examples/1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg +0 -0

- examples/1491_jpg.rf.3c658e83538de0fa5a3f4e13d7d85f12.jpg +0 -0

- examples/1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg +0 -0

- examples/194_jpg.rf.3e3dd592d034bb5ee27a978553819f42.jpg +0 -0

- examples/2256_jpg.rf.3afd7903eaf3f3c5aa8da4bbb928bc19.jpg +0 -0

- examples/239_jpg.rf.3dcc0799277fb78a2ab21db7761ccaeb.jpg +0 -0

- examples/2777_jpg.rf.3b60ea7f7e70552e70e41528052018bd.jpg +0 -0

- examples/2860_jpg.rf.3bb87fa4f938af5abfb1e17676ec1dad.jpg +0 -0

- examples/2871_jpg.rf.3b6eadfbb369abc2b3bcb52b406b74f2.jpg +0 -0

- examples/2921_jpg.rf.3b952f91f27a6248091e7601c22323ad.jpg +0 -0

- examples/_annotations.coco.json +388 -0

- examples/tumor1.json +7 -0

- examples/tumor10.json +7 -0

- examples/tumor2.json +7 -0

- examples/tumor3.json +7 -0

- examples/tumor4.json +7 -0

- examples/tumor5.json +7 -0

- examples/tumor6.json +7 -0

- examples/tumor7.json +7 -0

- examples/tumor8.json +7 -0

- examples/tumor9.json +7 -0

- requirements.txt +1 -5

- vae-oid.npz +0 -3

app.py CHANGED

@@ -1,37 +1,12 @@
-"""
-CellVision AI - Intelligent Cell Imaging Analysis
-
-This module provides a Gradio web application for performing intelligent cell imaging analysis
-using the PaliGemma model from Google. The app allows users to segment or detect cells in images
-and generate descriptive text based on the input image and prompt.
-
-Dependencies:
-- gradio
-- transformers
-- torch
-- jax
-- flax
-- spaces
-- PIL
-- numpy
-- huggingface_hub
-
-"""
-
-import os
-import functools
-import re
-
-import PIL.Image
 import gradio as gr
-
-import
-
-import
-
 
-from transformers import PaliGemmaForConditionalGeneration, PaliGemmaProcessor
-from peft import PeftConfig, PeftModel
 from huggingface_hub import login
 import spaces
 

@@ -39,278 +14,38 @@ import spaces
 hf_token = os.getenv("HF_TOKEN")
 login(token=hf_token, add_to_git_credential=True)
 
-
-device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
-
-model_id = "google/paligemma-3b-pt-224"
-adapter_model_id = "dwb2023/paligemma-tumor-detection-ft"
-
-# model_id = "google/paligemma-3b-ft-refcoco-seg-224"
-model = PaliGemmaForConditionalGeneration.from_pretrained(model_id).eval().to(device)
-
-model = PeftModel.from_pretrained(model, adapter_model_id).to(device)
-model = model.merge_and_unload()
-model.save_pretrained("merged_adapters")
-
-processor = PaliGemmaProcessor.from_pretrained(model_id)
-
-@spaces.GPU(duration=120)
-def infer(
-    image: PIL.Image.Image,
-    text: str,
-    max_new_tokens: int
-) -> str:
-    """
-    Perform inference using the PaliGemma model.
-
-    Args:
-        image (PIL.Image.Image): Input image.
-        text (str): Input text prompt.
-        max_new_tokens (int): Maximum number of new tokens to generate.
-
-    Returns:
-        str: Generated text based on the input image and prompt.
-    """
-    inputs = processor(text=text, images=image, return_tensors="pt").to(device)
-    with torch.inference_mode():
-        generated_ids = model.generate(
-            **inputs,
-            max_new_tokens=max_new_tokens,
-            do_sample=False
-        )
-    result = processor.batch_decode(generated_ids, skip_special_tokens=True)
-    return result[0][len(text):].lstrip("\n")
-
-def parse_segmentation(input_image, input_text):
-    """
-    Parse segmentation output tokens into masks and bounding boxes.
-
-    Args:
-        input_image (PIL.Image.Image): Input image.
-        input_text (str): Input text specifying entities to segment or detect.
-
-    Returns:
-        tuple: A tuple containing the annotated image and a boolean indicating if annotations are present.
-    """
-    out = infer(input_image, input_text, max_new_tokens=100)
-    objs = extract_objs(out.lstrip("\n"), input_image.size[0], input_image.size[1], unique_labels=True)
-    labels = set(obj.get('name') for obj in objs if obj.get('name'))
-    color_map = {l: COLORS[i % len(COLORS)] for i, l in enumerate(labels)}
-    highlighted_text = [(obj['content'], obj.get('name')) for obj in objs]
-    annotated_img = (
-        input_image,
-        [
-            (
-                obj['mask'] if obj.get('mask') is not None else obj['xyxy'],
-                obj['name'] or '',
-            )
-            for obj in objs
-            if 'mask' in obj or 'xyxy' in obj
-        ],
-    )
-    has_annotations = bool(annotated_img[1])
-    return annotated_img
-
-
-### Postprocessing Utils for Segmentation Tokens
-
-_MODEL_PATH = 'vae-oid.npz'
-
-_SEGMENT_DETECT_RE = re.compile(
-    r'(.*?)' +
-    r'<loc(\d{4})>' * 4 + r'\s*' +
-    '(?:%s)?' % (r'<seg(\d{3})>' * 16) +
-    r'\s*([^;<>]+)? ?(?:; )?',
-)
-
-COLORS = ['#4285f4', '#db4437', '#f4b400', '#0f9d58', '#e48ef1']
-
-def _get_params(checkpoint):
-    """
-    Convert PyTorch checkpoint to Flax params.
-
-    Args:
-        checkpoint (dict): PyTorch checkpoint dictionary.
-
-    Returns:
-        dict: Flax parameters.
-    """
-    def transp(kernel):
-        return np.transpose(kernel, (2, 3, 1, 0))
-
-    def conv(name):
-        return {
-            'bias': checkpoint[name + '.bias'],
-            'kernel': transp(checkpoint[name + '.weight']),
-        }
-
-    def resblock(name):
-        return {
-            'Conv_0': conv(name + '.0'),
-            'Conv_1': conv(name + '.2'),
-            'Conv_2': conv(name + '.4'),
-        }
-
-    return {
-        '_embeddings': checkpoint['_vq_vae._embedding'],
-        'Conv_0': conv('decoder.0'),
-        'ResBlock_0': resblock('decoder.2.net'),
-        'ResBlock_1': resblock('decoder.3.net'),
-        'ConvTranspose_0': conv('decoder.4'),
-        'ConvTranspose_1': conv('decoder.6'),
-        'ConvTranspose_2': conv('decoder.8'),
-        'ConvTranspose_3': conv('decoder.10'),
-        'Conv_1': conv('decoder.12'),
-    }
-
-
-def _quantized_values_from_codebook_indices(codebook_indices, embeddings):
-    """
-    Get quantized values from codebook indices.
-
-    Args:
-        codebook_indices (jax.numpy.ndarray): Codebook indices.
-        embeddings (jax.numpy.ndarray): Embeddings.
-
-    Returns:
-        jax.numpy.ndarray: Quantized values.
-    """
-    batch_size, num_tokens = codebook_indices.shape
-    assert num_tokens == 16, codebook_indices.shape
-    unused_num_embeddings, embedding_dim = embeddings.shape
-
-    encodings = jnp.take(embeddings, codebook_indices.reshape((-1)), axis=0)
-    encodings = encodings.reshape((batch_size, 4, 4, embedding_dim))
-    return encodings
-
-
-@functools.cache
-def _get_reconstruct_masks():
-    """
-    Reconstruct masks from codebook indices.
-
-    Returns:
-        function: A function that expects indices shaped `[B, 16]` of dtype int32, each
-        ranging from 0 to 127 (inclusive), and returns decoded masks sized
-        `[B, 64, 64, 1]`, of dtype float32, in range [-1, 1].
-    """
-
-    class ResBlock(nn.Module):
-        features: int
-
-        @nn.compact
-        def __call__(self, x):
-            original_x = x
-            x = nn.Conv(features=self.features, kernel_size=(3, 3), padding=1)(x)
-            x = nn.relu(x)
-            x = nn.Conv(features=self.features, kernel_size=(3, 3), padding=1)(x)
-            x = nn.relu(x)
-            x = nn.Conv(features=self.features, kernel_size=(1, 1), padding=0)(x)
-            return x + original_x
-
-    class Decoder(nn.Module):
-        """Upscales quantized vectors to mask."""
-
-        @nn.compact
-        def __call__(self, x):
-            num_res_blocks = 2
-            dim = 128
-            num_upsample_layers = 4
-
-            x = nn.Conv(features=dim, kernel_size=(1, 1), padding=0)(x)
-            x = nn.relu(x)
-
-            for _ in range(num_res_blocks):
-                x = ResBlock(features=dim)(x)
-
-            for _ in range(num_upsample_layers):
-                x = nn.ConvTranspose(
-                    features=dim,
-                    kernel_size=(4, 4),
-                    strides=(2, 2),
-                    padding=2,
-                    transpose_kernel=True,
-                )(x)
-                x = nn.relu(x)
-                dim //= 2
-
-            x = nn.Conv(features=1, kernel_size=(1, 1), padding=0)(x)
-
-            return x
-
-    def reconstruct_masks(codebook_indices):
-        """
-        Reconstruct masks from codebook indices.
-
-        Args:
-            codebook_indices (jax.numpy.ndarray): Codebook indices.
-
-        Returns:
-            jax.numpy.ndarray: Reconstructed masks.
-        """
-        quantized = _quantized_values_from_codebook_indices(
-            codebook_indices, params['_embeddings']
-        )
-        return Decoder().apply({'params': params}, quantized)
-
-    with open(_MODEL_PATH, 'rb') as f:
-        params = _get_params(dict(np.load(f)))
-
-    return jax.jit(reconstruct_masks, backend='cpu')
-
-def extract_objs(text, width, height, unique_labels=False):
     """
-
-
-    Args:
-        text (str): Input text containing "<loc>" and "<seg>" tokens.
-        width (int): Width of the image.
-        height (int): Height of the image.
-        unique_labels (bool, optional): Whether to enforce unique labels. Defaults to False.
 
-
-
     """
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-
-                mask[y1:y2, x1:x2] = np.array(m64.resize([x2 - x1, y2 - y1])) / 255.0
-
-        content = m.group()
-        if before:
-            objs.append(dict(content=before))
-            content = content[len(before):]
-        while unique_labels and name in seen:
-            name = (name or '') + "'"
-        seen.add(name)
-        objs.append(dict(
-            content=content, xyxy=(x1, y1, x2, y2), mask=mask, name=name))
-        text = text[len(before) + len(content):]
-
-    if text:
-        objs.append(dict(content=text))
-
-    return objs
 
 #########
 
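The removed post-processing did three things. `_SEGMENT_DETECT_RE` matched optional leading text, four `<locNNNN>` tokens, an optional run of sixteen `<segNNN>` tokens, and a label. `extract_objs` turned each match into an object with a pixel box, an optional mask, and an (optionally de-duplicated) name. `parse_segmentation` then packed the objects as `(image, [(mask_or_box, label), ...])` for `gr.AnnotatedImage`.

Much of the `extract_objs` body is missing from this page, so the following is only a mask-free sketch of the box path. It rests on two assumptions:
- The loc tokens follow PaliGemma's `(y_min, x_min, y_max, x_max)` order, quantized into 1024 bins.
- Names are stripped of surrounding spaces, which the removed code did not do.

`parse_detections` and `to_annotations` are hypothetical names.

```python
import re

# Same pattern as the removed _SEGMENT_DETECT_RE: optional leading text, four
# <locNNNN> tokens, an optional run of sixteen <segNNN> tokens, then a label.
SEGMENT_DETECT_RE = re.compile(
    r'(.*?)' +
    r'<loc(\d{4})>' * 4 + r'\s*' +
    '(?:%s)?' % (r'<seg(\d{3})>' * 16) +
    r'\s*([^;<>]+)? ?(?:; )?',
)

COLORS = ['#4285f4', '#db4437', '#f4b400', '#0f9d58', '#e48ef1']


def parse_detections(text, width, height, unique_labels=False):
    """Hypothetical, mask-free sketch of extract_objs' box handling.

    Assumes the four loc tokens are (y_min, x_min, y_max, x_max) in 1024 bins.
    Leading free text and <seg> tokens are ignored here.
    """
    objs, seen = [], set()
    for m in SEGMENT_DETECT_RE.finditer(text):
        groups = m.groups()
        # groups[0]: leading text, groups[1:5]: loc tokens,
        # groups[5:21]: seg tokens (None when absent), groups[21]: label.
        y1, x1, y2, x2 = (int(g) / 1024 for g in groups[1:5])
        xyxy = (round(x1 * width), round(y1 * height),
                round(x2 * width), round(y2 * height))
        # strip() is an addition: the label group keeps a trailing space before ";".
        name = groups[21].strip() if groups[21] else None
        # De-duplication rule copied from the removed code: append primes.
        while unique_labels and name in seen:
            name = (name or '') + "'"
        seen.add(name)
        objs.append({'name': name, 'xyxy': xyxy})
    return objs


def to_annotations(objs):
    """Return ([(xyxy, label), ...], color_map) in the shape parse_segmentation built.

    Labels are sorted so colors are stable across runs; the removed code
    enumerated a set, whose iteration order for strings can change per run.
    """
    labels = sorted({o['name'] for o in objs if o['name']})
    color_map = {label: COLORS[i % len(COLORS)] for i, label in enumerate(labels)}
    annotations = [(o['xyxy'], o['name'] or '') for o in objs]
    return annotations, color_map


detections = parse_detections(
    "<loc0000><loc0000><loc0512><loc0512> tumor ; "
    "<loc0512><loc0512><loc1023><loc1023> tumor",
    width=100, height=200, unique_labels=True)
print(detections)
print(to_annotations(detections))
```

The removed `parse_segmentation` also computed `color_map` but never passed it on. `gr.AnnotatedImage` takes a `color_map` argument, which is where such a mapping would normally go.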

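The removed mask decoder ports a PyTorch checkpoint (`vae-oid.npz`, whose codebook is stored under `_vq_vae._embedding`) to Flax. Two pieces of shape bookkeeping explain most of it:
- `transp()` permutes every weight with axes `(2, 3, 1, 0)`.
- `Decoder` grows the 4×4 grid of quantized vectors into the `[B, 64, 64, 1]` masks promised by the `_get_reconstruct_masks` docstring. It uses four stride-2 transposed convolutions and halves the feature count after each.

This is a stdlib-only sketch of that arithmetic. It assumes each transposed convolution exactly doubles height and width (4 · 2⁴ = 64 agrees with the docstring). The function names are hypothetical.

```python
def torch_to_flax_kernel_shape(torch_shape):
    """Apply the removed transp() permutation (2, 3, 1, 0) to a weight shape."""
    # PyTorch Conv2d stores (out_channels, in_channels, kh, kw);
    # Flax nn.Conv expects (kh, kw, in_channels, out_channels).
    return tuple(torch_shape[axis] for axis in (2, 3, 1, 0))


def decoder_shapes(batch=1, grid=4, dim=128, num_upsample_layers=4):
    """List the (B, H, W, C) shape after each stage of the removed Decoder.

    Assumes each stride-2 ConvTranspose exactly doubles H and W.
    """
    size = grid
    # 1x1 Conv to `dim` channels; the two ResBlocks keep this shape.
    shapes = [(batch, size, size, dim)]
    for _ in range(num_upsample_layers):
        size *= 2
        shapes.append((batch, size, size, dim))  # ConvTranspose(features=dim)
        dim //= 2  # the next upsampling layer uses half the features
    shapes.append((batch, size, size, 1))  # final 1x1 Conv to one mask channel
    return shapes


for shape in decoder_shapes():
    print(shape)
```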

@@ -319,90 +54,39 @@ IMAGE_PROMPT="Are these cells healthy or cancerous?"
 
 with gr.Blocks(css="style.css") as demo:
     gr.Markdown(INTRO_TEXT)
-    with gr.Tab("
-        with gr.Row():
-            with gr.Column():
-                image = gr.Image(type="pil")
-                seg_input = gr.Text(label="Entities to Segment/Detect")
-
-            with gr.Column():
-                annotated_image = gr.AnnotatedImage(label="Output")
-
-        seg_btn = gr.Button("Submit")
-        examples = [["./examples/cnmc1.bmp", "segment cancerous cells"],
-                    ["./examples/cnmc2.bmp", "detect cancerous cells"],
-                    ["./examples/cnmc3.bmp", "segment healthy cells"],
-                    ["./examples/cnmc4.bmp", "detect healthy cells"],
-                    ["./examples/cnmc5.bmp", "segment cancerous cells"],
-                    ["./examples/cnmc6.bmp", "detect cancerous cells"],
-                    ["./examples/cnmc7.bmp", "segment healthy cells"],
-                    ["./examples/cnmc8.bmp", "detect healthy cells"],
-                    ["./examples/cnmc9.bmp", "segment cancerous cells"],
-                    ["./examples/cart1.jpg", "segment cells"],
-                    ["./examples/cart1.jpg", "detect cells"],
-                    ["./examples/cart2.jpg", "segment cells"],
-                    ["./examples/cart2.jpg", "detect cells"],
-                    ["./examples/cart3.jpg", "segment cells"],
-                    ["./examples/cart3.jpg", "detect cells"]]
-        gr.Examples(
-            examples=examples,
-            inputs=[image, seg_input],
-        )
-        seg_inputs = [
-            image,
-            seg_input
-        ]
-        seg_outputs = [
-            annotated_image
-        ]
-        seg_btn.click(
-            fn=parse_segmentation,
-            inputs=seg_inputs,
-            outputs=seg_outputs,
-        )
-    with gr.Tab("Text Generation"):
         with gr.Row():
             with gr.Column():
                 image = gr.Image(type="pil")
             with gr.Column():
                 text_input = gr.Text(label="Input Text")
                 text_output = gr.Text(label="Text Output")
-                tokens = gr.Slider(
-                    label="Max New Tokens",
-                    info="Set to larger for longer generation.",
-                    minimum=10,
-                    maximum=100,
-                    value=50,
-                    step=10,
-                )
         chat_btn = gr.Button()
 
         chat_inputs = [
             image,
-
-            tokens
         ]
         chat_outputs = [
             text_output
         ]
         chat_btn.click(
-            fn=
             inputs=chat_inputs,
             outputs=chat_outputs,
         )
 
-        examples = [["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-                    ["./examples/
-
-                    ["./examples/cart3.jpg", IMAGE_PROMPT]]
         gr.Examples(
             examples=examples,
             inputs=chat_inputs,

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

import gradio as gr

|

| 2 |

+

from typing import *

|

| 3 |

+

from pillow_heif import register_heif_opener

|

| 4 |

+

register_heif_opener()

|

| 5 |

+

import vision_agent as va

|

| 6 |

+

from vision_agent.tools import register_tool

|

| 7 |

+

|

| 8 |

+

from vision_agent.tools import load_image, owl_v2, overlay_bounding_boxes, save_image

|

| 9 |

|

|

|

|

|

|

|

| 10 |

from huggingface_hub import login

|

| 11 |

import spaces

|

| 12 |

|

|

|

|

| 14 |

hf_token = os.getenv("HF_TOKEN")

|

| 15 |

login(token=hf_token, add_to_git_credential=True)

|

| 16 |

|

| 17 |

+

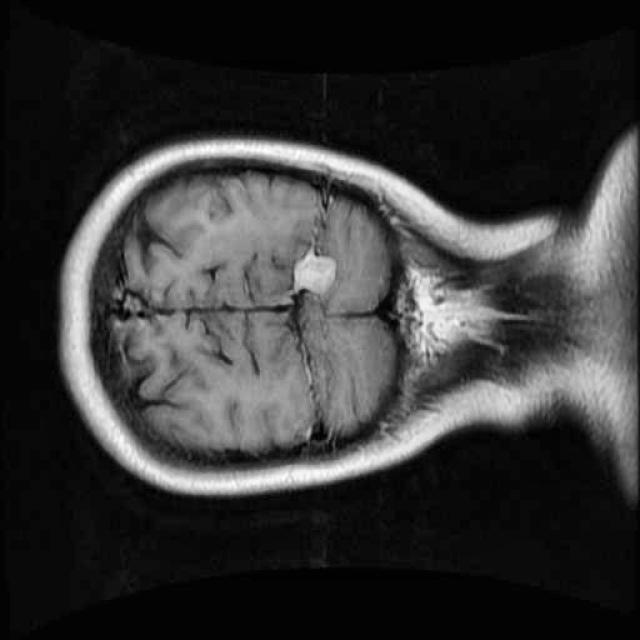

def detect_brain_tumor(image_path: str, output_path: str, debug: bool = False) -> None:

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 18 |

"""

|

| 19 |

+

Detects a brain tumor in the given image and saves the image with bounding boxes.

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 20 |

|

| 21 |

+

Parameters:

|

| 22 |

+

image_path (str): The path to the input image.

|

| 23 |

+

output_path (str): The path to save the output image with bounding boxes.

|

| 24 |

+

debug (bool): Flag to enable logging for debugging purposes.

|

| 25 |

"""

|

| 26 |

+

# Step 1: Load the image

|

| 27 |

+

image = load_image(image_path)

|

| 28 |

+

if debug:

|

| 29 |

+

print(f"Image loaded from {image_path}")

|

| 30 |

+

|

| 31 |

+

# Step 2: Detect brain tumor using owl_v2

|

| 32 |

+

prompt = "detect brain tumor"

|

| 33 |

+

detections = owl_v2(prompt, image)

|

| 34 |

+

if debug:

|

| 35 |

+

print(f"Detections: {detections}")

|

| 36 |

+

|

| 37 |

+

# Step 3: Overlay bounding boxes on the image

|

| 38 |

+

image_with_bboxes = overlay_bounding_boxes(image, detections)

|

| 39 |

+

if debug:

|

| 40 |

+

print("Bounding boxes overlaid on the image")

|

| 41 |

+

|

| 42 |

+

# Step 4: Save the resulting image

|

| 43 |

+

save_image(image_with_bboxes, output_path)

|

| 44 |

+

if debug:

|

| 45 |

+

print(f"Image saved to {output_path}")

|

| 46 |

+

|

| 47 |

+

# Example usage (uncomment to run):

|

| 48 |

+

# detect_brain_tumor("/content/drive/MyDrive/kaggle/datasets/brain-tumor-image-dataset-semantic-segmentation_old/train_categories/1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg", "/content/drive/MyDrive/kaggle/datasets/brain-tumor-image-dataset-semantic-segmentation_old/output/1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg", debug=True)

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 49 |

|

| 50 |

#########

|

| 51 |

|

|

|

|

| 54 |

|

| 55 |

with gr.Blocks(css="style.css") as demo:

|

| 56 |

gr.Markdown(INTRO_TEXT)

|

| 57 |

+

with gr.Tab("Agentic Detection"):

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 58 |

with gr.Row():

|

| 59 |

with gr.Column():

|

| 60 |

image = gr.Image(type="pil")

|

| 61 |

with gr.Column():

|

| 62 |

text_input = gr.Text(label="Input Text")

|

| 63 |

text_output = gr.Text(label="Text Output")

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 64 |

chat_btn = gr.Button()

|

| 65 |

|

| 66 |

chat_inputs = [

|

| 67 |

image,

|

| 68 |

+

"./output/tmp.jpg",

|

|

|

|

| 69 |

]

|

| 70 |

chat_outputs = [

|

| 71 |

text_output

|

| 72 |

]

|

| 73 |

chat_btn.click(

|

| 74 |

+

fn=detect_brain_tumor,

|

| 75 |

inputs=chat_inputs,

|

| 76 |

outputs=chat_outputs,

|

| 77 |

)

|

| 78 |

|

| 79 |

+

examples = [["./examples/194_jpg.rf.3e3dd592d034bb5ee27a978553819f42.jpg"],

|

| 80 |

+

["./examples/239_jpg.rf.3dcc0799277fb78a2ab21db7761ccaeb.jpg"],

|

| 81 |

+

["./examples/1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg"],

|

| 82 |

+

["./examples/1491_jpg.rf.3c658e83538de0fa5a3f4e13d7d85f12.jpg"],

|

| 83 |

+

["./examples/1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg"],

|

| 84 |

+

["./examples/2256_jpg.rf.3afd7903eaf3f3c5aa8da4bbb928bc19.jpg"],

|

| 85 |

+

["./examples/1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg"],

|

| 86 |

+

["./examples/1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg"],

|

| 87 |

+

["./examples/2871_jpg.rf.3b6eadfbb369abc2b3bcb52b406b74f2.jpg"],

|

| 88 |

+

["./examples/2921_jpg.rf.3b952f91f27a6248091e7601c22323ad.jpg"],

|

| 89 |

+

]

|

|

|

|

| 90 |

gr.Examples(

|

| 91 |

examples=examples,

|

| 92 |

inputs=chat_inputs,

|
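The new "Agentic Detection" tab has three mismatches:
- `chat_inputs` mixes a component (`image`) with the literal string `"./output/tmp.jpg"`. Gradio event listeners pass the current values of the components listed in `inputs` to `fn`, in order.
- `detect_brain_tumor` takes a file path, but `gr.Image(type="pil")` delivers a PIL image.
- `detect_brain_tumor` returns `None`, so the `gr.Text` output stays empty.

A minimal sketch of one way to reconcile these binds the fixed output path in Python and returns it. It assumes the input is switched to `gr.Image(type="filepath")`, so the callback receives a path string, and that an image output component displays the saved file. `make_detection_handler` is a hypothetical helper, and the detector is injected so the sketch runs without `vision_agent`.

```python
from typing import Callable


def make_detection_handler(
    detect_fn: Callable[[str, str], None],
    output_path: str = "./output/tmp.jpg",
) -> Callable[[str], str]:
    """Hypothetical helper: bind a fixed output path for a one-input Gradio callback.

    detect_fn is expected to behave like detect_brain_tumor(image_path, output_path).
    """
    def handler(image_path: str) -> str:
        detect_fn(image_path, output_path)
        # Return the saved file so an image output component can display it.
        return output_path

    return handler


# Stand-in detector so the sketch runs without vision_agent installed.
def fake_detect(image_path: str, output_path: str) -> None:
    print(f"would detect in {image_path} and save to {output_path}")


handler = make_detection_handler(fake_detect)
print(handler("./examples/194_jpg.rf.3e3dd592d034bb5ee27a978553819f42.jpg"))
```

In the Blocks layout, this would pair with `fn=make_detection_handler(detect_brain_tumor)` and `inputs=[image]`. A `gr.Image` output would replace `text_output`. The existing one-column `examples` lists already match a single input.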

examples/1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg ADDED
examples/1491_jpg.rf.3c658e83538de0fa5a3f4e13d7d85f12.jpg ADDED
examples/1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg ADDED
examples/194_jpg.rf.3e3dd592d034bb5ee27a978553819f42.jpg ADDED
examples/2256_jpg.rf.3afd7903eaf3f3c5aa8da4bbb928bc19.jpg ADDED
examples/239_jpg.rf.3dcc0799277fb78a2ab21db7761ccaeb.jpg ADDED
examples/2777_jpg.rf.3b60ea7f7e70552e70e41528052018bd.jpg ADDED
examples/2860_jpg.rf.3bb87fa4f938af5abfb1e17676ec1dad.jpg ADDED
examples/2871_jpg.rf.3b6eadfbb369abc2b3bcb52b406b74f2.jpg ADDED
examples/2921_jpg.rf.3b952f91f27a6248091e7601c22323ad.jpg ADDED
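The commit also adds `examples/_annotations.coco.json`, which its `info` block identifies as a Roboflow export under CC BY 4.0. In the COCO format, `bbox` is `[x, y, width, height]` in pixels. For these axis-aligned rectangles, `area` equals `width * height` (168.75 × 162.5 = 27421.875 for annotation 0), up to the three-decimal rounding seen in other entries (34770.313). Each `segmentation` polygon traces the same rectangle, offset by a fraction of a pixel.

The stdlib sketch below indexes the boxes by image file name in `(x1, y1, x2, y2)` form, the corner order the removed `extract_objs` used for `xyxy`. The function names are hypothetical. `load_boxes` assumes the file sits under `examples/` relative to the working directory.

```python
import json


def coco_bbox_to_xyxy(bbox):
    """Convert a COCO [x, y, width, height] box to (x1, y1, x2, y2)."""
    x, y, w, h = bbox
    return (x, y, x + w, y + h)


def boxes_by_file(coco):
    """Map each image file_name to the xyxy boxes annotated on it."""
    names = {img["id"]: img["file_name"] for img in coco["images"]}
    boxes = {}
    for ann in coco["annotations"]:
        file_name = names[ann["image_id"]]
        boxes.setdefault(file_name, []).append(coco_bbox_to_xyxy(ann["bbox"]))
    return boxes


def load_boxes(path="examples/_annotations.coco.json"):
    """Read the annotation file (path assumed relative to the Space root)."""
    with open(path) as f:
        return boxes_by_file(json.load(f))


# Values copied from the first image and annotation in the file.
sample = {
    "images": [{"id": 0, "file_name": "2256_jpg.rf.3afd7903eaf3f3c5aa8da4bbb928bc19.jpg"}],
    "annotations": [{"image_id": 0, "bbox": [145, 239, 168.75, 162.5], "area": 27421.875}],
}
print(boxes_by_file(sample))
# For these rectangular boxes, area == width * height.
first = sample["annotations"][0]
print(first["bbox"][2] * first["bbox"][3] == first["area"])
```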

examples/_annotations.coco.json

ADDED

|

@@ -0,0 +1,388 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"info": {

|

| 3 |

+

"year": "2023",

|

| 4 |

+

"version": "1",

|

| 5 |

+

"description": "Exported from roboflow.com",

|

| 6 |

+

"contributor": "",

|

| 7 |

+

"url": "https://public.roboflow.com/object-detection/undefined",

|

| 8 |

+

"date_created": "2023-08-19T04:37:54+00:00"

|

| 9 |

+

},

|

| 10 |

+

"licenses": [

|

| 11 |

+

{

|

| 12 |

+

"id": 1,

|

| 13 |

+

"url": "https://creativecommons.org/licenses/by/4.0/",

|

| 14 |

+

"name": "CC BY 4.0"

|

| 15 |

+

}

|

| 16 |

+

],

|

| 17 |

+

"categories": [

|

| 18 |

+

{

|

| 19 |

+

"id": 0,

|

| 20 |

+

"name": "Tumor",

|

| 21 |

+

"supercategory": "none"

|

| 22 |

+

},

|

| 23 |

+

{

|

| 24 |

+

"id": 1,

|

| 25 |

+

"name": "0",

|

| 26 |

+

"supercategory": "Tumor"

|

| 27 |

+

},

|

| 28 |

+

{

|

| 29 |

+

"id": 2,

|

| 30 |

+

"name": "1",

|

| 31 |

+

"supercategory": "Tumor"

|

| 32 |

+

}

|

| 33 |

+

],

|

| 34 |

+

"images": [

|

| 35 |

+

{

|

| 36 |

+

"id": 0,

|

| 37 |

+

"license": 1,

|

| 38 |

+

"file_name": "2256_jpg.rf.3afd7903eaf3f3c5aa8da4bbb928bc19.jpg",

|

| 39 |

+

"height": 640,

|

| 40 |

+

"width": 640,

|

| 41 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 42 |

+

},

|

| 43 |

+

{

|

| 44 |

+

"id": 1,

|

| 45 |

+

"license": 1,

|

| 46 |

+

"file_name": "2871_jpg.rf.3b6eadfbb369abc2b3bcb52b406b74f2.jpg",

|

| 47 |

+

"height": 640,

|

| 48 |

+

"width": 640,

|

| 49 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 50 |

+

},

|

| 51 |

+

{

|

| 52 |

+

"id": 2,

|

| 53 |

+

"license": 1,

|

| 54 |

+

"file_name": "2921_jpg.rf.3b952f91f27a6248091e7601c22323ad.jpg",

|

| 55 |

+

"height": 640,

|

| 56 |

+

"width": 640,

|

| 57 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 58 |

+

},

|

| 59 |

+

{

|

| 60 |

+

"id": 3,

|

| 61 |

+

"license": 1,

|

| 62 |

+

"file_name": "2777_jpg.rf.3b60ea7f7e70552e70e41528052018bd.jpg",

|

| 63 |

+

"height": 640,

|

| 64 |

+

"width": 640,

|

| 65 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 66 |

+

},

|

| 67 |

+

{

|

| 68 |

+

"id": 4,

|

| 69 |

+

"license": 1,

|

| 70 |

+

"file_name": "2860_jpg.rf.3bb87fa4f938af5abfb1e17676ec1dad.jpg",

|

| 71 |

+

"height": 640,

|

| 72 |

+

"width": 640,

|

| 73 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 74 |

+

},

|

| 75 |

+

{

|

| 76 |

+

"id": 7,

|

| 77 |

+

"license": 1,

|

| 78 |

+

"file_name": "1491_jpg.rf.3c658e83538de0fa5a3f4e13d7d85f12.jpg",

|

| 79 |

+

"height": 640,

|

| 80 |

+

"width": 640,

|

| 81 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 82 |

+

},

|

| 83 |

+

{

|

| 84 |

+

"id": 8,

|

| 85 |

+

"license": 1,

|

| 86 |

+

"file_name": "1385_jpg.rf.3c67cb92e2922dba0e6dba86f69df40b.jpg",

|

| 87 |

+

"height": 640,

|

| 88 |

+

"width": 640,

|

| 89 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 90 |

+

},

|

| 91 |

+

{

|

| 92 |

+

"id": 11,

|

| 93 |

+

"license": 1,

|

| 94 |

+

"file_name": "1550_jpg.rf.3d067be9580ec32dbee5a89c675d8459.jpg",

|

| 95 |

+

"height": 640,

|

| 96 |

+

"width": 640,

|

| 97 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 98 |

+

},

|

| 99 |

+

{

|

| 100 |

+

"id": 16,

|

| 101 |

+

"license": 1,

|

| 102 |

+

"file_name": "239_jpg.rf.3dcc0799277fb78a2ab21db7761ccaeb.jpg",

|

| 103 |

+

"height": 640,

|

| 104 |

+

"width": 640,

|

| 105 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 106 |

+

},

|

| 107 |

+

{

|

| 108 |

+

"id": 17,

|

| 109 |

+

"license": 1,

|

| 110 |

+

"file_name": "194_jpg.rf.3e3dd592d034bb5ee27a978553819f42.jpg",

|

| 111 |

+

"height": 640,

|

| 112 |

+

"width": 640,

|

| 113 |

+

"date_captured": "2023-08-19T04:37:54+00:00"

|

| 114 |

+

}

|

| 115 |

+

],

+  "annotations": [
+    {
+      "id": 0,
+      "image_id": 0,
+      "category_id": 1,
+      "bbox": [
+        145,
+        239,
+        168.75,
+        162.5
+      ],
+      "area": 27421.875,
+      "segmentation": [
+        [
+          313.75,
+          238.75,
+          145,
+          238.75,
+          145,
+          401.25,
+          313.75,
+          401.25,
+          313.75,
+          238.75
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 1,
+      "image_id": 1,
+      "category_id": 1,
+      "bbox": [
+        194,
+        176,
+        148.75,
+        233.75
+      ],
+      "area": 34770.313,
+      "segmentation": [
+        [
+          342.5,
+          176.25,
+          193.75,
+          176.25,
+          193.75,
+          410,
+          342.5,
+          410,
+          342.5,
+          176.25
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 2,
+      "image_id": 2,
+      "category_id": 1,
+      "bbox": [
+        133,
+        173,
+        162.5,
+        185
+      ],
+      "area": 30062.5,
+      "segmentation": [
+        [
+          295,
+          172.5,
+          132.5,
+          172.5,
+          132.5,
+          357.5,
+          295,
+          357.5,
+          295,
+          172.5
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 3,
+      "image_id": 3,
+      "category_id": 1,
+      "bbox": [
+        245,
+        358,
+        138.75,
+        166.25
+      ],
+      "area": 23067.188,
+      "segmentation": [
+        [
+          383.75,
+          357.5,
+          245,
+          357.5,
+          245,
+          523.75,
+          383.75,
+          523.75,
+          383.75,
+          357.5
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 4,
+      "image_id": 4,
+      "category_id": 1,
+      "bbox": [
+        80,
+        189,
+        112.5,
+        132.5
+      ],
+      "area": 14906.25,
+      "segmentation": [
+        [
+          192.5,
+          188.75,
+          80,
+          188.75,
+          80,
+          321.25,
+          192.5,
+          321.25,
+          192.5,
+          188.75
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 7,
+      "image_id": 7,
+      "category_id": 2,
+      "bbox": [
+        350,
+        288,
+        42.5,
+        52.5
+      ],
+      "area": 2231.25,
+      "segmentation": [
+        [
+          392.5,
+          287.5,
+          350,
+          287.5,
+          350,
+          340,
+          392.5,
+          340,
+          392.5,
+          287.5
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 8,
+      "image_id": 8,
+      "category_id": 2,
+      "bbox": [
+        239,
+        250,
+        61.25,
+        87.5
+      ],
+      "area": 5359.375,
+      "segmentation": [
+        [
+          300,
+          250,
+          238.75,
+          250,
+          238.75,
+          337.5,
+          300,
+          337.5,
+          300,
+          250
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 11,
+      "image_id": 11,
+      "category_id": 2,
+      "bbox": [
+        283,
+        270,
+        111.25,
+        102.5
+      ],
+      "area": 11403.125,
+      "segmentation": [
+        [
+          393.75,
+          270,
+          282.5,
+          270,
+          282.5,
+          372.5,
+          393.75,
+          372.5,
+          393.75,
+          270
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 16,
+      "image_id": 16,
+      "category_id": 2,
+      "bbox": [
+        279,
+        236,
+        78.75,
+        76.25
+      ],
+      "area": 6004.688,
+      "segmentation": [
+        [
+          357.5,
+          236.25,
+          278.75,
+          236.25,
+          278.75,
+          312.5,
+          357.5,
+          312.5,
+          357.5,
+          236.25
+        ]
+      ],
+      "iscrowd": 0
+    },
+    {
+      "id": 17,
+      "image_id": 17,
+      "category_id": 2,
+      "bbox": [
+        286,
+        160,
+        188.75,
+        166.25
+      ],
+      "area": 31379.688,
+      "segmentation": [
+        [
+          475,
+          160,
+          286.25,
+          160,
+          286.25,
+          326.25,
+          475,
+          326.25,
+          475,
+          160
+        ]
+      ],
+      "iscrowd": 0
+    }
+  ]
+}
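For readers checking the annotation file above: in the COCO format, `bbox` is `[x, y, width, height]` in pixels and each `segmentation` entry is a flat polygon `[x0, y0, x1, y1, ...]`. Every polygon in this file appears to be the four corners of its box, closed by repeating the first point, so the polygon's shoelace area should match the stored `area`. The polygon and `bbox` differ by up to 0.25 px (for example `239` versus `238.75`), which looks like rounding in the export. The Python sketch below is not part of this commit; it checks one record copied from the diff using only the standard library, and the helper names are illustrative.

```python
import json

# Annotation "id": 0, copied from examples/_annotations.coco.json in this diff.
ANNOTATION = json.loads("""
{
  "id": 0,
  "image_id": 0,
  "category_id": 1,
  "bbox": [145, 239, 168.75, 162.5],
  "area": 27421.875,
  "segmentation": [[313.75, 238.75, 145, 238.75, 145, 401.25,
                    313.75, 401.25, 313.75, 238.75]],
  "iscrowd": 0
}
""")


def polygon_points(flat):
    """Split a flat COCO polygon [x0, y0, x1, y1, ...] into (x, y) pairs."""
    return list(zip(flat[0::2], flat[1::2]))


def shoelace_area(points):
    """Area enclosed by a simple polygon, via the shoelace formula."""
    twice_area = 0.0
    # Pair each vertex with the next one, wrapping the last back to the first.
    for (x0, y0), (x1, y1) in zip(points, points[1:] + points[:1]):
        twice_area += x0 * y1 - x1 * y0
    return abs(twice_area) / 2.0


def polygon_bbox(points):
    """Axis-aligned [x, y, width, height] box that encloses the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]


points = polygon_points(ANNOTATION["segmentation"][0])
area = shoelace_area(points)
box = polygon_bbox(points)

print("polygon area:", area, "stored area:", ANNOTATION["area"])
print("polygon bbox:", box, "stored bbox:", ANNOTATION["bbox"])

# Allow half a pixel of slack for rounding in the exported bbox.
assert abs(area - ANNOTATION["area"]) < 1e-3
assert all(abs(a - b) <= 0.5 for a, b in zip(box, ANNOTATION["bbox"]))
```

The check derives the box from the polygon rather than trusting `bbox`, since the file stores the two independently; looping the same calls over the whole `annotations` list would audit every record.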

examples/tumor1.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor1",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor10.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor10",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor2.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor2",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor3.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor3",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor4.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor4",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor5.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor5",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor6.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor6",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor7.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor7",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor8.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor8",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

examples/tumor9.json

ADDED

@@ -0,0 +1,7 @@
+{
+  "name": "tumor9",
+  "comment": "",
+  "model": "",
+  "prompt": "detect cell tumor",
+  "license": ""
+}

requirements.txt

CHANGED

@@ -1,6 +1,2 @@
-git+https://github.com/huggingface/transformers.git
-torch
-jax
-flax
 spaces
-
+vision-agent

vae-oid.npz

DELETED

@@ -1,3 +0,0 @@
-version https://git-lfs.github.com/spec/v1
-oid sha256:5586010257b8536dddefab65e7755077f21d5672d5674dacf911f73ae95a4447
-size 8479556