Eric Michael Martinez committed on

Commit a05d6e8 • 1 Parent(s): c22fd38

update

- 02_prototyping_a_basic_chatbot_ui.ipynb +36 -9

- 03_using_the_openai_api.ipynb +244 -76

- 04_creating_a_functional_conversational_chatbot.ipynb +1 -9

- 05_deploying_a_chatbot_to_the_web.ipynb +472 -45

- 06_designing_effective_prompts.ipynb +334 -47

- 07_software_engineering_applied_to_llms.ipynb +402 -93

- images/access-tokens.png +0 -0

- images/add-secret.png +0 -0

- images/copy-token.png +0 -0

- images/dropdown.png +0 -0

- images/new-access-token.png +0 -0

- images/profile-settings.png +0 -0

- images/repo.png +0 -0

- images/restart.png +0 -0

- images/secrets.png +0 -0

- images/signup.png +0 -0

- images/space-settings.png +0 -0

02_prototyping_a_basic_chatbot_ui.ipynb

CHANGED

|

@@ -87,14 +87,37 @@

|

|

| 87 |

},

|

| 88 |

{

|

| 89 |

"cell_type": "code",

|

| 90 |

-

"execution_count":

|

| 91 |

"id": "0b28a4e7",

|

| 92 |

"metadata": {

|

| 93 |

"slideshow": {

|

| 94 |

"slide_type": "fragment"

|

| 95 |

}

|

| 96 |

},

|

| 97 |

-

"outputs": [

|

| 98 |

"source": [

|

| 99 |

"import gradio as gr\n",

|

| 100 |

" \n",

|

|

@@ -102,11 +125,11 @@

|

|

| 102 |

" return f\"{first_name} {last_name} is so cool\"\n",

|

| 103 |

" \n",

|

| 104 |

"with gr.Blocks() as app:\n",

|

| 105 |

-

"

|

| 106 |

-

"

|

| 107 |

-

"

|

| 108 |

-

"

|

| 109 |

-

"

|

| 110 |

" app.launch(share=True)\n"

|

| 111 |

]

|

| 112 |

},

|

|

@@ -227,14 +250,18 @@

|

|

| 227 |

{

|

| 228 |

"cell_type": "markdown",

|

| 229 |

"id": "3b660170",

|

| 230 |

-

"metadata": {

|

| 231 |

"source": [

|

| 232 |

"Sweet! That wasn't too bad, not much code to make a cool little UI!"

|

| 233 |

]

|

| 234 |

}

|

| 235 |

],

|

| 236 |

"metadata": {

|

| 237 |

-

"celltoolbar": "

|

| 238 |

"kernelspec": {

|

| 239 |

"display_name": "Python 3 (ipykernel)",

|

| 240 |

"language": "python",

|

|

|

|

| 87 |

},

|

| 88 |

{

|

| 89 |

"cell_type": "code",

|

| 90 |

+

"execution_count": 3,

|

| 91 |

"id": "0b28a4e7",

|

| 92 |

"metadata": {

|

| 93 |

"slideshow": {

|

| 94 |

"slide_type": "fragment"

|

| 95 |

}

|

| 96 |

},

|

| 97 |

+

"outputs": [

|

| 98 |

+

{

|

| 99 |

+

"name": "stdout",

|

| 100 |

+

"output_type": "stream",

|

| 101 |

+

"text": [

|

| 102 |

+

"Running on local URL: http://127.0.0.1:7882\n",

|

| 103 |

+

"Running on public URL: https://d807b00726b10425a4.gradio.live\n",

|

| 104 |

+

"\n",

|

| 105 |

+

"This share link expires in 72 hours. For free permanent hosting and GPU upgrades (NEW!), check out Spaces: https://huggingface.co/spaces\n"

|

| 106 |

+

]

|

| 107 |

+

},

|

| 108 |

+

{

|

| 109 |

+

"data": {

|

| 110 |

+

"text/html": [

|

| 111 |

+

"<div><iframe src=\"https://d807b00726b10425a4.gradio.live\" width=\"100%\" height=\"500\" allow=\"autoplay; camera; microphone; clipboard-read; clipboard-write;\" frameborder=\"0\" allowfullscreen></iframe></div>"

|

| 112 |

+

],

|

| 113 |

+

"text/plain": [

|

| 114 |

+

"<IPython.core.display.HTML object>"

|

| 115 |

+

]

|

| 116 |

+

},

|

| 117 |

+

"metadata": {},

|

| 118 |

+

"output_type": "display_data"

|

| 119 |

+

}

|

| 120 |

+

],

|

| 121 |

"source": [

|

| 122 |

"import gradio as gr\n",

|

| 123 |

" \n",

|

|

|

|

| 125 |

" return f\"{first_name} {last_name} is so cool\"\n",

|

| 126 |

" \n",

|

| 127 |

"with gr.Blocks() as app:\n",

|

| 128 |

+

" first_name_box = gr.Textbox(label=\"First Name\")\n",

|

| 129 |

+

" last_name_box = gr.Textbox(label=\"Last Name\")\n",

|

| 130 |

+

" output_box = gr.Textbox(label=\"Output\", interactive=False)\n",

|

| 131 |

+

" btn = gr.Button(value =\"Send\")\n",

|

| 132 |

+

" btn.click(do_something_cool, inputs = [first_name_box, last_name_box], outputs = [output_box])\n",

|

| 133 |

" app.launch(share=True)\n"

|

| 134 |

]

|

| 135 |

},

|

|

|

|

| 250 |

{

|

| 251 |

"cell_type": "markdown",

|

| 252 |

"id": "3b660170",

|

| 253 |

+

"metadata": {

|

| 254 |

+

"slideshow": {

|

| 255 |

+

"slide_type": "slide"

|

| 256 |

+

}

|

| 257 |

+

},

|

| 258 |

"source": [

|

| 259 |

"Sweet! That wasn't too bad, not much code to make a cool little UI!"

|

| 260 |

]

|

| 261 |

}

|

| 262 |

],

|

| 263 |

"metadata": {

|

| 264 |

+

"celltoolbar": "Slideshow",

|

| 265 |

"kernelspec": {

|

| 266 |

"display_name": "Python 3 (ipykernel)",

|

| 267 |

"language": "python",

|

03_using_the_openai_api.ipynb

CHANGED

|

@@ -44,32 +44,56 @@

|

|

| 44 |

}

|

| 45 |

},

|

| 46 |

"source": [

|

| 47 |

-

"## Managing Application Secrets"

|

| 48 |

]

|

| 49 |

},

|

| 50 |

{

|

| 51 |

"cell_type": "markdown",

|

| 52 |

-

"id": "

|

| 53 |

-

"metadata": {

|

| 54 |

"source": [

|

| 55 |

-

"

|

| 56 |

-

"\n",

|

| 57 |

-

"In software development, secrets are often used to authenticate users, grant access to resources, or encrypt/decrypt data. Mismanaging or exposing secrets can lead to severe security breaches and data leaks.\n",

|

| 58 |

-

"\n",

|

| 59 |

-

"Common examples of secrets\n",

|

| 60 |

"* API keys\n",

|

| 61 |

"* Database credentials\n",

|

| 62 |

"* SSH keys\n",

|

| 63 |

"* OAuth access tokens\n",

|

| 64 |

-

"* Encryption/decryption keys

|

| 65 |

-

|

| 66 |

-

|

| 67 |

"* Storing secrets in plain text\n",

|

| 68 |

"* Hardcoding secrets in source code\n",

|

| 69 |

"* Sharing secrets through unsecured channels (e.g., email or messaging apps)\n",

|

| 70 |

"* Using the same secret for multiple purposes\n",

|

| 71 |

-

"* Not rotating or updating secrets regularly

|

| 72 |

-

|

| 73 |

"How attackers might obtain secrets\n",

|

| 74 |

"* Exploiting vulnerabilities in software or infrastructure\n",

|

| 75 |

"* Intercepting unencrypted communications\n",

|

|

@@ -91,7 +115,7 @@

|

|

| 91 |

"* Limit access to secrets on a need-to-know basis\n",

|

| 92 |

"* Implement proper auditing and monitoring of secret usage\n",

|

| 93 |

"\n",

|

| 94 |

-

"

|

| 95 |

"* Many cloud providers offer secret management services (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager) that securely store, manage, and rotate secrets.\n",

|

| 96 |

"* These services often provide access control, encryption, and auditing capabilities.\n",

|

| 97 |

"* Integrating cloud secret management services with your application can help secure sensitive information and reduce the risk of exposure.\n",

|

|

@@ -106,7 +130,11 @@

|

|

| 106 |

{

|

| 107 |

"cell_type": "markdown",

|

| 108 |

"id": "ef366b65",

|

| 109 |

-

"metadata": {

|

| 110 |

"source": [

|

| 111 |

"#### Using the `python-dotenv` library to protect secrets in Python"

|

| 112 |

]

|

|

@@ -114,27 +142,23 @@

|

|

| 114 |

{

|

| 115 |

"cell_type": "markdown",

|

| 116 |

"id": "dc39df10",

|

| 117 |

-

"metadata": {

|

| 118 |

"source": [

|

| 119 |

"`python-dotenv` is a Python library that loads environment variables from a `.env` file. It helps keep secrets out of source code and makes them easier to manage and update."

|

| 120 |

]

|

| 121 |

},

|

| 122 |

-

{

|

| 123 |

-

"cell_type": "code",

|

| 124 |

-

"execution_count": null,

|

| 125 |

-

"id": "88252d32",

|

| 126 |

-

"metadata": {},

|

| 127 |

-

"outputs": [],

|

| 128 |

-

"source": [

|

| 129 |

-

" 3. Add secrets as key-value pairs in the `.env` file\n",

|

| 130 |

-

" 4. Load secrets in your Python code using the `load_dotenv()` function\n",

|

| 131 |

-

" 5. Access secrets using `os.environ`"

|

| 132 |

-

]

|

| 133 |

-

},

|

| 134 |

{

|

| 135 |

"cell_type": "markdown",

|

| 136 |

"id": "ae0500ea",

|

| 137 |

-

"metadata": {

|

| 138 |

"source": [

|

| 139 |

"Install the `python-dotenv` library"

|

| 140 |

]

|

|

@@ -143,7 +167,11 @@

|

|

| 143 |

"cell_type": "code",

|

| 144 |

"execution_count": 45,

|

| 145 |

"id": "1212333f",

|

| 146 |

-

"metadata": {

|

| 147 |

"outputs": [],

|

| 148 |

"source": [

|

| 149 |

"!pip -q install --upgrade python-dotenv"

|

|

@@ -152,7 +180,11 @@

|

|

| 152 |

{

|

| 153 |

"cell_type": "markdown",

|

| 154 |

"id": "faecedf0",

|

| 155 |

-

"metadata": {

|

| 156 |

"source": [

|

| 157 |

"Create a `.env` file in this folder using any editor."

|

| 158 |

]

|

|

@@ -160,7 +192,11 @@

|

|

| 160 |

{

|

| 161 |

"cell_type": "markdown",

|

| 162 |

"id": "a880382a",

|

| 163 |

-

"metadata": {

|

| 164 |

"source": [

|

| 165 |

"Add secrets as key-value pairs in the `.env` file"

|

| 166 |

]

|

|

@@ -168,15 +204,25 @@

|

|

| 168 |

{

|

| 169 |

"cell_type": "markdown",

|

| 170 |

"id": "04f00703",

|

| 171 |

-

"metadata": {

|

| 172 |

"source": [

|

| 173 |

"If you are using my OpenAI service use the following format:"

|

| 174 |

]

|

| 175 |

},

|

| 176 |

{

|

| 177 |

-

"cell_type": "

|

| 178 |

-

"

|

| 179 |

-

"

|

| 180 |

"source": [

|

| 181 |

"OPENAI_API_BASE=<my API base>\n",

|

| 182 |

"OPENAI_API_KEY=<your API key to my service>"

|

|

@@ -185,7 +231,11 @@

|

|

| 185 |

{

|

| 186 |

"cell_type": "markdown",

|

| 187 |

"id": "a952b103",

|

| 188 |

-

"metadata": {

|

| 189 |

"source": [

|

| 190 |

"If you are not using my OpenAI service then use the following format:"

|

| 191 |

]

|

|

@@ -194,7 +244,11 @@

|

|

| 194 |

"cell_type": "code",

|

| 195 |

"execution_count": null,

|

| 196 |

"id": "7cf6ed7b",

|

| 197 |

-

"metadata": {

|

| 198 |

"outputs": [],

|

| 199 |

"source": [

|

| 200 |

"OPENAI_API_KEY=<your OpenAI API key>"

|

|

@@ -203,7 +257,11 @@

|

|

| 203 |

{

|

| 204 |

"cell_type": "markdown",

|

| 205 |

"id": "955963ed",

|

| 206 |

-

"metadata": {

|

| 207 |

"source": [

|

| 208 |

"Then, use the following code to load those secrets into this notebook:"

|

| 209 |

]

|

|

@@ -212,7 +270,11 @@

|

|

| 212 |

"cell_type": "code",

|

| 213 |

"execution_count": 47,

|

| 214 |

"id": "fcadf45e",

|

| 215 |

-

"metadata": {

|

| 216 |

"outputs": [

|

| 217 |

{

|

| 218 |

"data": {

|

|

@@ -234,7 +296,11 @@

|

|

| 234 |

{

|

| 235 |

"cell_type": "markdown",

|

| 236 |

"id": "d3b6c394",

|

| 237 |

-

"metadata": {

|

| 238 |

"source": [

|

| 239 |

"#### Install Dependencies"

|

| 240 |

]

|

|

@@ -256,7 +322,11 @@

|

|

| 256 |

{

|

| 257 |

"cell_type": "markdown",

|

| 258 |

"id": "f2ed966d",

|

| 259 |

-

"metadata": {

|

| 260 |

"source": [

|

| 261 |

"#### Let's make a function to wrap OpenAI functionality and write some basic tests"

|

| 262 |

]

|

|

@@ -264,7 +334,11 @@

|

|

| 264 |

{

|

| 265 |

"cell_type": "markdown",

|

| 266 |

"id": "c1b09026",

|

| 267 |

-

"metadata": {

|

| 268 |

"source": [

|

| 269 |

"Start by simply seeing if we can make an API call"

|

| 270 |

]

|

|

@@ -273,7 +347,11 @@

|

|

| 273 |

"cell_type": "code",

|

| 274 |

"execution_count": 2,

|

| 275 |

"id": "0abdd4e9",

|

| 276 |

-

"metadata": {

|

| 277 |

"outputs": [

|

| 278 |

{

|

| 279 |

"name": "stdout",

|

|

@@ -300,7 +378,11 @@

|

|

| 300 |

{

|

| 301 |

"cell_type": "markdown",

|

| 302 |

"id": "3f5d1530",

|

| 303 |

-

"metadata": {

|

| 304 |

"source": [

|

| 305 |

"Great! Now let's wrap that in a function"

|

| 306 |

]

|

|

@@ -340,7 +422,11 @@

|

|

| 340 |

{

|

| 341 |

"cell_type": "markdown",

|

| 342 |

"id": "f4506fe8",

|

| 343 |

-

"metadata": {

|

| 344 |

"source": [

|

| 345 |

"Let's add some tests!\n",

|

| 346 |

"\n",

|

|

@@ -381,7 +467,11 @@

|

|

| 381 |

{

|

| 382 |

"cell_type": "markdown",

|

| 383 |

"id": "ed166c5b",

|

| 384 |

-

"metadata": {

|

| 385 |

"source": [

|

| 386 |

"But what if we do want to test the output of the LLM?\n",

|

| 387 |

"\n",

|

|

@@ -402,7 +492,11 @@

|

|

| 402 |

"cell_type": "code",

|

| 403 |

"execution_count": 31,

|

| 404 |

"id": "9c03b774",

|

| 405 |

-

"metadata": {

|

| 406 |

"outputs": [],

|

| 407 |

"source": [

|

| 408 |

"import openai\n",

|

|

@@ -421,11 +515,29 @@

|

|

| 421 |

"assert isinstance(get_ai_reply(\"hello\", model=\"gpt-3.5-turbo\"), str)"

|

| 422 |

]

|

| 423 |

},

|

| 424 |

{

|

| 425 |

"cell_type": "code",

|

| 426 |

"execution_count": 33,

|

| 427 |

"id": "f5ed24da",

|

| 428 |

-

"metadata": {

|

| 429 |

"outputs": [

|

| 430 |

{

|

| 431 |

"name": "stdout",

|

|

@@ -444,7 +556,11 @@

|

|

| 444 |

{

|

| 445 |

"cell_type": "markdown",

|

| 446 |

"id": "ee320648",

|

| 447 |

-

"metadata": {

|

| 448 |

"source": [

|

| 449 |

"Ok great! Now, an LLM is no good to us if we can't _steer_ it.\n",

|

| 450 |

"\n",

|

|

@@ -455,7 +571,11 @@

|

|

| 455 |

"cell_type": "code",

|

| 456 |

"execution_count": 37,

|

| 457 |

"id": "295839a5",

|

| 458 |

-

"metadata": {

|

| 459 |

"outputs": [],

|

| 460 |

"source": [

|

| 461 |

"import openai\n",

|

|

@@ -493,7 +613,11 @@

|

|

| 493 |

{

|

| 494 |

"cell_type": "markdown",

|

| 495 |

"id": "fc9763d6",

|

| 496 |

-

"metadata": {

|

| 497 |

"source": [

|

| 498 |

"Let's see if we can get the LLM to follow instructions by adding instructions to the prompt.\n",

|

| 499 |

"\n",

|

|

@@ -504,7 +628,11 @@

|

|

| 504 |

"cell_type": "code",

|

| 505 |

"execution_count": 36,

|

| 506 |

"id": "c5e2a8b3",

|

| 507 |

-

"metadata": {

|

| 508 |

"outputs": [

|

| 509 |

{

|

| 510 |

"name": "stdout",

|

|

@@ -521,32 +649,32 @@

|

|

| 521 |

{

|

| 522 |

"cell_type": "markdown",

|

| 523 |

"id": "e59d3275",

|

| 524 |

-

"metadata": {

|

| 525 |

"source": [

|

| 526 |

"While the output is more or less controlled, the LLM responds with 'world.' or 'world'. While the word 'world' being in the string is pretty consistent, the punctuation is not.\n",

|

| 527 |

"\n",

|

| 528 |

-

"

|

| 529 |

-

"\n",

|

| 530 |

-

"For now let's assume this is the best we can do.\n",

|

| 531 |

-

"\n",

|

| 532 |

-

"How do we write tests against this?\n",

|

| 533 |

-

"\n",

|

| 534 |

-

"Well, what do we have high confidence won't change in the LLM output?\n",

|

| 535 |

"\n",

|

| 536 |

"What is a test that we could write that:\n",

|

| 537 |

"* would pass if the LLM outputs in a manner that is consistent with our expectations (and consistent with its own output)?\n",

|

| 538 |

"* _we want to be true_ about our LLM system, and if it does not then we would want to know immediately and adjust our system?\n",

|

| 539 |

"* if the prompt does not change, that our expectation holds true?\n",

|

| 540 |

-

"* someone changes the prompt in a way that would break the rest of the system, that we would want to prevent that from being merged without fixing the downstream effects

|

| 541 |

-

"\n",

|

| 542 |

-

"That might be a bunch of ways of saying the same thing but I hope you get the point."

|

| 543 |

]

|

| 544 |

},

|

| 545 |

{

|

| 546 |

"cell_type": "code",

|

| 547 |

"execution_count": 38,

|

| 548 |

"id": "d2b2bc15",

|

| 549 |

-

"metadata": {

|

| 550 |

"outputs": [],

|

| 551 |

"source": [

|

| 552 |

"# non-deterministic tests\n",

|

|

@@ -559,7 +687,11 @@

|

|

| 559 |

{

|

| 560 |

"cell_type": "markdown",

|

| 561 |

"id": "5e17eefe",

|

| 562 |

-

"metadata": {

|

| 563 |

"source": [

|

| 564 |

"Alright that worked!\n",

|

| 565 |

"\n",

|

|

@@ -570,7 +702,11 @@

|

|

| 570 |

"cell_type": "code",

|

| 571 |

"execution_count": 39,

|

| 572 |

"id": "4fd88c05",

|

| 573 |

-

"metadata": {

|

| 574 |

"outputs": [],

|

| 575 |

"source": [

|

| 576 |

"import openai\n",

|

|

@@ -615,7 +751,11 @@

|

|

| 615 |

{

|

| 616 |

"cell_type": "markdown",

|

| 617 |

"id": "e0a00cda",

|

| 618 |

-

"metadata": {

|

| 619 |

"source": [

|

| 620 |

"Now let's check that it works"

|

| 621 |

]

|

|

@@ -624,7 +764,11 @@

|

|

| 624 |

"cell_type": "code",

|

| 625 |

"execution_count": 42,

|

| 626 |

"id": "977f99bd",

|

| 627 |

-

"metadata": {

|

| 628 |

"outputs": [

|

| 629 |

{

|

| 630 |

"name": "stdout",

|

|

@@ -647,7 +791,11 @@

|

|

| 647 |

{

|

| 648 |

"cell_type": "markdown",

|

| 649 |

"id": "2ad45888",

|

| 650 |

-

"metadata": {

|

| 651 |

"source": [

|

| 652 |

"Great! Now let's turn that into a test!"

|

| 653 |

]

|

|

@@ -656,7 +804,11 @@

|

|

| 656 |

"cell_type": "code",

|

| 657 |

"execution_count": 43,

|

| 658 |

"id": "83aa7546",

|

| 659 |

-

"metadata": {

|

| 660 |

"outputs": [],

|

| 661 |

"source": [

|

| 662 |

"system_message=\"The user will tell you their name. When asked, repeat their name back to them.\"\n",

|

|

@@ -672,7 +824,11 @@

|

|

| 672 |

{

|

| 673 |

"cell_type": "markdown",

|

| 674 |

"id": "25e498c8",

|

| 675 |

-

"metadata": {

|

| 676 |

"source": [

|

| 677 |

"Alright here is our final function for integrating with OpenAI!"

|

| 678 |

]

|

|

@@ -681,7 +837,11 @@

|

|

| 681 |

"cell_type": "code",

|

| 682 |

"execution_count": null,

|

| 683 |

"id": "bfc5cd86",

|

| 684 |

-

"metadata": {

|

| 685 |

"outputs": [],

|

| 686 |

"source": [

|

| 687 |

"import openai\n",

|

|

@@ -736,7 +896,11 @@

|

|

| 736 |

"cell_type": "code",

|

| 737 |

"execution_count": 44,

|

| 738 |

"id": "159eea8a",

|

| 739 |

-

"metadata": {

|

| 740 |

"outputs": [

|

| 741 |

{

|

| 742 |

"name": "stdout",

|

|

@@ -753,14 +917,18 @@

|

|

| 753 |

{

|

| 754 |

"cell_type": "markdown",

|

| 755 |

"id": "9de8f5da",

|

| 756 |

-

"metadata": {

|

| 757 |

"source": [

|

| 758 |

"In the next few lessons, we will be building a graphical user interface around this functionality so we can have a real conversational experience."

|

| 759 |

]

|

| 760 |

}

|

| 761 |

],

|

| 762 |

"metadata": {

|

| 763 |

-

"celltoolbar": "

|

| 764 |

"kernelspec": {

|

| 765 |

"display_name": "Python 3 (ipykernel)",

|

| 766 |

"language": "python",

|

|

|

|

| 44 |

}

|

| 45 |

},

|

| 46 |

"source": [

|

| 47 |

+

"## Managing Application Secrets\n",

|

| 48 |

+

"\n",

|

| 49 |

+

"Secrets are sensitive information, such as API keys, passwords, or cryptographic keys, that must be protected to ensure the security and integrity of a system.\n",

|

| 50 |

+

"\n",

|

| 51 |

+

"In software development, secrets are often used to authenticate users, grant access to resources, or encrypt/decrypt data. Mismanaging or exposing secrets can lead to severe security breaches and data leaks."

|

| 52 |

]

|

| 53 |

},

|

| 54 |

{

|

| 55 |

"cell_type": "markdown",

|

| 56 |

+

"id": "2059552f",

|

| 57 |

+

"metadata": {

|

| 58 |

+

"slideshow": {

|

| 59 |

+

"slide_type": "slide"

|

| 60 |

+

}

|

| 61 |

+

},

|

| 62 |

"source": [

|

| 63 |

+

"#### Common examples of secrets\n",

|

| 64 |

"* API keys\n",

|

| 65 |

"* Database credentials\n",

|

| 66 |

"* SSH keys\n",

|

| 67 |

"* OAuth access tokens\n",

|

| 68 |

+

"* Encryption/decryption keys"

|

| 69 |

+

]

|

| 70 |

+

},

|

| 71 |

+

{

|

| 72 |

+

"cell_type": "markdown",

|

| 73 |

+

"id": "a1e650f8",

|

| 74 |

+

"metadata": {

|

| 75 |

+

"slideshow": {

|

| 76 |

+

"slide_type": "slide"

|

| 77 |

+

}

|

| 78 |

+

},

|

| 79 |

+

"source": [

|

| 80 |

+

"#### Common mistakes when handling secrets\n",

|

| 81 |

"* Storing secrets in plain text\n",

|

| 82 |

"* Hardcoding secrets in source code\n",

|

| 83 |

"* Sharing secrets through unsecured channels (e.g., email or messaging apps)\n",

|

| 84 |

"* Using the same secret for multiple purposes\n",

|

| 85 |

+

"* Not rotating or updating secrets regularly"

|

| 86 |

+

]
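One way to avoid the hardcoding mistake listed above is to read the secret from the process environment and fail loudly when it is missing. This is a minimal sketch, not code from the notebook: the helper name `require_secret` and the variable name `DEMO_API_KEY` are assumptions made up for illustration.

```python
import os


def require_secret(name: str) -> str:
    """Return the secret stored in the environment variable `name`.

    Hypothetical helper for illustration: it raises instead of falling
    back to a hardcoded default, so a missing secret is noticed at once.
    """
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"Missing required secret: {name}")
    return value


if __name__ == "__main__":
    # DEMO_API_KEY is a made-up name; set real secrets in your shell or
    # a secret manager, never in source code.
    os.environ.setdefault("DEMO_API_KEY", "placeholder-for-demo-only")
    key = require_secret("DEMO_API_KEY")
    # Never print the secret itself; report only that it was found.
    print(f"Loaded a secret of length {len(key)}")
```

Failing at startup tends to be easier to debug than an authentication error deep inside a later API call.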

|

| 87 |

+

},

|

| 88 |

+

{

|

| 89 |

+

"cell_type": "markdown",

|

| 90 |

+

"id": "66de4ac1",

|

| 91 |

+

"metadata": {

|

| 92 |

+

"slideshow": {

|

| 93 |

+

"slide_type": "skip"

|

| 94 |

+

}

|

| 95 |

+

},

|

| 96 |

+

"source": [

|

| 97 |

"How attackers might obtain secrets\n",

|

| 98 |

"* Exploiting vulnerabilities in software or infrastructure\n",

|

| 99 |

"* Intercepting unencrypted communications\n",

|

|

|

|

| 115 |

"* Limit access to secrets on a need-to-know basis\n",

|

| 116 |

"* Implement proper auditing and monitoring of secret usage\n",

|

| 117 |

"\n",

|

| 118 |

+

"Cloud services and secret management\n",

|

| 119 |

"* Many cloud providers offer secret management services (e.g., AWS Secrets Manager, Azure Key Vault, Google Cloud Secret Manager) that securely store, manage, and rotate secrets.\n",

|

| 120 |

"* These services often provide access control, encryption, and auditing capabilities.\n",

|

| 121 |

"* Integrating cloud secret management services with your application can help secure sensitive information and reduce the risk of exposure.\n",

|

|

|

|

| 130 |

{

|

| 131 |

"cell_type": "markdown",

|

| 132 |

"id": "ef366b65",

|

| 133 |

+

"metadata": {

|

| 134 |

+

"slideshow": {

|

| 135 |

+

"slide_type": "slide"

|

| 136 |

+

}

|

| 137 |

+

},

|

| 138 |

"source": [

|

| 139 |

"#### Using the `python-dotenv` library to protect secrets in Python"

|

| 140 |

]

|

|

|

|

| 142 |

{

|

| 143 |

"cell_type": "markdown",

|

| 144 |

"id": "dc39df10",

|

| 145 |

+

"metadata": {

|

| 146 |

+

"slideshow": {

|

| 147 |

+

"slide_type": "fragment"

|

| 148 |

+

}

|

| 149 |

+

},

|

| 150 |

"source": [

|

| 151 |

"`python-dotenv` is a Python library that loads environment variables from a `.env` file. It helps keep secrets out of source code and makes them easier to manage and update."

|

| 152 |

]

|

| 153 |

},

|

| 154 |

{

|

| 155 |

"cell_type": "markdown",

|

| 156 |

"id": "ae0500ea",

|

| 157 |

+

"metadata": {

|

| 158 |

+

"slideshow": {

|

| 159 |

+

"slide_type": "slide"

|

| 160 |

+

}

|

| 161 |

+

},

|

| 162 |

"source": [

|

| 163 |

"Install the `python-dotenv` library"

|

| 164 |

]

|

|

|

|

| 167 |

"cell_type": "code",

|

| 168 |

"execution_count": 45,

|

| 169 |

"id": "1212333f",

|

| 170 |

+

"metadata": {

|

| 171 |

+

"slideshow": {

|

| 172 |

+

"slide_type": "fragment"

|

| 173 |

+

}

|

| 174 |

+

},

|

| 175 |

"outputs": [],

|

| 176 |

"source": [

|

| 177 |

"!pip -q install --upgrade python-dotenv"

|

|

|

|

| 180 |

{

|

| 181 |

"cell_type": "markdown",

|

| 182 |

"id": "faecedf0",

|

| 183 |

+

"metadata": {

|

| 184 |

+

"slideshow": {

|

| 185 |

+

"slide_type": "slide"

|

| 186 |

+

}

|

| 187 |

+

},

|

| 188 |

"source": [

|

| 189 |

"Create a `.env` file in this folder using any editor."

|

| 190 |

]

|

|

|

|

| 192 |

{

|

| 193 |

"cell_type": "markdown",

|

| 194 |

"id": "a880382a",

|

| 195 |

+

"metadata": {

|

| 196 |

+

"slideshow": {

|

| 197 |

+

"slide_type": "fragment"

|

| 198 |

+

}

|

| 199 |

+

},

|

| 200 |

"source": [

|

| 201 |

"Add secrets as key-value pairs in the `.env` file"

|

| 202 |

]

|

|

|

|

| 204 |

{

|

| 205 |

"cell_type": "markdown",

|

| 206 |

"id": "04f00703",

|

| 207 |

+

"metadata": {

|

| 208 |

+

"slideshow": {

|

| 209 |

+

"slide_type": "slide"

|

| 210 |

+

}

|

| 211 |

+

},

|

| 212 |

"source": [

|

| 213 |

"If you are using my OpenAI service use the following format:"

|

| 214 |

]

|

| 215 |

},

|

| 216 |

{

|

| 217 |

+

"cell_type": "code",

|

| 218 |

+

"execution_count": null,

|

| 219 |

+

"id": "33c7bf87",

|

| 220 |

+

"metadata": {

|

| 221 |

+

"slideshow": {

|

| 222 |

+

"slide_type": "fragment"

|

| 223 |

+

}

|

| 224 |

+

},

|

| 225 |

+

"outputs": [],

|

| 226 |

"source": [

|

| 227 |

"OPENAI_API_BASE=<my API base>\n",

|

| 228 |

"OPENAI_API_KEY=<your API key to my service>"

|

|

|

|

| 231 |

{

|

| 232 |

"cell_type": "markdown",

|

| 233 |

"id": "a952b103",

|

| 234 |

+

"metadata": {

|

| 235 |

+

"slideshow": {

|

| 236 |

+

"slide_type": "slide"

|

| 237 |

+

}

|

| 238 |

+

},

|

| 239 |

"source": [

|

| 240 |

"If you are not using my OpenAI service then use the following format:"

|

| 241 |

]

|

|

|

|

| 244 |

"cell_type": "code",

|

| 245 |

"execution_count": null,

|

| 246 |

"id": "7cf6ed7b",

|

| 247 |

+

"metadata": {

|

| 248 |

+

"slideshow": {

|

| 249 |

+

"slide_type": "fragment"

|

| 250 |

+

}

|

| 251 |

+

},

|

| 252 |

"outputs": [],

|

| 253 |

"source": [

|

| 254 |

"OPENAI_API_KEY=<your OpenAI API key>"

|

|

|

|

| 257 |

{

|

| 258 |

"cell_type": "markdown",

|

| 259 |

"id": "955963ed",

|

| 260 |

+

"metadata": {

|

| 261 |

+

"slideshow": {

|

| 262 |

+

"slide_type": "slide"

|

| 263 |

+

}

|

| 264 |

+

},

|

| 265 |

"source": [

|

| 266 |

"Then, use the following code to load those secrets into this notebook:"

|

| 267 |

]
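As a rough illustration of what loading a `.env` file amounts to, here is a standard-library-only sketch. It is a simplified stand-in, not `python-dotenv`'s implementation: the function name `load_env_file` is hypothetical, and it handles only plain `KEY=VALUE` lines, comments, and simple quoting.

```python
import os
from pathlib import Path


def load_env_file(path: str = ".env", override: bool = False) -> dict:
    """Parse KEY=VALUE lines from `path` and copy them into os.environ.

    Simplified stand-in for illustration only: it skips blank lines and
    `#` comments and strips one pair of matching surrounding quotes. It
    does not handle multi-line values, `export` prefixes, or expansion.
    Returns the parsed pairs.
    """
    loaded = {}
    env_path = Path(path)
    if not env_path.is_file():
        return loaded  # nothing to load
    for raw_line in env_path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        key = key.strip()
        value = value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "'\"":
            value = value[1:-1]
        # By default, variables already set in the environment win.
        if override or key not in os.environ:
            os.environ[key] = value
        loaded[key] = value
    return loaded


if __name__ == "__main__":
    found = load_env_file()
    # Print only the variable names, never the secret values.
    print("Loaded variables:", sorted(found))
```

In the notebook itself, `load_dotenv()` from `python-dotenv` plays this role, and the values are then read through `os.environ`.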

|

|

|

|

| 270 |

"cell_type": "code",

|

| 271 |

"execution_count": 47,

|

| 272 |

"id": "fcadf45e",

|

| 273 |

+

"metadata": {

|

| 274 |

+

"slideshow": {

|

| 275 |

+

"slide_type": "fragment"

|

| 276 |

+

}

|

| 277 |

+

},

|

| 278 |

"outputs": [

|

| 279 |

{

|

| 280 |

"data": {

|

|

|

|

| 296 |

{

|

| 297 |

"cell_type": "markdown",

|

| 298 |

"id": "d3b6c394",

|

| 299 |

+

"metadata": {

|

| 300 |

+

"slideshow": {

|

| 301 |

+

"slide_type": "slide"

|

| 302 |

+

}

|

| 303 |

+

},

|

| 304 |

"source": [

|

| 305 |

"#### Install Dependencies"

|

| 306 |

]

|

|

|

|

| 322 |

{

|

| 323 |

"cell_type": "markdown",

|

| 324 |

"id": "f2ed966d",

|

| 325 |

+

"metadata": {

|

| 326 |

+

"slideshow": {

|

| 327 |

+

"slide_type": "slide"

|

| 328 |

+

}

|

| 329 |

+

},

|

| 330 |

"source": [

|

| 331 |

"#### Let's make a function to wrap OpenAI functionality and write some basic tests"

|

| 332 |

]

|

|

|

|

| 334 |

{

|

| 335 |

"cell_type": "markdown",

|

| 336 |

"id": "c1b09026",

|

| 337 |

+

"metadata": {

|

| 338 |

+

"slideshow": {

|

| 339 |

+

"slide_type": "fragment"

|

| 340 |

+

}

|

| 341 |

+

},

|

| 342 |

"source": [

|

| 343 |

"Start by simply seeing if we can make an API call"

|

| 344 |

]

|

|

|

|

| 347 |

"cell_type": "code",

|

| 348 |

"execution_count": 2,

|

| 349 |

"id": "0abdd4e9",

|

| 350 |

+

"metadata": {

|

| 351 |

+

"slideshow": {

|

| 352 |

+

"slide_type": "fragment"

|

| 353 |

+

}

|

| 354 |

+

},

|

| 355 |

"outputs": [

|

| 356 |

{

|

| 357 |

"name": "stdout",

|

|

|

|

| 378 |

{

|

| 379 |

"cell_type": "markdown",

|

| 380 |

"id": "3f5d1530",

|

| 381 |

+

"metadata": {

|

| 382 |

+

"slideshow": {

|

| 383 |

+

"slide_type": "slide"

|

| 384 |

+

}

|

| 385 |

+

},

|

| 386 |

"source": [

|

| 387 |

"Great! Now let's wrap that in a function"

|

| 388 |

]

|

|

|

|

| 422 |

{

|

| 423 |

"cell_type": "markdown",

|

| 424 |

"id": "f4506fe8",

|

| 425 |

+

"metadata": {

|

| 426 |

+

"slideshow": {

|

| 427 |

+

"slide_type": "slide"

|

| 428 |

+

}

|

| 429 |

+

},

|

| 430 |

"source": [

|

| 431 |

"Let's add some tests!\n",

|

| 432 |

"\n",

|

|

|

|

| 467 |

{

|

| 468 |

"cell_type": "markdown",

|

| 469 |

"id": "ed166c5b",

|

| 470 |

+

"metadata": {

|

| 471 |

+

"slideshow": {

|

| 472 |

+

"slide_type": "slide"

|

| 473 |

+

}

|

| 474 |

+

},

|

| 475 |

"source": [

|

| 476 |

"But what if we do want to test the output of the LLM?\n",

|

| 477 |

"\n",

|

|

|

|

| 492 |

"cell_type": "code",

|

| 493 |

"execution_count": 31,

|

| 494 |

"id": "9c03b774",

|

| 495 |

+

"metadata": {

|

| 496 |

+

"slideshow": {

|

| 497 |

+

"slide_type": "slide"

|

| 498 |

+

}

|

| 499 |

+

},

|

| 500 |

"outputs": [],

|

| 501 |

"source": [

|

| 502 |

"import openai\n",

|

|

|

|

| 515 |

"assert isinstance(get_ai_reply(\"hello\", model=\"gpt-3.5-turbo\"), str)"

|

| 516 |

]

|

| 517 |

},

|

| 518 |

+

{

|

| 519 |

+

"cell_type": "code",

|

| 520 |

+

"execution_count": null,

|

| 521 |

+

"id": "15e09750",

|

| 522 |

+

"metadata": {

|

| 523 |

+

"slideshow": {

|

| 524 |

+

"slide_type": "slide"

|

| 525 |

+

}

|

| 526 |

+

},

|

| 527 |

+

"outputs": [],

|

| 528 |

+

"source": [

|

| 529 |

+

"If we run this enough times we should see that the output for the bottom run is more inconsistent."

|

| 530 |

+

]
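To make the inconsistency visible rather than eyeballing it, one option is to call the function repeatedly and tally the distinct replies. The sketch below uses a stand-in `fake_ai_reply` (an assumption for illustration, so the example runs without an API key) and a hypothetical helper `tally_replies`; neither is part of the notebook.

```python
import random
from collections import Counter
from typing import Callable


def tally_replies(reply_fn: Callable[[], str], runs: int = 20) -> Counter:
    """Call `reply_fn` `runs` times and count how often each reply occurs."""
    return Counter(reply_fn() for _ in range(runs))


def fake_ai_reply() -> str:
    """Stand-in for a real model call; randomly varies its wording."""
    return random.choice(["Hello!", "Hello there!", "Hi!"])


if __name__ == "__main__":
    counts = tally_replies(fake_ai_reply, runs=20)
    # More distinct keys means less consistent output.
    for reply, n in counts.most_common():
        print(f"{n:>3} x {reply!r}")
```

With a real model, the same helper could be given a small lambda that wraps the API call, at the cost of one request per run.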

|

| 531 |

+

},

|

| 532 |

{

|

| 533 |

"cell_type": "code",

|

| 534 |

"execution_count": 33,

|

| 535 |

"id": "f5ed24da",

|

| 536 |

+

"metadata": {

|

| 537 |

+

"slideshow": {

|

| 538 |

+

"slide_type": "fragment"

|

| 539 |

+

}

|

| 540 |

+

},

|

| 541 |

"outputs": [

|

| 542 |

{

|

| 543 |

"name": "stdout",

|

|

|

|

| 556 |

{

|

| 557 |

"cell_type": "markdown",

|

| 558 |

"id": "ee320648",

|

| 559 |

+

"metadata": {

|

| 560 |

+

"slideshow": {

|

| 561 |

+

"slide_type": "slide"

|

| 562 |

+

}

|

| 563 |

+

},

|

| 564 |

"source": [

|

| 565 |

"Ok great! Now, an LLM is no good to us if we can't _steer_ it.\n",

|

| 566 |

"\n",

|

|

|

|

| 571 |

"cell_type": "code",

|

| 572 |

"execution_count": 37,

|

| 573 |

"id": "295839a5",

|

| 574 |

+

"metadata": {

|

| 575 |

+

"slideshow": {

|

| 576 |

+

"slide_type": "slide"

|

| 577 |

+

}

|

| 578 |

+

},

|

| 579 |

"outputs": [],

|

| 580 |

"source": [

|

| 581 |

"import openai\n",

|

|

|

|

| 613 |

{

|

| 614 |

"cell_type": "markdown",

|

| 615 |

"id": "fc9763d6",

|

| 616 |

+

"metadata": {

|

| 617 |

+

"slideshow": {

|

| 618 |

+

"slide_type": "slide"

|

| 619 |

+

}

|

| 620 |

+

},

|

| 621 |

"source": [

|

| 622 |

"Let's see if we can get the LLM to follow instructions by adding instructions to the prompt.\n",

|

| 623 |

"\n",

|

|

|

|

| 628 |

"cell_type": "code",

|

| 629 |

"execution_count": 36,

|

| 630 |

"id": "c5e2a8b3",

|

| 631 |

+

"metadata": {

|

| 632 |

+

"slideshow": {

|

| 633 |

+

"slide_type": "fragment"

|

| 634 |

+

}

|

| 635 |

+

},

|

| 636 |

"outputs": [

|

| 637 |

{

|

| 638 |

"name": "stdout",

|

|

|

|

| 649 |

{

|

| 650 |

"cell_type": "markdown",

|

| 651 |

"id": "e59d3275",

|

| 652 |

+

"metadata": {

|

| 653 |

+

"slideshow": {

|

| 654 |

+

"slide_type": "slide"

|

| 655 |

+

}

|

| 656 |

+

},

|

| 657 |

"source": [

|

| 658 |

"While the output is more or less controlled, the LLM responds with 'world.' or 'world'. While the word 'world' being in the string is pretty consistent, the punctuation is not.\n",

|

| 659 |

"\n",

|

| 660 |

+

"How do we write tests against this or have confidence with non-determinism?\n",

|

| 661 |

"\n",

|

| 662 |

"What is a test that we could write that:\n",

|

| 663 |

"* would pass if the LLM outputs in a manner that is consistent with our expectations (and consistent with its own output)?\n",

|

| 664 |

"* _we want to be true_ about our LLM system, and if it does not then we would want to know immediately and adjust our system?\n",

|

| 665 |

"* if the prompt does not change, that our expectation holds true?\n",

|

| 666 |

+

"* someone changes the prompt in a way that would break the rest of the system, that we would want to prevent that from being merged without fixing the downstream effects?"

|

|

|

|

|

|

|

| 667 |

]

|

| 668 |

},

|

| 669 |

{

|

| 670 |

"cell_type": "code",

|

| 671 |

"execution_count": 38,

|

| 672 |

"id": "d2b2bc15",

|

| 673 |

+

"metadata": {

|

| 674 |

+

"slideshow": {

|

| 675 |

+

"slide_type": "slide"

|

| 676 |

+

}

|

| 677 |

+

},

|

| 678 |

"outputs": [],

|

| 679 |

"source": [

|

| 680 |

"# non-deterministic tests\n",

|

|

|

|

| 687 |

{

|

| 688 |

"cell_type": "markdown",

|

| 689 |

"id": "5e17eefe",

|

| 690 |

+

"metadata": {

|

| 691 |

+

"slideshow": {

|

| 692 |

+

"slide_type": "slide"

|

| 693 |

+

}

|

| 694 |

+

},

|

| 695 |

"source": [

|

| 696 |

"Alright that worked!\n",

|

| 697 |

"\n",

|

|

|

|

| 702 |

"cell_type": "code",

|

| 703 |

"execution_count": 39,

|

| 704 |

"id": "4fd88c05",

|

| 705 |

+

"metadata": {

|

| 706 |

+

"slideshow": {

|

| 707 |

+

"slide_type": "slide"

|

| 708 |

+

}

|

| 709 |

+

},

|

| 710 |

"outputs": [],

|

| 711 |

"source": [

|

| 712 |

"import openai\n",

|

|

|

|

| 751 |

{

|

| 752 |

"cell_type": "markdown",

|

| 753 |

"id": "e0a00cda",

|

| 754 |

+

"metadata": {

|

| 755 |

+

"slideshow": {

|

| 756 |

+

"slide_type": "slide"

|

| 757 |

+

}

|

| 758 |

+

},

|

| 759 |

"source": [

|

| 760 |

"Now let's check that it works"

|

| 761 |

]

|

|

|

|

| 764 |

"cell_type": "code",

|

| 765 |

"execution_count": 42,

|

| 766 |

"id": "977f99bd",

|

| 767 |

+

"metadata": {

|

| 768 |

+

"slideshow": {

|

| 769 |

+

"slide_type": "fragment"

|

| 770 |

+

}

|

| 771 |

+

},

|

| 772 |

"outputs": [

|

| 773 |

{

|

| 774 |

"name": "stdout",

|

|

|

|

| 791 |

{

|

| 792 |

"cell_type": "markdown",

|

| 793 |

"id": "2ad45888",

|

| 794 |

+

"metadata": {

|

| 795 |

+

"slideshow": {

|

| 796 |

+

"slide_type": "slide"

|

| 797 |

+

}

|

| 798 |

+

},

|

| 799 |

"source": [

|

| 800 |

"Great! Now let's turn that into a test!"

|

| 801 |

]

|

|

|

|

| 804 |

"cell_type": "code",

|

| 805 |

"execution_count": 43,

|

| 806 |

"id": "83aa7546",

|

| 807 |

+

"metadata": {

|

| 808 |

+

"slideshow": {

|

| 809 |

+

"slide_type": "fragment"

|

| 810 |

+

}

|

| 811 |

+

},

|

| 812 |

"outputs": [],

|

| 813 |

"source": [

|

| 814 |

"system_message=\"The user will tell you their name. When asked, repeat their name back to them.\"\n",

|

|

|

|

| 824 |

{

|

| 825 |

"cell_type": "markdown",

|

| 826 |

"id": "25e498c8",

|

| 827 |

+

"metadata": {

|

| 828 |

+

"slideshow": {

|

| 829 |

+

"slide_type": "slide"

|

| 830 |

+

}

|

| 831 |

+

},

|

| 832 |

"source": [

|

| 833 |

"Alright here is our final function for integrating with OpenAI!"

|

| 834 |

]

|

|

|

|

| 837 |

"cell_type": "code",

|

| 838 |

"execution_count": null,

|

| 839 |

"id": "bfc5cd86",

|

| 840 |

+

"metadata": {

|

| 841 |

+

"slideshow": {

|

| 842 |

+

"slide_type": "slide"

|

| 843 |

+

}

|

| 844 |

+

},

|

| 845 |

"outputs": [],

|

| 846 |

"source": [

|

| 847 |

"import openai\n",

|

|

|

|

| 896 |

"cell_type": "code",

|

| 897 |

"execution_count": 44,

|

| 898 |

"id": "159eea8a",

|

| 899 |

+

"metadata": {

|

| 900 |

+

"slideshow": {

|

| 901 |

+

"slide_type": "slide"

|

| 902 |

+

}

|

| 903 |

+

},

|

| 904 |

"outputs": [

|

| 905 |

{

|

| 906 |

"name": "stdout",

|

|

|

|

| 917 |

{

|

| 918 |

"cell_type": "markdown",

|

| 919 |

"id": "9de8f5da",

|

| 920 |

+

"metadata": {

|

| 921 |

+

"slideshow": {

|

| 922 |

+

"slide_type": "fragment"

|

| 923 |

+

}

|

| 924 |

+

},

|

| 925 |

"source": [

|

| 926 |

"In the next few lessons, we will be building a graphical user interface around this functionality so we can have a real conversational experience."

|

| 927 |

]

|

| 928 |

}

|

| 929 |

],

|

| 930 |

"metadata": {

|

| 931 |

+

"celltoolbar": "Slideshow",

|

| 932 |

"kernelspec": {

|

| 933 |

"display_name": "Python 3 (ipykernel)",

|

| 934 |

"language": "python",

|

04_creating_a_functional_conversational_chatbot.ipynb

CHANGED

|

@@ -345,18 +345,10 @@

|

|

| 345 |

"# Set the share parameter to False, meaning the interface will not be publicly accessible\n",

|

| 346 |

"get_chatbot_app().launch()"

|

| 347 |

]

|

| 348 |

-

},

|

| 349 |

-

{

|

| 350 |

-

"cell_type": "code",

|

| 351 |

-

"execution_count": null,

|

| 352 |

-

"id": "ae24e591",

|

| 353 |

-

"metadata": {},

|

| 354 |

-

"outputs": [],

|

| 355 |

-

"source": []

|

| 356 |

}

|

| 357 |

],

|

| 358 |

"metadata": {

|

| 359 |

-

"celltoolbar": "

|

| 360 |

"kernelspec": {

|

| 361 |

"display_name": "Python 3 (ipykernel)",

|

| 362 |

"language": "python",

|

|

|

|

| 345 |

"# Set the share parameter to False, meaning the interface will not be publicly accessible\n",

|

| 346 |

"get_chatbot_app().launch()"

|

| 347 |

]

|

| 348 |

}

|

| 349 |

],

|

| 350 |

"metadata": {

|

| 351 |

+

"celltoolbar": "Slideshow",

|

| 352 |

"kernelspec": {

|

| 353 |

"display_name": "Python 3 (ipykernel)",

|

| 354 |

"language": "python",

|

05_deploying_a_chatbot_to_the_web.ipynb

CHANGED

|

@@ -43,24 +43,36 @@

|

|

| 43 |

},

|

| 44 |

{

|

| 45 |

"cell_type": "markdown",

|

| 46 |

-

"id": "

|

| 47 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 48 |

"source": [

|

| 49 |

-

"

|

| 50 |

]

|

| 51 |

},

|

| 52 |

{

|

| 53 |

"cell_type": "markdown",

|

| 54 |

-

"id": "

|

| 55 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 56 |

"source": [

|

| 57 |

-

"

|

| 58 |

]

|

| 59 |

},

|

| 60 |

{

|

| 61 |

"cell_type": "markdown",

|

| 62 |

"id": "54de0ddc",

|

| 63 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 64 |

"source": [

|

| 65 |

"Add a username and password for your app to your `.env` file. This will ensure that unauthorized users are not able to access LLM features. Use the following format:"

|

| 66 |

]

|

|

@@ -69,7 +81,11 @@

|

|

| 69 |

"cell_type": "code",

|

| 70 |

"execution_count": null,

|

| 71 |

"id": "95dec7cf",

|

| 72 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 73 |

"outputs": [],

|

| 74 |

"source": [

|

| 75 |

"APP_USERNAME=<whatever username you want>\n",

|

|

@@ -79,24 +95,48 @@

|

|

| 79 |

{

|

| 80 |

"cell_type": "markdown",

|

| 81 |

"id": "5072dc21",

|

| 82 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 83 |

"source": [

|

| 84 |

-

"Let's start by taking all of our necessary chatbot code into one file which we will name `app.py`.

|

| 85 |

]

|

| 86 |

},

|

| 87 |

{

|

| 88 |

"cell_type": "markdown",

|

| 89 |

"id": "aacdcaaf",

|

| 90 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 91 |

"source": [

|

| 92 |

"Take note that this code has been altered a little bit from the last chatbot example in order to add authentication."

|

| 93 |

]

|

| 94 |

},

|

| 95 |

{

|

| 96 |

"cell_type": "code",

|

| 97 |

-

"execution_count":

|

| 98 |

"id": "710b66f7",

|

| 99 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 100 |

"outputs": [

|

| 101 |

{

|

| 102 |

"name": "stdout",

|

|

@@ -242,7 +282,11 @@

|

|

| 242 |

{

|

| 243 |

"cell_type": "markdown",

|

| 244 |

"id": "6d75af66",

|

| 245 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 246 |

"source": [

|

| 247 |

"We will also need a `requirements.txt` file to store the list of the packages that HuggingFace needs to install to run our chatbot."

|

| 248 |

]

|

|

@@ -251,7 +295,11 @@

|

|

| 251 |

"cell_type": "code",

|

| 252 |

"execution_count": 13,

|

| 253 |

"id": "14d0e434",

|

| 254 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 255 |

"outputs": [

|

| 256 |

{

|

| 257 |

"name": "stdout",

|

|

@@ -271,7 +319,11 @@

|

|

| 271 |

{

|

| 272 |

"cell_type": "markdown",

|

| 273 |

"id": "4debec45",

|

| 274 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 275 |

"source": [

|

| 276 |

"Now let's go ahead and commit our changes"

|

| 277 |

]

|

|

@@ -280,7 +332,11 @@

|

|

| 280 |

"cell_type": "code",

|

| 281 |

"execution_count": 9,

|

| 282 |

"id": "14d42a96",

|

| 283 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 284 |

"outputs": [],

|

| 285 |

"source": [

|

| 286 |

"!git add app.py"

|

|

@@ -290,7 +346,11 @@

|

|

| 290 |

"cell_type": "code",

|

| 291 |

"execution_count": 8,

|

| 292 |

"id": "d7c5b127",

|

| 293 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 294 |

"outputs": [],

|

| 295 |

"source": [

|

| 296 |

"!git add requirements.txt"

|

|

@@ -300,7 +360,11 @@

|

|

| 300 |

"cell_type": "code",

|

| 301 |

"execution_count": 11,

|

| 302 |

"id": "18960d9f",

|

| 303 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 304 |

"outputs": [

|

| 305 |

{

|

| 306 |

"name": "stdout",

|

|

@@ -320,7 +384,11 @@

|

|

| 320 |

{

|

| 321 |

"cell_type": "markdown",

|

| 322 |

"id": "09221ee0",

|

| 323 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 324 |

"source": [

|

| 325 |

"#### Using HuggingFace Spaces"

|

| 326 |

]

|

|

@@ -328,7 +396,11 @@

|

|

| 328 |

{

|

| 329 |

"cell_type": "markdown",

|

| 330 |

"id": "db789c94",

|

| 331 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 332 |

"source": [

|

| 333 |

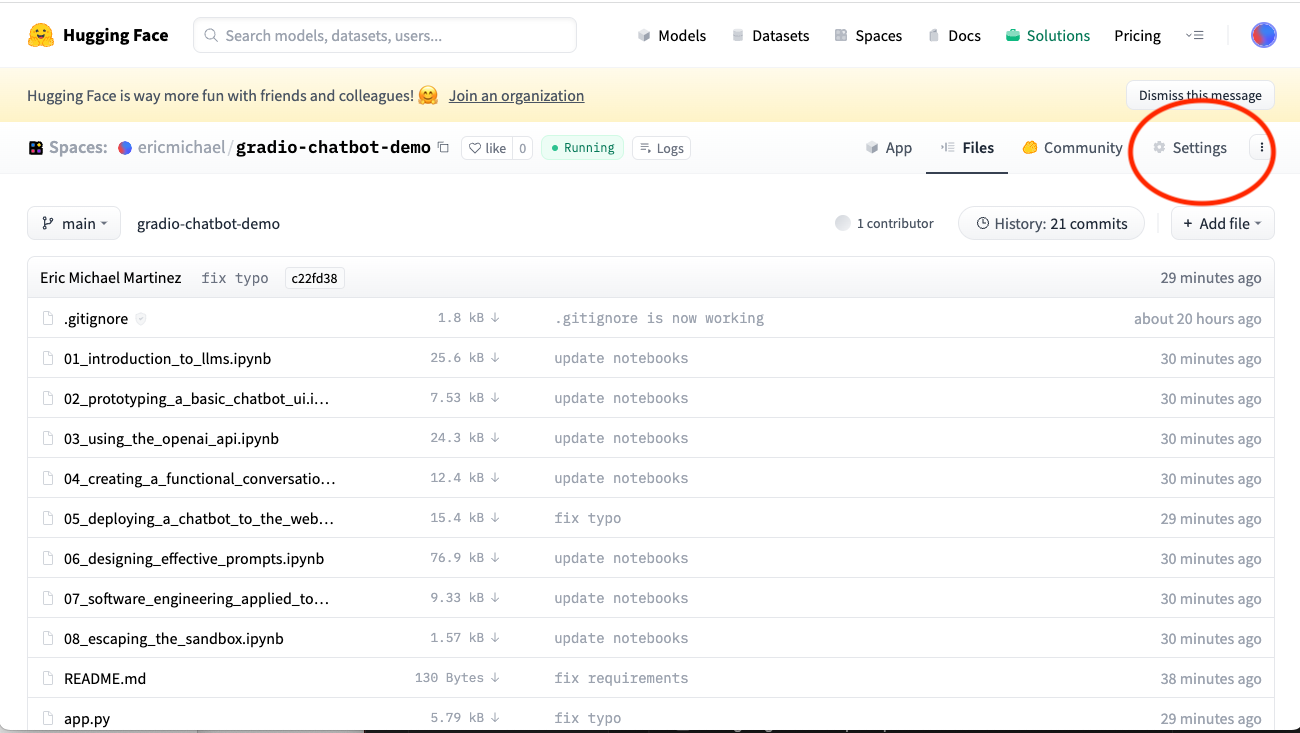

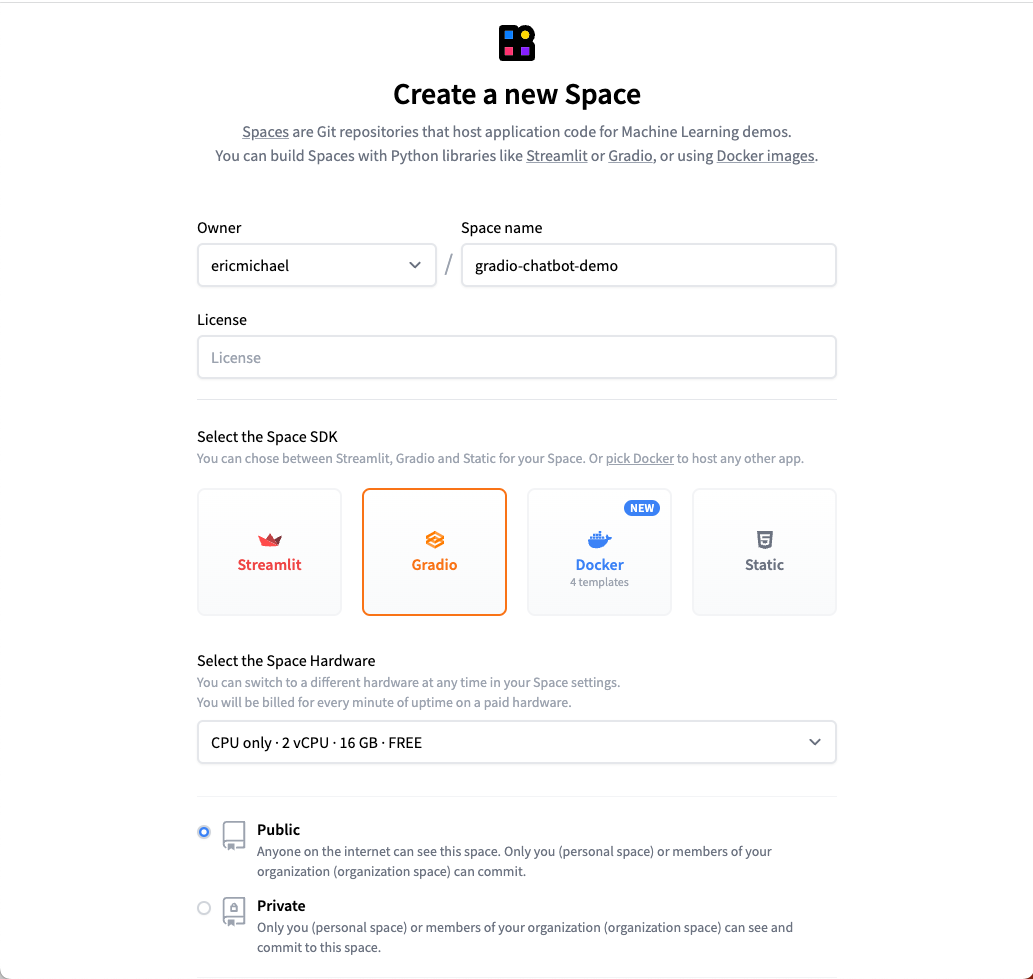

"As mentioned before, HuggingFace is a free-to-use platform for hosting AI demos and apps. We will need to make a HuggingFace _Space_ for our chatbot."

|

| 334 |

]

|

|

@@ -336,31 +408,235 @@

|

|

| 336 |

{

|

| 337 |

"cell_type": "markdown",

|

| 338 |

"id": "d9eedd10",

|

| 339 |

-

"metadata": {

|

| 340 |

"source": [

|

| 341 |

-

"

|

| 342 |

]

|

| 343 |

},

|

| 344 |

{

|

| 345 |

"cell_type": "markdown",

|

| 346 |

"id": "3e042d24",

|

| 347 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 348 |

"source": [

|

| 349 |

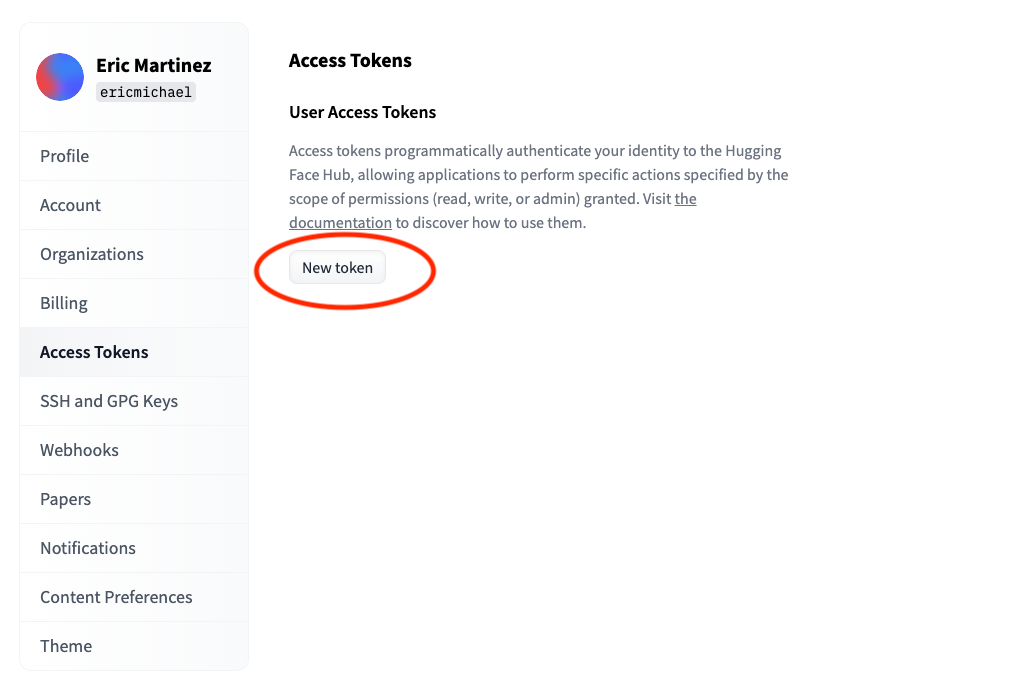

"#### Generate a HuggingFace Access Token"

|

| 350 |

]

|

| 351 |

},

|

| 352 |

{

|

| 353 |

"cell_type": "markdown",

|

| 354 |

"id": "a7d0781d",

|

| 355 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 356 |

"source": [

|

| 357 |

"#### Login to HuggingFace Hub"

|

| 358 |

]

|

| 359 |

},

|

| 360 |

{

|

| 361 |

"cell_type": "markdown",

|

| 362 |

"id": "eba83252",

|

| 363 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 364 |

"source": [

|

| 365 |

"Install `huggingface_hub`"

|

| 366 |

]

|

|

@@ -369,7 +645,11 @@

|

|

| 369 |

"cell_type": "code",

|

| 370 |

"execution_count": 40,

|

| 371 |

"id": "266bf481",

|

| 372 |

-

"metadata": {

|

|

|

|

|

|

|

|

|

|

|

|

|

| 373 |

"outputs": [],