Initial Commit

- __pycache__/craft.cpython-310.pyc +0 -0

- __pycache__/craft_utils.cpython-310.pyc +0 -0

- __pycache__/file_utils.cpython-310.pyc +0 -0

- __pycache__/imgproc.cpython-310.pyc +0 -0

- __pycache__/mosaik.cpython-310.pyc +0 -0

- __pycache__/ner.cpython-310.pyc +0 -0

- __pycache__/recognize.cpython-310.pyc +0 -0

- __pycache__/refinenet.cpython-310.pyc +0 -0

- __pycache__/seg.cpython-310.pyc +0 -0

- __pycache__/seg2.cpython-310.pyc +0 -0

- basenet/__init__.py +0 -0

- basenet/__pycache__/__init__.cpython-310.pyc +0 -0

- basenet/__pycache__/vgg16_bn.cpython-310.pyc +0 -0

- basenet/vgg16_bn.py +72 -0

- craft.py +76 -0

- craft_utils.py +217 -0

- dino2/__pycache__/model.cpython-310.pyc +0 -0

- dino2/model.py +93 -0

- file_utils.py +77 -0

- imgproc.py +70 -0

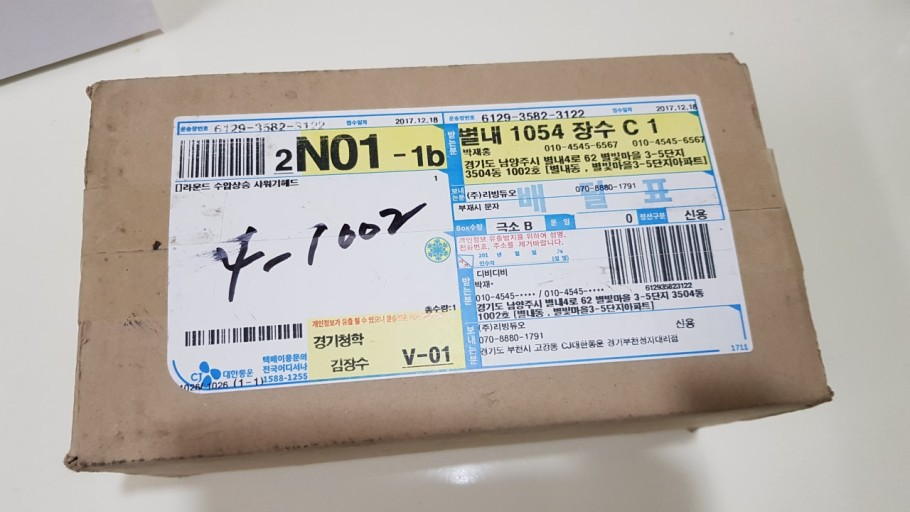

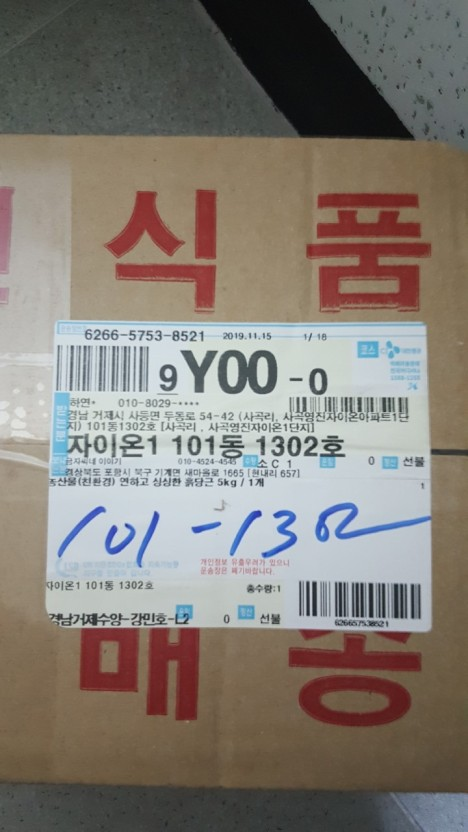

- input/1.png +0 -0

- input/2.png +0 -0

- input/3.png +0 -0

- input/4.png +0 -0

- install.sh +1 -0

- main.py +354 -0

- mosaik.py +32 -0

- ner.py +106 -0

- recognize.py +18 -0

- refinenet.py +65 -0

- requirements.txt +18 -0

- reset.sh +15 -0

- seg.py +46 -0

- seg2.py +68 -0

- sr/__pycache__/sr.cpython-310.pyc +0 -0

- sr/esrgan +1 -0

- sr/sr.py +15 -0

- unet/__pycache__/predict.cpython-310.pyc +0 -0

- unet/dino/__init__.py +1 -0

- unet/dino/__pycache__/__init__.cpython-310.pyc +0 -0

- unet/dino/__pycache__/model.cpython-310.pyc +0 -0

- unet/dino/__pycache__/modules.cpython-310.pyc +0 -0

- unet/dino/__pycache__/parts.cpython-310.pyc +0 -0

- unet/dino/model.py +47 -0

- unet/dino/parts.py +67 -0

- unet/predict.py +76 -0

__pycache__/craft.cpython-310.pyc

ADDED

Binary file (2.38 kB)

__pycache__/craft_utils.cpython-310.pyc

ADDED

Binary file (5.68 kB)

__pycache__/file_utils.cpython-310.pyc

ADDED

Binary file (2.49 kB)

__pycache__/imgproc.cpython-310.pyc

ADDED

Binary file (2.08 kB)

__pycache__/mosaik.cpython-310.pyc

ADDED

Binary file (698 Bytes)

__pycache__/ner.cpython-310.pyc

ADDED

Binary file (906 Bytes)

__pycache__/recognize.cpython-310.pyc

ADDED

Binary file (716 Bytes)

__pycache__/refinenet.cpython-310.pyc

ADDED

Binary file (1.93 kB)

__pycache__/seg.cpython-310.pyc

ADDED

Binary file (1.5 kB)

__pycache__/seg2.cpython-310.pyc

ADDED

Binary file (1.27 kB)

basenet/__init__.py

ADDED

File without changes

basenet/__pycache__/__init__.cpython-310.pyc

ADDED

Binary file (164 Bytes)

basenet/__pycache__/vgg16_bn.cpython-310.pyc

ADDED

Binary file (2.27 kB)

basenet/vgg16_bn.py

ADDED

@@ -0,0 +1,72 @@
+from collections import namedtuple
+
+import torch
+import torch.nn as nn
+import torch.nn.init as init
+from torchvision import models
+
+def init_weights(modules):
+    for m in modules:
+        if isinstance(m, nn.Conv2d):
+            init.xavier_uniform_(m.weight.data)
+            if m.bias is not None:
+                m.bias.data.zero_()
+        elif isinstance(m, nn.BatchNorm2d):
+            m.weight.data.fill_(1)
+            m.bias.data.zero_()
+        elif isinstance(m, nn.Linear):
+            m.weight.data.normal_(0, 0.01)
+            m.bias.data.zero_()
+
+class vgg16_bn(torch.nn.Module):
+    def __init__(self, pretrained=True, freeze=True):
+        super(vgg16_bn, self).__init__()
+
+        vgg_pretrained_features = models.vgg16_bn(pretrained=pretrained).features
+        self.slice1 = torch.nn.Sequential()
+        self.slice2 = torch.nn.Sequential()
+        self.slice3 = torch.nn.Sequential()
+        self.slice4 = torch.nn.Sequential()
+        self.slice5 = torch.nn.Sequential()
+        for x in range(12): # conv2_2
+            self.slice1.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(12, 19): # conv3_3
+            self.slice2.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(19, 29): # conv4_3
+            self.slice3.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(29, 39): # conv5_3
+            self.slice4.add_module(str(x), vgg_pretrained_features[x])
+
+        # fc6, fc7 without atrous conv
+        self.slice5 = torch.nn.Sequential(
+            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
+            nn.Conv2d(512, 1024, kernel_size=3, padding=6, dilation=6),
+            nn.Conv2d(1024, 1024, kernel_size=1)
+        )
+
+        if not pretrained:
+            init_weights(self.slice1.modules())
+            init_weights(self.slice2.modules())
+            init_weights(self.slice3.modules())
+            init_weights(self.slice4.modules())
+
+        init_weights(self.slice5.modules()) # no pretrained model for fc6 and fc7
+
+        if freeze:
+            for param in self.slice1.parameters(): # only first conv
+                param.requires_grad= False
+
+    def forward(self, X):
+        h = self.slice1(X)
+        h_relu2_2 = h
+        h = self.slice2(h)
+        h_relu3_2 = h
+        h = self.slice3(h)
+        h_relu4_3 = h
+        h = self.slice4(h)
+        h_relu5_3 = h
+        h = self.slice5(h)
+        h_fc7 = h
+        vgg_outputs = namedtuple("VggOutputs", ['fc7', 'relu5_3', 'relu4_3', 'relu3_2', 'relu2_2'])
+        out = vgg_outputs(h_fc7, h_relu5_3, h_relu4_3, h_relu3_2, h_relu2_2)
+        return out
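The `slice5` block in `basenet/vgg16_bn.py` stacks a 3x3 max-pool (stride 1, padding 1), a dilated 3x3 convolution (padding 6, dilation 6) and a 1x1 convolution. A quick way to see what these settings do to the feature-map size is to evaluate the output-size formula that PyTorch documents for `Conv2d` and `MaxPool2d`. The sketch below is only an illustration: `conv_out_size` and the 48-pixel input are hypothetical and not part of the commit.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0, dilation=1):
    """Output length along one spatial axis for a conv or pooling layer."""
    # A dilated kernel covers dilation * (kernel_size - 1) + 1 input positions.
    effective_kernel = dilation * (kernel_size - 1) + 1
    return (size + 2 * padding - effective_kernel) // stride + 1


# Hypothetical 48-pixel-wide feature map entering slice5.
after_pool = conv_out_size(48, kernel_size=3, stride=1, padding=1)
after_dilated = conv_out_size(after_pool, kernel_size=3, padding=6, dilation=6)
after_pointwise = conv_out_size(after_dilated, kernel_size=1)

print(after_pool, after_dilated, after_pointwise)
```

Under these assumptions all three layers keep the spatial size unchanged, which is consistent with `craft.py` concatenating `fc7` and `relu5_3` without resizing either one.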

craft.py

ADDED

@@ -0,0 +1,76 @@
+
+import torch
+import torch.nn as nn
+import torch.nn.functional as F
+
+from basenet.vgg16_bn import vgg16_bn, init_weights
+
+class double_conv(nn.Module):
+    def __init__(self, in_ch, mid_ch, out_ch):
+        super(double_conv, self).__init__()
+        self.conv = nn.Sequential(
+            nn.Conv2d(in_ch + mid_ch, mid_ch, kernel_size=1),
+            nn.BatchNorm2d(mid_ch),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(mid_ch, out_ch, kernel_size=3, padding=1),
+            nn.BatchNorm2d(out_ch),
+            nn.ReLU(inplace=True)
+        )
+
+    def forward(self, x):
+        x = self.conv(x)
+        return x
+
+
+class CRAFT(nn.Module):
+    def __init__(self, pretrained=False, freeze=False):
+        super(CRAFT, self).__init__()
+
+        """ Base network """
+        self.basenet = vgg16_bn(pretrained, freeze)
+
+        """ U network """
+        self.upconv1 = double_conv(1024, 512, 256)
+        self.upconv2 = double_conv(512, 256, 128)
+        self.upconv3 = double_conv(256, 128, 64)
+        self.upconv4 = double_conv(128, 64, 32)
+
+        num_class = 2
+        self.conv_cls = nn.Sequential(
+            nn.Conv2d(32, 32, kernel_size=3, padding=1), nn.ReLU(inplace=True),
+            nn.Conv2d(32, 32, kernel_size=3, padding=1), nn.ReLU(inplace=True),
+            nn.Conv2d(32, 16, kernel_size=3, padding=1), nn.ReLU(inplace=True),
+            nn.Conv2d(16, 16, kernel_size=1), nn.ReLU(inplace=True),
+            nn.Conv2d(16, num_class, kernel_size=1),
+        )
+
+        init_weights(self.upconv1.modules())
+        init_weights(self.upconv2.modules())
+        init_weights(self.upconv3.modules())
+        init_weights(self.upconv4.modules())
+        init_weights(self.conv_cls.modules())
+
+    def forward(self, x):
+        """ Base network """
+        sources = self.basenet(x)
+
+        """ U network """
+        y = torch.cat([sources[0], sources[1]], dim=1)
+        y = self.upconv1(y)
+
+        y = F.interpolate(y, size=sources[2].size()[2:], mode='bilinear', align_corners=False)
+        y = torch.cat([y, sources[2]], dim=1)
+        y = self.upconv2(y)
+
+        y = F.interpolate(y, size=sources[3].size()[2:], mode='bilinear', align_corners=False)
+        y = torch.cat([y, sources[3]], dim=1)
+        y = self.upconv3(y)
+
+        y = F.interpolate(y, size=sources[4].size()[2:], mode='bilinear', align_corners=False)
+        y = torch.cat([y, sources[4]], dim=1)
+        feature = self.upconv4(y)
+
+        y = self.conv_cls(feature)
+
+        return y.permute(0,2,3,1), feature
+
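The `double_conv(in_ch, mid_ch, out_ch)` arguments in `craft.py` only work if each concatenation in `CRAFT.forward` yields exactly `in_ch + mid_ch` channels. The following sketch checks that bookkeeping in plain Python, without PyTorch. The channel counts in `SOURCES` are an assumption based on the standard VGG16-BN layout and the 1024-channel `slice5` block; `trace_channels` is a hypothetical helper, not part of the commit.

```python
# Channel counts of the five backbone outputs (assumed: standard VGG16-BN
# layout plus the 1024-channel fc7 block from basenet/vgg16_bn.py).
SOURCES = {'fc7': 1024, 'relu5_3': 512, 'relu4_3': 512, 'relu3_2': 256, 'relu2_2': 128}

# (in_ch, mid_ch, out_ch) arguments passed to double_conv in CRAFT.__init__.
UPCONVS = [(1024, 512, 256), (512, 256, 128), (256, 128, 64), (128, 64, 32)]


def trace_channels():
    """Return the channel count entering each upconv stage and the final count."""
    skips = ['relu5_3', 'relu4_3', 'relu3_2', 'relu2_2']
    current = SOURCES['fc7']
    stage_inputs = []
    for (in_ch, mid_ch, out_ch), skip in zip(UPCONVS, skips):
        concatenated = current + SOURCES[skip]
        # The first conv inside double_conv expects in_ch + mid_ch channels.
        if concatenated != in_ch + mid_ch:
            raise ValueError(f"channel mismatch at skip {skip}")
        stage_inputs.append(concatenated)
        current = out_ch
    return stage_inputs, current


stage_inputs, feature_channels = trace_channels()
print(stage_inputs, feature_channels)
```

If the assumed channel counts hold, the final `feature` tensor has 32 channels, matching the first layer of `conv_cls`.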

craft_utils.py

ADDED

@@ -0,0 +1,217 @@
+
+
+import numpy as np
+import cv2
+import math
+
+def warpCoord(Minv, pt):
+    out = np.matmul(Minv, (pt[0], pt[1], 1))
+    return np.array([out[0]/out[2], out[1]/out[2]])
+
+
+def getDetBoxes_core(textmap, linkmap, text_threshold, link_threshold, low_text):
+    linkmap = linkmap.copy()
+    textmap = textmap.copy()
+    img_h, img_w = textmap.shape
+
+    ret, text_score = cv2.threshold(textmap, low_text, 1, 0)
+    ret, link_score = cv2.threshold(linkmap, link_threshold, 1, 0)
+
+    text_score_comb = np.clip(text_score + link_score, 0, 1)
+    nLabels, labels, stats, centroids = cv2.connectedComponentsWithStats(text_score_comb.astype(np.uint8), connectivity=4)
+
+    det = []
+    mapper = []
+    for k in range(1,nLabels):
+        size = stats[k, cv2.CC_STAT_AREA]
+        if size < 10: continue
+
+        if np.max(textmap[labels==k]) < text_threshold: continue
+
+        segmap = np.zeros(textmap.shape, dtype=np.uint8)
+        segmap[labels==k] = 255
+        segmap[np.logical_and(link_score==1, text_score==0)] = 0
+        x, y = stats[k, cv2.CC_STAT_LEFT], stats[k, cv2.CC_STAT_TOP]
+        w, h = stats[k, cv2.CC_STAT_WIDTH], stats[k, cv2.CC_STAT_HEIGHT]
+        niter = int(math.sqrt(size * min(w, h) / (w * h)) * 2)
+        sx, ex, sy, ey = x - niter, x + w + niter + 1, y - niter, y + h + niter + 1
+        if sx < 0 : sx = 0
+        if sy < 0 : sy = 0
+        if ex >= img_w: ex = img_w
+        if ey >= img_h: ey = img_h
+        kernel = cv2.getStructuringElement(cv2.MORPH_RECT,(1 + niter, 1 + niter))
+        segmap[sy:ey, sx:ex] = cv2.dilate(segmap[sy:ey, sx:ex], kernel)
+
+        np_contours = np.roll(np.array(np.where(segmap!=0)),1,axis=0).transpose().reshape(-1,2)
+        rectangle = cv2.minAreaRect(np_contours)
+        box = cv2.boxPoints(rectangle)
+
+        w, h = np.linalg.norm(box[0] - box[1]), np.linalg.norm(box[1] - box[2])
+        box_ratio = max(w, h) / (min(w, h) + 1e-5)
+        if abs(1 - box_ratio) <= 0.1:
+            l, r = min(np_contours[:,0]), max(np_contours[:,0])
+            t, b = min(np_contours[:,1]), max(np_contours[:,1])
+            box = np.array([[l, t], [r, t], [r, b], [l, b]], dtype=np.float32)
+
+        startidx = box.sum(axis=1).argmin()
+        box = np.roll(box, 4-startidx, 0)
+        box = np.array(box)
+
+        det.append(box)
+        mapper.append(k)
+
+    return det, labels, mapper
+
+def getPoly_core(boxes, labels, mapper, linkmap):
+    num_cp = 5
+    max_len_ratio = 0.7
+    expand_ratio = 1.45
+    max_r = 2.0
+    step_r = 0.2
+
+    polys = []
+    for k, box in enumerate(boxes):
+        w, h = int(np.linalg.norm(box[0] - box[1]) + 1), int(np.linalg.norm(box[1] - box[2]) + 1)
+        if w < 10 or h < 10:
+            polys.append(None); continue
+
+        tar = np.float32([[0,0],[w,0],[w,h],[0,h]])
+        M = cv2.getPerspectiveTransform(box, tar)
+        word_label = cv2.warpPerspective(labels, M, (w, h), flags=cv2.INTER_NEAREST)
+        try:
+            Minv = np.linalg.inv(M)
+        except:
+            polys.append(None); continue
+
+        cur_label = mapper[k]
+        word_label[word_label != cur_label] = 0
+        word_label[word_label > 0] = 1
+
+        cp = []
+        max_len = -1
+        for i in range(w):
+            region = np.where(word_label[:,i] != 0)[0]
+            if len(region) < 2 : continue
+            cp.append((i, region[0], region[-1]))
+            length = region[-1] - region[0] + 1
+            if length > max_len: max_len = length
+
+        if h * max_len_ratio < max_len:
+            polys.append(None); continue
+
+        tot_seg = num_cp * 2 + 1
+        seg_w = w / tot_seg
+        pp = [None] * num_cp
+        cp_section = [[0, 0]] * tot_seg
+        seg_height = [0] * num_cp
+        seg_num = 0
+        num_sec = 0
+        prev_h = -1
+        for i in range(0,len(cp)):
+            (x, sy, ey) = cp[i]
+            if (seg_num + 1) * seg_w <= x and seg_num <= tot_seg:
+                # average previous segment
+                if num_sec == 0: break
+                cp_section[seg_num] = [cp_section[seg_num][0] / num_sec, cp_section[seg_num][1] / num_sec]
+                num_sec = 0
+
+                # reset variables
+                seg_num += 1
+                prev_h = -1
+
+            # accumulate center points
+            cy = (sy + ey) * 0.5
+            cur_h = ey - sy + 1
+            cp_section[seg_num] = [cp_section[seg_num][0] + x, cp_section[seg_num][1] + cy]
+            num_sec += 1
+
+            if seg_num % 2 == 0: continue # No polygon area
+
+            if prev_h < cur_h:
+                pp[int((seg_num - 1)/2)] = (x, cy)
+                seg_height[int((seg_num - 1)/2)] = cur_h
+                prev_h = cur_h
+
+        # processing last segment
+        if num_sec != 0:
+            cp_section[-1] = [cp_section[-1][0] / num_sec, cp_section[-1][1] / num_sec]
+
+        # pass if num of pivots is not sufficient or segment widh is smaller than character height
+        if None in pp or seg_w < np.max(seg_height) * 0.25:
+            polys.append(None); continue
+
+        # calc median maximum of pivot points
+        half_char_h = np.median(seg_height) * expand_ratio / 2
+
+        # calc gradiant and apply to make horizontal pivots
+        new_pp = []
+        for i, (x, cy) in enumerate(pp):
+            dx = cp_section[i * 2 + 2][0] - cp_section[i * 2][0]
+            dy = cp_section[i * 2 + 2][1] - cp_section[i * 2][1]
+            if dx == 0: # gradient if zero
+                new_pp.append([x, cy - half_char_h, x, cy + half_char_h])
+                continue
+            rad = - math.atan2(dy, dx)
+            c, s = half_char_h * math.cos(rad), half_char_h * math.sin(rad)
+            new_pp.append([x - s, cy - c, x + s, cy + c])
+
+        # get edge points to cover character heatmaps
+        isSppFound, isEppFound = False, False
+        grad_s = (pp[1][1] - pp[0][1]) / (pp[1][0] - pp[0][0]) + (pp[2][1] - pp[1][1]) / (pp[2][0] - pp[1][0])
+        grad_e = (pp[-2][1] - pp[-1][1]) / (pp[-2][0] - pp[-1][0]) + (pp[-3][1] - pp[-2][1]) / (pp[-3][0] - pp[-2][0])
+        for r in np.arange(0.5, max_r, step_r):
+            dx = 2 * half_char_h * r
+            if not isSppFound:
+                line_img = np.zeros(word_label.shape, dtype=np.uint8)
+                dy = grad_s * dx
+                p = np.array(new_pp[0]) - np.array([dx, dy, dx, dy])
+                cv2.line(line_img, (int(p[0]), int(p[1])), (int(p[2]), int(p[3])), 1, thickness=1)
+                if np.sum(np.logical_and(word_label, line_img)) == 0 or r + 2 * step_r >= max_r:
+                    spp = p
+                    isSppFound = True
+            if not isEppFound:
+                line_img = np.zeros(word_label.shape, dtype=np.uint8)
+                dy = grad_e * dx
+                p = np.array(new_pp[-1]) + np.array([dx, dy, dx, dy])
+                cv2.line(line_img, (int(p[0]), int(p[1])), (int(p[2]), int(p[3])), 1, thickness=1)
+                if np.sum(np.logical_and(word_label, line_img)) == 0 or r + 2 * step_r >= max_r:
+                    epp = p
+                    isEppFound = True
+            if isSppFound and isEppFound:
+                break
+
+        if not (isSppFound and isEppFound):
+            polys.append(None); continue
+
+        poly = []
+        poly.append(warpCoord(Minv, (spp[0], spp[1])))
+        for p in new_pp:
+            poly.append(warpCoord(Minv, (p[0], p[1])))
+        poly.append(warpCoord(Minv, (epp[0], epp[1])))
+        poly.append(warpCoord(Minv, (epp[2], epp[3])))
+        for p in reversed(new_pp):
+            poly.append(warpCoord(Minv, (p[2], p[3])))
+        poly.append(warpCoord(Minv, (spp[2], spp[3])))
+
+        # add to final result
+        polys.append(np.array(poly))
+
+    return polys
+
+def getDetBoxes(textmap, linkmap, text_threshold, link_threshold, low_text, poly=False):
+    boxes, labels, mapper = getDetBoxes_core(textmap, linkmap, text_threshold, link_threshold, low_text)
+
+    if poly:
+        polys = getPoly_core(boxes, labels, mapper, linkmap)
+    else:
+        polys = [None] * len(boxes)
+
+    return boxes, polys
+
+def adjustResultCoordinates(polys, ratio_w, ratio_h, ratio_net = 2):
+    if len(polys) > 0:
+        polys = np.array(polys)
+        for k in range(len(polys)):
+            if polys[k] is not None:
+                polys[k] *= (ratio_w * ratio_net, ratio_h * ratio_net)
+    return polys
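Two small pieces of `craft_utils.py` are easy to try in isolation: `warpCoord` maps a point through a 3x3 matrix using homogeneous coordinates, and `adjustResultCoordinates` rescales detections from heatmap space back to image space. The sketch below reproduces both ideas with NumPy only. `warp_coord` and `scale_polys` are hypothetical names, and `scale_polys` deliberately loops over a plain list instead of calling `np.array(polys)`, since recent NumPy releases can reject a list that mixes arrays and `None`.

```python
import numpy as np


def warp_coord(m_inv, pt):
    """Map a 2-D point through a 3x3 homography using homogeneous coordinates."""
    out = np.matmul(m_inv, np.array([pt[0], pt[1], 1.0]))
    # Divide by the third component to return to Cartesian coordinates.
    return np.array([out[0] / out[2], out[1] / out[2]])


def scale_polys(polys, ratio_w, ratio_h, ratio_net=2):
    """Rescale every non-None polygon; None entries are passed through."""
    factor = np.array([ratio_w * ratio_net, ratio_h * ratio_net], dtype=np.float32)
    scaled = []
    for poly in polys:
        if poly is None:
            scaled.append(None)
        else:
            scaled.append(np.asarray(poly, dtype=np.float32) * factor)
    return scaled


# A pure translation by (+5, -3) written as a homography.
translate = np.array([[1.0, 0.0, 5.0],
                      [0.0, 1.0, -3.0],
                      [0.0, 0.0, 1.0]])
moved = warp_coord(translate, (2.0, 4.0))

box = [[0, 0], [10, 0], [10, 4], [0, 4]]
scaled = scale_polys([box, None], ratio_w=1.5, ratio_h=2.0)
print(moved, scaled[0].tolist())
```

The default `ratio_net=2` mirrors the default in `adjustResultCoordinates`; the other ratios here are arbitrary illustration values.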

dino2/__pycache__/model.cpython-310.pyc

ADDED

Binary file (2.85 kB)

dino2/model.py

ADDED

@@ -0,0 +1,93 @@
+import torch
+import torch.nn as nn
+from torch.hub import load
+import torchvision.models as models
+
+
+dino_backbones = {
+    'dinov2_s':{
+        'name':'dinov2_vits14',
+        'embedding_size':384,
+        'patch_size':14
+    },
+    'dinov2_b':{
+        'name':'dinov2_vitb14',
+        'embedding_size':768,
+        'patch_size':14
+    },
+    'dinov2_l':{
+        'name':'dinov2_vitl14',
+        'embedding_size':1024,
+        'patch_size':14
+    },
+    'dinov2_g':{
+        'name':'dinov2_vitg14',
+        'embedding_size':1536,
+        'patch_size':14
+    },
+}
+
+
+class linear_head(nn.Module):
+    def __init__(self, embedding_size = 384, num_classes = 5):
+        super(linear_head, self).__init__()
+        self.fc = nn.Linear(embedding_size, num_classes)
+
+    def forward(self, x):
+        return self.fc(x)
+
+
+class conv_head(nn.Module):
+    def __init__(self, embedding_size = 384, num_classes = 5):
+        super(conv_head, self).__init__()
+        self.segmentation_conv = nn.Sequential(
+            nn.Upsample(scale_factor=2),
+            nn.Conv2d(embedding_size, 64, (3,3), padding=(1,1)),
+            nn.Upsample(scale_factor=2),
+            nn.Conv2d(64, num_classes, (3,3), padding=(1,1)),
+        )
+
+    def forward(self, x):
+        x = self.segmentation_conv(x)
+        x = torch.sigmoid(x)
+
+
+        return x
+
+
+def threshold_mask(predicted, threshold=0.55):
+    thresholded_mask = (predicted > threshold).float()
+    return thresholded_mask
+
+
+
+
+class Segmentor(nn.Module):
+    def __init__(self, device,num_classes, backbone = 'dinov2_s', head = 'conv', backbones = dino_backbones):
+        super(Segmentor, self).__init__()
+        self.heads = {
+            'conv':conv_head
+        }
+        self.backbones = dino_backbones
+        self.backbone = load('facebookresearch/dinov2', self.backbones[backbone]['name'])
+
+        self.backbone.eval()
+        self.num_classes = num_classes
+        self.embedding_size = self.backbones[backbone]['embedding_size']
+        self.patch_size = self.backbones[backbone]['patch_size']
+        self.head = self.heads[head](self.embedding_size,self.num_classes)
+        self.device=device
+
+    def forward(self, x):
+        batch_size = x.shape[0]
+        mask_dim = (x.shape[2] / self.patch_size, x.shape[3] / self.patch_size)
+        x = self.backbone.forward_features(x.to(self.device))
+
+        x = x['x_norm_patchtokens']
+        x = x.permute(0,2,1)
+        x = x.reshape(batch_size,self.embedding_size,int(mask_dim[0]),int(mask_dim[1]))
+        x = self.head(x)
+        return x
+
+
+
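`Segmentor.forward` in `dino2/model.py` reshapes the patch tokens into a grid of `H / patch_size` by `W / patch_size` cells, and `conv_head` then applies two `nn.Upsample(scale_factor=2)` steps. The arithmetic can be sketched without loading any model. `mask_shape` below is a hypothetical helper, and it assumes the input height and width are exact multiples of the patch size, which the `int(...)` truncation in the commit does not itself enforce.

```python
def mask_shape(height, width, patch_size=14, upsample_steps=2, scale_factor=2):
    """Return (token grid, output mask size) for a given input resolution."""
    if height % patch_size or width % patch_size:
        raise ValueError("height and width must be multiples of patch_size")
    grid_h, grid_w = height // patch_size, width // patch_size
    # Each Upsample step multiplies both spatial dimensions by scale_factor.
    scale = scale_factor ** upsample_steps
    return (grid_h, grid_w), (grid_h * scale, grid_w * scale)


grid, mask = mask_shape(448, 448)
print(grid, mask)
```

Under these assumptions the predicted mask is smaller than the input by a factor of 14 / 4 = 3.5 per side, so a caller would presumably resize it back to the image resolution afterwards.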

file_utils.py

ADDED

@@ -0,0 +1,77 @@
+# -*- coding: utf-8 -*-
+import os
+import numpy as np
+import cv2
+import imgproc
+from mosaik import mosaik
+# borrowed from https://github.com/lengstrom/fast-style-transfer/blob/master/src/utils.py
+def get_files(img_dir):
+    imgs, masks, xmls = list_files(img_dir)
+    return imgs, masks, xmls
+
+def list_files(in_path):
+    img_files = []
+    mask_files = []
+    gt_files = []
+    for (dirpath, dirnames, filenames) in os.walk(in_path):
+        for file in filenames:
+            filename, ext = os.path.splitext(file)
+            ext = str.lower(ext)
+            if ext == '.jpg' or ext == '.jpeg' or ext == '.gif' or ext == '.png' or ext == '.pgm':
+                img_files.append(os.path.join(dirpath, file))
+            elif ext == '.bmp':
+                mask_files.append(os.path.join(dirpath, file))
+            elif ext == '.xml' or ext == '.gt' or ext == '.txt':
+                gt_files.append(os.path.join(dirpath, file))
+            elif ext == '.zip':
+                continue
+    # img_files.sort()
+    # mask_files.sort()
+    # gt_files.sort()
+    return img_files, mask_files, gt_files
+
+def saveResult(img_file, img, boxes, dirname='./result/', verticals=None, texts=None):
+    """ save text detection result one by one
+    Args:
+        img_file (str): image file name
+        img (array): raw image context
+        boxes (array): array of result file
+            Shape: [num_detections, 4] for BB output / [num_detections, 4] for QUAD output
+    Return:
+        None
+    """
+    img = np.array(img)
+
+    # make result file list
+    filename, file_ext = os.path.splitext(os.path.basename(img_file))
+
+    # result directory
+    res_file = dirname + "res_" + filename + '.txt'
+    res_img_file = dirname + "res_" + filename + '.jpg'
+
+    if not os.path.isdir(dirname):
+        os.mkdir(dirname)
+
+    with open(res_file, 'w') as f:
+        for i, box in enumerate(boxes):
+            poly = np.array(box).astype(np.int32).reshape((-1))
+            strResult = ','.join([str(p) for p in poly]) + '\r\n'
+            f.write(strResult)
+
+            poly = poly.reshape(-1, 2)
+            cv2.polylines(img, [poly.reshape((-1, 1, 2))], True, color=(0, 0, 255), thickness=2)
+            ptColor = (0, 255, 255)
+            if verticals is not None:
+                if verticals[i]:
+                    ptColor = (255, 0, 0)
+
+            if texts is not None:
+                font = cv2.FONT_HERSHEY_SIMPLEX
+                font_scale = 0.5
+                cv2.putText(img, "{}".format(texts[i]), (poly[0][0]+1, poly[0][1]+1), font, font_scale, (0, 0, 0), thickness=1)
+                cv2.putText(img, "{}".format(texts[i]), tuple(poly[0]), font, font_scale, (0, 255, 255), thickness=1)
+
+    # Save result image
+    cv2.imwrite(res_img_file, img)
+    return img
+
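`list_files` in `file_utils.py` sorts paths into images, masks and ground-truth files purely by lowercased extension. The same rule can be exercised without touching the filesystem. The sketch below is a hypothetical stand-in (`classify` and the extension sets are illustrative names) that takes a list of paths instead of walking a directory.

```python
import os

IMAGE_EXTS = {'.jpg', '.jpeg', '.gif', '.png', '.pgm'}
MASK_EXTS = {'.bmp'}
GT_EXTS = {'.xml', '.gt', '.txt'}


def classify(paths):
    """Split paths into (images, masks, ground truth) by file extension."""
    imgs, masks, gts = [], [], []
    for path in paths:
        ext = os.path.splitext(path)[1].lower()
        if ext in IMAGE_EXTS:
            imgs.append(path)
        elif ext in MASK_EXTS:
            masks.append(path)
        elif ext in GT_EXTS:
            gts.append(path)
        # Anything else (for example .zip archives) is ignored.
    return imgs, masks, gts


imgs, masks, gts = classify(['in/1.PNG', 'in/mask.bmp', 'in/gt_1.txt', 'in/data.zip'])
print(imgs, masks, gts)
```

Note that `.bmp` files count as masks rather than images under this rule, so a BMP input image would be skipped by the image branch.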

imgproc.py

ADDED

@@ -0,0 +1,70 @@
+"""
+Copyright (c) 2019-present NAVER Corp.
+MIT License
+"""
+
+# -*- coding: utf-8 -*-
+import numpy as np
+from skimage import io
+import cv2
+
+def loadImage(img_file):
+    img = io.imread(img_file) # RGB order
+    if img.shape[0] == 2: img = img[0]
+    if len(img.shape) == 2 : img = cv2.cvtColor(img, cv2.COLOR_GRAY2RGB)
+    if img.shape[2] == 4: img = img[:,:,:3]
+    img = np.array(img)
+
+    return img
+
+def normalizeMeanVariance(in_img, mean=(0.485, 0.456, 0.406), variance=(0.229, 0.224, 0.225)):
+    # should be RGB order
+    img = in_img.copy().astype(np.float32)
+
+    img -= np.array([mean[0] * 255.0, mean[1] * 255.0, mean[2] * 255.0], dtype=np.float32)
+    img /= np.array([variance[0] * 255.0, variance[1] * 255.0, variance[2] * 255.0], dtype=np.float32)
+    return img
+
+def denormalizeMeanVariance(in_img, mean=(0.485, 0.456, 0.406), variance=(0.229, 0.224, 0.225)):
+    # should be RGB order
+    img = in_img.copy()
+    img *= variance
+    img += mean
+    img *= 255.0
+    img = np.clip(img, 0, 255).astype(np.uint8)
+    return img
+
+def resize_aspect_ratio(img, square_size, interpolation, mag_ratio=1):
+    height, width, channel = img.shape
+
+    # magnify image size
+    target_size = mag_ratio * max(height, width)
+
+    # set original image size
+    if target_size > square_size:
+        target_size = square_size
+
+    ratio = target_size / max(height, width)
+
+    target_h, target_w = int(height * ratio), int(width * ratio)
+    proc = cv2.resize(img, (target_w, target_h), interpolation = interpolation)
+
+
+    # make canvas and paste image
+    target_h32, target_w32 = target_h, target_w
+    if target_h % 32 != 0:

|

| 56 |

+

target_h32 = target_h + (32 - target_h % 32)

|

| 57 |

+

if target_w % 32 != 0:

|

| 58 |

+

target_w32 = target_w + (32 - target_w % 32)

|

| 59 |

+

resized = np.zeros((target_h32, target_w32, channel), dtype=np.float32)

|

| 60 |

+

resized[0:target_h, 0:target_w, :] = proc

|

| 61 |

+

target_h, target_w = target_h32, target_w32

|

| 62 |

+

|

| 63 |

+

size_heatmap = (int(target_w/2), int(target_h/2))

|

| 64 |

+

|

| 65 |

+

return resized, ratio, size_heatmap

|

| 66 |

+

|

| 67 |

+

def cvt2HeatmapImg(img):

|

| 68 |

+

img = (np.clip(img, 0, 1) * 255).astype(np.uint8)

|

| 69 |

+

img = cv2.applyColorMap(img, cv2.COLORMAP_JET)

|

| 70 |

+

return img

|
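The size arithmetic in `resize_aspect_ratio` can be followed without loading an image. The sketch below reproduces only the numbers (scale ratio, resized shape, canvas padded up to a multiple of 32, half-resolution heatmap size). The names `pad_to_multiple` and `plan_resize` are hypothetical and do not appear in `imgproc.py`.

```python
def pad_to_multiple(size, multiple=32):
    # Round size up to the next multiple; leave it unchanged if already aligned.
    remainder = size % multiple
    return size if remainder == 0 else size + (multiple - remainder)

def plan_resize(height, width, square_size, mag_ratio=1.0):
    # Magnify the longer side, but cap it at square_size.
    target_size = min(mag_ratio * max(height, width), square_size)
    ratio = target_size / max(height, width)
    target_h, target_w = int(height * ratio), int(width * ratio)
    # The canvas is zero-padded so both sides are multiples of 32.
    padded_h, padded_w = pad_to_multiple(target_h), pad_to_multiple(target_w)
    # The heatmap is half the padded canvas size, given as (width, height).
    size_heatmap = (padded_w // 2, padded_h // 2)
    return ratio, (target_h, target_w), (padded_h, padded_w), size_heatmap

# A 600x800 image with the defaults from main.py (canvas_size=1280, mag_ratio=1.5).
ratio, resized_hw, padded_hw, size_heatmap = plan_resize(600, 800, 1280, 1.5)
```

In this case the image is scaled by 1.5 to 900x1200 and pasted into the top-left corner of a 928x1216 canvas, so detected coordinates are later mapped back by multiplying with `1 / ratio`.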

input/1.png

ADDED

|

input/2.png

ADDED

|

input/3.png

ADDED

|

input/4.png

ADDED

|

install.sh

ADDED

|

@@ -0,0 +1 @@

|

| 1 |

+

pip install

|

main.py

ADDED

|

@@ -0,0 +1,354 @@

|

| 1 |

+

from recognize import recongize

|

| 2 |

+

from ner import ner

|

| 3 |

+

import os

|

| 4 |

+

import time

|

| 5 |

+

import argparse

|

| 6 |

+

from sr.sr import sr

|

| 7 |

+

import torch

|

| 8 |

+

from scipy.ndimage import gaussian_filter

|

| 9 |

+

from PIL import Image

|

| 10 |

+

import numpy as np

|

| 11 |

+

import torch.nn as nn

|

| 12 |

+

import torch.backends.cudnn as cudnn

|

| 13 |

+

from torch.autograd import Variable

|

| 14 |

+

from mosaik import mosaik

|

| 15 |

+

from PIL import Image

|

| 16 |

+

import cv2

|

| 17 |

+

from skimage import io

|

| 18 |

+

import numpy as np

|

| 19 |

+

import craft_utils

|

| 20 |

+

import imgproc

|

| 21 |

+

import file_utils

|

| 22 |

+

from seg import mask_percentage

|

| 23 |

+

|

| 24 |

+

from seg2 import dino_seg

|

| 25 |

+

|

| 26 |

+

from craft import CRAFT

|

| 27 |

+

from collections import OrderedDict

|

| 28 |

+

import gradio as gr

|

| 29 |

+

from refinenet import RefineNet

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

# code for loading the craft and refine models

|

| 33 |

+

def copyStateDict(state_dict):

|

| 34 |

+

if list(state_dict.keys())[0].startswith("module"):

|

| 35 |

+

start_idx = 1

|

| 36 |

+

else:

|

| 37 |

+

start_idx = 0

|

| 38 |

+

new_state_dict = OrderedDict()

|

| 39 |

+

for k, v in state_dict.items():

|

| 40 |

+

name = ".".join(k.split(".")[start_idx:])

|

| 41 |

+

new_state_dict[name] = v

|

| 42 |

+

return new_state_dict

|

| 43 |

+

|

| 44 |

+

def str2bool(v):

|

| 45 |

+

return v.lower() in ("yes", "y", "true", "t", "1")

|

| 46 |

+

|

| 47 |

+

parser = argparse.ArgumentParser(description='CRAFT Text Detection')

|

| 48 |

+

parser.add_argument('--trained_model', default='weights/craft_mlt_25k.pth', type=str, help='pretrained craft model')

|

| 49 |

+

parser.add_argument('--text_threshold', default=0.7, type=float, help='text confidence threshold')

|

| 50 |

+

parser.add_argument('--low_text', default=0.4, type=float, help='text low-bound score')

|

| 51 |

+

parser.add_argument('--link_threshold', default=0.4, type=float, help='link confidence threshold')

|

| 52 |

+

parser.add_argument('--cuda', default=True, type=str2bool, help='Use cuda for inference')

|

| 53 |

+

parser.add_argument('--canvas_size', default=1280, type=int, help='image size for inference')

|

| 54 |

+

parser.add_argument('--mag_ratio', default=1.5, type=float, help='image magnification ratio')

|

| 55 |

+

parser.add_argument('--poly', default=False, action='store_true', help='enable polygon type')

|

| 56 |

+

parser.add_argument('--refine', default=True, help='enable link refiner')

|

| 57 |

+

parser.add_argument('--image_path', default="input/2.png", help='input image')

|

| 58 |

+

parser.add_argument('--refiner_model', default='weights/craft_refiner_CTW1500.pth', type=str, help='pretrained refiner model')

|

| 59 |

+

|

| 60 |

+

args = parser.parse_args()

|

| 61 |

+

# options below

|

| 62 |

+

def full_img_masking(full_image,net,refine_net):

|

| 63 |

+

reference_image=sr(full_image)

|

| 64 |

+

reference_boxes=text_detect(reference_image,net=net,refine_net=refine_net)

|

| 65 |

+

boxes=get_box_from_refer(reference_boxes)

|

| 66 |

+

for index2,box in enumerate(boxes):

|

| 67 |

+

xmin,xmax,ymin,ymax=get_min_max(box)

|

| 68 |

+

|

| 69 |

+

text_area=full_image[int(ymin):int(ymax),int(xmin):int(xmax),:]

|

| 70 |

+

|

| 71 |

+

text=recongize(text_area)

|

| 72 |

+

label=ner(text)

|

| 73 |

+

|

| 74 |

+

if label==1:

|

| 75 |

+

A=full_image[int(ymin):int(ymax),int(xmin):int(xmax),:]

|

| 76 |

+

full_image[int(ymin):int(ymax),int(xmin):int(xmax),:] = gaussian_filter(A, sigma=16)

|

| 77 |

+

return full_image

|

| 78 |

+

|

| 79 |

+

def test_net(net, image, text_threshold, link_threshold, low_text, cuda, poly, refine_net=None):

|

| 80 |

+

t0 = time.time()

|

| 81 |

+

|

| 82 |

+

img_resized, target_ratio, size_heatmap = imgproc.resize_aspect_ratio(image, args.canvas_size, interpolation=cv2.INTER_LINEAR, mag_ratio=args.mag_ratio)

|

| 83 |

+

ratio_h = ratio_w = 1 / target_ratio

|

| 84 |

+

|

| 85 |

+

x = imgproc.normalizeMeanVariance(img_resized)

|

| 86 |

+

x = torch.from_numpy(x).permute(2, 0, 1)

|

| 87 |

+

x = Variable(x.unsqueeze(0))

|

| 88 |

+

if cuda:

|

| 89 |

+

x = x.cuda()

|

| 90 |

+

|

| 91 |

+

with torch.no_grad():

|

| 92 |

+

y, feature = net(x)

|

| 93 |

+

|

| 94 |

+

score_text = y[0,:,:,0].cpu().data.numpy()

|

| 95 |

+

score_link = y[0,:,:,1].cpu().data.numpy()

|

| 96 |

+

|

| 97 |

+

if refine_net is not None:

|

| 98 |

+

with torch.no_grad():

|

| 99 |

+

y_refiner = refine_net(y, feature)

|

| 100 |

+

score_link = y_refiner[0,:,:,0].cpu().data.numpy()

|

| 101 |

+

|

| 102 |

+

t0 = time.time() - t0

|

| 103 |

+

t1 = time.time()

|

| 104 |

+

|

| 105 |

+

boxes, polys = craft_utils.getDetBoxes(score_text, score_link, text_threshold, link_threshold, low_text, poly)

|

| 106 |

+

|

| 107 |

+

boxes = craft_utils.adjustResultCoordinates(boxes, ratio_w, ratio_h)

|

| 108 |

+

polys = craft_utils.adjustResultCoordinates(polys, ratio_w, ratio_h)

|

| 109 |

+

for k in range(len(polys)):

|

| 110 |

+

if polys[k] is None: polys[k] = boxes[k]

|

| 111 |

+

|

| 112 |

+

t1 = time.time() - t1

|

| 113 |

+

|

| 114 |

+

# render results (optional)

|

| 115 |

+

render_img = score_text.copy()

|

| 116 |

+

render_img = np.hstack((render_img, score_link))

|

| 117 |

+

ret_score_text = imgproc.cvt2HeatmapImg(render_img)

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

return boxes, polys, ret_score_text

|

| 121 |

+

|

| 122 |

+

def text_detect(image,net,refine_net):

|

| 123 |

+

|

| 124 |

+

bboxes, polys, score_text = test_net(net, image, args.text_threshold, args.link_threshold, args.low_text, args.cuda, args.poly, refine_net)

|

| 125 |

+

|

| 126 |

+

|

| 127 |

+

return bboxes

|

| 128 |

+

|

| 129 |

+

|

| 130 |

+

def get_box_from_refer(reference_boxes):

|

| 131 |

+

|

| 132 |

+

real_boxes=[]

|

| 133 |

+

for box in reference_boxes:

|

| 134 |

+

|

| 135 |

+

real_boxes.append(box//2)

|

| 136 |

+

|

| 137 |

+

return real_boxes

|

| 138 |

+

def get_min_max(box):

|

| 139 |

+

xlist=[]

|

| 140 |

+

ylist=[]

|

| 141 |

+

for coor in box:

|

| 142 |

+

xlist.append(coor[0])

|

| 143 |

+

ylist.append(coor[1])

|

| 144 |

+

return min(xlist),max(xlist),min(ylist),max(ylist)

|

| 145 |

+

|

| 146 |

+

def main(image_path0):

|

| 147 |

+

# Step 1

|

| 148 |

+

|

| 149 |

+

# ==> Load the craft and refinenet models and move them to the cuda device.

|

| 150 |

+

|

| 151 |

+

net = CRAFT()

|

| 152 |

+

if args.cuda:

|

| 153 |

+

net.load_state_dict(copyStateDict(torch.load(args.trained_model)))

|

| 154 |

+

|

| 155 |

+

if args.cuda:

|

| 156 |

+

net = net.cuda()

|