Spaces:

Running

on

L40S

Running

on

L40S

Migrated from GitHub

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +11 -0

- LightGlue/.flake8 +4 -0

- LightGlue/LICENSE +201 -0

- LightGlue/README.md +180 -0

- LightGlue/assets/DSC_0410.JPG +0 -0

- LightGlue/assets/DSC_0411.JPG +0 -0

- LightGlue/assets/architecture.svg +0 -0

- LightGlue/assets/benchmark.png +0 -0

- LightGlue/assets/benchmark_cpu.png +0 -0

- LightGlue/assets/easy_hard.jpg +0 -0

- LightGlue/assets/sacre_coeur1.jpg +0 -0

- LightGlue/assets/sacre_coeur2.jpg +0 -0

- LightGlue/assets/teaser.svg +1499 -0

- LightGlue/benchmark.py +255 -0

- LightGlue/demo.ipynb +0 -0

- LightGlue/lightglue/__init__.py +7 -0

- LightGlue/lightglue/aliked.py +758 -0

- LightGlue/lightglue/disk.py +55 -0

- LightGlue/lightglue/dog_hardnet.py +41 -0

- LightGlue/lightglue/lightglue.py +655 -0

- LightGlue/lightglue/sift.py +216 -0

- LightGlue/lightglue/superpoint.py +227 -0

- LightGlue/lightglue/utils.py +165 -0

- LightGlue/lightglue/viz2d.py +185 -0

- LightGlue/pyproject.toml +30 -0

- LightGlue/requirements.txt +6 -0

- ORIGINAL_README.md +115 -0

- cotracker/__init__.py +5 -0

- cotracker/build/lib/datasets/__init__.py +5 -0

- cotracker/build/lib/datasets/dataclass_utils.py +166 -0

- cotracker/build/lib/datasets/dr_dataset.py +161 -0

- cotracker/build/lib/datasets/kubric_movif_dataset.py +441 -0

- cotracker/build/lib/datasets/tap_vid_datasets.py +209 -0

- cotracker/build/lib/datasets/utils.py +106 -0

- cotracker/build/lib/evaluation/__init__.py +5 -0

- cotracker/build/lib/evaluation/core/__init__.py +5 -0

- cotracker/build/lib/evaluation/core/eval_utils.py +138 -0

- cotracker/build/lib/evaluation/core/evaluator.py +253 -0

- cotracker/build/lib/evaluation/evaluate.py +169 -0

- cotracker/build/lib/models/__init__.py +5 -0

- cotracker/build/lib/models/build_cotracker.py +33 -0

- cotracker/build/lib/models/core/__init__.py +5 -0

- cotracker/build/lib/models/core/cotracker/__init__.py +5 -0

- cotracker/build/lib/models/core/cotracker/blocks.py +367 -0

- cotracker/build/lib/models/core/cotracker/cotracker.py +503 -0

- cotracker/build/lib/models/core/cotracker/losses.py +61 -0

- cotracker/build/lib/models/core/embeddings.py +120 -0

- cotracker/build/lib/models/core/model_utils.py +271 -0

- cotracker/build/lib/models/evaluation_predictor.py +104 -0

- cotracker/build/lib/utils/__init__.py +5 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,14 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

figure/showcases/image1.gif filter=lfs diff=lfs merge=lfs -text

|

| 37 |

+

figure/showcases/image2.gif filter=lfs diff=lfs merge=lfs -text

|

| 38 |

+

figure/showcases/image29.gif filter=lfs diff=lfs merge=lfs -text

|

| 39 |

+

figure/showcases/image3.gif filter=lfs diff=lfs merge=lfs -text

|

| 40 |

+

figure/showcases/image30.gif filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

figure/showcases/image31.gif filter=lfs diff=lfs merge=lfs -text

|

| 42 |

+

figure/showcases/image33.gif filter=lfs diff=lfs merge=lfs -text

|

| 43 |

+

figure/showcases/image34.gif filter=lfs diff=lfs merge=lfs -text

|

| 44 |

+

figure/showcases/image35.gif filter=lfs diff=lfs merge=lfs -text

|

| 45 |

+

figure/showcases/image4.gif filter=lfs diff=lfs merge=lfs -text

|

| 46 |

+

figure/teaser.png filter=lfs diff=lfs merge=lfs -text

|

LightGlue/.flake8

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

[flake8]

|

| 2 |

+

max-line-length = 88

|

| 3 |

+

extend-ignore = E203

|

| 4 |

+

exclude = .git,__pycache__,build,.venv/

|

LightGlue/LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright 2023 ETH Zurich

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

LightGlue/README.md

ADDED

|

@@ -0,0 +1,180 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

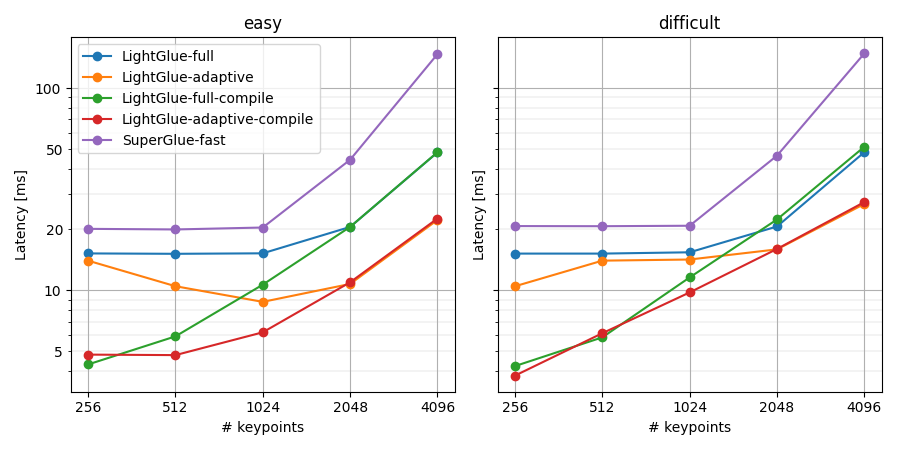

|

| 1 |

+

<p align="center">

|

| 2 |

+

<h1 align="center"><ins>LightGlue</ins> ⚡️<br>Local Feature Matching at Light Speed</h1>

|

| 3 |

+

<p align="center">

|

| 4 |

+

<a href="https://www.linkedin.com/in/philipplindenberger/">Philipp Lindenberger</a>

|

| 5 |

+

·

|

| 6 |

+

<a href="https://psarlin.com/">Paul-Edouard Sarlin</a>

|

| 7 |

+

·

|

| 8 |

+

<a href="https://www.microsoft.com/en-us/research/people/mapoll/">Marc Pollefeys</a>

|

| 9 |

+

</p>

|

| 10 |

+

<h2 align="center">

|

| 11 |

+

<p>ICCV 2023</p>

|

| 12 |

+

<a href="https://arxiv.org/pdf/2306.13643.pdf" align="center">Paper</a> |

|

| 13 |

+

<a href="https://colab.research.google.com/github/cvg/LightGlue/blob/main/demo.ipynb" align="center">Colab</a> |

|

| 14 |

+

<a href="https://psarlin.com/assets/LightGlue_ICCV2023_poster_compressed.pdf" align="center">Poster</a> |

|

| 15 |

+

<a href="https://github.com/cvg/glue-factory" align="center">Train your own!</a>

|

| 16 |

+

</h2>

|

| 17 |

+

|

| 18 |

+

</p>

|

| 19 |

+

<p align="center">

|

| 20 |

+

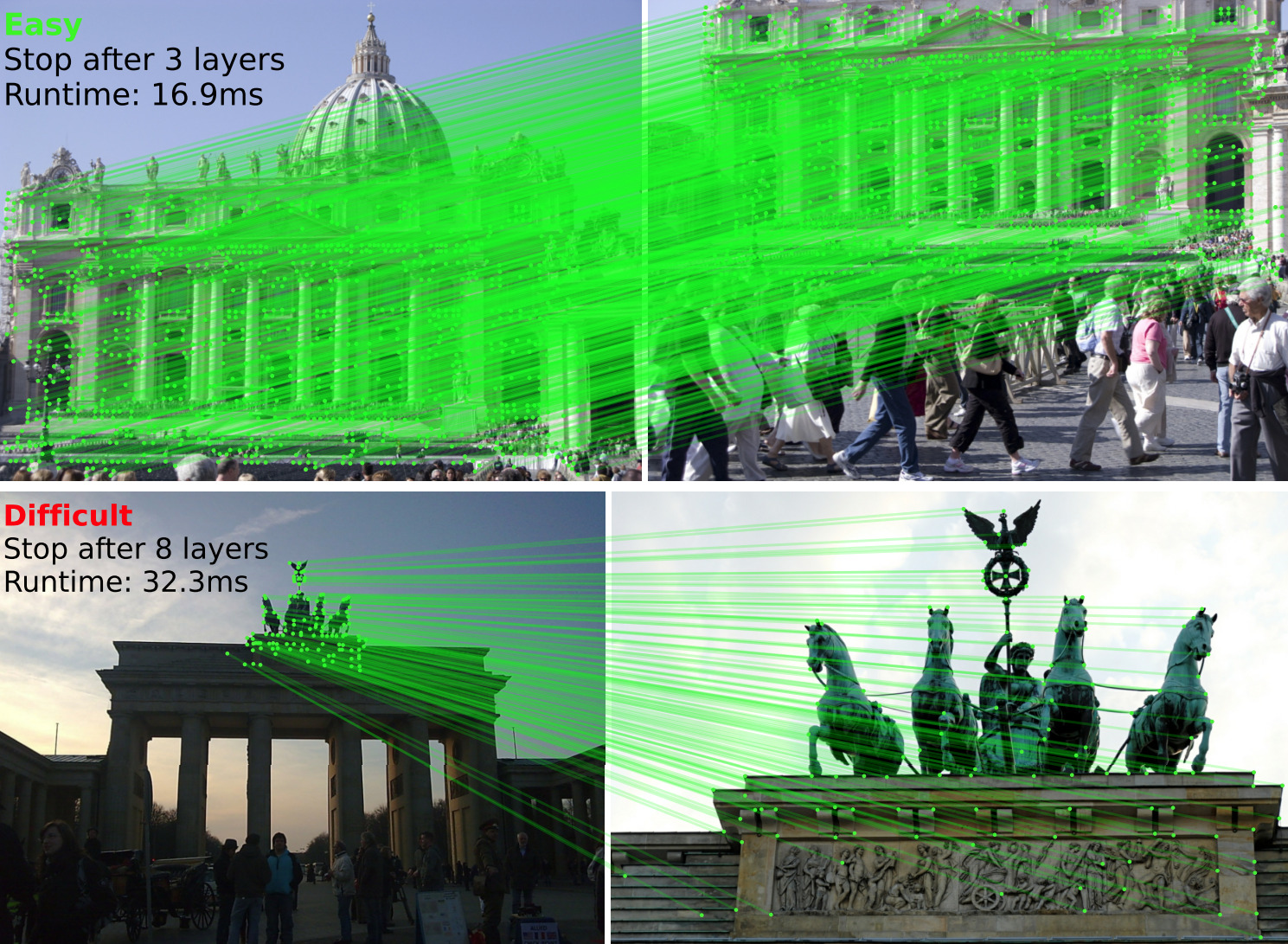

<a href="https://arxiv.org/abs/2306.13643"><img src="assets/easy_hard.jpg" alt="example" width=80%></a>

|

| 21 |

+

<br>

|

| 22 |

+

<em>LightGlue is a deep neural network that matches sparse local features across image pairs.<br>An adaptive mechanism makes it fast for easy pairs (top) and reduces the computational complexity for difficult ones (bottom).</em>

|

| 23 |

+

</p>

|

| 24 |

+

|

| 25 |

+

##

|

| 26 |

+

|

| 27 |

+

This repository hosts the inference code of LightGlue, a lightweight feature matcher with high accuracy and blazing fast inference. It takes as input a set of keypoints and descriptors for each image and returns the indices of corresponding points. The architecture is based on adaptive pruning techniques, in both network width and depth - [check out the paper for more details](https://arxiv.org/pdf/2306.13643.pdf).

|

| 28 |

+

|

| 29 |

+

We release pretrained weights of LightGlue with [SuperPoint](https://arxiv.org/abs/1712.07629), [DISK](https://arxiv.org/abs/2006.13566), [ALIKED](https://arxiv.org/abs/2304.03608) and [SIFT](https://www.cs.ubc.ca/~lowe/papers/ijcv04.pdf) local features.

|

| 30 |

+

The training and evaluation code can be found in our library [glue-factory](https://github.com/cvg/glue-factory/).

|

| 31 |

+

|

| 32 |

+

## Installation and demo [](https://colab.research.google.com/github/cvg/LightGlue/blob/main/demo.ipynb)

|

| 33 |

+

|

| 34 |

+

Install this repo using pip:

|

| 35 |

+

|

| 36 |

+

```bash

|

| 37 |

+

git clone https://github.com/cvg/LightGlue.git && cd LightGlue

|

| 38 |

+

python -m pip install -e .

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

We provide a [demo notebook](demo.ipynb) which shows how to perform feature extraction and matching on an image pair.

|

| 42 |

+

|

| 43 |

+

Here is a minimal script to match two images:

|

| 44 |

+

|

| 45 |

+

```python

|

| 46 |

+

from lightglue import LightGlue, SuperPoint, DISK, SIFT, ALIKED, DoGHardNet

|

| 47 |

+

from lightglue.utils import load_image, rbd

|

| 48 |

+

|

| 49 |

+

# SuperPoint+LightGlue

|

| 50 |

+

extractor = SuperPoint(max_num_keypoints=2048).eval().cuda() # load the extractor

|

| 51 |

+

matcher = LightGlue(features='superpoint').eval().cuda() # load the matcher

|

| 52 |

+

|

| 53 |

+

# or DISK+LightGlue, ALIKED+LightGlue or SIFT+LightGlue

|

| 54 |

+

extractor = DISK(max_num_keypoints=2048).eval().cuda() # load the extractor

|

| 55 |

+

matcher = LightGlue(features='disk').eval().cuda() # load the matcher

|

| 56 |

+

|

| 57 |

+

# load each image as a torch.Tensor on GPU with shape (3,H,W), normalized in [0,1]

|

| 58 |

+

image0 = load_image('path/to/image_0.jpg').cuda()

|

| 59 |

+

image1 = load_image('path/to/image_1.jpg').cuda()

|

| 60 |

+

|

| 61 |

+

# extract local features

|

| 62 |

+

feats0 = extractor.extract(image0) # auto-resize the image, disable with resize=None

|

| 63 |

+

feats1 = extractor.extract(image1)

|

| 64 |

+

|

| 65 |

+

# match the features

|

| 66 |

+

matches01 = matcher({'image0': feats0, 'image1': feats1})

|

| 67 |

+

feats0, feats1, matches01 = [rbd(x) for x in [feats0, feats1, matches01]] # remove batch dimension

|

| 68 |

+

matches = matches01['matches'] # indices with shape (K,2)

|

| 69 |

+

points0 = feats0['keypoints'][matches[..., 0]] # coordinates in image #0, shape (K,2)

|

| 70 |

+

points1 = feats1['keypoints'][matches[..., 1]] # coordinates in image #1, shape (K,2)

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

We also provide a convenience method to match a pair of images:

|

| 74 |

+

|

| 75 |

+

```python

|

| 76 |

+

from lightglue import match_pair

|

| 77 |

+

feats0, feats1, matches01 = match_pair(extractor, matcher, image0, image1)

|

| 78 |

+

```

|

| 79 |

+

|

| 80 |

+

##

|

| 81 |

+

|

| 82 |

+

<p align="center">

|

| 83 |

+

<a href="https://arxiv.org/abs/2306.13643"><img src="assets/teaser.svg" alt="Logo" width=50%></a>

|

| 84 |

+

<br>

|

| 85 |

+

<em>LightGlue can adjust its depth (number of layers) and width (number of keypoints) per image pair, with a marginal impact on accuracy.</em>

|

| 86 |

+

</p>

|

| 87 |

+

|

| 88 |

+

## Advanced configuration

|

| 89 |

+

|

| 90 |

+

<details>

|

| 91 |

+

<summary>[Detail of all parameters - click to expand]</summary>

|

| 92 |

+

|

| 93 |

+

- ```n_layers```: Number of stacked self+cross attention layers. Reduce this value for faster inference at the cost of accuracy (continuous red line in the plot above). Default: 9 (all layers).

|

| 94 |

+

- ```flash```: Enable FlashAttention. Significantly increases the speed and reduces the memory consumption without any impact on accuracy. Default: True (LightGlue automatically detects if FlashAttention is available).

|

| 95 |

+

- ```mp```: Enable mixed precision inference. Default: False (off)

|

| 96 |

+

- ```depth_confidence```: Controls the early stopping. A lower values stops more often at earlier layers. Default: 0.95, disable with -1.

|

| 97 |

+

- ```width_confidence```: Controls the iterative point pruning. A lower value prunes more points earlier. Default: 0.99, disable with -1.

|

| 98 |

+

- ```filter_threshold```: Match confidence. Increase this value to obtain less, but stronger matches. Default: 0.1

|

| 99 |

+

|

| 100 |

+

</details>

|

| 101 |

+

|

| 102 |

+

The default values give a good trade-off between speed and accuracy. To maximize the accuracy, use all keypoints and disable the adaptive mechanisms:

|

| 103 |

+

```python

|

| 104 |

+

extractor = SuperPoint(max_num_keypoints=None)

|

| 105 |

+

matcher = LightGlue(features='superpoint', depth_confidence=-1, width_confidence=-1)

|

| 106 |

+

```

|

| 107 |

+

|

| 108 |

+

To increase the speed with a small drop of accuracy, decrease the number of keypoints and lower the adaptive thresholds:

|

| 109 |

+

```python

|

| 110 |

+

extractor = SuperPoint(max_num_keypoints=1024)

|

| 111 |

+

matcher = LightGlue(features='superpoint', depth_confidence=0.9, width_confidence=0.95)

|

| 112 |

+

```

|

| 113 |

+

|

| 114 |

+

The maximum speed is obtained with a combination of:

|

| 115 |

+

- [FlashAttention](https://arxiv.org/abs/2205.14135): automatically used when ```torch >= 2.0``` or if [installed from source](https://github.com/HazyResearch/flash-attention#installation-and-features).

|

| 116 |

+

- PyTorch compilation, available when ```torch >= 2.0```:

|

| 117 |

+

```python

|

| 118 |

+

matcher = matcher.eval().cuda()

|

| 119 |

+

matcher.compile(mode='reduce-overhead')

|

| 120 |

+

```

|

| 121 |

+

For inputs with fewer than 1536 keypoints (determined experimentally), this compiles LightGlue but disables point pruning (large overhead). For larger input sizes, it automatically falls backs to eager mode with point pruning. Adaptive depths is supported for any input size.

|

| 122 |

+

|

| 123 |

+

## Benchmark

|

| 124 |

+

|

| 125 |

+

|

| 126 |

+

<p align="center">

|

| 127 |

+

<a><img src="assets/benchmark.png" alt="Logo" width=80%></a>

|

| 128 |

+

<br>

|

| 129 |

+

<em>Benchmark results on GPU (RTX 3080). With compilation and adaptivity, LightGlue runs at 150 FPS @ 1024 keypoints and 50 FPS @ 4096 keypoints per image. This is a 4-10x speedup over SuperGlue. </em>

|

| 130 |

+

</p>

|

| 131 |

+

|

| 132 |

+

<p align="center">

|

| 133 |

+

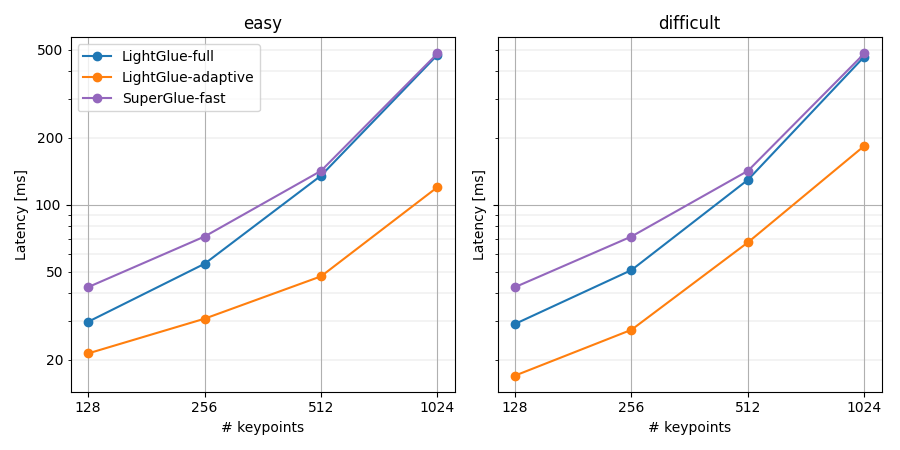

<a><img src="assets/benchmark_cpu.png" alt="Logo" width=80%></a>

|

| 134 |

+

<br>

|

| 135 |

+

<em>Benchmark results on CPU (Intel i7 10700K). LightGlue runs at 20 FPS @ 512 keypoints. </em>

|

| 136 |

+

</p>

|

| 137 |

+

|

| 138 |

+

Obtain the same plots for your setup using our [benchmark script](benchmark.py):

|

| 139 |

+

```

|

| 140 |

+

python benchmark.py [--device cuda] [--add_superglue] [--num_keypoints 512 1024 2048 4096] [--compile]

|

| 141 |

+

```

|

| 142 |

+

|

| 143 |

+

<details>

|

| 144 |

+

<summary>[Performance tip - click to expand]</summary>

|

| 145 |

+

|

| 146 |

+

Note: **Point pruning** introduces an overhead that sometimes outweighs its benefits.

|

| 147 |

+

Point pruning is thus enabled only when the there are more than N keypoints in an image, where N is hardware-dependent.

|

| 148 |

+

We provide defaults optimized for current hardware (RTX 30xx GPUs).

|

| 149 |

+

We suggest running the benchmark script and adjusting the thresholds for your hardware by updating `LightGlue.pruning_keypoint_thresholds['cuda']`.

|

| 150 |

+

|

| 151 |

+

</details>

|

| 152 |

+

|

| 153 |

+

## Training and evaluation

|

| 154 |

+

|

| 155 |

+

With [Glue Factory](https://github.com/cvg/glue-factory), you can train LightGlue with your own local features, on your own dataset!

|

| 156 |

+

You can also evaluate it and other baselines on standard benchmarks like HPatches and MegaDepth.

|

| 157 |

+

|

| 158 |

+

## Other links

|

| 159 |

+

- [hloc - the visual localization toolbox](https://github.com/cvg/Hierarchical-Localization/): run LightGlue for Structure-from-Motion and visual localization.

|

| 160 |

+

- [LightGlue-ONNX](https://github.com/fabio-sim/LightGlue-ONNX): export LightGlue to the Open Neural Network Exchange (ONNX) format with support for TensorRT and OpenVINO.

|

| 161 |

+

- [Image Matching WebUI](https://github.com/Vincentqyw/image-matching-webui): a web GUI to easily compare different matchers, including LightGlue.

|

| 162 |

+

- [kornia](https://kornia.readthedocs.io) now exposes LightGlue via the interfaces [`LightGlue`](https://kornia.readthedocs.io/en/latest/feature.html#kornia.feature.LightGlue) and [`LightGlueMatcher`](https://kornia.readthedocs.io/en/latest/feature.html#kornia.feature.LightGlueMatcher).

|

| 163 |

+

|

| 164 |

+

## BibTeX citation

|

| 165 |

+

If you use any ideas from the paper or code from this repo, please consider citing:

|

| 166 |

+

|

| 167 |

+

```txt

|

| 168 |

+

@inproceedings{lindenberger2023lightglue,

|

| 169 |

+

author = {Philipp Lindenberger and

|

| 170 |

+

Paul-Edouard Sarlin and

|

| 171 |

+

Marc Pollefeys},

|

| 172 |

+

title = {{LightGlue: Local Feature Matching at Light Speed}},

|

| 173 |

+

booktitle = {ICCV},

|

| 174 |

+

year = {2023}

|

| 175 |

+

}

|

| 176 |

+

```

|

| 177 |

+

|

| 178 |

+

|

| 179 |

+

## License

|

| 180 |

+

The pre-trained weights of LightGlue and the code provided in this repository are released under the [Apache-2.0 license](./LICENSE). [DISK](https://github.com/cvlab-epfl/disk) follows this license as well but SuperPoint follows [a different, restrictive license](https://github.com/magicleap/SuperPointPretrainedNetwork/blob/master/LICENSE) (this includes its pre-trained weights and its [inference file](./lightglue/superpoint.py)). [ALIKED](https://github.com/Shiaoming/ALIKED) was published under a BSD-3-Clause license.

|

LightGlue/assets/DSC_0410.JPG

ADDED

|

|

LightGlue/assets/DSC_0411.JPG

ADDED

|

|

LightGlue/assets/architecture.svg

ADDED

|

|

LightGlue/assets/benchmark.png

ADDED

|

LightGlue/assets/benchmark_cpu.png

ADDED

|

LightGlue/assets/easy_hard.jpg

ADDED

|

LightGlue/assets/sacre_coeur1.jpg

ADDED

|

LightGlue/assets/sacre_coeur2.jpg

ADDED

|

LightGlue/assets/teaser.svg

ADDED

|

|

LightGlue/benchmark.py

ADDED

|

@@ -0,0 +1,255 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Benchmark script for LightGlue on real images

|

| 2 |

+

import argparse

|

| 3 |

+

import time

|

| 4 |

+

from collections import defaultdict

|

| 5 |

+

from pathlib import Path

|

| 6 |

+

|

| 7 |

+

import matplotlib.pyplot as plt

|

| 8 |

+

import numpy as np

|

| 9 |

+

import torch

|

| 10 |

+

import torch._dynamo

|

| 11 |

+

|

| 12 |

+

from lightglue import LightGlue, SuperPoint

|

| 13 |

+

from lightglue.utils import load_image

|

| 14 |

+

|

| 15 |

+

torch.set_grad_enabled(False)

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

def measure(matcher, data, device="cuda", r=100):

|

| 19 |

+

timings = np.zeros((r, 1))

|

| 20 |

+

if device.type == "cuda":

|

| 21 |

+

starter = torch.cuda.Event(enable_timing=True)

|

| 22 |

+

ender = torch.cuda.Event(enable_timing=True)

|

| 23 |

+

# warmup

|

| 24 |

+

for _ in range(10):

|

| 25 |

+

_ = matcher(data)

|

| 26 |

+

# measurements

|

| 27 |

+

with torch.no_grad():

|

| 28 |

+

for rep in range(r):

|

| 29 |

+

if device.type == "cuda":

|

| 30 |

+

starter.record()

|

| 31 |

+

_ = matcher(data)

|

| 32 |

+

ender.record()

|

| 33 |

+

# sync gpu

|

| 34 |

+

torch.cuda.synchronize()

|

| 35 |

+

curr_time = starter.elapsed_time(ender)

|

| 36 |

+

else:

|

| 37 |

+

start = time.perf_counter()

|

| 38 |

+

_ = matcher(data)

|

| 39 |

+

curr_time = (time.perf_counter() - start) * 1e3

|

| 40 |

+

timings[rep] = curr_time

|

| 41 |

+

mean_syn = np.sum(timings) / r

|

| 42 |

+

std_syn = np.std(timings)

|

| 43 |

+

return {"mean": mean_syn, "std": std_syn}

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

def print_as_table(d, title, cnames):

|

| 47 |

+

print()

|

| 48 |

+

header = f"{title:30} " + " ".join([f"{x:>7}" for x in cnames])

|

| 49 |

+

print(header)

|

| 50 |

+

print("-" * len(header))

|

| 51 |

+

for k, l in d.items():

|

| 52 |

+

print(f"{k:30}", " ".join([f"{x:>7.1f}" for x in l]))

|

| 53 |

+

|

| 54 |

+

|

| 55 |

+

if __name__ == "__main__":

|

| 56 |

+

parser = argparse.ArgumentParser(description="Benchmark script for LightGlue")

|

| 57 |

+

parser.add_argument(

|

| 58 |

+

"--device",

|

| 59 |

+

choices=["auto", "cuda", "cpu", "mps"],

|

| 60 |

+

default="auto",

|

| 61 |

+

help="device to benchmark on",

|

| 62 |

+

)

|

| 63 |

+

parser.add_argument("--compile", action="store_true", help="Compile LightGlue runs")

|

| 64 |

+

parser.add_argument(

|

| 65 |

+

"--no_flash", action="store_true", help="disable FlashAttention"

|

| 66 |

+

)

|

| 67 |

+

parser.add_argument(

|

| 68 |

+

"--no_prune_thresholds",

|

| 69 |

+

action="store_true",

|

| 70 |

+

help="disable pruning thresholds (i.e. always do pruning)",

|

| 71 |

+

)

|

| 72 |

+

parser.add_argument(

|

| 73 |

+

"--add_superglue",

|

| 74 |

+

action="store_true",

|

| 75 |

+

help="add SuperGlue to the benchmark (requires hloc)",

|

| 76 |

+

)

|

| 77 |

+

parser.add_argument(

|

| 78 |

+

"--measure", default="time", choices=["time", "log-time", "throughput"]

|

| 79 |

+

)

|

| 80 |

+

parser.add_argument(

|

| 81 |

+

"--repeat", "--r", type=int, default=100, help="repetitions of measurements"

|

| 82 |

+

)

|

| 83 |

+

parser.add_argument(

|

| 84 |

+

"--num_keypoints",

|

| 85 |

+

nargs="+",

|

| 86 |

+

type=int,

|

| 87 |

+

default=[256, 512, 1024, 2048, 4096],

|

| 88 |

+

help="number of keypoints (list separated by spaces)",

|

| 89 |

+

)

|

| 90 |

+

parser.add_argument(

|

| 91 |

+

"--matmul_precision", default="highest", choices=["highest", "high", "medium"]

|

| 92 |

+

)

|

| 93 |

+

parser.add_argument(

|

| 94 |

+

"--save", default=None, type=str, help="path where figure should be saved"

|

| 95 |

+

)

|

| 96 |

+

args = parser.parse_intermixed_args()

|

| 97 |

+

|

| 98 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 99 |

+

if args.device != "auto":

|

| 100 |

+

device = torch.device(args.device)

|

| 101 |

+

|

| 102 |

+

print("Running benchmark on device:", device)

|

| 103 |

+

|

| 104 |

+

images = Path("assets")

|

| 105 |

+

inputs = {

|

| 106 |

+

"easy": (

|

| 107 |

+

load_image(images / "DSC_0411.JPG"),

|

| 108 |

+

load_image(images / "DSC_0410.JPG"),

|

| 109 |

+

),

|

| 110 |

+

"difficult": (

|

| 111 |

+

load_image(images / "sacre_coeur1.jpg"),

|

| 112 |

+

load_image(images / "sacre_coeur2.jpg"),

|

| 113 |

+

),

|

| 114 |

+

}

|

| 115 |

+

|

| 116 |

+

configs = {

|

| 117 |

+

"LightGlue-full": {

|

| 118 |

+

"depth_confidence": -1,

|

| 119 |

+

"width_confidence": -1,

|

| 120 |

+

},

|

| 121 |

+

# 'LG-prune': {

|

| 122 |

+

# 'width_confidence': -1,

|

| 123 |

+

# },

|

| 124 |

+

# 'LG-depth': {

|

| 125 |

+

# 'depth_confidence': -1,

|

| 126 |

+

# },

|

| 127 |

+

"LightGlue-adaptive": {},

|

| 128 |

+

}

|

| 129 |

+

|

| 130 |

+

if args.compile:

|

| 131 |

+

configs = {**configs, **{k + "-compile": v for k, v in configs.items()}}

|

| 132 |

+

|

| 133 |

+

sg_configs = {

|

| 134 |

+

# 'SuperGlue': {},

|

| 135 |

+

"SuperGlue-fast": {"sinkhorn_iterations": 5}

|

| 136 |

+

}

|

| 137 |

+

|

| 138 |

+

torch.set_float32_matmul_precision(args.matmul_precision)

|

| 139 |

+

|

| 140 |

+

results = {k: defaultdict(list) for k, v in inputs.items()}

|

| 141 |

+

|

| 142 |

+

extractor = SuperPoint(max_num_keypoints=None, detection_threshold=-1)

|

| 143 |

+

extractor = extractor.eval().to(device)

|

| 144 |

+

figsize = (len(inputs) * 4.5, 4.5)

|

| 145 |

+

fig, axes = plt.subplots(1, len(inputs), sharey=True, figsize=figsize)

|

| 146 |

+

axes = axes if len(inputs) > 1 else [axes]

|

| 147 |

+

fig.canvas.manager.set_window_title(f"LightGlue benchmark ({device.type})")

|

| 148 |

+

|

| 149 |

+

for title, ax in zip(inputs.keys(), axes):

|

| 150 |

+

ax.set_xscale("log", base=2)

|

| 151 |

+

bases = [2**x for x in range(7, 16)]

|

| 152 |

+

ax.set_xticks(bases, bases)

|

| 153 |

+

ax.grid(which="major")

|

| 154 |

+

if args.measure == "log-time":

|

| 155 |

+

ax.set_yscale("log")

|

| 156 |

+

yticks = [10**x for x in range(6)]

|

| 157 |

+

ax.set_yticks(yticks, yticks)

|

| 158 |

+

mpos = [10**x * i for x in range(6) for i in range(2, 10)]

|

| 159 |

+

mlabel = [

|

| 160 |

+

10**x * i if i in [2, 5] else None

|

| 161 |

+

for x in range(6)

|

| 162 |

+

for i in range(2, 10)

|

| 163 |

+

]

|

| 164 |

+

ax.set_yticks(mpos, mlabel, minor=True)

|

| 165 |

+

ax.grid(which="minor", linewidth=0.2)

|

| 166 |

+

ax.set_title(title)

|

| 167 |

+

|

| 168 |

+

ax.set_xlabel("# keypoints")

|

| 169 |

+

if args.measure == "throughput":

|

| 170 |

+

ax.set_ylabel("Throughput [pairs/s]")

|

| 171 |

+

else:

|

| 172 |

+

ax.set_ylabel("Latency [ms]")

|

| 173 |

+

|

| 174 |

+

for name, conf in configs.items():

|

| 175 |

+

print("Run benchmark for:", name)

|

| 176 |

+

torch.cuda.empty_cache()

|

| 177 |

+

matcher = LightGlue(features="superpoint", flash=not args.no_flash, **conf)

|

| 178 |

+

if args.no_prune_thresholds:

|

| 179 |

+

matcher.pruning_keypoint_thresholds = {

|

| 180 |

+

k: -1 for k in matcher.pruning_keypoint_thresholds

|

| 181 |

+

}

|

| 182 |

+

matcher = matcher.eval().to(device)

|

| 183 |

+

if name.endswith("compile"):

|

| 184 |

+

import torch._dynamo

|

| 185 |

+

|

| 186 |

+

torch._dynamo.reset() # avoid buffer overflow

|

| 187 |

+

matcher.compile()

|

| 188 |

+

for pair_name, ax in zip(inputs.keys(), axes):

|

| 189 |

+

image0, image1 = [x.to(device) for x in inputs[pair_name]]

|

| 190 |

+

runtimes = []

|

| 191 |

+

for num_kpts in args.num_keypoints:

|

| 192 |

+

extractor.conf.max_num_keypoints = num_kpts

|

| 193 |

+

feats0 = extractor.extract(image0)

|

| 194 |

+

feats1 = extractor.extract(image1)

|

| 195 |

+

runtime = measure(

|

| 196 |

+

matcher,

|

| 197 |

+

{"image0": feats0, "image1": feats1},

|

| 198 |

+

device=device,

|

| 199 |

+

r=args.repeat,

|

| 200 |

+

)["mean"]

|

| 201 |

+

results[pair_name][name].append(

|

| 202 |

+

1000 / runtime if args.measure == "throughput" else runtime

|

| 203 |

+

)

|

| 204 |

+

ax.plot(

|

| 205 |

+

args.num_keypoints, results[pair_name][name], label=name, marker="o"

|

| 206 |

+

)

|

| 207 |

+

del matcher, feats0, feats1

|

| 208 |

+

|

| 209 |

+

if args.add_superglue:

|

| 210 |

+

from hloc.matchers.superglue import SuperGlue

|

| 211 |

+

|

| 212 |

+

for name, conf in sg_configs.items():

|

| 213 |

+

print("Run benchmark for:", name)

|

| 214 |

+

matcher = SuperGlue(conf)

|

| 215 |

+

matcher = matcher.eval().to(device)

|

| 216 |

+

for pair_name, ax in zip(inputs.keys(), axes):

|

| 217 |

+

image0, image1 = [x.to(device) for x in inputs[pair_name]]

|

| 218 |

+

runtimes = []

|

| 219 |

+

for num_kpts in args.num_keypoints:

|

| 220 |

+

extractor.conf.max_num_keypoints = num_kpts

|

| 221 |

+

feats0 = extractor.extract(image0)

|

| 222 |

+

feats1 = extractor.extract(image1)

|

| 223 |

+

data = {

|

| 224 |

+

"image0": image0[None],

|

| 225 |

+

"image1": image1[None],

|

| 226 |

+

**{k + "0": v for k, v in feats0.items()},

|

| 227 |

+

**{k + "1": v for k, v in feats1.items()},

|

| 228 |

+

}

|

| 229 |

+

data["scores0"] = data["keypoint_scores0"]

|

| 230 |

+

data["scores1"] = data["keypoint_scores1"]

|

| 231 |

+

data["descriptors0"] = (

|

| 232 |

+

data["descriptors0"].transpose(-1, -2).contiguous()

|

| 233 |

+

)

|

| 234 |

+

data["descriptors1"] = (

|

| 235 |

+

data["descriptors1"].transpose(-1, -2).contiguous()

|

| 236 |

+

)

|

| 237 |

+

runtime = measure(matcher, data, device=device, r=args.repeat)[

|

| 238 |

+

"mean"

|

| 239 |

+

]

|

| 240 |

+

results[pair_name][name].append(

|

| 241 |

+

1000 / runtime if args.measure == "throughput" else runtime

|

| 242 |

+

)

|

| 243 |

+

ax.plot(

|

| 244 |

+

args.num_keypoints, results[pair_name][name], label=name, marker="o"

|

| 245 |

+

)

|

| 246 |

+

del matcher, data, image0, image1, feats0, feats1

|

| 247 |

+

|

| 248 |

+

for name, runtimes in results.items():

|

| 249 |

+

print_as_table(runtimes, name, args.num_keypoints)

|

| 250 |

+

|

| 251 |

+

axes[0].legend()

|

| 252 |

+

fig.tight_layout()

|

| 253 |

+

if args.save:

|

| 254 |

+

plt.savefig(args.save, dpi=fig.dpi)

|

| 255 |

+

plt.show()

|

LightGlue/demo.ipynb

ADDED

|

The diff for this file is too large to render.

See raw diff

|

|

|

LightGlue/lightglue/__init__.py

ADDED

|

@@ -0,0 +1,7 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .aliked import ALIKED # noqa

|

| 2 |

+

from .disk import DISK # noqa

|

| 3 |

+

from .dog_hardnet import DoGHardNet # noqa

|

| 4 |

+

from .lightglue import LightGlue # noqa

|

| 5 |

+

from .sift import SIFT # noqa

|

| 6 |

+

from .superpoint import SuperPoint # noqa

|

| 7 |

+

from .utils import match_pair # noqa

|

LightGlue/lightglue/aliked.py

ADDED

|

@@ -0,0 +1,758 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|