Spaces:

Runtime error

Runtime error

Upload 47 files

Browse files- .gitattributes +0 -1

- .gitignore +145 -0

- LICENSE +201 -0

- app.py +37 -0

- documents/docs/1-搜索功能.md +0 -0

- documents/docs/2-总结功能.md +19 -0

- documents/docs/3-visualization.md +2 -0

- documents/docs/4-文献分析平台比较.md +56 -0

- documents/docs/index.md +43 -0

- documents/mkdocs.yml +3 -0

- inference_hf/__init__.py +1 -0

- inference_hf/_inference.py +53 -0

- lrt/__init__.py +3 -0

- lrt/academic_query/__init__.py +1 -0

- lrt/academic_query/academic.py +35 -0

- lrt/clustering/__init__.py +2 -0

- lrt/clustering/clustering_pipeline.py +108 -0

- lrt/clustering/clusters.py +91 -0

- lrt/clustering/config.py +11 -0

- lrt/clustering/models/__init__.py +1 -0

- lrt/clustering/models/adapter.py +25 -0

- lrt/clustering/models/keyBartPlus.py +411 -0

- lrt/lrt.py +144 -0

- lrt/utils/__init__.py +3 -0

- lrt/utils/article.py +412 -0

- lrt/utils/dimension_reduction.py +17 -0

- lrt/utils/functions.py +180 -0

- lrt/utils/union_find.py +55 -0

- lrt_instance/__init__.py +1 -0

- lrt_instance/instances.py +4 -0

- requirements.txt +15 -0

- scripts/inference/inference.py +17 -0

- scripts/inference/lrt.ipynb +310 -0

- scripts/queryAPI/API_Summary.ipynb +0 -0

- scripts/readme.md +5 -0

- scripts/tests/lrt_test_run.py +65 -0

- scripts/tests/model_test.py +103 -0

- scripts/train/KeyBartAdapter_train.ipynb +0 -0

- scripts/train/train.py +171 -0

- setup.py +38 -0

- templates/test.html +213 -0

- widgets/__init__.py +3 -0

- widgets/body.py +117 -0

- widgets/charts.py +80 -0

- widgets/sidebar.py +96 -0

- widgets/static/tum.png +0 -0

- widgets/utils.py +17 -0

.gitattributes

CHANGED

|

@@ -2,7 +2,6 @@

|

|

| 2 |

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

| 5 |

-

*.ckpt filter=lfs diff=lfs merge=lfs -text

|

| 6 |

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 8 |

*.h5 filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 2 |

*.arrow filter=lfs diff=lfs merge=lfs -text

|

| 3 |

*.bin filter=lfs diff=lfs merge=lfs -text

|

| 4 |

*.bz2 filter=lfs diff=lfs merge=lfs -text

|

|

|

|

| 5 |

*.ftz filter=lfs diff=lfs merge=lfs -text

|

| 6 |

*.gz filter=lfs diff=lfs merge=lfs -text

|

| 7 |

*.h5 filter=lfs diff=lfs merge=lfs -text

|

.gitignore

ADDED

|

@@ -0,0 +1,145 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

venv

|

| 2 |

+

test.py

|

| 3 |

+

.config.json

|

| 4 |

+

__pycache__

|

| 5 |

+

.idea

|

| 6 |

+

*.sh

|

| 7 |

+

|

| 8 |

+

# Byte-compiled / optimized / DLL files

|

| 9 |

+

__pycache__/

|

| 10 |

+

*.py[cod]

|

| 11 |

+

*$py.class

|

| 12 |

+

|

| 13 |

+

# C extensions

|

| 14 |

+

*.so

|

| 15 |

+

|

| 16 |

+

# Distribution / packaging

|

| 17 |

+

.config.json

|

| 18 |

+

test.py

|

| 19 |

+

pages

|

| 20 |

+

KeyBartAdapter

|

| 21 |

+

scripts/train/KeyBartAdapter/

|

| 22 |

+

docs/site

|

| 23 |

+

.Python

|

| 24 |

+

devenv

|

| 25 |

+

.idea

|

| 26 |

+

build/

|

| 27 |

+

develop-eggs/

|

| 28 |

+

dist/

|

| 29 |

+

downloads/

|

| 30 |

+

eggs/

|

| 31 |

+

.eggs/

|

| 32 |

+

lib/

|

| 33 |

+

lib64/

|

| 34 |

+

parts/

|

| 35 |

+

sdist/

|

| 36 |

+

var/

|

| 37 |

+

wheels/

|

| 38 |

+

pip-wheel-metadata/

|

| 39 |

+

share/python-wheels/

|

| 40 |

+

*.egg-info/

|

| 41 |

+

.installed.cfg

|

| 42 |

+

*.egg

|

| 43 |

+

MANIFEST

|

| 44 |

+

|

| 45 |

+

# PyInstaller

|

| 46 |

+

# Usually these files are written by a python script from a template

|

| 47 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 48 |

+

*.manifest

|

| 49 |

+

*.spec

|

| 50 |

+

|

| 51 |

+

# Installer logs

|

| 52 |

+

pip-log.txt

|

| 53 |

+

pip-delete-this-directory.txt

|

| 54 |

+

|

| 55 |

+

# Unit test / coverage reports

|

| 56 |

+

htmlcov/

|

| 57 |

+

.tox/

|

| 58 |

+

.nox/

|

| 59 |

+

.coverage

|

| 60 |

+

.coverage.*

|

| 61 |

+

.cache

|

| 62 |

+

nosetests.xml

|

| 63 |

+

coverage.xml

|

| 64 |

+

*.cover

|

| 65 |

+

*.py,cover

|

| 66 |

+

.hypothesis/

|

| 67 |

+

.pytest_cache/

|

| 68 |

+

|

| 69 |

+

# Translations

|

| 70 |

+

*.mo

|

| 71 |

+

*.pot

|

| 72 |

+

|

| 73 |

+

# Django stuff:

|

| 74 |

+

*.log

|

| 75 |

+

local_settings.py

|

| 76 |

+

db.sqlite3

|

| 77 |

+

db.sqlite3-journal

|

| 78 |

+

|

| 79 |

+

# Flask stuff:

|

| 80 |

+

instance/

|

| 81 |

+

.webassets-cache

|

| 82 |

+

|

| 83 |

+

# Scrapy stuff:

|

| 84 |

+

.scrapy

|

| 85 |

+

|

| 86 |

+

# Sphinx documentation

|

| 87 |

+

docs/_build/

|

| 88 |

+

|

| 89 |

+

# PyBuilder

|

| 90 |

+

target/

|

| 91 |

+

|

| 92 |

+

# Jupyter Notebook

|

| 93 |

+

.ipynb_checkpoints

|

| 94 |

+

|

| 95 |

+

# IPython

|

| 96 |

+

profile_default/

|

| 97 |

+

ipython_config.py

|

| 98 |

+

|

| 99 |

+

# pyenv

|

| 100 |

+

.python-version

|

| 101 |

+

|

| 102 |

+

# pipenv

|

| 103 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 104 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 105 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 106 |

+

# install all needed dependencies.

|

| 107 |

+

#Pipfile.lock

|

| 108 |

+

|

| 109 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 110 |

+

__pypackages__/

|

| 111 |

+

|

| 112 |

+

# Celery stuff

|

| 113 |

+

celerybeat-schedule

|

| 114 |

+

celerybeat.pid

|

| 115 |

+

|

| 116 |

+

# SageMath parsed files

|

| 117 |

+

*.sage.py

|

| 118 |

+

|

| 119 |

+

# Environments

|

| 120 |

+

.env

|

| 121 |

+

.venv

|

| 122 |

+

env/

|

| 123 |

+

venv/

|

| 124 |

+

ENV/

|

| 125 |

+

env.bak/

|

| 126 |

+

venv.bak/

|

| 127 |

+

|

| 128 |

+

# Spyder project settings

|

| 129 |

+

.spyderproject

|

| 130 |

+

.spyproject

|

| 131 |

+

|

| 132 |

+

# Rope project settings

|

| 133 |

+

.ropeproject

|

| 134 |

+

|

| 135 |

+

# mkdocs documentation

|

| 136 |

+

/site

|

| 137 |

+

|

| 138 |

+

# mypy

|

| 139 |

+

.mypy_cache/

|

| 140 |

+

.dmypy.json

|

| 141 |

+

dmypy.json

|

| 142 |

+

|

| 143 |

+

# Pyre type checker

|

| 144 |

+

.pyre/

|

| 145 |

+

*.py~

|

LICENSE

ADDED

|

@@ -0,0 +1,201 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Apache License

|

| 2 |

+

Version 2.0, January 2004

|

| 3 |

+

http://www.apache.org/licenses/

|

| 4 |

+

|

| 5 |

+

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

|

| 6 |

+

|

| 7 |

+

1. Definitions.

|

| 8 |

+

|

| 9 |

+

"License" shall mean the terms and conditions for use, reproduction,

|

| 10 |

+

and distribution as defined by Sections 1 through 9 of this document.

|

| 11 |

+

|

| 12 |

+

"Licensor" shall mean the copyright owner or entity authorized by

|

| 13 |

+

the copyright owner that is granting the License.

|

| 14 |

+

|

| 15 |

+

"Legal Entity" shall mean the union of the acting entity and all

|

| 16 |

+

other entities that control, are controlled by, or are under common

|

| 17 |

+

control with that entity. For the purposes of this definition,

|

| 18 |

+

"control" means (i) the power, direct or indirect, to cause the

|

| 19 |

+

direction or management of such entity, whether by contract or

|

| 20 |

+

otherwise, or (ii) ownership of fifty percent (50%) or more of the

|

| 21 |

+

outstanding shares, or (iii) beneficial ownership of such entity.

|

| 22 |

+

|

| 23 |

+

"You" (or "Your") shall mean an individual or Legal Entity

|

| 24 |

+

exercising permissions granted by this License.

|

| 25 |

+

|

| 26 |

+

"Source" form shall mean the preferred form for making modifications,

|

| 27 |

+

including but not limited to software source code, documentation

|

| 28 |

+

source, and configuration files.

|

| 29 |

+

|

| 30 |

+

"Object" form shall mean any form resulting from mechanical

|

| 31 |

+

transformation or translation of a Source form, including but

|

| 32 |

+

not limited to compiled object code, generated documentation,

|

| 33 |

+

and conversions to other media types.

|

| 34 |

+

|

| 35 |

+

"Work" shall mean the work of authorship, whether in Source or

|

| 36 |

+

Object form, made available under the License, as indicated by a

|

| 37 |

+

copyright notice that is included in or attached to the work

|

| 38 |

+

(an example is provided in the Appendix below).

|

| 39 |

+

|

| 40 |

+

"Derivative Works" shall mean any work, whether in Source or Object

|

| 41 |

+

form, that is based on (or derived from) the Work and for which the

|

| 42 |

+

editorial revisions, annotations, elaborations, or other modifications

|

| 43 |

+

represent, as a whole, an original work of authorship. For the purposes

|

| 44 |

+

of this License, Derivative Works shall not include works that remain

|

| 45 |

+

separable from, or merely link (or bind by name) to the interfaces of,

|

| 46 |

+

the Work and Derivative Works thereof.

|

| 47 |

+

|

| 48 |

+

"Contribution" shall mean any work of authorship, including

|

| 49 |

+

the original version of the Work and any modifications or additions

|

| 50 |

+

to that Work or Derivative Works thereof, that is intentionally

|

| 51 |

+

submitted to Licensor for inclusion in the Work by the copyright owner

|

| 52 |

+

or by an individual or Legal Entity authorized to submit on behalf of

|

| 53 |

+

the copyright owner. For the purposes of this definition, "submitted"

|

| 54 |

+

means any form of electronic, verbal, or written communication sent

|

| 55 |

+

to the Licensor or its representatives, including but not limited to

|

| 56 |

+

communication on electronic mailing lists, source code control systems,

|

| 57 |

+

and issue tracking systems that are managed by, or on behalf of, the

|

| 58 |

+

Licensor for the purpose of discussing and improving the Work, but

|

| 59 |

+

excluding communication that is conspicuously marked or otherwise

|

| 60 |

+

designated in writing by the copyright owner as "Not a Contribution."

|

| 61 |

+

|

| 62 |

+

"Contributor" shall mean Licensor and any individual or Legal Entity

|

| 63 |

+

on behalf of whom a Contribution has been received by Licensor and

|

| 64 |

+

subsequently incorporated within the Work.

|

| 65 |

+

|

| 66 |

+

2. Grant of Copyright License. Subject to the terms and conditions of

|

| 67 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 68 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 69 |

+

copyright license to reproduce, prepare Derivative Works of,

|

| 70 |

+

publicly display, publicly perform, sublicense, and distribute the

|

| 71 |

+

Work and such Derivative Works in Source or Object form.

|

| 72 |

+

|

| 73 |

+

3. Grant of Patent License. Subject to the terms and conditions of

|

| 74 |

+

this License, each Contributor hereby grants to You a perpetual,

|

| 75 |

+

worldwide, non-exclusive, no-charge, royalty-free, irrevocable

|

| 76 |

+

(except as stated in this section) patent license to make, have made,

|

| 77 |

+

use, offer to sell, sell, import, and otherwise transfer the Work,

|

| 78 |

+

where such license applies only to those patent claims licensable

|

| 79 |

+

by such Contributor that are necessarily infringed by their

|

| 80 |

+

Contribution(s) alone or by combination of their Contribution(s)

|

| 81 |

+

with the Work to which such Contribution(s) was submitted. If You

|

| 82 |

+

institute patent litigation against any entity (including a

|

| 83 |

+

cross-claim or counterclaim in a lawsuit) alleging that the Work

|

| 84 |

+

or a Contribution incorporated within the Work constitutes direct

|

| 85 |

+

or contributory patent infringement, then any patent licenses

|

| 86 |

+

granted to You under this License for that Work shall terminate

|

| 87 |

+

as of the date such litigation is filed.

|

| 88 |

+

|

| 89 |

+

4. Redistribution. You may reproduce and distribute copies of the

|

| 90 |

+

Work or Derivative Works thereof in any medium, with or without

|

| 91 |

+

modifications, and in Source or Object form, provided that You

|

| 92 |

+

meet the following conditions:

|

| 93 |

+

|

| 94 |

+

(a) You must give any other recipients of the Work or

|

| 95 |

+

Derivative Works a copy of this License; and

|

| 96 |

+

|

| 97 |

+

(b) You must cause any modified files to carry prominent notices

|

| 98 |

+

stating that You changed the files; and

|

| 99 |

+

|

| 100 |

+

(c) You must retain, in the Source form of any Derivative Works

|

| 101 |

+

that You distribute, all copyright, patent, trademark, and

|

| 102 |

+

attribution notices from the Source form of the Work,

|

| 103 |

+

excluding those notices that do not pertain to any part of

|

| 104 |

+

the Derivative Works; and

|

| 105 |

+

|

| 106 |

+

(d) If the Work includes a "NOTICE" text file as part of its

|

| 107 |

+

distribution, then any Derivative Works that You distribute must

|

| 108 |

+

include a readable copy of the attribution notices contained

|

| 109 |

+

within such NOTICE file, excluding those notices that do not

|

| 110 |

+

pertain to any part of the Derivative Works, in at least one

|

| 111 |

+

of the following places: within a NOTICE text file distributed

|

| 112 |

+

as part of the Derivative Works; within the Source form or

|

| 113 |

+

documentation, if provided along with the Derivative Works; or,

|

| 114 |

+

within a display generated by the Derivative Works, if and

|

| 115 |

+

wherever such third-party notices normally appear. The contents

|

| 116 |

+

of the NOTICE file are for informational purposes only and

|

| 117 |

+

do not modify the License. You may add Your own attribution

|

| 118 |

+

notices within Derivative Works that You distribute, alongside

|

| 119 |

+

or as an addendum to the NOTICE text from the Work, provided

|

| 120 |

+

that such additional attribution notices cannot be construed

|

| 121 |

+

as modifying the License.

|

| 122 |

+

|

| 123 |

+

You may add Your own copyright statement to Your modifications and

|

| 124 |

+

may provide additional or different license terms and conditions

|

| 125 |

+

for use, reproduction, or distribution of Your modifications, or

|

| 126 |

+

for any such Derivative Works as a whole, provided Your use,

|

| 127 |

+

reproduction, and distribution of the Work otherwise complies with

|

| 128 |

+

the conditions stated in this License.

|

| 129 |

+

|

| 130 |

+

5. Submission of Contributions. Unless You explicitly state otherwise,

|

| 131 |

+

any Contribution intentionally submitted for inclusion in the Work

|

| 132 |

+

by You to the Licensor shall be under the terms and conditions of

|

| 133 |

+

this License, without any additional terms or conditions.

|

| 134 |

+

Notwithstanding the above, nothing herein shall supersede or modify

|

| 135 |

+

the terms of any separate license agreement you may have executed

|

| 136 |

+

with Licensor regarding such Contributions.

|

| 137 |

+

|

| 138 |

+

6. Trademarks. This License does not grant permission to use the trade

|

| 139 |

+

names, trademarks, service marks, or product names of the Licensor,

|

| 140 |

+

except as required for reasonable and customary use in describing the

|

| 141 |

+

origin of the Work and reproducing the content of the NOTICE file.

|

| 142 |

+

|

| 143 |

+

7. Disclaimer of Warranty. Unless required by applicable law or

|

| 144 |

+

agreed to in writing, Licensor provides the Work (and each

|

| 145 |

+

Contributor provides its Contributions) on an "AS IS" BASIS,

|

| 146 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

|

| 147 |

+

implied, including, without limitation, any warranties or conditions

|

| 148 |

+

of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

|

| 149 |

+

PARTICULAR PURPOSE. You are solely responsible for determining the

|

| 150 |

+

appropriateness of using or redistributing the Work and assume any

|

| 151 |

+

risks associated with Your exercise of permissions under this License.

|

| 152 |

+

|

| 153 |

+

8. Limitation of Liability. In no event and under no legal theory,

|

| 154 |

+

whether in tort (including negligence), contract, or otherwise,

|

| 155 |

+

unless required by applicable law (such as deliberate and grossly

|

| 156 |

+

negligent acts) or agreed to in writing, shall any Contributor be

|

| 157 |

+

liable to You for damages, including any direct, indirect, special,

|

| 158 |

+

incidental, or consequential damages of any character arising as a

|

| 159 |

+

result of this License or out of the use or inability to use the

|

| 160 |

+

Work (including but not limited to damages for loss of goodwill,

|

| 161 |

+

work stoppage, computer failure or malfunction, or any and all

|

| 162 |

+

other commercial damages or losses), even if such Contributor

|

| 163 |

+

has been advised of the possibility of such damages.

|

| 164 |

+

|

| 165 |

+

9. Accepting Warranty or Additional Liability. While redistributing

|

| 166 |

+

the Work or Derivative Works thereof, You may choose to offer,

|

| 167 |

+

and charge a fee for, acceptance of support, warranty, indemnity,

|

| 168 |

+

or other liability obligations and/or rights consistent with this

|

| 169 |

+

License. However, in accepting such obligations, You may act only

|

| 170 |

+

on Your own behalf and on Your sole responsibility, not on behalf

|

| 171 |

+

of any other Contributor, and only if You agree to indemnify,

|

| 172 |

+

defend, and hold each Contributor harmless for any liability

|

| 173 |

+

incurred by, or claims asserted against, such Contributor by reason

|

| 174 |

+

of your accepting any such warranty or additional liability.

|

| 175 |

+

|

| 176 |

+

END OF TERMS AND CONDITIONS

|

| 177 |

+

|

| 178 |

+

APPENDIX: How to apply the Apache License to your work.

|

| 179 |

+

|

| 180 |

+

To apply the Apache License to your work, attach the following

|

| 181 |

+

boilerplate notice, with the fields enclosed by brackets "[]"

|

| 182 |

+

replaced with your own identifying information. (Don't include

|

| 183 |

+

the brackets!) The text should be enclosed in the appropriate

|

| 184 |

+

comment syntax for the file format. We also recommend that a

|

| 185 |

+

file or class name and description of purpose be included on the

|

| 186 |

+

same "printed page" as the copyright notice for easier

|

| 187 |

+

identification within third-party archives.

|

| 188 |

+

|

| 189 |

+

Copyright [yyyy] [name of copyright owner]

|

| 190 |

+

|

| 191 |

+

Licensed under the Apache License, Version 2.0 (the "License");

|

| 192 |

+

you may not use this file except in compliance with the License.

|

| 193 |

+

You may obtain a copy of the License at

|

| 194 |

+

|

| 195 |

+

http://www.apache.org/licenses/LICENSE-2.0

|

| 196 |

+

|

| 197 |

+

Unless required by applicable law or agreed to in writing, software

|

| 198 |

+

distributed under the License is distributed on an "AS IS" BASIS,

|

| 199 |

+

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

| 200 |

+

See the License for the specific language governing permissions and

|

| 201 |

+

limitations under the License.

|

app.py

ADDED

|

@@ -0,0 +1,37 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import streamlit as st

|

| 2 |

+

from widgets import *

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

|

| 7 |

+

# sidebar content

|

| 8 |

+

platforms, number_papers, start_year, end_year, hyperparams = render_sidebar()

|

| 9 |

+

|

| 10 |

+

# body head

|

| 11 |

+

with st.form("my_form",clear_on_submit=False):

|

| 12 |

+

st.markdown('''# 👋 Hi, enter your query here :)''')

|

| 13 |

+

query_input = st.text_input(

|

| 14 |

+

'Enter your query:',

|

| 15 |

+

placeholder='''e.g. "Machine learning"''',

|

| 16 |

+

# label_visibility='collapsed',

|

| 17 |

+

value=''

|

| 18 |

+

)

|

| 19 |

+

|

| 20 |

+

show_preview = st.checkbox('show paper preview')

|

| 21 |

+

|

| 22 |

+

# Every form must have a submit button.

|

| 23 |

+

submitted = st.form_submit_button("Search")

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

if submitted:

|

| 27 |

+

# body

|

| 28 |

+

render_body(platforms, number_papers, 5, query_input,

|

| 29 |

+

show_preview, start_year, end_year,

|

| 30 |

+

hyperparams,

|

| 31 |

+

hyperparams['standardization'])

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

|

documents/docs/1-搜索功能.md

ADDED

|

File without changes

|

documents/docs/2-总结功能.md

ADDED

|

@@ -0,0 +1,19 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 2 Research Trends Summarization

|

| 2 |

+

|

| 3 |

+

## Model Architecture

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

### 1 Baseline Configuration

|

| 7 |

+

1. pre-trained language model: `sentence-transformers/all-MiniLM-L6-v2`

|

| 8 |

+

2. dimension reduction: `None`

|

| 9 |

+

3. clustering algorithms: `kmeans`

|

| 10 |

+

4. keywords extraction model: `keyphrase-transformer`

|

| 11 |

+

|

| 12 |

+

[[example run](https://github.com/Mondkuchen/idp_LiteratureResearch_Tool/blob/main/example_run.py)] [[results](https://github.com/Mondkuchen/idp_LiteratureResearch_Tool/blob/main/examples/IDP.ipynb)]

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

### TODO:

|

| 16 |

+

1. clustering: using other clustering algorithms such as Gausian Mixture Model (GMM)

|

| 17 |

+

2. keywords extraction model: train another model

|

| 18 |

+

3. add dimension reduction

|

| 19 |

+

4. better PLM: sentence-transformers/sentence-t5-xxl

|

documents/docs/3-visualization.md

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 3 Visualization

|

| 2 |

+

[web app](https://huggingface.co/spaces/Adapting/literature-research-tool)

|

documents/docs/4-文献分析平台比较.md

ADDED

|

@@ -0,0 +1,56 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 4 Other Literature Research Tools

|

| 2 |

+

## 1 Citespace

|

| 3 |

+

|

| 4 |

+

> 作者:爱学习的毛里

|

| 5 |

+

> 链接:https://www.zhihu.com/question/27463829/answer/284247493

|

| 6 |

+

> 来源:知乎

|

| 7 |

+

> 著作权归作者所有。商业转载请联系作者获得授权,非商业转载请注明出处。

|

| 8 |

+

|

| 9 |

+

一、工作原理

|

| 10 |

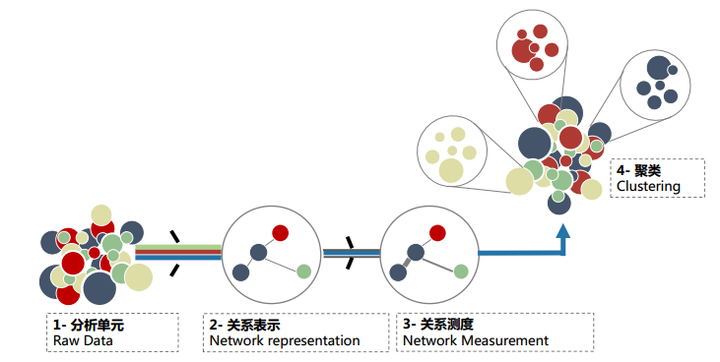

+

简单来讲,citespace主要基于“共现聚类”思想:

|

| 11 |

+

|

| 12 |

+

1. 首先对科学文献中的信息单元进行提取

|

| 13 |

+

- 包括文献层面上的参考文献,主题层面上的**关键词**、主题词、学科、领域分类等,主体层面上的作者、机构、国家、期刊等

|

| 14 |

+

2. 然后根据信息单元间的联系类型和强度进行重构,形成不同意义的网络结构

|

| 15 |

+

- 如关键词共现、作者合作、文献共被引等,

|

| 16 |

+

- 网络中的节点代表文献信息单元,连线代表节点间的联系(共现)

|

| 17 |

+

3. 最后通过对节点、连线及网络结构进行测度、统计分析(聚类、突现词检测等)和可视化,发现特定学科和领域知识结构的隐含模式和规律。

|

| 18 |

+

|

| 19 |

+

*共现聚类思想*

|

| 20 |

+

|

| 21 |

+

二、主要用途

|

| 22 |

+

|

| 23 |

+

1. **<u>研究热点分析</u>**:一般利用关键词/主题词共现

|

| 24 |

+

2. 研究前沿探测:共被引、耦合、共词、突现词检测都有人使用,但因为对“研究前沿”的定义尚未统一,所以方法的选择和图谱结果的解读上众说纷纭

|

| 25 |

+

3. 研究演进路径分析:将时序维度与主题聚类结合,例如citespace中的时间线图和时区图

|

| 26 |

+

4. 研究群体发现:一般建立作者/机构合作、作者耦合等网络,可以发现研究小团体、核心作者/机构等

|

| 27 |

+

5. 学科/领域/知识交叉和流动分析:一般建立期刊/学科等的共现网络,可以研究学科之间的交叉、知识流动和融合等除分析 科学文献 外,citespace也可以用来分析 专利技术文献,用途与科学文献类似,包括技术研究热点、趋势、结构、核心专利权人或团体的识别等。

|

| 28 |

+

|

| 29 |

+

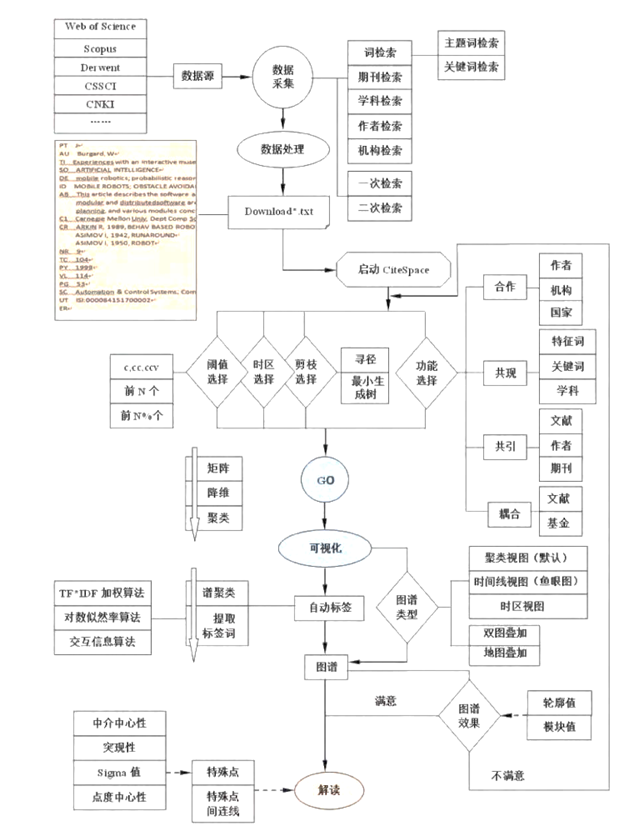

三、工作流程

|

| 30 |

+

|

| 31 |

+

*摘自《引文空间分析原理与应用》*

|

| 32 |

+

|

| 33 |

+

### 聚类算法

|

| 34 |

+

|

| 35 |

+

CiteSpace提供的算法有3个,3个算法的名称分别是:

|

| 36 |

+

|

| 37 |

+

- LSI/LSA: Latent Semantic Indexing/Latent Semantic Analysis 浅语义索引

|

| 38 |

+

[intro](https://www.cnblogs.com/pinard/p/6805861.html)

|

| 39 |

+

|

| 40 |

+

- LLR: Log Likelihood Ratio 对数极大似然率

|

| 41 |

+

|

| 42 |

+

- MI: Mutual Information 互信息

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

对不同的数据,3种算法表现一样,可在实践中多做实践。

|

| 46 |

+

|

| 47 |

+

[paper](https://readpaper.com/paper/2613897633)

|

| 48 |

+

|

| 49 |

+

## 2 VOSviewer

|

| 50 |

+

|

| 51 |

+

VOSviewer的处理流程与大部分的科学知识图谱类软件类似,即文件导入——信息单元抽取(如作者、关键词等)——建立共现矩阵——利用相似度计算对关系进行标准化处理——统计分析(一般描述统计+聚类)——可视化展现(布局+其它图形属性映射)

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

Normalization, mapping, and clustering

|

| 55 |

+

|

| 56 |

+

[paper](https://www.vosviewer.com/download/f-x2.pdf) (See Appendix)

|

documents/docs/index.md

ADDED

|

@@ -0,0 +1,43 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Intro

|

| 2 |

+

|

| 3 |

+

|

| 4 |

+

<!-- - [x] objective/Aim of the practical part

|

| 5 |

+

- [x] tasks/ work packages,

|

| 6 |

+

- [x] Timeline and Milestones

|

| 7 |

+

- [x] Brief introduction of the practice partner

|

| 8 |

+

- [x] Description of theoretical part and explanation of how the content of the lecture(s)/seminar(s) supports student in completing the practical part. -->

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

## IDP Theme

|

| 14 |

+

IDP Theme: Developing a Literature Research Tool that Automatically Search Literature and Summarize the Research Trends.

|

| 15 |

+

|

| 16 |

+

## Objective

|

| 17 |

+

In this IDP, we are going to develop a literature research tool that enables three functionalities:

|

| 18 |

+

1. Automatically search the most recent literature filtered by keywords on three literature platforms: Elvsier, IEEE and Google Scholar

|

| 19 |

+

2. Automatically summarize the most popular research directions and trends in the searched literature from step 1

|

| 20 |

+

3. visualize the results from step 1 and step 2

|

| 21 |

+

|

| 22 |

+

|

| 23 |

+

## Timeline & Milestones & Tasks

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

#### Tasks

|

| 27 |

+

| Label | Start | End | Duration | Description |

|

| 28 |

+

| ------- |------------| ---------- |----------| -------------------------------------------------------------------------------------------------------- |

|

| 29 |

+

| Task #1 | 15/11/2022 | 15/12/2022 | 30 days | Implement literature search by keywords on three literature platforms: Elvsier, IEEE, and Google Scholar |

|

| 30 |

+

| Task #2 | 15/12/2022 | 15/02/2023 | 60 days | Implement automatic summarization of research trends in the searched literature |

|

| 31 |

+

| Task #3 | 15/02/2022 | 15/03/2022 | 30 days | visualization of the tool (web app) |

|

| 32 |

+

| Task #4 | 01/03/2022 | 01/05/2022 | 60 days | write report and presentation |

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

## Correlation between the theoretical course and practical project

|

| 36 |

+

The accompanying theory courses *Machine Learning and Optimization* or *Machine Learning for Communication* teach basic and advanced machine learning (ML) and deep learning (DL) knowledge.

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

The core part of the project, in my opinion, is the automatic summarization of research trends/directions of the papers, which can be modeled as a **Topic Modeling** task in Natural Language Processing (NLP). This task requires machine learning and deep learning knowledge, such as word embeddings, transformers architecture, etc.

|

| 40 |

+

|

| 41 |

+

Therefore, I would like to take the Machine Learning and Optimization course or Machine learning for Communication course from EI department. And I think these theory courses should be necessary for a good ML/DL basis.

|

| 42 |

+

|

| 43 |

+

|

documents/mkdocs.yml

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

site_name: LRT Document

|

| 2 |

+

theme: material

|

| 3 |

+

|

inference_hf/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

from ._inference import InferenceHF

|

inference_hf/_inference.py

ADDED

|

@@ -0,0 +1,53 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import json

|

| 2 |

+

import requests

|

| 3 |

+

from typing import Union,List

|

| 4 |

+

import aiohttp

|

| 5 |

+

from asyncio import run

|

| 6 |

+

|

| 7 |

+

class InferenceHF:

|

| 8 |

+

headers = {"Authorization": f"Bearer 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"}

|

| 9 |

+

API_URL = "https://api-inference.huggingface.co/models/"

|

| 10 |

+

|

| 11 |

+

@classmethod

|

| 12 |

+

def inference(cls, inputs: Union[List[str], str], model_name:str) ->dict:

|

| 13 |

+

payload = dict(

|

| 14 |

+

inputs = inputs,

|

| 15 |

+

options = dict(

|

| 16 |

+

wait_for_model=True

|

| 17 |

+

)

|

| 18 |

+

)

|

| 19 |

+

|

| 20 |

+

data = json.dumps(payload)

|

| 21 |

+

response = requests.request("POST", cls.API_URL+model_name, headers=cls.headers, data=data)

|

| 22 |

+

return json.loads(response.content.decode("utf-8"))

|

| 23 |

+

|

| 24 |

+

@classmethod

|

| 25 |

+

async def async_inference(cls, inputs: Union[List[str], str], model_name: str) -> dict:

|

| 26 |

+

payload = dict(

|

| 27 |

+

inputs=inputs,

|

| 28 |

+

options=dict(

|

| 29 |

+

wait_for_model=True

|

| 30 |

+

)

|

| 31 |

+

)

|

| 32 |

+

|

| 33 |

+

data = json.dumps(payload)

|

| 34 |

+

|

| 35 |

+

async with aiohttp.ClientSession() as session:

|

| 36 |

+

async with session.post(cls.API_URL + model_name, data=data, headers=cls.headers) as response:

|

| 37 |

+

return await response.json()

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

if __name__ == '__main__':

|

| 41 |

+

print(InferenceHF.inference(

|

| 42 |

+

inputs='hi how are you?',

|

| 43 |

+

model_name= 't5-small'

|

| 44 |

+

))

|

| 45 |

+

|

| 46 |

+

print(

|

| 47 |

+

run(InferenceHF.async_inference(

|

| 48 |

+

inputs='hi how are you?',

|

| 49 |

+

model_name='t5-small'

|

| 50 |

+

))

|

| 51 |

+

)

|

| 52 |

+

|

| 53 |

+

|

lrt/__init__.py

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .lrt import LiteratureResearchTool

|

| 2 |

+

from .clustering import Configuration

|

| 3 |

+

from .utils import Article, ArticleList

|

lrt/academic_query/__init__.py

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

from .academic import AcademicQuery

|

lrt/academic_query/academic.py

ADDED

|

@@ -0,0 +1,35 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from requests_toolkit import ArxivQuery,IEEEQuery,PaperWithCodeQuery

|

| 2 |

+

from typing import List

|

| 3 |

+

|

| 4 |

+

class AcademicQuery:

|

| 5 |

+

@classmethod

|

| 6 |

+

def arxiv(cls,

|

| 7 |

+

query: str,

|

| 8 |

+

max_results: int = 50

|

| 9 |

+

) -> List[dict]:

|

| 10 |

+

ret = ArxivQuery.query(query,'',0,max_results)

|

| 11 |

+

if not isinstance(ret,list):

|

| 12 |

+

return [ret]

|

| 13 |

+

return ret

|

| 14 |

+

|

| 15 |

+

@classmethod

|

| 16 |

+

def ieee(cls,

|

| 17 |

+

query: str,

|

| 18 |

+

start_year: int,

|

| 19 |

+

end_year: int,

|

| 20 |

+

num_papers: int = 200

|

| 21 |

+

) -> List[dict]:

|

| 22 |

+

IEEEQuery.__setup_api_key__('vpd9yy325enruv27zj2d353e')

|

| 23 |

+

ret = IEEEQuery.query(query,start_year,end_year,num_papers)

|

| 24 |

+

if not isinstance(ret,list):

|

| 25 |

+

return [ret]

|

| 26 |

+

return ret

|

| 27 |

+

|

| 28 |

+

@classmethod

|

| 29 |

+

def paper_with_code(cls,

|

| 30 |

+

query: str,

|

| 31 |

+

items_per_page = 50) ->List[dict]:

|

| 32 |

+

ret = PaperWithCodeQuery.query(query, 1,items_per_page)

|

| 33 |

+

if not isinstance(ret, list):

|

| 34 |

+

return [ret]

|

| 35 |

+

return ret

|

lrt/clustering/__init__.py

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from .clustering_pipeline import ClusterPipeline, ClusterList

|

| 2 |

+

from .config import Configuration,BaselineConfig

|

lrt/clustering/clustering_pipeline.py

ADDED

|

@@ -0,0 +1,108 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import List

|

| 2 |

+

from .config import BaselineConfig, Configuration

|

| 3 |

+

from ..utils import __create_model__

|

| 4 |

+

import numpy as np

|

| 5 |

+

# from sklearn.cluster import KMeans

|

| 6 |

+

from sklearn.preprocessing import StandardScaler

|

| 7 |

+

# from yellowbrick.cluster import KElbowVisualizer

|

| 8 |

+

from .clusters import ClusterList

|

| 9 |

+

from unsupervised_learning.clustering import GaussianMixture, Silhouette

|

| 10 |

+

|

| 11 |

+

class ClusterPipeline:

|

| 12 |

+

def __init__(self, config:Configuration = None):

|

| 13 |

+

if config is None:

|

| 14 |

+

self.__setup__(BaselineConfig())

|

| 15 |

+

else:

|

| 16 |

+

self.__setup__(config)

|

| 17 |

+

|

| 18 |

+

def __setup__(self, config:Configuration):

|

| 19 |

+

self.PTM = __create_model__(config.plm)

|

| 20 |

+

self.dimension_reduction = __create_model__(config.dimension_reduction)

|

| 21 |

+

self.clustering = __create_model__(config.clustering)

|

| 22 |

+

self.keywords_extraction = __create_model__(config.keywords_extraction)

|

| 23 |

+

|

| 24 |

+

def __1_generate_word_embeddings__(self, documents: List[str]):

|

| 25 |

+

'''

|

| 26 |

+

|

| 27 |

+

:param documents: a list of N strings:

|

| 28 |

+

:return: np.ndarray: Nx384 (sentence-transformers)

|

| 29 |

+

'''

|

| 30 |

+

print(f'>>> start generating word embeddings...')

|

| 31 |

+

print(f'>>> successfully generated word embeddings...')

|

| 32 |

+

return self.PTM.encode(documents)

|

| 33 |

+

|

| 34 |

+

def __2_dimenstion_reduction__(self, embeddings):

|

| 35 |

+

'''

|

| 36 |

+

|

| 37 |

+

:param embeddings: NxD

|

| 38 |

+

:return: Nxd, d<<D

|

| 39 |

+

'''

|

| 40 |

+

if self.dimension_reduction is None:

|

| 41 |

+

return embeddings

|

| 42 |

+

print(f'>>> start dimension reduction...')

|

| 43 |

+

embeddings = self.dimension_reduction.dimension_reduction(embeddings)

|

| 44 |

+

print(f'>>> finished dimension reduction...')

|

| 45 |

+

return embeddings

|

| 46 |

+

|

| 47 |

+

def __3_clustering__(self, embeddings, return_cluster_centers = False, max_k: int =10, standarization = False):

|

| 48 |

+

'''

|

| 49 |

+

|

| 50 |

+

:param embeddings: Nxd

|

| 51 |

+

:return:

|

| 52 |

+

'''

|

| 53 |

+

if self.clustering is None:

|

| 54 |

+

return embeddings

|

| 55 |

+

else:

|

| 56 |

+

print(f'>>> start clustering...')

|

| 57 |

+

|

| 58 |

+

######## new: standarization ########

|

| 59 |

+

if standarization:

|

| 60 |

+

print(f'>>> start standardization...')

|

| 61 |

+

scaler = StandardScaler()

|

| 62 |

+

embeddings = scaler.fit_transform(embeddings)

|

| 63 |

+

print(f'>>> finished standardization...')

|

| 64 |

+

######## new: standarization ########

|

| 65 |

+

|

| 66 |

+

best_k_algo = Silhouette(GaussianMixture,2,max_k)

|

| 67 |

+

best_k = best_k_algo.get_best_k(embeddings)

|

| 68 |

+

print(f'>>> The best K is {best_k}.')

|

| 69 |

+

|

| 70 |

+

labels, cluster_centers = self.clustering(embeddings, k=best_k)

|

| 71 |

+

clusters = ClusterList(best_k)

|

| 72 |

+

clusters.instantiate(labels)

|

| 73 |

+

print(f'>>> finished clustering...')

|

| 74 |

+

|

| 75 |

+

if return_cluster_centers:

|

| 76 |

+

return clusters, cluster_centers

|

| 77 |

+

return clusters

|

| 78 |

+

|

| 79 |

+

def __4_keywords_extraction__(self, clusters: ClusterList, documents: List[str]):

|

| 80 |

+

'''

|

| 81 |

+

|

| 82 |

+

:param clusters: N documents

|

| 83 |

+

:return: clusters, where each cluster has added keyphrases

|

| 84 |

+

'''

|

| 85 |

+

if self.keywords_extraction is None:

|

| 86 |

+

return clusters

|

| 87 |

+

else:

|

| 88 |

+

print(f'>>> start keywords extraction')

|

| 89 |

+

for cluster in clusters:

|

| 90 |

+

doc_ids = cluster.elements()

|

| 91 |

+

input_abstracts = [documents[i] for i in doc_ids] #[str]

|

| 92 |

+

keyphrases = self.keywords_extraction(input_abstracts) #[{keys...}]

|

| 93 |

+

cluster.add_keyphrase(keyphrases)

|

| 94 |

+

# for doc_id in doc_ids:

|

| 95 |

+

# keyphrases = self.keywords_extraction(documents[doc_id])

|

| 96 |

+

# cluster.add_keyphrase(keyphrases)

|

| 97 |

+

print(f'>>> finished keywords extraction')

|

| 98 |

+

return clusters

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

def __call__(self, documents: List[str], max_k:int, standarization = False):

|

| 102 |

+

print(f'>>> pipeline starts...')

|

| 103 |

+

x = self.__1_generate_word_embeddings__(documents)

|

| 104 |

+

x = self.__2_dimenstion_reduction__(x)

|

| 105 |

+

clusters = self.__3_clustering__(x,max_k=max_k,standarization=standarization)

|

| 106 |

+

outputs = self.__4_keywords_extraction__(clusters, documents)

|

| 107 |

+

print(f'>>> pipeline finished!\n')

|

| 108 |

+

return outputs

|

lrt/clustering/clusters.py

ADDED

|

@@ -0,0 +1,91 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import List, Iterable, Union

|

| 2 |

+

from pprint import pprint

|

| 3 |

+

|

| 4 |

+

class KeyphraseCount:

|

| 5 |

+

|

| 6 |

+

def __init__(self, keyphrase: str, count: int) -> None:

|

| 7 |

+

super().__init__()

|

| 8 |

+

self.keyphrase = keyphrase

|

| 9 |

+

self.count = count

|

| 10 |

+

|

| 11 |

+

@classmethod

|

| 12 |

+

def reduce(cls, kcs: list) :

|

| 13 |

+

'''

|

| 14 |

+

kcs: List[KeyphraseCount]

|

| 15 |

+

'''

|

| 16 |

+

keys = ''

|

| 17 |

+

count = 0

|

| 18 |

+

|

| 19 |

+

for i in range(len(kcs)-1):

|

| 20 |

+

kc = kcs[i]

|

| 21 |

+

keys += kc.keyphrase + '/'

|

| 22 |

+

count += kc.count

|

| 23 |

+

|

| 24 |

+

keys += kcs[-1].keyphrase

|

| 25 |

+

count += kcs[-1].count

|

| 26 |

+

return KeyphraseCount(keys, count)

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

class SingleCluster:

|

| 31 |

+

def __init__(self):

|

| 32 |

+

self.__container__ = []

|

| 33 |

+

self.__keyphrases__ = {}

|

| 34 |

+

def add(self, id:int):

|

| 35 |

+

self.__container__.append(id)

|

| 36 |

+

def __str__(self) -> str:

|

| 37 |

+

return str(self.__container__)

|

| 38 |

+

def elements(self) -> List:

|

| 39 |

+

return self.__container__

|

| 40 |

+

def get_keyphrases(self):

|

| 41 |

+

ret = []

|

| 42 |

+

for key, count in self.__keyphrases__.items():

|

| 43 |

+

ret.append(KeyphraseCount(key,count))

|

| 44 |

+

return ret

|

| 45 |

+

def add_keyphrase(self, keyphrase:Union[str,Iterable]):

|

| 46 |

+

if isinstance(keyphrase,str):

|

| 47 |

+

if keyphrase not in self.__keyphrases__.keys():

|

| 48 |

+

self.__keyphrases__[keyphrase] = 1

|

| 49 |

+

else:

|

| 50 |

+

self.__keyphrases__[keyphrase] += 1

|

| 51 |

+

elif isinstance(keyphrase,Iterable):

|

| 52 |

+

for i in keyphrase:

|

| 53 |

+

self.add_keyphrase(i)

|

| 54 |

+

|

| 55 |

+

def __len__(self):

|

| 56 |

+

return len(self.__container__)

|

| 57 |

+

|

| 58 |

+

def print_keyphrases(self):

|

| 59 |

+

pprint(self.__keyphrases__)

|

| 60 |

+

|

| 61 |

+

class ClusterList:

|

| 62 |

+

def __init__(self, k:int):

|

| 63 |

+

self.__clusters__ = [SingleCluster() for _ in range(k)]

|

| 64 |

+

|

| 65 |

+

# subscriptable and slice-able

|

| 66 |

+

def __getitem__(self, idx):

|

| 67 |

+

if isinstance(idx, int):

|

| 68 |

+

return self.__clusters__[idx]

|

| 69 |

+

if isinstance(idx, slice):

|

| 70 |

+

# return

|

| 71 |

+

return self.__clusters__[0 if idx.start is None else idx.start: idx.stop: 0 if idx.step is None else idx.step]

|

| 72 |

+

|

| 73 |

+

def instantiate(self, labels: Iterable):

|

| 74 |

+

for id, label in enumerate(labels):

|

| 75 |

+

self.__clusters__[label].add(id)

|

| 76 |

+

|

| 77 |

+

def __str__(self):

|

| 78 |

+

ret = f'There are {len(self.__clusters__)} clusters:\n'

|

| 79 |

+

for id,cluster in enumerate(self.__clusters__):

|

| 80 |

+

ret += f'cluster {id} contains: {cluster}.\n'

|

| 81 |

+

|