Tianheng Cheng*2,3,

Lin Song*1,

Yixiao Ge1,2,

Xinggang Wang3,

Wenyu Liu3,

Ying Shan1,2

1 Tencent AI Lab,

2 ARC Lab, Tencent PCG

3 Huazhong University of Science and Technology

[](https://arxiv.org/abs/)

[](https://huggingface.co/)

[](LICENSE)

## Updates

`[2024-1-25]:` We are excited to launch **YOLO-World**, a cutting-edge real-time open-vocabulary object detector.

## Highlights

This repo contains the PyTorch implementation, pre-trained weights, and pre-training/fine-tuning code for YOLO-World.

* YOLO-World is pre-trained on large-scale datasets, including detection, grounding, and image-text datasets.

* YOLO-World is the next-generation YOLO detector, with strong open-vocabulary detection and grounding capabilities.

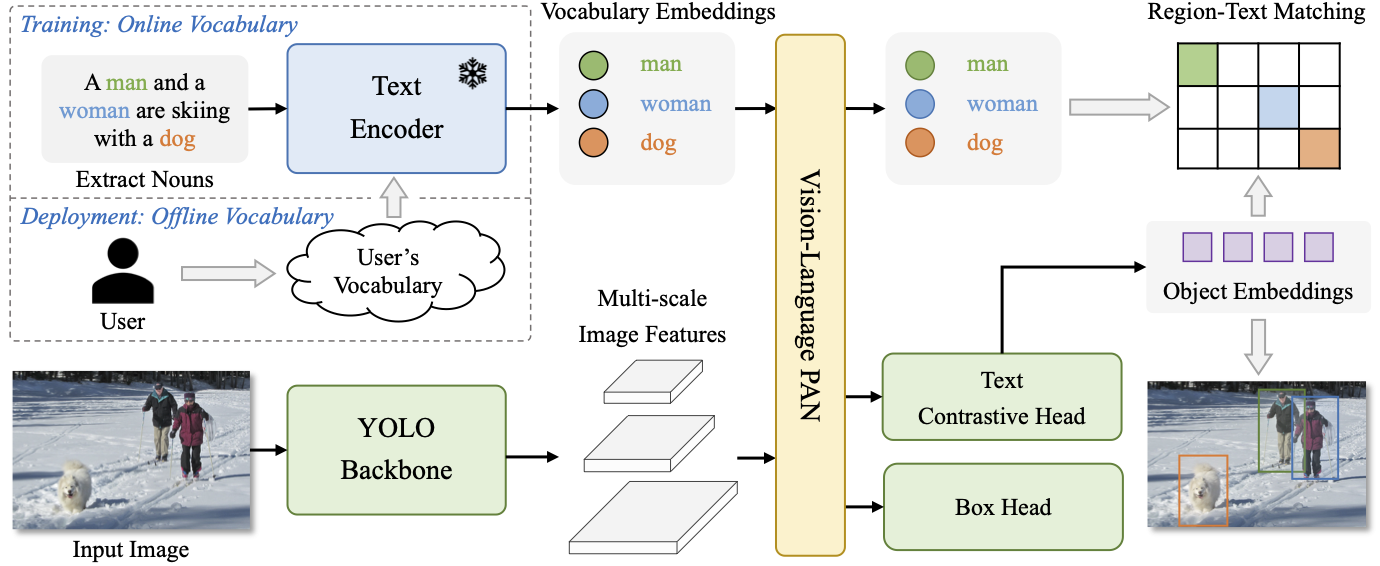

* YOLO-World presents a *prompt-then-detect* paradigm for efficient user-vocabulary inference: vocabulary embeddings are re-parameterized into the model as parameters, which achieves superior inference speed. You can try exporting your own detection model without extra training or fine-tuning in our [online demo]()!
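
The *prompt-then-detect* idea can be illustrated with a minimal, dependency-free sketch. It is not the YOLO-World implementation: `toy_text_encoder` and `PromptThenDetectHead` are hypothetical names, and the hash-based encoder only stands in for a real text encoder. The point it shows is that the vocabulary is encoded once, cached as fixed weights, and then reused for every region without calling the text encoder again.

```python
import hashlib
import math


def toy_text_encoder(text, dim=8):
    """Hypothetical stand-in for a real text encoder.

    Maps a string to a deterministic unit-length vector via a hash.
    """
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    vec = [b / 255.0 - 0.5 for b in digest[:dim]]
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class PromptThenDetectHead:
    """Toy classification head (assumed design, for illustration only)."""

    def __init__(self, encoder=toy_text_encoder):
        self.encoder = encoder
        self.encoder_calls = 0   # counts how often the text encoder runs
        self.class_names = []
        self.class_weights = []  # cached vocabulary embeddings

    def set_vocabulary(self, class_names):
        """'Prompt' step: encode the vocabulary once and cache it as weights."""
        self.class_names = list(class_names)
        self.class_weights = []
        for name in self.class_names:
            self.class_weights.append(self.encoder(name))
            self.encoder_calls += 1

    def classify(self, region_feature):
        """'Detect' step: score a region feature against the cached weights.

        No text encoding happens here, only dot products.
        """
        scores = [
            sum(w * f for w, f in zip(weights, region_feature))
            for weights in self.class_weights
        ]
        best = max(range(len(scores)), key=scores.__getitem__)
        return self.class_names[best], scores[best]


head = PromptThenDetectHead()
head.set_vocabulary(["person", "dog", "bicycle"])
calls_after_prompt = head.encoder_calls

# Pretend this feature came from an image region that looks like a dog.
region_feature = toy_text_encoder("dog")
label, score = head.classify(region_feature)
```

In this sketch, changing the vocabulary only requires calling `set_vocabulary` again; no training step is involved, and `classify` never touches the text encoder.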