Runtime error

Upload 14 files

- .gitattributes +6 -0

- README.md +6 -6

- alphabet_map.json +28 -0

- app.py +112 -0

- braille_map.json +65 -0

- convert.py +73 -0

- image/alpha-numeric.jpeg +0 -0

- image/gray_image.jpg +3 -0

- image/img_41.jpg +0 -0

- image/test_1.jpg +3 -0

- image/test_2.jpg +3 -0

- image/test_3.jpg +3 -0

- image/test_4.jpg +3 -0

- image/test_5.jpg +3 -0

- number_map.json +66 -0

.gitattributes

CHANGED

    @@ -33,3 +33,9 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
     *.zip filter=lfs diff=lfs merge=lfs -text
     *.zst filter=lfs diff=lfs merge=lfs -text
     *tfevents* filter=lfs diff=lfs merge=lfs -text
    +image/gray_image.jpg filter=lfs diff=lfs merge=lfs -text
    +image/test_1.jpg filter=lfs diff=lfs merge=lfs -text
    +image/test_2.jpg filter=lfs diff=lfs merge=lfs -text
    +image/test_3.jpg filter=lfs diff=lfs merge=lfs -text
    +image/test_4.jpg filter=lfs diff=lfs merge=lfs -text
    +image/test_5.jpg filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED

    @@ -1,13 +1,13 @@
     ---
    -title: Braille
    -emoji:
    +title: Braille Detection
    +emoji: 🕶
     colorFrom: blue
    -colorTo:
    +colorTo: yellow
     sdk: streamlit
    -sdk_version: 1.
    +sdk_version: 1.17.0
     app_file: app.py
    -pinned:
    +pinned: true
     license: mit
     ---

    -Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference
    +Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

alphabet_map.json

ADDED

@@ -0,0 +1,28 @@

    {
        "a": "100000",
        "b": "110000",
        "c": "100100",
        "d": "100110",
        "e": "100010",
        "f": "110100",
        "g": "110110",
        "h": "110010",
        "i": "010100",
        "j": "010110",
        "k": "101000",
        "l": "111000",
        "m": "101100",
        "n": "101110",
        "o": "101010",
        "p": "111100",
        "q": "111110",
        "r": "111010",
        "s": "011100",
        "t": "011110",
        "u": "101001",
        "v": "111001",
        "w": "010111",
        "x": "101101",
        "y": "101111",
        "z": "101011"
    }
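Each value is a string of six 0/1 flags, one per dot: position 1 is dot 1 and position 6 is dot 6 (dots 1-3 run down the left column of the cell, dots 4-6 down the right). This reading is consistent with braille_map.json, which maps "100000" to ⠁ (dot 1) and "000100" to ⠈ (dot 4). A minimal sketch of decoding with this table, assuming a hand-copied excerpt of the map (`ALPHABET_MAP`) and two hypothetical helpers that are not part of the repository:

```python
# Hypothetical excerpt of alphabet_map.json, inlined so the example runs on its own.
ALPHABET_MAP = {"a": "100000", "b": "110000", "c": "100100", "d": "100110", "e": "100010"}

# Invert letter -> pattern into pattern -> letter for decoding.
PATTERN_TO_LETTER = {pattern: letter for letter, pattern in ALPHABET_MAP.items()}


def raised_dots(pattern: str) -> list[int]:
    """Return the raised dot numbers (1-6) encoded by a 6-character pattern."""
    return [i + 1 for i, bit in enumerate(pattern) if bit == "1"]


def decode_letters(patterns: list[str]) -> str:
    """Decode a sequence of patterns to letters, using '?' for unknown cells."""
    return "".join(PATTERN_TO_LETTER.get(p, "?") for p in patterns)


print(raised_dots("100110"))  # [1, 4, 5], the dots of "d"
print(decode_letters(["110000", "100000", "100100"]))  # bac
```

In the app the full table would be read with `json.load`; inverting it is safe because every letter has a distinct pattern.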

app.py

ADDED

@@ -0,0 +1,112 @@

    """
    Reference
    - https://docs.streamlit.io/library/api-reference/layout
    - https://github.com/CodingMantras/yolov8-streamlit-detection-tracking/blob/master/app.py
    - https://huggingface.co/keremberke/yolov8m-valorant-detection/tree/main
    - https://docs.ultralytics.com/usage/python/
    """
    import time
    import PIL.Image

    import streamlit as st
    import torch
    from ultralyticsplus import YOLO, render_result

    from convert import convert_to_braille_unicode, parse_xywh_and_class


    def load_model(model_path):
        """load model from path"""
        model = YOLO(model_path)
        return model


    def load_image(image_path):
        """load image from path"""
        image = PIL.Image.open(image_path)
        return image


    # title
    st.title("Braille Pattern Detection")

    # sidebar
    st.sidebar.header("Detection Config")

    conf = float(st.sidebar.slider("Class Confidence", 10, 75, 15)) / 100
    iou = float(st.sidebar.slider("IoU Threshold", 10, 75, 15)) / 100

    model_path = "snoop2head/yolov8m-braille"

    try:
        model = load_model(model_path)
        model.overrides["conf"] = conf  # NMS confidence threshold
        model.overrides["iou"] = iou  # NMS IoU threshold
        model.overrides["agnostic_nms"] = False  # NMS class-agnostic
        model.overrides["max_det"] = 1000  # maximum number of detections per image

    except Exception as ex:
        print(ex)
        st.write(f"Unable to load model. Check the specified path: {model_path}")

    IMAGE_DOWNLOAD_PATH = None  # set only after a successful prediction

    source_img = st.sidebar.file_uploader(
        "Choose an image...", type=("jpg", "jpeg", "png", "bmp", "webp")
    )
    col1, col2 = st.columns(2)

    # left column of the page body
    with col1:
        if source_img is None:
            default_image_path = "./image/alpha-numeric.jpeg"
            image = load_image(default_image_path)
            st.image(
                default_image_path, caption="Example Input Image", use_column_width=True
            )
        else:
            image = load_image(source_img)
            st.image(source_img, caption="Uploaded Image", use_column_width=True)

    # right column of the page body
    with col2:
        with st.spinner("Wait for it..."):
            start_time = time.time()
            try:
                with torch.no_grad():
                    res = model.predict(
                        image, save=True, save_txt=True, exist_ok=True, conf=conf
                    )
                    boxes = res[0].boxes  # first image
                    res_plotted = res[0].plot()[:, :, ::-1]  # BGR -> RGB

                    list_boxes = parse_xywh_and_class(boxes)

                    st.image(res_plotted, caption="Detected Image", use_column_width=True)
                    IMAGE_DOWNLOAD_PATH = "runs/detect/predict/image0.jpg"

            except Exception as ex:
                st.write("Please upload an image of type JPG, JPEG, PNG, ...")


    try:
        st.success(f"Done! Inference time: {time.time() - start_time:.2f} seconds")
        st.subheader("Detected Braille Patterns")
        for box_line in list_boxes:
            str_left_to_right = ""
            box_classes = box_line[:, -1]  # class id is the last column
            for each_class in box_classes:
                str_left_to_right += convert_to_braille_unicode(
                    model.names[int(each_class)]
                )
            st.write(str_left_to_right)
    except Exception as ex:
        st.write("Please try again with an image of type JPG, JPEG, PNG, ...")
    if IMAGE_DOWNLOAD_PATH is not None:  # skip the button if prediction failed
        with open(IMAGE_DOWNLOAD_PATH, "rb") as fl:
            st.download_button(
                "Download object-detected image",
                data=fl,
                file_name="image0.jpg",
                mime="image/jpeg",
            )
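The per-line loop at the end of app.py reads the class id from the last column of each line array, looks up its name in `model.names`, and converts that name to a character. A sketch of the same loop that runs without Streamlit or a model, assuming hypothetical in-memory stand-ins (`CLASS_NAMES` for `model.names`, `GLYPHS` for an excerpt of number_map.json):

```python
import numpy as np

# Hypothetical stand-ins: in app.py these come from model.names and number_map.json.
CLASS_NAMES = {0: "100000", 1: "110000", 2: "010110"}
GLYPHS = {"100000": "1", "110000": "2", "010110": "0"}


def lines_to_text(list_boxes, class_names, glyphs):
    """Turn per-line (n, 6) box arrays into one string per line.

    The class id is read from the last column, as app.py does with
    box_line[:, -1]; unknown patterns fall back to the raw class name.
    """
    out = []
    for box_line in list_boxes:
        names = (class_names[int(c)] for c in box_line[:, -1])
        out.append("".join(glyphs.get(n, n) for n in names))
    return out


# Two lines: "12" on top and "0" below (columns: x, y, w, h, conf, cls).
demo = [
    np.array([[10, 5, 8, 8, 0.9, 0], [30, 5, 8, 8, 0.8, 1]]),
    np.array([[10, 40, 8, 8, 0.9, 2]]),
]
print(lines_to_text(demo, CLASS_NAMES, GLYPHS))  # ['12', '0']
```

Passing the map in once is a choice of this sketch; `convert_to_braille_unicode` as committed re-opens and re-parses the JSON file for every detected box.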

braille_map.json

ADDED

@@ -0,0 +1,65 @@

    {
        "000001": "⠠",
        "000010": "⠐",
        "000011": "⠰",
        "000100": "⠈",
        "000101": "⠨",
        "000110": "⠘",
        "000111": "⠸",
        "001000": "⠄",
        "001001": "⠤",
        "001010": "⠔",
        "001011": "⠴",
        "001100": "⠌",
        "001101": "⠬",
        "001110": "⠜",
        "001111": "⠼",
        "010000": "⠂",
        "010001": "⠢",
        "010010": "⠒",
        "010011": "⠲",
        "010100": "⠊",
        "010101": "⠪",
        "010110": "⠚",
        "010111": "⠺",
        "011000": "⠆",
        "011001": "⠦",
        "011010": "⠖",
        "011011": "⠶",
        "011100": "⠎",
        "011101": "⠮",
        "011110": "⠞",
        "011111": "⠾",
        "100000": "⠁",
        "100001": "⠡",
        "100010": "⠑",
        "100011": "⠱",
        "100100": "⠉",
        "100101": "⠩",
        "100110": "⠙",
        "100111": "⠹",
        "101000": "⠅",
        "101001": "⠥",
        "101010": "⠕",
        "101011": "⠵",
        "101100": "⠍",
        "101101": "⠭",
        "101110": "⠝",
        "101111": "⠽",
        "110000": "⠃",
        "110001": "⠣",
        "110010": "⠓",
        "110011": "⠳",
        "110100": "⠋",
        "110101": "⠫",
        "110110": "⠛",
        "110111": "⠻",
        "111000": "⠇",
        "111001": "⠧",
        "111010": "⠗",
        "111011": "⠷",
        "111100": "⠏",
        "111101": "⠯",
        "111110": "⠟",
        "111111": "⠿"
    }
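The entries of braille_map.json follow a fixed rule rather than needing to be typed by hand: in the Unicode Braille Patterns block, which starts at U+2800, raising dot n adds 2^(n-1) to the code point. A sketch that derives every entry from that rule (the helper name `pattern_to_glyph` is hypothetical):

```python
def pattern_to_glyph(pattern: str) -> str:
    """Map a 6-character dot string (dots 1-6, left to right) to its Unicode cell."""
    if len(pattern) != 6 or set(pattern) - {"0", "1"}:
        raise ValueError(f"expected six 0/1 characters, got {pattern!r}")
    # Dot n (string position n-1) sets bit n-1 above the block base U+2800.
    offset = sum(1 << i for i, bit in enumerate(pattern) if bit == "1")
    return chr(0x2800 + offset)


# Rebuild the 63 non-blank entries, i.e. every key of braille_map.json.
generated = {p: pattern_to_glyph(p) for p in (format(n, "06b") for n in range(1, 64))}

print(pattern_to_glyph("100000"))  # ⠁
print(pattern_to_glyph("001111"))  # ⠼
```

Comparing `generated` with the committed file is one way to catch typos in a hand-edited map. The table has no "000000" key, so a blank cell (U+2800) is not covered.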

convert.py

ADDED

@@ -0,0 +1,73 @@

    import json
    import numpy as np
    import torch


    def convert_to_braille_unicode(str_input: str, path: str = "./number_map.json") -> str:
        with open(path, "r") as fl:
            data = json.load(fl)

        # fall back to the raw class name if it has no entry in the map
        str_output = data.get(str_input, str_input)
        return str_output


    def parse_xywh_and_class(boxes: torch.Tensor) -> list:
        """
        Group detected boxes into text lines, each ordered left to right.

        A new line starts where the vertical gap between consecutive box
        centers exceeds half the mean box height (rounded down).

        Args:
            boxes: ``res[0].boxes`` from an ultralytics prediction; it must expose
                ``xywh``, ``conf`` and ``cls`` tensors.
        Returns:
            list of (n, 6) arrays with columns [x, y, w, h, conf, cls], one per
            line from top to bottom, rows sorted by x (class id is the last column).
        """

        # copy values from the ultralytics "boxes" object into an (N, 6) numpy array
        new_boxes = np.zeros((boxes.xywh.shape[0], 6))
        new_boxes[:, :4] = boxes.xywh.cpu().numpy()  # first 4 channels are xywh
        new_boxes[:, 4] = boxes.conf.cpu().numpy()  # 5th channel is confidence
        new_boxes[:, 5] = boxes.cls.cpu().numpy()  # 6th channel is class which is last channel

        # sort according to y coordinate
        new_boxes = new_boxes[new_boxes[:, 1].argsort()]

        # find threshold index to break the line
        y_threshold = np.mean(new_boxes[:, 3]) // 2
        boxes_diff = np.diff(new_boxes[:, 1])
        threshold_index = np.where(boxes_diff > y_threshold)[0]

        # cluster according to threshold_index
        boxes_clustered = np.split(new_boxes, threshold_index + 1)
        boxes_return = []
        for cluster in boxes_clustered:
            # sort according to x coordinate
            cluster = cluster[cluster[:, 0].argsort()]
            boxes_return.append(cluster)

        return boxes_return


    def arrange_braille_to_2x3(box_classes: list) -> list:
        """
        Convert an array of detected braille character classes into a 2x3 dot-matrix format.
        :param box_classes: list of detected braille character classes (length must be a multiple of 6)
        :return: list of 2x3 braille dot matrices
        """
        # check that the input length is a multiple of 6
        if len(box_classes) % 6 != 0:
            raise ValueError("The length of the input braille character array must be a multiple of 6")

        braille_2x3_list = []

        # take 6 items at a time and arrange them in 2x3 form
        for i in range(0, len(box_classes), 6):
            # reshape into a 3x2 matrix, then transpose it into a 2x3 matrix
            braille_char = np.array(box_classes[i:i + 6]).reshape(3, 2).T
            braille_2x3_list.append(braille_char)

        return braille_2x3_list
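`parse_xywh_and_class` needs an ultralytics `Boxes` object, but its grouping rule (sort by y center, break wherever the gap between consecutive y centers exceeds floor(mean height / 2), then sort each line by x center) can be tried on a plain NumPy array. A sketch with the same steps; `group_into_lines` is a hypothetical name, and the input rows use the same [x, y, w, h, conf, cls] layout:

```python
import numpy as np


def group_into_lines(xywh_cls: np.ndarray) -> list[np.ndarray]:
    """Group rows [x, y, w, h, conf, cls] into lines, mirroring parse_xywh_and_class.

    Rows are sorted by y; a new line starts where the gap between consecutive
    y centers exceeds floor(mean height / 2); each line is then sorted by x.
    """
    rows = xywh_cls[xywh_cls[:, 1].argsort()]
    y_threshold = np.mean(rows[:, 3]) // 2
    breaks = np.where(np.diff(rows[:, 1]) > y_threshold)[0]
    return [line[line[:, 0].argsort()] for line in np.split(rows, breaks + 1)]


# Three boxes on one line (slightly uneven y) and one box on the next line.
boxes = np.array([
    [50.0, 21.0, 10.0, 12.0, 0.9, 2.0],
    [10.0, 20.0, 10.0, 12.0, 0.9, 0.0],
    [30.0, 19.0, 10.0, 12.0, 0.9, 1.0],
    [10.0, 45.0, 10.0, 12.0, 0.9, 3.0],
])
for line in group_into_lines(boxes):
    print(line[:, -1])  # class ids, left to right
```

Because the threshold comes from the mean box height, a noticeably tilted photo, where y centers drift along a line, can split one line into several; that limitation applies to the committed function as well.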

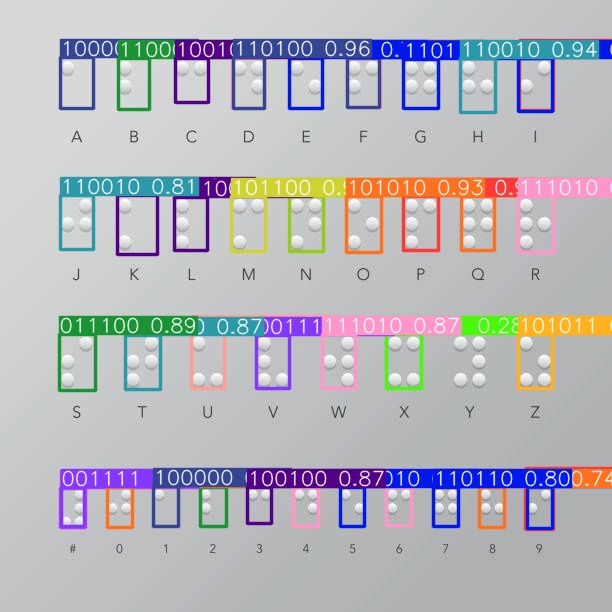

image/alpha-numeric.jpeg

ADDED

image/gray_image.jpg

ADDED (stored with Git LFS)

image/img_41.jpg

ADDED

image/test_1.jpg

ADDED (stored with Git LFS)

image/test_2.jpg

ADDED (stored with Git LFS)

image/test_3.jpg

ADDED (stored with Git LFS)

image/test_4.jpg

ADDED (stored with Git LFS)

image/test_5.jpg

ADDED (stored with Git LFS)

number_map.json

ADDED

@@ -0,0 +1,66 @@

    {
        "000001": "⠠",
        "000010": "⠐",
        "000011": "⠰",
        "000100": "⠈",
        "000101": "⠨",
        "000110": "⠘",
        "000111": "⠸",
        "001000": "⠄",
        "001001": "⠤",
        "001010": "⠔",
        "001011": "⠴",
        "001100": "⠌",
        "001101": "⠬",
        "001110": "⠜",
        "001111": "⠼",
        "010000": "⠂",
        "010001": "⠢",
        "010010": "⠒",
        "010011": "⠲",
        "010100": "9",
        "010101": "⠪",
        "010110": "0",
        "010111": "⠺",
        "011000": "⠆",
        "011001": "⠦",
        "011010": "⠖",
        "011011": "⠶",
        "011100": "⠎",
        "011101": "⠮",
        "011110": "⠞",
        "011111": "⠾",
        "100000": "1",
        "100001": "⠡",
        "100010": "5",
        "100011": "⠱",
        "100100": "3",
        "100101": "⠩",
        "100110": "4",
        "100111": "⠹",
        "101000": "⠅",
        "101001": "⠥",
        "101010": "⠕",
        "101011": "⠵",
        "101100": "⠍",
        "101101": "⠭",
        "101110": "⠝",
        "101111": "⠽",
        "110000": "2",
        "110001": "⠣",
        "110010": "8",
        "110011": "⠳",
        "110100": "6",
        "110101": "⠫",
        "110110": "7",
        "110111": "⠻",
        "111000": "⠇",
        "111001": "⠧",
        "111010": "⠗",
        "111011": "⠷",
        "111100": "⠏",
        "111101": "⠯",
        "111110": "⠟",
        "111111": "⠿"
    }
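In number_map.json the digits 1-9 and 0 reuse the patterns of the letters a-j, while the other keys largely repeat glyphs from braille_map.json. In standard braille, a-j cells are read as digits only after the number sign ⠼ (dots 3-4-5-6, key "001111"), whereas `convert_to_braille_unicode`, which reads number_map.json by default, turns every a-j cell into a digit. A sketch of a context-aware alternative, offered as a hypothetical and not as the project's behavior: it assumes inline excerpts of the maps and, as a simplification of the real rules, ends numeric mode at the first cell that is not a digit.

```python
# Hypothetical excerpt: letter patterns a-j copied from alphabet_map.json.
LETTERS_A_TO_J = {
    "a": "100000", "b": "110000", "c": "100100", "d": "100110", "e": "100010",
    "f": "110100", "g": "110110", "h": "110010", "i": "010100", "j": "010110",
}

# Digits 1-9 and 0 reuse the patterns of a-j, in that order (dicts keep insertion order).
DIGIT_ENTRIES = {p: d for d, p in zip("1234567890", LETTERS_A_TO_J.values())}
LETTER_OF = {p: letter for letter, p in LETTERS_A_TO_J.items()}
NUMBER_SIGN = "001111"  # dots 3-4-5-6


def decode_with_number_sign(patterns: list[str]) -> str:
    """Read a-j cells as digits only while in numeric mode, else as letters."""
    out, numeric = [], False
    for p in patterns:
        if p == NUMBER_SIGN:
            numeric = True  # the sign itself produces no output
            continue
        if numeric and p in DIGIT_ENTRIES:
            out.append(DIGIT_ENTRIES[p])
        else:
            numeric = False  # any other cell ends numeric mode in this sketch
            out.append(LETTER_OF.get(p, "?"))
    return "".join(out)


print(decode_with_number_sign(["001111", "100000", "110000", "100100"]))  # 123
print(decode_with_number_sign(["100000", "110000"]))  # ab
```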