Spaces:

Configuration error

Configuration error

Upload 53 files

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- README.md +26 -10

- asset/external_view.png +0 -0

- asset/external_view.pptx +3 -0

- makefile +14 -0

- neollm.code-workspace +63 -0

- neollm/__init__.py +5 -0

- neollm/exceptions.py +2 -0

- neollm/llm/__init__.py +4 -0

- neollm/llm/abstract_llm.py +188 -0

- neollm/llm/claude/abstract_claude.py +214 -0

- neollm/llm/claude/anthropic_llm.py +66 -0

- neollm/llm/claude/gcp_llm.py +67 -0

- neollm/llm/gemini/abstract_gemini.py +229 -0

- neollm/llm/gemini/gcp_llm.py +114 -0

- neollm/llm/get_llm.py +47 -0

- neollm/llm/gpt/abstract_gpt.py +81 -0

- neollm/llm/gpt/azure_llm.py +215 -0

- neollm/llm/gpt/openai_llm.py +222 -0

- neollm/llm/gpt/token.py +247 -0

- neollm/llm/platform.py +16 -0

- neollm/llm/utils.py +72 -0

- neollm/myllm/abstract_myllm.py +148 -0

- neollm/myllm/myl3m2.py +165 -0

- neollm/myllm/myllm.py +449 -0

- neollm/myllm/print_utils.py +235 -0

- neollm/types/__init__.py +4 -0

- neollm/types/_model.py +8 -0

- neollm/types/info.py +82 -0

- neollm/types/mytypes.py +31 -0

- neollm/types/openai/__init__.py +2 -0

- neollm/types/openai/chat_completion.py +170 -0

- neollm/types/openai/chat_completion_chunk.py +109 -0

- neollm/utils/inference.py +70 -0

- neollm/utils/postprocess.py +120 -0

- neollm/utils/preprocess.py +107 -0

- neollm/utils/prompt_checker.py +110 -0

- neollm/utils/tokens.py +229 -0

- neollm/utils/utils.py +98 -0

- poetry.lock +0 -0

- project/.env.template +24 -0

- project/ex_module/ex_profile_extractor.py +113 -0

- project/ex_module/ex_translated_profile_extractor.py +49 -0

- project/ex_module/ex_translator.py +62 -0

- project/neollm-tutorial.ipynb +713 -0

- pyproject.toml +81 -0

- test/llm/claude/test_claude_llm.py +37 -0

- test/llm/gpt/test_azure_llm.py +92 -0

- test/llm/gpt/test_openai_llm.py +37 -0

- test/llm/platform.py +32 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

asset/external_view.pptx filter=lfs diff=lfs merge=lfs -text

|

README.md

CHANGED

|

@@ -1,10 +1,26 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# neoLLM Module

|

| 2 |

+

|

| 3 |

+

neoAIのLLMソリューションの基盤モジュール

|

| 4 |

+

[neoLLM Module Document](https://www.notion.so/neoLLM-Module-Document-64399d1d1db24d92bce8f9b88472833f)

|

| 5 |

+

|

| 6 |

+

## 準備

|

| 7 |

+

[neoLLM インストール方法](https://www.notion.so/c760d96f1b4240e6880a32bee96bba35)

|

| 8 |

+

1. install neoLLM Module ※ Python 3.10

|

| 9 |

+

```bash

|

| 10 |

+

$ pip install git+https://github.com/neoAI-inc/neo-llm-module.git@v1.x.x

|

| 11 |

+

```

|

| 12 |

+

|

| 13 |

+

2. APIキーの設定

|

| 14 |

+

`.env`ファイルの配置

|

| 15 |

+

- 環境変数を`.env`ファイルで定義し,実行するバスに配置

|

| 16 |

+

- `project/example_env.txt`を`.env`に名前を変えて, 必要事項を記入

|

| 17 |

+

|

| 18 |

+

## 使用方法

|

| 19 |

+

### 概要

|

| 20 |

+

灰色背景の部分を開発するだけでOK

|

| 21 |

+

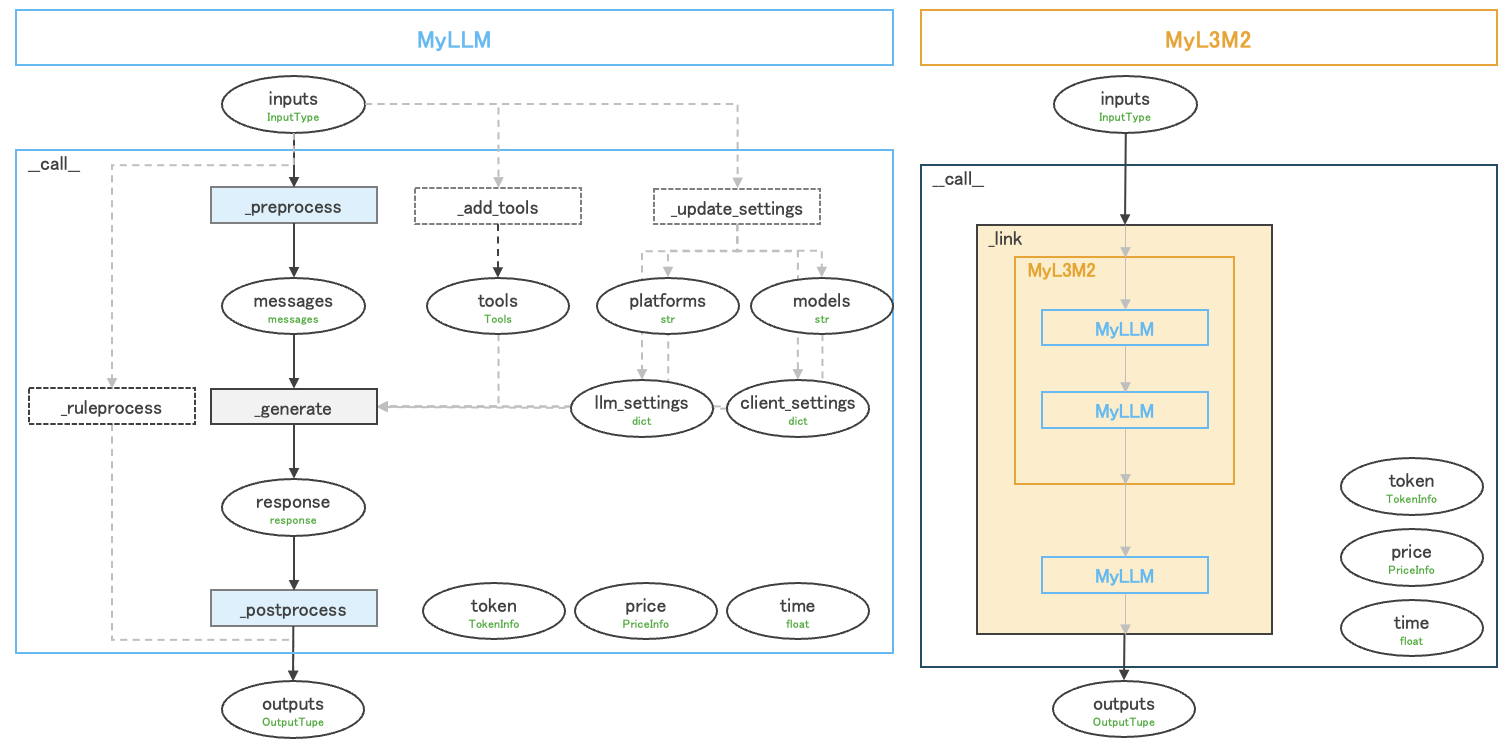

- MyLLM: 1回のLLMへのリクエストをラップできる

|

| 22 |

+

- MyL3M2: 複数のLLMへのリクエストをラップできる

|

| 23 |

+

|

| 24 |

+

詳しくは、`project/neollm-tutorial.ipynb`, `project/ex_module`

|

| 25 |

+

|

| 26 |

+

|

asset/external_view.png

ADDED

|

asset/external_view.pptx

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:7b3e9d7dbbb6f9ca5750edd9eaad8fe7ce5fcb5797e8027ae11dea90a0a47a2c

|

| 3 |

+

size 8728033

|

makefile

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.PHONY: lint

|

| 2 |

+

lint: ## run tests with poetry (isort, black, pflake8, mypy)

|

| 3 |

+

poetry run black neollm

|

| 4 |

+

poetry run isort neollm

|

| 5 |

+

poetry run pflake8 neollm

|

| 6 |

+

poetry run mypy neollm --explicit-package-bases

|

| 7 |

+

|

| 8 |

+

.PHONY: test

|

| 9 |

+

test:

|

| 10 |

+

poetry run pytest

|

| 11 |

+

|

| 12 |

+

.PHONY: unit-test

|

| 13 |

+

unit-test:

|

| 14 |

+

poetry run pytest -k "not test_neollm"

|

neollm.code-workspace

ADDED

|

@@ -0,0 +1,63 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"folders": [

|

| 3 |

+

{

|

| 4 |

+

"name": "neo-llm-module",

|

| 5 |

+

"path": "."

|

| 6 |

+

}

|

| 7 |

+

],

|

| 8 |

+

"settings": {

|

| 9 |

+

"editor.codeActionsOnSave": {

|

| 10 |

+

"source.fixAll.eslint": "explicit",

|

| 11 |

+

"source.fixAll.stylelint": "explicit"

|

| 12 |

+

},

|

| 13 |

+

"editor.formatOnSave": true,

|

| 14 |

+

"editor.formatOnPaste": true,

|

| 15 |

+

"editor.formatOnType": true,

|

| 16 |

+

"json.format.keepLines": true,

|

| 17 |

+

"[javascript]": {

|

| 18 |

+

"editor.defaultFormatter": "esbenp.prettier-vscode"

|

| 19 |

+

},

|

| 20 |

+

"[typescript]": {

|

| 21 |

+

"editor.defaultFormatter": "esbenp.prettier-vscode"

|

| 22 |

+

},

|

| 23 |

+

"[typescriptreact]": {

|

| 24 |

+

"editor.defaultFormatter": "esbenp.prettier-vscode"

|

| 25 |

+

},

|

| 26 |

+

"[css]": {

|

| 27 |

+

"editor.defaultFormatter": "esbenp.prettier-vscode"

|

| 28 |

+

},

|

| 29 |

+

"[json]": {

|

| 30 |

+

"editor.defaultFormatter": "vscode.json-language-features"

|

| 31 |

+

},

|

| 32 |

+

"search.exclude": {

|

| 33 |

+

"**/node_modules": true,

|

| 34 |

+

"static": true

|

| 35 |

+

},

|

| 36 |

+

"[python]": {

|

| 37 |

+

"editor.defaultFormatter": "ms-python.black-formatter",

|

| 38 |

+

"editor.codeActionsOnSave": {

|

| 39 |

+

"source.organizeImports": "explicit"

|

| 40 |

+

}

|

| 41 |

+

},

|

| 42 |

+

"flake8.args": [

|

| 43 |

+

"--max-line-length=119",

|

| 44 |

+

"--max-complexity=15",

|

| 45 |

+

"--ignore=E203,E501,E704,W503",

|

| 46 |

+

"--exclude=.venv,.git,__pycache__,.mypy_cache,.hg"

|

| 47 |

+

],

|

| 48 |

+

"isort.args": ["--settings-path=pyproject.toml"],

|

| 49 |

+

"black-formatter.args": ["--config=pyproject.toml"],

|

| 50 |

+

"mypy-type-checker.args": ["--config-file=pyproject.toml"],

|

| 51 |

+

"python.analysis.extraPaths": ["./backend"]

|

| 52 |

+

},

|

| 53 |

+

"extensions": {

|

| 54 |

+

"recommendations": [

|

| 55 |

+

"esbenp.prettier-vscode",

|

| 56 |

+

"dbaeumer.vscode-eslint",

|

| 57 |

+

"ms-python.flake8",

|

| 58 |

+

"ms-python.isort",

|

| 59 |

+

"ms-python.black-formatter",

|

| 60 |

+

"ms-python.mypy-type-checker"

|

| 61 |

+

]

|

| 62 |

+

}

|

| 63 |

+

}

|

neollm/__init__.py

ADDED

|

@@ -0,0 +1,5 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from neollm.myllm.abstract_myllm import AbstractMyLLM

|

| 2 |

+

from neollm.myllm.myl3m2 import MyL3M2

|

| 3 |

+

from neollm.myllm.myllm import MyLLM

|

| 4 |

+

|

| 5 |

+

__all__ = ["AbstractMyLLM", "MyLLM", "MyL3M2"]

|

neollm/exceptions.py

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

class ContentFilterError(Exception):

|

| 2 |

+

pass

|

neollm/llm/__init__.py

ADDED

|

@@ -0,0 +1,4 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from neollm.llm.abstract_llm import AbstractLLM

|

| 2 |

+

from neollm.llm.get_llm import get_llm

|

| 3 |

+

|

| 4 |

+

__all__ = ["AbstractLLM", "get_llm"]

|

neollm/llm/abstract_llm.py

ADDED

|

@@ -0,0 +1,188 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from abc import ABC, abstractmethod

|

| 2 |

+

from typing import Any

|

| 3 |

+

|

| 4 |

+

from neollm.llm.utils import get_entity

|

| 5 |

+

from neollm.types import (

|

| 6 |

+

APIPricing,

|

| 7 |

+

ChatCompletion,

|

| 8 |

+

ChatCompletionMessage,

|

| 9 |

+

ChatCompletionMessageToolCall,

|

| 10 |

+

Choice,

|

| 11 |

+

ChoiceDeltaToolCall,

|

| 12 |

+

Chunk,

|

| 13 |

+

ClientSettings,

|

| 14 |

+

CompletionUsage,

|

| 15 |

+

Function,

|

| 16 |

+

FunctionCall,

|

| 17 |

+

LLMSettings,

|

| 18 |

+

Messages,

|

| 19 |

+

Response,

|

| 20 |

+

StreamResponse,

|

| 21 |

+

)

|

| 22 |

+

from neollm.utils.utils import cprint

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

# 現状、Azure, OpenAIに対応

|

| 26 |

+

class AbstractLLM(ABC):

|

| 27 |

+

dollar_per_ktoken: APIPricing

|

| 28 |

+

model: str

|

| 29 |

+

context_window: int

|

| 30 |

+

_custom_price_calculation: bool = False # self.tokenではなく、self.custom_tokenを使う場合にTrue

|

| 31 |

+

|

| 32 |

+

def __init__(self, client_settings: ClientSettings):

|

| 33 |

+

"""LLMクラスの初期化

|

| 34 |

+

|

| 35 |

+

Args:

|

| 36 |

+

client_settings (ClientSettings): クライアント設定

|

| 37 |

+

"""

|

| 38 |

+

self.client_settings = client_settings

|

| 39 |

+

|

| 40 |

+

def calculate_price(self, num_input_tokens: int = 0, num_output_tokens: int = 0) -> float:

|

| 41 |

+

"""

|

| 42 |

+

費用の計測

|

| 43 |

+

|

| 44 |

+

Args:

|

| 45 |

+

num_input_tokens (int, optional): 入力のトークン数. Defaults to 0.

|

| 46 |

+

num_output_tokens (int, optional): 出力のトークン数. Defaults to 0.

|

| 47 |

+

|

| 48 |

+

Returns:

|

| 49 |

+

float: API利用料(USD)

|

| 50 |

+

"""

|

| 51 |

+

price = (

|

| 52 |

+

self.dollar_per_ktoken.input * num_input_tokens + self.dollar_per_ktoken.output * num_output_tokens

|

| 53 |

+

) / 1000

|

| 54 |

+

return price

|

| 55 |

+

|

| 56 |

+

@abstractmethod

|

| 57 |

+

def count_tokens(self, messages: Messages | None = None, only_response: bool = False) -> int: ...

|

| 58 |

+

|

| 59 |

+

@abstractmethod

|

| 60 |

+

def encode(self, text: str) -> list[int]: ...

|

| 61 |

+

|

| 62 |

+

@abstractmethod

|

| 63 |

+

def decode(self, encoded: list[int]) -> str: ...

|

| 64 |

+

|

| 65 |

+

@abstractmethod

|

| 66 |

+

def generate(self, messages: Messages, llm_settings: LLMSettings) -> Response:

|

| 67 |

+

"""生成

|

| 68 |

+

|

| 69 |

+

Args:

|

| 70 |

+

messages (Messages): OpenAI仕様のMessages(list[dict])

|

| 71 |

+

|

| 72 |

+

Returns:

|

| 73 |

+

Response: OpenAI likeなResponse

|

| 74 |

+

"""

|

| 75 |

+

|

| 76 |

+

@abstractmethod

|

| 77 |

+

def generate_stream(self, messages: Messages, llm_settings: LLMSettings) -> StreamResponse: ...

|

| 78 |

+

|

| 79 |

+

def __repr__(self) -> str:

|

| 80 |

+

return f"{self.__class__}()"

|

| 81 |

+

|

| 82 |

+

def convert_nonstream_response(

|

| 83 |

+

self, chunk_list: list[Chunk], messages: Messages, functions: Any = None

|

| 84 |

+

) -> Response:

|

| 85 |

+

# messagesとfunctionsはトークン数計測に必要

|

| 86 |

+

_chunk_choices = [chunk.choices[0] for chunk in chunk_list if len(chunk.choices) > 0]

|

| 87 |

+

# TODO: n=2以上の場合にwarningを出したい

|

| 88 |

+

|

| 89 |

+

# FunctionCall --------------------------------------------------

|

| 90 |

+

function_call: FunctionCall | None

|

| 91 |

+

if all([_c.delta.function_call is None for _c in _chunk_choices]):

|

| 92 |

+

function_call = None

|

| 93 |

+

else:

|

| 94 |

+

function_call = FunctionCall(

|

| 95 |

+

arguments="".join(

|

| 96 |

+

[

|

| 97 |

+

_c.delta.function_call.arguments

|

| 98 |

+

for _c in _chunk_choices

|

| 99 |

+

if _c.delta.function_call is not None and _c.delta.function_call.arguments is not None

|

| 100 |

+

]

|

| 101 |

+

),

|

| 102 |

+

name=get_entity(

|

| 103 |

+

[_c.delta.function_call.name for _c in _chunk_choices if _c.delta.function_call is not None],

|

| 104 |

+

default="",

|

| 105 |

+

),

|

| 106 |

+

)

|

| 107 |

+

|

| 108 |

+

# ToolCalls --------------------------------------------------

|

| 109 |

+

_tool_calls_dict: dict[int, list[ChoiceDeltaToolCall]] = {} # key=index

|

| 110 |

+

for _chunk in _chunk_choices:

|

| 111 |

+

if _chunk.delta.tool_calls is None:

|

| 112 |

+

continue

|

| 113 |

+

for _tool_call in _chunk.delta.tool_calls:

|

| 114 |

+

_tool_calls_dict.setdefault(_tool_call.index, []).append(_tool_call)

|

| 115 |

+

|

| 116 |

+

tool_calls: list[ChatCompletionMessageToolCall] | None

|

| 117 |

+

if sum(len(_tool_calls) for _tool_calls in _tool_calls_dict.values()) == 0:

|

| 118 |

+

tool_calls = None

|

| 119 |

+

else:

|

| 120 |

+

tool_calls = []

|

| 121 |

+

for _tool_calls in _tool_calls_dict.values():

|

| 122 |

+

tool_calls.append(

|

| 123 |

+

ChatCompletionMessageToolCall(

|

| 124 |

+

id=get_entity([_tc.id for _tc in _tool_calls], default=""),

|

| 125 |

+

function=Function(

|

| 126 |

+

arguments="".join(

|

| 127 |

+

[

|

| 128 |

+

_tc.function.arguments

|

| 129 |

+

for _tc in _tool_calls

|

| 130 |

+

if _tc.function is not None and _tc.function.arguments is not None

|

| 131 |

+

]

|

| 132 |

+

),

|

| 133 |

+

name=get_entity(

|

| 134 |

+

[_tc.function.name for _tc in _tool_calls if _tc.function is not None], default=""

|

| 135 |

+

),

|

| 136 |

+

),

|

| 137 |

+

type=get_entity([_tc.type for _tc in _tool_calls], default="function"),

|

| 138 |

+

)

|

| 139 |

+

)

|

| 140 |

+

message = ChatCompletionMessage(

|

| 141 |

+

content="".join([_c.delta.content for _c in _chunk_choices if _c.delta.content is not None]),

|

| 142 |

+

# TODO: ChoiceDeltaのroleなんで、assistant以外も許されてるの?

|

| 143 |

+

role=get_entity([_c.delta.role for _c in _chunk_choices], default="assistant"), # type: ignore

|

| 144 |

+

function_call=function_call,

|

| 145 |

+

tool_calls=tool_calls,

|

| 146 |

+

)

|

| 147 |

+

choice = Choice(

|

| 148 |

+

index=get_entity([_c.index for _c in _chunk_choices], default=0),

|

| 149 |

+

message=message,

|

| 150 |

+

finish_reason=get_entity([_c.finish_reason for _c in _chunk_choices], default=None),

|

| 151 |

+

)

|

| 152 |

+

|

| 153 |

+

# Usage --------------------------------------------------

|

| 154 |

+

try:

|

| 155 |

+

for chunk in chunk_list:

|

| 156 |

+

if getattr(chunk, "tokens"):

|

| 157 |

+

prompt_tokens = int(getattr(chunk, "tokens")["input_tokens"])

|

| 158 |

+

completion_tokens = int(getattr(chunk, "tokens")["output_tokens"])

|

| 159 |

+

assert prompt_tokens

|

| 160 |

+

assert completion_tokens

|

| 161 |

+

except Exception:

|

| 162 |

+

prompt_tokens = self.count_tokens(messages) # TODO: fcなど

|

| 163 |

+

completion_tokens = self.count_tokens([message.to_typeddict_message()], only_response=True)

|

| 164 |

+

usages = CompletionUsage(

|

| 165 |

+

completion_tokens=completion_tokens,

|

| 166 |

+

prompt_tokens=prompt_tokens,

|

| 167 |

+

total_tokens=prompt_tokens + completion_tokens,

|

| 168 |

+

)

|

| 169 |

+

|

| 170 |

+

# ChatCompletion ------------------------------------------

|

| 171 |

+

response = ChatCompletion(

|

| 172 |

+

id=get_entity([chunk.id for chunk in chunk_list], default=""),

|

| 173 |

+

object="chat.completion",

|

| 174 |

+

created=get_entity([getattr(chunk, "created", 0) for chunk in chunk_list], default=0),

|

| 175 |

+

model=get_entity([getattr(chunk, "model", "") for chunk in chunk_list], default=""),

|

| 176 |

+

choices=[choice],

|

| 177 |

+

system_fingerprint=get_entity(

|

| 178 |

+

[getattr(chunk, "system_fingerprint", None) for chunk in chunk_list], default=None

|

| 179 |

+

),

|

| 180 |

+

usage=usages,

|

| 181 |

+

)

|

| 182 |

+

|

| 183 |

+

return response

|

| 184 |

+

|

| 185 |

+

@property

|

| 186 |

+

def max_tokens(self) -> int:

|

| 187 |

+

cprint("max_tokensは非推奨です。context_windowを使用してください。")

|

| 188 |

+

return self.context_window

|

neollm/llm/claude/abstract_claude.py

ADDED

|

@@ -0,0 +1,214 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import time

|

| 2 |

+

from abc import abstractmethod

|

| 3 |

+

from typing import Any, Literal, cast

|

| 4 |

+

|

| 5 |

+

from anthropic import Anthropic, AnthropicBedrock, AnthropicVertex, Stream

|

| 6 |

+

from anthropic.types import MessageParam as AnthropicMessageParam

|

| 7 |

+

from anthropic.types import MessageStreamEvent as AnthropicMessageStreamEvent

|

| 8 |

+

from anthropic.types.message import Message as AnthropicMessage

|

| 9 |

+

|

| 10 |

+

from neollm.llm.abstract_llm import AbstractLLM

|

| 11 |

+

from neollm.types import (

|

| 12 |

+

ChatCompletion,

|

| 13 |

+

LLMSettings,

|

| 14 |

+

Message,

|

| 15 |

+

Messages,

|

| 16 |

+

Response,

|

| 17 |

+

StreamResponse,

|

| 18 |

+

)

|

| 19 |

+

from neollm.types.openai.chat_completion import (

|

| 20 |

+

ChatCompletionMessage,

|

| 21 |

+

Choice,

|

| 22 |

+

CompletionUsage,

|

| 23 |

+

FinishReason,

|

| 24 |

+

)

|

| 25 |

+

from neollm.types.openai.chat_completion_chunk import (

|

| 26 |

+

ChatCompletionChunk,

|

| 27 |

+

ChoiceDelta,

|

| 28 |

+

ChunkChoice,

|

| 29 |

+

)

|

| 30 |

+

from neollm.utils.utils import cprint

|

| 31 |

+

|

| 32 |

+

DEFAULT_MAX_TOKENS = 4_096

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

class AbstractClaude(AbstractLLM):

|

| 36 |

+

@property

|

| 37 |

+

@abstractmethod

|

| 38 |

+

def client(self) -> Anthropic | AnthropicVertex | AnthropicBedrock: ...

|

| 39 |

+

|

| 40 |

+

@property

|

| 41 |

+

def _client_for_token(self) -> Anthropic:

|

| 42 |

+

"""トークンカウント用のAnthropicクライアント取得

|

| 43 |

+

(AnthropicBedrock, AnthropicVertexがmethodを持っていないため)

|

| 44 |

+

|

| 45 |

+

Returns:

|

| 46 |

+

Anthropic: Anthropicクライアント

|

| 47 |

+

"""

|

| 48 |

+

return Anthropic()

|

| 49 |

+

|

| 50 |

+

def encode(self, text: str) -> list[int]:

|

| 51 |

+

tokenizer = self._client_for_token.get_tokenizer()

|

| 52 |

+

encoded = cast(list[int], tokenizer.encode(text).ids)

|

| 53 |

+

return encoded

|

| 54 |

+

|

| 55 |

+

def decode(self, decoded: list[int]) -> str:

|

| 56 |

+

tokenizer = self._client_for_token.get_tokenizer()

|

| 57 |

+

text = cast(str, tokenizer.decode(decoded))

|

| 58 |

+

return text

|

| 59 |

+

|

| 60 |

+

def count_tokens(self, messages: list[Message] | None = None, only_response: bool = False) -> int:

|

| 61 |

+

"""

|

| 62 |

+

トークン数の計測

|

| 63 |

+

|

| 64 |

+

Args:

|

| 65 |

+

messages (Messages): messages

|

| 66 |

+

|

| 67 |

+

Returns:

|

| 68 |

+

int: トークン数

|

| 69 |

+

"""

|

| 70 |

+

if messages is None:

|

| 71 |

+

return 0

|

| 72 |

+

tokens = 0

|

| 73 |

+

for message in messages:

|

| 74 |

+

content = message["content"]

|

| 75 |

+

if isinstance(content, str):

|

| 76 |

+

tokens += self._client_for_token.count_tokens(content)

|

| 77 |

+

continue

|

| 78 |

+

if isinstance(content, list):

|

| 79 |

+

for content_i in content:

|

| 80 |

+

if content_i["type"] == "text":

|

| 81 |

+

tokens += self._client_for_token.count_tokens(content_i["text"])

|

| 82 |

+

continue

|

| 83 |

+

return tokens

|

| 84 |

+

|

| 85 |

+

def _convert_finish_reason(

|

| 86 |

+

self, stop_reason: Literal["end_turn", "max_tokens", "stop_sequence"] | None

|

| 87 |

+

) -> FinishReason | None:

|

| 88 |

+

if stop_reason == "max_tokens":

|

| 89 |

+

return "length"

|

| 90 |

+

if stop_reason == "stop_sequence":

|

| 91 |

+

return "stop"

|

| 92 |

+

return None

|

| 93 |

+

|

| 94 |

+

def _convert_to_response(self, platform_response: AnthropicMessage) -> Response:

|

| 95 |

+

return ChatCompletion(

|

| 96 |

+

id=platform_response.id,

|

| 97 |

+

choices=[

|

| 98 |

+

Choice(

|

| 99 |

+

index=0,

|

| 100 |

+

message=ChatCompletionMessage(

|

| 101 |

+

content=platform_response.content[0].text if len(platform_response.content) > 0 else "",

|

| 102 |

+

role="assistant",

|

| 103 |

+

),

|

| 104 |

+

finish_reason=self._convert_finish_reason(platform_response.stop_reason),

|

| 105 |

+

)

|

| 106 |

+

],

|

| 107 |

+

created=int(time.time()),

|

| 108 |

+

model=self.model,

|

| 109 |

+

object="messages.create",

|

| 110 |

+

system_fingerprint=None,

|

| 111 |

+

usage=CompletionUsage(

|

| 112 |

+

prompt_tokens=platform_response.usage.input_tokens,

|

| 113 |

+

completion_tokens=platform_response.usage.output_tokens,

|

| 114 |

+

total_tokens=platform_response.usage.input_tokens + platform_response.usage.output_tokens,

|

| 115 |

+

),

|

| 116 |

+

)

|

| 117 |

+

|

| 118 |

+

def _convert_to_platform_messages(self, messages: Messages) -> tuple[str, list[AnthropicMessageParam]]:

|

| 119 |

+

_system = ""

|

| 120 |

+

_message: list[AnthropicMessageParam] = []

|

| 121 |

+

for message in messages:

|

| 122 |

+

if message["role"] == "system":

|

| 123 |

+

_system += "\n" + message["content"]

|

| 124 |

+

elif message["role"] == "user":

|

| 125 |

+

if isinstance(message["content"], str):

|

| 126 |

+

_message.append({"role": "user", "content": message["content"]})

|

| 127 |

+

else:

|

| 128 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 129 |

+

elif message["role"] == "assistant":

|

| 130 |

+

if isinstance(message["content"], str):

|

| 131 |

+

_message.append({"role": "assistant", "content": message["content"]})

|

| 132 |

+

else:

|

| 133 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 134 |

+

else:

|

| 135 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 136 |

+

return _system, _message

|

| 137 |

+

|

| 138 |

+

def _convert_to_streamresponse(

|

| 139 |

+

self, platform_streamresponse: Stream[AnthropicMessageStreamEvent]

|

| 140 |

+

) -> StreamResponse:

|

| 141 |

+

created = int(time.time())

|

| 142 |

+

model = ""

|

| 143 |

+

id_ = ""

|

| 144 |

+

content: str | None = None

|

| 145 |

+

for chunk in platform_streamresponse:

|

| 146 |

+

input_tokens = 0

|

| 147 |

+

output_tokens = 0

|

| 148 |

+

if chunk.type == "message_stop" or chunk.type == "content_block_stop":

|

| 149 |

+

continue

|

| 150 |

+

if chunk.type == "message_start":

|

| 151 |

+

model = model or chunk.message.model

|

| 152 |

+

id_ = id_ or chunk.message.id

|

| 153 |

+

input_tokens = chunk.message.usage.input_tokens

|

| 154 |

+

output_tokens = chunk.message.usage.output_tokens

|

| 155 |

+

content = "".join([content_block.text for content_block in chunk.message.content])

|

| 156 |

+

finish_reason = self._convert_finish_reason(chunk.message.stop_reason)

|

| 157 |

+

elif chunk.type == "message_delta":

|

| 158 |

+

content = ""

|

| 159 |

+

finish_reason = self._convert_finish_reason(chunk.delta.stop_reason)

|

| 160 |

+

output_tokens = chunk.usage.output_tokens

|

| 161 |

+

elif chunk.type == "content_block_start":

|

| 162 |

+

content = chunk.content_block.text

|

| 163 |

+

finish_reason = None

|

| 164 |

+

elif chunk.type == "content_block_delta":

|

| 165 |

+

content = chunk.delta.text

|

| 166 |

+

finish_reason = None

|

| 167 |

+

yield ChatCompletionChunk(

|

| 168 |

+

id=id_,

|

| 169 |

+

choices=[

|

| 170 |

+

ChunkChoice(

|

| 171 |

+

delta=ChoiceDelta(

|

| 172 |

+

content=content,

|

| 173 |

+

role="assistant",

|

| 174 |

+

),

|

| 175 |

+

finish_reason=finish_reason,

|

| 176 |

+

index=0, # 0-indexedじゃないかもしれないので0に塗り替え

|

| 177 |

+

)

|

| 178 |

+

],

|

| 179 |

+

created=created,

|

| 180 |

+

model=model,

|

| 181 |

+

object="chat.completion.chunk",

|

| 182 |

+

tokens={"input_tokens": input_tokens, "output_tokens": output_tokens}, # type: ignore

|

| 183 |

+

)

|

| 184 |

+

|

| 185 |

+

def generate(self, messages: Messages, llm_settings: LLMSettings) -> Response:

|

| 186 |

+

_system, _message = self._convert_to_platform_messages(messages)

|

| 187 |

+

llm_settings = self._set_max_tokens(llm_settings)

|

| 188 |

+

response = self.client.messages.create(

|

| 189 |

+

model=self.model,

|

| 190 |

+

system=_system,

|

| 191 |

+

messages=_message,

|

| 192 |

+

stream=False,

|

| 193 |

+

**llm_settings,

|

| 194 |

+

)

|

| 195 |

+

return self._convert_to_response(platform_response=response)

|

| 196 |

+

|

| 197 |

+

def generate_stream(self, messages: Any, llm_settings: LLMSettings) -> StreamResponse:

|

| 198 |

+

_system, _message = self._convert_to_platform_messages(messages)

|

| 199 |

+

llm_settings = self._set_max_tokens(llm_settings)

|

| 200 |

+

response = self.client.messages.create(

|

| 201 |

+

model=self.model,

|

| 202 |

+

system=_system,

|

| 203 |

+

messages=_message,

|

| 204 |

+

stream=True,

|

| 205 |

+

**llm_settings,

|

| 206 |

+

)

|

| 207 |

+

return self._convert_to_streamresponse(platform_streamresponse=response)

|

| 208 |

+

|

| 209 |

+

def _set_max_tokens(self, llm_settings: LLMSettings) -> LLMSettings:

|

| 210 |

+

# claudeはmax_tokensが必須

|

| 211 |

+

if not llm_settings.get("max_tokens"):

|

| 212 |

+

cprint(f"max_tokens is not set. Set to {DEFAULT_MAX_TOKENS}.", color="yellow")

|

| 213 |

+

llm_settings["max_tokens"] = DEFAULT_MAX_TOKENS

|

| 214 |

+

return llm_settings

|

neollm/llm/claude/anthropic_llm.py

ADDED

|

@@ -0,0 +1,66 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import Literal, cast, get_args

|

| 2 |

+

|

| 3 |

+

from anthropic import Anthropic

|

| 4 |

+

|

| 5 |

+

from neollm.llm.abstract_llm import AbstractLLM

|

| 6 |

+

from neollm.llm.claude.abstract_claude import AbstractClaude

|

| 7 |

+

from neollm.types import APIPricing, ClientSettings

|

| 8 |

+

from neollm.utils.utils import cprint

|

| 9 |

+

|

| 10 |

+

# price: https://www.anthropic.com/api

|

| 11 |

+

# models: https://docs.anthropic.com/claude/docs/models-overview

|

| 12 |

+

|

| 13 |

+

SUPPORTED_MODELS = Literal[

|

| 14 |

+

"claude-3-opus-20240229",

|

| 15 |

+

"claude-3-sonnet-20240229",

|

| 16 |

+

"claude-3-haiku-20240307",

|

| 17 |

+

]

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

def get_anthoropic_llm(model_name: SUPPORTED_MODELS | str, client_settings: ClientSettings) -> AbstractLLM:

|

| 21 |

+

# Add 日付

|

| 22 |

+

replace_map_for_nodate: dict[str, SUPPORTED_MODELS] = {

|

| 23 |

+

"claude-3-opus": "claude-3-opus-20240229",

|

| 24 |

+

"claude-3-sonnet": "claude-3-sonnet-20240229",

|

| 25 |

+

"claude-3-haiku": "claude-3-haiku-20240307",

|

| 26 |

+

}

|

| 27 |

+

if model_name in replace_map_for_nodate:

|

| 28 |

+

cprint("WARNING: model_nameに日付を指定してください", color="yellow", background=True)

|

| 29 |

+

print(f"model_name: {model_name} -> {replace_map_for_nodate[model_name]}")

|

| 30 |

+

model_name = replace_map_for_nodate[model_name]

|

| 31 |

+

|

| 32 |

+

# map to LLM

|

| 33 |

+

supported_model_map: dict[SUPPORTED_MODELS, AbstractLLM] = {

|

| 34 |

+

"claude-3-opus-20240229": AnthropicClaude3Opus20240229(client_settings),

|

| 35 |

+

"claude-3-sonnet-20240229": AnthropicClaude3Sonnet20240229(client_settings),

|

| 36 |

+

"claude-3-haiku-20240307": AnthropicClaude3Haiku20240229(client_settings),

|

| 37 |

+

}

|

| 38 |

+

if model_name in supported_model_map:

|

| 39 |

+

model_name = cast(SUPPORTED_MODELS, model_name)

|

| 40 |

+

return supported_model_map[model_name]

|

| 41 |

+

raise ValueError(f"model_name must be {get_args(SUPPORTED_MODELS)}, but got {model_name}.")

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

class AnthoropicLLM(AbstractClaude):

|

| 45 |

+

@property

|

| 46 |

+

def client(self) -> Anthropic:

|

| 47 |

+

client = Anthropic(**self.client_settings)

|

| 48 |

+

return client

|

| 49 |

+

|

| 50 |

+

|

| 51 |

+

class AnthropicClaude3Opus20240229(AnthoropicLLM):

|

| 52 |

+

dollar_per_ktoken = APIPricing(input=15 / 1000, output=75 / 1000)

|

| 53 |

+

model: str = "claude-3-opus-20240229"

|

| 54 |

+

context_window: int = 200_000

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

class AnthropicClaude3Sonnet20240229(AnthoropicLLM):

|

| 58 |

+

dollar_per_ktoken = APIPricing(input=3 / 1000, output=15 / 1000)

|

| 59 |

+

model: str = "claude-3-sonnet-20240229"

|

| 60 |

+

context_window: int = 200_000

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

class AnthropicClaude3Haiku20240229(AnthoropicLLM):

|

| 64 |

+

dollar_per_ktoken = APIPricing(input=0.25 / 1000, output=1.25 / 1000)

|

| 65 |

+

model: str = "claude-3-haiku-20240307"

|

| 66 |

+

context_window: int = 200_000

|

neollm/llm/claude/gcp_llm.py

ADDED

|

@@ -0,0 +1,67 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from typing import Literal, cast, get_args

|

| 2 |

+

|

| 3 |

+

from anthropic import AnthropicVertex

|

| 4 |

+

|

| 5 |

+

from neollm.llm.abstract_llm import AbstractLLM

|

| 6 |

+

from neollm.llm.claude.abstract_claude import AbstractClaude

|

| 7 |

+

from neollm.types import APIPricing, ClientSettings

|

| 8 |

+

from neollm.utils.utils import cprint

|

| 9 |

+

|

| 10 |

+

# price: https://www.anthropic.com/api

|

| 11 |

+

# models: https://docs.anthropic.com/claude/docs/models-overview

|

| 12 |

+

|

| 13 |

+

SUPPORTED_MODELS = Literal[

|

| 14 |

+

"claude-3-opus@20240229",

|

| 15 |

+

"claude-3-sonnet@20240229",

|

| 16 |

+

"claude-3-haiku@20240307",

|

| 17 |

+

]

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

# TODO! google 動かしたいね

|

| 21 |

+

def get_gcp_llm(model_name: SUPPORTED_MODELS | str, client_settings: ClientSettings) -> AbstractLLM:

|

| 22 |

+

# Add 日付

|

| 23 |

+

replace_map_for_nodate: dict[str, SUPPORTED_MODELS] = {

|

| 24 |

+

"claude-3-opus": "claude-3-opus@20240229",

|

| 25 |

+

"claude-3-sonnet": "claude-3-sonnet@20240229",

|

| 26 |

+

"claude-3-haiku": "claude-3-haiku@20240307",

|

| 27 |

+

}

|

| 28 |

+

if model_name in replace_map_for_nodate:

|

| 29 |

+

cprint("WARNING: model_nameに日付を指定してください", color="yellow", background=True)

|

| 30 |

+

print(f"model_name: {model_name} -> {replace_map_for_nodate[model_name]}")

|

| 31 |

+

model_name = replace_map_for_nodate[model_name]

|

| 32 |

+

|

| 33 |

+

# map to LLM

|

| 34 |

+

supported_model_map: dict[SUPPORTED_MODELS, AbstractLLM] = {

|

| 35 |

+

"claude-3-opus@20240229": GCPClaude3Opus20240229(client_settings),

|

| 36 |

+

"claude-3-sonnet@20240229": GCPClaude3Sonnet20240229(client_settings),

|

| 37 |

+

"claude-3-haiku@20240307": GCPClaude3Haiku20240229(client_settings),

|

| 38 |

+

}

|

| 39 |

+

if model_name in supported_model_map:

|

| 40 |

+

model_name = cast(SUPPORTED_MODELS, model_name)

|

| 41 |

+

return supported_model_map[model_name]

|

| 42 |

+

raise ValueError(f"model_name must be {get_args(SUPPORTED_MODELS)}, but got {model_name}.")

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

class GoogleLLM(AbstractClaude):

|

| 46 |

+

@property

|

| 47 |

+

def client(self) -> AnthropicVertex:

|

| 48 |

+

client = AnthropicVertex(**self.client_settings)

|

| 49 |

+

return client

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

class GCPClaude3Opus20240229(GoogleLLM):

|

| 53 |

+

dollar_per_ktoken = APIPricing(input=15 / 1000, output=75 / 1000)

|

| 54 |

+

model: str = "claude-3-opus@20240229"

|

| 55 |

+

context_window: int = 200_000

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

class GCPClaude3Sonnet20240229(GoogleLLM):

|

| 59 |

+

dollar_per_ktoken = APIPricing(input=3 / 1000, output=15 / 1000)

|

| 60 |

+

model: str = "claude-3-sonnet@20240229"

|

| 61 |

+

context_window: int = 200_000

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

class GCPClaude3Haiku20240229(GoogleLLM):

|

| 65 |

+

dollar_per_ktoken = APIPricing(input=0.25 / 1000, output=1.25 / 1000)

|

| 66 |

+

model: str = "claude-3-haiku@20240307"

|

| 67 |

+

context_window: int = 200_000

|

neollm/llm/gemini/abstract_gemini.py

ADDED

|

@@ -0,0 +1,229 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import time

|

| 2 |

+

from abc import abstractmethod

|

| 3 |

+

from typing import Iterable, cast

|

| 4 |

+

|

| 5 |

+

from google.cloud.aiplatform_v1beta1.types import CountTokensResponse

|

| 6 |

+

from google.cloud.aiplatform_v1beta1.types.content import Candidate

|

| 7 |

+

from vertexai.generative_models import (

|

| 8 |

+

Content,

|

| 9 |

+

GenerationConfig,

|

| 10 |

+

GenerationResponse,

|

| 11 |

+

GenerativeModel,

|

| 12 |

+

Part,

|

| 13 |

+

)

|

| 14 |

+

from vertexai.generative_models._generative_models import ContentsType

|

| 15 |

+

|

| 16 |

+

from neollm.llm.abstract_llm import AbstractLLM

|

| 17 |

+

from neollm.types import (

|

| 18 |

+

ChatCompletion,

|

| 19 |

+

CompletionUsageForCustomPriceCalculation,

|

| 20 |

+

LLMSettings,

|

| 21 |

+

Message,

|

| 22 |

+

Messages,

|

| 23 |

+

Response,

|

| 24 |

+

StreamResponse,

|

| 25 |

+

)

|

| 26 |

+

from neollm.types.openai.chat_completion import (

|

| 27 |

+

ChatCompletionMessage,

|

| 28 |

+

Choice,

|

| 29 |

+

CompletionUsage,

|

| 30 |

+

)

|

| 31 |

+

from neollm.types.openai.chat_completion import FinishReason as FinishReasonVertex

|

| 32 |

+

from neollm.types.openai.chat_completion_chunk import (

|

| 33 |

+

ChatCompletionChunk,

|

| 34 |

+

ChoiceDelta,

|

| 35 |

+

ChunkChoice,

|

| 36 |

+

)

|

| 37 |

+

from neollm.utils.utils import cprint

|

| 38 |

+

|

| 39 |

+

|

| 40 |

+

class AbstractGemini(AbstractLLM):

|

| 41 |

+

|

| 42 |

+

@abstractmethod

|

| 43 |

+

def generate_config(self, llm_settings: LLMSettings) -> GenerationConfig: ...

|

| 44 |

+

|

| 45 |

+

# 使っていない

|

| 46 |

+

def encode(self, text: str) -> list[int]:

|

| 47 |

+

return [ord(char) for char in text]

|

| 48 |

+

|

| 49 |

+

# 使っていない

|

| 50 |

+

def decode(self, decoded: list[int]) -> str:

|

| 51 |

+

return "".join([chr(number) for number in decoded])

|

| 52 |

+

|

| 53 |

+

def _count_tokens_vertex(self, contents: ContentsType) -> CountTokensResponse:

|

| 54 |

+

model = GenerativeModel(model_name=self.model)

|

| 55 |

+

return cast(CountTokensResponse, model.count_tokens(contents))

|

| 56 |

+

|

| 57 |

+

def count_tokens(self, messages: list[Message] | None = None, only_response: bool = False) -> int:

|

| 58 |

+

"""

|

| 59 |

+

トークン数の計測

|

| 60 |

+

|

| 61 |

+

Args:

|

| 62 |

+

messages (Messages): messages

|

| 63 |

+

|

| 64 |

+

Returns:

|

| 65 |

+

int: トークン数

|

| 66 |

+

"""

|

| 67 |

+

if messages is None:

|

| 68 |

+

return 0

|

| 69 |

+

_system, _message = self._convert_to_platform_messages(messages)

|

| 70 |

+

total_tokens = 0

|

| 71 |

+

if _system:

|

| 72 |

+

total_tokens += int(self._count_tokens_vertex(_system).total_tokens)

|

| 73 |

+

if _message:

|

| 74 |

+

total_tokens = int(self._count_tokens_vertex(_message).total_tokens)

|

| 75 |

+

return total_tokens

|

| 76 |

+

|

| 77 |

+

def _convert_to_platform_messages(self, messages: Messages) -> tuple[str | None, list[Content]]:

|

| 78 |

+

_system = None

|

| 79 |

+

_message: list[Content] = []

|

| 80 |

+

|

| 81 |

+

for message in messages:

|

| 82 |

+

if message["role"] == "system":

|

| 83 |

+

_system = "\n" + message["content"]

|

| 84 |

+

elif message["role"] == "user":

|

| 85 |

+

if isinstance(message["content"], str):

|

| 86 |

+

_message.append(Content(role="user", parts=[Part.from_text(message["content"])]))

|

| 87 |

+

else:

|

| 88 |

+

try:

|

| 89 |

+

if isinstance(message["content"], list) and message["content"][1]["type"] == "image_url":

|

| 90 |

+

encoded_image = message["content"][1]["image_url"]["url"].split(",")[-1]

|

| 91 |

+

_message.append(

|

| 92 |

+

Content(

|

| 93 |

+

role="user",

|

| 94 |

+

parts=[

|

| 95 |

+

Part.from_text(message["content"][0]["text"]),

|

| 96 |

+

Part.from_data(data=encoded_image, mime_type="image/jpeg"),

|

| 97 |

+

],

|

| 98 |

+

)

|

| 99 |

+

)

|

| 100 |

+

except KeyError:

|

| 101 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 102 |

+

except IndexError:

|

| 103 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 104 |

+

except Exception as e:

|

| 105 |

+

cprint(e, color="red", background=True)

|

| 106 |

+

elif message["role"] == "assistant":

|

| 107 |

+

if isinstance(message["content"], str):

|

| 108 |

+

_message.append(Content(role="model", parts=[Part.from_text(message["content"])]))

|

| 109 |

+

else:

|

| 110 |

+

cprint("WARNING: 未対応です", color="yellow", background=True)

|

| 111 |

+

return _system, _message

|

| 112 |

+

|

| 113 |

+

def _convert_finish_reason(self, stop_reason: Candidate.FinishReason) -> FinishReasonVertex | None:

|

| 114 |

+

"""

|

| 115 |

+

参考記事 : https://ai.google.dev/api/python/google/ai/generativelanguage/Candidate/FinishReason

|

| 116 |

+

|

| 117 |

+

0: FINISH_REASON_UNSPECIFIED

|

| 118 |

+

Default value. This value is unused.

|

| 119 |

+

1: STOP

|

| 120 |

+

Natural stop point of the model or provided stop sequence.

|

| 121 |

+

2: MAX_TOKENS

|

| 122 |

+

The maximum number of tokens as specified in the request was reached.

|

| 123 |

+

3: SAFETY

|

| 124 |

+

The candidate content was flagged for safety reasons.

|

| 125 |

+

4: RECITATION

|

| 126 |

+

The candidate content was flagged for recitation reasons.

|

| 127 |

+

5: OTHER

|

| 128 |

+

Unknown reason.

|

| 129 |

+

"""

|

| 130 |

+

|

| 131 |

+

if stop_reason.value in [0, 3, 4, 5]:

|

| 132 |

+

return "stop"

|

| 133 |

+

|

| 134 |

+

if stop_reason.value in [2]:

|

| 135 |

+

return "length"

|

| 136 |

+

|

| 137 |

+

return None

|

| 138 |

+

|

| 139 |

+

def _convert_to_response(

|

| 140 |

+