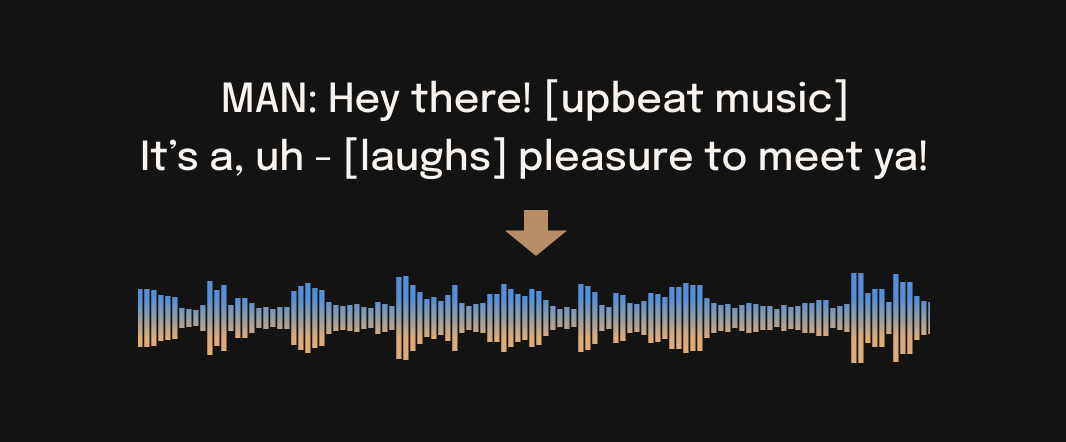

+Bark is a transformer-based text-to-audio model created by [Suno](https://suno.ai). Bark can generate highly realistic, multilingual speech as well as other audio - including music, background noise and simple sound effects. The model can also produce nonverbal communications like laughing, sighing and crying. To support the research community, we are providing access to pretrained model checkpoints, which are ready for inference and available for commercial use.
+
+## ⚠ Disclaimer
+Bark was developed for research purposes. It is not a conventional text-to-speech model but instead a fully generative text-to-audio model, which can deviate in unexpected ways from provided prompts. Suno does not take responsibility for any output generated. Use at your own risk, and please act responsibly.
+
+## 🎧 Demos
+
+- [Hugging Face Spaces](https://huggingface.co/spaces/suno/bark)
+- [Replicate](https://replicate.com/suno-ai/bark)
+- [Google Colab](https://colab.research.google.com/drive/1eJfA2XUa-mXwdMy7DoYKVYHI1iTd9Vkt?usp=sharing)
+
+## 🚀 Updates
+
+**2023.05.01**
+- ©️ Bark is now licensed under the MIT License, meaning it's now available for commercial use!
+- ⚡ 2x speed-up on GPU. 10x speed-up on CPU. We also added an option for a smaller version of Bark, which offers additional speed-up with the trade-off of slightly lower quality.
+- 📕 [Long-form generation](notebooks/long_form_generation.ipynb), voice consistency enhancements and other examples are now documented in a new [notebooks](./notebooks) section.
+- 👥 We created a [voice prompt library](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). We hope this resource helps you find useful prompts for your use cases! You can also join us on [Discord](https://discord.gg/J2B2vsjKuE), where the community actively shares useful prompts in the **#audio-prompts** channel.
+- 💬 Growing community support and access to new features here: [Discord](https://discord.gg/J2B2vsjKuE)
+- 💾 You can now use Bark with GPUs that have low VRAM (<4GB).
+
+**2023.04.20**
+- 🐶 Bark release!
+
+## 🐍 Usage in Python


+### 🪑 Basics

+

+```python
+from bark import SAMPLE_RATE, generate_audio, preload_models
+from scipy.io.wavfile import write as write_wav
+from IPython.display import Audio
+
+# download and load all models
+preload_models()
+
+# generate audio from text
+text_prompt = """
+    Hello, my name is Suno. And, uh — and I like pizza. [laughs]
+    But I also have other interests such as playing tic tac toe.
+"""
+audio_array = generate_audio(text_prompt)
+
+# save audio to disk
+write_wav("bark_generation.wav", SAMPLE_RATE, audio_array)
+
+# play text in notebook
+Audio(audio_array, rate=SAMPLE_RATE)
+```

+

+[pizza.webm](https://user-images.githubusercontent.com/5068315/230490503-417e688d-5115-4eee-9550-b46a2b465ee3.webm)

+


+### 🌎 Foreign Language
+
+Bark supports various languages out-of-the-box and automatically determines language from input text. When prompted with code-switched text, Bark will attempt to employ the native accent for the respective languages. English quality is best for the time being, and we expect other languages to further improve with scaling.
+
+```python
+# Korean: "Chuseok is my favorite holiday. I can rest for a few days
+# and spend time with friends and family."
+text_prompt = """
+    추석은 내가 가장 좋아하는 명절이다. 나는 며칠 동안 휴식을 취하고 친구 및 가족과 시간을 보낼 수 있습니다.
+"""
+audio_array = generate_audio(text_prompt)
+```
+[suno_korean.webm](https://user-images.githubusercontent.com/32879321/235313033-dc4477b9-2da0-4b94-9c8b-a8c2d8f5bb5e.webm)
+
+*Note: since Bark recognizes languages automatically from input text, it is possible to use, for example, a German history prompt with English text. This usually leads to English audio with a German accent.*

+


+### 🎶 Music
+
+Bark can generate all types of audio, and, in principle, doesn't see a difference between speech and music. Sometimes Bark chooses to generate text as music, but you can help it out by adding music notes around your lyrics.
+
+```python
+text_prompt = """
+    ♪ In the jungle, the mighty jungle, the lion barks tonight ♪
+"""
+audio_array = generate_audio(text_prompt)
+```
+[lion.webm](https://user-images.githubusercontent.com/5068315/230684766-97f5ea23-ad99-473c-924b-66b6fab24289.webm)

+


+### Generating Longer Audio

+

+By default, `generate_audio` works well with around 13 seconds of spoken text. For an example of how to do long-form generation, see this [example notebook](notebooks/long_form_generation.ipynb).
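One common way to get past the roughly 13-second limit is to split the script into sentences, generate each sentence separately, and join the pieces with a short pause. The sketch below illustrates that idea only; it is not taken from the linked notebook. The helper names `split_sentences` and `generate_long_form`, the regex-based splitter, and the 0.25-second pause are all assumptions made for illustration. The generator is passed in as a callable so the helper does not depend on Bark being installed.

```python
import re

import numpy as np


def split_sentences(text):
    """Naive splitter (an assumption): break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def generate_long_form(text, generate_fn, sample_rate, pause_s=0.25):
    """Generate audio sentence by sentence and join the pieces with short pauses.

    generate_fn is any callable mapping a text prompt to a 1-D audio array,
    for example Bark's generate_audio.
    """
    silence = np.zeros(int(pause_s * sample_rate), dtype=np.float32)
    pieces = []
    for sentence in split_sentences(text):
        pieces.append(np.asarray(generate_fn(sentence), dtype=np.float32))
        pieces.append(silence)
    if not pieces:
        return np.zeros(0, dtype=np.float32)
    return np.concatenate(pieces)
```

With Bark installed, this could be called as `generate_long_form(script, lambda s: generate_audio(s, history_prompt="v2/en_speaker_1"), SAMPLE_RATE)`. Reusing one preset for every sentence is a reasonable guess at keeping the voice consistent across pieces, not a guarantee.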

+

+### 🎤 Voice Presets

+

+Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of speaker presets [here](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c), or in the [code](bark/assets/prompts). The community also often shares presets in [Discord](https://discord.gg/J2B2vsjKuE).

+

+Bark tries to match the tone, pitch, emotion and prosody of a given preset, but does not currently support custom voice cloning. The model also attempts to preserve music, ambient noise, etc.

+
+```python
+text_prompt = """
+    I have a silky smooth voice, and today I will tell you about
+    the exercise regimen of the common sloth.
+"""
+audio_array = generate_audio(text_prompt, history_prompt="v2/en_speaker_1")
+```
+
+[sloth.webm](https://user-images.githubusercontent.com/5068315/230684883-a344c619-a560-4ff5-8b99-b4463a34487b.webm)
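When switching between voices programmatically, it can help to build the preset string instead of typing it by hand. The sketch below is a hypothetical helper, not part of Bark. It assumes every preset follows the `v2/<lang>_speaker_<n>` pattern seen in `"v2/en_speaker_1"`, and it takes its language codes from the Supported Languages table; check the preset library for the names that actually exist.

```python
# Language codes copied from the "Supported Languages" table.
SUPPORTED_LANGUAGES = (
    "en", "de", "es", "fr", "hi", "it", "ja",
    "ko", "pl", "pt", "ru", "tr", "zh",
)


def preset_name(lang, index):
    """Build a preset identifier such as "v2/en_speaker_1".

    Assumption: presets are named v2/<lang>_speaker_<index>. This helper only
    formats the string; it does not check that the preset exists.
    """
    if lang not in SUPPORTED_LANGUAGES:
        raise ValueError(f"unsupported language code: {lang!r}")
    if index < 0:
        raise ValueError("speaker index must be non-negative")
    return f"v2/{lang}_speaker_{index}"
```

The result can be passed straight to `generate_audio(text_prompt, history_prompt=preset_name("en", 1))`.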

+

+

+

+

+

+## 💻 Installation

+

+```
+pip install git+https://github.com/suno-ai/bark.git
+```
+
+or
+
+```
+git clone https://github.com/suno-ai/bark
+cd bark && pip install .
+```

+*Note: Do NOT use 'pip install bark'. It installs a different package, which is not managed by Suno.*

+

+

+## 🛠️ Hardware and Inference Speed

+

+Bark has been tested and works on both CPU and GPU (`pytorch 2.0+`, CUDA 11.7 and CUDA 12.0).

+

+On enterprise GPUs and PyTorch nightly, Bark can generate audio in roughly real-time. On older GPUs, default Colab, or CPU, inference time might be significantly slower. For older GPUs or CPU you might want to consider using smaller models. Details can be found in our tutorial sections here.

+

+The full version of Bark requires around 12GB of VRAM to hold everything on GPU at the same time.

+To use a smaller version of the models, which should fit into 8GB VRAM, set the environment flag `SUNO_USE_SMALL_MODELS=True`.
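The memory figures above can be turned into a small settings helper. This is a sketch only: the function names are hypothetical, the thresholds simply restate the 12GB and 8GB figures from this section, and `SUNO_OFFLOAD_CPU` is the flag shown in the FAQ. It also assumes the flags are read when `bark` is imported, so they should be applied before the import.

```python
import os


def bark_env_for_vram(vram_gb):
    """Suggest Bark environment flags for a given amount of GPU memory (in GB).

    Thresholds are heuristics taken from the README text, not measured values.
    """
    env = {}
    if vram_gb < 12:
        # The full models need around 12GB, so fall back to the small ones.
        env["SUNO_USE_SMALL_MODELS"] = "True"
    if vram_gb < 8:
        # Small models should fit into 8GB; below that, also offload to CPU.
        env["SUNO_OFFLOAD_CPU"] = "True"
    return env


def apply_bark_env(vram_gb):
    """Write the suggested flags into os.environ and return them."""
    env = bark_env_for_vram(vram_gb)
    os.environ.update(env)  # environment values must be strings
    return env
```

For example, `apply_bark_env(6)` sets both flags, after which `from bark import generate_audio` should pick them up under the assumption stated above.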

+

+If you don't have hardware available or if you want to play with bigger versions of our models, you can also sign up for early access to our model playground [here](https://3os84zs17th.typeform.com/suno-studio).

+

+## ⚙️ Details

+

+Bark is a fully generative text-to-audio model developed for research and demo purposes. It follows a GPT-style architecture similar to [AudioLM](https://arxiv.org/abs/2209.03143) and [Vall-E](https://arxiv.org/abs/2301.02111) and uses a quantized audio representation from [EnCodec](https://github.com/facebookresearch/encodec). It is not a conventional TTS model, but instead a fully generative text-to-audio model capable of deviating in unexpected ways from any given script. Unlike previous approaches, the input text prompt is converted directly to audio without the intermediate use of phonemes. It can therefore generalize to arbitrary instructions beyond speech, such as music lyrics, sound effects or other non-speech sounds.

+

+Below is a list of some known non-speech sounds, but we are finding more every day. Please let us know if you find patterns that work particularly well on [Discord](https://discord.gg/J2B2vsjKuE)!

+

+- `[laughter]`

+- `[laughs]`

+- `[sighs]`

+- `[music]`

+- `[gasps]`

+- `[clears throat]`

+- `—` or `...` for hesitations

+- `♪` for song lyrics

+- CAPITALIZATION for emphasis of a word

+- `[MAN]` and `[WOMAN]` to bias Bark toward male and female speakers, respectively
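The markers in this list are plain text inside the prompt, so they can be assembled with ordinary string handling. The helpers below are a hypothetical sketch (none of these functions exist in Bark) showing one way to wrap lyrics in music notes, capitalize a word for emphasis, and add a speaker hint or a trailing sound tag. How strongly Bark follows any of these markers is not guaranteed.

```python
import re


def sing(lyrics):
    """Wrap lyrics in music notes to nudge the model toward singing."""
    return f"♪ {lyrics.strip()} ♪"


def emphasize(sentence, word):
    """Upper-case every whole-word occurrence of `word` (case-insensitive)."""
    pattern = rf"\b{re.escape(word)}\b"
    return re.sub(pattern, lambda m: m.group(0).upper(), sentence, flags=re.IGNORECASE)


def build_prompt(text, speaker=None, trailing_sound=None):
    """Combine an optional [MAN]/[WOMAN] hint, the text, and an optional sound tag."""
    parts = []
    if speaker is not None:
        tag = speaker.upper()
        if tag not in ("MAN", "WOMAN"):
            raise ValueError("speaker must be 'man' or 'woman'")
        parts.append(f"[{tag}]")
    parts.append(text.strip())
    if trailing_sound is not None:
        parts.append(f"[{trailing_sound}]")
    return " ".join(parts)
```

A prompt built with `build_prompt("I like pizza.", speaker="woman", trailing_sound="laughs")` can be passed to `generate_audio` like any other text prompt.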

+

+### Supported Languages

+

+| Language | Status |
+| --- | --- |
+| English (en) | ✅ |
+| German (de) | ✅ |
+| Spanish (es) | ✅ |
+| French (fr) | ✅ |
+| Hindi (hi) | ✅ |
+| Italian (it) | ✅ |
+| Japanese (ja) | ✅ |
+| Korean (ko) | ✅ |
+| Polish (pl) | ✅ |
+| Portuguese (pt) | ✅ |
+| Russian (ru) | ✅ |
+| Turkish (tr) | ✅ |
+| Chinese, simplified (zh) | ✅ |

+

+Request future language support [here](https://github.com/suno-ai/bark/discussions/111) or in the **#forums** channel on [Discord](https://discord.com/invite/J2B2vsjKuE).

+

+## 🙏 Appreciation

+

+- [nanoGPT](https://github.com/karpathy/nanoGPT) for a dead-simple and blazing fast implementation of GPT-style models

+- [EnCodec](https://github.com/facebookresearch/encodec) for a state-of-the-art implementation of a fantastic audio codec

+- [AudioLM](https://github.com/lucidrains/audiolm-pytorch) for related training and inference code

+- [Vall-E](https://arxiv.org/abs/2301.02111), [AudioLM](https://arxiv.org/abs/2209.03143) and many other ground-breaking papers that enabled the development of Bark

+

+## © License

+

+Bark is licensed under the MIT License.

+

+Please contact us at `bark@suno.ai` to request access to a larger version of the model.

+

+## 📱 Community

+

+- [Twitter](https://twitter.com/OnusFM)

+- [Discord](https://discord.gg/J2B2vsjKuE)

+

+## 🎧 Suno Studio (Early Access)

+

+We’re developing a playground for our models, including Bark.

+

+If you are interested, you can sign up for early access [here](https://3os84zs17th.typeform.com/suno-studio).

+

+## ❓ FAQ

+

+#### How do I specify where models are downloaded and cached?

+* Bark uses Hugging Face to download and store models. You can find more info [here](https://huggingface.co/docs/huggingface_hub/package_reference/environment_variables#hfhome).

+

+

+#### Bark's generations sometimes differ from my prompts. What's happening?

+* Bark is a GPT-style model. As such, it may take some creative liberties in its generations, resulting in higher-variance model outputs than traditional text-to-speech approaches.

+

+#### What voices are supported by Bark?

+* Bark supports 100+ speaker presets across [supported languages](#supported-languages). You can browse the library of speaker presets [here](https://suno-ai.notion.site/8b8e8749ed514b0cbf3f699013548683?v=bc67cff786b04b50b3ceb756fd05f68c). The community also shares presets in [Discord](https://discord.gg/J2B2vsjKuE). Bark also supports generating unique random voices that fit the input text. Bark does not currently support custom voice cloning.

+

+#### Why is the output limited to ~13-14 seconds?

+* Bark is a GPT-style model, and its architecture/context window is optimized to output generations with roughly this length.

+

+#### How much VRAM do I need?

+* The full version of Bark requires around 12GB of memory to hold everything on GPU at the same time. However, even smaller cards down to ~2GB work with some additional settings. Simply add the following code snippet before your generation:

+

+```python
+import os
+
+# environment variables must be strings, not booleans
+os.environ["SUNO_OFFLOAD_CPU"] = "True"
+os.environ["SUNO_USE_SMALL_MODELS"] = "True"
+```

+

+#### My generated audio sounds like a 1980s phone call. What's happening?

+* Bark generates audio from scratch. It is not meant to create only high-fidelity, studio-quality speech. Rather, outputs could be anything from perfect speech to multiple people arguing at a baseball game recorded with bad microphones.

diff --git a/Untitled.ipynb b/Untitled.ipynb

new file mode 100644

index 0000000000000000000000000000000000000000..0b630d951b0e11dc5727e70b6b7361c6f0fc8626

--- /dev/null

+++ b/Untitled.ipynb

@@ -0,0 +1,486 @@

+{

+ "cells": [

+ {

+ "cell_type": "code",

+ "execution_count": 1,

+ "id": "9c4c1f56",

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Ignoring sox: markers 'platform_system == \"Darwin\"' don't match your environment\n",

+ "Ignoring soundfile: markers 'platform_system == \"Windows\"' don't match your environment\n",

+ "Ignoring fairseq: markers 'platform_system == \"Windows\"' don't match your environment\n",

+ "Ignoring fairseq: markers 'platform_system == \"Darwin\"' don't match your environment\n",

+ "Ignoring pywin32: markers 'platform_system == \"Windows\"' don't match your environment\n",

+ "Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from -r old_setup_files/requirements-pip.txt (line 1)) (45.2.0)\n",

+ "Collecting transformers\n",

+ " Downloading transformers-4.31.0-py3-none-any.whl (7.4 MB)\n",

+ "\u001b[K |████████████████████████████████| 7.4 MB 40 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting diffusers\n",

+ " Downloading diffusers-0.19.3-py3-none-any.whl (1.3 MB)\n",

+ "\u001b[K |████████████████████████████████| 1.3 MB 14 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting ffmpeg-downloader\n",

+ " Downloading ffmpeg_downloader-0.2.0-py3-none-any.whl (27 kB)\n",

+ "Collecting ffmpeg\n",

+ " Downloading ffmpeg-1.4.tar.gz (5.1 kB)\n",

+ "Collecting ffmpeg-python\n",

+ " Downloading ffmpeg_python-0.2.0-py3-none-any.whl (25 kB)\n",

+ "Collecting sox\n",

+ " Downloading sox-1.4.1-py2.py3-none-any.whl (39 kB)\n",

+ "Collecting fairseq\n",

+ " Downloading fairseq-0.12.2-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.whl (11.0 MB)\n",

+ "\u001b[K |████████████████████████████████| 11.0 MB 1.1 kB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: librosa in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 13)) (0.8.1)\n",

+ "Collecting boto3\n",

+ " Downloading boto3-1.28.18-py3-none-any.whl (135 kB)\n",

+ "\u001b[K |████████████████████████████████| 135 kB 3.2 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting funcy\n",

+ " Downloading funcy-2.0-py2.py3-none-any.whl (30 kB)\n",

+ "Requirement already satisfied: numpy in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 16)) (1.20.3)\n",

+ "Requirement already satisfied: scipy in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 17)) (1.7.3)\n",

+ "Collecting tokenizers\n",

+ " Downloading tokenizers-0.13.3-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (7.8 MB)\n",

+ "\u001b[K |████████████████████████████████| 7.8 MB 5.1 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: tqdm in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 19)) (4.62.3)\n",

+ "Requirement already satisfied: ipython in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 20)) (8.9.0)\n",

+ "Collecting huggingface_hub>0.15\n",

+ " Downloading huggingface_hub-0.16.4-py3-none-any.whl (268 kB)\n",

+ "\u001b[K |████████████████████████████████| 268 kB 2.5 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: rich in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 22)) (13.3.5)\n",

+ "Collecting pathvalidate\n",

+ " Downloading pathvalidate-3.1.0-py3-none-any.whl (21 kB)\n",

+ "Collecting rich-argparse\n",

+ " Downloading rich_argparse-1.2.0-py3-none-any.whl (16 kB)\n",

+ "Collecting encodec\n",

+ " Downloading encodec-0.1.1.tar.gz (3.7 MB)\n",

+ "\u001b[K |████████████████████████████████| 3.7 MB 2.8 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: chardet in /usr/lib/python3/dist-packages (from -r old_setup_files/requirements-pip.txt (line 26)) (3.0.4)\n",

+ "Collecting pydub\n",

+ " Downloading pydub-0.25.1-py2.py3-none-any.whl (32 kB)\n",

+ "Requirement already satisfied: requests in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 28)) (2.28.2)\n",

+ "Collecting audio2numpy\n",

+ " Downloading audio2numpy-0.1.2-py3-none-any.whl (10 kB)\n",

+ "Collecting faiss-cpu\n",

+ " Downloading faiss_cpu-1.7.4-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (17.6 MB)\n",

+ "\u001b[K |████████████████████████████████| 17.6 MB 69 kB/s eta 0:00:01 |███████████▍ | 6.2 MB 13.9 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: joblib in /home/jamal/.local/lib/python3.8/site-packages (from -r old_setup_files/requirements-pip.txt (line 31)) (1.3.1)\n",

+ "Collecting audiolm-pytorch\n",

+ " Downloading audiolm_pytorch-1.2.24-py3-none-any.whl (40 kB)\n",

+ "\u001b[K |████████████████████████████████| 40 kB 192 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting universal-startfile\n",

+ " Downloading universal_startfile-0.2-py3-none-any.whl (3.4 kB)\n",

+ "Collecting gradio>=3.34.0\n",

+ " Downloading gradio-3.39.0-py3-none-any.whl (19.9 MB)\n",

+ "\u001b[K |████████████████████████████████| 19.9 MB 2.9 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: pyyaml>=5.1 in /usr/lib/python3/dist-packages (from transformers->-r old_setup_files/requirements-pip.txt (line 2)) (5.3.1)\n",

+ "Requirement already satisfied: filelock in /home/jamal/.local/lib/python3.8/site-packages (from transformers->-r old_setup_files/requirements-pip.txt (line 2)) (3.12.2)\n",

+ "Collecting regex!=2019.12.17\n",

+ " Downloading regex-2023.6.3-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (772 kB)\n",

+ "\u001b[K |████████████████████████████████| 772 kB 3.0 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting safetensors>=0.3.1\n",

+ " Downloading safetensors-0.3.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.3 MB)\n",

+ "\u001b[K |████████████████████████████████| 1.3 MB 3.1 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: packaging>=20.0 in /home/jamal/.local/lib/python3.8/site-packages (from transformers->-r old_setup_files/requirements-pip.txt (line 2)) (23.0)\n",

+ "Requirement already satisfied: importlib-metadata in /home/jamal/.local/lib/python3.8/site-packages (from diffusers->-r old_setup_files/requirements-pip.txt (line 3)) (6.0.0)\n",

+ "Requirement already satisfied: Pillow in /home/jamal/.local/lib/python3.8/site-packages (from diffusers->-r old_setup_files/requirements-pip.txt (line 3)) (8.4.0)\n",

+ "Collecting appdirs\n",

+ " Downloading appdirs-1.4.4-py2.py3-none-any.whl (9.6 kB)\n",

+ "Requirement already satisfied: future in /usr/lib/python3/dist-packages (from ffmpeg-python->-r old_setup_files/requirements-pip.txt (line 6)) (0.18.2)\n",

+ "Collecting omegaconf<2.1\n",

+ " Downloading omegaconf-2.0.6-py3-none-any.whl (36 kB)\n",

+ "Collecting cython\n",

+ " Downloading Cython-3.0.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (3.6 MB)\n",

+ "\u001b[K |████████████████████████████████| 3.6 MB 3.6 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: cffi in /home/jamal/.local/lib/python3.8/site-packages (from fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (1.15.1)\n",

+ "Requirement already satisfied: torch in /home/jamal/.local/lib/python3.8/site-packages (from fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (2.0.1)\n",

+ "Collecting sacrebleu>=1.4.12\n",

+ " Downloading sacrebleu-2.3.1-py3-none-any.whl (118 kB)\n",

+ "\u001b[K |████████████████████████████████| 118 kB 3.1 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting torchaudio>=0.8.0\n",

+ " Downloading torchaudio-2.0.2-cp38-cp38-manylinux1_x86_64.whl (4.4 MB)\n",

+ "\u001b[K |████████████████████████████████| 4.4 MB 572 kB/s eta 0:00:01 |█████████████████████ | 2.9 MB 2.0 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting hydra-core<1.1,>=1.0.7\n",

+ " Downloading hydra_core-1.0.7-py3-none-any.whl (123 kB)\n",

+ "\u001b[K |████████████████████████████████| 123 kB 1.9 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting bitarray\n",

+ " Downloading bitarray-2.8.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (283 kB)\n",

+ "\u001b[K |████████████████████████████████| 283 kB 2.4 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: audioread>=2.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (3.0.0)\n",

+ "Requirement already satisfied: soundfile>=0.10.2 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (0.10.3.post1)\n",

+ "Requirement already satisfied: pooch>=1.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (1.7.0)\n",

+ "Requirement already satisfied: scikit-learn!=0.19.0,>=0.14.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (1.0.2)\n",

+ "Requirement already satisfied: numba>=0.43.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (0.57.1)\n",

+ "Requirement already satisfied: decorator>=3.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (5.1.1)\n",

+ "Requirement already satisfied: resampy>=0.2.2 in /home/jamal/.local/lib/python3.8/site-packages (from librosa->-r old_setup_files/requirements-pip.txt (line 13)) (0.4.2)\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Collecting botocore<1.32.0,>=1.31.18\n",

+ " Downloading botocore-1.31.18-py3-none-any.whl (11.1 MB)\n",

+ "\u001b[K |████████████████████████████████| 11.1 MB 1.2 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting s3transfer<0.7.0,>=0.6.0\n",

+ " Downloading s3transfer-0.6.1-py3-none-any.whl (79 kB)\n",

+ "\u001b[K |████████████████████████████████| 79 kB 964 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting jmespath<2.0.0,>=0.7.1\n",

+ " Downloading jmespath-1.0.1-py3-none-any.whl (20 kB)\n",

+ "Requirement already satisfied: backcall in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.2.0)\n",

+ "Requirement already satisfied: pygments>=2.4.0 in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (2.14.0)\n",

+ "Requirement already satisfied: jedi>=0.16 in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.18.2)\n",

+ "Requirement already satisfied: stack-data in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.6.2)\n",

+ "Requirement already satisfied: traitlets>=5 in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (5.9.0)\n",

+ "Requirement already satisfied: pexpect>4.3; sys_platform != \"win32\" in /usr/lib/python3/dist-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (4.6.0)\n",

+ "Requirement already satisfied: matplotlib-inline in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.1.6)\n",

+ "Requirement already satisfied: pickleshare in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.7.5)\n",

+ "Requirement already satisfied: prompt-toolkit<3.1.0,>=3.0.30 in /home/jamal/.local/lib/python3.8/site-packages (from ipython->-r old_setup_files/requirements-pip.txt (line 20)) (3.0.36)\n",

+ "Collecting fsspec\n",

+ " Downloading fsspec-2023.6.0-py3-none-any.whl (163 kB)\n",

+ "\u001b[K |████████████████████████████████| 163 kB 3.0 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: typing-extensions>=3.7.4.3 in /home/jamal/.local/lib/python3.8/site-packages (from huggingface_hub>0.15->-r old_setup_files/requirements-pip.txt (line 21)) (4.5.0)\n",

+ "Requirement already satisfied: markdown-it-py<3.0.0,>=2.2.0 in /home/jamal/.local/lib/python3.8/site-packages (from rich->-r old_setup_files/requirements-pip.txt (line 22)) (2.2.0)\n",

+ "Collecting einops\n",

+ " Downloading einops-0.6.1-py3-none-any.whl (42 kB)\n",

+ "\u001b[K |████████████████████████████████| 42 kB 143 kB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: charset-normalizer<4,>=2 in /home/jamal/.local/lib/python3.8/site-packages (from requests->-r old_setup_files/requirements-pip.txt (line 28)) (3.0.1)\n",

+ "Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests->-r old_setup_files/requirements-pip.txt (line 28)) (2.8)\n",

+ "Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests->-r old_setup_files/requirements-pip.txt (line 28)) (2019.11.28)\n",

+ "Requirement already satisfied: urllib3<1.27,>=1.21.1 in /home/jamal/.local/lib/python3.8/site-packages (from requests->-r old_setup_files/requirements-pip.txt (line 28)) (1.26.7)\n",

+ "Collecting sentencepiece\n",

+ " Downloading sentencepiece-0.1.99-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.3 MB)\n",

+ "\u001b[K |████████████████████████████████| 1.3 MB 3.1 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting lion-pytorch\n",

+ " Downloading lion_pytorch-0.1.2-py3-none-any.whl (4.4 kB)\n",

+ "Collecting ema-pytorch>=0.2.2\n",

+ " Downloading ema_pytorch-0.2.3-py3-none-any.whl (4.4 kB)\n",

+ "Collecting vector-quantize-pytorch>=1.5.14\n",

+ " Downloading vector_quantize_pytorch-1.6.30-py3-none-any.whl (13 kB)\n",

+ "Collecting local-attention>=1.8.4\n",

+ " Downloading local_attention-1.8.6-py3-none-any.whl (8.1 kB)\n",

+ "Collecting beartype\n",

+ " Downloading beartype-0.15.0-py3-none-any.whl (777 kB)\n",

+ "\u001b[K |████████████████████████████████| 777 kB 3.2 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting accelerate\n",

+ " Downloading accelerate-0.21.0-py3-none-any.whl (244 kB)\n",

+ "\u001b[K |████████████████████████████████| 244 kB 2.4 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting aiohttp~=3.0\n",

+ " Downloading aiohttp-3.8.5-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.1 MB)\n",

+ "\u001b[K |████████████████████████████████| 1.1 MB 2.7 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: pandas<3.0,>=1.0 in /home/jamal/.local/lib/python3.8/site-packages (from gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (1.5.3)\n",

+ "Collecting orjson~=3.0\n",

+ " Downloading orjson-3.9.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (138 kB)\n",

+ "\u001b[K |████████████████████████████████| 138 kB 2.8 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting python-multipart\n",

+ " Downloading python_multipart-0.0.6-py3-none-any.whl (45 kB)\n",

+ "\u001b[K |████████████████████████████████| 45 kB 486 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting fastapi\n",

+ " Downloading fastapi-0.100.1-py3-none-any.whl (65 kB)\n",

+ "\u001b[K |████████████████████████████████| 65 kB 530 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting uvicorn>=0.14.0\n",

+ " Downloading uvicorn-0.23.2-py3-none-any.whl (59 kB)\n",

+ "\u001b[K |████████████████████████████████| 59 kB 1.0 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: jinja2<4.0 in /home/jamal/.local/lib/python3.8/site-packages (from gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (3.1.2)\n",

+ "Collecting aiofiles<24.0,>=22.0\n",

+ " Downloading aiofiles-23.1.0-py3-none-any.whl (14 kB)\n",

+ "Collecting altair<6.0,>=4.2.0\n",

+ " Downloading altair-5.0.1-py3-none-any.whl (471 kB)\n",

+ "\u001b[K |████████████████████████████████| 471 kB 3.0 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting mdit-py-plugins<=0.3.3\n",

+ " Downloading mdit_py_plugins-0.3.3-py3-none-any.whl (50 kB)\n",

+ "\u001b[K |████████████████████████████████| 50 kB 777 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting ffmpy\n",

+ " Downloading ffmpy-0.3.1.tar.gz (5.5 kB)\n",

+ "Collecting pydantic!=1.8,!=1.8.1,!=2.0.0,!=2.0.1,<3.0.0,>=1.7.4\n",

+ " Downloading pydantic-2.1.1-py3-none-any.whl (370 kB)\n",

+ "\u001b[K |████████████████████████████████| 370 kB 3.2 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting httpx\n",

+ " Downloading httpx-0.24.1-py3-none-any.whl (75 kB)\n",

+ "\u001b[K |████████████████████████████████| 75 kB 593 kB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: websockets<12.0,>=10.0 in /home/jamal/.local/lib/python3.8/site-packages (from gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (11.0.3)\n",

+ "Requirement already satisfied: markupsafe~=2.0 in /home/jamal/.local/lib/python3.8/site-packages (from gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (2.1.2)\n",

+ "Collecting gradio-client>=0.3.0\n",

+ " Downloading gradio_client-0.3.0-py3-none-any.whl (294 kB)\n",

+ "\u001b[K |████████████████████████████████| 294 kB 2.2 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting semantic-version~=2.0\n",

+ " Downloading semantic_version-2.10.0-py2.py3-none-any.whl (15 kB)\n",

+ "Requirement already satisfied: matplotlib~=3.0 in /home/jamal/.local/lib/python3.8/site-packages (from gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (3.5.1)\n",

+ "Requirement already satisfied: zipp>=0.5 in /usr/lib/python3/dist-packages (from importlib-metadata->diffusers->-r old_setup_files/requirements-pip.txt (line 3)) (1.0.0)\n",

+ "Requirement already satisfied: pycparser in /home/jamal/.local/lib/python3.8/site-packages (from cffi->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (2.21)\n",

+ "Requirement already satisfied: sympy in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (1.12)\n",

+ "Requirement already satisfied: nvidia-curand-cu11==10.2.10.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (10.2.10.91)\n",

+ "Requirement already satisfied: nvidia-cusparse-cu11==11.7.4.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.7.4.91)\n",

+ "Requirement already satisfied: nvidia-cuda-runtime-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.7.99)\n",

+ "Requirement already satisfied: nvidia-nvtx-cu11==11.7.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.7.91)\n",

+ "Requirement already satisfied: networkx in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (3.1)\n",

+ "Requirement already satisfied: nvidia-cublas-cu11==11.10.3.66; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.10.3.66)\n",

+ "Requirement already satisfied: nvidia-cusolver-cu11==11.4.0.1; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.4.0.1)\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Requirement already satisfied: triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (2.0.0)\n",

+ "Requirement already satisfied: nvidia-cufft-cu11==10.9.0.58; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (10.9.0.58)\n",

+ "Requirement already satisfied: nvidia-nccl-cu11==2.14.3; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (2.14.3)\n",

+ "Requirement already satisfied: nvidia-cuda-cupti-cu11==11.7.101; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.7.101)\n",

+ "Requirement already satisfied: nvidia-cudnn-cu11==8.5.0.96; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (8.5.0.96)\n",

+ "Requirement already satisfied: nvidia-cuda-nvrtc-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (11.7.99)\n",

+ "Requirement already satisfied: colorama in /usr/lib/python3/dist-packages (from sacrebleu>=1.4.12->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (0.4.3)\n",

+ "Collecting lxml\n",

+ " Downloading lxml-4.9.3-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.manylinux_2_24_x86_64.whl (7.1 MB)\n",

+ "\u001b[K |████████████████████████████████| 7.1 MB 2.8 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting tabulate>=0.8.9\n",

+ " Downloading tabulate-0.9.0-py3-none-any.whl (35 kB)\n",

+ "Collecting portalocker\n",

+ " Downloading portalocker-2.7.0-py2.py3-none-any.whl (15 kB)\n",

+ "Requirement already satisfied: importlib-resources; python_version < \"3.9\" in /home/jamal/.local/lib/python3.8/site-packages (from hydra-core<1.1,>=1.0.7->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (5.10.2)\n",

+ "Collecting antlr4-python3-runtime==4.8\n",

+ " Downloading antlr4-python3-runtime-4.8.tar.gz (112 kB)\n",

+ "\u001b[K |████████████████████████████████| 112 kB 3.2 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: platformdirs>=2.5.0 in /home/jamal/.local/lib/python3.8/site-packages (from pooch>=1.0->librosa->-r old_setup_files/requirements-pip.txt (line 13)) (2.6.2)\n",

+ "Requirement already satisfied: threadpoolctl>=2.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from scikit-learn!=0.19.0,>=0.14.0->librosa->-r old_setup_files/requirements-pip.txt (line 13)) (3.2.0)\n",

+ "Requirement already satisfied: llvmlite<0.41,>=0.40.0dev0 in /home/jamal/.local/lib/python3.8/site-packages (from numba>=0.43.0->librosa->-r old_setup_files/requirements-pip.txt (line 13)) (0.40.1)\n",

+ "Requirement already satisfied: python-dateutil<3.0.0,>=2.1 in /home/jamal/.local/lib/python3.8/site-packages (from botocore<1.32.0,>=1.31.18->boto3->-r old_setup_files/requirements-pip.txt (line 14)) (2.8.2)\n",

+ "Requirement already satisfied: parso<0.9.0,>=0.8.0 in /home/jamal/.local/lib/python3.8/site-packages (from jedi>=0.16->ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.8.3)\n",

+ "Requirement already satisfied: executing>=1.2.0 in /home/jamal/.local/lib/python3.8/site-packages (from stack-data->ipython->-r old_setup_files/requirements-pip.txt (line 20)) (1.2.0)\n",

+ "Requirement already satisfied: asttokens>=2.1.0 in /home/jamal/.local/lib/python3.8/site-packages (from stack-data->ipython->-r old_setup_files/requirements-pip.txt (line 20)) (2.2.1)\n",

+ "Requirement already satisfied: pure-eval in /home/jamal/.local/lib/python3.8/site-packages (from stack-data->ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.2.2)\n",

+ "Requirement already satisfied: wcwidth in /home/jamal/.local/lib/python3.8/site-packages (from prompt-toolkit<3.1.0,>=3.0.30->ipython->-r old_setup_files/requirements-pip.txt (line 20)) (0.2.6)\n",

+ "Requirement already satisfied: mdurl~=0.1 in /home/jamal/.local/lib/python3.8/site-packages (from markdown-it-py<3.0.0,>=2.2.0->rich->-r old_setup_files/requirements-pip.txt (line 22)) (0.1.2)\n",

+ "Requirement already satisfied: psutil in /usr/lib/python3/dist-packages (from accelerate->audiolm-pytorch->-r old_setup_files/requirements-pip.txt (line 32)) (5.5.1)\n",

+ "Collecting frozenlist>=1.1.1\n",

+ " Downloading frozenlist-1.4.0-cp38-cp38-manylinux_2_5_x86_64.manylinux1_x86_64.manylinux_2_17_x86_64.manylinux2014_x86_64.whl (220 kB)\n",

+ "\u001b[K |████████████████████████████████| 220 kB 3.1 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: attrs>=17.3.0 in /usr/lib/python3/dist-packages (from aiohttp~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (19.3.0)\n",

+ "Collecting aiosignal>=1.1.2\n",

+ " Downloading aiosignal-1.3.1-py3-none-any.whl (7.6 kB)\n",

+ "Requirement already satisfied: multidict<7.0,>=4.5 in /home/jamal/.local/lib/python3.8/site-packages (from aiohttp~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (6.0.4)\n",

+ "Collecting async-timeout<5.0,>=4.0.0a3\n",

+ " Downloading async_timeout-4.0.2-py3-none-any.whl (5.8 kB)\n",

+ "Collecting yarl<2.0,>=1.0\n",

+ " Downloading yarl-1.9.2-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (266 kB)\n",

+ "\u001b[K |████████████████████████████████| 266 kB 2.7 MB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: pytz>=2020.1 in /home/jamal/.local/lib/python3.8/site-packages (from pandas<3.0,>=1.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (2022.7.1)\n",

+ "Collecting starlette<0.28.0,>=0.27.0\n",

+ " Downloading starlette-0.27.0-py3-none-any.whl (66 kB)\n",

+ "\u001b[K |████████████████████████████████| 66 kB 574 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting h11>=0.8\n",

+ " Downloading h11-0.14.0-py3-none-any.whl (58 kB)\n",

+ "\u001b[K |████████████████████████████████| 58 kB 719 kB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: click>=7.0 in /usr/lib/python3/dist-packages (from uvicorn>=0.14.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (7.0)\n",

+ "Requirement already satisfied: jsonschema>=3.0 in /home/jamal/.local/lib/python3.8/site-packages (from altair<6.0,>=4.2.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (4.17.3)\n",

+ "Collecting toolz\n",

+ " Downloading toolz-0.12.0-py3-none-any.whl (55 kB)\n",

+ "\u001b[K |████████████████████████████████| 55 kB 606 kB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting pydantic-core==2.4.0\n",

+ " Downloading pydantic_core-2.4.0-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl (1.9 MB)\n",

+ "\u001b[K |████████████████████████████████| 1.9 MB 2.6 MB/s eta 0:00:01\n",

+ "\u001b[?25hCollecting annotated-types>=0.4.0\n",

+ " Downloading annotated_types-0.5.0-py3-none-any.whl (11 kB)\n",

+ "Requirement already satisfied: sniffio in /home/jamal/.local/lib/python3.8/site-packages (from httpx->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (1.3.0)\n",

+ "Collecting httpcore<0.18.0,>=0.15.0\n",

+ " Downloading httpcore-0.17.3-py3-none-any.whl (74 kB)\n",

+ "\u001b[K |████████████████████████████████| 74 kB 471 kB/s eta 0:00:01\n",

+ "\u001b[?25hRequirement already satisfied: pyparsing>=2.2.1 in /usr/lib/python3/dist-packages (from matplotlib~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (2.4.6)\n",

+ "Requirement already satisfied: cycler>=0.10 in /home/jamal/.local/lib/python3.8/site-packages (from matplotlib~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (0.11.0)\n",

+ "Requirement already satisfied: fonttools>=4.22.0 in /home/jamal/.local/lib/python3.8/site-packages (from matplotlib~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (4.38.0)\n",

+ "Requirement already satisfied: kiwisolver>=1.0.1 in /home/jamal/.local/lib/python3.8/site-packages (from matplotlib~=3.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (1.4.4)\n",

+ "Requirement already satisfied: mpmath>=0.19 in /home/jamal/.local/lib/python3.8/site-packages (from sympy->torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (1.3.0)\n",

+ "Requirement already satisfied: wheel in /usr/lib/python3/dist-packages (from nvidia-curand-cu11==10.2.10.91; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (0.34.2)\n",

+ "Requirement already satisfied: cmake in /home/jamal/.local/lib/python3.8/site-packages (from triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (3.27.0)\n",

+ "Requirement already satisfied: lit in /home/jamal/.local/lib/python3.8/site-packages (from triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->fairseq->-r old_setup_files/requirements-pip.txt (line 12)) (16.0.6)\n",

+ "Requirement already satisfied: six>=1.5 in /usr/lib/python3/dist-packages (from python-dateutil<3.0.0,>=2.1->botocore<1.32.0,>=1.31.18->boto3->-r old_setup_files/requirements-pip.txt (line 14)) (1.14.0)\n",

+ "Requirement already satisfied: anyio<5,>=3.4.0 in /home/jamal/.local/lib/python3.8/site-packages (from starlette<0.28.0,>=0.27.0->fastapi->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (3.6.2)\n",

+ "Requirement already satisfied: pkgutil-resolve-name>=1.3.10; python_version < \"3.9\" in /home/jamal/.local/lib/python3.8/site-packages (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (1.3.10)\n",

+ "Requirement already satisfied: pyrsistent!=0.17.0,!=0.17.1,!=0.17.2,>=0.14.0 in /usr/lib/python3/dist-packages (from jsonschema>=3.0->altair<6.0,>=4.2.0->gradio>=3.34.0->-r old_setup_files/requirements-pip.txt (line 34)) (0.15.5)\n",

+ "Building wheels for collected packages: ffmpeg, encodec, ffmpy, antlr4-python3-runtime\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ " Building wheel for ffmpeg (setup.py) ... \u001b[?25ldone\n",

+ "\u001b[?25h Created wheel for ffmpeg: filename=ffmpeg-1.4-py3-none-any.whl size=6083 sha256=19514e448b6bdeb0af7ec0711ab1153c6ed7a4c2c94d70be977b7c7fe20bb378\n",

+ " Stored in directory: /home/jamal/.cache/pip/wheels/30/33/46/5ab7eca55b9490dddbf3441c68a29535996270ef1ce8b9b6d7\n",

+ " Building wheel for encodec (setup.py) ... \u001b[?25ldone\n",

+ "\u001b[?25h Created wheel for encodec: filename=encodec-0.1.1-py3-none-any.whl size=45768 sha256=5be14b57922136d74f505c3f00b442410ed01e1df97724788ca60a81ae4c88c3\n",

+ " Stored in directory: /home/jamal/.cache/pip/wheels/83/ca/c5/2770ecff40c79307803c30f8d4c5dcb533722f5f7c049ee9db\n",

+ " Building wheel for ffmpy (setup.py) ... \u001b[?25ldone\n",

+ "\u001b[?25h Created wheel for ffmpy: filename=ffmpy-0.3.1-py3-none-any.whl size=5580 sha256=30a6f174f3c528a86e4132068eecaac56ddfdca93b4128ed8631c5f17707f7d7\n",

+ " Stored in directory: /home/jamal/.cache/pip/wheels/75/a3/1a/2f3f90b9a4eb0408109ae1b5bae01efbdf8ab4ef98797433e4\n",

+ " Building wheel for antlr4-python3-runtime (setup.py) ... \u001b[?25ldone\n",

+ "\u001b[?25h Created wheel for antlr4-python3-runtime: filename=antlr4_python3_runtime-4.8-py3-none-any.whl size=141230 sha256=24d2cd414c2d61b3f9bc6b30748b85ab68aa6d00c4ed7145823ba466469b0796\n",

+ " Stored in directory: /home/jamal/.cache/pip/wheels/c8/d0/ab/d43c02eaddc5b9004db86950802442ad9a26f279c619e28da0\n",

+ "Successfully built ffmpeg encodec ffmpy antlr4-python3-runtime\n",

+ "\u001b[31mERROR: pydantic-core 2.4.0 has requirement typing-extensions!=4.7.0,>=4.6.0, but you'll have typing-extensions 4.5.0 which is incompatible.\u001b[0m\n",

+ "\u001b[31mERROR: pydantic 2.1.1 has requirement typing-extensions>=4.6.1, but you'll have typing-extensions 4.5.0 which is incompatible.\u001b[0m\n",

+ "Installing collected packages: regex, fsspec, huggingface-hub, safetensors, tokenizers, transformers, diffusers, appdirs, ffmpeg-downloader, ffmpeg, ffmpeg-python, sox, omegaconf, cython, lxml, tabulate, portalocker, sacrebleu, torchaudio, antlr4-python3-runtime, hydra-core, bitarray, fairseq, jmespath, botocore, s3transfer, boto3, funcy, pathvalidate, rich-argparse, einops, encodec, pydub, audio2numpy, faiss-cpu, sentencepiece, lion-pytorch, ema-pytorch, vector-quantize-pytorch, local-attention, beartype, accelerate, audiolm-pytorch, universal-startfile, frozenlist, aiosignal, async-timeout, yarl, aiohttp, orjson, python-multipart, pydantic-core, annotated-types, pydantic, starlette, fastapi, h11, uvicorn, aiofiles, toolz, altair, mdit-py-plugins, ffmpy, httpcore, httpx, gradio-client, semantic-version, gradio\n",

+ "Successfully installed accelerate-0.21.0 aiofiles-23.1.0 aiohttp-3.8.5 aiosignal-1.3.1 altair-5.0.1 annotated-types-0.5.0 antlr4-python3-runtime-4.8 appdirs-1.4.4 async-timeout-4.0.2 audio2numpy-0.1.2 audiolm-pytorch-1.2.24 beartype-0.15.0 bitarray-2.8.0 boto3-1.28.18 botocore-1.31.18 cython-3.0.0 diffusers-0.19.3 einops-0.6.1 ema-pytorch-0.2.3 encodec-0.1.1 fairseq-0.12.2 faiss-cpu-1.7.4 fastapi-0.100.1 ffmpeg-1.4 ffmpeg-downloader-0.2.0 ffmpeg-python-0.2.0 ffmpy-0.3.1 frozenlist-1.4.0 fsspec-2023.6.0 funcy-2.0 gradio-3.39.0 gradio-client-0.3.0 h11-0.14.0 httpcore-0.17.3 httpx-0.24.1 huggingface-hub-0.16.4 hydra-core-1.0.7 jmespath-1.0.1 lion-pytorch-0.1.2 local-attention-1.8.6 lxml-4.9.3 mdit-py-plugins-0.3.3 omegaconf-2.0.6 orjson-3.9.2 pathvalidate-3.1.0 portalocker-2.7.0 pydantic-2.1.1 pydantic-core-2.4.0 pydub-0.25.1 python-multipart-0.0.6 regex-2023.6.3 rich-argparse-1.2.0 s3transfer-0.6.1 sacrebleu-2.3.1 safetensors-0.3.1 semantic-version-2.10.0 sentencepiece-0.1.99 sox-1.4.1 starlette-0.27.0 tabulate-0.9.0 tokenizers-0.13.3 toolz-0.12.0 torchaudio-2.0.2 transformers-4.31.0 universal-startfile-0.2 uvicorn-0.23.2 vector-quantize-pytorch-1.6.30 yarl-1.9.2\n",

+ "Requirement already satisfied: encodec in /home/jamal/.local/lib/python3.8/site-packages (0.1.1)\n",

+ "Requirement already satisfied: rich-argparse in /home/jamal/.local/lib/python3.8/site-packages (1.2.0)\n",

+ "Requirement already satisfied: librosa in /home/jamal/.local/lib/python3.8/site-packages (0.8.1)\n",

+ "Requirement already satisfied: pydub in /home/jamal/.local/lib/python3.8/site-packages (0.25.1)\n",

+ "Collecting devtools\n",

+ " Downloading devtools-0.11.0-py3-none-any.whl (19 kB)\n",

+ "Requirement already satisfied: einops in /home/jamal/.local/lib/python3.8/site-packages (from encodec) (0.6.1)\n",

+ "Requirement already satisfied: numpy in /home/jamal/.local/lib/python3.8/site-packages (from encodec) (1.20.3)\n",

+ "Requirement already satisfied: torch in /home/jamal/.local/lib/python3.8/site-packages (from encodec) (2.0.1)\n",

+ "Requirement already satisfied: torchaudio in /home/jamal/.local/lib/python3.8/site-packages (from encodec) (2.0.2)\n",

+ "Requirement already satisfied: rich>=11.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from rich-argparse) (13.3.5)\n",

+ "Requirement already satisfied: numba>=0.43.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (0.57.1)\n",

+ "Requirement already satisfied: joblib>=0.14 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (1.3.1)\n",

+ "Requirement already satisfied: soundfile>=0.10.2 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (0.10.3.post1)\n",

+ "Requirement already satisfied: packaging>=20.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (23.0)\n",

+ "Requirement already satisfied: resampy>=0.2.2 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (0.4.2)\n",

+ "Requirement already satisfied: pooch>=1.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (1.7.0)\n",

+ "Requirement already satisfied: scipy>=1.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (1.7.3)\n",

+ "Requirement already satisfied: decorator>=3.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (5.1.1)\n",

+ "Requirement already satisfied: scikit-learn!=0.19.0,>=0.14.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (1.0.2)\n",

+ "Requirement already satisfied: audioread>=2.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from librosa) (3.0.0)\n",

+ "Requirement already satisfied: executing>=1.1.1 in /home/jamal/.local/lib/python3.8/site-packages (from devtools) (1.2.0)\n",

+ "Requirement already satisfied: asttokens<3.0.0,>=2.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from devtools) (2.2.1)\n",

+ "Requirement already satisfied: nvidia-cuda-nvrtc-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.7.99)\n",

+ "Requirement already satisfied: nvidia-nccl-cu11==2.14.3; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (2.14.3)\n",

+ "Requirement already satisfied: nvidia-cuda-runtime-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.7.99)\n",

+ "Requirement already satisfied: nvidia-nvtx-cu11==11.7.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.7.91)\n",

+ "Requirement already satisfied: nvidia-cublas-cu11==11.10.3.66; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.10.3.66)\n",

+ "Requirement already satisfied: nvidia-curand-cu11==10.2.10.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (10.2.10.91)\n",

+ "Requirement already satisfied: nvidia-cufft-cu11==10.9.0.58; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (10.9.0.58)\n",

+ "Requirement already satisfied: nvidia-cusolver-cu11==11.4.0.1; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.4.0.1)\n",

+ "Requirement already satisfied: nvidia-cudnn-cu11==8.5.0.96; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (8.5.0.96)\n",

+ "Requirement already satisfied: networkx in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (3.1)\n",

+ "Requirement already satisfied: nvidia-cuda-cupti-cu11==11.7.101; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.7.101)\n",

+ "Requirement already satisfied: sympy in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (1.12)\n",

+ "Requirement already satisfied: filelock in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (3.12.2)\n",

+ "Requirement already satisfied: nvidia-cusparse-cu11==11.7.4.91; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (11.7.4.91)\n",

+ "Requirement already satisfied: triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\" in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (2.0.0)\n"

+ ]

+ },

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Requirement already satisfied: jinja2 in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (3.1.2)\n",

+ "Requirement already satisfied: typing-extensions in /home/jamal/.local/lib/python3.8/site-packages (from torch->encodec) (4.5.0)\n",

+ "Requirement already satisfied: pygments<3.0.0,>=2.13.0 in /home/jamal/.local/lib/python3.8/site-packages (from rich>=11.0.0->rich-argparse) (2.14.0)\n",

+ "Requirement already satisfied: markdown-it-py<3.0.0,>=2.2.0 in /home/jamal/.local/lib/python3.8/site-packages (from rich>=11.0.0->rich-argparse) (2.2.0)\n",

+ "Requirement already satisfied: importlib-metadata; python_version < \"3.9\" in /home/jamal/.local/lib/python3.8/site-packages (from numba>=0.43.0->librosa) (6.0.0)\n",

+ "Requirement already satisfied: llvmlite<0.41,>=0.40.0dev0 in /home/jamal/.local/lib/python3.8/site-packages (from numba>=0.43.0->librosa) (0.40.1)\n",

+ "Requirement already satisfied: cffi>=1.0 in /home/jamal/.local/lib/python3.8/site-packages (from soundfile>=0.10.2->librosa) (1.15.1)\n",

+ "Requirement already satisfied: platformdirs>=2.5.0 in /home/jamal/.local/lib/python3.8/site-packages (from pooch>=1.0->librosa) (2.6.2)\n",

+ "Requirement already satisfied: requests>=2.19.0 in /home/jamal/.local/lib/python3.8/site-packages (from pooch>=1.0->librosa) (2.28.2)\n",

+ "Requirement already satisfied: threadpoolctl>=2.0.0 in /home/jamal/.local/lib/python3.8/site-packages (from scikit-learn!=0.19.0,>=0.14.0->librosa) (3.2.0)\n",

+ "Requirement already satisfied: six in /usr/lib/python3/dist-packages (from asttokens<3.0.0,>=2.0.0->devtools) (1.14.0)\n",

+ "Requirement already satisfied: setuptools in /usr/lib/python3/dist-packages (from nvidia-cuda-runtime-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->encodec) (45.2.0)\n",

+ "Requirement already satisfied: wheel in /usr/lib/python3/dist-packages (from nvidia-cuda-runtime-cu11==11.7.99; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->encodec) (0.34.2)\n",

+ "Requirement already satisfied: mpmath>=0.19 in /home/jamal/.local/lib/python3.8/site-packages (from sympy->torch->encodec) (1.3.0)\n",

+ "Requirement already satisfied: lit in /home/jamal/.local/lib/python3.8/site-packages (from triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->encodec) (16.0.6)\n",

+ "Requirement already satisfied: cmake in /home/jamal/.local/lib/python3.8/site-packages (from triton==2.0.0; platform_system == \"Linux\" and platform_machine == \"x86_64\"->torch->encodec) (3.27.0)\n",

+ "Requirement already satisfied: MarkupSafe>=2.0 in /home/jamal/.local/lib/python3.8/site-packages (from jinja2->torch->encodec) (2.1.2)\n",

+ "Requirement already satisfied: mdurl~=0.1 in /home/jamal/.local/lib/python3.8/site-packages (from markdown-it-py<3.0.0,>=2.2.0->rich>=11.0.0->rich-argparse) (0.1.2)\n",

+ "Requirement already satisfied: zipp>=0.5 in /usr/lib/python3/dist-packages (from importlib-metadata; python_version < \"3.9\"->numba>=0.43.0->librosa) (1.0.0)\n",

+ "Requirement already satisfied: pycparser in /home/jamal/.local/lib/python3.8/site-packages (from cffi>=1.0->soundfile>=0.10.2->librosa) (2.21)\n",

+ "Requirement already satisfied: certifi>=2017.4.17 in /usr/lib/python3/dist-packages (from requests>=2.19.0->pooch>=1.0->librosa) (2019.11.28)\n",

+ "Requirement already satisfied: urllib3<1.27,>=1.21.1 in /home/jamal/.local/lib/python3.8/site-packages (from requests>=2.19.0->pooch>=1.0->librosa) (1.26.7)\n",

+ "Requirement already satisfied: charset-normalizer<4,>=2 in /home/jamal/.local/lib/python3.8/site-packages (from requests>=2.19.0->pooch>=1.0->librosa) (3.0.1)\n",

+ "Requirement already satisfied: idna<4,>=2.5 in /usr/lib/python3/dist-packages (from requests>=2.19.0->pooch>=1.0->librosa) (2.8)\n",

+ "Installing collected packages: devtools\n",

+ "Successfully installed devtools-0.11.0\n",

+ "fish: Unknown command: python\n",

+ "fish: \n",

+ "python bark_webui.py --share\n",

+ "^\n"

+ ]

+ }

+ ],

+ "source": [

+ "!pip install -r old_setup_files/requirements-pip.txt\n",

+ "!pip install encodec rich-argparse librosa pydub devtools"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 3,

+ "id": "e6884c4a",

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Collecting typing-extensions\n",

+ " Downloading typing_extensions-4.7.1-py3-none-any.whl (33 kB)\n",

+ "\u001b[31mERROR: tensorflow 2.13.0 has requirement numpy<=1.24.3,>=1.22, but you'll have numpy 1.20.3 which is incompatible.\u001b[0m\n",

+ "\u001b[31mERROR: tensorflow 2.13.0 has requirement typing-extensions<4.6.0,>=3.6.6, but you'll have typing-extensions 4.7.1 which is incompatible.\u001b[0m\n",

+ "Installing collected packages: typing-extensions\n",

+ " Attempting uninstall: typing-extensions\n",

+ " Found existing installation: typing-extensions 4.5.0\n",

+ " Uninstalling typing-extensions-4.5.0:\n",

+ " Successfully uninstalled typing-extensions-4.5.0\n",

+ "Successfully installed typing-extensions-4.7.1\n",

+ "Note: you may need to restart the kernel to use updated packages.\n"

+ ]

+ }

+ ],

+ "source": [

+ "%pip install typing-extensions --upgrade"

+ ]

+ },

+ {

+ "cell_type": "code",

+ "execution_count": 4,

+ "id": "3a2e8312",

+ "metadata": {},

+ "outputs": [

+ {

+ "name": "stdout",

+ "output_type": "stream",

+ "text": [

+ "Traceback (most recent call last):\r\n",

+ " File \"bark_webui.py\", line 14, in

Click to toggle example long-form generations (from the example notebook)