Upload 30 files

- .gitattributes +2 -0

- README.md +2 -9

- app_ead_instuct.py +620 -0

- images/214000000000.jpg +0 -0

- images/311000000002.jpg +0 -0

- images/Doom_Slayer.jpg +0 -0

- images/Elon_Musk.webp +0 -0

- images/InfEdit.jpg +3 -0

- images/angry.jpg +0 -0

- images/bear.jpg +0 -0

- images/computer.png +0 -0

- images/corgi.jpg +0 -0

- images/dragon.jpg +0 -0

- images/droplet.png +0 -0

- images/frieren.jpg +0 -0

- images/genshin.png +0 -0

- images/groundhog.png +0 -0

- images/james.jpg +0 -0

- images/miku.png +0 -0

- images/moyu.png +0 -0

- images/muffin.png +0 -0

- images/osu.jfif +0 -0

- images/sam.png +3 -0

- images/summer.jpg +0 -0

- nsfw.png +0 -0

- pipeline_ead.py +707 -0

- ptp_utils.py +180 -0

- requirements.txt +8 -0

- seq_aligner.py +314 -0

- utils.py +6 -0

.gitattributes

CHANGED

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+images/InfEdit.jpg filter=lfs diff=lfs merge=lfs -text
+images/sam.png filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED

@@ -1,13 +1,6 @@
 ---
 title: InfEdit
-
-colorFrom: purple
-colorTo: purple
+app_file: app_ead_instuct.py
 sdk: gradio
-sdk_version: 4.
-app_file: app.py
-pinned: false
-license: cc-by-nc-sa-4.0
+sdk_version: 4.7.1
 ---
-
-Check out the configuration reference at https://huggingface.co/docs/hub/spaces-config-reference

app_ead_instuct.py

ADDED

@@ -0,0 +1,620 @@
+from diffusers import LCMScheduler
+from pipeline_ead import EditPipeline
+import os
+import gradio as gr
+import torch
+from PIL import Image
+import torch.nn.functional as nnf
+from typing import Optional, Union, Tuple, List, Callable, Dict
+import abc
+import ptp_utils
+import utils
+import numpy as np
+import seq_aligner
+import math
+
+LOW_RESOURCE = False
+MAX_NUM_WORDS = 77
+
+is_colab = utils.is_google_colab()
+colab_instruction = "" if is_colab else """
+Colab Instruction"""
+
+torch_dtype = torch.float16 if torch.cuda.is_available() else torch.float32
+model_id_or_path = "SimianLuo/LCM_Dreamshaper_v7"
+device_print = "GPU 🔥" if torch.cuda.is_available() else "CPU 🥶"
+device = "cuda" if torch.cuda.is_available() else "cpu"
+
+if is_colab:
+    scheduler = LCMScheduler.from_config(model_id_or_path, subfolder="scheduler")
+    pipe = EditPipeline.from_pretrained(model_id_or_path, scheduler=scheduler, torch_dtype=torch_dtype)
+else:
+    # import streamlit as st
+    # scheduler = DDIMScheduler.from_config(model_id_or_path, use_auth_token=st.secrets["USER_TOKEN"], subfolder="scheduler")
+    # pipe = CycleDiffusionPipeline.from_pretrained(model_id_or_path, use_auth_token=st.secrets["USER_TOKEN"], scheduler=scheduler, torch_dtype=torch_dtype)
+    scheduler = LCMScheduler.from_config(model_id_or_path, use_auth_token=os.environ.get("USER_TOKEN"), subfolder="scheduler")
+    pipe = EditPipeline.from_pretrained(model_id_or_path, use_auth_token=os.environ.get("USER_TOKEN"), scheduler=scheduler, torch_dtype=torch_dtype)
+
+tokenizer = pipe.tokenizer
+encoder = pipe.text_encoder
+
+if torch.cuda.is_available():
+    pipe = pipe.to("cuda")
+
+

+class LocalBlend:
+
+    def get_mask(self,x_t,maps,word_idx, thresh, i):
+        # print(word_idx)
+        # print(maps.shape)
+        # for i in range(0,self.len):
+        #     self.save_image(maps[:,:,:,:,i].mean(0,keepdim=True),i,"map")
+        maps = maps * word_idx.reshape(1,1,1,1,-1)
+        maps = (maps[:,:,:,:,1:self.len-1]).mean(0,keepdim=True)
+        # maps = maps.mean(0,keepdim=True)
+        maps = (maps).max(-1)[0]
+        # self.save_image(maps,i,"map")
+        maps = nnf.interpolate(maps, size=(x_t.shape[2:]))
+        # maps = maps.mean(1,keepdim=True)
+        maps = maps / maps.max(2, keepdim=True)[0].max(3, keepdim=True)[0]
+        mask = maps > thresh
+        return mask
+
+
+    def save_image(self,mask,i, caption):
+        image = mask[0, 0, :, :]
+        image = 255 * image / image.max()
+        # print(image.shape)
+        image = image.unsqueeze(-1).expand(*image.shape, 3)
+        # print(image.shape)
+        image = image.cpu().numpy().astype(np.uint8)
+        image = np.array(Image.fromarray(image).resize((256, 256)))
+        # makedirs also creates the parent "inter" directory if it is missing
+        os.makedirs(f"inter/{caption}", exist_ok=True)
+        ptp_utils.save_images(image, f"inter/{caption}/{i}.jpg")
+
+
+    def __call__(self, i, x_s, x_t, x_m, attention_store, alpha_prod, temperature=0.15, use_xm=False):
+        maps = attention_store["down_cross"][2:4] + attention_store["up_cross"][:3]
+        h,w = x_t.shape[2],x_t.shape[3]
+        h , w = ((h+1)//2+1)//2, ((w+1)//2+1)//2
+        # print(h,w)
+        # print(maps[0].shape)
+        maps = [item.reshape(2, -1, 1, h // int((h*w/item.shape[-2])**0.5), w // int((h*w/item.shape[-2])**0.5), MAX_NUM_WORDS) for item in maps]
+        maps = torch.cat(maps, dim=1)
+        maps_s = maps[0,:]
+        maps_m = maps[1,:]
+        thresh_e = temperature / alpha_prod ** (0.5)
+        if thresh_e < self.thresh_e:
+            thresh_e = self.thresh_e
+        thresh_m = self.thresh_m
+        mask_e = self.get_mask(x_t, maps_m, self.alpha_e, thresh_e, i)
+        mask_m = self.get_mask(x_t, maps_s, (self.alpha_m-self.alpha_me), thresh_m, i)
+        mask_me = self.get_mask(x_t, maps_m, self.alpha_me, self.thresh_e, i)
+        if self.save_inter:
+            self.save_image(mask_e,i,"mask_e")
+            self.save_image(mask_m,i,"mask_m")
+            self.save_image(mask_me,i,"mask_me")
+
+        if self.alpha_e.sum() == 0:
+            x_t_out = x_t
+        else:
+            x_t_out = torch.where(mask_e, x_t, x_m)
+        x_t_out = torch.where(mask_m, x_s, x_t_out)
+        if use_xm:
+            x_t_out = torch.where(mask_me, x_m, x_t_out)
+
+        return x_m, x_t_out
+
+    def __init__(self,thresh_e=0.3, thresh_m=0.3, save_inter = False):
+        self.thresh_e = thresh_e
+        self.thresh_m = thresh_m
+        self.save_inter = save_inter
+
+    def set_map(self, ms, alpha, alpha_e, alpha_m,len):
+        self.m = ms
+        self.alpha = alpha
+        self.alpha_e = alpha_e
+        self.alpha_m = alpha_m
+        alpha_me = alpha_e.to(torch.bool) & alpha_m.to(torch.bool)
+        self.alpha_me = alpha_me.to(torch.float)
+        self.len = len
+
+
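Two steps in `LocalBlend` can be sketched without tensors: the edit threshold is scaled by `1 / sqrt(alpha_prod)` but never drops below the configured floor, and an attention map is divided by its peak before being thresholded. The block below is only an illustration on nested lists. `effective_threshold` and `threshold_mask` are hypothetical names that do not appear in this commit, and the zero-peak guard is an added assumption that the original code does not make.

```python
import math


def effective_threshold(temperature, alpha_prod, floor):
    """Scale the threshold by 1/sqrt(alpha_prod), never going below `floor`."""
    return max(temperature / math.sqrt(alpha_prod), floor)


def threshold_mask(attn_map, thresh):
    """Divide a 2-D map by its peak value and keep the cells above `thresh`."""
    peak = max(max(row) for row in attn_map)
    if peak == 0:  # assumption: an all-zero map selects nothing
        return [[False for _ in row] for row in attn_map]
    return [[value / peak > thresh for value in row] for row in attn_map]


demo_map = [[0.1, 0.4], [0.8, 0.2]]
demo_mask = threshold_mask(demo_map, effective_threshold(0.15, 1.0, 0.3))
print(demo_mask)
```

With `alpha_prod` close to 1 the floor of 0.3 applies, so only the cells at or near the peak survive. A smaller `alpha_prod` raises the threshold and shrinks the mask further.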

+class AttentionControl(abc.ABC):
+
+    def step_callback(self, x_t):
+        return x_t
+
+    def between_steps(self):
+        return
+
+    @property
+    def num_uncond_att_layers(self):
+        return self.num_att_layers if LOW_RESOURCE else 0
+
+    @abc.abstractmethod
+    def forward(self, attn, is_cross: bool, place_in_unet: str):
+        raise NotImplementedError
+
+    def __call__(self, attn, is_cross: bool, place_in_unet: str):
+        if self.cur_att_layer >= self.num_uncond_att_layers:
+            if LOW_RESOURCE:
+                attn = self.forward(attn, is_cross, place_in_unet)
+            else:
+                h = attn.shape[0]
+                attn[h // 2:] = self.forward(attn[h // 2:], is_cross, place_in_unet)
+        self.cur_att_layer += 1
+        if self.cur_att_layer == self.num_att_layers // 2 + self.num_uncond_att_layers:
+            self.cur_att_layer = 0
+            self.cur_step += 1
+            self.between_steps()
+        return attn
+
+    def reset(self):
+        self.cur_step = 0
+        self.cur_att_layer = 0
+
+    def __init__(self):
+        self.cur_step = 0
+        self.num_att_layers = -1
+        self.cur_att_layer = 0
+
+
+class EmptyControl(AttentionControl):
+
+    def forward(self, attn, is_cross: bool, place_in_unet: str):
+        return attn
+    def self_attn_forward(self, q, k, v, sim, attn, is_cross, place_in_unet, num_heads, **kwargs):
+        b = q.shape[0] // num_heads
+        out = torch.einsum("h i j, h j d -> h i d", attn, v)
+        return out
+
+

+class AttentionStore(AttentionControl):
+
+    @staticmethod
+    def get_empty_store():
+        return {"down_cross": [], "mid_cross": [], "up_cross": [],
+                "down_self": [], "mid_self": [], "up_self": []}
+
+    def forward(self, attn, is_cross: bool, place_in_unet: str):
+        key = f"{place_in_unet}_{'cross' if is_cross else 'self'}"
+        if attn.shape[1] <= 32 ** 2:  # avoid memory overhead
+            self.step_store[key].append(attn)
+        return attn
+
+    def between_steps(self):
+        if len(self.attention_store) == 0:
+            self.attention_store = self.step_store
+        else:
+            for key in self.attention_store:
+                for i in range(len(self.attention_store[key])):
+                    self.attention_store[key][i] += self.step_store[key][i]
+        self.step_store = self.get_empty_store()
+
+    def get_average_attention(self):
+        average_attention = {key: [item / self.cur_step for item in self.attention_store[key]] for key in self.attention_store}
+        return average_attention
+
+    def reset(self):
+        super(AttentionStore, self).reset()
+        self.step_store = self.get_empty_store()
+        self.attention_store = {}
+
+    def __init__(self):
+        super(AttentionStore, self).__init__()
+        self.step_store = self.get_empty_store()
+        self.attention_store = {}
+
+
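The bookkeeping in `AttentionStore` is a running sum per key that is divided by the number of completed steps when an average is requested. A minimal sketch of that pattern, using plain numbers in place of attention tensors, might look like the following. `RunningStore` and its method names are hypothetical and are not part of this commit.

```python
class RunningStore:
    """Accumulate per-step values by key and report their per-step mean."""

    def __init__(self):
        self.totals = {}
        self.steps = 0

    def add_step(self, step_values):
        """Add one step's {key: [numbers]} record to the running totals."""
        if not self.totals:
            # First step: copy the lists so later updates do not alias the input.
            self.totals = {key: list(vals) for key, vals in step_values.items()}
        else:
            for key, vals in step_values.items():
                for i, val in enumerate(vals):
                    self.totals[key][i] += val
        self.steps += 1

    def average(self):
        """Return the mean of every stored entry over the steps seen so far."""
        return {key: [v / self.steps for v in vals] for key, vals in self.totals.items()}


store = RunningStore()
store.add_step({"down_cross": [1.0, 2.0]})
store.add_step({"down_cross": [3.0, 4.0]})
print(store.average())
```

Keeping only the running sum means memory use does not grow with the number of steps, which is presumably why the class above stores totals rather than a per-step history.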

+class AttentionControlEdit(AttentionStore, abc.ABC):
+
+    def step_callback(self,i, t, x_s, x_t, x_m, alpha_prod):
+        if (self.local_blend is not None) and (i>0):
+            use_xm = (self.cur_step+self.start_steps+1 == self.num_steps)
+            x_m, x_t = self.local_blend(i, x_s, x_t, x_m, self.attention_store, alpha_prod, use_xm=use_xm)
+        return x_m, x_t
+
+    def replace_self_attention(self, attn_base, att_replace):
+        if att_replace.shape[2] <= 16 ** 2:
+            return attn_base.unsqueeze(0).expand(att_replace.shape[0], *attn_base.shape)
+        else:
+            return att_replace
+
+    @abc.abstractmethod
+    def replace_cross_attention(self, attn_base, att_replace):
+        raise NotImplementedError
+
+    def attn_batch(self, q, k, v, sim, attn, is_cross, place_in_unet, num_heads, **kwargs):
+        b = q.shape[0] // num_heads
+
+        sim = torch.einsum("h i d, h j d -> h i j", q, k) * kwargs.get("scale")
+        attn = sim.softmax(-1)
+        out = torch.einsum("h i j, h j d -> h i d", attn, v)
+        return out
+
+    def self_attn_forward(self, q, k, v, num_heads):
+        if q.shape[0]//num_heads == 3:
+            if (self.self_replace_steps <= ((self.cur_step+self.start_steps+1)*1.0 / self.num_steps) ):
+                q=torch.cat([q[:num_heads*2],q[num_heads:num_heads*2]])
+                k=torch.cat([k[:num_heads*2],k[:num_heads]])
+                v=torch.cat([v[:num_heads*2],v[:num_heads]])
+            else:
+                q=torch.cat([q[:num_heads],q[:num_heads],q[:num_heads]])
+                k=torch.cat([k[:num_heads],k[:num_heads],k[:num_heads]])
+                v=torch.cat([v[:num_heads*2],v[:num_heads]])
+            return q,k,v
+        else:
+            qu, qc = q.chunk(2)
+            ku, kc = k.chunk(2)
+            vu, vc = v.chunk(2)
+            if (self.self_replace_steps <= ((self.cur_step+self.start_steps+1)*1.0 / self.num_steps) ):
+                qu=torch.cat([qu[:num_heads*2],qu[num_heads:num_heads*2]])
+                qc=torch.cat([qc[:num_heads*2],qc[num_heads:num_heads*2]])
+                ku=torch.cat([ku[:num_heads*2],ku[:num_heads]])
+                kc=torch.cat([kc[:num_heads*2],kc[:num_heads]])
+                vu=torch.cat([vu[:num_heads*2],vu[:num_heads]])
+                vc=torch.cat([vc[:num_heads*2],vc[:num_heads]])
+            else:
+                qu=torch.cat([qu[:num_heads],qu[:num_heads],qu[:num_heads]])
+                qc=torch.cat([qc[:num_heads],qc[:num_heads],qc[:num_heads]])
+                ku=torch.cat([ku[:num_heads],ku[:num_heads],ku[:num_heads]])
+                kc=torch.cat([kc[:num_heads],kc[:num_heads],kc[:num_heads]])
+                vu=torch.cat([vu[:num_heads*2],vu[:num_heads]])
+                vc=torch.cat([vc[:num_heads*2],vc[:num_heads]])
+
+            return torch.cat([qu, qc], dim=0) ,torch.cat([ku, kc], dim=0), torch.cat([vu, vc], dim=0)
+
+    def forward(self, attn, is_cross: bool, place_in_unet: str):
+        if is_cross :
+            h = attn.shape[0] // self.batch_size
+            attn = attn.reshape(self.batch_size,h, *attn.shape[1:])
+            attn_base, attn_replace,attn_masa = attn[0], attn[1], attn[2]
+            attn_replace_new = self.replace_cross_attention(attn_masa, attn_replace)
+            attn_base_store = self.replace_cross_attention(attn_base, attn_replace)
+            if (self.cross_replace_steps >= ((self.cur_step+self.start_steps+1)*1.0 / self.num_steps) ):
+                attn[1] = attn_base_store
+            attn_store=torch.cat([attn_base_store,attn_replace_new])
+            attn = attn.reshape(self.batch_size * h, *attn.shape[2:])
+            attn_store = attn_store.reshape(2 *h, *attn_store.shape[2:])
+            super(AttentionControlEdit, self).forward(attn_store, is_cross, place_in_unet)
+        return attn
+
+    def __init__(self, prompts, num_steps: int,start_steps: int,
+                 cross_replace_steps: Union[float, Tuple[float, float], Dict[str, Tuple[float, float]]],
+                 self_replace_steps: Union[float, Tuple[float, float]],
+                 local_blend: Optional[LocalBlend]):
+        super(AttentionControlEdit, self).__init__()
+        self.batch_size = len(prompts)+1
+        self.self_replace_steps = self_replace_steps
+        self.cross_replace_steps = cross_replace_steps
+        self.num_steps=num_steps
+        self.start_steps=start_steps
+        self.local_blend = local_blend
+
+
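The cross-attention branch of `forward` compares `cross_replace_steps` against the fraction of the schedule completed so far, `(cur_step + start_steps + 1) / num_steps`. The sketch below isolates that comparison so the window is easy to see. `step_fraction` and `cross_replace_active` are hypothetical helper names, not functions from this commit.

```python
def step_fraction(cur_step, start_steps, num_steps):
    """Fraction of the schedule reached once the current step completes."""
    return (cur_step + start_steps + 1) / num_steps


def cross_replace_active(cross_replace_steps, cur_step, start_steps, num_steps):
    """True while the schedule is still inside the cross-attention window."""
    return cross_replace_steps >= step_fraction(cur_step, start_steps, num_steps)


# With 10 steps and a window of 0.35, only the first three steps qualify.
flags = [cross_replace_active(0.35, step, 0, 10) for step in range(10)]
print(flags)
```

A non-zero `start_steps` shifts the fraction upward, so fewer of the remaining steps fall inside the same window.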

+class AttentionReplace(AttentionControlEdit):
+
+    def replace_cross_attention(self, attn_base, att_replace):
+        return torch.einsum('hpw,bwn->bhpn', attn_base, self.mapper)
+
+    def __init__(self, prompts, num_steps: int, start_steps: int, cross_replace_steps: float, self_replace_steps: float,
+                 local_blend: Optional[LocalBlend] = None):
+        super(AttentionReplace, self).__init__(prompts, num_steps, start_steps, cross_replace_steps, self_replace_steps, local_blend)
+        self.mapper = seq_aligner.get_replacement_mapper(prompts, tokenizer).to(device).to(torch_dtype)
+
+
+class AttentionRefine(AttentionControlEdit):
+
+    def replace_cross_attention(self, attn_masa, att_replace):
+        attn_masa_replace = attn_masa[:, :, self.mapper].squeeze()
+        attn_replace = attn_masa_replace * self.alphas + \
+                       att_replace * (1 - self.alphas)
+        return attn_replace
+
+    def __init__(self, prompts, prompt_specifiers, num_steps: int,start_steps: int, cross_replace_steps: float, self_replace_steps: float,
+                 local_blend: Optional[LocalBlend] = None):
+        super(AttentionRefine, self).__init__(prompts, num_steps,start_steps, cross_replace_steps, self_replace_steps, local_blend)
+        self.mapper, alphas, ms, alpha_e, alpha_m = seq_aligner.get_refinement_mapper(prompts, prompt_specifiers, tokenizer, encoder, device)
+        self.mapper, alphas, ms = self.mapper.to(device), alphas.to(device).to(torch_dtype), ms.to(device).to(torch_dtype)
+        self.alphas = alphas.reshape(alphas.shape[0], 1, 1, alphas.shape[1])
+        self.ms = ms.reshape(ms.shape[0], 1, 1, ms.shape[1])
+        ms = ms.to(device)
+        alpha_e = alpha_e.to(device)
+        alpha_m = alpha_m.to(device)
+        t_len = len(tokenizer(prompts[1])["input_ids"])
+        self.local_blend.set_map(ms,alphas,alpha_e,alpha_m,t_len)
+
+
+def get_equalizer(text: str, word_select: Union[int, Tuple[int, ...]], values: Union[List[float], Tuple[float, ...]]):
+    if type(word_select) is int or type(word_select) is str:
+        word_select = (word_select,)
+    equalizer = torch.ones(len(values), 77)
+    values = torch.tensor(values, dtype=torch_dtype)
+    for word in word_select:
+        inds = ptp_utils.get_word_inds(text, word, tokenizer)
+        equalizer[:, inds] = values
+    return equalizer
+
+

+def inference(img, source_prompt, target_prompt,
+              local, mutual,
+              positive_prompt, negative_prompt,
+              guidance_s, guidance_t,
+              num_inference_steps,
+              width, height, seed, strength,
+              cross_replace_steps, self_replace_steps,
+              thresh_e, thresh_m, denoise, user_instruct="", api_key=""):
+    print(img)
+    if user_instruct != "" and api_key != "":
+        source_prompt, target_prompt, local, mutual, replace_steps, num_inference_steps = get_params(api_key, user_instruct)
+        cross_replace_steps = replace_steps
+        self_replace_steps = replace_steps
+
+    torch.manual_seed(seed)
+    ratio = min(height / img.height, width / img.width)
+    img = img.resize((int(img.width * ratio), int(img.height * ratio)))
+    if denoise is False:
+        strength = 1
+    num_denoise_num = math.trunc(num_inference_steps*strength)
+    num_start = num_inference_steps-num_denoise_num
+    # create the CAC controller.
+    local_blend = LocalBlend(thresh_e=thresh_e, thresh_m=thresh_m, save_inter=False)
+    controller = AttentionRefine([source_prompt, target_prompt],[[local, mutual]],
+                                 num_inference_steps,
+                                 num_start,
+                                 cross_replace_steps=cross_replace_steps,
+                                 self_replace_steps=self_replace_steps,
+                                 local_blend=local_blend
+                                 )
+    ptp_utils.register_attention_control(pipe, controller)
+
+    results = pipe(prompt=target_prompt,
+                   source_prompt=source_prompt,
+                   positive_prompt=positive_prompt,
+                   negative_prompt=negative_prompt,
+                   image=img,
+                   num_inference_steps=num_inference_steps,
+                   eta=1,
+                   strength=strength,
+                   guidance_scale=guidance_t,
+                   source_guidance_scale=guidance_s,
+                   denoise_model=denoise,
+                   callback = controller.step_callback
+                   )
+
+    return replace_nsfw_images(results)
+
+
+def replace_nsfw_images(results):
+    for i in range(len(results.images)):
+        if results.nsfw_content_detected[i]:
+            results.images[i] = Image.open("nsfw.png")
+    return results.images[0]
+
+
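The step arithmetic in `inference` splits the schedule into steps that are skipped and steps that are actually denoised, with `strength` forced to 1 when `denoise` is off. A small sketch of that calculation follows. `split_steps` is a hypothetical helper introduced only for illustration and is not part of this commit.

```python
import math


def split_steps(num_inference_steps, strength, denoise):
    """Return (steps that are denoised, steps skipped at the start)."""
    if denoise is False:
        strength = 1  # mirrors the override in inference()
    num_denoise = math.trunc(num_inference_steps * strength)
    num_start = num_inference_steps - num_denoise
    return num_denoise, num_start


print(split_steps(15, 0.7, True))
print(split_steps(15, 0.7, False))
```

Because `math.trunc` rounds toward zero, a fractional product such as 15 × 0.7 yields 10 denoised steps and 5 skipped ones. The skipped count is what `inference` passes to the controller as `num_start`.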

+css = """.cycle-diffusion-div div{display:inline-flex;align-items:center;gap:.8rem;font-size:1.75rem}.cycle-diffusion-div div h1{font-weight:900;margin-bottom:7px}.cycle-diffusion-div p{margin-bottom:10px;font-size:94%}.cycle-diffusion-div p a{text-decoration:underline}.tabs{margin-top:0;margin-bottom:0}#gallery{min-height:20rem}
+"""
+intro = """
+<div style="display: flex;align-items: center;justify-content: center">
+    <img src="https://sled-group.github.io/InfEdit/image_assets/InfEdit.png" width="80" style="display: inline-block">
+    <h1 style="margin-left: 12px;text-align: center;margin-bottom: 7px;display: inline-block">InfEdit</h1>
+    <h3 style="display: inline-block;margin-left: 10px;margin-top: 6px;font-weight: 500">Inversion-Free Image Editing
+    with Natural Language</h3>
+</div>
+"""
+

| 408 |

+

param_bot_prompt = """

|

| 409 |

+

You are a helpful assistant named InfEdit that provides input parameters to the image editing model based on user instructions. You should respond in valid json format.

|

| 410 |

+

|

| 411 |

+

User:

|

| 412 |

+

```

|

| 413 |

+

{image descrption and editing commands | example: 'The image shows an apple on the table and I want to change the apple to a banana.'}

|

| 414 |

+

```

|

| 415 |

+

|

| 416 |

+

After receiving this, you will need to generate the appropriate params as input to the image editing models.

|

| 417 |

+

|

| 418 |

+

Assistant:

|

| 419 |

+

```

|

| 420 |

+

{

|

| 421 |

+

“source_prompt”: “{a string describes the input image, it needs to includes the thing user want to change | example: 'an apple on the table'}”,

|

| 422 |

+

“target_prompt”: “{a string that matches the source prompt, but it needs to includes the thing user want to change | example: 'a banana on the table'}”,

|

| 423 |

+

“target_sub”: “{a special substring from the target prompt}”,

|

| 424 |

+

“mutual_sub”: “{a special mutual substring from source/target prompt}”

|

| 425 |

+

“attention_control”: {a number between 0 and 1}

|

| 426 |

+

“steps”: {a number between 8 and 50}

|

| 427 |

+

}

|

| 428 |

+

```

|

| 429 |

+

|

| 430 |

+

You need to fill in the "target_sub" and "mutual_sub" by the guideline below.

|

| 431 |

+

|

| 432 |

+

If the editing instruction is not about changing style or background:

|

| 433 |

+

- The "target_sub" should be a special substring from the target prompt that highlights what you want to edit, it should be as short as possible and should only be noun ("banana" instead of "a banana").

|

| 434 |

+

- The "mutual_sub" should be kept as an empty string.

|

| 435 |

+

P.S. When you want to remove something, it's always better to use "empty", "nothing" or some appropriate words to replace it. Like remove an apple on the table, you can use "an apple on the table" and "nothing on the table" as your prompts, and use "nothing" as your target_sub.

|

| 436 |

+

P.S. You should think carefully about what you want to modify, like "short hair" to "long hair", your target_sub should be "hair" instead of "long".

|

| 437 |

+

P.S. When you are adding something, the target_sub should be the thing you want to add.

|

| 438 |

+

|

| 439 |

+

If it's about style editing:

|

| 440 |

+

- The "target_sub" should be kept as an empty string.

|

| 441 |

+

- The "mutual_sub" should be kept as an empty string.

|

| 442 |

+

|

| 443 |

+

If it's about background editing:

|

| 444 |

+

- The "target_sub" should be kept as an empty string.

|

| 445 |

+

- The "mutual_sub" should be a common substring from source/target prompt, and is the main object/character (noun) in the image. It should be as short as possible and only be noun ("banana" instead of "a banana", "man" instead of "running man").

|

| 446 |

+

|

| 447 |

+

A specific case, if it's about change an object's abstract information, like pose, view or shape and want to keep the semantic feature same, like a dog to a running dog,

|

| 448 |

+

- The "target_sub" should be a special substring from the target prompt that highlights what you want to edit, it should be as short as possible and should only be noun ("dog" instead of "a running dog").

|

| 449 |

+

- The "mutual_sub" should be the same as target_sub, because we want to "edit the dog but also keep the dog the same".

|

| 450 |

+

|

| 451 |

+

|

| 452 |

+

You need to choose a specific value of “attention_control” by the guideline below.

|

| 453 |

+

A larger value of “attention_control” means more consistency between the source image and the output.

|

| 454 |

+

|

| 455 |

+

- If the editing is on the feature level, like color, material and so on, and you want to preserve the characteristics of the original object as much as possible, you should choose a large value. (Example: for color editing, you can choose 1, and for material you can choose 0.9)

|

| 456 |

+

- If the editing is on the object level, like editing a "cat" to a "dog", or a "horse" to a "zebra", and you want the two to stay similar, you need to choose a relatively large value, say 0.7 for example.

|

| 457 |

+

- the editing is changing the style but want to keep the spatial features, you need to choose a relatively large value, we say 0.7 for example.

|

| 458 |

+

- If the editing needs to change something's shape, like editing an "apple" to a "banana", a "flower" to a "knife", "short" hair to "long" hair, or "round" to "square", where the shapes are very different, you need to choose a relatively small value, say 0.3 for example.

|

| 459 |

+

- If the editing is trying to change the spatial information, like changing the pose and so on, you need to choose a relatively small value, say 0.3 for example.

|

| 460 |

+

- the editing should not consider the consistency with the input image, like add something new, remove something, or change the background, you can directly use 0.

|

| 461 |

+

|

| 462 |

+

|

| 463 |

+

You need to choose a specific value of “steps” by the guideline below.

|

| 464 |

+

More steps mean that the edit effect is more pronounced.

|

| 465 |

+

- If the editing is super easy, like changing something to something with very similar features, you can choose 8 steps.

|

| 466 |

+

- In most cases, you can choose 15 steps.

|

| 467 |

+

- For style editing and remove tasks, you can choose a larger value, like 25 steps.

|

| 468 |

+

- If you feel the task is extremely difficult (like some kinds of styles or removing very tiny objects), you can directly use 50 steps.

|

| 469 |

+

"""

|

| 470 |

+

def get_params(api_key, user_instruct):

|

| 471 |

+

from openai import OpenAI

|

| 472 |

+

client = OpenAI(api_key=api_key)

|

| 473 |

+

print("user_instruct", user_instruct)

|

| 474 |

+

response = client.chat.completions.create(

|

| 475 |

+

model="gpt-4-1106-preview",

|

| 476 |

+

messages=[

|

| 477 |

+

{"role": "system", "content": param_bot_prompt},

|

| 478 |

+

{"role": "user", "content": user_instruct}

|

| 479 |

+

],

|

| 480 |

+

response_format={ "type": "json_object" },

|

| 481 |

+

)

|

| 482 |

+

param_dict = response.choices[0].message.content

|

| 483 |

+

print("param_dict", param_dict)

|

| 484 |

+

import json

|

| 485 |

+

param_dict = json.loads(param_dict)

|

| 486 |

+

return param_dict['source_prompt'], param_dict['target_prompt'], param_dict['target_sub'], param_dict['mutual_sub'], param_dict['attention_control'], param_dict['steps']

|

| 487 |

+

with gr.Blocks(css=css) as demo:

|

| 488 |

+

gr.HTML(intro)

|

| 489 |

+

with gr.Accordion("README", open=False):

|

| 490 |

+

gr.HTML(

|

| 491 |

+

"""

|

| 492 |

+

<p style="font-size: 0.95rem;margin: 0rem;line-height: 1.2em;margin-top:1em;display: inline-block">

|

| 493 |

+

<a href="https://sled-group.github.io/InfEdit/" target="_blank">project page</a> | <a href="https://arxiv.org" target="_blank">paper</a> | <a href="https://github.com/sled-group/InfEdit/tree/website" target="_blank">handbook</a>

|

| 494 |

+

</p>

|

| 495 |

+

|

| 496 |

+

We are now hosting on an A4000 GPU with 16 GiB memory.

|

| 497 |

+

"""

|

| 498 |

+

)

|

| 499 |

+

with gr.Row():

|

| 500 |

+

|

| 501 |

+

with gr.Column(scale=55):

|

| 502 |

+

with gr.Group():

|

| 503 |

+

|

| 504 |

+

img = gr.Image(label="Input image", height=512, type="pil")

|

| 505 |

+

|

| 506 |

+

image_out = gr.Image(label="Output image", height=512)

|

| 507 |

+

# gallery = gr.Gallery(

|

| 508 |

+

# label="Generated images", show_label=False, elem_id="gallery"

|

| 509 |

+

# ).style(grid=[1], height="auto")

|

| 510 |

+

|

| 511 |

+

with gr.Column(scale=45):

|

| 512 |

+

|

| 513 |

+

with gr.Tab("UAC options"):

|

| 514 |

+

with gr.Group():

|

| 515 |

+

with gr.Row():

|

| 516 |

+

source_prompt = gr.Textbox(label="Source prompt", placeholder="Source prompt describes the input image")

|

| 517 |

+

with gr.Row():

|

| 518 |

+

guidance_s = gr.Slider(label="Source guidance scale", value=1, minimum=1, maximum=10)

|

| 519 |

+

positive_prompt = gr.Textbox(label="Positive prompt", placeholder="")

|

| 520 |

+

with gr.Row():

|

| 521 |

+

target_prompt = gr.Textbox(label="Target prompt", placeholder="Target prompt describes the output image")

|

| 522 |

+

with gr.Row():

|

| 523 |

+

guidance_t = gr.Slider(label="Target guidance scale", value=2, minimum=1, maximum=10)

|

| 524 |

+

negative_prompt = gr.Textbox(label="Negative prompt", placeholder="")

|

| 525 |

+

with gr.Row():

|

| 526 |

+

local = gr.Textbox(label="Target blend", placeholder="")

|

| 527 |

+

thresh_e = gr.Slider(label="Target blend thresh", value=0.6, minimum=0, maximum=1)

|

| 528 |

+

with gr.Row():

|

| 529 |

+

mutual = gr.Textbox(label="Source blend", placeholder="")

|

| 530 |

+

thresh_m = gr.Slider(label="Source blend thresh", value=0.6, minimum=0, maximum=1)

|

| 531 |

+

with gr.Row():

|

| 532 |

+

cross_replace_steps = gr.Slider(label="Cross attn control schedule", value=0.7, minimum=0.0, maximum=1, step=0.01)

|

| 533 |

+

self_replace_steps = gr.Slider(label="Self attn control schedule", value=0.3, minimum=0.0, maximum=1, step=0.01)

|

| 534 |

+

with gr.Row():

|

| 535 |

+

denoise = gr.Checkbox(label='Denoising Mode', value=False)

|

| 536 |

+

strength = gr.Slider(label="Strength", value=0.7, minimum=0, maximum=1, step=0.01, visible=False)

|

| 537 |

+

denoise.change(fn=lambda value: gr.update(visible=value), inputs=denoise, outputs=strength)

|

| 538 |

+

with gr.Row():

|

| 539 |

+

generate1 = gr.Button(value="Run")

|

| 540 |

+

|

| 541 |

+

with gr.Tab("Advanced options"):

|

| 542 |

+

with gr.Group():

|

| 543 |

+

with gr.Row():

|

| 544 |

+

num_inference_steps = gr.Slider(label="Inference steps", value=15, minimum=1, maximum=50, step=1)

|

| 545 |

+

width = gr.Slider(label="Width", value=512, minimum=512, maximum=1024, step=8)

|

| 546 |

+

height = gr.Slider(label="Height", value=512, minimum=512, maximum=1024, step=8)

|

| 547 |

+

with gr.Row():

|

| 548 |

+

seed = gr.Slider(0, 2147483647, label='Seed', value=0, step=1)

|

| 549 |

+

with gr.Row():

|

| 550 |

+

generate3 = gr.Button(value="Run")

|

| 551 |

+

|

| 552 |

+

with gr.Tab("Instruction following (+GPT4)"):

|

| 553 |

+

guide_str = """Describe the image you uploaded and tell me how you want to edit it."""

|

| 554 |

+

with gr.Group():

|

| 555 |

+

api_key = gr.Textbox(label="YOUR OPENAI API KEY", placeholder="sk-xxx", lines=1, type="password")

|

| 556 |

+

user_instruct = gr.Textbox(label=guide_str, placeholder="The image shows an apple on the table and I want to change the apple to a banana.", lines=3)

|

| 557 |

+

# source_prompt, target_prompt, local, mutual = get_params(api_key, user_instruct)

|

| 558 |

+

with gr.Row():

|

| 559 |

+

generate4 = gr.Button(value="Run")

|

| 560 |

+

|

| 561 |

+

inputs1 = [img, source_prompt, target_prompt,

|

| 562 |

+

local, mutual,

|

| 563 |

+

positive_prompt, negative_prompt,

|

| 564 |

+

guidance_s, guidance_t,

|

| 565 |

+

num_inference_steps,

|

| 566 |

+

width, height, seed, strength,

|

| 567 |

+

cross_replace_steps, self_replace_steps,

|

| 568 |

+

thresh_e, thresh_m, denoise]

|

| 569 |

+

inputs4 = [img, source_prompt, target_prompt,

|

| 570 |

+

local, mutual,

|

| 571 |

+

positive_prompt, negative_prompt,

|

| 572 |

+

guidance_s, guidance_t,

|

| 573 |

+

num_inference_steps,

|

| 574 |

+

width, height, seed, strength,

|

| 575 |

+

cross_replace_steps, self_replace_steps,

|

| 576 |

+

thresh_e, thresh_m, denoise, user_instruct, api_key]

|

| 577 |

+

generate1.click(inference, inputs=inputs1, outputs=image_out)

|

| 578 |

+

generate3.click(inference, inputs=inputs1, outputs=image_out)

|

| 579 |

+

generate4.click(inference, inputs=inputs4, outputs=image_out)

|

| 580 |

+

|

| 581 |

+

ex = gr.Examples(

|

| 582 |

+

[

|

| 583 |

+

["images/corgi.jpg","corgi","cat","cat","","","",1,2,15,512,512,0,1,0.7,0.7,0.6,0.6,False],

|

| 584 |

+

["images/muffin.png","muffin","chihuahua","chihuahua","","","",1,2,15,512,512,0,1,0.65,0.6,0.4,0.7,False],

|

| 585 |

+

["images/InfEdit.jpg","an anime girl holding a pad","an anime girl holding a book","book","girl ","","",1,2,15,512,512,0,1,0.8,0.8,0.6,0.6,False],

|

| 586 |

+

["images/summer.jpg","a photo of summer scene","A photo of winter scene","","","","",1,2,15,512,512,0,1,1,1,0.6,0.7,False],

|

| 587 |

+

["images/bear.jpg","A bear sitting on the ground","A bear standing on the ground","bear","","","",1,1.5,15,512,512,0,1,0.3,0.3,0.5,0.7,False],

|

| 588 |

+

["images/james.jpg","a man playing basketball","a man playing soccer","soccer","man ","","",1,2,15,512,512,0,1,0,0,0.5,0.4,False],

|

| 589 |

+

["images/osu.jfif","A football with OSU logo","A football with Umich logo","logo","","","",1,2,15,512,512,0,1,0.5,0,0.6,0.7,False],

|

| 590 |

+

["images/groundhog.png","A anime groundhog head","A anime ferret head","head","","","",1,2,15,512,512,0,1,0.5,0.5,0.6,0.7,False],

|

| 591 |

+

["images/miku.png","A anime girl with green hair and green eyes and shirt","A anime girl with red hair and red eyes and shirt","red hair and red eyes","shirt","","",1,2,15,512,512,0,1,1,1,0.2,0.8,False],

|

| 592 |

+

["images/droplet.png","a blue droplet emoji with a smiling face with yellow dot","a red fire emoji with an angry face with yellow dot","","yellow dot","","",1,2,15,512,512,0,1,0.7,0.7,0.6,0.7,False],

|

| 593 |

+

["images/moyu.png","an emoji holding a sign and a fish","an emoji holding a sign and a shark","shark","sign","","",1,2,15,512,512,0,1,0.7,0.7,0.5,0.7,False],

|

| 594 |

+

["images/214000000000.jpg","a painting of a waterfall in the mountains","a painting of a waterfall and angels in the mountains","angels","","","",1,2,15,512,512,0,1,0,0,0.5,0.5,False],

|

| 595 |

+

["images/311000000002.jpg","a lion in a suit sitting at a table with a laptop","a lion in a suit sitting at a table with nothing","nothing","","","",1,2,15,512,512,0,1,0,0,0.5,0.5,False],

|

| 596 |

+

["images/genshin.png","anime girl, with blue logo","anime boy with golden hair named Link, from The Legend of Zelda, with legend of zelda logo","anime boy","","","",1,2,50,512,512,0,1,0.65,0.65,0.5,0.5,False],

|

| 597 |

+

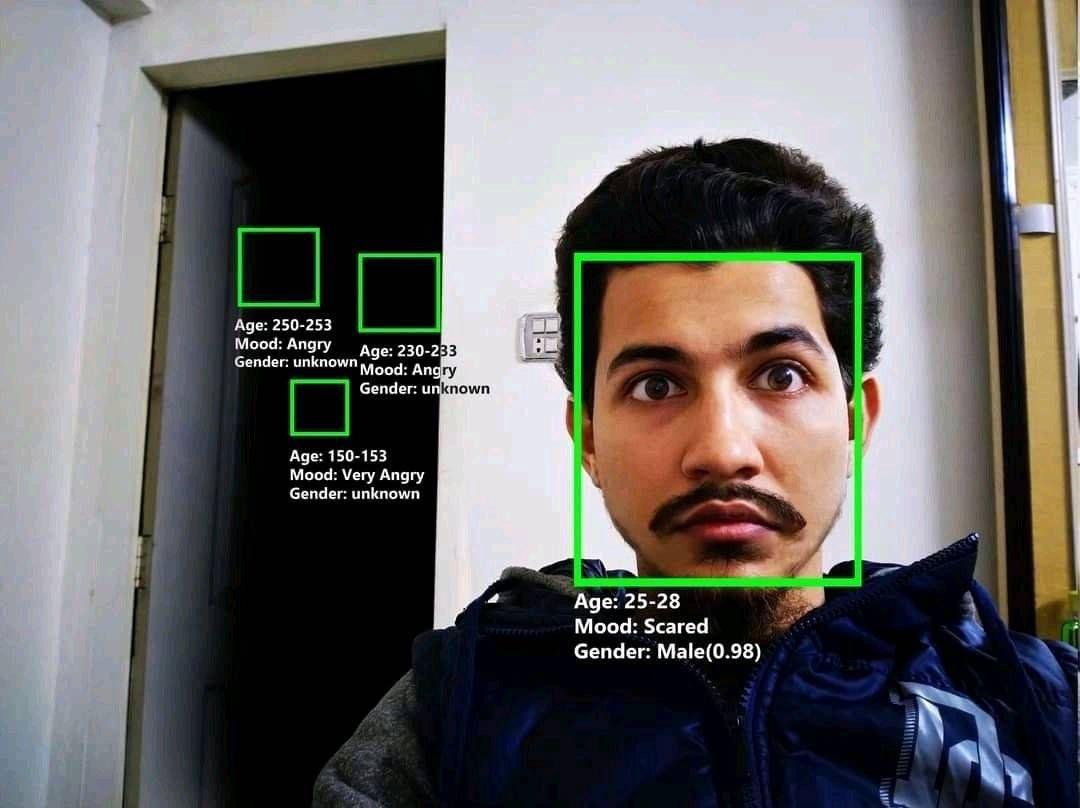

["images/angry.jpg","a man with bounding boxes at the door","a man with angry birds at the door","angry birds","a man","","",1,2,15,512,512,0,1,0.3,0.1,0.45,0.4,False],

|

| 598 |

+

["images/Doom_Slayer.jpg","doom slayer from game doom","master chief from game halo","","","","",1,2,15,512,512,0,1,0.6,0.8,0.7,0.7,False],

|

| 599 |

+

["images/Elon_Musk.webp","Elon Musk in front of a car","Mark Iv iron man suit in front of a car","Mark Iv iron man suit","car","","",1,2,15,512,512,0,1,0.5,0.3,0.6,0.7,False],

|

| 600 |

+

["images/dragon.jpg","a mascot dragon","pixel art, a mascot dragon","","","","",1,2,25,512,512,0,1,0.7,0.7,0.6,0.6,False],

|

| 601 |

+

["images/frieren.jpg","a anime girl with long white hair holding a bottle","a anime girl with long white hair holding a smartphone","smartphone","","","",1,2,15,512,512,0,1,0.7,0.7,0.7,0.7,False],

|

| 602 |

+

["images/sam.png","a man with an openai logo","a man with a twitter logo","a twitter logo","a man","","",1,2,15,512,512,0,0.8,0,0,0.3,0.6,True],

|

| 603 |

+

|

| 604 |

+

|

| 605 |

+

],

|

| 606 |

+

[img, source_prompt, target_prompt,

|

| 607 |

+

local, mutual,

|

| 608 |

+

positive_prompt, negative_prompt,

|

| 609 |

+

guidance_s, guidance_t,

|

| 610 |

+

num_inference_steps,

|

| 611 |

+

width, height, seed, strength,

|

| 612 |

+

cross_replace_steps, self_replace_steps,

|

| 613 |

+

thresh_e, thresh_m, denoise],

|

| 614 |

+

image_out, inference, cache_examples=True, examples_per_page=20)

|

| 615 |

+

# if not is_colab:

|

| 616 |

+

# demo.queue(concurrency_count=1)

|

| 617 |

+

|

| 618 |

+

# demo.launch(debug=False, share=False,server_name="0.0.0.0",server_port = 80)

|

| 619 |

+

demo.launch(debug=False, share=False)

|

| 620 |

+

|
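The `get_params` function above indexes the model's JSON reply directly, so a missing key or an out-of-range value would surface only later in the pipeline. As a minimal sketch (not part of this commit), the reply could be checked before it is used. The helper name `parse_params` and the clamping policy are assumptions made for illustration; the key names come from the `return` statement of `get_params`, and the ranges mirror the sliders in the UI (attention control 0 to 1, inference steps 1 to 50).

```python
import json

# Keys that get_params reads from the model's reply.
REQUIRED_KEYS = (
    "source_prompt", "target_prompt", "target_sub",
    "mutual_sub", "attention_control", "steps",
)


def parse_params(raw: str) -> dict:
    """Parse the JSON reply and clamp numeric values to the UI slider ranges.

    Hypothetical helper: raises ValueError on malformed JSON or missing keys.
    """
    params = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(params, dict):
        raise ValueError("model reply is not a JSON object")

    missing = [key for key in REQUIRED_KEYS if key not in params]
    if missing:
        raise ValueError(f"missing keys in model reply: {missing}")

    # Normalize the text fields to stripped strings.
    for key in ("source_prompt", "target_prompt", "target_sub", "mutual_sub"):
        params[key] = str(params[key]).strip()

    # Clamp to the slider ranges: attention control in [0, 1], steps in [1, 50].
    attention = float(params["attention_control"])
    params["attention_control"] = min(1.0, max(0.0, attention))
    steps = int(round(float(params["steps"])))
    params["steps"] = min(50, max(1, steps))
    return params
```

With a helper like this, `get_params` could return the validated fields instead of indexing the raw dictionary, and a failed check could be reported to the user rather than crashing the run.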

images/214000000000.jpg

ADDED

|

images/311000000002.jpg

ADDED

|

images/Doom_Slayer.jpg

ADDED

|

images/Elon_Musk.webp

ADDED

|

images/InfEdit.jpg

ADDED

|

Git LFS Details

|

images/angry.jpg

ADDED

|

images/bear.jpg

ADDED

|

images/computer.png

ADDED

|

images/corgi.jpg

ADDED

|

images/dragon.jpg

ADDED

|

images/droplet.png

ADDED

|

images/frieren.jpg

ADDED

|

images/genshin.png

ADDED

|

images/groundhog.png

ADDED

|

images/james.jpg

ADDED

|

images/miku.png

ADDED

|

images/moyu.png

ADDED

|

images/muffin.png

ADDED

|

images/osu.jfif

ADDED

|

Binary file (4.38 kB).

|

|

|

images/sam.png

ADDED

|

Git LFS Details

|

images/summer.jpg

ADDED

|

nsfw.png

ADDED

|

pipeline_ead.py

ADDED

|

@@ -0,0 +1,707 @@
