
+

+

+**News**: We released the technical report on [ArXiv](https://arxiv.org/abs/1906.07155).

+

+Documentation: https://mmdetection.readthedocs.io/

+

+## Introduction

+

+English | [简体中文](README_zh-CN.md)

+

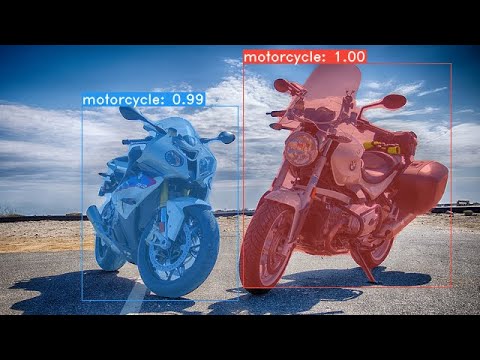

+MMDetection is an open source object detection toolbox based on PyTorch. It is

+a part of the [OpenMMLab](https://openmmlab.com/) project.

+

+The master branch works with **PyTorch 1.3+**.

+The old v1.x branch works with PyTorch 1.1 to 1.4, but v2.0 is strongly recommended for its higher speed, better performance, cleaner design and friendlier usage.

+

+

+

+### Major features

+

+- **Modular Design**

+

+ We decompose the detection framework into different components, so a customized object detection framework can easily be constructed by combining different modules.

+

+- **Support of multiple frameworks out of the box**

+

+ The toolbox directly supports popular and contemporary detection frameworks, *e.g.* Faster R-CNN, Mask R-CNN, RetinaNet, etc.

+

+- **High efficiency**

+

+ All basic bbox and mask operations run on GPUs. The training speed is faster than or comparable to other codebases, including [Detectron2](https://github.com/facebookresearch/detectron2), [maskrcnn-benchmark](https://github.com/facebookresearch/maskrcnn-benchmark) and [SimpleDet](https://github.com/TuSimple/simpledet).

+

+- **State of the art**

+

+ The toolbox stems from the codebase developed by the *MMDet* team, who won the [COCO Detection Challenge](http://cocodataset.org/#detection-leaderboard) in 2018, and we keep pushing it forward.
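The modular design means each component is picked by name from a config dict, in the same `dict(type=...)` style that MMDetection configs use. The following is a minimal, self-contained sketch of that idea; `Registry`, `ToyResNet`, `ToyRPNHead` and `ToyDetector` are hypothetical names, and MMDetection's actual registry (provided by mmcv) has a richer API than this.

```python
# A toy registry that builds objects from config dicts, illustrating how
# components named by a 'type' key can be swapped without code changes.
# Hypothetical sketch: not the mmcv/MMDetection API.


class Registry:
    """Maps string names to classes so configs can refer to them by name."""

    def __init__(self, name):
        self.name = name
        self._modules = {}

    def register(self, cls):
        # Used as a class decorator: @BACKBONES.register
        self._modules[cls.__name__] = cls
        return cls

    def build(self, cfg):
        # Copy the config so the caller's dict is not mutated, then use the
        # 'type' entry to pick the class and pass the rest as kwargs.
        args = dict(cfg)
        cls = self._modules[args.pop('type')]
        return cls(**args)


BACKBONES = Registry('backbone')
HEADS = Registry('head')


@BACKBONES.register
class ToyResNet:
    def __init__(self, depth):
        self.depth = depth


@HEADS.register
class ToyRPNHead:
    def __init__(self, in_channels):
        self.in_channels = in_channels


class ToyDetector:
    """Assembles a detector from independently configurable parts."""

    def __init__(self, backbone, head):
        self.backbone = BACKBONES.build(backbone)
        self.head = HEADS.build(head)


model = ToyDetector(
    backbone=dict(type='ToyResNet', depth=50),
    head=dict(type='ToyRPNHead', in_channels=256))
print(type(model.backbone).__name__, model.backbone.depth)  # ToyResNet 50
```

Swapping the backbone then only requires registering another class and changing the `type` string in the config.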

+

+Apart from MMDetection, we also released [mmcv](https://github.com/open-mmlab/mmcv), a library for computer vision research on which this toolbox heavily depends.

+

+## License

+

+This project is released under the [Apache 2.0 license](LICENSE).

+

+## Changelog

+

+v2.11.0 was released on 2021-04-01.

+Please refer to [changelog.md](docs/changelog.md) for details and release history.

+A comparison between v1.x and v2.0 codebases can be found in [compatibility.md](docs/compatibility.md).

+

+## Benchmark and model zoo

+

+Results and models are available in the [model zoo](docs/model_zoo.md).

+

+Supported backbones:

+

+- [x] ResNet (CVPR'2016)

+- [x] ResNeXt (CVPR'2017)

+- [x] VGG (ICLR'2015)

+- [x] HRNet (CVPR'2019)

+- [x] RegNet (CVPR'2020)

+- [x] Res2Net (TPAMI'2020)

+- [x] ResNeSt (ArXiv'2020)

+

+Supported methods:

+

+- [x] [RPN (NeurIPS'2015)](configs/rpn)

+- [x] [Fast R-CNN (ICCV'2015)](configs/fast_rcnn)

+- [x] [Faster R-CNN (NeurIPS'2015)](configs/faster_rcnn)

+- [x] [Mask R-CNN (ICCV'2017)](configs/mask_rcnn)

+- [x] [Cascade R-CNN (CVPR'2018)](configs/cascade_rcnn)

+- [x] [Cascade Mask R-CNN (CVPR'2018)](configs/cascade_rcnn)

+- [x] [SSD (ECCV'2016)](configs/ssd)

+- [x] [RetinaNet (ICCV'2017)](configs/retinanet)

+- [x] [GHM (AAAI'2019)](configs/ghm)

+- [x] [Mask Scoring R-CNN (CVPR'2019)](configs/ms_rcnn)

+- [x] [Double-Head R-CNN (CVPR'2020)](configs/double_heads)

+- [x] [Hybrid Task Cascade (CVPR'2019)](configs/htc)

+- [x] [Libra R-CNN (CVPR'2019)](configs/libra_rcnn)

+- [x] [Guided Anchoring (CVPR'2019)](configs/guided_anchoring)

+- [x] [FCOS (ICCV'2019)](configs/fcos)

+- [x] [RepPoints (ICCV'2019)](configs/reppoints)

+- [x] [Foveabox (TIP'2020)](configs/foveabox)

+- [x] [FreeAnchor (NeurIPS'2019)](configs/free_anchor)

+- [x] [NAS-FPN (CVPR'2019)](configs/nas_fpn)

+- [x] [ATSS (CVPR'2020)](configs/atss)

+- [x] [FSAF (CVPR'2019)](configs/fsaf)

+- [x] [PAFPN (CVPR'2018)](configs/pafpn)

+- [x] [Dynamic R-CNN (ECCV'2020)](configs/dynamic_rcnn)

+- [x] [PointRend (CVPR'2020)](configs/point_rend)

+- [x] [CARAFE (ICCV'2019)](configs/carafe/README.md)

+- [x] [DCNv2 (CVPR'2019)](configs/dcn/README.md)

+- [x] [Group Normalization (ECCV'2018)](configs/gn/README.md)

+- [x] [Weight Standardization (ArXiv'2019)](configs/gn+ws/README.md)

+- [x] [OHEM (CVPR'2016)](configs/faster_rcnn/faster_rcnn_r50_fpn_ohem_1x_coco.py)

+- [x] [Soft-NMS (ICCV'2017)](configs/faster_rcnn/faster_rcnn_r50_fpn_soft_nms_1x_coco.py)

+- [x] [Generalized Attention (ICCV'2019)](configs/empirical_attention/README.md)

+- [x] [GCNet (ICCVW'2019)](configs/gcnet/README.md)

+- [x] [Mixed Precision (FP16) Training (ArXiv'2017)](configs/fp16/README.md)

+- [x] [InstaBoost (ICCV'2019)](configs/instaboost/README.md)

+- [x] [GRoIE (ICPR'2020)](configs/groie/README.md)

+- [x] [DetectoRS (ArXiv'2020)](configs/detectors/README.md)

+- [x] [Generalized Focal Loss (NeurIPS'2020)](configs/gfl/README.md)

+- [x] [CornerNet (ECCV'2018)](configs/cornernet/README.md)

+- [x] [Side-Aware Boundary Localization (ECCV'2020)](configs/sabl/README.md)

+- [x] [YOLOv3 (ArXiv'2018)](configs/yolo/README.md)

+- [x] [PAA (ECCV'2020)](configs/paa/README.md)

+- [x] [YOLACT (ICCV'2019)](configs/yolact/README.md)

+- [x] [CentripetalNet (CVPR'2020)](configs/centripetalnet/README.md)

+- [x] [VFNet (ArXiv'2020)](configs/vfnet/README.md)

+- [x] [DETR (ECCV'2020)](configs/detr/README.md)

+- [x] [Deformable DETR (ICLR'2021)](configs/deformable_detr/README.md)

+- [x] [CascadeRPN (NeurIPS'2019)](configs/cascade_rpn/README.md)

+- [x] [SCNet (AAAI'2021)](configs/scnet/README.md)

+- [x] [AutoAssign (ArXiv'2020)](configs/autoassign/README.md)

+- [x] [YOLOF (CVPR'2021)](configs/yolof/README.md)

+

+

+Some other methods are also supported in [projects using MMDetection](./docs/projects.md).

+

+## Installation

+

+Please refer to [get_started.md](docs/get_started.md) for installation.

+

+## Getting Started

+

+Please see [get_started.md](docs/get_started.md) for the basic usage of MMDetection.

+We provide a [Colab tutorial](demo/MMDet_Tutorial.ipynb) and, for beginners, full guides for a quick run [with an existing dataset](docs/1_exist_data_model.md) and [with a new dataset](docs/2_new_data_model.md).

+There are also tutorials for [finetuning models](docs/tutorials/finetune.md), [adding a new dataset](docs/tutorials/new_dataset.md), [designing data pipelines](docs/tutorials/data_pipeline.md), [customizing models](docs/tutorials/customize_models.md), [customizing runtime settings](docs/tutorials/customize_runtime.md) and [useful tools](docs/useful_tools.md).

+

+Please refer to [FAQ](docs/faq.md) for frequently asked questions.

+

+## Contributing

+

+We appreciate all contributions to improve MMDetection. Please refer to [CONTRIBUTING.md](.github/CONTRIBUTING.md) for the contribution guidelines.

+

+## Acknowledgement

+

+MMDetection is an open source project contributed to by researchers and engineers from various colleges and companies. We appreciate all the contributors who implement their methods or add new features, as well as the users who give valuable feedback.

+We hope the toolbox and benchmark can serve the growing research community by providing a flexible toolkit for reimplementing existing methods and developing new detectors.

+

+## Citation

+

+If you use this toolbox or benchmark in your research, please cite this project.

+

+```

+@article{mmdetection,

+ title = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},

+ author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

+ Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

+ Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

+ Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

+ Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

+ and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

+ journal= {arXiv preprint arXiv:1906.07155},

+ year={2019}

+}

+```

+

+## Projects in OpenMMLab

+

+- [MMCV](https://github.com/open-mmlab/mmcv): OpenMMLab foundational library for computer vision.

+- [MMClassification](https://github.com/open-mmlab/mmclassification): OpenMMLab image classification toolbox and benchmark.

+- [MMDetection](https://github.com/open-mmlab/mmdetection): OpenMMLab detection toolbox and benchmark.

+- [MMDetection3D](https://github.com/open-mmlab/mmdetection3d): OpenMMLab's next-generation platform for general 3D object detection.

+- [MMSegmentation](https://github.com/open-mmlab/mmsegmentation): OpenMMLab semantic segmentation toolbox and benchmark.

+- [MMAction2](https://github.com/open-mmlab/mmaction2): OpenMMLab's next-generation action understanding toolbox and benchmark.

+- [MMTracking](https://github.com/open-mmlab/mmtracking): OpenMMLab video perception toolbox and benchmark.

+- [MMPose](https://github.com/open-mmlab/mmpose): OpenMMLab pose estimation toolbox and benchmark.

+- [MMEditing](https://github.com/open-mmlab/mmediting): OpenMMLab image and video editing toolbox.

+- [MMOCR](https://github.com/open-mmlab/mmocr): A Comprehensive Toolbox for Text Detection, Recognition and Understanding.

+- [MMGeneration](https://github.com/open-mmlab/mmgeneration): OpenMMLab image and video generative models toolbox.

diff --git a/src/ndl_layout/mmdetection/README_zh-CN.md b/src/ndl_layout/mmdetection/README_zh-CN.md

new file mode 100644

index 0000000000000000000000000000000000000000..ba72107cc5a52f20794a3842f19d3b58edbf197e

--- /dev/null

+++ b/src/ndl_layout/mmdetection/README_zh-CN.md

@@ -0,0 +1,190 @@


+

+

+**News**: We released the technical report on [ArXiv](https://arxiv.org/abs/1906.07155).

+

+Documentation: https://mmdetection.readthedocs.io/

+

+## Introduction

+

+[English](README.md) | 简体中文

+

+MMDetection is an open source object detection toolbox based on PyTorch. It is part of the [OpenMMLab](https://openmmlab.com/) project.

+

+The master branch currently supports PyTorch 1.3 and above.

+

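+The legacy v1.x versions support PyTorch 1.1 to 1.4, but we strongly recommend the new 2.x versions, which are faster, perform better, have a more elegant code design and are friendlier to use.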

+

+

+

+### Major features

+

+- **Modular design**

+

+ MMDetection decouples the detection framework into different modular components; by combining them, users can easily build customized detection models.

+

+- **Rich out-of-the-box algorithms and models**

+

+ MMDetection supports many mainstream and recent detection algorithms, e.g. Faster R-CNN, Mask R-CNN, RetinaNet, etc.

+

+- **High speed**

+

+ All basic bbox and mask operations have GPU implementations, and the training speed is faster than or comparable to other codebases, including [Detectron2](https://github.com/facebookresearch/detectron2), [maskrcnn-benchmark](https://github.com/facebookresearch/maskrcnn-benchmark) and [SimpleDet](https://github.com/TuSimple/simpledet).

+

+- **High performance**

+

+ MMDetection originates from the codebase developed by the *MMDet* team, which won the COCO 2018 object detection challenge, and we have kept improving it since then.

+

+Besides MMDetection, we have also open-sourced [MMCV](https://github.com/open-mmlab/mmcv), a foundational library for computer vision and the main dependency of MMDetection.

+

+## License

+

+This project is released under the [Apache 2.0 license](LICENSE).

+

+## Changelog

+

+The latest monthly release, v2.11.0, was released on 2021-04-01.

+For more details on version updates and release history, please read the [changelog](docs/changelog.md).

+The [compatibility notes](docs/compatibility.md) provide a detailed comparison between the 1.x and 2.0 versions.

+

+## Benchmark and model zoo

+

+Results and models are available in the [model zoo](docs/model_zoo.md).

+

+Supported backbones:

+

+- [x] ResNet (CVPR'2016)

+- [x] ResNeXt (CVPR'2017)

+- [x] VGG (ICLR'2015)

+- [x] HRNet (CVPR'2019)

+- [x] RegNet (CVPR'2020)

+- [x] Res2Net (TPAMI'2020)

+- [x] ResNeSt (ArXiv'2020)

+

+Supported methods:

+

+- [x] [RPN (NeurIPS'2015)](configs/rpn)

+- [x] [Fast R-CNN (ICCV'2015)](configs/fast_rcnn)

+- [x] [Faster R-CNN (NeurIPS'2015)](configs/faster_rcnn)

+- [x] [Mask R-CNN (ICCV'2017)](configs/mask_rcnn)

+- [x] [Cascade R-CNN (CVPR'2018)](configs/cascade_rcnn)

+- [x] [Cascade Mask R-CNN (CVPR'2018)](configs/cascade_rcnn)

+- [x] [SSD (ECCV'2016)](configs/ssd)

+- [x] [RetinaNet (ICCV'2017)](configs/retinanet)

+- [x] [GHM (AAAI'2019)](configs/ghm)

+- [x] [Mask Scoring R-CNN (CVPR'2019)](configs/ms_rcnn)

+- [x] [Double-Head R-CNN (CVPR'2020)](configs/double_heads)

+- [x] [Hybrid Task Cascade (CVPR'2019)](configs/htc)

+- [x] [Libra R-CNN (CVPR'2019)](configs/libra_rcnn)

+- [x] [Guided Anchoring (CVPR'2019)](configs/guided_anchoring)

+- [x] [FCOS (ICCV'2019)](configs/fcos)

+- [x] [RepPoints (ICCV'2019)](configs/reppoints)

+- [x] [Foveabox (TIP'2020)](configs/foveabox)

+- [x] [FreeAnchor (NeurIPS'2019)](configs/free_anchor)

+- [x] [NAS-FPN (CVPR'2019)](configs/nas_fpn)

+- [x] [ATSS (CVPR'2020)](configs/atss)

+- [x] [FSAF (CVPR'2019)](configs/fsaf)

+- [x] [PAFPN (CVPR'2018)](configs/pafpn)

+- [x] [Dynamic R-CNN (ECCV'2020)](configs/dynamic_rcnn)

+- [x] [PointRend (CVPR'2020)](configs/point_rend)

+- [x] [CARAFE (ICCV'2019)](configs/carafe/README.md)

+- [x] [DCNv2 (CVPR'2019)](configs/dcn/README.md)

+- [x] [Group Normalization (ECCV'2018)](configs/gn/README.md)

+- [x] [Weight Standardization (ArXiv'2019)](configs/gn+ws/README.md)

+- [x] [OHEM (CVPR'2016)](configs/faster_rcnn/faster_rcnn_r50_fpn_ohem_1x_coco.py)

+- [x] [Soft-NMS (ICCV'2017)](configs/faster_rcnn/faster_rcnn_r50_fpn_soft_nms_1x_coco.py)

+- [x] [Generalized Attention (ICCV'2019)](configs/empirical_attention/README.md)

+- [x] [GCNet (ICCVW'2019)](configs/gcnet/README.md)

+- [x] [Mixed Precision (FP16) Training (ArXiv'2017)](configs/fp16/README.md)

+- [x] [InstaBoost (ICCV'2019)](configs/instaboost/README.md)

+- [x] [GRoIE (ICPR'2020)](configs/groie/README.md)

+- [x] [DetectoRS (ArXiv'2020)](configs/detectors/README.md)

+- [x] [Generalized Focal Loss (NeurIPS'2020)](configs/gfl/README.md)

+- [x] [CornerNet (ECCV'2018)](configs/cornernet/README.md)

+- [x] [Side-Aware Boundary Localization (ECCV'2020)](configs/sabl/README.md)

+- [x] [YOLOv3 (ArXiv'2018)](configs/yolo/README.md)

+- [x] [PAA (ECCV'2020)](configs/paa/README.md)

+- [x] [YOLACT (ICCV'2019)](configs/yolact/README.md)

+- [x] [CentripetalNet (CVPR'2020)](configs/centripetalnet/README.md)

+- [x] [VFNet (ArXiv'2020)](configs/vfnet/README.md)

+- [x] [DETR (ECCV'2020)](configs/detr/README.md)

+- [x] [Deformable DETR (ICLR'2021)](configs/deformable_detr/README.md)

+- [x] [CascadeRPN (NeurIPS'2019)](configs/cascade_rpn/README.md)

+- [x] [SCNet (AAAI'2021)](configs/scnet/README.md)

+- [x] [AutoAssign (ArXiv'2020)](configs/autoassign/README.md)

+- [x] [YOLOF (CVPR'2021)](configs/yolof/README.md)

+

+Some other supported methods are listed in [projects based on MMDetection](./docs/projects.md).

+

+## Installation

+

+Please refer to the [getting started guide](docs/get_started.md) for installation.

+

+## Getting Started

+

+Please refer to the [getting started guide](docs/get_started.md) to learn the basic usage of MMDetection.

+We provide a [Colab tutorial](demo/MMDet_Tutorial.ipynb) and, for beginners, complete guides for running with an [existing dataset](docs/1_exist_data_model.md) and with a [new dataset](docs/2_new_data_model.md).

+

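+We also provide some advanced tutorials, covering [finetuning models](docs/tutorials/finetune.md), [adding support for new datasets](docs/tutorials/new_dataset.md), [designing new data pre-processing pipelines](docs/tutorials/data_pipeline.md), [adding custom models](docs/tutorials/customize_models.md), [adding custom runtime settings](docs/tutorials/customize_runtime.md) and [useful tools and scripts](docs/useful_tools.md).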

+

+If you run into problems, please refer to the [FAQ page](docs/faq.md).

+

+## Contributing

+

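+We appreciate all the contributors for their efforts to improve MMDetection. Please refer to the [contribution guidelines](.github/CONTRIBUTING.md) for guidance on contributing to the project.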

+

+## Acknowledgement

+

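+MMDetection is an open source project contributed to by researchers and engineers from various universities and companies. We thank all the contributors who reimplement algorithms and add new features, as well as the users who give valuable feedback. We hope this toolbox and benchmark can provide the community with a flexible toolkit for reimplementing existing algorithms and developing new models, and so keep contributing to the open source community.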

+

+## Citation

+

+If you use the code or benchmark of this project in your research, please cite MMDetection using the following BibTeX entry.

+

+```

+@article{mmdetection,

+ title = {{MMDetection}: Open MMLab Detection Toolbox and Benchmark},

+ author = {Chen, Kai and Wang, Jiaqi and Pang, Jiangmiao and Cao, Yuhang and

+ Xiong, Yu and Li, Xiaoxiao and Sun, Shuyang and Feng, Wansen and

+ Liu, Ziwei and Xu, Jiarui and Zhang, Zheng and Cheng, Dazhi and

+ Zhu, Chenchen and Cheng, Tianheng and Zhao, Qijie and Li, Buyu and

+ Lu, Xin and Zhu, Rui and Wu, Yue and Dai, Jifeng and Wang, Jingdong

+ and Shi, Jianping and Ouyang, Wanli and Loy, Chen Change and Lin, Dahua},

+ journal= {arXiv preprint arXiv:1906.07155},

+ year={2019}

+}

+```

+

+## Other projects in OpenMMLab

+

+- [MMCV](https://github.com/open-mmlab/mmcv): OpenMMLab foundational library for computer vision

+- [MMClassification](https://github.com/open-mmlab/mmclassification): OpenMMLab image classification toolbox

+- [MMDetection](https://github.com/open-mmlab/mmdetection): OpenMMLab object detection toolbox

+- [MMDetection3D](https://github.com/open-mmlab/mmdetection3d): OpenMMLab next-generation platform for general 3D object detection

+- [MMSegmentation](https://github.com/open-mmlab/mmsegmentation): OpenMMLab semantic segmentation toolbox

+- [MMAction2](https://github.com/open-mmlab/mmaction2): OpenMMLab next-generation video understanding toolbox

+- [MMTracking](https://github.com/open-mmlab/mmtracking): OpenMMLab unified video object perception platform

+- [MMPose](https://github.com/open-mmlab/mmpose): OpenMMLab pose estimation toolbox

+- [MMEditing](https://github.com/open-mmlab/mmediting): OpenMMLab image and video editing toolbox

+- [MMOCR](https://github.com/open-mmlab/mmocr): OpenMMLab full-pipeline toolkit for text detection, recognition and understanding

+- [MMGeneration](https://github.com/open-mmlab/mmgeneration): OpenMMLab image and video generative models toolbox

+

+## Join the OpenMMLab community

+

+Scan the QR codes below to follow the OpenMMLab team's [official Zhihu account](https://www.zhihu.com/people/openmmlab) and join the OpenMMLab team's [official QQ discussion group](https://jq.qq.com/?_wv=1027&k=aCvMxdr3).

+


+

+

+

+

+

+In the OpenMMLab community, we will

+

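+- 📢 share cutting-edge core technologies of AI frameworks

+- 💻 explain the source code of commonly used PyTorch modules

+- 📰 publish news related to OpenMMLab

+- 🚀 introduce cutting-edge algorithms developed by OpenMMLab

+- 🏃 provide faster answers to questions and responses to feedback

+- 🔥 offer a platform for in-depth exchange with developers from all industries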

+

+The community is packed with practical content 📘 and waiting for you 💗. OpenMMLab looks forward to having you join 👬

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_detection.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_detection.py

new file mode 100644

index 0000000000000000000000000000000000000000..e341b59d6fa6265c2d17dc32aae2341871670a3d

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_detection.py

@@ -0,0 +1,56 @@

+# dataset settings

+dataset_type = 'CityscapesDataset'

+data_root = 'data/cityscapes/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(

+ type='Resize', img_scale=[(2048, 800), (2048, 1024)], keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(2048, 1024),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=1,

+ workers_per_gpu=2,

+ train=dict(

+ type='RepeatDataset',

+ times=8,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_train.json',

+ img_prefix=data_root + 'leftImg8bit/train/',

+ pipeline=train_pipeline)),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_val.json',

+ img_prefix=data_root + 'leftImg8bit/val/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_test.json',

+ img_prefix=data_root + 'leftImg8bit/test/',

+ pipeline=test_pipeline))

+evaluation = dict(interval=1, metric='bbox')
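In the Cityscapes configs, `RepeatDataset` with `times=8` wraps the training set so that one epoch of the schedule walks the images eight times. A minimal sketch of that index mapping, assuming the wrapper reports `times * len(dataset)` as its length and wraps indices back with a modulo; `ToyRepeatDataset` is a hypothetical stand-in, not MMDetection's class.

```python
class ToyRepeatDataset:
    """Presents a dataset `times` times back to back (hypothetical sketch)."""

    def __init__(self, dataset, times):
        self.dataset = dataset
        self.times = times
        self._ori_len = len(dataset)

    def __len__(self):
        # The wrapper looks `times` times longer than the original dataset.
        return self.times * self._ori_len

    def __getitem__(self, idx):
        # Wrap the global index back into the original dataset's range.
        return self.dataset[idx % self._ori_len]


images = ['img_a', 'img_b', 'img_c']
repeated = ToyRepeatDataset(images, times=8)
print(len(repeated))  # 24
print(repeated[4])    # img_b
```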

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_instance.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_instance.py

new file mode 100644

index 0000000000000000000000000000000000000000..4e3c34e2c85b4fc2ba854e1b409af70dc2c34e94

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/cityscapes_instance.py

@@ -0,0 +1,56 @@

+# dataset settings

+dataset_type = 'CityscapesDataset'

+data_root = 'data/cityscapes/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

+ dict(

+ type='Resize', img_scale=[(2048, 800), (2048, 1024)], keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(2048, 1024),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=1,

+ workers_per_gpu=2,

+ train=dict(

+ type='RepeatDataset',

+ times=8,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_train.json',

+ img_prefix=data_root + 'leftImg8bit/train/',

+ pipeline=train_pipeline)),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_val.json',

+ img_prefix=data_root + 'leftImg8bit/val/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/instancesonly_filtered_gtFine_test.json',

+ img_prefix=data_root + 'leftImg8bit/test/',

+ pipeline=test_pipeline))

+evaluation = dict(metric=['bbox', 'segm'])
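The Cityscapes training `Resize` receives two scales, `[(2048, 800), (2048, 1024)]`, which turns on multi-scale training. The sketch below assumes the transform's default `'range'` mode, in which the long and short edges are each drawn uniformly between the bounds given by the two tuples; with these values the long edge is always 2048 and the short edge falls between 800 and 1024. `sample_range_scale` is a hypothetical helper, not MMDetection's code.

```python
import random


def sample_range_scale(img_scales, rng=random):
    """Sample a (long_edge, short_edge) target from two scale tuples.

    Assumes 'range'-style multi-scale training: the long and short edges are
    each drawn uniformly (both ends inclusive) between the tuples' bounds.
    """
    assert len(img_scales) == 2
    longs = [max(s) for s in img_scales]
    shorts = [min(s) for s in img_scales]
    long_edge = rng.randint(min(longs), max(longs))
    short_edge = rng.randint(min(shorts), max(shorts))
    return long_edge, short_edge


rng = random.Random(0)
samples = [sample_range_scale([(2048, 800), (2048, 1024)], rng)
           for _ in range(1000)]
print(all(s[0] == 2048 and 800 <= s[1] <= 1024 for s in samples))  # True
```

Each sampled pair is then used as a `keep_ratio=True` target, so the image is scaled to fit within it rather than stretched to it.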

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_detection.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_detection.py

new file mode 100644

index 0000000000000000000000000000000000000000..149f590bb45fa65c29fd4c005e4a237d7dd2e117

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_detection.py

@@ -0,0 +1,49 @@

+# dataset settings

+dataset_type = 'CocoDataset'

+data_root = 'data/coco/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1333, 800),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_train2017.json',

+ img_prefix=data_root + 'train2017/',

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline))

+evaluation = dict(interval=1, metric='bbox')
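`img_norm_cfg` holds the ImageNet per-channel mean and standard deviation scaled to the 0-255 range (for example 0.485 × 255 = 123.675), listed in RGB order. Because images are loaded in BGR order by default (the OpenCV convention), `to_rgb=True` flips the channels before normalizing. A minimal NumPy sketch of that arithmetic, assuming `Normalize` computes `(img - mean) / std` after the channel flip; `normalize` is a hypothetical helper, not mmcv's function.

```python
import numpy as np

# Values copied from img_norm_cfg in the configs above (RGB order).
MEAN = np.array([123.675, 116.28, 103.53], dtype=np.float32)
STD = np.array([58.395, 57.12, 57.375], dtype=np.float32)


def normalize(img_bgr, mean=MEAN, std=STD, to_rgb=True):
    """Normalize an HxWx3 image whose channels are in BGR order."""
    img = img_bgr.astype(np.float32)
    if to_rgb:
        img = img[..., ::-1]  # BGR -> RGB so channels line up with mean/std
    return (img - mean) / std


# One BGR pixel equal to the dataset mean normalizes to zero.
pixel = np.array([[[103.53, 116.28, 123.675]]])
print(normalize(pixel))  # [[[0. 0. 0.]]]
```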

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance.py

new file mode 100644

index 0000000000000000000000000000000000000000..9901a858414465d19d8ec6ced316b460166176b4

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance.py

@@ -0,0 +1,49 @@

+# dataset settings

+dataset_type = 'CocoDataset'

+data_root = 'data/coco/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

+ dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1333, 800),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_train2017.json',

+ img_prefix=data_root + 'train2017/',

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline))

+evaluation = dict(metric=['bbox', 'segm'])
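With `img_scale=(1333, 800)` and `keep_ratio=True`, `Resize` scales an image as large as possible while keeping its long edge at most 1333 and its short edge at most 800; `Pad` with `size_divisor=32` then rounds each side up to a multiple of 32. The sketch below works this through for a 640 × 480 image. The `+ 0.5` rounding is modeled on mmcv's rescaling helpers, but treat it as an assumption; `rescale_size` and `pad_size` are hypothetical helpers.

```python
import math


def rescale_size(w, h, scale=(1333, 800)):
    """Target (w, h, factor) for keep_ratio=True resizing.

    The long edge is capped at max(scale) and the short edge at min(scale);
    the smaller of the two ratios wins so neither cap is exceeded.
    """
    long_cap, short_cap = max(scale), min(scale)
    factor = min(long_cap / max(w, h), short_cap / min(w, h))
    return int(w * factor + 0.5), int(h * factor + 0.5), factor


def pad_size(w, h, divisor=32):
    """Round each side up to the next multiple of `divisor`."""
    return math.ceil(w / divisor) * divisor, math.ceil(h / divisor) * divisor


new_w, new_h, _ = rescale_size(640, 480)
print(new_w, new_h)            # 1067 800
print(pad_size(new_w, new_h))  # (1088, 800)
```

Here the short-edge cap binds (800 / 480 < 1333 / 640), so the short edge lands exactly on 800 and the long edge stays under 1333.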

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance_semantic.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance_semantic.py

new file mode 100644

index 0000000000000000000000000000000000000000..6c8bf07b278f615e7ff5e67490d7a92068574b5b

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/coco_instance_semantic.py

@@ -0,0 +1,54 @@

+# dataset settings

+dataset_type = 'CocoDataset'

+data_root = 'data/coco/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='LoadAnnotations', with_bbox=True, with_mask=True, with_seg=True),

+ dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='SegRescale', scale_factor=1 / 8),

+ dict(type='DefaultFormatBundle'),

+ dict(

+ type='Collect',

+ keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks', 'gt_semantic_seg']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1333, 800),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_train2017.json',

+ img_prefix=data_root + 'train2017/',

+ seg_prefix=data_root + 'stuffthingmaps/train2017/',

+ pipeline=train_pipeline),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/instances_val2017.json',

+ img_prefix=data_root + 'val2017/',

+ pipeline=test_pipeline))

+evaluation = dict(metric=['bbox', 'segm'])
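`coco_instance_semantic.py` loads a semantic label map (`with_seg=True`) and applies `SegRescale` with `scale_factor=1 / 8` after `Pad`, shrinking the map to one eighth of the padded size. Label maps need nearest-neighbour sampling so class ids are never blended. This sketch assumes integer-factor nearest sampling is equivalent to strided slicing when both sides are multiples of 8, which should hold here because `Pad(size_divisor=32)` runs first; `seg_rescale_nearest` is a hypothetical helper, not MMDetection's transform.

```python
import numpy as np


def seg_rescale_nearest(seg, factor=8):
    """Downsample a label map by an integer factor, nearest-neighbour style.

    Strided slicing keeps every `factor`-th pixel, so class ids are copied
    rather than interpolated. Assumes both sides are multiples of `factor`.
    """
    h, w = seg.shape
    assert h % factor == 0 and w % factor == 0
    return seg[::factor, ::factor]


# A random label map with the padded size from the 640x480 resize example.
seg = np.random.default_rng(0).integers(0, 10, size=(800, 1088), dtype=np.uint8)
small = seg_rescale_nearest(seg)
print(small.shape)  # (100, 136)
```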

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/deepfashion.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/deepfashion.py

new file mode 100644

index 0000000000000000000000000000000000000000..308b4b2ac4d9e3516ba4a57e9d3b6af91e97f24b

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/deepfashion.py

@@ -0,0 +1,53 @@

+# dataset settings

+dataset_type = 'DeepFashionDataset'

+data_root = 'data/DeepFashion/In-shop/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True, with_mask=True),

+ dict(type='Resize', img_scale=(750, 1101), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels', 'gt_masks']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(750, 1101),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=1,

+ train=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/DeepFashion_segmentation_query.json',

+ img_prefix=data_root + 'Img/',

+ pipeline=train_pipeline,

+ data_root=data_root),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/DeepFashion_segmentation_query.json',

+ img_prefix=data_root + 'Img/',

+ pipeline=test_pipeline,

+ data_root=data_root),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root +

+ 'annotations/DeepFashion_segmentation_gallery.json',

+ img_prefix=data_root + 'Img/',

+ pipeline=test_pipeline,

+ data_root=data_root))

+evaluation = dict(interval=5, metric=['bbox', 'segm'])

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v0.5_instance.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v0.5_instance.py

new file mode 100644

index 0000000000000000000000000000000000000000..207e0053c24d73e05e78c764d05e65c102675320

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v0.5_instance.py

@@ -0,0 +1,24 @@

+# dataset settings

+_base_ = 'coco_instance.py'

+dataset_type = 'LVISV05Dataset'

+data_root = 'data/lvis_v0.5/'

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ _delete_=True,

+ type='ClassBalancedDataset',

+ oversample_thr=1e-3,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v0.5_train.json',

+ img_prefix=data_root + 'train2017/')),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v0.5_val.json',

+ img_prefix=data_root + 'val2017/'),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v0.5_val.json',

+ img_prefix=data_root + 'val2017/'))

+evaluation = dict(metric=['bbox', 'segm'])
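`lvis_v0.5_instance.py` inherits everything from `coco_instance.py` through `_base_` and overrides only what differs. The `_delete_=True` flag matters: without it, the COCO `train` entries (such as `ann_file` and `pipeline`) would be merged into the new `ClassBalancedDataset` dict instead of being discarded. The sketch below models those merge semantics under the assumption that they follow mmcv's `Config` inheritance rules; `merge_config` is a hypothetical helper, not mmcv's API.

```python
import copy


def merge_config(child, base):
    """Recursively merge `child` into a copy of `base` (hypothetical helper).

    Nested dicts are merged key by key, unless the child's dict sets
    `_delete_=True`, in which case it replaces the base entry wholesale.
    """
    merged = copy.deepcopy(base)
    for key, value in child.items():
        if isinstance(value, dict):
            value = dict(value)  # shallow copy so the caller's dict keeps its keys
            delete = value.pop('_delete_', False)
            if not delete and isinstance(merged.get(key), dict):
                merged[key] = merge_config(value, merged[key])
                continue
        merged[key] = value
    return merged


# Toy stand-ins for coco_instance.py (base) and lvis_v0.5_instance.py (child).
base = dict(data=dict(
    samples_per_gpu=2,
    train=dict(type='CocoDataset', ann_file='coco_train.json',
               pipeline=['load', 'resize'])))
child = dict(data=dict(
    train=dict(_delete_=True, type='ClassBalancedDataset', oversample_thr=1e-3,
               dataset=dict(type='LVISV05Dataset', ann_file='lvis_train.json'))))

cfg = merge_config(child, base)
print(sorted(cfg['data']['train']))    # ['dataset', 'oversample_thr', 'type']
print(cfg['data']['samples_per_gpu'])  # 2
```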

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v1_instance.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v1_instance.py

new file mode 100644

index 0000000000000000000000000000000000000000..be791edd79495dce88d010eea63e33d398f242b0

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/lvis_v1_instance.py

@@ -0,0 +1,24 @@

+# dataset settings

+_base_ = 'coco_instance.py'

+dataset_type = 'LVISV1Dataset'

+data_root = 'data/lvis_v1/'

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ _delete_=True,

+ type='ClassBalancedDataset',

+ oversample_thr=1e-3,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v1_train.json',

+ img_prefix=data_root)),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v1_val.json',

+ img_prefix=data_root),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'annotations/lvis_v1_val.json',

+ img_prefix=data_root))

+evaluation = dict(metric=['bbox', 'segm'])
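`ClassBalancedDataset` with `oversample_thr=1e-3` oversamples images that contain rare categories, which matters for LVIS's long-tailed class distribution. The sketch below follows the repeat-factor sampling rule from the LVIS paper: with f(c) the fraction of images containing category c and t the threshold, r(c) = max(1, sqrt(t / f(c))), and each image repeats according to the largest r(c) among its categories. Only categories present in fewer than a fraction t of images get r(c) > 1, so with t = 1e-3 that means categories appearing in under 0.1% of images. Rounding up with `ceil` and keeping empty images once are assumptions of this sketch; `repeat_factors` and `repeat_indices` are hypothetical helpers. A toy threshold of 1.0 is used so the effect shows up on four images.

```python
import math
from collections import Counter


def repeat_factors(image_cats, oversample_thr):
    """Per-image repeat factors for class-balanced oversampling.

    image_cats: list of sets, the category ids present in each image.
    f(c) = fraction of images containing category c
    r(c) = max(1, sqrt(oversample_thr / f(c)))
    r(I) = max of r(c) over the categories in image I
    """
    num_images = len(image_cats)
    freq = Counter(c for cats in image_cats for c in cats)
    cat_repeat = {
        c: max(1.0, math.sqrt(oversample_thr / (n / num_images)))
        for c, n in freq.items()
    }
    # Images without annotations are kept once (an assumption of this sketch).
    return [max((cat_repeat[c] for c in cats), default=1.0)
            for cats in image_cats]


def repeat_indices(factors):
    """Expand image indices, repeating each one ceil(r(I)) times."""
    return [i for i, r in enumerate(factors) for _ in range(math.ceil(r))]


images = [{'A'}, {'A'}, {'A'}, {'A', 'B'}]  # 'B' is rare: f(B) = 0.25
factors = repeat_factors(images, oversample_thr=1.0)
print(factors)                  # [1.0, 1.0, 1.0, 2.0]
print(repeat_indices(factors))  # [0, 1, 2, 3, 3]
```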

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/voc0712.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/voc0712.py

new file mode 100644

index 0000000000000000000000000000000000000000..ae09acdd5c9580217815300abbad9f08b71b37ed

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/voc0712.py

@@ -0,0 +1,55 @@

+# dataset settings

+dataset_type = 'VOCDataset'

+data_root = 'data/VOCdevkit/'

+img_norm_cfg = dict(

+ mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(type='Resize', img_scale=(1000, 600), keep_ratio=True),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(1000, 600),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=True),

+ dict(type='RandomFlip'),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='Pad', size_divisor=32),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=2,

+ workers_per_gpu=2,

+ train=dict(

+ type='RepeatDataset',

+ times=3,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=[

+ data_root + 'VOC2007/ImageSets/Main/trainval.txt',

+ data_root + 'VOC2012/ImageSets/Main/trainval.txt'

+ ],

+ img_prefix=[data_root + 'VOC2007/', data_root + 'VOC2012/'],

+ pipeline=train_pipeline)),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'VOC2007/ImageSets/Main/test.txt',

+ img_prefix=data_root + 'VOC2007/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'VOC2007/ImageSets/Main/test.txt',

+ img_prefix=data_root + 'VOC2007/',

+ pipeline=test_pipeline))

+evaluation = dict(interval=1, metric='mAP')
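With `keep_ratio=True`, `Resize` treats `img_scale=(1000, 600)` as a bound on the long and short edges rather than as an exact output size. `Pad(size_divisor=32)` then rounds each side up to a multiple of 32. The plain-Python sketch below reproduces that arithmetic. It assumes the rescale rule matches `mmcv.imrescale`, where scale factor = min(long_bound / long_edge, short_bound / short_edge). The helper name is hypothetical.

```python
import math
from typing import Tuple


def resize_and_pad(w: int, h: int, img_scale: Tuple[int, int] = (1000, 600),
                   size_divisor: int = 32) -> Tuple[Tuple[int, int], Tuple[int, int]]:
    """Hypothetical helper returning ((resized_w, resized_h), (padded_w, padded_h)).

    Assumes mmcv-style keep-ratio rescaling followed by size_divisor padding.
    """
    long_bound, short_bound = max(img_scale), min(img_scale)
    # Largest factor that keeps both edges within their bounds.
    factor = min(long_bound / max(w, h), short_bound / min(w, h))
    new_w, new_h = int(w * factor + 0.5), int(h * factor + 0.5)
    # Pad each side up to the next multiple of size_divisor.
    pad_w = math.ceil(new_w / size_divisor) * size_divisor
    pad_h = math.ceil(new_h / size_divisor) * size_divisor
    return (new_w, new_h), (pad_w, pad_h)


# A typical 500x375 VOC image is limited by its short edge (600 / 375 = 1.6).
print(resize_and_pad(500, 375))  # ((800, 600), (800, 608))
```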

diff --git a/src/ndl_layout/mmdetection/configs/_base_/datasets/wider_face.py b/src/ndl_layout/mmdetection/configs/_base_/datasets/wider_face.py

new file mode 100644

index 0000000000000000000000000000000000000000..d1d649be42bca2955fb56a784fe80bcc2fdce4e1

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/datasets/wider_face.py

@@ -0,0 +1,63 @@

+# dataset settings

+dataset_type = 'WIDERFaceDataset'

+data_root = 'data/WIDERFace/'

+img_norm_cfg = dict(mean=[123.675, 116.28, 103.53], std=[1, 1, 1], to_rgb=True)

+train_pipeline = [

+ dict(type='LoadImageFromFile', to_float32=True),

+ dict(type='LoadAnnotations', with_bbox=True),

+ dict(

+ type='PhotoMetricDistortion',

+ brightness_delta=32,

+ contrast_range=(0.5, 1.5),

+ saturation_range=(0.5, 1.5),

+ hue_delta=18),

+ dict(

+ type='Expand',

+ mean=img_norm_cfg['mean'],

+ to_rgb=img_norm_cfg['to_rgb'],

+ ratio_range=(1, 4)),

+ dict(

+ type='MinIoURandomCrop',

+ min_ious=(0.1, 0.3, 0.5, 0.7, 0.9),

+ min_crop_size=0.3),

+ dict(type='Resize', img_scale=(300, 300), keep_ratio=False),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='RandomFlip', flip_ratio=0.5),

+ dict(type='DefaultFormatBundle'),

+ dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels']),

+]

+test_pipeline = [

+ dict(type='LoadImageFromFile'),

+ dict(

+ type='MultiScaleFlipAug',

+ img_scale=(300, 300),

+ flip=False,

+ transforms=[

+ dict(type='Resize', keep_ratio=False),

+ dict(type='Normalize', **img_norm_cfg),

+ dict(type='ImageToTensor', keys=['img']),

+ dict(type='Collect', keys=['img']),

+ ])

+]

+data = dict(

+ samples_per_gpu=60,

+ workers_per_gpu=2,

+ train=dict(

+ type='RepeatDataset',

+ times=2,

+ dataset=dict(

+ type=dataset_type,

+ ann_file=data_root + 'train.txt',

+ img_prefix=data_root + 'WIDER_train/',

+ min_size=17,

+ pipeline=train_pipeline)),

+ val=dict(

+ type=dataset_type,

+ ann_file=data_root + 'val.txt',

+ img_prefix=data_root + 'WIDER_val/',

+ pipeline=test_pipeline),

+ test=dict(

+ type=dataset_type,

+ ann_file=data_root + 'val.txt',

+ img_prefix=data_root + 'WIDER_val/',

+ pipeline=test_pipeline))
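The `Expand` step in the WIDER Face training pipeline zooms out. It places the image on a larger canvas filled with the normalization mean and shifts every ground-truth box by the paste offset, so faces become smaller relative to the final 300x300 input. The sketch below isolates the geometry and takes the expansion ratio and random generator as explicit arguments so the result is reproducible. It assumes boxes are `(x1, y1, x2, y2)` in pixels. It leaves out the pixel copy and the probability of skipping the step. The function name is hypothetical.

```python
import random
from typing import List, Optional, Tuple

Box = Tuple[float, float, float, float]


def expand_geometry(w: int, h: int, boxes: List[Box], ratio: float,
                    rng: Optional[random.Random] = None):
    """Hypothetical helper: canvas size, paste offset and shifted boxes.

    Assumes boxes are (x1, y1, x2, y2) pixel coordinates of the original image.
    """
    rng = rng or random.Random()
    canvas_w, canvas_h = int(w * ratio), int(h * ratio)
    # Random top-left corner such that the original image fits on the canvas.
    left = int(rng.uniform(0, w * ratio - w))
    top = int(rng.uniform(0, h * ratio - h))
    shifted = [(x1 + left, y1 + top, x2 + left, y2 + top)
               for x1, y1, x2, y2 in boxes]
    return (canvas_w, canvas_h), (left, top), shifted


# ratio would be drawn from ratio_range=(1, 4); fixed here for illustration.
size, offset, boxes = expand_geometry(100, 50, [(10, 10, 30, 40)], ratio=2.0,
                                      rng=random.Random(0))
print(size)  # (200, 100)
```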

diff --git a/src/ndl_layout/mmdetection/configs/_base_/default_runtime.py b/src/ndl_layout/mmdetection/configs/_base_/default_runtime.py

new file mode 100644

index 0000000000000000000000000000000000000000..55097c5b242da66c9735c0b45cd84beefab487b1

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/default_runtime.py

@@ -0,0 +1,16 @@

+checkpoint_config = dict(interval=1)

+# yapf:disable

+log_config = dict(

+ interval=50,

+ hooks=[

+ dict(type='TextLoggerHook'),

+ # dict(type='TensorboardLoggerHook')

+ ])

+# yapf:enable

+custom_hooks = [dict(type='NumClassCheckHook')]

+

+dist_params = dict(backend='nccl')

+log_level = 'INFO'

+load_from = None

+resume_from = None

+workflow = [('train', 1)]
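Files under `_base_/`, such as this runtime config, are meant to be inherited. A child config lists them in `_base_` and overrides individual keys. `_delete_=True`, as used in the LVIS v1 `train` entry, replaces a base dict instead of merging into it. The sketch below is a simplified stand-in for the merge rule that mmcv's `Config` applies. It ignores list-index keys and type checks. `merge_cfg` is a hypothetical name.

```python
import copy
from typing import Any, Dict


def merge_cfg(child: Dict[str, Any], base: Dict[str, Any]) -> Dict[str, Any]:
    """Recursively merge `child` into a copy of `base` (simplified sketch).

    Assumption: mirrors mmcv Config inheritance only for plain dict keys.
    """
    merged = copy.deepcopy(base)
    for key, value in child.items():
        if isinstance(value, dict):
            value = dict(value)  # do not mutate the caller's dict
            delete = value.pop('_delete_', False)
            target = merged.get(key)
            # `_delete_=True` (or no dict to merge into) starts from scratch.
            if delete or not isinstance(target, dict):
                target = {}
            merged[key] = merge_cfg(value, target)
        else:
            merged[key] = copy.deepcopy(value)
    return merged


base = dict(log_config=dict(interval=50, hooks=[dict(type='TextLoggerHook')]),
            checkpoint_config=dict(interval=1))
child = dict(log_config=dict(interval=10),
             checkpoint_config=dict(_delete_=True, by_epoch=False))
cfg = merge_cfg(child, base)
print(cfg['log_config']['interval'], len(cfg['log_config']['hooks']))  # 10 1
print(cfg['checkpoint_config'])  # {'by_epoch': False}
```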

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/cascade_mask_rcnn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/cascade_mask_rcnn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..9ef6673c2d08f3c43a96cf08ce1710b19865acd4

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/cascade_mask_rcnn_r50_fpn.py

@@ -0,0 +1,196 @@

+# model settings

+model = dict(

+ type='CascadeRCNN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=256,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[8],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[4, 8, 16, 32, 64]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0 / 9.0, loss_weight=1.0)),

+ roi_head=dict(

+ type='CascadeRoIHead',

+ num_stages=3,

+ stage_loss_weights=[1, 0.5, 0.25],

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ bbox_head=[

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

+ loss_weight=1.0)),

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.05, 0.05, 0.1, 0.1]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

+ loss_weight=1.0)),

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.033, 0.033, 0.067, 0.067]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))

+ ],

+ mask_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ mask_head=dict(

+ type='FCNMaskHead',

+ num_convs=4,

+ in_channels=256,

+ conv_out_channels=256,

+ num_classes=80,

+ loss_mask=dict(

+ type='CrossEntropyLoss', use_mask=True, loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=2000,

+ max_per_img=2000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=[

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ mask_size=28,

+ pos_weight=-1,

+ debug=False),

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.6,

+ neg_iou_thr=0.6,

+ min_pos_iou=0.6,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ mask_size=28,

+ pos_weight=-1,

+ debug=False),

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.7,

+ min_pos_iou=0.7,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ mask_size=28,

+ pos_weight=-1,

+ debug=False)

+ ]),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=1000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100,

+ mask_thr_binary=0.5)))
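Each of the three cascade stages re-labels proposals with a stricter `MaxIoUAssigner` threshold (0.5, 0.6, 0.7). The stage losses are combined with `stage_loss_weights=[1, 0.5, 0.25]`. The sketch below computes the IoU between a proposal and a ground-truth box, shows which stages would treat the proposal as positive, and forms the weighted loss sum. It simplifies in several ways: it ignores `min_pos_iou`, multiple ground truths, and the fact that later stages see refined boxes. Boxes are assumed to be `(x1, y1, x2, y2)` without the legacy +1 pixel convention. All helper names are hypothetical.

```python
from typing import List, Sequence


def iou(a: Sequence[float], b: Sequence[float]) -> float:
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def positive_stages(proposal, gt, thresholds=(0.5, 0.6, 0.7)) -> List[bool]:
    """Hypothetical helper: is the proposal foreground at each cascade stage?"""
    overlap = iou(proposal, gt)
    return [overlap >= thr for thr in thresholds]


def cascade_loss(stage_losses, weights=(1.0, 0.5, 0.25)) -> float:
    """Weighted sum of per-stage losses, as set by stage_loss_weights."""
    return sum(w * loss for w, loss in zip(weights, stage_losses))


gt = (0, 0, 100, 100)
proposal = (0, 0, 100, 65)  # IoU = 6500 / 10000 = 0.65
print(positive_stages(proposal, gt))  # [True, True, False]
```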

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/cascade_rcnn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/cascade_rcnn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..c3bef1947eaefe73c41936075ad8a942b6b813d8

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/cascade_rcnn_r50_fpn.py

@@ -0,0 +1,179 @@

+# model settings

+model = dict(

+ type='CascadeRCNN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=256,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[8],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[4, 8, 16, 32, 64]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0 / 9.0, loss_weight=1.0)),

+ roi_head=dict(

+ type='CascadeRoIHead',

+ num_stages=3,

+ stage_loss_weights=[1, 0.5, 0.25],

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ bbox_head=[

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

+ loss_weight=1.0)),

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.05, 0.05, 0.1, 0.1]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0,

+ loss_weight=1.0)),

+ dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.033, 0.033, 0.067, 0.067]),

+ reg_class_agnostic=True,

+ loss_cls=dict(

+ type='CrossEntropyLoss',

+ use_sigmoid=False,

+ loss_weight=1.0),

+ loss_bbox=dict(type='SmoothL1Loss', beta=1.0, loss_weight=1.0))

+ ]),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=5000,

+ max_per_img=5000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=[

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False),

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.6,

+ neg_iou_thr=0.6,

+ min_pos_iou=0.6,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False),

+ dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.7,

+ min_pos_iou=0.7,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False)

+ ]),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=5000,

+ max_per_img=5000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=5000)))
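All three cascade heads use `DeltaXYWHBBoxCoder`, but with shrinking `target_stds`: [0.1, 0.1, 0.2, 0.2], then [0.05, 0.05, 0.1, 0.1], then [0.033, 0.033, 0.067, 0.067]. Later stages receive better-aligned boxes, so their raw offsets are smaller. Dividing by a smaller std keeps their regression targets at a comparable magnitude. The plain-Python sketch below follows the standard (dx, dy, dw, dh) parameterization with mean/std normalization. The function name is hypothetical.

```python
import math
from typing import Sequence, Tuple


def encode(proposal: Sequence[float], gt: Sequence[float],
           means=(0.0, 0.0, 0.0, 0.0),
           stds=(0.1, 0.1, 0.2, 0.2)) -> Tuple[float, ...]:
    """Hypothetical helper: encode gt relative to proposal as normalized deltas.

    Boxes are assumed to be (x1, y1, x2, y2) with width = x2 - x1.
    """
    px, py = (proposal[0] + proposal[2]) / 2, (proposal[1] + proposal[3]) / 2
    pw, ph = proposal[2] - proposal[0], proposal[3] - proposal[1]
    gx, gy = (gt[0] + gt[2]) / 2, (gt[1] + gt[3]) / 2
    gw, gh = gt[2] - gt[0], gt[3] - gt[1]
    raw = ((gx - px) / pw, (gy - py) / ph, math.log(gw / pw), math.log(gh / ph))
    # Normalize each component by the per-stage mean and std.
    return tuple((d - m) / s for d, m, s in zip(raw, means, stds))


proposal, gt = (0, 0, 100, 100), (5, 0, 105, 100)  # gt shifted 5 px right
for stds in [(0.1, 0.1, 0.2, 0.2), (0.05, 0.05, 0.1, 0.1)]:
    print(encode(proposal, gt, stds=stds))
```

The same 5-pixel shift becomes a dx target of 0.5 with the first-stage stds and 1.0 with the second-stage stds.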

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/fast_rcnn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/fast_rcnn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..1099165b2a7a7af5cee60cf757ef674e768c6a8a

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/fast_rcnn_r50_fpn.py

@@ -0,0 +1,62 @@

+# model settings

+model = dict(

+ type='FastRCNN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ roi_head=dict(

+ type='StandardRoIHead',

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ bbox_head=dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100)))
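For the R-CNN stage, `RandomSampler(num=512, pos_fraction=0.25, neg_pos_ub=-1)` draws at most 512 × 0.25 = 128 positive RoIs per image and fills the rest of the batch with negatives. `add_gt_as_proposals=True` additionally puts the ground-truth boxes into the proposal pool so that some positives always exist; the sketch below omits that step. It assumes the assigner's index convention: > 0 means assigned to a ground truth, 0 means negative, -1 means ignored. `sample_rois` is a hypothetical name.

```python
import random
from typing import List, Tuple


def sample_rois(gt_inds: List[int], num: int = 512, pos_fraction: float = 0.25,
                neg_pos_ub: int = -1, seed: int = 0) -> Tuple[List[int], List[int]]:
    """Hypothetical helper: pick positive and negative RoI indices.

    gt_inds follows an assumed assigner convention: >0 positive, 0 negative,
    -1 ignored.
    """
    rng = random.Random(seed)
    pos = [i for i, g in enumerate(gt_inds) if g > 0]
    neg = [i for i, g in enumerate(gt_inds) if g == 0]
    num_pos = min(len(pos), int(num * pos_fraction))
    pos_inds = rng.sample(pos, num_pos)
    # Negatives fill the rest of the batch; neg_pos_ub < 0 means no ratio cap.
    num_neg = num - num_pos
    if neg_pos_ub >= 0:
        num_neg = min(num_neg, int(neg_pos_ub * max(1, num_pos)))
    neg_inds = rng.sample(neg, min(len(neg), num_neg))
    return pos_inds, neg_inds


pos_inds, neg_inds = sample_rois([1] * 40 + [0] * 2000)
print(len(pos_inds), len(neg_inds))  # 40 472
```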

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_c4.py b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_c4.py

new file mode 100644

index 0000000000000000000000000000000000000000..6e18f71b31b9fb85a6ca7a6b05ff4d2313951750

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_c4.py

@@ -0,0 +1,112 @@

+# model settings

+norm_cfg = dict(type='BN', requires_grad=False)

+model = dict(

+ type='FasterRCNN',

+ pretrained='open-mmlab://detectron2/resnet50_caffe',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=3,

+ strides=(1, 2, 2),

+ dilations=(1, 1, 1),

+ out_indices=(2, ),

+ frozen_stages=1,

+ norm_cfg=norm_cfg,

+ norm_eval=True,

+ style='caffe'),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=1024,

+ feat_channels=1024,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[2, 4, 8, 16, 32],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[16]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ roi_head=dict(

+ type='StandardRoIHead',

+ shared_head=dict(

+ type='ResLayer',

+ depth=50,

+ stage=3,

+ stride=2,

+ dilation=1,

+ style='caffe',

+ norm_cfg=norm_cfg,

+ norm_eval=True),

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

+ out_channels=1024,

+ featmap_strides=[16]),

+ bbox_head=dict(

+ type='BBoxHead',

+ with_avg_pool=True,

+ roi_feat_size=7,

+ in_channels=2048,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=12000,

+ max_per_img=2000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=6000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100)))
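The C4 model runs its RPN on a single stride-16 feature map. All anchor sizes therefore come from `scales` rather than from multiple FPN levels. A base size of 16 multiplied by scales [2, 4, 8, 16, 32] gives square anchors from 32 to 512 pixels, and each is reshaped to the three aspect ratios while keeping its area. The sketch assumes that `ratios` means height / width and that the base size equals the stride, which is the default when no explicit base sizes are configured. The helper name is hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def base_anchor_sizes(stride: int, scales: Sequence[float],
                      ratios: Sequence[float]) -> List[Tuple[float, float]]:
    """Hypothetical helper: (width, height) of each base anchor at one level.

    Assumes base size == stride and ratio == height / width.
    """
    sizes = []
    for ratio in ratios:
        h_ratio = math.sqrt(ratio)
        w_ratio = 1 / h_ratio
        for scale in scales:
            # Area stays (stride * scale) ** 2 for every aspect ratio.
            sizes.append((stride * w_ratio * scale, stride * h_ratio * scale))
    return sizes


anchors = base_anchor_sizes(16, [2, 4, 8, 16, 32], [0.5, 1.0, 2.0])
print(len(anchors))  # 15 anchors per feature-map location
print([w for w, h in anchors if w == h])  # [32.0, 64.0, 128.0, 256.0, 512.0]
```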

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_dc5.py b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_dc5.py

new file mode 100644

index 0000000000000000000000000000000000000000..5089f0e33a5736a34435c6a3f37b996c32542c8c

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_caffe_dc5.py

@@ -0,0 +1,103 @@

+# model settings

+norm_cfg = dict(type='BN', requires_grad=False)

+model = dict(

+ type='FasterRCNN',

+ pretrained='open-mmlab://detectron2/resnet50_caffe',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ strides=(1, 2, 2, 1),

+ dilations=(1, 1, 1, 2),

+ out_indices=(3, ),

+ frozen_stages=1,

+ norm_cfg=norm_cfg,

+ norm_eval=True,

+ style='caffe'),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=2048,

+ feat_channels=2048,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[2, 4, 8, 16, 32],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[16]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ roi_head=dict(

+ type='StandardRoIHead',

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=2048,

+ featmap_strides=[16]),

+ bbox_head=dict(

+ type='Shared2FCBBoxHead',

+ in_channels=2048,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=12000,

+ max_per_img=2000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms=dict(type='nms', iou_threshold=0.7),

+ nms_pre=6000,

+ max_per_img=1000,

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100)))
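DC5 keeps the fourth ResNet stage but replaces its stride of 2 with a dilation of 2 (`strides=(1, 2, 2, 1)`, `dilations=(1, 1, 1, 2)`). Its output therefore stays at stride 16 instead of 32, while the receptive field still grows. This is why both the C4 and DC5 configs use `featmap_strides=[16]`. The sketch computes the cumulative stride. It assumes the standard ResNet stem: a stride-2 convolution plus stride-2 max pooling, for a factor of 4 in total. The function name is hypothetical.

```python
from functools import reduce
from operator import mul
from typing import Sequence

# Assumed standard ResNet stem: 7x7 conv (stride 2) then 3x3 max-pool (stride 2).
STEM_STRIDE = 4


def output_stride(stage_strides: Sequence[int], out_index: int) -> int:
    """Hypothetical helper: total downsampling at the output of a stage."""
    return STEM_STRIDE * reduce(mul, stage_strides[:out_index + 1], 1)


print(output_stride((1, 2, 2), 2))     # C4 backbone: 16
print(output_stride((1, 2, 2, 1), 3))  # DC5 backbone: 16
print(output_stride((1, 2, 2, 2), 3))  # plain ResNet C5: 32
```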

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..c67137e93d943fee65314682620667a5eb971c23

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/faster_rcnn_r50_fpn.py

@@ -0,0 +1,108 @@

+# model settings

+model = dict(

+ type='FasterRCNN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=256,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[8],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[4, 8, 16, 32, 64]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ roi_head=dict(

+ type='StandardRoIHead',

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ bbox_head=dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=-1,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=2000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=1000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100)

+ # soft-nms is also supported for rcnn testing

+ # e.g., nms=dict(type='soft_nms', iou_threshold=0.5, min_score=0.05)

+ ))
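The RPN (`iou_threshold=0.7`) and the R-CNN head (`iou_threshold=0.5`) both rely on non-maximum suppression. The sketch below is a plain-Python greedy NMS that shows what `nms=dict(type='nms', iou_threshold=...)` and `max_per_img` control. The real op is a compiled mmcv kernel. This O(n^2) version is for illustration only and has no batching or per-class handling. Soft-NMS, mentioned in the config comment, instead decays the scores of overlapping boxes and is not shown. The helper names are hypothetical.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]


def box_iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def greedy_nms(boxes: Sequence[Box], scores: Sequence[float],
               iou_threshold: float, max_per_img: int = 100) -> List[int]:
    """Hypothetical helper: indices of kept boxes, highest score first."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # Suppress a box if it overlaps an already-kept box above the threshold.
        if all(box_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
            if len(keep) == max_per_img:
                break
    return keep


boxes = [(0, 0, 10, 10), (1, 0, 11, 10), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(greedy_nms(boxes, scores, iou_threshold=0.7))  # [0, 2]
```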

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_caffe_c4.py b/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_caffe_c4.py

new file mode 100644

index 0000000000000000000000000000000000000000..eaae1342f4aaa7015510d51bb4f12500a8a6b81d

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_caffe_c4.py

@@ -0,0 +1,123 @@

+# model settings

+norm_cfg = dict(type='BN', requires_grad=False)

+model = dict(

+ type='MaskRCNN',

+ pretrained='open-mmlab://detectron2/resnet50_caffe',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=3,

+ strides=(1, 2, 2),

+ dilations=(1, 1, 1),

+ out_indices=(2, ),

+ frozen_stages=1,

+ norm_cfg=norm_cfg,

+ norm_eval=True,

+ style='caffe'),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=1024,

+ feat_channels=1024,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[2, 4, 8, 16, 32],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[16]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ roi_head=dict(

+ type='StandardRoIHead',

+ shared_head=dict(

+ type='ResLayer',

+ depth=50,

+ stage=3,

+ stride=2,

+ dilation=1,

+ style='caffe',

+ norm_cfg=norm_cfg,

+ norm_eval=True),

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

+ out_channels=1024,

+ featmap_strides=[16]),

+ bbox_head=dict(

+ type='BBoxHead',

+ with_avg_pool=True,

+ roi_feat_size=7,

+ in_channels=2048,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ mask_roi_extractor=None,

+ mask_head=dict(

+ type='FCNMaskHead',

+ num_convs=0,

+ in_channels=2048,

+ conv_out_channels=256,

+ num_classes=80,

+ loss_mask=dict(

+ type='CrossEntropyLoss', use_mask=True, loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=12000,

+ max_per_img=2000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=False,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ mask_size=14,

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=6000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ max_per_img=1000,

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100,

+ mask_thr_binary=0.5)))
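In this C4 variant, `mask_roi_extractor=None` means the mask head reuses the RoI features that have already passed through the shared `ResLayer` (hence `in_channels=2048`). The arithmetic below shows why that leads to `mask_size=14` here, compared with 28 in the FPN model. RoIAlign gives 14x14, the stride-2 shared head halves it to 7x7, and the mask head's 2x upsampling restores 14x14. The sketch also shows the final `mask_thr_binary=0.5` step. It assumes a 2x deconvolution as the only upsampling and a `>=` comparison for binarization. Both helper names are hypothetical.

```python
from typing import List


def mask_logit_size(roi_align_size: int, shared_head_stride: int = 1,
                    upsample_factor: int = 2) -> int:
    """Hypothetical helper: spatial size of the mask head output per RoI.

    Assumes padded 3x3 convs keep the size, so only the shared head's stride
    and the final upsampling change it.
    """
    return roi_align_size // shared_head_stride * upsample_factor


def binarize(probs: List[List[float]], thr: float = 0.5) -> List[List[bool]]:
    """Turn soft mask probabilities into a binary mask (assumed >= rule)."""
    return [[p >= thr for p in row] for row in probs]


print(mask_logit_size(14, shared_head_stride=2))  # C4: 14 -> 7 -> 14
print(mask_logit_size(14))                        # FPN: 14 -> 28
```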

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..6fc7908249e013376b343c5fc136cbbe5ff29390

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/mask_rcnn_r50_fpn.py

@@ -0,0 +1,120 @@

+# model settings

+model = dict(

+ type='MaskRCNN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=256,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[8],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[4, 8, 16, 32, 64]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ roi_head=dict(

+ type='StandardRoIHead',

+ bbox_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ bbox_head=dict(

+ type='Shared2FCBBoxHead',

+ in_channels=256,

+ fc_out_channels=1024,

+ roi_feat_size=7,

+ num_classes=80,

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[0., 0., 0., 0.],

+ target_stds=[0.1, 0.1, 0.2, 0.2]),

+ reg_class_agnostic=False,

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ mask_roi_extractor=dict(

+ type='SingleRoIExtractor',

+ roi_layer=dict(type='RoIAlign', output_size=14, sampling_ratio=0),

+ out_channels=256,

+ featmap_strides=[4, 8, 16, 32]),

+ mask_head=dict(

+ type='FCNMaskHead',

+ num_convs=4,

+ in_channels=256,

+ conv_out_channels=256,

+ num_classes=80,

+ loss_mask=dict(

+ type='CrossEntropyLoss', use_mask=True, loss_weight=1.0))),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=-1,

+ pos_weight=-1,

+ debug=False),

+ rpn_proposal=dict(

+ nms_pre=2000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.5,

+ min_pos_iou=0.5,

+ match_low_quality=True,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=512,

+ pos_fraction=0.25,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=True),

+ mask_size=28,

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=1000,

+ max_per_img=1000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0),

+ rcnn=dict(

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100,

+ mask_thr_binary=0.5)))
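With four FPN levels in `featmap_strides=[4, 8, 16, 32]`, `SingleRoIExtractor` has to choose the level each RoI is pooled from. The sketch mirrors the usual FPN heuristic: level = floor(log2(sqrt(w * h) / 56)), clamped to the available levels. The `finest_scale=56` value is assumed to be the extractor's default and does not appear in this config. `roi_level` is a hypothetical name.

```python
import math


def roi_level(w: float, h: float, num_levels: int = 4,
              finest_scale: float = 56.0) -> int:
    """Hypothetical helper: FPN level index for an RoI of size w x h.

    finest_scale=56 is an assumed default, not read from the config.
    """
    scale = math.sqrt(w * h)
    level = math.floor(math.log2(scale / finest_scale + 1e-6))
    return min(max(level, 0), num_levels - 1)


strides = [4, 8, 16, 32]
for side in (32, 112, 224, 600):
    lvl = roi_level(side, side)
    print(f"{side}x{side} RoI -> level {lvl} (stride {strides[lvl]})")
```

Small RoIs are pooled from the high-resolution stride-4 map, and large ones from the coarse stride-32 map.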

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/retinanet_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/retinanet_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..f3b97b30bc6e343453df22ab1fc444fcf8d4168f

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/retinanet_r50_fpn.py

@@ -0,0 +1,60 @@

+# model settings

+model = dict(

+ type='RetinaNet',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ start_level=1,

+ add_extra_convs='on_input',

+ num_outs=5),

+ bbox_head=dict(

+ type='RetinaHead',

+ num_classes=80,

+ in_channels=256,

+ stacked_convs=4,

+ feat_channels=256,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ octave_base_scale=4,

+ scales_per_octave=3,

+ ratios=[0.5, 1.0, 2.0],

+ strides=[8, 16, 32, 64, 128]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='FocalLoss',

+ use_sigmoid=True,

+ gamma=2.0,

+ alpha=0.25,

+ loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ # model training and testing settings

+ train_cfg=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.5,

+ neg_iou_thr=0.4,

+ min_pos_iou=0,

+ ignore_iof_thr=-1),

+ allowed_border=-1,

+ pos_weight=-1,

+ debug=False),

+ test_cfg=dict(

+ nms_pre=1000,

+ min_bbox_size=0,

+ score_thr=0.05,

+ nms=dict(type='nms', iou_threshold=0.5),

+ max_per_img=100))
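The RetinaNet `anchor_generator` above does not list explicit `scales`. Instead it sets `octave_base_scale=4` and `scales_per_octave=3`, which yields three scales per level: 4, 4·2^(1/3), and 4·2^(2/3). Combined with three `ratios`, this gives nine anchors per location. The sketch below shows that arithmetic under two assumptions: the base anchor size equals the level stride, and `ratio` means height / width. The function `retina_anchor_shapes` is hypothetical and is not MMDetection's `AnchorGenerator`.

```python
import math

# Hypothetical helper reproducing the anchor-shape arithmetic implied by the
# RetinaNet anchor_generator settings (octave_base_scale=4, scales_per_octave=3,
# ratios=[0.5, 1.0, 2.0]); NOT MMDetection's own AnchorGenerator.
# Assumes base anchor size == level stride and ratio == height / width.


def retina_anchor_shapes(stride, octave_base_scale=4, scales_per_octave=3,
                         ratios=(0.5, 1.0, 2.0)):
    """Return the (width, height) of every anchor centered at one location."""
    scales = [octave_base_scale * 2 ** (i / scales_per_octave)
              for i in range(scales_per_octave)]
    shapes = []
    for ratio in ratios:
        h_ratio = math.sqrt(ratio)
        w_ratio = 1.0 / h_ratio
        for scale in scales:
            # Area stays (stride * scale) ** 2; only the aspect ratio changes.
            shapes.append((stride * scale * w_ratio, stride * scale * h_ratio))
    return shapes


if __name__ == "__main__":
    # Strides match the config's strides=[8, 16, 32, 64, 128].
    for stride in (8, 16, 32, 64, 128):
        shapes = retina_anchor_shapes(stride)
        sides = sorted({round(math.sqrt(w * h), 1) for w, h in shapes})
        print(stride, len(shapes), sides)
```

Spreading three scales within each octave lets neighbouring FPN levels (strides differing by 2x) cover object sizes without large gaps. The same arithmetic explains why the smallest square anchor is 32 pixels at stride 8.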

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_caffe_c4.py b/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_caffe_c4.py

new file mode 100644

index 0000000000000000000000000000000000000000..9c32a55ddaa88812c8020872c33502122c409041

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_caffe_c4.py

@@ -0,0 +1,56 @@

+# model settings

+model = dict(

+ type='RPN',

+ pretrained='open-mmlab://detectron2/resnet50_caffe',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=3,

+ strides=(1, 2, 2),

+ dilations=(1, 1, 1),

+ out_indices=(2, ),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=False),

+ norm_eval=True,

+ style='caffe'),

+ neck=None,

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=1024,

+ feat_channels=1024,

+ anchor_generator=dict(

+ type='AnchorGenerator',

+ scales=[2, 4, 8, 16, 32],

+ ratios=[0.5, 1.0, 2.0],

+ strides=[16]),

+ bbox_coder=dict(

+ type='DeltaXYWHBBoxCoder',

+ target_means=[.0, .0, .0, .0],

+ target_stds=[1.0, 1.0, 1.0, 1.0]),

+ loss_cls=dict(

+ type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

+ loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

+ # model training and testing settings

+ train_cfg=dict(

+ rpn=dict(

+ assigner=dict(

+ type='MaxIoUAssigner',

+ pos_iou_thr=0.7,

+ neg_iou_thr=0.3,

+ min_pos_iou=0.3,

+ ignore_iof_thr=-1),

+ sampler=dict(

+ type='RandomSampler',

+ num=256,

+ pos_fraction=0.5,

+ neg_pos_ub=-1,

+ add_gt_as_proposals=False),

+ allowed_border=0,

+ pos_weight=-1,

+ debug=False)),

+ test_cfg=dict(

+ rpn=dict(

+ nms_pre=12000,

+ max_per_img=2000,

+ nms=dict(type='nms', iou_threshold=0.7),

+ min_bbox_size=0)))
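The RPN `train_cfg` above labels anchors with `MaxIoUAssigner` using `pos_iou_thr=0.7`, `neg_iou_thr=0.3`, and `min_pos_iou=0.3`. The sketch below is a simplified reading of those thresholds, not MMDetection's implementation. An anchor whose best IoU with any ground-truth box is below 0.3 becomes background. An anchor at or above 0.7 becomes positive for that box. Anchors in between are ignored. An optional low-quality pass then gives each ground-truth box its best-overlapping anchor(s) when that IoU reaches `min_pos_iou`, mirroring the `match_low_quality=True` flag set in the Mask R-CNN RPN config earlier in this diff. The functions `iou` and `assign` are hypothetical names.

```python
# Hypothetical, simplified sketch of max-IoU label assignment driven by the
# RPN assigner thresholds (pos_iou_thr=0.7, neg_iou_thr=0.3, min_pos_iou=0.3);
# NOT MMDetection's own MaxIoUAssigner.
# Result per anchor: -1 = ignored, 0 = negative, k >= 1 = positive for gt k-1.


def iou(a, b):
    """IoU of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def assign(anchors, gts, pos_iou_thr=0.7, neg_iou_thr=0.3, min_pos_iou=0.3,
           match_low_quality=True):
    overlaps = [[iou(g, a) for a in anchors] for g in gts]  # gts x anchors
    labels = [-1] * len(anchors)
    for j in range(len(anchors)):
        column = [row[j] for row in overlaps]
        if not column:
            labels[j] = 0  # no ground truth: every anchor is background
            continue
        best = max(column)
        if best < neg_iou_thr:
            labels[j] = 0
        elif best >= pos_iou_thr:
            labels[j] = column.index(best) + 1
    if match_low_quality:
        # Give each gt the anchor(s) it overlaps most, even below pos_iou_thr.
        for i, row in enumerate(overlaps):
            best = max(row) if row else 0.0
            if best >= min_pos_iou:
                for j, value in enumerate(row):
                    if value == best:
                        labels[j] = i + 1
    return labels


if __name__ == "__main__":
    gt = [(0.0, 0.0, 10.0, 10.0)]
    anchors = [(0.0, 0.0, 10.0, 10.0), (0.0, 0.0, 10.0, 5.0),
               (20.0, 20.0, 30.0, 30.0), (5.0, 0.0, 15.0, 10.0)]
    print(assign(anchors, gt))  # [1, -1, 0, -1]
```

The ignored band between the two thresholds keeps ambiguous anchors out of the loss. The low-quality pass ensures that small or oddly shaped objects, which may never reach 0.7 IoU with any anchor, still receive at least one positive.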

diff --git a/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_fpn.py b/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_fpn.py

new file mode 100644

index 0000000000000000000000000000000000000000..b9b7618371d62bf13548cb71510c6d01148fbf2e

--- /dev/null

+++ b/src/ndl_layout/mmdetection/configs/_base_/models/rpn_r50_fpn.py

@@ -0,0 +1,58 @@

+# model settings

+model = dict(

+ type='RPN',

+ pretrained='torchvision://resnet50',

+ backbone=dict(

+ type='ResNet',

+ depth=50,

+ num_stages=4,

+ out_indices=(0, 1, 2, 3),

+ frozen_stages=1,

+ norm_cfg=dict(type='BN', requires_grad=True),

+ norm_eval=True,

+ style='pytorch'),

+ neck=dict(

+ type='FPN',

+ in_channels=[256, 512, 1024, 2048],

+ out_channels=256,

+ num_outs=5),

+ rpn_head=dict(

+ type='RPNHead',

+ in_channels=256,

+ feat_channels=256,