zy5830850

committed on

Commit

•

91ef820

1

Parent(s):

232404e

First model version

This view is limited to 50 files because it contains too many changes.

- app.py.py +150 -0

- images/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg +0 -0

- med_rpg/__init__.py +17 -0

- med_rpg/__pycache__/__init__.cpython-310.pyc +0 -0

- med_rpg/__pycache__/data_loader.cpython-310.pyc +0 -0

- med_rpg/__pycache__/data_loader.cpython-37.pyc +0 -0

- med_rpg/__pycache__/engine.cpython-37.pyc +0 -0

- med_rpg/__pycache__/med_rpg.cpython-310.pyc +0 -0

- med_rpg/__pycache__/transforms.cpython-310.pyc +0 -0

- med_rpg/__pycache__/transforms.cpython-37.pyc +0 -0

- med_rpg/data/00363400-cee06fa7-8c2ca1f7-2678a170-b3a62a6e.jpg +0 -0

- med_rpg/data/04e10148-c36f7afb-d0aaf964-152d8a5d-a02ab550.jpg +0 -0

- med_rpg/data/1176839d-cf4f677f-d597a1ef-548bc32a-c05429f3.jpg +0 -0

- med_rpg/data/13255e1f-91b7b172-02baaeee-340ec493-0e531681.jpg +0 -0

- med_rpg/data/4b7f7a4c-18c39245-53724c25-06878595-7e41bb94.jpg +0 -0

- med_rpg/data/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg +0 -0

- med_rpg/data/95423e8e-45dff550-563d3eba-b8bc94be-a87f5a1d.jpg +0 -0

- med_rpg/data_loader.py +376 -0

- med_rpg/demo.py +222 -0

- med_rpg/med_rpg.py +268 -0

- med_rpg/models/MHA.py +467 -0

- med_rpg/models/__init__.py +6 -0

- med_rpg/models/__pycache__/MHA.cpython-310.pyc +0 -0

- med_rpg/models/__pycache__/MHA.cpython-37.pyc +0 -0

- med_rpg/models/__pycache__/__init__.cpython-310.pyc +0 -0

- med_rpg/models/__pycache__/__init__.cpython-37.pyc +0 -0

- med_rpg/models/__pycache__/trans_vg_ca.cpython-310.pyc +0 -0

- med_rpg/models/__pycache__/trans_vg_ca.cpython-37.pyc +0 -0

- med_rpg/models/__pycache__/vl_transformer.cpython-310.pyc +0 -0

- med_rpg/models/__pycache__/vl_transformer.cpython-37.pyc +0 -0

- med_rpg/models/language_model/__init__.py +0 -0

- med_rpg/models/language_model/__pycache__/__init__.cpython-310.pyc +0 -0

- med_rpg/models/language_model/__pycache__/__init__.cpython-37.pyc +0 -0

- med_rpg/models/language_model/__pycache__/bert.cpython-310.pyc +0 -0

- med_rpg/models/language_model/__pycache__/bert.cpython-37.pyc +0 -0

- med_rpg/models/language_model/bert.py +63 -0

- med_rpg/models/trans_vg_ca.py +88 -0

- med_rpg/models/transformer.py +314 -0

- med_rpg/models/visual_model/__init__.py +0 -0

- med_rpg/models/visual_model/__pycache__/__init__.cpython-310.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/__init__.cpython-37.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/backbone.cpython-310.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/backbone.cpython-37.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/detr.cpython-310.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/detr.cpython-37.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/position_encoding.cpython-310.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/position_encoding.cpython-37.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/transformer.cpython-310.pyc +0 -0

- med_rpg/models/visual_model/__pycache__/transformer.cpython-37.pyc +0 -0

- med_rpg/models/visual_model/backbone.py +121 -0

app.py.py

ADDED

|

@@ -0,0 +1,150 @@


| 1 |

+

import gradio as gr

|

| 2 |

+

import torch

|

| 3 |

+

|

| 4 |

+

import sys

|

| 5 |

+

# sys.path.insert(0, '/Users/daipl/Desktop/MedRPG_Demo/med_rpg')

|

| 6 |

+

sys.path.insert(0, 'med_rpg')

|

| 7 |

+

|

| 8 |

+

# import datasets

|

| 9 |

+

from models import build_model

|

| 10 |

+

from med_rpg import get_args_parser, medical_phrase_grounding

|

| 11 |

+

import PIL.Image as Image

|

| 12 |

+

from transformers import AutoTokenizer

|

| 13 |

+

|

| 14 |

+

'''

|

| 15 |

+

build args

|

| 16 |

+

'''

|

| 17 |

+

parser = get_args_parser()

|

| 18 |

+

args = parser.parse_args()

|

| 19 |

+

|

| 20 |

+

'''

|

| 21 |

+

build model

|

| 22 |

+

'''

|

| 23 |

+

|

| 24 |

+

# device = 'cpu'

|

| 25 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 26 |

+

# device = torch.device('mps')

|

| 27 |

+

|

| 28 |

+

# Check that MPS is available

|

| 29 |

+

# if not torch.backends.mps.is_available():

|

| 30 |

+

# if not torch.backends.mps.is_built():

|

| 31 |

+

# print("MPS not available because the current PyTorch install was not "

|

| 32 |

+

# "built with MPS enabled.")

|

| 33 |

+

# else:

|

| 34 |

+

# print("MPS not available because the current MacOS version is not 12.3+ "

|

| 35 |

+

# "and/or you do not have an MPS-enabled device on this machine.")

|

| 36 |

+

|

| 37 |

+

# else:

|

| 38 |

+

# device = torch.device("mps")

|

| 39 |

+

|

| 40 |

+

tokenizer = AutoTokenizer.from_pretrained(args.bert_model, do_lower_case=True)

|

| 41 |

+

## build model

|

| 42 |

+

model = build_model(args)

|

| 43 |

+

model.to(device)

|

| 44 |

+

checkpoint = torch.load(args.eval_model, map_location='cpu')

|

| 45 |

+

model.load_state_dict(checkpoint['model'], strict=False)

|

| 46 |

+

|

| 47 |

+

'''

|

| 48 |

+

inference model

|

| 49 |

+

'''

|

| 50 |

+

@torch.no_grad()

|

| 51 |

+

def inference(image, text, bbox = [0, 0, 0, 0]):

|

| 52 |

+

image = image.convert("RGB")

|

| 53 |

+

# if bbox is not None:

|

| 54 |

+

# bbox = bbox.to_numpy(dtype='int')[0].tolist()

|

| 55 |

+

with torch.autocast(device_type='cuda', dtype=torch.float16, enabled=torch.cuda.is_available()):

|

| 56 |

+

return medical_phrase_grounding(model, tokenizer, image, text, bbox)

|

| 57 |

+

|

| 58 |

+

# """

|

| 59 |

+

# Small left apical pneumothorax unchanged in size since ___:56 a.m.,

|

| 60 |

+

# and no appreciable left pleural effusion,

|

| 61 |

+

# basal pleural tubes still in place and reportedly on waterseal.

|

| 62 |

+

# Greater coalescence of consolidation in both the right mid and lower lung zones could be progressive atelectasis but is more concerning for pneumonia.

|

| 63 |

+

# Consolidation in the left lower lobe, however, has improved since ___ through ___.

|

| 64 |

+

# There is no right pleural effusion or definite right pneumothorax.

|

| 65 |

+

# Cardiomediastinal silhouette is normal.

|

| 66 |

+

# Distention of large and small bowel seen in the imaged portion of the upper abdomen is unchanged.

|

| 67 |

+

# """

|

| 68 |

+

|

| 69 |

+

def get_result(image, evt: gr.SelectData):

|

| 70 |

+

if evt.value:

|

| 71 |

+

bbox = evt.value[1][1:-1] # Remove "[" and "]"

|

| 72 |

+

bbox = [int(num) for num in bbox.split(",")]

|

| 73 |

+

output_img = inference(image, evt.value[0], bbox)

|

| 74 |

+

return evt.value[0], output_img

|

| 75 |

+

|

| 76 |

+

GT_text = {

|

| 77 |

+

"Finding 1": "Small left apical pneumothorax",

|

| 78 |

+

"Finding 2": "Greater coalescence of consolidation in both the right mid and lower lung zones",

|

| 79 |

+

"Finding 3": "Consolidation in the left lower lobe"

|

| 80 |

+

}

|

| 81 |

+

# GT_bboxes = {"Finding 1": [1, 332, 28, 141, 48], "Finding 2": [2, 57, 177, 163, 165], "Finding 3": [3, 325, 231, 183, 132]}

|

| 82 |

+

GT_bboxes = {"Finding 1": [1, 332, 28, 332+141, 28+48], "Finding 2": [2, 57, 177, 163+57, 165+177], "Finding 3": [3, 325, 231, 183+325, 132+231]}

|

| 83 |

+

def get_new_result(image, evt: gr.SelectData):

|

| 84 |

+

if evt.value[1]:

|

| 85 |

+

if evt.value[0] == "(Show GT)":

|

| 86 |

+

bbox = GT_bboxes[evt.value[1]]

|

| 87 |

+

text = GT_text[evt.value[1]]

|

| 88 |

+

else:

|

| 89 |

+

bbox = [GT_bboxes[evt.value[1]][0], 0, 0, 0, 0]

|

| 90 |

+

text = evt.value[0]

|

| 91 |

+

output_img = inference(image, text, bbox)

|

| 92 |

+

return text, output_img

|

| 93 |

+

|

| 94 |

+

def clear():

|

| 95 |

+

return ""

|

| 96 |

+

|

| 97 |

+

with gr.Blocks() as demo:

|

| 98 |

+

gr.Markdown(

|

| 99 |

+

"""

|

| 100 |

+

<center> <h1>Medical Phrase Grounding Demo</h1> </center>

|

| 101 |

+

<p style='text-align: center'> <a href='https://arxiv.org/abs/2303.07618' target='_blank'>Paper</a> </p>

|

| 102 |

+

""")

|

| 103 |

+

with gr.Row():

|

| 104 |

+

with gr.Column(scale=1, min_width=300):

|

| 105 |

+

input_image = gr.Image(type='pil', value="./images/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg")

|

| 106 |

+

hl_text = gr.HighlightedText(

|

| 107 |

+

label="Medical Report",

|

| 108 |

+

combine_adjacent=False,

|

| 109 |

+

# combine_adjacent=True,

|

| 110 |

+

show_legend=False,

|

| 111 |

+

# value = [("Small left apical pneumothorax","[332, 28, 141, 48]"),

|

| 112 |

+

# ("unchanged in size since ___:56 a.m., and no appreciable left pleural effusion, basal pleural tubes still in place and reportedly on waterseal.", None),

|

| 113 |

+

# ("Greater coalescence of consolidation in both the right mid and lower lung zones","[57, 177, 163, 165]"),

|

| 114 |

+

# ("could be progressive atelectasis but is more concerning for pneumonia.", None),

|

| 115 |

+

# ("Consolidation in the left lower lobe","[325, 231, 183, 132]"),

|

| 116 |

+

# (", however, has improved since ___ through ___.", None),

|

| 117 |

+

# # ("There is no right pleural effusion or definite right pneumothorax.", None),

|

| 118 |

+

# # ("Cardiomediastinal silhouette is normal.", None),

|

| 119 |

+

# # ("Distention of large and small bowel seen in the imaged portion of the upper abdomen is unchanged.", None),

|

| 120 |

+

# ]

|

| 121 |

+

value = [("Small left apical pneumothorax","Finding 1"),

|

| 122 |

+

("(Show GT)","Finding 1"),

|

| 123 |

+

("unchanged in size since ___:56 a.m., and no appreciable left pleural effusion, basal pleural tubes still in place and reportedly on waterseal.", None),

|

| 124 |

+

("Greater coalescence of consolidation in both the right mid and lower lung zones","Finding 2"),

|

| 125 |

+

("(Show GT)","Finding 2"),

|

| 126 |

+

("could be progressive atelectasis but is more concerning for pneumonia.", None),

|

| 127 |

+

("Consolidation in the left lower lobe","Finding 3"),

|

| 128 |

+

("(Show GT)","Finding 3"),

|

| 129 |

+

# ", however, has improved since ___ through ___.",

|

| 130 |

+

(", however, has improved since ___ through ___.", None),

|

| 131 |

+

]

|

| 132 |

+

)

|

| 133 |

+

input_text = gr.Textbox(label="Input Text", interactive=False)

|

| 134 |

+

# bbox = gr.Dataframe(

|

| 135 |

+

# headers=["x", "y", "w", "h"],

|

| 136 |

+

# datatype=["number", "number", "number", "number"],

|

| 137 |

+

# label="Ground-Truth Bounding Box",

|

| 138 |

+

# value=[[332, 28, 141, 48]]

|

| 139 |

+

# )

|

| 140 |

+

# with gr.Row():

|

| 141 |

+

# clear_btn = gr.Button("Clear")

|

| 142 |

+

# run_btn = gr.Button("Run")

|

| 143 |

+

# output = gr.Image(type="pil", label="Grounding Results", interactive=False).style(height=500)

|

| 144 |

+

output = gr.Image(type="pil", value="./images/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg", label="Grounding Results", interactive=False).style(height=500)

|

| 145 |

+

hl_text.select(get_new_result, inputs=[input_image], outputs=[input_text, output])

|

| 146 |

+

# run_btn.click(fn=inference, inputs=[input_image, input_text], outputs=output)

|

| 147 |

+

# clear_btn.click(fn=clear, outputs=input_text)

|

| 148 |

+

demo.launch(share=True)

|

| 149 |

+

|

| 150 |

+

|
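The demo above stores each ground-truth box as `[x, y, w, h]` in the commented-out table and as `[x1, y1, x2, y2]` in `GT_bboxes`, and the older `get_result` handler parses a label string such as `"[332, 28, 141, 48]"` by slicing off the brackets. A minimal sketch of both steps follows. The helper names `xywh_to_xyxy` and `parse_bbox_label` are hypothetical and do not appear in the repository, and parsing with `json.loads` is a swapped-in alternative to the string slicing used in `app.py.py`.

```python
import json
from typing import List, Sequence


def xywh_to_xyxy(box: Sequence[int]) -> List[int]:
    """Convert [x, y, w, h] into corner format [x1, y1, x2, y2]."""
    x, y, w, h = box
    return [x, y, x + w, y + h]


def parse_bbox_label(label: str) -> List[int]:
    """Parse a label such as "[332, 28, 141, 48]" into a list of four ints."""
    values = json.loads(label)  # the label is a valid JSON array
    if len(values) != 4:
        raise ValueError(f"expected 4 numbers, got {len(values)}")
    return [int(v) for v in values]


# "Finding 1" from the demo: x=332, y=28, w=141, h=48
finding_1 = xywh_to_xyxy(parse_bbox_label("[332, 28, 141, 48]"))
print(finding_1)  # [332, 28, 473, 76]
```

The result matches the coordinates that `GT_bboxes` writes out by hand as `332+141` and `28+48`; the leading finding ID in `GT_bboxes` is left out of this sketch.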

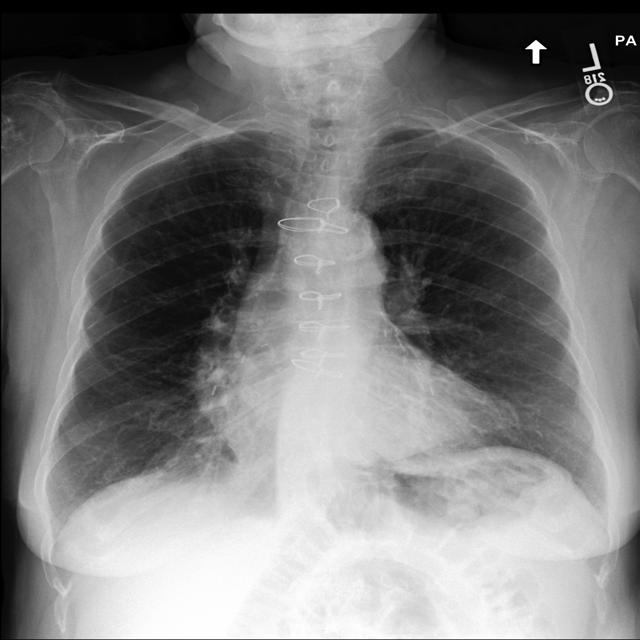

images/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg

ADDED

|

med_rpg/__init__.py

ADDED

|

@@ -0,0 +1,17 @@


| 1 |

+

# import med_rpg.utils.misc as misc

|

| 2 |

+

# from med_rpg.utils.box_utils import xywh2xyxy

|

| 3 |

+

# from med_rpg.utils.visual_bbox import visualBBox

|

| 4 |

+

# from med_rpg.models import build_model

|

| 5 |

+

# from med_rpg.med_rpg import get_args_parser

|

| 6 |

+

# import med_rpg.transforms as T

|

| 7 |

+

|

| 8 |

+

# import med_rpg.utils.misc

|

| 9 |

+

# import med_rpg.utils.misc as misc

|

| 10 |

+

# from .open_inst import open_instseg

|

| 11 |

+

# from .open_pano import open_panoseg

|

| 12 |

+

# from .open_sem import open_semseg

|

| 13 |

+

# from .ref_cap import referring_captioning

|

| 14 |

+

# from .ref_in import referring_inpainting

|

| 15 |

+

# from .ref_seg import referring_segmentation

|

| 16 |

+

# from .text_ret import text_retrieval

|

| 17 |

+

# from .reg_ret import region_retrieval

|

med_rpg/__pycache__/__init__.cpython-310.pyc

ADDED

|

Binary file (137 Bytes).

|

|

|

med_rpg/__pycache__/data_loader.cpython-310.pyc

ADDED

|

Binary file (9.5 kB).

|

|

|

med_rpg/__pycache__/data_loader.cpython-37.pyc

ADDED

|

Binary file (9.51 kB).

|

|

|

med_rpg/__pycache__/engine.cpython-37.pyc

ADDED

|

Binary file (7.65 kB).

|

|

|

med_rpg/__pycache__/med_rpg.cpython-310.pyc

ADDED

|

Binary file (6.68 kB).

|

|

|

med_rpg/__pycache__/transforms.cpython-310.pyc

ADDED

|

Binary file (10.1 kB).

|

|

|

med_rpg/__pycache__/transforms.cpython-37.pyc

ADDED

|

Binary file (10.8 kB).

|

|

|

med_rpg/data/00363400-cee06fa7-8c2ca1f7-2678a170-b3a62a6e.jpg

ADDED

|

med_rpg/data/04e10148-c36f7afb-d0aaf964-152d8a5d-a02ab550.jpg

ADDED

|

med_rpg/data/1176839d-cf4f677f-d597a1ef-548bc32a-c05429f3.jpg

ADDED

|

med_rpg/data/13255e1f-91b7b172-02baaeee-340ec493-0e531681.jpg

ADDED

|

med_rpg/data/4b7f7a4c-18c39245-53724c25-06878595-7e41bb94.jpg

ADDED

|

med_rpg/data/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg

ADDED

|

med_rpg/data/95423e8e-45dff550-563d3eba-b8bc94be-a87f5a1d.jpg

ADDED

|

med_rpg/data_loader.py

ADDED

|

@@ -0,0 +1,376 @@


| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

|

| 3 |

+

"""

|

| 4 |

+

ReferIt, UNC, UNC+ and GRef referring image segmentation PyTorch dataset.

|

| 5 |

+

|

| 6 |

+

Define and group batches of images, segmentations and queries.

|

| 7 |

+

Based on:

|

| 8 |

+

https://github.com/chenxi116/TF-phrasecut-public/blob/master/build_batches.py

|

| 9 |

+

"""

|

| 10 |

+

|

| 11 |

+

import os

|

| 12 |

+

import re

|

| 13 |

+

# import cv2

|

| 14 |

+

import sys

|

| 15 |

+

import json

|

| 16 |

+

import torch

|

| 17 |

+

import numpy as np

|

| 18 |

+

import os.path as osp

|

| 19 |

+

import scipy.io as sio

|

| 20 |

+

import torch.utils.data as data

|

| 21 |

+

sys.path.append('.')

|

| 22 |

+

|

| 23 |

+

from PIL import Image

|

| 24 |

+

from transformers import AutoTokenizer, AutoModel

|

| 25 |

+

# from pytorch_pretrained_bert.tokenization import BertTokenizer

|

| 26 |

+

# from transformers import BertTokenizer

|

| 27 |

+

from utils.word_utils import Corpus

|

| 28 |

+

from utils.box_utils import sampleNegBBox

|

| 29 |

+

from utils.genome_utils import getCLSLabel

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

def read_examples(input_line, unique_id):

|

| 33 |

+

"""Read a list of `InputExample`s from an input file."""

|

| 34 |

+

examples = []

|

| 35 |

+

# unique_id = 0

|

| 36 |

+

line = input_line #reader.readline()

|

| 37 |

+

# if not line:

|

| 38 |

+

# break

|

| 39 |

+

line = line.strip()

|

| 40 |

+

text_a = None

|

| 41 |

+

text_b = None

|

| 42 |

+

m = re.match(r"^(.*) \|\|\| (.*)$", line)

|

| 43 |

+

if m is None:

|

| 44 |

+

text_a = line

|

| 45 |

+

else:

|

| 46 |

+

text_a = m.group(1)

|

| 47 |

+

text_b = m.group(2)

|

| 48 |

+

examples.append(

|

| 49 |

+

InputExample(unique_id=unique_id, text_a=text_a, text_b=text_b))

|

| 50 |

+

# unique_id += 1

|

| 51 |

+

return examples

|

| 52 |

+

|

| 53 |

+

## Bert text encoding

|

| 54 |

+

class InputExample(object):

|

| 55 |

+

def __init__(self, unique_id, text_a, text_b):

|

| 56 |

+

self.unique_id = unique_id

|

| 57 |

+

self.text_a = text_a

|

| 58 |

+

self.text_b = text_b

|

| 59 |

+

|

| 60 |

+

class InputFeatures(object):

|

| 61 |

+

"""A single set of features of data."""

|

| 62 |

+

def __init__(self, unique_id, tokens, input_ids, input_mask, input_type_ids):

|

| 63 |

+

self.unique_id = unique_id

|

| 64 |

+

self.tokens = tokens

|

| 65 |

+

self.input_ids = input_ids

|

| 66 |

+

self.input_mask = input_mask

|

| 67 |

+

self.input_type_ids = input_type_ids

|

| 68 |

+

|

| 69 |

+

def convert_examples_to_features(examples, seq_length, tokenizer, usemarker=None):

|

| 70 |

+

"""Loads a data file into a list of `InputBatch`s."""

|

| 71 |

+

features = []

|

| 72 |

+

for (ex_index, example) in enumerate(examples):

|

| 73 |

+

tokens_a = tokenizer.tokenize(example.text_a)

|

| 74 |

+

|

| 75 |

+

tokens_b = None

|

| 76 |

+

if example.text_b:

|

| 77 |

+

tokens_b = tokenizer.tokenize(example.text_b)

|

| 78 |

+

|

| 79 |

+

if tokens_b:

|

| 80 |

+

# Modifies `tokens_a` and `tokens_b` in place so that the total

|

| 81 |

+

# length is less than the specified length.

|

| 82 |

+

# Account for [CLS], [SEP], [SEP] with "- 3"

|

| 83 |

+

_truncate_seq_pair(tokens_a, tokens_b, seq_length - 3)

|

| 84 |

+

else:

|

| 85 |

+

if usemarker is not None:

|

| 86 |

+

# tokens_a = ['a', 'e', 'b', '*', 'c', 'd', 'f', 'g', 'h', 'i', 'j', 'k', 'l', 'm', 'n', 'o', 'p', 'q', 'r', 's', 't', '*', 'u']

|

| 87 |

+

marker_idx = [i for i,x in enumerate(tokens_a) if x=='*']

|

| 88 |

+

if marker_idx[1] > seq_length - 3 and len(tokens_a) - seq_length+1 < marker_idx[0]: # the index of the second '*' must not exceed 17, and the first '*' counted from the end must not overflow

|

| 89 |

+

tokens_a = tokens_a[-(seq_length-2):]

|

| 90 |

+

new_marker_idx = [i for i,x in enumerate(tokens_a) if x=='*']

|

| 91 |

+

if len(new_marker_idx) < 2: # the first marker was truncated away

|

| 92 |

+

pass

|

| 93 |

+

elif len(tokens_a) - seq_length+1 >= marker_idx[0]:

|

| 94 |

+

max_len = min(marker_idx[1]-marker_idx[0]+1, seq_length-2)

|

| 95 |

+

tokens_a = tokens_a[marker_idx[0]: marker_idx[0]+max_len]

|

| 96 |

+

tokens_a[-1] = '*' # if the text between the two '*' markers exceeds the limit, force the last token to '*'

|

| 97 |

+

elif marker_idx[1]-marker_idx[0]<2:

|

| 98 |

+

tokens_a = [i for i in tokens_a if i != '*']

|

| 99 |

+

tokens_a = ['*'] + tokens_a + ['*'] # if the two '*' markers are adjacent, move them to the start and end

|

| 100 |

+

else:

|

| 101 |

+

if len(tokens_a) > seq_length - 2:

|

| 102 |

+

tokens_a = tokens_a[0:(seq_length - 2)]

|

| 103 |

+

else:

|

| 104 |

+

# Account for [CLS] and [SEP] with "- 2"

|

| 105 |

+

if len(tokens_a) > seq_length - 2:

|

| 106 |

+

tokens_a = tokens_a[0:(seq_length - 2)]

|

| 107 |

+

|

| 108 |

+

tokens = []

|

| 109 |

+

input_type_ids = []

|

| 110 |

+

tokens.append("[CLS]")

|

| 111 |

+

input_type_ids.append(0)

|

| 112 |

+

for token in tokens_a:

|

| 113 |

+

tokens.append(token)

|

| 114 |

+

input_type_ids.append(0)

|

| 115 |

+

tokens.append("[SEP]")

|

| 116 |

+

input_type_ids.append(0)

|

| 117 |

+

|

| 118 |

+

if tokens_b:

|

| 119 |

+

for token in tokens_b:

|

| 120 |

+

tokens.append(token)

|

| 121 |

+

input_type_ids.append(1)

|

| 122 |

+

tokens.append("[SEP]")

|

| 123 |

+

input_type_ids.append(1)

|

| 124 |

+

|

| 125 |

+

input_ids = tokenizer.convert_tokens_to_ids(tokens)

|

| 126 |

+

|

| 127 |

+

# The mask has 1 for real tokens and 0 for padding tokens. Only real

|

| 128 |

+

# tokens are attended to.

|

| 129 |

+

input_mask = [1] * len(input_ids)

|

| 130 |

+

|

| 131 |

+

# Zero-pad up to the sequence length.

|

| 132 |

+

while len(input_ids) < seq_length:

|

| 133 |

+

input_ids.append(0)

|

| 134 |

+

input_mask.append(0)

|

| 135 |

+

input_type_ids.append(0)

|

| 136 |

+

|

| 137 |

+

assert len(input_ids) == seq_length

|

| 138 |

+

assert len(input_mask) == seq_length

|

| 139 |

+

assert len(input_type_ids) == seq_length

|

| 140 |

+

features.append(

|

| 141 |

+

InputFeatures(

|

| 142 |

+

unique_id=example.unique_id,

|

| 143 |

+

tokens=tokens,

|

| 144 |

+

input_ids=input_ids,

|

| 145 |

+

input_mask=input_mask,

|

| 146 |

+

input_type_ids=input_type_ids))

|

| 147 |

+

return features

|

| 148 |

+

|

| 149 |

+

class DatasetNotFoundError(Exception):

|

| 150 |

+

pass

|

| 151 |

+

|

| 152 |

+

class TransVGDataset(data.Dataset):

|

| 153 |

+

SUPPORTED_DATASETS = {

|

| 154 |

+

'referit': {'splits': ('train', 'val', 'trainval', 'test')},

|

| 155 |

+

'unc': {

|

| 156 |

+

'splits': ('train', 'val', 'trainval', 'testA', 'testB'),

|

| 157 |

+

'params': {'dataset': 'refcoco', 'split_by': 'unc'}

|

| 158 |

+

},

|

| 159 |

+

'unc+': {

|

| 160 |

+

'splits': ('train', 'val', 'trainval', 'testA', 'testB'),

|

| 161 |

+

'params': {'dataset': 'refcoco+', 'split_by': 'unc'}

|

| 162 |

+

},

|

| 163 |

+

'gref': {

|

| 164 |

+

'splits': ('train', 'val'),

|

| 165 |

+

'params': {'dataset': 'refcocog', 'split_by': 'google'}

|

| 166 |

+

},

|

| 167 |

+

'gref_umd': {

|

| 168 |

+

'splits': ('train', 'val', 'test'),

|

| 169 |

+

'params': {'dataset': 'refcocog', 'split_by': 'umd'}

|

| 170 |

+

},

|

| 171 |

+

'flickr': {

|

| 172 |

+

'splits': ('train', 'val', 'test')

|

| 173 |

+

},

|

| 174 |

+

'MS_CXR': {

|

| 175 |

+

'splits': ('train', 'val', 'test'),

|

| 176 |

+

'params': {'dataset': 'MS_CXR', 'split_by': 'MS_CXR'}

|

| 177 |

+

},

|

| 178 |

+

'ChestXray8': {

|

| 179 |

+

'splits': ('train', 'val', 'test'),

|

| 180 |

+

'params': {'dataset': 'ChestXray8', 'split_by': 'ChestXray8'}

|

| 181 |

+

},

|

| 182 |

+

'SGH_CXR_V1': {

|

| 183 |

+

'splits': ('train', 'val', 'test'),

|

| 184 |

+

'params': {'dataset': 'SGH_CXR_V1', 'split_by': 'SGH_CXR_V1'}

|

| 185 |

+

}

|

| 186 |

+

|

| 187 |

+

}

|

| 188 |

+

|

| 189 |

+

def __init__(self, args, data_root, split_root='data', dataset='referit',

|

| 190 |

+

transform=None, return_idx=False, testmode=False,

|

| 191 |

+

split='train', max_query_len=128, lstm=False,

|

| 192 |

+

bert_model='bert-base-uncased'):

|

| 193 |

+

self.images = []

|

| 194 |

+

self.data_root = data_root

|

| 195 |

+

self.split_root = split_root

|

| 196 |

+

self.dataset = dataset

|

| 197 |

+

self.query_len = max_query_len

|

| 198 |

+

self.lstm = lstm

|

| 199 |

+

self.transform = transform

|

| 200 |

+

self.testmode = testmode

|

| 201 |

+

self.split = split

|

| 202 |

+

self.tokenizer = AutoTokenizer.from_pretrained(bert_model, do_lower_case=True)

|

| 203 |

+

self.return_idx=return_idx

|

| 204 |

+

self.args = args

|

| 205 |

+

self.ID_Categories = {1: 'Cardiomegaly', 2: 'Lung Opacity', 3:'Edema', 4: 'Consolidation', 5: 'Pneumonia', 6:'Atelectasis', 7: 'Pneumothorax', 8:'Pleural Effusion'}

|

| 206 |

+

|

| 207 |

+

assert self.transform is not None

|

| 208 |

+

|

| 209 |

+

if split == 'train':

|

| 210 |

+

self.augment = True

|

| 211 |

+

else:

|

| 212 |

+

self.augment = False

|

| 213 |

+

|

| 214 |

+

if self.dataset == 'MS_CXR':

|

| 215 |

+

self.dataset_root = osp.join(self.data_root, 'MS_CXR')

|

| 216 |

+

self.im_dir = self.dataset_root # the actual image paths are stored in the split file

|

| 217 |

+

elif self.dataset == 'ChestXray8':

|

| 218 |

+

self.dataset_root = osp.join(self.data_root, 'ChestXray8')

|

| 219 |

+

self.im_dir = self.dataset_root # the actual image paths are stored in the split file

|

| 220 |

+

elif self.dataset == 'SGH_CXR_V1':

|

| 221 |

+

self.dataset_root = osp.join(self.data_root, 'SGH_CXR_V1')

|

| 222 |

+

self.im_dir = self.dataset_root # the actual image paths are stored in the split file

|

| 223 |

+

elif self.dataset == 'referit':

|

| 224 |

+

self.dataset_root = osp.join(self.data_root, 'referit')

|

| 225 |

+

self.im_dir = osp.join(self.dataset_root, 'images')

|

| 226 |

+

self.split_dir = osp.join(self.dataset_root, 'splits')

|

| 227 |

+

elif self.dataset == 'flickr':

|

| 228 |

+

self.dataset_root = osp.join(self.data_root, 'Flickr30k')

|

| 229 |

+

self.im_dir = osp.join(self.dataset_root, 'flickr30k_images')

|

| 230 |

+

else: ## refcoco, etc.

|

| 231 |

+

self.dataset_root = osp.join(self.data_root, 'other')

|

| 232 |

+

self.im_dir = osp.join(

|

| 233 |

+

self.dataset_root, 'images', 'mscoco', 'images', 'train2014')

|

| 234 |

+

self.split_dir = osp.join(self.dataset_root, 'splits')

|

| 235 |

+

|

| 236 |

+

if not self.exists_dataset():

|

| 237 |

+

# self.process_dataset()

|

| 238 |

+

print('Please download index cache to data folder: \n \

|

| 239 |

+

https://drive.google.com/open?id=1cZI562MABLtAzM6YU4WmKPFFguuVr0lZ')

|

| 240 |

+

exit(0)

|

| 241 |

+

|

| 242 |

+

dataset_path = osp.join(self.split_root, self.dataset)

|

| 243 |

+

valid_splits = self.SUPPORTED_DATASETS[self.dataset]['splits']

|

| 244 |

+

|

| 245 |

+

if self.lstm:

|

| 246 |

+

self.corpus = Corpus()

|

| 247 |

+

corpus_path = osp.join(dataset_path, 'corpus.pth')

|

| 248 |

+

self.corpus = torch.load(corpus_path)

|

| 249 |

+

|

| 250 |

+

if split not in valid_splits:

|

| 251 |

+

raise ValueError(

|

| 252 |

+

'Dataset {0} does not have split {1}'.format(

|

| 253 |

+

self.dataset, split))

|

| 254 |

+

|

| 255 |

+

splits = [split]

|

| 256 |

+

if self.dataset != 'referit':

|

| 257 |

+

splits = ['train', 'val'] if split == 'trainval' else [split]

|

| 258 |

+

for split in splits:

|

| 259 |

+

imgset_file = '{0}_{1}.pth'.format(self.dataset, split)

|

| 260 |

+

imgset_path = osp.join(dataset_path, imgset_file)

|

| 261 |

+

self.images += torch.load(imgset_path)

|

| 262 |

+

|

| 263 |

+

def exists_dataset(self):

|

| 264 |

+

return osp.exists(osp.join(self.split_root, self.dataset))

|

| 265 |

+

|

| 266 |

+

def pull_item(self, idx):

|

| 267 |

+

info = {}

|

| 268 |

+

if self.dataset == 'MS_CXR':

|

| 269 |

+

# anno_id, image_id, category_id, img_file, bbox, width, height, phrase, phrase_marker = self.images[idx] # the three core fields: img_file, bbox, phrase

|

| 270 |

+

anno_id, image_id, category_id, img_file, bbox, width, height, phrase = self.images[idx] # the three core fields: img_file, bbox, phrase

|

| 271 |

+

info['anno_id'] = anno_id

|

| 272 |

+

info['category_id'] = category_id

|

| 273 |

+

elif self.dataset == 'ChestXray8':

|

| 274 |

+

anno_id, image_id, category_id, img_file, bbox, phrase, prompt_text = self.images[idx] # the three core fields: img_file, bbox, phrase

|

| 275 |

+

info['anno_id'] = anno_id

|

| 276 |

+

info['category_id'] = category_id

|

| 277 |

+

# info['img_file'] = img_file

|

| 278 |

+

elif self.dataset == 'SGH_CXR_V1':

|

| 279 |

+

anno_id, image_id, category_id, img_file, bbox, phrase, patient_id = self.images[idx] # the three core fields: img_file, bbox, phrase

|

| 280 |

+

info['anno_id'] = anno_id

|

| 281 |

+

info['category_id'] = category_id

|

| 282 |

+

elif self.dataset == 'flickr':

|

| 283 |

+

img_file, bbox, phrase = self.images[idx]

|

| 284 |

+

else:

|

| 285 |

+

img_file, _, bbox, phrase, attri = self.images[idx]

|

| 286 |

+

## box format: to x1y1x2y2

|

| 287 |

+

if not (self.dataset == 'referit' or self.dataset == 'flickr'):

|

| 288 |

+

bbox = np.array(bbox, dtype=int)

|

| 289 |

+

bbox[2], bbox[3] = bbox[0]+bbox[2], bbox[1]+bbox[3]

|

| 290 |

+

else:

|

| 291 |

+

bbox = np.array(bbox, dtype=int)

|

| 292 |

+

|

| 293 |

+

# img_file = 'files/p12/p12423759/s53349935/b8c7a778-2f7f712d-5c598645-6aeebbb3-66ffbcc7.jpg' # Experiments @fixImage

|

| 294 |

+

if self.args.ablation == 'onlyText':

|

| 295 |

+

img_file = 'files/p12/p12423759/s53349935/b8c7a778-2f7f712d-5c598645-6aeebbb3-66ffbcc7.jpg'

|

| 296 |

+

|

| 297 |

+

img_path = osp.join(self.im_dir, img_file)

|

| 298 |

+

info['img_path'] = img_path

|

| 299 |

+

img = Image.open(img_path).convert("RGB")

|

| 300 |

+

|

| 301 |

+

# img = cv2.imread(img_path)

|

| 302 |

+

# ## duplicate channel if gray image

|

| 303 |

+

# if img.shape[-1] > 1:

|

| 304 |

+

# img = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

|

| 305 |

+

# else:

|

| 306 |

+

# img = np.stack([img] * 3)

|

| 307 |

+

|

| 308 |

+

bbox = torch.tensor(bbox)

|

| 309 |

+

bbox = bbox.float()

|

| 310 |

+

# info['phrase_marker'] = phrase_marker

|

| 311 |

+

return img, phrase, bbox, info

|

| 312 |

+

|

| 313 |

+

def tokenize_phrase(self, phrase):

|

| 314 |

+

return self.corpus.tokenize(phrase, self.query_len)

|

| 315 |

+

|

| 316 |

+

def untokenize_word_vector(self, words):

|

| 317 |

+

return self.corpus.dictionary[words]

|

| 318 |

+

|

| 319 |

+

def __len__(self):

|

| 320 |

+

return len(self.images)

|

| 321 |

+

|

| 322 |

+

def __getitem__(self, idx):

|

| 323 |

+

img, phrase, bbox, info = self.pull_item(idx)

|

| 324 |

+

# phrase = phrase.decode("utf-8").encode().lower()

|

| 325 |

+

phrase = phrase.lower()

|

| 326 |

+

if hasattr(self.args, 'CATextPoolType') and self.args.CATextPoolType == 'marker':

|

| 327 |

+

# TODO

|

| 328 |

+

phrase = info['phrase_marker']

|

| 329 |

+

info['phrase_record'] = phrase # for visualization # info: img_path, phrase_record, anno_id, category_id

|

| 330 |

+

input_dict = {'img': img, 'box': bbox, 'text': phrase}

|

| 331 |

+

|

| 332 |

+

if self.args.model_name == 'TransVG_ca' and self.split == 'train':

|

| 333 |

+

NegBBoxs = sampleNegBBox(bbox, self.args.CAsampleType, self.args.CAsampleNum) # negative bbox

|

| 334 |

+

|

| 335 |

+

input_dict = {'img': img, 'box': bbox, 'text': phrase, 'NegBBoxs': NegBBoxs}

|

| 336 |

+

if self.args.model_name == 'TransVG_gn' and self.split == 'train':

|

| 337 |

+

json_name = os.path.splitext(os.path.basename(info['img_path']))[0]+'_SceneGraph.json'

|

| 338 |

+

json_name = os.path.join(self.args.GNpath, json_name)

|

| 339 |

+

# parse the JSON to get all anatomy-level classification labels

|

| 340 |

+

gnLabel = getCLSLabel(json_name, bbox)

|

| 341 |

+

info['gnLabel'] = gnLabel

|

| 342 |

+

|

| 343 |

+

input_dict = self.transform(input_dict)

|

| 344 |

+

img = input_dict['img']

|

| 345 |

+

bbox = input_dict['box']

|

| 346 |

+

phrase = input_dict['text']

|

| 347 |

+

img_mask = input_dict['mask']

|

| 348 |

+

if self.args.model_name == 'TransVG_ca' and self.split == 'train':

|

| 349 |

+

info['NegBBoxs'] = [np.array(negBBox, dtype=np.float32) for negBBox in input_dict['NegBBoxs']]

|

| 350 |

+

|

| 351 |

+

if self.lstm:

|

| 352 |

+

phrase = self.tokenize_phrase(phrase)

|

| 353 |

+

word_id = phrase

|

| 354 |

+

word_mask = np.array(word_id>0, dtype=int)

|

| 355 |

+

else:

|

| 356 |

+

## encode phrase to bert input

|

| 357 |

+

examples = read_examples(phrase, idx)

|

| 358 |

+

if hasattr(self.args, 'CATextPoolType') and self.args.CATextPoolType == 'marker':

|

| 359 |

+

use_marker = 'yes'

|

| 360 |

+

else:

|

| 361 |

+

use_marker = None

|

| 362 |

+

features = convert_examples_to_features(

|

| 363 |

+

examples=examples, seq_length=self.query_len, tokenizer=self.tokenizer, usemarker=use_marker)

|

| 364 |

+

word_id = features[0].input_ids

|

| 365 |

+

word_mask = features[0].input_mask

|

| 366 |

+

if self.args.ablation == 'onlyImage':

|

| 367 |

+

word_mask = [0] * len(word_mask) # experiments @2

|

| 368 |

+

# if self.args.ablation == 'onlyText':

|

| 369 |

+

# img_mask = np.ones_like(np.array(img_mask))

|

| 370 |

+

|

| 371 |

+

if self.testmode:

|

| 372 |

+

return img, np.array(word_id, dtype=int), np.array(word_mask, dtype=int), \

|

| 373 |

+

np.array(bbox, dtype=np.float32), np.array(ratio, dtype=np.float32), \

|

| 374 |

+

np.array(dw, dtype=np.float32), np.array(dh, dtype=np.float32), self.images[idx][0]

|

| 375 |

+

else:

|

| 376 |

+

return img, np.array(img_mask), np.array(word_id, dtype=int), np.array(word_mask, dtype=int), np.array(bbox, dtype=np.float32), info

|
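The `pull_item` method above converts boxes from `[x, y, w, h]` to `[x1, y1, x2, y2]` for every dataset except `referit` and `flickr`. A minimal standalone sketch of that conversion follows; the helper name `xywh_to_xyxy` is hypothetical and is not the repository's `utils.box_utils.xywh2xyxy`, whose exact behavior is not shown in this diff. The sample box is the `case1` box from `med_rpg/demo.py`.

```python
import numpy as np


def xywh_to_xyxy(bbox):
    """Convert [x, y, w, h] to [x1, y1, x2, y2] and return a new int array.

    Hypothetical helper for illustration; it mirrors the in-place update
    `bbox[2], bbox[3] = bbox[0]+bbox[2], bbox[1]+bbox[3]` in pull_item.
    """
    x, y, w, h = np.asarray(bbox, dtype=int)
    return np.array([x, y, x + w, y + h], dtype=int)


# The case1 box from demo.py, given as xywh.
converted = xywh_to_xyxy([332, 28, 141, 48])
print(converted.tolist())  # [332, 28, 473, 76]
```

Returning a new array rather than mutating the input keeps the original annotation intact, which may matter if the same record is read more than once.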

med_rpg/demo.py

ADDED

|

@@ -0,0 +1,222 @@

|

|

| 1 |

+

import argparse

|

| 2 |

+

import numpy as np

|

| 3 |

+

import torch

|

| 4 |

+

|

| 5 |

+

# import datasets

|

| 6 |

+

import utils.misc as misc

|

| 7 |

+

from utils.box_utils import xywh2xyxy

|

| 8 |

+

from utils.visual_bbox import visualBBox

|

| 9 |

+

from models import build_model

|

| 10 |

+

import transforms as T

|

| 11 |

+

import PIL.Image as Image

|

| 12 |

+

import data_loader

|

| 13 |

+

from transformers import AutoTokenizer

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def get_args_parser():

|

| 17 |

+

parser = argparse.ArgumentParser('Set transformer detector', add_help=False)

|

| 18 |

+

|

| 19 |

+

# Input config

|

| 20 |

+

# parser.add_argument('--image', type=str, default='xxx', help="input X-ray image.")

|

| 21 |

+

# parser.add_argument('--phrase', type=str, default='xxx', help="input phrase.")

|

| 22 |

+

# parser.add_argument('--bbox', type=str, default='xxx', help="alternative, if you want to show ground-truth bbox")

|

| 23 |

+

|

| 24 |

+

# fool

|

| 25 |

+

parser.add_argument('--lr', default=1e-4, type=float)

|

| 26 |

+

parser.add_argument('--lr_bert', default=0., type=float)

|

| 27 |

+

parser.add_argument('--lr_visu_cnn', default=0., type=float)

|

| 28 |

+

parser.add_argument('--lr_visu_tra', default=1e-5, type=float)

|

| 29 |

+

parser.add_argument('--batch_size', default=32, type=int)

|

| 30 |

+

parser.add_argument('--weight_decay', default=1e-4, type=float)

|

| 31 |

+

parser.add_argument('--epochs', default=100, type=int)

|

| 32 |

+

parser.add_argument('--lr_power', default=0.9, type=float, help='lr poly power')

|

| 33 |

+

parser.add_argument('--clip_max_norm', default=0., type=float,

|

| 34 |

+

help='gradient clipping max norm')

|

| 35 |

+

parser.add_argument('--eval', dest='eval', default=False, action='store_true', help='if evaluation only')

|

| 36 |

+

parser.add_argument('--optimizer', default='rmsprop', type=str)

|

| 37 |

+

parser.add_argument('--lr_scheduler', default='poly', type=str)

|

| 38 |

+

parser.add_argument('--lr_drop', default=80, type=int)

|

| 39 |

+

# Model parameters

|

| 40 |

+

parser.add_argument('--model_name', type=str, default='TransVG_ca',

|

| 41 |

+

help="Name of model to be exploited.")

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

# Transformers in two branches

|

| 45 |

+

parser.add_argument('--bert_enc_num', default=12, type=int)

|

| 46 |

+

parser.add_argument('--detr_enc_num', default=6, type=int)

|

| 47 |

+

|

| 48 |

+

# DETR parameters

|

| 49 |

+

# * Backbone

|

| 50 |

+

parser.add_argument('--backbone', default='resnet50', type=str,

|

| 51 |

+

help="Name of the convolutional backbone to use")

|

| 52 |

+

parser.add_argument('--dilation', action='store_true',

|

| 53 |

+

help="If true, we replace stride with dilation in the last convolutional block (DC5)")

|

| 54 |

+

parser.add_argument('--position_embedding', default='sine', type=str, choices=('sine', 'learned'), help="Type of positional embedding to use on top of the image features")

|

| 55 |

+

# * Transformer

|

| 56 |

+

parser.add_argument('--enc_layers', default=6, type=int,

|

| 57 |

+

help="Number of encoding layers in the transformer")

|

| 58 |

+

parser.add_argument('--dec_layers', default=0, type=int,

|

| 59 |

+

help="Number of decoding layers in the transformer")

|

| 60 |

+

parser.add_argument('--dim_feedforward', default=2048, type=int,

|

| 61 |

+

help="Intermediate size of the feedforward layers in the transformer blocks")

|

| 62 |

+

parser.add_argument('--hidden_dim', default=256, type=int,

|

| 63 |

+

help="Size of the embeddings (dimension of the transformer)")

|

| 64 |

+

parser.add_argument('--dropout', default=0.1, type=float,

|

| 65 |

+

help="Dropout applied in the transformer")

|

| 66 |

+

parser.add_argument('--nheads', default=8, type=int,

|

| 67 |

+

help="Number of attention heads inside the transformer's attentions")

|

| 68 |

+

parser.add_argument('--num_queries', default=100, type=int,

|

| 69 |

+

help="Number of query slots")

|

| 70 |

+

parser.add_argument('--pre_norm', action='store_true')

|

| 71 |

+

|

| 72 |

+

parser.add_argument('--imsize', default=640, type=int, help='image size')

|

| 73 |

+

parser.add_argument('--emb_size', default=512, type=int,

|

| 74 |

+

help='fusion module embedding dimensions')

|

| 75 |

+

# Vision-Language Transformer

|

| 76 |

+

parser.add_argument('--use_vl_type_embed', action='store_true',

|

| 77 |

+

help="If true, use vl_type embedding")

|

| 78 |

+

parser.add_argument('--vl_dropout', default=0.1, type=float,

|

| 79 |

+

help="Dropout applied in the vision-language transformer")

|

| 80 |

+

parser.add_argument('--vl_nheads', default=8, type=int,

|

| 81 |

+

help="Number of attention heads inside the vision-language transformer's attentions")

|

| 82 |

+

parser.add_argument('--vl_hidden_dim', default=256, type=int,

|

| 83 |

+

help='Size of the embeddings (dimension of the vision-language transformer)')

|

| 84 |

+

parser.add_argument('--vl_dim_feedforward', default=2048, type=int,

|

| 85 |

+

help="Intermediate size of the feedforward layers in the vision-language transformer blocks")

|

| 86 |

+

parser.add_argument('--vl_enc_layers', default=6, type=int,

|

| 87 |

+

help='Number of encoders in the vision-language transformer')

|

| 88 |

+

|

| 89 |

+

# Dataset parameters

|

| 90 |

+

# parser.add_argument('--data_root', type=str, default='./ln_data/',

|

| 91 |

+

# help='path to ReferIt splits data folder')

|

| 92 |

+

# parser.add_argument('--split_root', type=str, default='data',

|

| 93 |

+

# help='location of pre-parsed dataset info')

|

| 94 |

+

parser.add_argument('--dataset', default='MS_CXR', type=str,

|

| 95 |

+

help='MS_CXR/ChestXray8/SGH_CXR_V1/referit/flickr/unc/unc+/gref')

|

| 96 |

+

parser.add_argument('--max_query_len', default=20, type=int,

|

| 97 |

+

help='maximum time steps (lang length) per batch')

|

| 98 |

+

|

| 99 |

+

# dataset parameters

|

| 100 |

+

parser.add_argument('--output_dir', default='outputs',

|

| 101 |

+

help='path where to save, empty for no saving')

|

| 102 |

+

parser.add_argument('--device', default='cuda',

|

| 103 |

+

help='device to use for training / testing')

|

| 104 |

+

# parser.add_argument('--seed', default=13, type=int)

|

| 105 |

+

# parser.add_argument('--resume', default='', help='resume from checkpoint')

|

| 106 |

+

parser.add_argument('--detr_model', default='./saved_models/detr-r50.pth', type=str, help='detr model')

|

| 107 |

+

parser.add_argument('--bert_model', default='bert-base-uncased', type=str, help='bert model')

|

| 108 |

+

# parser.add_argument('--light', dest='light', default=False, action='store_true', help='if use smaller model')

|

| 109 |

+

# parser.add_argument('--start_epoch', default=0, type=int, metavar='N',

|

| 110 |

+

# help='start epoch')

|

| 111 |

+

# parser.add_argument('--num_workers', default=2, type=int)

|

| 112 |

+

|

| 113 |

+

# distributed training parameters

|

| 114 |

+

# parser.add_argument('--world_size', default=1, type=int,

|

| 115 |

+

# help='number of distributed processes')

|

| 116 |

+

# parser.add_argument('--dist_url', default='env://', help='url used to set up distributed training')

|

| 117 |

+

|

| 118 |

+

# evaluation options

|

| 119 |

+

# parser.add_argument('--eval_set', default='test', type=str)

|

| 120 |

+

parser.add_argument('--eval_model', default='checkpoint/best_miou_checkpoint.pth', type=str)

|

| 121 |

+

|

| 122 |

+

# visualization options

|

| 123 |

+

# parser.add_argument('--visualization', action='store_true',

|

| 124 |

+

# help="If true, visual the bbox")

|

| 125 |

+

# parser.add_argument('--visual_MHA', action='store_true',

|

| 126 |

+

# help="If true, visual the attention maps")

|

| 127 |

+

|

| 128 |

+

return parser

|

| 129 |

+

|

| 130 |

+

def make_transforms(imsize):

|

| 131 |

+

return T.Compose([

|

| 132 |

+

T.RandomResize([imsize]),

|

| 133 |

+

T.ToTensor(),

|

| 134 |

+

T.NormalizeAndPad(size=imsize),

|

| 135 |

+

])

|

| 136 |

+

|

| 137 |

+

def main(args):

|

| 138 |

+

|

| 139 |

+

device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

|

| 140 |

+

image_size = 640 # hyper parameters

|

| 141 |

+

|

| 142 |

+

## build data

|

| 143 |

+

# case1

|

| 144 |

+

img_path = "data/649af982-e3af4e3a-75013d30-cdc71514-a34738fd.jpg"

|

| 145 |

+

phrase = 'Small left apical pneumothorax'

|

| 146 |

+

bbox = [332, 28, 141, 48] # xywh

|

| 147 |

+

# # case2

|

| 148 |

+

# img_path = 'files/p10/p10977201/s59062881/00363400-cee06fa7-8c2ca1f7-2678a170-b3a62a6e.jpg'

|

| 149 |

+

# phrase = 'small apical pneumothorax'

|

| 150 |

+

# bbox = [161, 134, 111, 37]

|

| 151 |

+

# # case3

|

| 152 |

+

# img_path = 'files/p18/p18426683/s59612243/95423e8e-45dff550-563d3eba-b8bc94be-a87f5a1d.jpg'

|

| 153 |

+

# phrase = 'cardiac silhouette enlarged'

|

| 154 |

+

# bbox = [196, 312, 371, 231]

|

| 155 |

+

# # case4

|

| 156 |

+

# img_path = 'files/p10/p10048451/s53489305/4b7f7a4c-18c39245-53724c25-06878595-7e41bb94.jpg'

|

| 157 |

+

# phrase = 'Focal opacity in the lingular lobe'

|

| 158 |

+

# bbox = [467, 373, 131, 189]

|

| 159 |

+

# # case5

|

| 160 |

+

# img_path = 'files/p19/p19757720/s59572378/13255e1f-91b7b172-02baaeee-340ec493-0e531681.jpg'

|

| 161 |

+

# phrase = 'multisegmental right upper lobe consolidation is present'

|

| 162 |

+

# bbox = [9, 86, 232, 278]

|

| 163 |

+

# # case6

|

| 164 |

+

# img_path = 'files/p10/p10469621/s56786891/04e10148-c36f7afb-d0aaf964-152d8a5d-a02ab550.jpg'

|

| 165 |

+

# phrase = 'right middle lobe opacity, suspicious for pneumonia in the proper clinical setting'

|

| 166 |

+

# bbox = [108, 405, 162, 83]

|

| 167 |

+

# # case7

|

| 168 |

+

# img_path = 'files/p10/p10670818/s50191454/1176839d-cf4f677f-d597a1ef-548bc32a-c05429f3.jpg'

|

| 169 |

+

# phrase = 'Newly appeared lingular opacity'

|

| 170 |

+

# bbox = [392, 297, 141, 151]

|

| 171 |

+

|

| 172 |

+

bbox = bbox[:2] + [bbox[0]+bbox[2], bbox[1]+bbox[3]] # xywh2xyxy

|

| 173 |

+

|

| 174 |

+

## encode phrase to bert input

|

| 175 |

+

examples = data_loader.read_examples(phrase, 1)

|

| 176 |

+

tokenizer = AutoTokenizer.from_pretrained(args.bert_model, do_lower_case=True)

|

| 177 |

+

features = data_loader.convert_examples_to_features(

|

| 178 |

+

examples=examples, seq_length=args.max_query_len, tokenizer=tokenizer, usemarker=None)

|