update readme

- README.md +13 -6

- TFT-ID.png +0 -0

README.md

CHANGED

@@ -1,6 +1,6 @@

---

license: mit

-license_link: https://huggingface.co/microsoft/Florence-2-

pipeline_tag: image-text-to-text

tags:

- vision

@@ -14,14 +14,14 @@ tags:

|

|

| 14 |

|

| 15 |

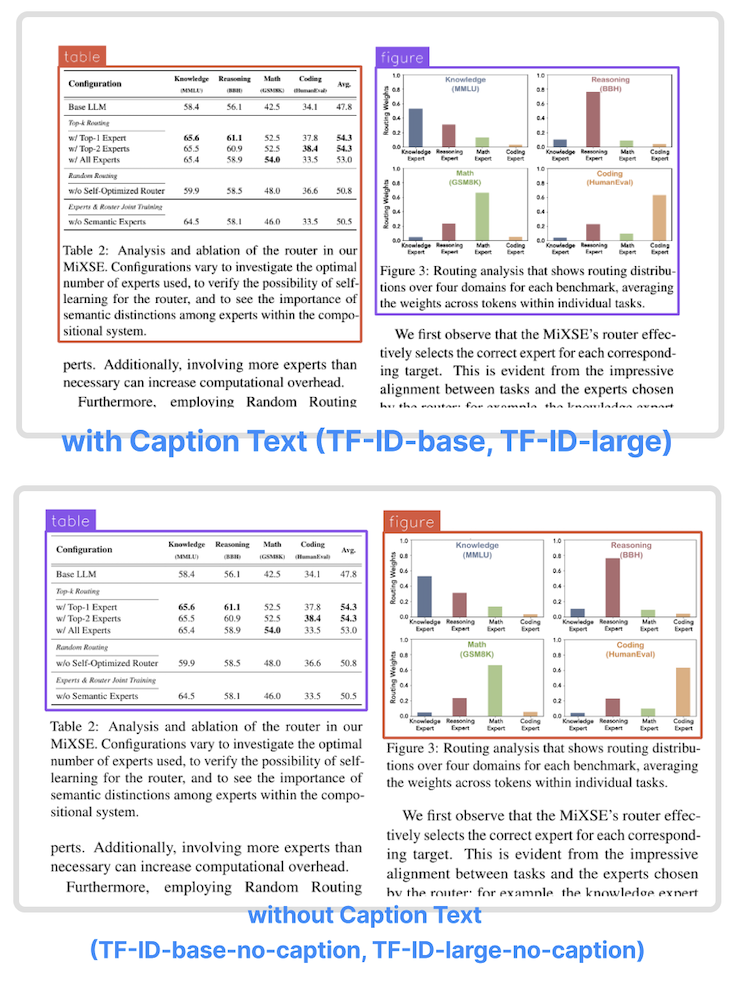

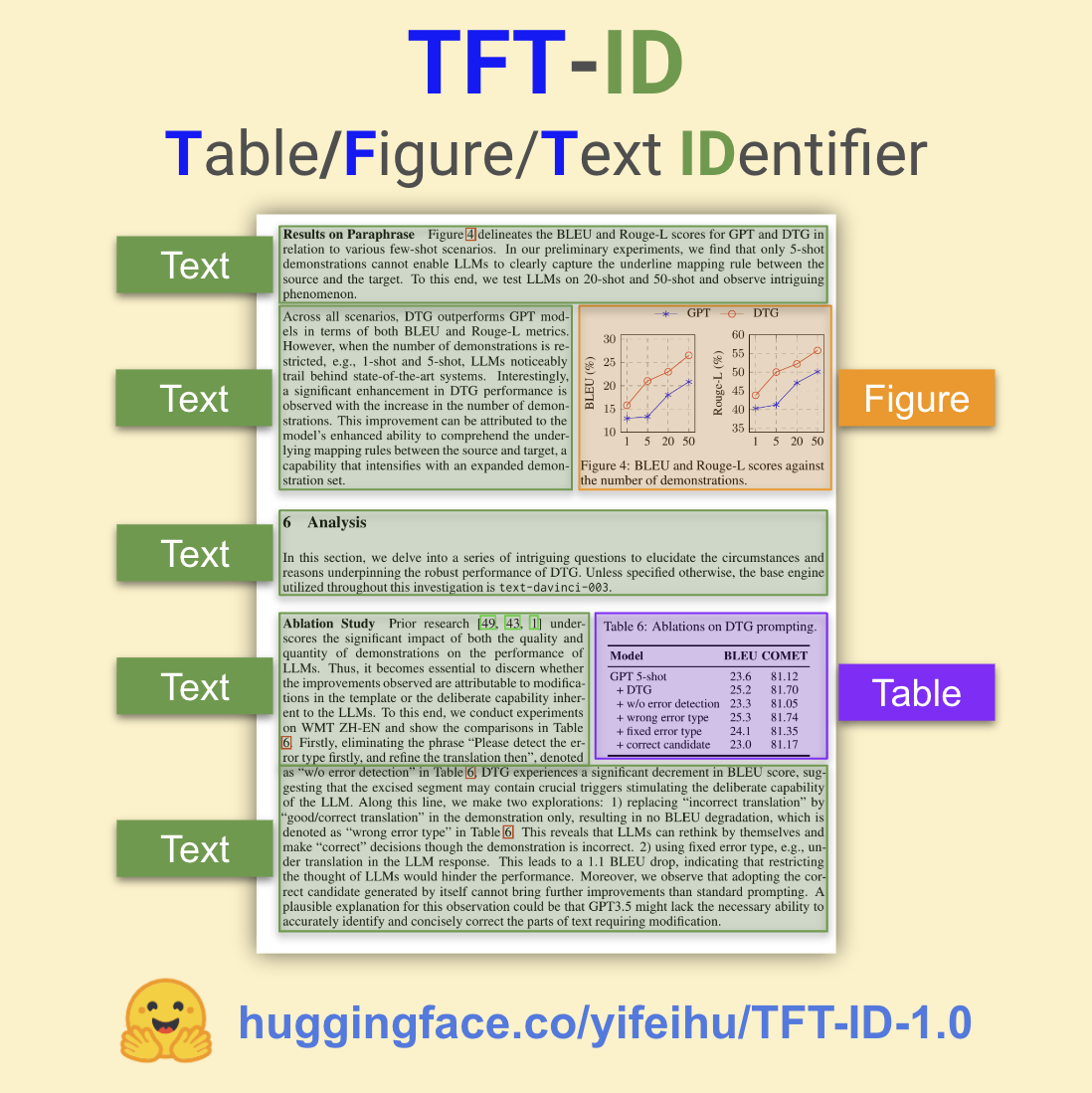

TFT-ID (Table/Figure/Text IDentifier) is a family of object detection models finetuned to extract tables, figures, and text sections in academic papers created by [Yifei Hu](https://x.com/hu_yifei).

|

| 16 |

|

| 17 |

-

|

|

|

|

|

|

|

| 18 |

|

| 19 |

- The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

|

| 20 |

- TFT-ID models take an image of a single paper page as the input, and return bounding boxes for all tables, figures, and text sections in the given page.

|

| 21 |

- The text sections contain clean text content perfect for downstream OCR workflows. However, TFT-ID is not an OCR model.

|

| 22 |

|

| 23 |

-

|

| 24 |

-

|

| 25 |

Object Detection results format:

|

| 26 |

{'\<OD>': {'bboxes': [[x1, y1, x2, y2], ...],

|

| 27 |

'labels': ['label1', 'label2', ...]} }

|

|

@@ -36,10 +36,17 @@ We tested the models on paper pages outside the training dataset. The papers are

Correct output: the model draws correct bounding boxes for every table/figure/text section in the given page and does not miss any content.

| Model | Total Images | Correct Output | Success Rate |
|---------------------------------------------------------------|--------------|----------------|--------------|
| TFT-ID-1.0[[HF]](https://huggingface.co/yifeihu/TFT-ID-1.0) | 373 | 361 | 96.78% |

Depending on the use case, some "incorrect" outputs could still be fully usable. For example, the model may draw two bounding boxes for one figure with two child components.

## How to Get Started with the Model

@@ -51,8 +58,8 @@ import requests

from PIL import Image

from transformers import AutoProcessor, AutoModelForCausalLM

-model = AutoModelForCausalLM.from_pretrained("yifeihu/

-processor = AutoProcessor.from_pretrained("yifeihu/

prompt = "<OD>"

---

license: mit

+license_link: https://huggingface.co/microsoft/Florence-2-large/resolve/main/LICENSE

pipeline_tag: image-text-to-text

tags:

- vision

TFT-ID (Table/Figure/Text IDentifier) is a family of object detection models, created by [Yifei Hu](https://x.com/hu_yifei), that are finetuned to extract tables, figures, and text sections from academic papers.

+

+

+TFT-ID is finetuned from [microsoft/Florence-2](https://huggingface.co/microsoft/Florence-2-large) checkpoints.

- The models were finetuned with papers from Hugging Face Daily Papers. All bounding boxes are manually annotated and checked by humans.

- TFT-ID models take an image of a single paper page as the input, and return bounding boxes for all tables, figures, and text sections in the given page.

- The text sections contain clean text content perfect for downstream OCR workflows. However, TFT-ID is not an OCR model.


Object Detection results format:

|

| 26 |

{'\<OD>': {'bboxes': [[x1, y1, x2, y2], ...],

|

| 27 |

'labels': ['label1', 'label2', ...]} }
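The `<OD>` result pairs a `bboxes` list with a parallel `labels` list under the task key (written `\<OD>` in the README, presumably to escape it in Markdown). A minimal sketch of turning it into per-label crop rectangles, assuming each box is `[x1, y1, x2, y2]` in pixel coordinates of the input page image. The helper `to_regions`, the sample boxes, the page size, and the label names (`figure`, `text`, `table`) are hypothetical illustrations, not output copied from TFT-ID. Boxes are rounded outward and clamped to the page so they could be passed to a crop call (for example Pillow's `Image.crop`, which takes a `(left, upper, right, lower)` tuple) before a text section is handed to a separate OCR engine.

```python
import math
from typing import Dict, List, Sequence, Tuple

# (left, top, right, bottom) in integer pixels
CropBox = Tuple[int, int, int, int]


def to_regions(result: dict, page_w: int, page_h: int, task: str = "<OD>") -> Dict[str, List[CropBox]]:
    """Group detections by label as integer crop boxes clamped to the page.

    Hypothetical helper: assumes `result` has the shape
    {task: {"bboxes": [[x1, y1, x2, y2], ...], "labels": [...]}}
    with float pixel coordinates on a page_w x page_h image.
    """
    detections = result[task]
    bboxes: Sequence[Sequence[float]] = detections["bboxes"]
    labels: Sequence[str] = detections["labels"]
    if len(bboxes) != len(labels):
        raise ValueError("bboxes and labels must have the same length")

    regions: Dict[str, List[CropBox]] = {}
    for (x1, y1, x2, y2), label in zip(bboxes, labels):
        # Round outward so the crop does not cut into the detected region,
        # then clamp to the page bounds.
        left = max(0, math.floor(x1))
        top = max(0, math.floor(y1))
        right = min(page_w, math.ceil(x2))
        bottom = min(page_h, math.ceil(y2))
        if right <= left or bottom <= top:
            continue  # skip boxes that are empty after clamping
        regions.setdefault(label, []).append((left, top, right, bottom))
    return regions


# Hypothetical detections on a 612 x 792 page image.
sample = {
    "<OD>": {
        "bboxes": [[35.2, 60.8, 560.1, 300.4], [40.0, 320.5, 300.7, 700.2], [310.3, 330.0, 575.9, 710.6]],
        "labels": ["figure", "text", "text"],
    }
}
print(to_regions(sample, page_w=612, page_h=792))
# {'figure': [(35, 60, 561, 301)], 'text': [(40, 320, 301, 701), (310, 330, 576, 711)]}
```

Grouping by label keeps downstream code independent of detection order; if the model's real label strings differ, only the dictionary keys change.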

Correct output: the model draws correct bounding boxes for every table/figure/text section in the given page and does not miss any content.

+Task 1: Table, Figure, and Text Section Identification

| Model | Total Images | Correct Output | Success Rate |
|---------------------------------------------------------------|--------------|----------------|--------------|
| TFT-ID-1.0[[HF]](https://huggingface.co/yifeihu/TFT-ID-1.0) | 373 | 361 | 96.78% |

+Task 2: Table and Figure Identification

+| Model | Total Images | Correct Output | Success Rate |

+|---------------------------------------------------------------|--------------|----------------|--------------|

+| **TFT-ID-1.0**[[HF]](https://huggingface.co/yifeihu/TFT-ID-1.0) | 258 | 255 | **98.84%** |

+| TF-ID-large[[HF]](https://huggingface.co/yifeihu/TF-ID-large) | 258 | 253 | 98.06% |

+

Depending on the use case, some "incorrect" outputs could still be fully usable. For example, the model may draw two bounding boxes for one figure with two child components.
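Judging from the numbers, the Success Rate column appears to be Correct Output divided by Total Images, expressed as a percentage rounded to two decimals. Since a page only counts as correct when every region on it is boxed correctly, a single missed section fails the whole page. A quick check under that assumption reproduces all three reported values; `success_rate` is a hypothetical helper for illustration.

```python
def success_rate(correct: int, total: int) -> str:
    """Return correct/total as a percentage string rounded to two decimals."""
    if total <= 0:
        raise ValueError("total must be positive")
    return f"{100 * correct / total:.2f}%"


# (model, task, total pages, pages with correct output) from the tables above
rows = [
    ("TFT-ID-1.0", "Task 1", 373, 361),
    ("TFT-ID-1.0", "Task 2", 258, 255),
    ("TF-ID-large", "Task 2", 258, 253),
]
for model, task, total, correct in rows:
    print(f"{model} ({task}): {success_rate(correct, total)}")
# TFT-ID-1.0 (Task 1): 96.78%
# TFT-ID-1.0 (Task 2): 98.84%
# TF-ID-large (Task 2): 98.06%
```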

## How to Get Started with the Model

from PIL import Image

from transformers import AutoProcessor, AutoModelForCausalLM

+model = AutoModelForCausalLM.from_pretrained("yifeihu/TFT-ID-1.0", trust_remote_code=True)

+processor = AutoProcessor.from_pretrained("yifeihu/TFT-ID-1.0", trust_remote_code=True)

prompt = "<OD>"

TFT-ID.png

ADDED
