Introduction

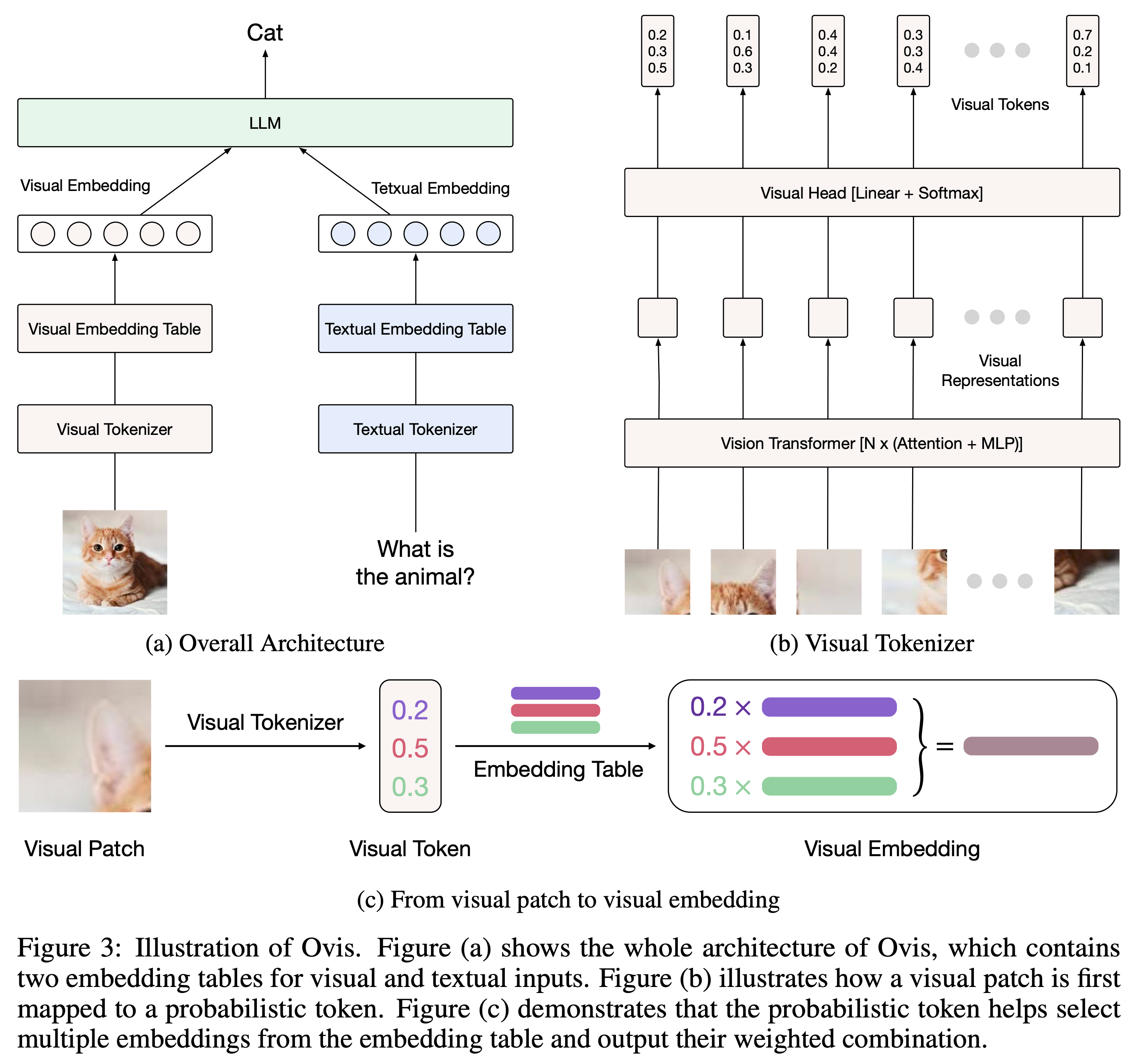

Ovis is a novel Multimodal Large Language Model (MLLM) architecture, designed to structurally align visual and textual embeddings. For a comprehensive introduction, please refer to Ovis paper and Ovis GitHub.

Model

Ovis can be instantiated with popular LLMs (e.g., Qwen, Llama3). We provide the following pretrained Ovis MLLMs:

| Ovis-Clip-Qwen1.5-7B | Ovis-Clip-Llama3-8B | Ovis-Clip-Qwen1.5-14B | |

|---|---|---|---|

| ViT | Clip | Clip | Clip |

| LLM | Qwen1.5-7B-Chat | Llama3-8B-Instruct | Qwen1.5-14B-Chat |

| Download | Huggingface | Huggingface | Huggingface |

| MMStar | 44.3 | 49.5 | 48.5 |

| MMB-EN | 75.1 | 77.4 | 78.4 |

| MMB-CN | 70.2 | 72.8 | 76.6 |

| MMMU-Val | 39.7 | 44.7 | 46.7 |

| MMMU-Test | 37.7 | 39.0 | 40.7 |

| MathVista-Mini | 41.4 | 40.8 | 43.4 |

| MME | 1882 | 2009 | 1961 |

| HallusionBench | 56.4 | 61.1 | 57.6 |

| RealWorldQA | 60.0 | 57.9 | 62.7 |

Usage

Below is a code snippet to run Ovis with multimodal inputs. For additional usage instructions, including inference wrapper and Gradio UI, please refer to Ovis GitHub.

pip install torch==2.1.0 transformers==4.41.1 deepspeed==0.14.0 pillow==10.3.0

import torch

from PIL import Image

from transformers import AutoModelForCausalLM

# load model

model = AutoModelForCausalLM.from_pretrained("AIDC-AI/Ovis-Clip-Qwen1_5-14B",

torch_dtype=torch.bfloat16,

multimodal_max_length=8192,

trust_remote_code=True).cuda()

text_tokenizer = model.get_text_tokenizer()

visual_tokenizer = model.get_visual_tokenizer()

conversation_formatter = model.get_conversation_formatter()

# enter image path and prompt

image_path = input("Enter image path: ")

image = Image.open(image_path)

text = input("Enter prompt: ")

query = f'<image> {text}'

prompt, input_ids = conversation_formatter.format_query(query)

input_ids = torch.unsqueeze(input_ids, dim=0).to(device=model.device)

attention_mask = torch.ne(input_ids, text_tokenizer.pad_token_id).to(device=model.device)

pixel_values = [visual_tokenizer.preprocess_image(image).to(

dtype=visual_tokenizer.dtype, device=visual_tokenizer.device)]

# print model output

with torch.inference_mode():

kwargs = dict(

pixel_values=pixel_values,

attention_mask=attention_mask,

do_sample=False,

top_p=None,

temperature=None,

top_k=None,

repetition_penalty=None,

max_new_tokens=512,

use_cache=True,

eos_token_id=text_tokenizer.eos_token_id,

pad_token_id=text_tokenizer.pad_token_id

)

output_ids = model.generate(input_ids, **kwargs)[0]

input_token_len = input_ids.shape[1]

output = text_tokenizer.decode(output_ids[input_token_len:], skip_special_tokens=True)

print(f'Output: {output}')

Citation

If you find Ovis useful, please cite the paper

@article{lu2024ovis,

title={Ovis: Structural Embedding Alignment for Multimodal Large Language Model},

author={Shiyin Lu and Yang Li and Qing-Guo Chen and Zhao Xu and Weihua Luo and Kaifu Zhang and Han-Jia Ye},

year={2024},

journal={arXiv:2405.20797}

}

License

The project is licensed under the Apache 2.0 License and is restricted to uses that comply with the license agreements of Qwen, Llama3, and Clip.

- Downloads last month

- 10

Inference API (serverless) does not yet support model repos that contain custom code.