license: other

license_name: mnpl-0.1

license_link: https://mistral.ai/licences/MNPL-0.1.md

tags:

- code

- generation

- debugging

- editing

Code Logic Debugger v0.1

Hardware requirements for ChatGPT GPT-4o level inference speed for the models in this repo: >=24 GB VRAM.

Note: The following results are based on my day-to-day workflows only on an RTX 3090. My goal was to run private models that could beat GPT-4o and Claude-3.5 in code debugging and generation to ‘load balance’ between OpenAI/Anthropic’s free plan and local models to avoid hitting rate limits, and to upload as few lines of my code and ideas to their servers as possible.

An example of a complex debugging scenario is where you build library A on top of library B that requires library C as a dependency but the root cause was a variable in library C. In this case, the following workflow guided me to correctly identify the problem.

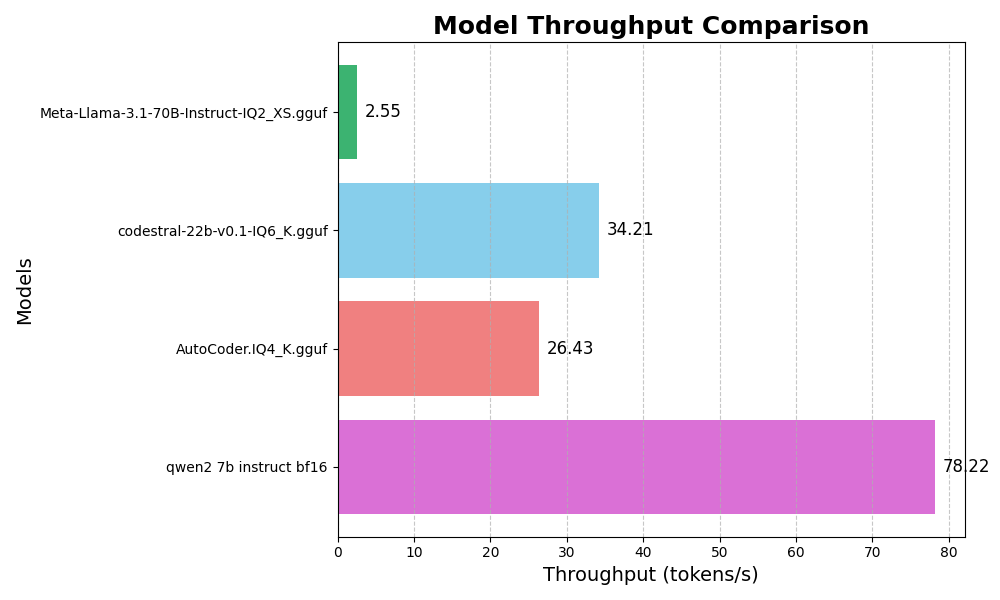

Throughput

IQ here refers to Importance Matrix Quantization. For performance comparison against regular GGUF, please read this Reddit post. For more info on the techique, please see this GitHub discussion.

Personal Preference Ranking

Evaluated on two programming tasks: debugging and generation. It may be a bit subjective. DeepSeekV2 Coder Instruct is ranked lower because DeepSeek's Privacy Policy says that they may collect "text input, prompt" and there's no way around it.

Code debugging/editing prompt template used:

<code>

<current output>

<the problem description of the current output>

<expected output (in English is fine)>

<any hints>

Think step by step. Solve this problem without removing any existing functionalities, logic, or checks, except any incorrect code that interferes with your edits.

| Rank | Model Name | Token Speed (tokens/s) | Debugging Performance | Code Generation Performance | Notes |

|---|---|---|---|---|---|

| 1 | codestral-22b-v0.1-IQ6_K.gguf (this repo) | 34.21 | Excellent at complex debugging, often surpasses GPT-4o and Claude-3.5 | Good, but may not be par with GPT-4o | Best overall for debugging in my workflow, use Balanced Mode. |

| 2* | Claude-3.5-Sonnet | N/A | Poor in complex debugging compared to Codestral | Excellent, better than GPT-4o in long code generation | Great for code generation, but weaker in debugging. |

| 2* | GPT-4o | N/A | Good at complex debugging but can be outperformed by Codestral | Excellent, generally reliable for code generation | Balanced performance between code debugging and generation. |

| 4 | DeepSeekV2 Coder Instruct | N/A | Good, but outputs the same code in complex scenarios | Great at general code generation, rivals GPT-4o | Excellent at code generation, but has data privacy concerns as per Privacy Policy. |

| 5* | Qwen2-7b-Instruct bf16 | 78.22 | Average, can think of correct approaches | Sometimes helps generate new ideas | High speed, useful for generating ideas. |

| 5* | AutoCoder.IQ4_K.gguf (this repo) | 26.43 | Excellent at solutions that require one to few lines of edits | Generates useful short code segments | Try Precise Mode or Balanced Mode. |

| 7 | GPT-4o-mini | N/A | Decent, but struggles with complex debugging tasks | Reliable for shorter or simpler code generation tasks | Suitable for less complex coding tasks. |

| 8 | Meta-Llama-3.1-70B-Instruct-IQ2_XS.gguf | 2.55 | Poor, too slow to be practical in day-to-day workflows | Occasionally helps generate ideas | Speed is a significant limitation. |

| 9 | Trinity-2-Codestral-22B-Q6_K_L | N/A | Poor, similar issues to DeepSeekV2 in outputing the same code | Decent, but often repeats code | Similar problem to DeepSeekV2, not recommended for my complex tasks. |

| 10 | DeepSeekV2 Coder Lite Instruct Q_8L | N/A | Poor, repeats code similar to other models in its family | Not as effective in my context | Not recommended overall based on my criteria. |

Generation Kwargs

Balanced Mode:

generation_kwargs = {

"max_tokens":8192,

"stop":["<|EOT|>", "</s>", "<|end▁of▁sentence|>", "<eos>", "<|start_header_id|>", "<|end_header_id|>", "<|eot_id|>"],

"temperature":0.7,

"stream":True,

"top_k":50,

"top_p":0.95,

}

Precise Mode:

generation_kwargs = {

"max_tokens":8192,

"stop":["<|EOT|>", "</s>", "<|end▁of▁sentence|>", "<eos>", "<|start_header_id|>", "<|end_header_id|>", "<|eot_id|>"],

"temperature":0.0,

"stream":True,

"top_p":1.0,

}

Qwen2 7B:

generation_kwargs = {

"max_tokens":8192,

"stop":["<|EOT|>", "</s>", "<|end▁of▁sentence|>", "<eos>", "<|start_header_id|>", "<|end_header_id|>", "<|eot_id|>"],

"temperature":0.4,

"stream":True,

"top_k":20,

"top_p":0.8,

}

Other variations in temperature, top_k, and top_p were tested 5-8 times per model too, but I'm sticking to the above three.

New Discoveries

The following are tested in my workflow, but may not generalize well to other workflows.

- In general, if there's an error in the code, copy pasting the last few rows of stacktrace to the LLM seems to work.

- Adding "Now, reflect." after a failed attempt at code generation sometimes allows Claude-3.5-Sonnet to generate the correct version.

- If GPT-4o reasons correctly in its first response and the conversation is then continued with GPT-4-mini, the mini model can maintain comparable level of reasoning/accuracy as GPT-4o.

License

A reminder that codestral-22b-v0.1-IQ6_K.gguf should only be used for non-commercial projects.

Please use Qwen2-7b-Instruct bf16 and AutoCoder.IQ4_K.gguf as alternatives for commericial activities.

Download

pip install -U "huggingface_hub[cli]"

Non-commercial (e.g. testing, research, personal, or evaluation purposes) use:

huggingface-cli download FredZhang7/claudegpt-code-logic-debugger-v0.1 --include "codestral-22b-v0.1-IQ6_K.gguf" --local-dir ./

Commercial use:

huggingface-cli download FredZhang7/claudegpt-code-logic-debugger-v0.1 --include "AutoCoder.IQ4_K.gguf" --local-dir ./