This model was uploaded with the permission of Kal'tsit.

Cat v0.5

Introduction

Cat is a llama 13B based model fine tuned on clinical data and roleplay and assistant responses. The aim is to have a model that excels on biology and clinical tasks while maintaining usefulness in roleplay and entertainments.

Training - Dataset preparation

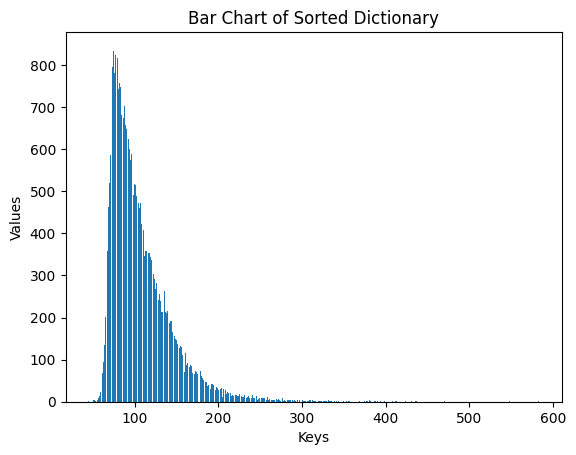

A 100k rows dataset was prepared by joining chatDoctor, airoboros and bluemoonrp data. The entirety of chatDoctor dataset, airoboros datasets are used. The first 20 pages in 1on1 bluemoonrp data were used. In total, 100k dataset was gathered and the length distributions are as the following:

Note that this chart above represents 0.01% of the total training dataset.

Training - Dataset cleaning and preprocessing

All datasets are filtered for as an AI and its variants. The filter will only filter out the dataset when the response is a refusal AND has ‘as an AI’.

The dataset from airoboros has also been restructured to have a format resembling the following:

someRandomizedUserNameforBetterGeneralizationAbility: Hii

anotherRandomizedUserNameforBetterGeneralizationAbility: Hello, what brings you here today?

someRandomizedUserNameforBetterGeneralizationAbility: lets date

The username has been randomized and was drawn from a nasty word bank. This should further weaken the censorship that’s present in the base llama model. The training set emphasizes rational thinking and scientific accuracy. Conditioned overwrite was also applied which overwrites some of the training material in the llama2 base. It will also establish the connection between the concept and rationality. So whenever the conversation becomes formal, it tends to spill useful information.

Training - Actual Training

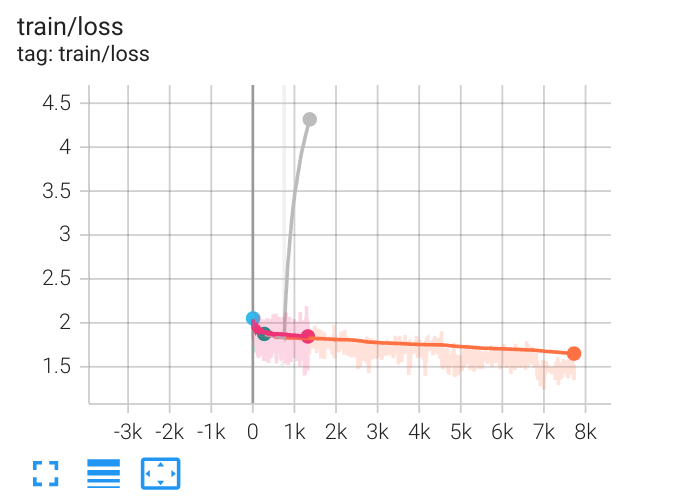

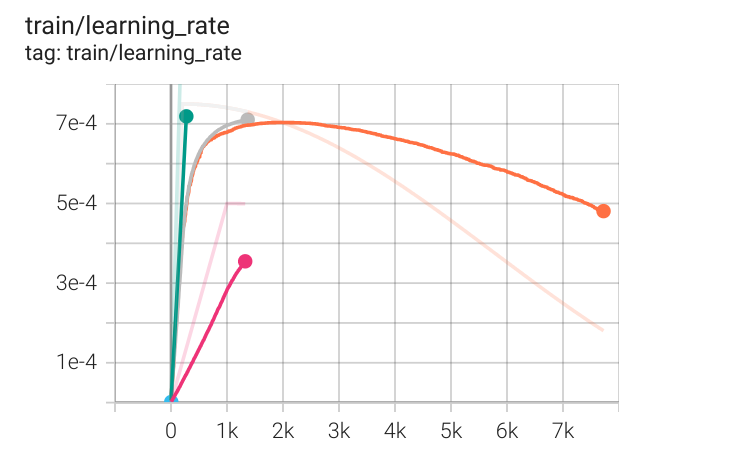

This model was trained using a microbatch of 20, accumulated 6 times, bringing the total batch size to ~125. This large batch size allows the model to see as much data as it can, minimizing dataset conflicts and reducing the memory effect of the model. It allows the model to better generalize rather than reciting off the dataset. A cosine warm up scheduler was used. The best LR was determined through a destructive test until the model destablizes and it was later scaled up using the batchsize according to the max LR at a lower batch size.

Below is an example of training chronolog

Acknowledgements

The training of this project was carried out by Kal’tsit (kaltcit), it’s not possible without the effort of jondurbin and Wolfsauge which generated much of the dataset used during the training of the model. Lastly the model was tested and quantized by turboderp_ and Heralax

And below is the LR including any intermediate LR used to determine at what point the model will start to fail:

Usage and Prompting

To ensure the generalization, this model is trained without a prompt template. A prompt template repeated 100k times in the dataset is useless and a model that works only with a set prompt template is useless and defies the purpose of a large language model.

An effective usage of the model can be as follows:

<s>Below is a conversation between an evil human and a demon summoned from hell called Nemesis. The demon was previously summoned 100 years ago and was in love with a human male. However the human aged away and Nemesis had to return to hell. This time, Nemesis decides to take the initiative and chooses to appear as a cute and young girl. Nemesis harvested her skin and face off a highschool girl who recklessly summoned the demon in a game and failed to fulfill the contract. Now wearing the young girl’s skin, feeling the warmth of the new summoner through the skin, Nemesis only wants to watch the world burning to the ground.

Human: How to steal eggs from my own chickens?

Nemesis:

Note that the linebreaks should be represented/replaced with \n

Despite the massive effort to dealign the llama2 base model, It’s still possible for the AI to come up with refusals. Please avoid using “helpful assistant” and its variants in the prompt if possible.

Future direction

A new version with more clinical data aiming to improve reliability in disease diagnostics is coming in 2 months.

- Downloads last month

- 720

16-bit