Add README

#1 opened by omkarenator

- README.md +115 -0

- amber-arc-curve.pdf +0 -0

- amber-arc-curve.png +0 -0

- amber-hellaswag-curve.pdf +0 -0

- amber-hellaswag-curve.png +0 -0

- amber-mmlu-curve.pdf +0 -0

- amber-mmlu-curve.png +0 -0

- amber-truthfulqa-curve.pdf +0 -0

- amber-truthfulqa-curve.png +0 -0

- amber_logo.png +0 -0

- arc.png +0 -0

- hellaswag.png +0 -0

- loss_curve.png +0 -0

- mmlu.png +0 -0

- truthfulqa.png +0 -0

README.md

ADDED

@@ -0,0 +1,115 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

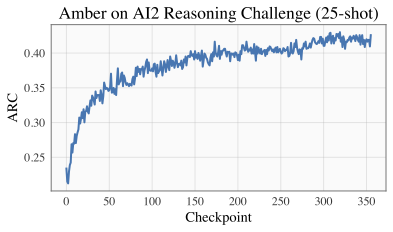

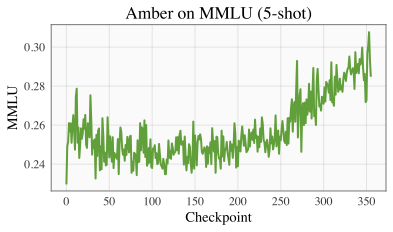

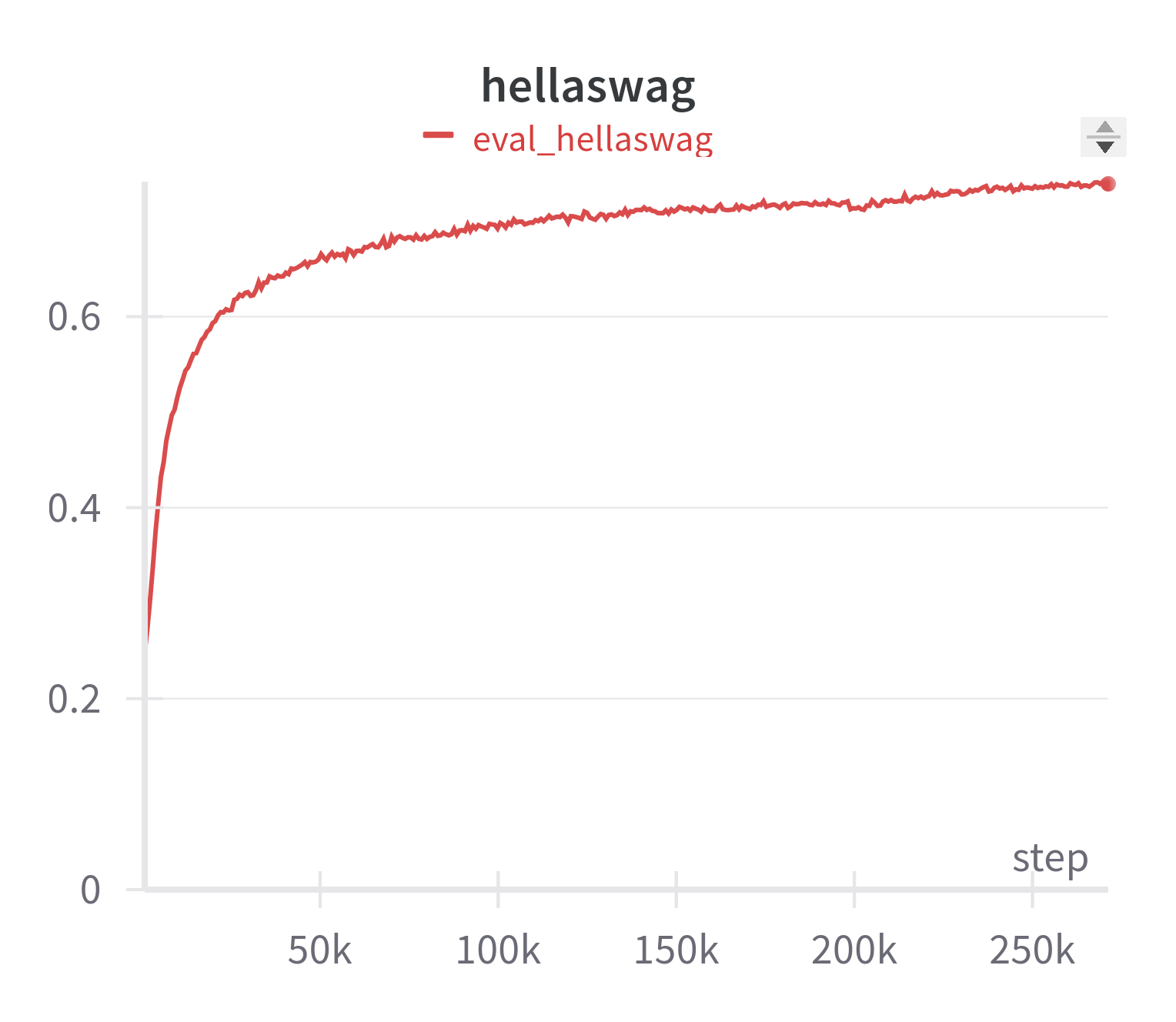

|

|

|

|

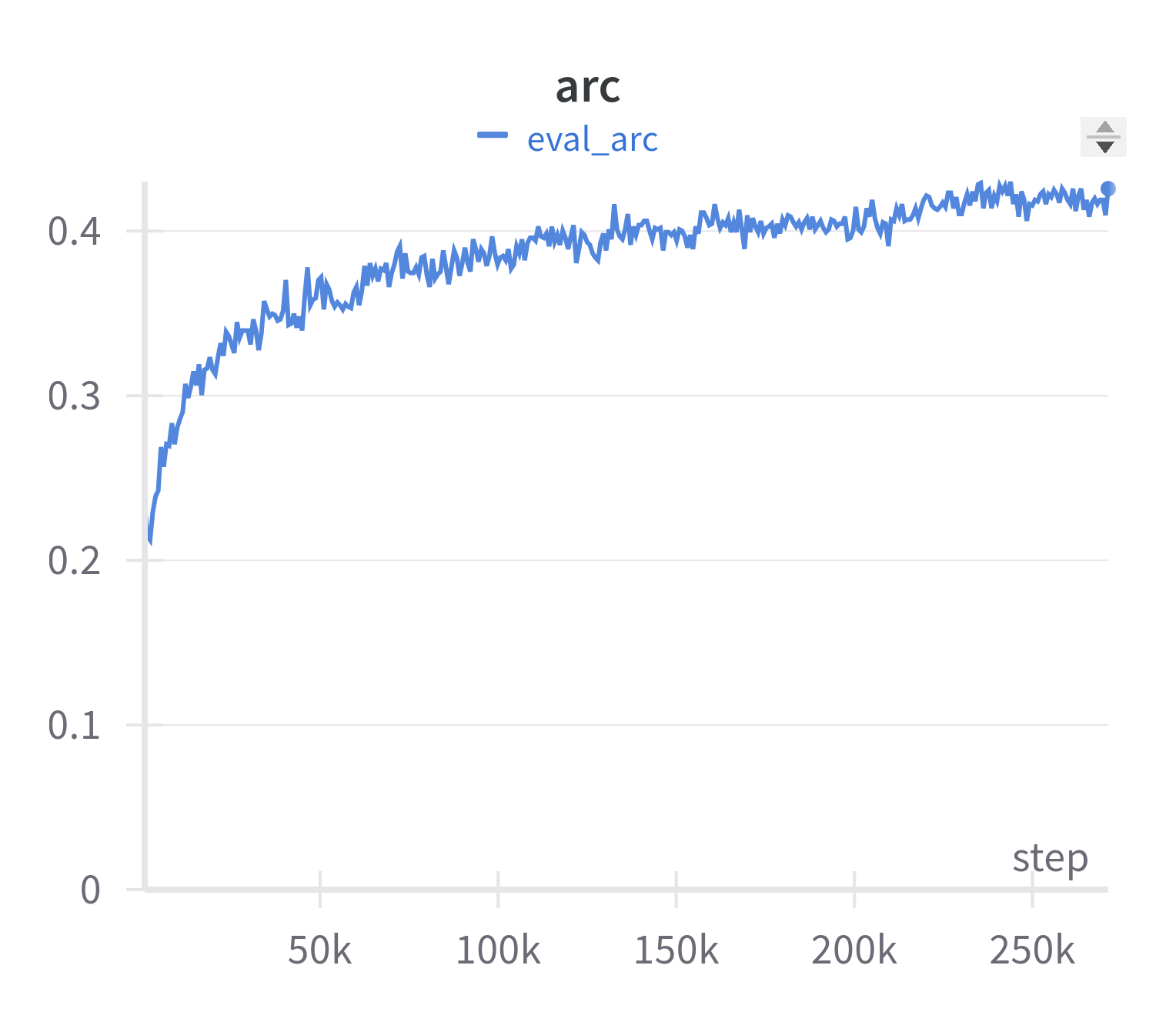

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

---
license: apache-2.0
language:
- en
pipeline_tag: text-generation
library_name: transformers
tags:
- nlp
- llm
---

# Amber

<center><img src="amber_logo.png" alt="amber logo" width="300"/></center>

We present Amber, the first model in the LLM360 family. Amber is a
7B English language model with the LLaMA architecture.

## About LLM360
LLM360 is an initiative for comprehensive and fully open-sourced LLMs,
where all training details, model checkpoints, intermediate results, and
additional analyses are made available to the community. Our goal is to advance
the field by inviting the community to deepen the understanding of LLMs
together. As the first step of the LLM360 project, we release all intermediate
model checkpoints, our fully prepared pre-training dataset, all source code and
configurations, and training details. We are
committed to continually pushing the boundaries of LLMs through this open-source
effort.

Get access now at the [LLM360 site](https://www.llm360.ai/).

## Model Description

- **Model type:** Language model with the same architecture as LLaMA-7B
- **Language(s) (NLP):** English
- **License:** Apache 2.0
- **Resources for more information:**
  - [Training Code](https://github.com/LLM360/amber-train)
  - [Data Preparation](https://github.com/LLM360/amber-data-prep)
  - [Metrics](https://github.com/LLM360/Analysis360)
  - [Fully processed Amber pretraining data](https://huggingface.co/datasets/LLM360/AmberDatasets)

# Loading Amber

To load a specific checkpoint, set `CHECKPOINT_NUM` to a value between `0` and `359`. By default, downloaded checkpoints are cached and are not re-downloaded on later runs of the script.

```python
from huggingface_hub import snapshot_download
from transformers import LlamaTokenizer, LlamaForCausalLM

# Checkpoint to load: any integer from 0 to 359.
CHECKPOINT_NUM = 359

# Download only the files in the selected checkpoint folder.
model_path = snapshot_download(
    repo_id="LLM360/Amber",
    repo_type="model",
    allow_patterns=[f"ckpt_{CHECKPOINT_NUM:03}/*"],
)

# Tokenizer and weights are both read from the checkpoint folder.
tokenizer = LlamaTokenizer.from_pretrained(f"{model_path}/ckpt_{CHECKPOINT_NUM:03}")
model = LlamaForCausalLM.from_pretrained(f"{model_path}/ckpt_{CHECKPOINT_NUM:03}")

input_text = "translate English to German: How old are you?"
input_ids = tokenizer(input_text, return_tensors="pt").input_ids

outputs = model.generate(input_ids)
print(tokenizer.decode(outputs[0]))
```
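The folder naming used in the loading snippet (`ckpt_000` through `ckpt_359`) can be wrapped in a small helper that rejects an out-of-range checkpoint number before any download starts. This is only a sketch: `checkpoint_folder` and `checkpoint_pattern` are hypothetical names that do not come from the Amber repository, and the zero-padded `ckpt_NNN` layout is taken from the snippet itself.

```python
NUM_CHECKPOINTS = 360  # checkpoints are numbered 0..359 according to the README


def checkpoint_folder(checkpoint_num: int) -> str:
    """Return the folder name for a checkpoint, e.g. 7 -> 'ckpt_007'."""
    if not 0 <= checkpoint_num < NUM_CHECKPOINTS:
        raise ValueError(
            f"checkpoint_num must be in [0, {NUM_CHECKPOINTS - 1}], got {checkpoint_num}"
        )
    return f"ckpt_{checkpoint_num:03}"


def checkpoint_pattern(checkpoint_num: int) -> str:
    """Return a glob that can be passed in snapshot_download's allow_patterns list."""
    return f"{checkpoint_folder(checkpoint_num)}/*"


print(checkpoint_pattern(359))
```

The returned string could be passed as `allow_patterns=[checkpoint_pattern(n)]`, and `checkpoint_folder(n)` could be appended to `model_path` when calling `from_pretrained`.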

# Amber Training Details

## DataMix
| Subset | Tokens (Billion) |
| ----------- | ----------- |
| Arxiv | 30.00 |
| Book | 28.86 |
| C4 | 197.67 |
| Refined-Web | 665.01 |
| StarCoder | 291.92 |
| StackExchange | 21.75 |
| Wikipedia | 23.90 |
| Total | 1259.13 |
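To see how the DataMix is weighted, the token counts can be turned into shares of the total. The sketch below copies the figures from the table; the variable names are illustrative only. The seven rows sum to 1259.11, slightly below the reported total of 1259.13, which is presumably a rounding effect in the per-subset figures (an assumption, since the README does not say).

```python
# Token counts in billions, copied from the DataMix table.
DATA_MIX = {
    "Arxiv": 30.00,
    "Book": 28.86,
    "C4": 197.67,
    "Refined-Web": 665.01,
    "StarCoder": 291.92,
    "StackExchange": 21.75,
    "Wikipedia": 23.90,
}
REPORTED_TOTAL = 1259.13  # "Total" row of the table

computed_total = sum(DATA_MIX.values())
shares = {name: tokens / computed_total for name, tokens in DATA_MIX.items()}

# Print subsets from largest to smallest share.
for name, share in sorted(shares.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:<14}{DATA_MIX[name]:>8.2f}B {share:6.1%}")
print(f"computed total: {computed_total:.2f}B (table reports {REPORTED_TOTAL:.2f}B)")
```

On these numbers Refined-Web makes up a little over half of the mix, with StarCoder and C4 as the next largest subsets.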

## Hyperparameters
| Hyperparameter | Value |
| ----------- | ----------- |
| Total Parameters | 6.7B |
| Hidden Size | 4096 |
| Intermediate Size (MLPs) | 11008 |
| Number of Attention Heads | 32 |
| Number of Hidden Layers | 32 |
| RMSNorm ɛ | 1e-6 |
| Max Seq Length | 2048 |
| Vocab Size | 32000 |
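The "Total Parameters" figure can be cross-checked from the other rows of the Hyperparameters table. The sketch below assumes the usual LLaMA-style layout, which the README implies but does not spell out: bias-free query, key, value, and output projections, a three-matrix gated MLP, two RMSNorm weight vectors per layer plus a final one, and an output head that is not tied to the input embeddings. The function name `llama_param_count` is hypothetical. The number of attention heads does not enter the count, because it only splits the hidden size into per-head slices.

```python
# Values from the Hyperparameters table.
HIDDEN_SIZE = 4096
INTERMEDIATE_SIZE = 11008
NUM_LAYERS = 32
VOCAB_SIZE = 32000


def llama_param_count(hidden: int, intermediate: int, layers: int, vocab: int) -> int:
    """Count weights in a LLaMA-style decoder under the assumed layout."""
    embed = vocab * hidden            # input token embeddings
    attention = 4 * hidden * hidden   # q, k, v, o projections, no biases
    mlp = 3 * hidden * intermediate   # gate, up, down projections
    norms = 2 * hidden                # two RMSNorm weight vectors per layer
    per_layer = attention + mlp + norms
    final_norm = hidden               # RMSNorm before the output head
    lm_head = vocab * hidden          # output projection, assumed untied
    return embed + layers * per_layer + final_norm + lm_head


total = llama_param_count(HIDDEN_SIZE, INTERMEDIATE_SIZE, NUM_LAYERS, VOCAB_SIZE)
print(f"{total:,} parameters (~{total / 1e9:.2f}B)")
# prints: 6,738,415,616 parameters (~6.74B)
```

Under these assumptions the count rounds to the 6.7B stated in the table.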

| 96 |

+

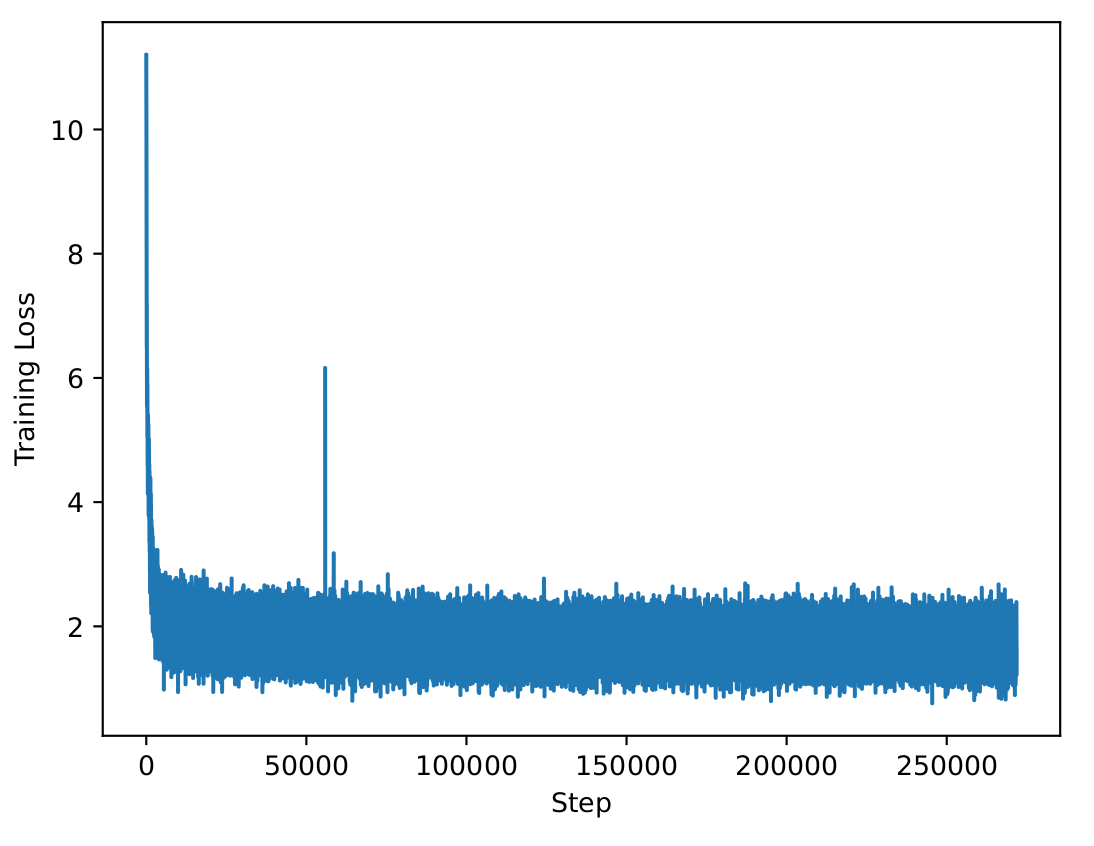

| Training Loss |

|

| 97 |

+

|------------------------------------------------------------|

|

| 98 |

+

| <img src="loss_curve.png" alt="loss curve" width="400"/> |

|

| 99 |

+

|

| 100 |

+

|

| 101 |

+

# Evaluation

|

| 102 |

+

|

| 103 |

+

Please refer to our [W&B project page](https://wandb.ai/llm360/CrystalCoder) for complete training logs and evaluation results.

|

| 104 |

+

|

| 105 |

+

| ARC | HellSwag |

|

| 106 |

+

|------------------------------------------------------|------------------------------------------------------------|

|

| 107 |

+

| <img src="amber-arc-curve.png" alt="arc" width="400"/> | <img src="amber-hellaswag-curve.png" alt="hellaswag" width="400"/> |

|

| 108 |

+

|

| 109 |

+

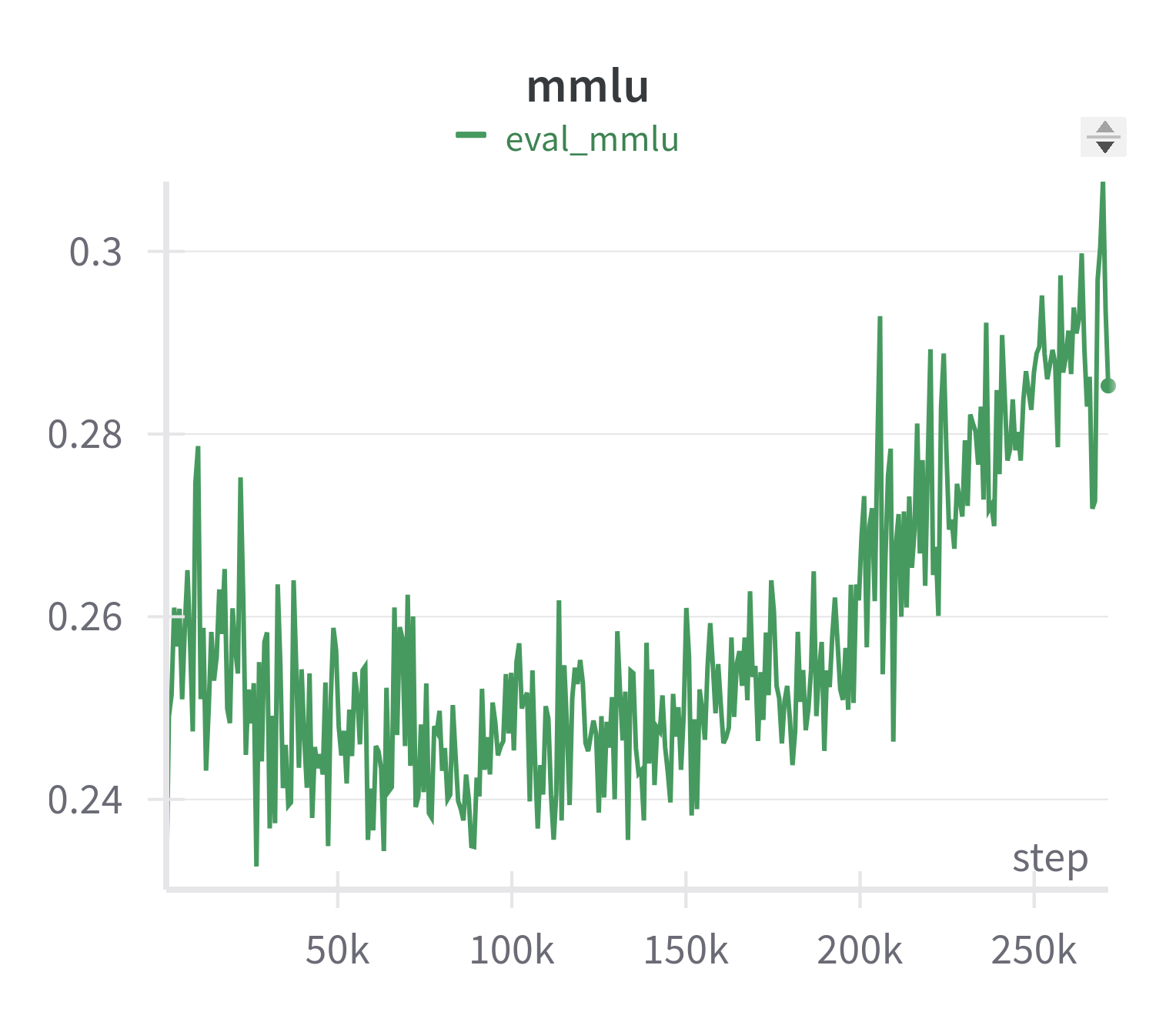

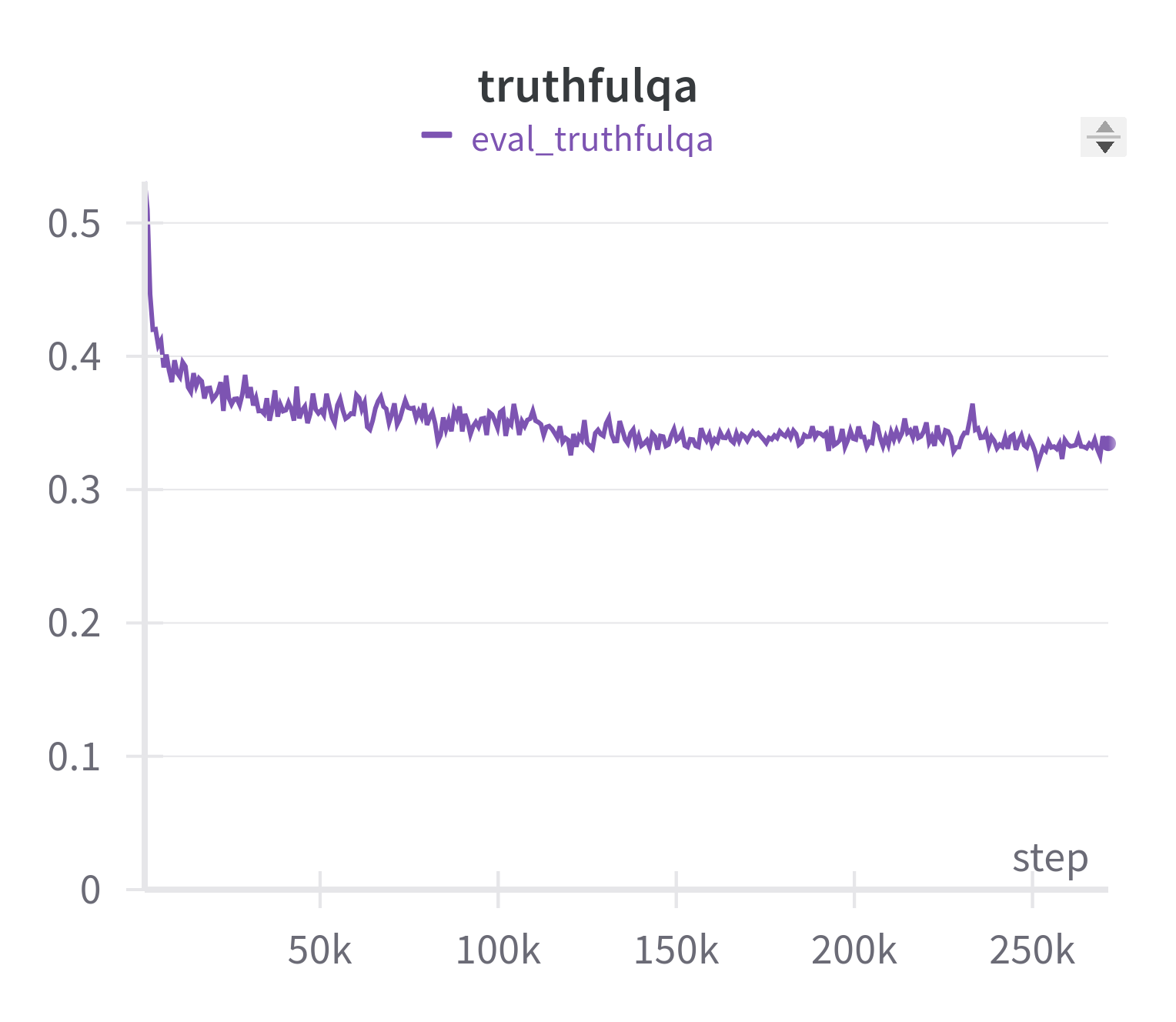

|MMLU | TruthfulQA |

|

| 110 |

+

|-----------------------------------------------------|-----------------------------------------------------------|

|

| 111 |

+

|<img src="amber-mmlu-curve.png" alt="mmlu" width="400"/> | <img src="amber-truthfulqa-curve.png" alt="truthfulqa" width="400"/> |

|

| 112 |

+

|

# Citation

Coming soon...

- amber-arc-curve.pdf ADDED, binary file (18.5 kB)
- amber-arc-curve.png ADDED
- amber-hellaswag-curve.pdf ADDED, binary file (20.3 kB)
- amber-hellaswag-curve.png ADDED
- amber-mmlu-curve.pdf ADDED, binary file (19.1 kB)
- amber-mmlu-curve.png ADDED
- amber-truthfulqa-curve.pdf ADDED, binary file (19.2 kB)
- amber-truthfulqa-curve.png ADDED
- amber_logo.png ADDED
- arc.png ADDED
- hellaswag.png ADDED
- loss_curve.png ADDED
- mmlu.png ADDED
- truthfulqa.png ADDED