Chinese-Style-Stable-Diffusion-2-v0.1

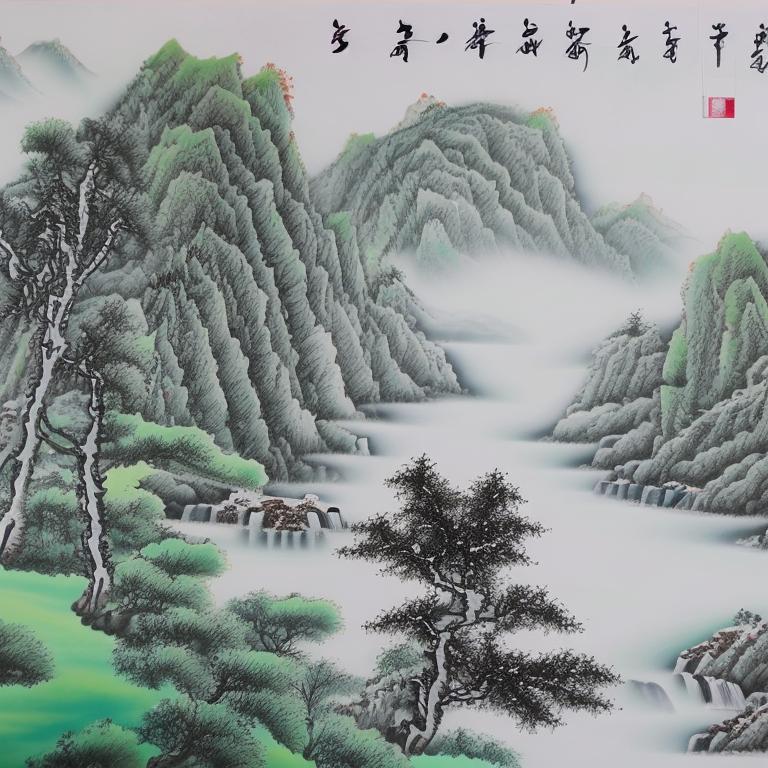

Probably the first open-source Chinese Stable Diffusion 2 model in the Hugging Face 🤗 community. The model is fine-tuned from Stable Diffusion V2.1 on about 5 million Chinese text-image pairs filtered for Chinese style. The data comes from several open-source datasets, such as LAION-5B, Noah-Wukong, and Zero, plus some web data.

Model Details

Text Encoder

The text encoder is lyua1225/clip-huge-zh-75k-steps-bs4096, with its parameters frozen.

Unet

The UNet was trained for 150k steps on the 5M filtered Chinese-style dataset. An exponential moving average (EMA) is applied to preserve the original Stable Diffusion 2 drawing capability, so that the model strikes a balance between Chinese style and the original drawing capability.
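As a rough illustration of the EMA idea, the sketch below blends newly trained weights into a running average that starts from the pretrained weights, so the averaged model drifts only slowly away from the original. The function name `ema_update`, the dictionary representation of weights, and the decay values are assumptions made for illustration; the model card does not state the decay or the exact procedure used in training.

```python
def ema_update(ema_params, new_params, decay=0.999):
    """Update ema_params in place: ema = decay * ema + (1 - decay) * new.

    Both arguments map parameter names to floats. The decay value is an
    assumption; the actual training setting is not given in the model card.
    """
    for name, value in new_params.items():
        ema_params[name] = decay * ema_params[name] + (1.0 - decay) * value
    return ema_params


# Toy example with a single scalar "weight".
ema = {"w": 1.0}          # stands in for the pretrained weight
trained = {"w": 0.0}      # stands in for the weight after a training step
ema_update(ema, trained, decay=0.9)
print(ema["w"])
```

A decay closer to 1 keeps the averaged weights nearer to the starting point, which is one way to trade new style against original capability.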

Usage

Because the model uses a customized tokenizer, load the tokenizer first with trust_remote_code=True and pass it to the pipeline.

import torch

from diffusers import StableDiffusionPipeline

from transformers import AutoTokenizer

tokenizer_id = "lyua1225/clip-huge-zh-75k-steps-bs4096"

sd2_id = "Midu/chinese-style-stable-diffusion-2-v0.1"

tokenizer = AutoTokenizer.from_pretrained(tokenizer_id, trust_remote_code=True)

pipe = StableDiffusionPipeline.from_pretrained(sd2_id, torch_dtype=torch.float16, tokenizer=tokenizer)

pipe.to("cuda")

image = pipe("赛博朋克风格的城市街道,8K分辨率,CG渲染", guidance_scale=10, num_inference_steps=20).images[0]

image.save("cyberpunk.jpeg")
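The snippet above assumes a CUDA GPU and half precision. A minimal sketch of a fallback for machines without a GPU follows; the helper `pick_device_and_dtype` is hypothetical and not part of diffusers or this model's repository, and it assumes full precision is the safer choice on CPU.

```python
def pick_device_and_dtype(cuda_available):
    """Return (device, dtype_name): half precision on GPU, full precision on CPU.

    Hypothetical helper for illustration only.
    """
    if cuda_available:
        return "cuda", "float16"
    return "cpu", "float32"


# Intended use with the snippet above (requires torch and diffusers):
#   device, dtype_name = pick_device_and_dtype(torch.cuda.is_available())
#   pipe = StableDiffusionPipeline.from_pretrained(
#       sd2_id, torch_dtype=getattr(torch, dtype_name), tokenizer=tokenizer)
#   pipe.to(device)
```

Generation on CPU is expected to be much slower, so this is mainly useful for checking that the pipeline loads.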
