license: apache-2.0

datasets:

- Nuo97/Dolphin-DPO

language:

- zh

metrics:

- bleu

pipeline_tag: question-answering

COMEDY: COmpressive Memory-Enhanced Dialogue sYstems framework.

Github: https://github.com/nuochenpku/COMEDY

Paper: https://arxiv.org/abs/2402.11975.pdf

Task: Long-Term Conversation Dialogue Generation

Unlike previous retrieval-based methods, COMEDY does not rely on any retrieval module or database.

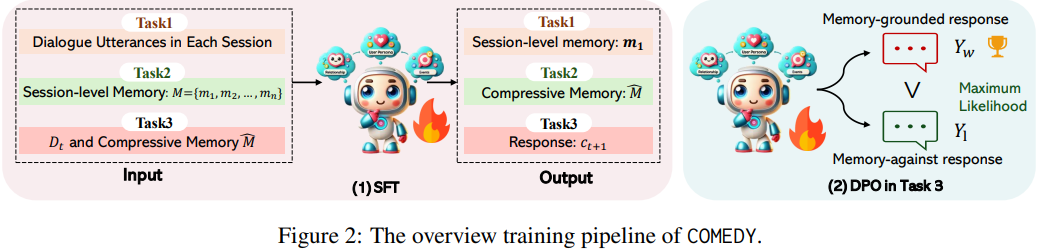

Instead, COMEDY adopts a "One-for-All" approach: a single, unified model handles the entire pipeline for long-term memory dialogue, from memory generation through memory compression to final response generation.

First, COMEDY distills session-specific memory from past dialogues: fine-grained session summaries that include event recaps and detailed user and bot portraits.

Unlike traditional systems, COMEDY does not store these memories in a memory database. Instead, it reprocesses and condenses the memories from all past sessions into a Compressive Memory with three parts. The first part is a concise record of the events that have occurred across all conversations, a historical narrative the system can draw upon. The second and third parts are a detailed user profile and the dynamic relationship changes between the user and chatbot across sessions, both derived from past conversational events.

Finally, COMEDY integrates this compressive memory into the ongoing conversation, so that responses are grounded in both the current context and the accumulated memory.
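The three steps above can be sketched as a single function that calls one model three ways. This is a minimal illustration, not the authors' implementation: the `generate` callable, the prompt wording, and all function names are hypothetical placeholders for whatever inference interface and prompt templates the released model actually uses.

```python
from typing import Callable, List

# Hypothetical interface: one model, exposed as "prompt in, text out".
Generate = Callable[[str], str]


def summarize_session(generate: Generate, session: List[str]) -> str:
    """Step 1: distill one past session into a session-level memory."""
    prompt = "Task: session-level memory summarization\n" + "\n".join(session)
    return generate(prompt)


def compress_memories(generate: Generate, memories: List[str]) -> str:
    """Step 2: condense all session memories into one compressive memory."""
    prompt = "Task: memory compression\n" + "\n".join(memories)
    return generate(prompt)


def respond(generate: Generate, memory: str, dialogue: List[str]) -> str:
    """Step 3: generate a reply grounded in the compressive memory."""
    prompt = (
        "Task: memory-grounded response generation\n"
        "Memory: " + memory + "\n" + "\n".join(dialogue)
    )
    return generate(prompt)


def comedy_turn(
    generate: Generate,
    past_sessions: List[List[str]],
    current_dialogue: List[str],
) -> str:
    """Run all three steps with the same model; no retriever or database."""
    session_memories = [summarize_session(generate, s) for s in past_sessions]
    compressive_memory = compress_memories(generate, session_memories)
    return respond(generate, compressive_memory, current_dialogue)
```

Note that every step goes through the same `generate` callable, which is the point of the "One-for-All" design: the memory never leaves the model's text interface, so nothing needs to be indexed or retrieved.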

Training Dataset

Dolphin is the largest Chinese long-term conversation dataset, collected from actual online user-chatbot interactions.

This dataset covers three tasks and comprises 100k samples in total:

Session-Level Memory Summarization;

Memory Compression;

Memory-Grounded Response Generation.

Dolphin is available on the Hugging Face Hub as Nuo97/Dolphin-DPO, the dataset listed in this card's metadata.

Training Strategy

Training proceeds in two stages: mixed-task training, followed by DPO alignment.
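The two stages can be illustrated with a small sketch. It assumes that mixed-task training pools samples from the three tasks into one shuffled training set, and it uses the standard DPO objective for a single preference pair. The helper names, the sample fields, and the `beta` value are hypothetical; this card does not state the mixing ratio or the hyperparameters actually used.

```python
import math
import random
from typing import Dict, List


def mix_tasks(task_samples: Dict[str, List[dict]], seed: int = 0) -> List[dict]:
    """Stage 1 (assumed): pool all tasks into one shuffled training list.

    Each sample is tagged with its task name so the model can be trained
    on all three tasks jointly.
    """
    pooled = [
        {"task": name, **sample}
        for name, samples in task_samples.items()
        for sample in samples
    ]
    random.Random(seed).shuffle(pooled)
    return pooled


def dpo_loss(
    policy_chosen_logp: float,
    policy_rejected_logp: float,
    ref_chosen_logp: float,
    ref_rejected_logp: float,
    beta: float = 0.1,  # illustrative value, not taken from this card
) -> float:
    """Stage 2: standard DPO loss for one (chosen, rejected) response pair.

    loss = -log(sigmoid(beta * margin)), where margin is how much more the
    policy prefers the chosen response than the frozen reference model does.
    """
    margin = (policy_chosen_logp - ref_chosen_logp) - (
        policy_rejected_logp - ref_rejected_logp
    )
    x = beta * margin
    # -log(sigmoid(x)) == softplus(-x), computed in a numerically stable way.
    return max(-x, 0.0) + math.log1p(math.exp(-abs(x)))
```

When the policy and reference agree, the margin is zero and the loss equals log 2; the loss falls as the policy assigns relatively more probability to the chosen response than the reference does.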