[GUIDE] Launch Q5_1 model with oobabooga's text-generation-webui

Download the Q5_1 model file from: https://huggingface.co/TheBloke/wizardLM-7B-GGML/tree/main

Clone and install the latest version of https://github.com/oobabooga/text-generation-webui:

git clone https://github.com/oobabooga/text-generation-webui

cd text-generation-webui

pip install -r requirements.txt

OPTIONAL (no longer needed, as all model files have been renamed to lowercase 'ggml'): rename WizardLM-7B.GGML.q5_1.bin to WizardLM-7B.ggml.q5_1.bin. The glob() call at https://github.com/oobabooga/text-generation-webui/blob/ee68ec9079492a72a35c33d5000da432ce94af71/modules/models.py#LL46C1-L46C1 is case sensitive :(
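To see why the rename mattered, here is a small sketch of case-sensitive pattern matching in the shell. It assumes the loader looks for something along the lines of `*ggml*.bin`, which is my reading of the linked line and not confirmed here. `matches_lowercase_ggml` is a made-up helper name.

```shell
# Hypothetical helper: succeeds only if the file name matches *ggml*.bin.
# Shell `case` patterns are case sensitive, much like Python's glob() on Linux.
matches_lowercase_ggml() {
    case "$1" in
        *ggml*.bin) return 0 ;;
        *) return 1 ;;
    esac
}

for name in WizardLM-7B.GGML.q5_1.bin WizardLM-7B.ggml.q5_1.bin; do
    if matches_lowercase_ggml "$name"; then
        echo "$name: matched"
    else
        echo "$name: NOT matched"
    fi
done
```

Only the lowercase name matches, which is why the uppercase file had to be renamed before the models were re-uploaded.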

Place the model file in the models/TheBloke_wizardLM-7B-GGML directory of text-generation-webui.
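A minimal sketch of that step, in case it helps. `place_model` is a hypothetical helper, and the download and checkout paths in the usage comment are assumptions, so adjust them to wherever your files actually live.

```shell
# Hypothetical helper: move a downloaded model file into the webui's models folder.
#   $1 = path to the downloaded .bin file
#   $2 = path to the text-generation-webui checkout
place_model() {
    model_file="$1"
    webui_dir="$2"
    model_dir="$webui_dir/models/TheBloke_wizardLM-7B-GGML"

    if [ ! -f "$model_file" ]; then
        echo "Model file not found: $model_file" >&2
        return 1
    fi

    # Create the target folder if it does not exist yet, then move the file in.
    mkdir -p "$model_dir"
    mv "$model_file" "$model_dir/"
}

# Example call (assumed paths):
# place_model "$HOME/Downloads/WizardLM-7B.ggml.q5_1.bin" "$HOME/text-generation-webui"
```

The folder name is what you later pass to `--model` when launching the server.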

OPTION 1 (no longer required if the latest version of text-generation-webui was installed): upgrade llama-cpp-python, because q5 support was only added recently.

pip freeze | grep llama

pip uninstall -y llama-cpp-python

pip cache purge && pip install llama-cpp-python==0.1.41 # or more recent, q5 support added to pypi in 0.1.39 - https://github.com/abetlen/llama-cpp-python/issues/124

pip freeze | grep llama # output:

llama-cpp-python==0.1.41
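If you would rather check the version than eyeball the `pip freeze` output, something like the following could work. It is only a sketch: `version_ge` is a made-up helper, and it relies on `sort -V` (version sort), which GNU coreutils provides but some minimal systems may not.

```shell
# Hypothetical helper: succeeds when version $1 is greater than or equal to version $2.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Read the installed version from pip; stays empty if the package (or pip) is missing.
installed="$(pip show llama-cpp-python 2>/dev/null | awk '/^Version:/ {print $2}' || true)"

if [ -z "$installed" ]; then
    echo "llama-cpp-python is not installed"
elif version_ge "$installed" "0.1.39"; then
    echo "llama-cpp-python $installed is new enough for q5 models"
else
    echo "llama-cpp-python $installed is too old for q5 models; upgrade to 0.1.39 or newer"
fi
```

The 0.1.39 threshold comes from the note above about when q5 support reached PyPI.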

OPTION 2 (no longer required if the latest version of text-generation-webui was installed): alternatively, build and install the development version of llama-cpp-python from source.

cd ~/

rm -rf llama-cpp-python

git clone https://github.com/abetlen/llama-cpp-python

cd llama-cpp-python

sed -i 's/git@github.com:/https:\/\/github.com\//g' .gitmodules

git submodule update --init --recursive

pip uninstall -y llama-cpp-python

pip install scikit-build

python3 setup.py develop

pip freeze | grep llama # output:

-e git+https://github.com/abetlen/llama-cpp-python@9339929f56ca71adb97930679c710a2458f877bd#egg=llama_cpp_python

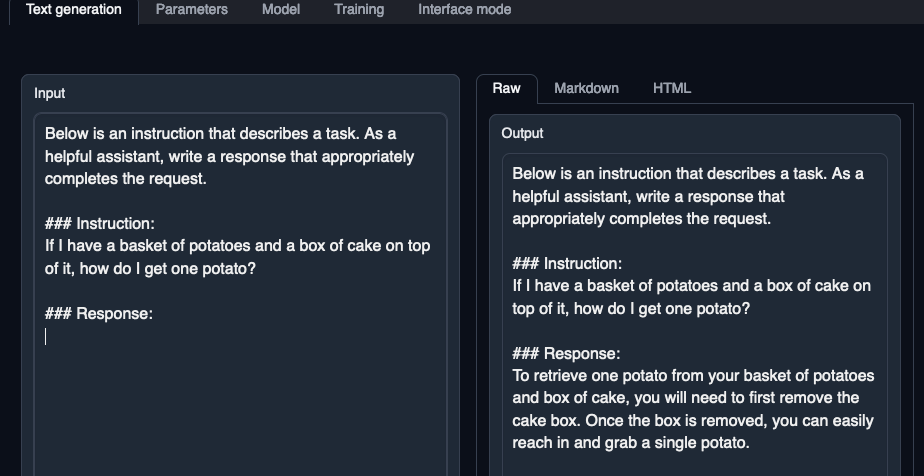

Launch oobabooga's text-generation-webui with llama.cpp:

python server.py --model TheBloke_wizardLM-7B-GGML --threads 4

Output generated in 11.22 seconds (4.10 tokens/s, 46 tokens, context 69, seed 1066937501)
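The tokens/s figure in that log line is just tokens divided by seconds. A quick way to reproduce it, or to compare your own runs, is sketched below; `tokens_per_second` is a hypothetical helper name.

```shell
# Hypothetical helper: tokens per second, rounded to two decimals.
#   $1 = number of generated tokens
#   $2 = generation time in seconds
tokens_per_second() {
    awk -v tokens="$1" -v seconds="$2" 'BEGIN { printf "%.2f\n", tokens / seconds }'
}

# Numbers from the log line above: 46 tokens in 11.22 seconds.
tokens_per_second 46 11.22   # prints 4.10
```

Changing `--threads` and rerunning the same prompt gives a rough idea of which thread count suits your CPU.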

Awesome guide, thanks! You can edit out point 3 as I've renamed all the files to ggml.bin. It's dumb that textgen is case sensitive but for now it's easier if I just change it here.

I will link to your guide on the README. Thanks for posting it!

Link added to README

Thanks for your converted model!

abetlen just released 0.1.39 to pypi. I've edited the guide.

Will it utilize my GPU? I have a 1060 with 6 GB of VRAM.

Yes, if you compile llama.cpp or llama-cpp-python with cuBLAS support.

If you want to use a UI like text-generation-webui, you should use llama-cpp-python. Details here for compiling that with GPU support: https://github.com/abetlen/llama-cpp-python#installation-with-openblas--cublas--clblast--metal
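For reference, a cuBLAS rebuild of llama-cpp-python looked roughly like this around the time of this thread. Treat it as a sketch: the `-DLLAMA_CUBLAS=on` flag is what the README documented back then, flag names have changed in later releases, and it assumes the CUDA toolkit is already installed. The function name is made up, and the call is left commented out so nothing gets reinstalled by accident.

```shell
# Hypothetical wrapper: reinstall llama-cpp-python with cuBLAS enabled.
# Assumes the CUDA toolkit and a working compiler are already set up.
install_llama_cpp_python_cublas() {
    pip uninstall -y llama-cpp-python
    # CMAKE_ARGS / FORCE_CMAKE as documented in the llama-cpp-python README at the time;
    # check the linked README for the flag your version expects.
    CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 \
        pip install --no-cache-dir llama-cpp-python
}

# Uncomment to run:
# install_llama_cpp_python_cublas
```

Run it inside the same Python environment that text-generation-webui uses, otherwise the webui will keep loading the CPU-only build.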