---
license: other
license_name: bria-2.3
license_link: https://bria.ai/bria-huggingface-model-license-agreement/
pipeline_tag: image-to-image
inference: false
tags:
- text-to-image
- controlnet model
- legal liability
- commercial use
extra_gated_prompt: >-
  The model weights by BRIA AI can be obtained after a commercial license is
  agreed upon. Fill in the form below and we will reach out to you.
extra_gated_fields:
  Name: text
  Company/Org name: text
  Org Type (Early/Growth Startup, Enterprise, Academy): text
  Role: text
  Country: text
  Email: text
  By submitting this form, I agree to BRIA’s Privacy policy and Terms & conditions, see links below: checkbox
---

BRIA 2.3 ControlNet ColorGrid Model Card

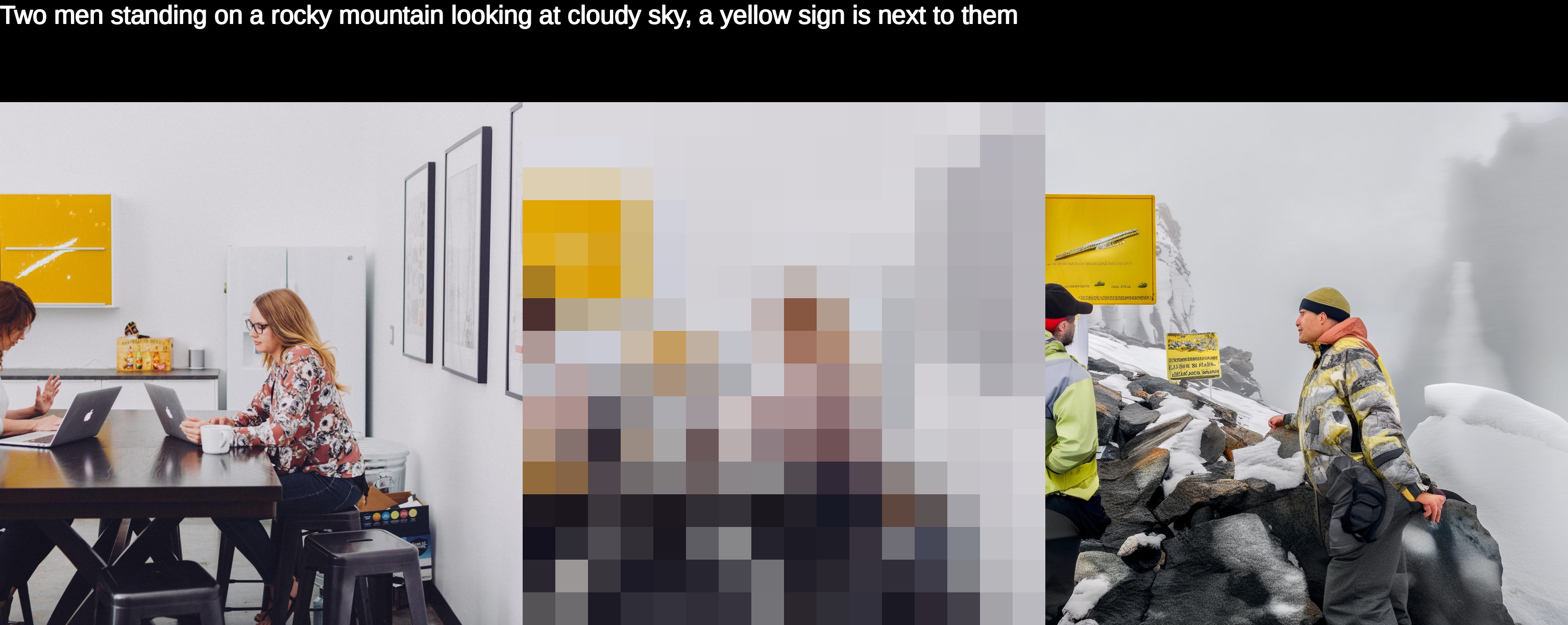

BRIA 2.3 ControlNet-ColorGrid, trained on the foundation of BRIA 2.3 Text-to-Image, enables the generation of high-quality images guided by a textual prompt and a color grid extracted from an input image. This allows for the creation of different scenes that all share the same color grid.

BRIA 2.3 was trained from scratch exclusively on licensed data from our esteemed data partners. Therefore, it is safe for commercial use and provides full legal liability coverage for copyright and privacy infringement, as well as harmful content mitigation. That is, our dataset does not contain copyrighted materials, such as fictional characters, logos, or trademarks, nor does it contain public figures, harmful content, or privacy-infringing content.

Model Description

Developed by: BRIA AI

Model type: ControlNet for latent diffusion

License: bria-2.3

Model Description: ControlNet ColorGrid for BRIA 2.3 Text-to-Image model. The model generates images guided by a spatial grid of RGB colors.

Resources for more information: BRIA AI
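
The "spatial grid of RGB colors" can be illustrated with a minimal sketch, assuming the grid is built the same way as in this card's Diffusers example: the image is downscaled to a small number of cells, then upscaled with nearest-neighbor interpolation so that each cell becomes a flat block of color. The helper name `make_color_grid` and its `cells` and `size` parameters are illustrative only and are not part of any BRIA or Diffusers API.

```python
from PIL import Image


def make_color_grid(image: Image.Image, cells: int = 16, size: int = 1024) -> Image.Image:
    """Return a size x size image made of cells x cells flat color blocks."""
    # Drop alpha/palette modes so every cell is a plain RGB color.
    rgb = image.convert("RGB")
    # Downscale: each pixel of the small image summarizes one region of the input.
    small = rgb.resize((cells, cells))
    # Upscale with nearest-neighbor so each cell stays a solid block.
    return small.resize((size, size), Image.NEAREST)


# Synthetic input so the sketch runs without any file on disk:
# left half red, right half blue.
demo = Image.new("RGB", (256, 256), (255, 0, 0))
demo.paste((0, 0, 255), (128, 0, 256, 256))
grid = make_color_grid(demo)
```

With the defaults, a 1024x1024 grid of 16x16 cells gives blocks of 64x64 pixels. A smaller `cells` value would keep only the coarsest color layout, while a larger one would preserve more of the input's structure.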

Get Access

BRIA 2.3 ControlNet-ColorGrid requires access to BRIA 2.3 Text-to-Image. For more information, click here.

Code example using Diffusers

pip install diffusers

from diffusers import ControlNetModel, StableDiffusionXLControlNetPipeline
import torch
from PIL import Image

# Load the ColorGrid ControlNet weights in half precision
controlnet = ControlNetModel.from_pretrained(
    "briaai/BRIA-2.3-ControlNet-ColorGrid",
    torch_dtype=torch.float16
)

# Attach the ControlNet to the BRIA 2.3 Text-to-Image pipeline
pipe = StableDiffusionXLControlNetPipeline.from_pretrained(
    "briaai/BRIA-2.3",
    controlnet=controlnet,
    torch_dtype=torch.float16,
)
pipe.to("cuda")

prompt = "A portrait of a Beautiful and playful ethereal singer, golden designs, highly detailed, blurry background"
negative_prompt = "Logo,Watermark,Text,Ugly,Morbid,Extra fingers,Poorly drawn hands,Mutation,Blurry,Extra limbs,Gross proportions,Missing arms,Mutated hands,Long neck,Duplicate,Mutilated,Mutilated hands,Poorly drawn face,Deformed,Bad anatomy,Cloned face,Malformed limbs,Missing legs,Too many fingers"

# Create ColorGrid image: downscale to a 16x16 grid of colors, then upscale
# with nearest-neighbor so each grid cell becomes a flat 64x64 block
input_image = Image.open('pics/singer.png')
control_image = input_image.resize((16, 16)).resize((1024, 1024), Image.NEAREST)

# Generate a 1024x1024 image guided by the prompt and the color grid
image = pipe(
    prompt=prompt,
    negative_prompt=negative_prompt,
    image=control_image,
    controlnet_conditioning_scale=1.0,
    height=1024,
    width=1024,
).images[0]
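
One way to sanity-check a result is to measure how closely the generated image follows the control grid. The sketch below is an illustration under stated assumptions, not a BRIA-provided metric: the hypothetical helper `grid_color_error` reduces both images to the grid resolution and reports the mean absolute per-channel difference, where 0 means identical cell colors and 255 is the maximum possible difference. Solid-color stand-in images are used so the sketch runs without a GPU or model weights.

```python
from PIL import Image, ImageChops, ImageStat


def grid_color_error(generated: Image.Image, control: Image.Image, cells: int = 16) -> float:
    """Mean absolute RGB difference between two images at grid resolution."""
    # Reduce both images to one pixel per grid cell.
    gen_small = generated.convert("RGB").resize((cells, cells))
    ctl_small = control.convert("RGB").resize((cells, cells))
    # Per-pixel absolute difference, then average over pixels and channels.
    diff = ImageChops.difference(gen_small, ctl_small)
    per_channel = ImageStat.Stat(diff).mean  # one mean per band: [R, G, B]
    return sum(per_channel) / len(per_channel)


# Stand-in images (assumptions for illustration only).
control = Image.new("RGB", (1024, 1024), (200, 100, 50))
same = control.copy()
shifted = Image.new("RGB", (1024, 1024), (210, 100, 50))

error_same = grid_color_error(same, control)
error_shifted = grid_color_error(shifted, control)
```

With the pipeline example above, the call would be `grid_color_error(image, control_image)`. Lower values suggest the output stays closer to the grid's colors, which may be useful when comparing different `controlnet_conditioning_scale` settings.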