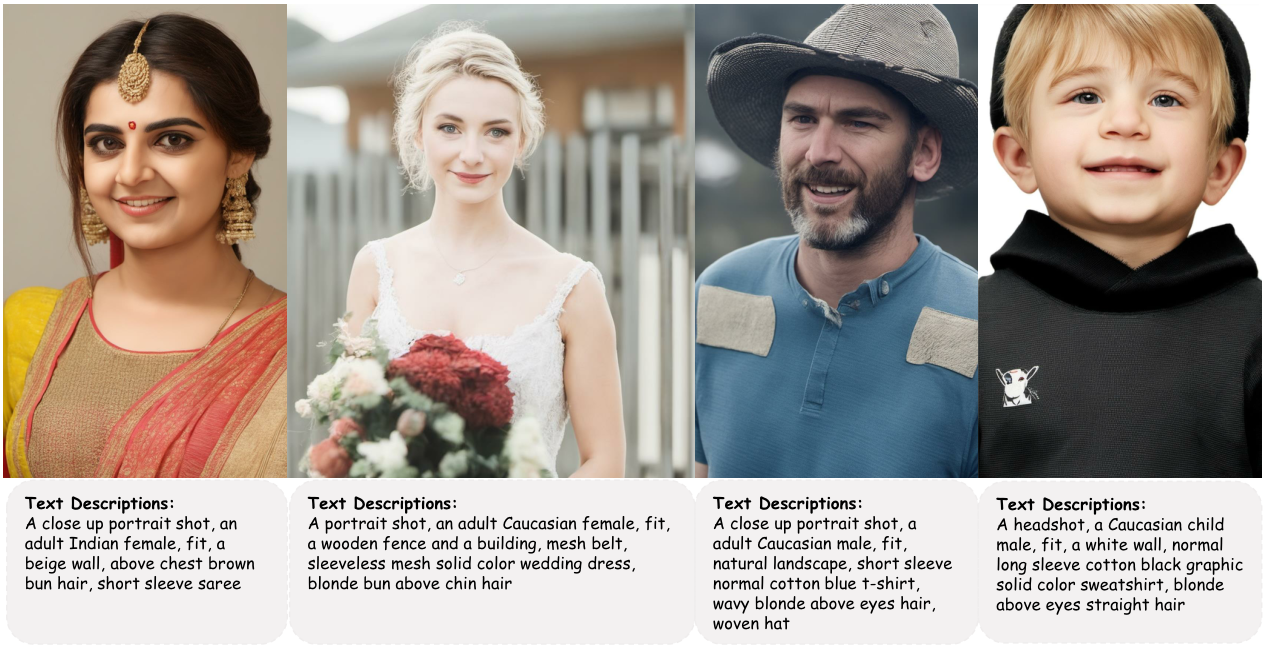

CosmicMan is a text-to-image foundation model specialized for generating high-fidelity human images. For more information, please refer to our research paper: CosmicMan: A Text-to-Image Foundation Model for Humans. Our model is based on stabilityai/stable-diffusion-xl-base-1.0. This repository provides UNet checkpoints for CosmicMan-SDXL.

Requirements

conda create -n cosmicman python=3.10

conda activate cosmicman

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

pip install accelerate diffusers datasets transformers botocore invisible-watermark bitsandbytes gradio==3.48.0
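After installing, it can help to confirm that every package is visible to the active environment before launching the demo. The following is a minimal sketch, not part of this repository: the `REQUIRED` table and the `missing_packages` helper are hypothetical names introduced for illustration, and the module name `imwatermark` for `invisible-watermark` is the one entry where the import name differs from the pip name.

```python
from importlib.util import find_spec

# Hypothetical mapping: pip distribution name -> top-level module it installs.
REQUIRED = {
    "torch": "torch",
    "torchvision": "torchvision",
    "torchaudio": "torchaudio",
    "accelerate": "accelerate",
    "diffusers": "diffusers",
    "datasets": "datasets",
    "transformers": "transformers",
    "botocore": "botocore",
    "invisible-watermark": "imwatermark",
    "bitsandbytes": "bitsandbytes",
    "gradio": "gradio",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose modules cannot be located, without importing them."""
    return [dist for dist, module in required.items() if find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing: " + ", ".join(missing))
    else:
        print("All requirements found.")
```

This only checks that the modules can be found; it does not verify versions (for example the `gradio==3.48.0` pin) or CUDA availability.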

Quick start with Gradio

To get started, first install the required dependencies, then run:

python demo_sdxl.py

Once the demo is running, open http://your-server-ip:port in a browser, substituting your server's address and the port the demo listens on.

Inference

import torch

from diffusers import StableDiffusionXLPipeline, StableDiffusionXLImg2ImgPipeline, UNet2DConditionModel, EulerDiscreteScheduler

base_path = "stabilityai/stable-diffusion-xl-base-1.0"

refiner_path = "stabilityai/stable-diffusion-xl-refiner-1.0"

unet_path = "cosmicman/CosmicMan-SDXL"

# Load model.

unet = UNet2DConditionModel.from_pretrained(unet_path, torch_dtype=torch.float16)

pipe = StableDiffusionXLPipeline.from_pretrained(base_path, unet=unet, torch_dtype=torch.float16, variant="fp16", use_safetensors=True).to("cuda")

pipe.scheduler = EulerDiscreteScheduler.from_pretrained(base_path, subfolder="scheduler", torch_dtype=torch.float16)

# We found that using base_path instead of refiner_path here may give better results.

refiner = StableDiffusionXLImg2ImgPipeline.from_pretrained(refiner_path, torch_dtype=torch.float16, variant="fp16", use_safetensors=True).to("cuda")

# Generate image.

positive_prompt = "A fit Caucasian elderly woman, her wavy white hair above shoulders, wears a pink floral cotton long-sleeve shirt and a cotton hat against a natural landscape in an upper body shot"

negative_prompt = ""

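# The base pipeline returns latents, which the refiner turns into the final image.

latents = pipe(
    positive_prompt,
    negative_prompt=negative_prompt,
    num_inference_steps=30,
    guidance_scale=7.5,
    height=1024,
    width=1024,
    output_type="latent",
).images[0]

# latents[None, :] restores the batch dimension expected by the refiner.

image = refiner(positive_prompt, negative_prompt=negative_prompt, image=latents[None, :]).images[0]

# save() returns None, so keep the image object and save it in a separate step.

image.save("output.png")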
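When generating many images, the two-stage call above can be wrapped in a small helper. The sketch below is one possible arrangement rather than the repository's own code: `GenerationConfig` and `generate` are hypothetical names, the defaults are copied from the snippet above, and the helper assumes `pipe` and `refiner` have already been loaded as shown. It passes the whole latent batch to the refiner, so no manual re-batching is needed.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class GenerationConfig:
    """Sampling settings; defaults mirror the inference snippet (assumed, not tuned)."""

    num_inference_steps: int = 30
    guidance_scale: float = 7.5
    height: int = 1024
    width: int = 1024

    def __post_init__(self):
        # The SDXL pipelines in diffusers reject sizes that are not multiples of 8,
        # so fail early with a clear message.
        for name in ("height", "width"):
            value = getattr(self, name)
            if value <= 0 or value % 8 != 0:
                raise ValueError(f"{name} must be a positive multiple of 8, got {value}")


def generate(pipe, refiner, prompt, negative_prompt="", config=GenerationConfig()):
    """Run the base pipeline to latents, then let the refiner produce the final image."""
    latents = pipe(
        prompt,
        negative_prompt=negative_prompt,
        output_type="latent",
        **asdict(config),
    ).images
    return refiner(prompt, negative_prompt=negative_prompt, image=latents).images[0]
```

With the pipelines loaded, a call might look like `generate(pipe, refiner, positive_prompt).save("output.png")`.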

Citation Information

@article{li2024cosmicman,

title={CosmicMan: A Text-to-Image Foundation Model for Humans},

author={Li, Shikai and Fu, Jianglin and Liu, Kaiyuan and Wang, Wentao and Lin, Kwan-Yee and Wu, Wayne},

journal={arXiv preprint arXiv:2404.01294},

year={2024}

}
