| repo_id | author | model_type | files_per_repo | downloads_30d | library | likes | pipeline | pytorch | tensorflow | jax | license | languages | datasets | co2 | prs_count | prs_open | prs_merged | prs_closed | discussions_count | discussions_open | discussions_closed | tags | has_model_index | has_metadata | has_text | text_length | is_nc | readme | hash |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

nejox/distilbert-base-uncased-distilled-squad-coffee20230108 | nejox | distilbert | 12 | 3 | transformers | 0 | question-answering | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,969 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-distilled-squad-coffee20230108

This model is a fine-tuned version of [distilbert-base-uncased-distilled-squad](https://huggingface.co/distilbert-base-uncased-distilled-squad) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 4.3444

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 15
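As a rough illustration of what these scheduler settings imply, the sketch below computes the learning rate at a given optimizer step. It assumes the `linear` scheduler has the usual shape (linear ramp during warmup, then linear decay to zero) and takes the total of 1335 steps from the training results table (15 epochs of 89 steps). The function name is hypothetical, and this is not the Trainer's own code.

```python
def linear_warmup_decay_lr(step, base_lr=5e-5, warmup_steps=500, total_steps=1335):
    """Learning rate at `step` for a linear schedule with warmup (illustrative)."""
    if step < warmup_steps:
        # Ramp linearly from 0 up to base_lr over the warmup period.
        return base_lr * step / max(1, warmup_steps)
    # After warmup, decay linearly from base_lr down to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 250, 500, 1335):
    print(step, linear_warmup_decay_lr(step))
```

Under these assumptions the peak rate of 5e-05 is reached only at step 500, which falls in the sixth epoch of this run.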

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 89 | 1.9198 |

| 2.3879 | 2.0 | 178 | 1.8526 |

| 1.5528 | 3.0 | 267 | 1.8428 |

| 1.1473 | 4.0 | 356 | 2.4035 |

| 0.7375 | 5.0 | 445 | 2.3232 |

| 0.5986 | 6.0 | 534 | 2.4550 |

| 0.4252 | 7.0 | 623 | 3.2831 |

| 0.2612 | 8.0 | 712 | 3.2129 |

| 0.143 | 9.0 | 801 | 3.7849 |

| 0.143 | 10.0 | 890 | 3.8476 |

| 0.0984 | 11.0 | 979 | 4.1742 |

| 0.0581 | 12.0 | 1068 | 4.3476 |

| 0.0157 | 13.0 | 1157 | 4.3818 |

| 0.0131 | 14.0 | 1246 | 4.3357 |

| 0.0059 | 15.0 | 1335 | 4.3444 |

### Framework versions

- Transformers 4.25.1

- Pytorch 1.11.0+cu113

- Datasets 2.8.0

- Tokenizers 0.13.2

| ac50711e4c51d792b26d642b1aa8a847 |

gokuls/mobilebert_sa_GLUE_Experiment_logit_kd_stsb_128 | gokuls | mobilebert | 17 | 2 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 2,040 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_sa_GLUE_Experiment_logit_kd_stsb_128

This model is a fine-tuned version of [google/mobilebert-uncased](https://huggingface.co/google/mobilebert-uncased) on the GLUE STSB dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1533

- Pearson: 0.0554

- Spearmanr: 0.0563

- Combined Score: 0.0558
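The sketch below shows how these three metrics relate, using plain Python. It assumes the combined score is the mean of the Pearson and Spearman correlations, which is consistent with the rows of the training results table; the helper names are hypothetical and this is not the evaluation code used for the card.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation between two equally long sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def ranks(values):
    """1-based average ranks, so tied values share the same rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def spearman(xs, ys):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(ranks(xs), ranks(ys))


def combined_score(pearson_value, spearman_value):
    """Assumed definition: mean of the two correlations."""
    return (pearson_value + spearman_value) / 2


print(combined_score(0.0656, 0.0690))  # epoch 4 row of the results table
```

The reported values are rounded to four decimals, so recomputing the mean from them can differ from the reported combined score in the last digit.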

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Pearson | Spearmanr | Combined Score |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:---------:|:--------------:|

| 2.5973 | 1.0 | 45 | 1.2342 | -0.0353 | -0.0325 | -0.0339 |

| 1.0952 | 2.0 | 90 | 1.1740 | 0.0434 | 0.0419 | 0.0426 |

| 1.0581 | 3.0 | 135 | 1.1533 | 0.0554 | 0.0563 | 0.0558 |

| 1.0455 | 4.0 | 180 | 1.2131 | 0.0656 | 0.0690 | 0.0673 |

| 0.9795 | 5.0 | 225 | 1.3883 | 0.0868 | 0.0858 | 0.0863 |

| 0.9197 | 6.0 | 270 | 1.4141 | 0.1181 | 0.1148 | 0.1165 |

| 0.8182 | 7.0 | 315 | 1.3460 | 0.1771 | 0.1853 | 0.1812 |

| 0.6796 | 8.0 | 360 | 1.1577 | 0.2286 | 0.2340 | 0.2313 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

| d67261525a22e75eab30846a0dbc5531 |

microsoft/xclip-base-patch16-hmdb-2-shot | microsoft | xclip | 10 | 2 | transformers | 0 | feature-extraction | true | false | false | mit | ['en'] | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['vision', 'video-classification'] | true | true | true | 2,425 | false |

# X-CLIP (base-sized model)

X-CLIP model (base-sized, patch resolution of 16) trained in a few-shot fashion (K=2) on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/). It was introduced in the paper [Expanding Language-Image Pretrained Models for General Video Recognition](https://arxiv.org/abs/2208.02816) by Ni et al. and first released in [this repository](https://github.com/microsoft/VideoX/tree/master/X-CLIP).

This model was trained using 32 frames per video, at a resolution of 224x224.

Disclaimer: The team releasing X-CLIP did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

X-CLIP is a minimal extension of [CLIP](https://huggingface.co/docs/transformers/model_doc/clip) for general video-language understanding. The model is trained in a contrastive way on (video, text) pairs.

This allows the model to be used for tasks like zero-shot, few-shot or fully supervised video classification and video-text retrieval.

## Intended uses & limitations

You can use the raw model for determining how well text goes with a given video. See the [model hub](https://huggingface.co/models?search=microsoft/xclip) to look for

fine-tuned versions on a task that interests you.

### How to use

For code examples, we refer to the [documentation](https://huggingface.co/transformers/main/model_doc/xclip.html#).

## Training data

This model was trained on [HMDB-51](https://serre-lab.clps.brown.edu/resource/hmdb-a-large-human-motion-database/).

### Preprocessing

The exact details of preprocessing during training can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L247).

The exact details of preprocessing during validation can be found [here](https://github.com/microsoft/VideoX/blob/40f6d177e0a057a50ac69ac1de6b5938fd268601/X-CLIP/datasets/build.py#L285).

During validation, one resizes the shorter edge of each frame, after which center cropping is performed to a fixed-size resolution (like 224x224). Next, frames are normalized across the RGB channels with the ImageNet mean and standard deviation.
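The validation steps above can be sketched as pure-Python geometry and arithmetic. This is a minimal illustration, not the X-CLIP preprocessing code: it assumes the shorter edge is resized to 224 before a 224x224 center crop (the linked `build.py` holds the actual sizes), and the helper names and the 320x240 input frame are hypothetical.

```python
# Standard ImageNet per-channel statistics for RGB values scaled to [0, 1].
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def resized_shape(height, width, shorter_edge=224):
    """Scale so the shorter edge equals `shorter_edge`, keeping the aspect ratio."""
    scale = shorter_edge / min(height, width)
    return round(height * scale), round(width * scale)


def center_crop_box(height, width, size=224):
    """Return (top, left, bottom, right) of a centered size x size crop."""
    top = (height - size) // 2
    left = (width - size) // 2
    return top, left, top + size, left + size


def normalize_pixel(rgb):
    """Map an 8-bit RGB pixel to normalized floats, channel by channel."""
    return tuple(
        (value / 255.0 - mean) / std
        for value, mean, std in zip(rgb, IMAGENET_MEAN, IMAGENET_STD)
    )


h, w = resized_shape(240, 320)  # hypothetical 320x240 input frame
print((h, w), center_crop_box(h, w), normalize_pixel((255, 255, 255)))
```

The same three steps would be applied to each of the 32 frames sampled from a video.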

## Evaluation results

This model achieves a top-1 accuracy of 53.0%.

| c78f56c7cbd357af76c7855b4177f332 |

facebook/wmt19-en-ru | facebook | fsmt | 9 | 3,395 | transformers | 4 | translation | true | false | false | apache-2.0 | ['en', 'ru'] | ['wmt19'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['translation', 'wmt19', 'facebook'] | false | true | true | 3,248 | false |

# FSMT

## Model description

This is a ported version of [fairseq wmt19 transformer](https://github.com/pytorch/fairseq/blob/master/examples/wmt19/README.md) for en-ru.

For more details, please see [Facebook FAIR's WMT19 News Translation Task Submission](https://arxiv.org/abs/1907.06616).

The abbreviation FSMT stands for FairSeqMachineTranslation.

All four models are available:

* [wmt19-en-ru](https://huggingface.co/facebook/wmt19-en-ru)

* [wmt19-ru-en](https://huggingface.co/facebook/wmt19-ru-en)

* [wmt19-en-de](https://huggingface.co/facebook/wmt19-en-de)

* [wmt19-de-en](https://huggingface.co/facebook/wmt19-de-en)

## Intended uses & limitations

#### How to use

```python

from transformers import FSMTForConditionalGeneration, FSMTTokenizer

mname = "facebook/wmt19-en-ru"

tokenizer = FSMTTokenizer.from_pretrained(mname)

model = FSMTForConditionalGeneration.from_pretrained(mname)

input = "Machine learning is great, isn't it?"

input_ids = tokenizer.encode(input, return_tensors="pt")

outputs = model.generate(input_ids)

decoded = tokenizer.decode(outputs[0], skip_special_tokens=True)

print(decoded) # Машинное обучение - это здорово, не так ли?

```

#### Limitations and bias

- The original model (and this ported version) doesn't seem to handle inputs with repeated sub-phrases well: [content gets truncated](https://discuss.huggingface.co/t/issues-with-translating-inputs-containing-repeated-phrases/981).

## Training data

Pretrained weights were left identical to the original model released by fairseq. For more details, please see the [paper](https://arxiv.org/abs/1907.06616).

## Eval results

pair | fairseq | transformers

-------|---------|----------

en-ru | [36.4](http://matrix.statmt.org/matrix/output/1914?run_id=6724) | 33.47

The score is slightly below the score reported by `fairseq`, since `transformers` currently doesn't support:

- model ensemble, therefore the best performing checkpoint was ported (`model4.pt`).

- re-ranking

The score was calculated using this code:

```bash

git clone https://github.com/huggingface/transformers

cd transformers

export PAIR=en-ru

export DATA_DIR=data/$PAIR

export SAVE_DIR=data/$PAIR

export BS=8

export NUM_BEAMS=15

mkdir -p $DATA_DIR

sacrebleu -t wmt19 -l $PAIR --echo src > $DATA_DIR/val.source

sacrebleu -t wmt19 -l $PAIR --echo ref > $DATA_DIR/val.target

echo $PAIR

PYTHONPATH="src:examples/seq2seq" python examples/seq2seq/run_eval.py facebook/wmt19-$PAIR $DATA_DIR/val.source $SAVE_DIR/test_translations.txt --reference_path $DATA_DIR/val.target --score_path $SAVE_DIR/test_bleu.json --bs $BS --task translation --num_beams $NUM_BEAMS

```

Note: fairseq reports using a beam of 50, so you should get a slightly higher score if you re-run with `--num_beams 50`.

## Data Sources

- [training, etc.](http://www.statmt.org/wmt19/)

- [test set](http://matrix.statmt.org/test_sets/newstest2019.tgz?1556572561)

### BibTeX entry and citation info

```bibtex

@inproceedings{...,

year={2020},

title={Facebook FAIR's WMT19 News Translation Task Submission},

author={Ng, Nathan and Yee, Kyra and Baevski, Alexei and Ott, Myle and Auli, Michael and Edunov, Sergey},

booktitle={Proc. of WMT},

}

```

## TODO

- port model ensemble (fairseq uses 4 model checkpoints)

| 09fd5ca751e6c96921792d1b942ec023 |

PeterBanning71/t5-small-finetuned-xsum-finetuned-bioMedv3 | PeterBanning71 | t5 | 12 | 8 | transformers | 0 | summarization | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['summarization', 'generated_from_trainer'] | true | true | true | 2,181 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-small-finetuned-xsum-finetuned-bioMedv3

This model is a fine-tuned version of [PeterBanning71/t5-small-finetuned-xsum](https://huggingface.co/PeterBanning71/t5-small-finetuned-xsum) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 6.1056

- Rouge1: 4.8565

- Rouge2: 0.4435

- Rougel: 3.9735

- Rougelsum: 4.415

- Gen Len: 19.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 8

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------:|:------:|:------:|:---------:|:-------:|

| No log | 1.0 | 1 | 8.4025 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 2.0 | 2 | 8.4025 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 3.0 | 3 | 7.7250 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 4.0 | 4 | 7.1617 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 5.0 | 5 | 6.7113 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 6.0 | 6 | 6.3646 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 7.0 | 7 | 6.1056 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

| No log | 8.0 | 8 | 6.1056 | 4.8565 | 0.4435 | 3.9735 | 4.415 | 19.0 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

| fd41830000499dbb6d5db2af04fc04e4 |

yip-i/xls-r-53-copy | yip-i | wav2vec2 | 6 | 1 | transformers | 0 | null | true | false | true | apache-2.0 | ['multilingual'] | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['speech'] | false | true | true | 2,197 | false |

# Wav2Vec2-XLSR-53

[Facebook's XLSR-Wav2Vec2](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/)

This is the base model, pretrained on speech audio sampled at 16kHz. When using the model, make sure that your speech input is also sampled at 16kHz. Note that this model should be fine-tuned on a downstream task, like Automatic Speech Recognition. Check out [this blog](https://huggingface.co/blog/fine-tune-wav2vec2-english) for more information.
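A quick way to guard against a sample-rate mismatch is to inspect the file before feeding it to the model. The sketch below does this for WAV files with Python's standard `wave` module; the function name is hypothetical, and actual resampling (not shown) would need a dedicated audio library.

```python
import wave

EXPECTED_RATE = 16_000  # Hz, the rate the model was pretrained on


def check_sample_rate(path, expected=EXPECTED_RATE):
    """Return the WAV file's sample rate, raising if it differs from `expected`."""
    with wave.open(path, "rb") as wav_file:
        rate = wav_file.getframerate()
    if rate != expected:
        raise ValueError(
            f"{path}: sample rate is {rate} Hz, expected {expected} Hz; resample first"
        )
    return rate
```

Calling `check_sample_rate("clip.wav")` on a hypothetical 8kHz recording would raise a `ValueError` instead of silently passing mismatched audio to the model.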

[Paper](https://arxiv.org/abs/2006.13979)

Authors: Alexis Conneau, Alexei Baevski, Ronan Collobert, Abdelrahman Mohamed, Michael Auli

**Abstract**

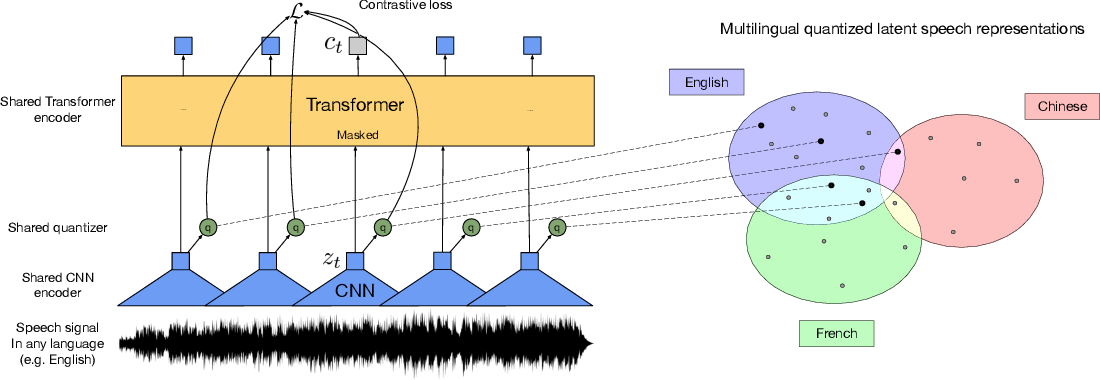

This paper presents XLSR which learns cross-lingual speech representations by pretraining a single model from the raw waveform of speech in multiple languages. We build on wav2vec 2.0 which is trained by solving a contrastive task over masked latent speech representations and jointly learns a quantization of the latents shared across languages. The resulting model is fine-tuned on labeled data and experiments show that cross-lingual pretraining significantly outperforms monolingual pretraining. On the CommonVoice benchmark, XLSR shows a relative phoneme error rate reduction of 72% compared to the best known results. On BABEL, our approach improves word error rate by 16% relative compared to a comparable system. Our approach enables a single multilingual speech recognition model which is competitive to strong individual models. Analysis shows that the latent discrete speech representations are shared across languages with increased sharing for related languages. We hope to catalyze research in low-resource speech understanding by releasing XLSR-53, a large model pretrained in 53 languages.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

# Usage

See [this notebook](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/Fine_Tune_XLSR_Wav2Vec2_on_Turkish_ASR_with_%F0%9F%A4%97_Transformers.ipynb) for more information on how to fine-tune the model.

| fb0df48764b64890ae5c043865e65d6e |

google/t5-11b-ssm-wq | google | t5 | 9 | 8 | transformers | 1 | text2text-generation | true | true | false | apache-2.0 | ['en'] | ['c4', 'wikipedia', 'web_questions'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 2,413 | false |

[Google's T5](https://ai.googleblog.com/2020/02/exploring-transfer-learning-with-t5.html) for **Closed Book Question Answering**.

The model was pre-trained using T5's denoising objective on [C4](https://huggingface.co/datasets/c4), subsequently additionally pre-trained using [REALM](https://arxiv.org/pdf/2002.08909.pdf)'s salient span masking objective on [Wikipedia](https://huggingface.co/datasets/wikipedia), and finally fine-tuned on [Web Questions (WQ)](https://huggingface.co/datasets/web_questions).

**Note**: The model was fine-tuned on 100% of the train splits of [Web Questions (WQ)](https://huggingface.co/datasets/web_questions) for 10k steps.

Other community Checkpoints: [here](https://huggingface.co/models?search=ssm)

Paper: [How Much Knowledge Can You Pack Into the Parameters of a Language Model?](https://arxiv.org/abs/1910.10683.pdf)

Authors: *Adam Roberts, Colin Raffel, Noam Shazeer*

## Results on Web Questions - Test Set

|Id | link | Exact Match |

|---|---|---|

|**T5-11b**|**https://huggingface.co/google/t5-11b-ssm-wq**|**44.7**|

|T5-xxl|https://huggingface.co/google/t5-xxl-ssm-wq|43.5|
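For readers unfamiliar with the metric, the sketch below shows one common way an exact-match score can be computed. The normalization (lowercasing, stripping punctuation and English articles) is a SQuAD-style assumption, and the function names and example answers are hypothetical; the scoring used for the numbers in the table may differ.

```python
import re
import string


def normalize_answer(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction, references):
    """1.0 if the prediction equals any reference after normalization, else 0.0."""
    normalized = normalize_answer(prediction)
    return float(any(normalized == normalize_answer(ref) for ref in references))


def exact_match_score(predictions, references_per_question):
    """Percentage of questions answered with an exact match."""
    scores = [
        exact_match(pred, refs)
        for pred, refs in zip(predictions, references_per_question)
    ]
    return 100.0 * sum(scores) / len(scores)


# Made-up predictions and references, for illustration only.
predictions = ["January 30, 1882", "the Potomac River"]
references = [["january 30 1882"], ["Hudson River"]]
print(exact_match_score(predictions, references))
```

Because each question can have several acceptable references, a prediction counts as correct if it matches any one of them.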

## Usage

The model can be used as follows for **closed book question answering**:

```python

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

t5_qa_model = AutoModelForSeq2SeqLM.from_pretrained("google/t5-11b-ssm-wq")

t5_tok = AutoTokenizer.from_pretrained("google/t5-11b-ssm-wq")

input_ids = t5_tok("When was Franklin D. Roosevelt born?", return_tensors="pt").input_ids

gen_output = t5_qa_model.generate(input_ids)[0]

print(t5_tok.decode(gen_output, skip_special_tokens=True))

```

## Abstract

It has recently been observed that neural language models trained on unstructured text can implicitly store and retrieve knowledge using natural language queries. In this short paper, we measure the practical utility of this approach by fine-tuning pre-trained models to answer questions without access to any external context or knowledge. We show that this approach scales with model size and performs competitively with open-domain systems that explicitly retrieve answers from an external knowledge source when answering questions. To facilitate reproducibility and future work, we release our code and trained models at https://goo.gle/t5-cbqa.

| 421f2b02195337d45d10a6dd9600d571 |

josetapia/hygpt2-clm | josetapia | gpt2 | 17 | 4 | transformers | 0 | text-generation | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 980 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hygpt2-clm

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 256

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 4000
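The relationship between `train_batch_size`, `gradient_accumulation_steps` and `total_train_batch_size` listed above can be checked with a one-line calculation. The sketch assumes a single device, since the card does not list a distributed setup; the function name is hypothetical.

```python
def total_train_batch_size(per_device_batch_size, gradient_accumulation_steps, num_devices=1):
    """Number of examples that contribute to each optimizer update."""
    return per_device_batch_size * gradient_accumulation_steps * num_devices


# 32 examples per step, accumulated over 8 steps, on one (assumed) device.
print(total_train_batch_size(32, 8))  # 256
```

Gradient accumulation trades update frequency for memory: each optimizer update sees 256 examples while only 32 need to fit on the device at once.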

### Training results

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.2

- Datasets 1.18.4

- Tokenizers 0.11.6

| 4e288d13e1a2a45f6aa2104c6a908f1d |

terzimert/bert-finetuned-ner-v2.2 | terzimert | bert | 12 | 7 | transformers | 0 | token-classification | true | false | false | apache-2.0 | null | ['caner'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,545 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner-v2.2

This model is a fine-tuned version of [bert-base-multilingual-cased](https://huggingface.co/bert-base-multilingual-cased) on the caner dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3595

- Precision: 0.8823

- Recall: 0.8497

- F1: 0.8657

- Accuracy: 0.9427
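The reported F1 is consistent with the harmonic mean of the reported precision and recall, as the short check below shows. The function name is hypothetical, and the sketch only reproduces the arithmetic, not the entity-level counting behind precision and recall.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


print(round(f1_score(0.8823, 0.8497), 4))  # 0.8657
```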

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.2726 | 1.0 | 3228 | 0.4504 | 0.7390 | 0.7287 | 0.7338 | 0.9107 |

| 0.2057 | 2.0 | 6456 | 0.3679 | 0.8633 | 0.8446 | 0.8538 | 0.9385 |

| 0.1481 | 3.0 | 9684 | 0.3595 | 0.8823 | 0.8497 | 0.8657 | 0.9427 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

| c1a5f866053a6d759a96278f6c27ab14 |

openclimatefix/nowcasting_cnn_v4 | openclimatefix | null | 4 | 0 | transformers | 1 | null | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['nowcasting', 'forecasting', 'timeseries', 'remote-sensing'] | false | true | true | 962 | false |

# Nowcasting CNN

## Model description

A 3D convolutional model that takes in several data streams.

The architecture is roughly:

1. The satellite image time series goes into many 3D convolution layers.

2. The NWP (numerical weather prediction) time series goes into many 3D convolution layers.

3. The final convolutional layer goes to a fully connected layer. This is joined by other data inputs like

- PV yield

- time variables

There are then ~4 fully connected layers, which end up forecasting the PV yield / GSP into the future.

## Intended uses & limitations

Forecasting short term PV power for different regions and nationally in the UK

## How to use

[More information needed]

## Limitations and bias

[More information needed]

## Training data

Training data is EUMETSAT RSS imagery over the UK, on-the-ground PV data, and NWP predictions.

## Training procedure

[More information needed]

## Evaluation results

[More information needed]

| 409a984bb15368014d80cc8164fc5303 |

Thant123/distilbert-base-uncased-finetuned-emotion | Thant123 | distilbert | 12 | 1 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['emotion'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,343 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2270

- Accuracy: 0.924

- F1: 0.9241

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8204 | 1.0 | 250 | 0.3160 | 0.9035 | 0.9008 |

| 0.253 | 2.0 | 500 | 0.2270 | 0.924 | 0.9241 |

### Framework versions

- Transformers 4.17.0

- Pytorch 1.10.0+cu111

- Datasets 2.0.0

- Tokenizers 0.11.6

| ffacf1d2dcc9b780be66d5ad7b68e5e2 |

philschmid/roberta-base-squad2-optimized | philschmid | null | 15 | 3 | generic | 0 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['endpoints-template', 'optimum'] | false | true | true | 9,622 | false |

# Optimized and Quantized [deepset/roberta-base-squad2](https://huggingface.co/deepset/roberta-base-squad2) with a custom handler.py

This repository implements a `custom` handler for `question-answering` for 🤗 Inference Endpoints for accelerated inference using [🤗 Optimum](https://huggingface.co/docs/optimum/index). The code for the customized handler is in the [handler.py](https://huggingface.co/philschmid/roberta-base-squad2-optimized/blob/main/handler.py).

Below we also describe how we converted & optimized the model, based on the [Accelerate Transformers with Hugging Face Optimum](https://huggingface.co/blog/optimum-inference) blog post. You can also check out the [notebook](https://huggingface.co/philschmid/roberta-base-squad2-optimized/blob/main/optimize_model.ipynb).

### Expected request payload

```json

{

"inputs": {

"question": "As what is Philipp working?",

"context": "Hello, my name is Philipp and I live in Nuremberg, Germany. Currently I am working as a Technical Lead at Hugging Face to democratize artificial intelligence through open source and open science. In the past I designed and implemented cloud-native machine learning architectures for fin-tech and insurance companies. I found my passion for cloud concepts and machine learning 5 years ago. Since then I never stopped learning. Currently, I am focusing myself in the area NLP and how to leverage models like BERT, Roberta, T5, ViT, and GPT2 to generate business value."

}

}

```

Below is an example of how to run a request using Python and `requests`.

## Run Request

```python
import requests as r

ENDPOINT_URL = ""  # URL of your Inference Endpoint
HF_TOKEN = ""  # your Hugging Face access token


def predict(question: str = None, context: str = None):
    # send the question and context to the endpoint as a JSON payload
    payload = {"inputs": {"question": question, "context": context}}
    response = r.post(
        ENDPOINT_URL, headers={"Authorization": f"Bearer {HF_TOKEN}"}, json=payload
    )
    return response.json()


prediction = predict(
    question="As what is Philipp working?",
    context="Hello, my name is Philipp and I live in Nuremberg, Germany. Currently I am working as a Technical Lead at Hugging Face to democratize artificial intelligence through open source and open science."
)
```

Expected output:

```python

{

'score': 0.4749588668346405,

'start': 88,

'end': 102,

'answer': 'Technical Lead'

}

```

# Convert & Optimize model with Optimum

Steps:

1. [Convert model to ONNX](#1-convert-model-to-onnx)

2. [Optimize & quantize model with Optimum](#2-optimize--quantize-model-with-optimum)

3. [Create Custom Handler for Inference Endpoints](#3-create-custom-handler-for-inference-endpoints)

4. [Test Custom Handler Locally](#4-test-custom-handler-locally)

5. [Push to repository and create Inference Endpoint](#5-push-to-repository-and-create-inference-endpoint)

Helpful links:

* [Accelerate Transformers with Hugging Face Optimum](https://huggingface.co/blog/optimum-inference)

* [Optimizing Transformers for GPUs with Optimum](https://www.philschmid.de/optimizing-transformers-with-optimum-gpu)

* [Optimum Documentation](https://huggingface.co/docs/optimum/onnxruntime/modeling_ort)

* [Create Custom Handler Endpoints](https://link-to-docs)

## Setup & Installation

```python

%%writefile requirements.txt

optimum[onnxruntime]==1.4.0

mkl-include

mkl

```

```python

!pip install -r requirements.txt

```

## 0. Baseline Performance

```python

from transformers import pipeline

qa = pipeline("question-answering",model="deepset/roberta-base-squad2")

```

Okay, let's test the performance (latency) with a sequence length of 128.

```python

context="Hello, my name is Philipp and I live in Nuremberg, Germany. Currently I am working as a Technical Lead at Hugging Face to democratize artificial intelligence through open source and open science. In the past I designed and implemented cloud-native machine learning architectures for fin-tech and insurance companies. I found my passion for cloud concepts and machine learning 5 years ago. Since then I never stopped learning. Currently, I am focusing myself in the area NLP and how to leverage models like BERT, Roberta, T5, ViT, and GPT2 to generate business value."

question="As what is Philipp working?"

payload = {"inputs": {"question": question, "context": context}}

```

```python
from time import perf_counter
import numpy as np


def measure_latency(pipe, payload):
    latencies = []
    # warm up
    for _ in range(10):
        _ = pipe(question=payload["inputs"]["question"], context=payload["inputs"]["context"])
    # Timed run
    for _ in range(50):
        start_time = perf_counter()
        _ = pipe(question=payload["inputs"]["question"], context=payload["inputs"]["context"])
        latency = perf_counter() - start_time
        latencies.append(latency)
    # Compute run statistics
    time_avg_ms = 1000 * np.mean(latencies)
    time_std_ms = 1000 * np.std(latencies)
    # the backslash is escaped so the output format below stays unchanged
    return f"Average latency (ms) - {time_avg_ms:.2f} +\\- {time_std_ms:.2f}"


print(f"Vanilla model {measure_latency(qa, payload)}")
# Vanilla model Average latency (ms) - 64.15 +\- 2.44
```

## 1. Convert model to ONNX

```python

from optimum.onnxruntime import ORTModelForQuestionAnswering

from transformers import AutoTokenizer

from pathlib import Path

model_id="deepset/roberta-base-squad2"

onnx_path = Path(".")

# load vanilla transformers and convert to onnx

model = ORTModelForQuestionAnswering.from_pretrained(model_id, from_transformers=True)

tokenizer = AutoTokenizer.from_pretrained(model_id)

# save onnx checkpoint and tokenizer

model.save_pretrained(onnx_path)

tokenizer.save_pretrained(onnx_path)

```

## 2. Optimize & quantize model with Optimum

```python

from optimum.onnxruntime import ORTOptimizer, ORTQuantizer

from optimum.onnxruntime.configuration import OptimizationConfig, AutoQuantizationConfig

# Create the optimizer

optimizer = ORTOptimizer.from_pretrained(model)

# Define the optimization strategy by creating the appropriate configuration

optimization_config = OptimizationConfig(optimization_level=99) # enable all optimizations

# Optimize the model

optimizer.optimize(save_dir=onnx_path, optimization_config=optimization_config)

```

```python

# create ORTQuantizer and define quantization configuration

dynamic_quantizer = ORTQuantizer.from_pretrained(onnx_path, file_name="model_optimized.onnx")

dqconfig = AutoQuantizationConfig.avx512_vnni(is_static=False, per_channel=False)

# apply the quantization configuration to the model

model_quantized_path = dynamic_quantizer.quantize(

save_dir=onnx_path,

quantization_config=dqconfig,

)

```

## 3. Create Custom Handler for Inference Endpoints

```python

%%writefile handler.py

from typing import Dict, List, Any

from optimum.onnxruntime import ORTModelForQuestionAnswering

from transformers import AutoTokenizer, pipeline

class EndpointHandler():
    def __init__(self, path=""):
        # load the optimized model
        self.model = ORTModelForQuestionAnswering.from_pretrained(path, file_name="model_optimized_quantized.onnx")
        self.tokenizer = AutoTokenizer.from_pretrained(path)
        # create pipeline
        self.pipeline = pipeline("question-answering", model=self.model, tokenizer=self.tokenizer)

    def __call__(self, data: Any) -> List[List[Dict[str, float]]]:
        """
        Args:
            data (:obj:):
                includes the input data and the parameters for the inference.
        Return:
            A :obj:`list`:. The list contains the answer and scores of the inference inputs
        """
        inputs = data.get("inputs", data)
        # run the model
        prediction = self.pipeline(**inputs)
        # return prediction
        return prediction

```
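The payload handling in `__call__` can be checked without loading any model. The sketch below is only an illustration: `fake_pipeline` and `unpack_and_run` are hypothetical helpers, not part of Optimum or Transformers. It shows how `data.get("inputs", data)` accepts both a wrapped payload and a bare one.

```python
from typing import Any, Dict


def fake_pipeline(question: str, context: str) -> Dict[str, Any]:
    """Hypothetical stand-in for the question-answering pipeline."""
    # Echo the inputs back so the unpacking can be inspected.
    return {"question": question, "context_length": len(context)}


def unpack_and_run(data: Dict[str, Any]) -> Dict[str, Any]:
    # Same unpacking as the handler: use data["inputs"] when present,
    # otherwise treat the whole payload as the inputs.
    inputs = data.get("inputs", data)
    return fake_pipeline(**inputs)


wrapped = {"inputs": {"question": "Who?", "context": "Philipp"}}
bare = {"question": "Who?", "context": "Philipp"}

print(unpack_and_run(wrapped))
print(unpack_and_run(bare))
```

Both calls reach the pipeline with the same keyword arguments, which is why the handler works whether or not the request body nests its fields under `inputs`.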

## 4. Test Custom Handler Locally

```python

from handler import EndpointHandler

# init handler

my_handler = EndpointHandler(path=".")

# prepare sample payload

context="Hello, my name is Philipp and I live in Nuremberg, Germany. Currently I am working as a Technical Lead at Hugging Face to democratize artificial intelligence through open source and open science. In the past I designed and implemented cloud-native machine learning architectures for fin-tech and insurance companies. I found my passion for cloud concepts and machine learning 5 years ago. Since then I never stopped learning. Currently, I am focusing myself in the area NLP and how to leverage models like BERT, Roberta, T5, ViT, and GPT2 to generate business value."

question="As what is Philipp working?"

payload = {"inputs": {"question": question, "context": context}}

# test the handler

my_handler(payload)

```

```python

from time import perf_counter

import numpy as np

def measure_latency(handler, payload):
    latencies = []
    # warm up
    for _ in range(10):
        _ = handler(payload)
    # Timed run
    for _ in range(50):
        start_time = perf_counter()
        _ = handler(payload)
        latency = perf_counter() - start_time
        latencies.append(latency)
    # Compute run statistics
    time_avg_ms = 1000 * np.mean(latencies)
    time_std_ms = 1000 * np.std(latencies)
    return f"Average latency (ms) - {time_avg_ms:.2f} +\\- {time_std_ms:.2f}"

print(f"Optimized & Quantized model {measure_latency(my_handler,payload)}")

#

```

`Optimized & Quantized model Average latency (ms) - 29.90 +\- 0.53`

`Vanilla model Average latency (ms) - 64.15 +\- 2.44`
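To put the two reported averages in perspective, the arithmetic below derives the speedup and the relative latency reduction. It uses only the two numbers printed above; the variable names are illustrative.

```python
# Average latencies reported above, in milliseconds.
vanilla_ms = 64.15
optimized_ms = 29.90

# How many times faster the optimized and quantized model is.
speedup = vanilla_ms / optimized_ms

# Share of the original latency that was removed.
reduction_pct = 100 * (1 - optimized_ms / vanilla_ms)

print(f"Speedup: {speedup:.2f}x")                  # Speedup: 2.15x
print(f"Latency reduction: {reduction_pct:.1f}%")  # Latency reduction: 53.4%
```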

## 5. Push to repository and create Inference Endpoint

```python

# add all our new files

!git add *

# commit our files

!git commit -m "add custom handler"

# push the files to the hub

!git push

```

| 8561f0d74d18810e336a6fc8caf0ae6d |

MaggieXM/distilbert-base-uncased-finetuned-squad | MaggieXM | distilbert | 20 | 5 | transformers | 0 | question-answering | true | false | false | apache-2.0 | null | ['squad'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,109 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-squad

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the squad dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 0.01

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 0.01 | 56 | 4.8054 |

### Framework versions

- Transformers 4.16.2

- Pytorch 1.10.0+cu111

- Datasets 1.18.3

- Tokenizers 0.11.0

| b71ec1cf30fd6b9f371d478067525884 |

jonatasgrosman/exp_w2v2t_pt_vp-nl_s6 | jonatasgrosman | wav2vec2 | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['pt'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'pt'] | false | true | true | 467 | false | # exp_w2v2t_pt_vp-nl_s6

Fine-tuned [facebook/wav2vec2-large-nl-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-nl-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (pt)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 7a000bd97bc0b74b5287e62948946ec7 |

hsohn3/cchs-bert-visit-uncased-wordlevel-block512-batch8-ep10 | hsohn3 | bert | 8 | 4 | transformers | 0 | fill-mask | false | true | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_keras_callback'] | true | true | true | 1,340 | false |

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# hsohn3/cchs-bert-visit-uncased-wordlevel-block512-batch8-ep10

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 2.9857

- Epoch: 9

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 4.4277 | 0 |

| 3.1148 | 1 |

| 3.0454 | 2 |

| 3.0227 | 3 |

| 3.0048 | 4 |

| 3.0080 | 5 |

| 2.9920 | 6 |

| 2.9963 | 7 |

| 2.9892 | 8 |

| 2.9857 | 9 |

### Framework versions

- Transformers 4.20.1

- TensorFlow 2.8.2

- Datasets 2.3.2

- Tokenizers 0.12.1

| d1d10ad0216333d9b17d1427aae2e8d4 |

shumail/wav2vec2-base-timit-demo-colab | shumail | wav2vec2 | 24 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,341 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vec2-base-timit-demo-colab

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8686

- Wer: 0.6263

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 1000

- num_epochs: 30

- mixed_precision_training: Native AMP
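As a rough illustration of what `lr_scheduler_type: linear` with 1000 warmup steps implies, the sketch below computes the learning rate at a few steps. The total of 1080 steps is an estimate, not a reported value: the results table for this model lists step 500 at epoch 13.89, which suggests about 36 steps per epoch over 30 epochs. The function name is hypothetical.

```python
def linear_schedule_lr(step: int, base_lr: float, warmup_steps: int, total_steps: int) -> float:
    """Learning rate for linear warmup followed by linear decay to zero."""
    if step < warmup_steps:
        # Warmup phase: ramp linearly from 0 up to base_lr.
        return base_lr * step / max(1, warmup_steps)
    # Decay phase: fall linearly from base_lr to 0 at total_steps.
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


BASE_LR = 1e-4        # learning_rate from the list above
WARMUP_STEPS = 1000   # lr_scheduler_warmup_steps from the list above
TOTAL_STEPS = 1080    # assumption: about 36 steps/epoch * 30 epochs

for step in (0, 500, 1000, 1040, 1080):
    print(step, linear_schedule_lr(step, BASE_LR, WARMUP_STEPS, TOTAL_STEPS))
```

Under these assumptions almost the entire run is spent in warmup, so the peak learning rate is only reached near the end of training.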

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 5.0505 | 13.89 | 500 | 3.0760 | 1.0 |

| 1.2748 | 27.78 | 1000 | 0.8686 | 0.6263 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.11.0+cu113

- Datasets 1.18.3

- Tokenizers 0.10.3

| 21419281cc9c65d8413aab2df9d3ffbe |

fveredas/xlm-roberta-base-finetuned-panx-de | fveredas | xlm-roberta | 16 | 5 | transformers | 0 | token-classification | true | false | false | mit | null | ['xtreme'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,320 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-de

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1365

- F1: 0.8649

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2553 | 1.0 | 525 | 0.1575 | 0.8279 |

| 0.1284 | 2.0 | 1050 | 0.1386 | 0.8463 |

| 0.0813 | 3.0 | 1575 | 0.1365 | 0.8649 |

### Framework versions

- Transformers 4.11.3

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

| 91b7a03e208a0ae34eca0e47fccabdb1 |

kurianbenoy/music_genre_classification_baseline | kurianbenoy | null | 4 | 0 | fastai | 1 | null | false | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['fastai'] | false | true | true | 736 | false |

# Amazing!

🥳 Congratulations on hosting your fastai model on the Hugging Face Hub!

# Some next steps

1. Fill out this model card with more information (see the template below and the [documentation here](https://huggingface.co/docs/hub/model-repos))!

2. Create a demo in Gradio or Streamlit using 🤗 Spaces ([documentation here](https://huggingface.co/docs/hub/spaces)).

3. Join the fastai community on the [Fastai Discord](https://discord.com/invite/YKrxeNn)!

Greetings fellow fastlearner 🤝! Don't forget to delete this content from your model card.

---

# Model card

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

| f36361bbf3a4111abdeda44875b284bc |

mkhairil/distillbert-finetuned-indonlusmsa | mkhairil | distilbert | 12 | 8 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['indonlu'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 948 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distillbert-finetuned-indonlusmsa

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the indonlu dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

| 42b35bdf153d04de3bdfd39be5fd4cfc |

Alred/bart-base-finetuned-summarization-cnn-ver2 | Alred | bart | 15 | 5 | transformers | 0 | summarization | true | false | false | apache-2.0 | null | ['cnn_dailymail'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['summarization', 'generated_from_trainer'] | true | true | true | 1,176 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bart-base-finetuned-summarization-cnn-ver2

This model is a fine-tuned version of [facebook/bart-base](https://huggingface.co/facebook/bart-base) on the cnn_dailymail dataset.

It achieves the following results on the evaluation set:

- Loss: 2.1715

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.3329 | 1.0 | 5742 | 2.1715 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.12.1+cu113

- Datasets 2.7.1

- Tokenizers 0.13.2

| 25269342aaf4d9a62e88d8b1b5ab5e8a |

manandey/wav2vec2-large-xlsr-_irish | manandey | wav2vec2 | 9 | 7 | transformers | 0 | automatic-speech-recognition | true | false | true | apache-2.0 | ['ga'] | ['common_voice'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['audio', 'automatic-speech-recognition', 'speech', 'xlsr-fine-tuning-week', 'hf-asr-leaderboard'] | true | true | true | 3,265 | false | # Wav2Vec2-Large-XLSR-53-Irish

Fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53) on Irish using the [Common Voice](https://huggingface.co/datasets/common_voice) dataset.

When using this model, make sure that your speech input is sampled at 16kHz.
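One way to verify the 16kHz requirement before running inference is to read the sample rate from the file header. The sketch below uses only the Python standard library and assumes the audio is an uncompressed WAV file; the helper names and the temporary demo file are illustrative.

```python
import os
import tempfile
import wave

EXPECTED_RATE = 16_000


def wav_sample_rate(path: str) -> int:
    """Read the sample rate from a WAV file header."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate()


def needs_resampling(path: str, expected_rate: int = EXPECTED_RATE) -> bool:
    """True when the file is not already at the expected sample rate."""
    return wav_sample_rate(path) != expected_rate


# Write one second of 48 kHz mono silence to a temporary file (illustration only).
tmp_dir = tempfile.mkdtemp()
demo_path = os.path.join(tmp_dir, "demo_48k.wav")
with wave.open(demo_path, "wb") as wav_file:
    wav_file.setnchannels(1)
    wav_file.setsampwidth(2)        # 16-bit samples
    wav_file.setframerate(48_000)
    wav_file.writeframes(b"\x00\x00" * 48_000)

print(wav_sample_rate(demo_path), needs_resampling(demo_path))
```

A file flagged this way would need to be resampled to 16kHz before being passed to the processor.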

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "ga-IE", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("manandey/wav2vec2-large-xlsr-_irish")
model = Wav2Vec2ForCTC.from_pretrained("manandey/wav2vec2-large-xlsr-_irish")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)
inputs = processor(test_dataset["speech"][:2], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():
    logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))
print("Reference:", test_dataset["sentence"][:2])

```

## Evaluation

The model can be evaluated as follows on the Irish test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "ga-IE", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("manandey/wav2vec2-large-xlsr-_irish")

model = Wav2Vec2ForCTC.from_pretrained("manandey/wav2vec2-large-xlsr-_irish")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\%\‘\”\�\’\–\(\)]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.
# We need to read the audio files as arrays
def speech_file_to_array_fn(batch):
    batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()
    speech_array, sampling_rate = torchaudio.load(batch["path"])
    batch["speech"] = resampler(speech_array).squeeze().numpy()
    return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Run batched inference on the GPU and decode the predicted token ids
def evaluate(batch):
    inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

    with torch.no_grad():
        logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

    pred_ids = torch.argmax(logits, dim=-1)
    batch["pred_strings"] = processor.batch_decode(pred_ids)
    return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:.2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 42.34%
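For readers unfamiliar with the metric, the sketch below shows the standard definition of word error rate: word-level edit distance divided by the number of reference words, aggregated over all sentences. It is a plain-Python illustration, not the implementation behind `load_metric("wer")`, and the two sentence pairs are made-up examples rather than model outputs.

```python
from typing import List


def word_edit_distance(reference: List[str], hypothesis: List[str]) -> int:
    """Levenshtein distance computed over words instead of characters."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_word in enumerate(reference, start=1):
        current = [i]
        for j, hyp_word in enumerate(hypothesis, start=1):
            substitution = previous[j - 1] + (ref_word != hyp_word)
            deletion = previous[j] + 1
            insertion = current[j - 1] + 1
            current.append(min(substitution, deletion, insertion))
        previous = current
    return previous[-1]


def word_error_rate(references: List[str], predictions: List[str]) -> float:
    """Total word errors divided by total reference words."""
    errors = 0
    total_words = 0
    for ref, pred in zip(references, predictions):
        ref_words = ref.split()
        errors += word_edit_distance(ref_words, pred.split())
        total_words += len(ref_words)
    return errors / total_words


# Made-up example: one deleted word and one substituted word out of 7.
refs = ["tá an aimsir go maith", "dia duit"]
preds = ["tá an aimsir maith", "dia dhuit"]
print(f"WER: {100 * word_error_rate(refs, preds):.2f}")  # WER: 28.57
```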

## Training

The Common Voice `train` and `validation` splits were used for training. | 5019d14cdddfee6804b9e3be5a44eb38 |

Helsinki-NLP/opus-mt-it-vi | Helsinki-NLP | marian | 11 | 38 | transformers | 0 | translation | true | true | false | apache-2.0 | ['it', 'vi'] | null | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['translation'] | false | true | true | 2,001 | false |

### ita-vie

* source group: Italian

* target group: Vietnamese

* OPUS readme: [ita-vie](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/ita-vie/README.md)

* model: transformer-align

* source language(s): ita

* target language(s): vie

* model: transformer-align

* pre-processing: normalization + SentencePiece (spm32k,spm32k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/ita-vie/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/ita-vie/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/ita-vie/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.ita.vie | 36.2 | 0.535 |

### System Info:

- hf_name: ita-vie

- source_languages: ita

- target_languages: vie

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/ita-vie/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['it', 'vi']

- src_constituents: {'ita'}

- tgt_constituents: {'vie', 'vie_Hani'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm32k,spm32k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/ita-vie/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/ita-vie/opus-2020-06-17.test.txt

- src_alpha3: ita

- tgt_alpha3: vie

- short_pair: it-vi

- chrF2_score: 0.535

- bleu: 36.2

- brevity_penalty: 1.0

- ref_len: 2144.0

- src_name: Italian

- tgt_name: Vietnamese

- train_date: 2020-06-17

- src_alpha2: it

- tgt_alpha2: vi

- prefer_old: False

- long_pair: ita-vie

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 | 449a6592d1e9c61ddf102c80ed93f5c6 |

coreml/coreml-stable-diffusion-v1-5 | coreml | null | 6 | 0 | null | 5 | text-to-image | false | false | false | creativeml-openrail-m | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['coreml', 'stable-diffusion', 'text-to-image'] | false | true | true | 13,867 | false |

# Core ML Converted Model

This model was converted to Core ML for use on Apple Silicon devices by following Apple's instructions [here](https://github.com/apple/ml-stable-diffusion#-converting-models-to-core-ml).<br>

Provide the model to an app such as [Mochi Diffusion](https://github.com/godly-devotion/MochiDiffusion) to generate images.<br>

`split_einsum` version is compatible with all compute unit options including Neural Engine.<br>

`original` version is only compatible with CPU & GPU option.

# Stable Diffusion v1-5 Model Card

Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input.

For more information about how Stable Diffusion functions, please have a look at [🤗's Stable Diffusion blog](https://huggingface.co/blog/stable_diffusion).

The **Stable-Diffusion-v1-5** checkpoint was initialized with the weights of the [Stable-Diffusion-v1-2](https://huggingface.co/CompVis/stable-diffusion-v1-2) checkpoint and subsequently fine-tuned for 595k steps at resolution 512x512 on "laion-aesthetics v2 5+" with 10% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

You can use this both with the [🧨Diffusers library](https://github.com/huggingface/diffusers) and the [RunwayML GitHub repository](https://github.com/runwayml/stable-diffusion).

### Diffusers

```py

from diffusers import StableDiffusionPipeline

import torch

model_id = "runwayml/stable-diffusion-v1-5"

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"

image = pipe(prompt).images[0]

image.save("astronaut_rides_horse.png")

```

For more detailed instructions, use cases, and examples in JAX, follow the instructions [here](https://github.com/huggingface/diffusers#text-to-image-generation-with-stable-diffusion).

### Original GitHub Repository

1. Download the weights

- [v1-5-pruned-emaonly.ckpt](https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned-emaonly.ckpt) - 4.27GB, ema-only weight. uses less VRAM - suitable for inference

- [v1-5-pruned.ckpt](https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned.ckpt) - 7.7GB, ema+non-ema weights. uses more VRAM - suitable for fine-tuning

2. Follow instructions [here](https://github.com/runwayml/stable-diffusion).

## Model Details

- **Developed by:** Robin Rombach, Patrick Esser

- **Model type:** Diffusion-based text-to-image generation model

- **Language(s):** English

- **License:** [The CreativeML OpenRAIL M license](https://huggingface.co/spaces/CompVis/stable-diffusion-license) is an [Open RAIL M license](https://www.licenses.ai/blog/2022/8/18/naming-convention-of-responsible-ai-licenses), adapted from the work that [BigScience](https://bigscience.huggingface.co/) and [the RAIL Initiative](https://www.licenses.ai/) are jointly carrying in the area of responsible AI licensing. See also [the article about the BLOOM Open RAIL license](https://bigscience.huggingface.co/blog/the-bigscience-rail-license) on which our license is based.

- **Model Description:** This is a model that can be used to generate and modify images based on text prompts. It is a [Latent Diffusion Model](https://arxiv.org/abs/2112.10752) that uses a fixed, pretrained text encoder ([CLIP ViT-L/14](https://arxiv.org/abs/2103.00020)) as suggested in the [Imagen paper](https://arxiv.org/abs/2205.11487).

- **Resources for more information:** [GitHub Repository](https://github.com/CompVis/stable-diffusion), [Paper](https://arxiv.org/abs/2112.10752).

- **Cite as:**

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

# Uses

## Direct Use

The model is intended for research purposes only. Possible research areas and tasks include

- Safe deployment of models which have the potential to generate harmful content.

- Probing and understanding the limitations and biases of generative models.

- Generation of artworks and use in design and other artistic processes.

- Applications in educational or creative tools.

- Research on generative models.

Excluded uses are described below.

### Misuse, Malicious Use, and Out-of-Scope Use

_Note: This section is taken from the [DALLE-MINI model card](https://huggingface.co/dalle-mini/dalle-mini), but applies in the same way to Stable Diffusion v1_.

The model should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.

#### Out-of-Scope Use

The model was not trained to be factual or true representations of people or events, and therefore using the model to generate such content is out-of-scope for the abilities of this model.

#### Misuse and Malicious Use

Using the model to generate content that is cruel to individuals is a misuse of this model. This includes, but is not limited to:

- Generating demeaning, dehumanizing, or otherwise harmful representations of people or their environments, cultures, religions, etc.

- Intentionally promoting or propagating discriminatory content or harmful stereotypes.

- Impersonating individuals without their consent.

- Sexual content without consent of the people who might see it.

- Mis- and disinformation

- Representations of egregious violence and gore

- Sharing of copyrighted or licensed material in violation of its terms of use.

- Sharing content that is an alteration of copyrighted or licensed material in violation of its terms of use.

## Limitations and Bias

### Limitations

- The model does not achieve perfect photorealism

- The model cannot render legible text

- The model does not perform well on more difficult tasks which involve compositionality, such as rendering an image corresponding to “A red cube on top of a blue sphere”

- Faces and people in general may not be generated properly.

- The model was trained mainly with English captions and will not work as well in other languages.

- The autoencoding part of the model is lossy

- The model was trained on a large-scale dataset [LAION-5B](https://laion.ai/blog/laion-5b/) which contains adult material and is not fit for product use without additional safety mechanisms and considerations.

- No additional measures were used to deduplicate the dataset. As a result, we observe some degree of memorization for images that are duplicated in the training data.

The training data can be searched at [https://rom1504.github.io/clip-retrieval/](https://rom1504.github.io/clip-retrieval/) to possibly assist in the detection of memorized images.

### Bias

While the capabilities of image generation models are impressive, they can also reinforce or exacerbate social biases. Stable Diffusion v1 was trained on subsets of [LAION-2B(en)](https://laion.ai/blog/laion-5b/), which consists of images that are primarily limited to English descriptions. Texts and images from communities and cultures that use other languages are likely to be insufficiently accounted for. This affects the overall output of the model, as white and western cultures are often set as the default. Further, the ability of the model to generate content with non-English prompts is significantly worse than with English-language prompts.

### Safety Module

The intended use of this model is with the [Safety Checker](https://github.com/huggingface/diffusers/blob/main/src/diffusers/pipelines/stable_diffusion/safety_checker.py) in Diffusers.

This checker works by checking model outputs against known hard-coded NSFW concepts.

The concepts are intentionally hidden to reduce the likelihood of reverse-engineering this filter.

Specifically, the checker compares the class probability of harmful concepts in the embedding space of the `CLIPTextModel` *after generation* of the images.

The concepts are passed into the model with the generated image and compared to a hand-engineered weight for each NSFW concept.
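The threshold comparison described above can be illustrated with a toy example. This is only a sketch of the general idea (cosine similarity in an embedding space, checked against a per-concept threshold); it is not the Diffusers implementation, and the concept names, vectors, and thresholds are all made up for illustration.

```python
import math
from typing import Dict, List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def flagged_concepts(
    image_embedding: Sequence[float],
    concept_embeddings: Dict[str, Sequence[float]],
    thresholds: Dict[str, float],
) -> List[str]:
    """Return the concepts whose similarity exceeds their own threshold."""
    return [
        name
        for name, embedding in concept_embeddings.items()
        if cosine_similarity(image_embedding, embedding) > thresholds[name]
    ]


# Toy 3-dimensional embeddings; real embeddings are much larger.
concepts = {"concept_a": [1.0, 0.0, 0.0], "concept_b": [0.0, 1.0, 0.0]}
thresholds = {"concept_a": 0.8, "concept_b": 0.8}
image = [0.9, 0.1, 0.0]

print(flagged_concepts(image, concepts, thresholds))  # ['concept_a']
```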

## Training

**Training Data**

The model developers used the following dataset for training the model:

- LAION-2B (en) and subsets thereof (see next section)

**Training Procedure**

Stable Diffusion v1-5 is a latent diffusion model which combines an autoencoder with a diffusion model that is trained in the latent space of the autoencoder. During training,

- Images are encoded through an encoder, which turns images into latent representations. The autoencoder uses a relative downsampling factor of 8 and maps images of shape H x W x 3 to latents of shape H/f x W/f x 4

- Text prompts are encoded through a ViT-L/14 text-encoder.

- The non-pooled output of the text encoder is fed into the UNet backbone of the latent diffusion model via cross-attention.

- The loss is a reconstruction objective between the noise that was added to the latent and the prediction made by the UNet.
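The shape mapping in the first step above can be made concrete with a small calculation. The function name is illustrative; the downsampling factor of 8 and the 4 latent channels come from the description above.

```python
from typing import Tuple


def latent_shape(height: int, width: int, downsampling_factor: int = 8,
                 latent_channels: int = 4) -> Tuple[int, int, int]:
    """Map an H x W x 3 image shape to the H/f x W/f x 4 latent shape."""
    if height % downsampling_factor or width % downsampling_factor:
        raise ValueError("height and width must be divisible by the downsampling factor")
    return (height // downsampling_factor, width // downsampling_factor, latent_channels)


latents = latent_shape(512, 512)
print(latents)  # (64, 64, 4)

# Ratio of values in the image to values in the latent representation.
compression = (512 * 512 * 3) / (latents[0] * latents[1] * latents[2])
print(compression)  # 48.0
```

For a 512x512 image the diffusion model therefore operates on 48 times fewer values than the pixel representation contains.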

Currently six Stable Diffusion checkpoints are provided, which were trained as follows.

- [`stable-diffusion-v1-1`](https://huggingface.co/CompVis/stable-diffusion-v1-1): 237,000 steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en). 194,000 steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

- [`stable-diffusion-v1-2`](https://huggingface.co/CompVis/stable-diffusion-v1-2): Resumed from `stable-diffusion-v1-1`. 515,000 steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en, filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

- [`stable-diffusion-v1-3`](https://huggingface.co/CompVis/stable-diffusion-v1-3): Resumed from `stable-diffusion-v1-2` - 195,000 steps at resolution `512x512` on "laion-improved-aesthetics" and 10 % dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- [`stable-diffusion-v1-4`](https://huggingface.co/CompVis/stable-diffusion-v1-4) Resumed from `stable-diffusion-v1-2` - 225,000 steps at resolution `512x512` on "laion-aesthetics v2 5+" and 10 % dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- [`stable-diffusion-v1-5`](https://huggingface.co/runwayml/stable-diffusion-v1-5) Resumed from `stable-diffusion-v1-2` - 595,000 steps at resolution `512x512` on "laion-aesthetics v2 5+" and 10 % dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- [`stable-diffusion-inpainting`](https://huggingface.co/runwayml/stable-diffusion-inpainting) Resumed from `stable-diffusion-v1-5` - then 440,000 steps of inpainting training at resolution 512x512 on “laion-aesthetics v2 5+” and 10% dropping of the text-conditioning. For inpainting, the UNet has 5 additional input channels (4 for the encoded masked-image and 1 for the mask itself) whose weights were zero-initialized after restoring the non-inpainting checkpoint. During training, we generate synthetic masks and in 25% of cases mask everything.

- **Hardware:** 32 x 8 x A100 GPUs

- **Optimizer:** AdamW

- **Gradient Accumulations**: 2

- **Batch:** 32 x 8 x 2 x 4 = 2048

- **Learning rate:** warmup to 0.0001 for 10,000 steps and then kept constant
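The batch size and learning-rate entries above can be sketched as follows. Two points are assumptions rather than statements from the card: the factor of 4 is read as the per-GPU batch size, and the warmup is taken to be linear. The names are illustrative.

```python
# Factors from "Batch: 32 x 8 x 2 x 4 = 2048".
nodes = 32            # from "Hardware: 32 x 8 x A100 GPUs"
gpus_per_node = 8
grad_accumulation = 2
per_gpu_batch = 4     # assumption: the remaining factor is the per-GPU batch size

effective_batch = nodes * gpus_per_node * grad_accumulation * per_gpu_batch
print(effective_batch)  # 2048


def warmup_then_constant(step: int, peak_lr: float = 1e-4, warmup_steps: int = 10_000) -> float:
    """Learning rate that ramps up to peak_lr and then stays constant.

    Assumption: the ramp is linear; the card only says "warmup".
    """
    return peak_lr * min(1.0, step / warmup_steps)


for step in (0, 5_000, 10_000, 100_000):
    print(step, warmup_then_constant(step))
```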

## Evaluation Results

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0) and 50 PNDM/PLMS sampling steps show the relative improvements of the checkpoints:

Evaluated using 50 PLMS steps and 10000 random prompts from the COCO2017 validation set, evaluated at 512x512 resolution. Not optimized for FID scores.
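For context on what the guidance scale controls, the sketch below shows the usual classifier-free guidance combination: the unconditional noise prediction is pushed toward the text-conditioned one by a factor equal to the scale. This is a generic illustration on plain Python lists with made-up numbers, not code from the evaluation; the function name is hypothetical.

```python
from typing import List


def apply_guidance(noise_uncond: List[float], noise_text: List[float],
                   guidance_scale: float) -> List[float]:
    """Combine unconditional and text-conditioned noise predictions."""
    return [
        uncond + guidance_scale * (text - uncond)
        for uncond, text in zip(noise_uncond, noise_text)
    ]


# Made-up two-element "noise predictions" for illustration.
noise_uncond = [0.0, 1.0]
noise_text = [1.0, 3.0]

print(apply_guidance(noise_uncond, noise_text, 1.0))  # [1.0, 3.0]
print(apply_guidance(noise_uncond, noise_text, 7.0))  # [7.0, 15.0]
```

A scale of 1.0 reproduces the text-conditioned prediction, while larger scales extrapolate further away from the unconditional one.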

## Environmental Impact

**Stable Diffusion v1** **Estimated Emissions**

Based on that information, we estimate the following CO2 emissions using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700). The hardware, runtime, cloud provider, and compute region were utilized to estimate the carbon impact.

- **Hardware Type:** A100 PCIe 40GB

- **Hours used:** 150000

- **Cloud Provider:** AWS

- **Compute Region:** US-east

- **Carbon Emitted (Power consumption x Time x Carbon produced based on location of power grid):** 11250 kg CO2 eq.
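The formula in the last bullet (power consumption x time x carbon intensity of the grid) can be checked with a short calculation. The card does not state the per-GPU power draw or the grid's carbon intensity, so both are assumptions here: 0.25 kW is taken as a nominal figure for an A100 PCIe 40GB, and the intensity is back-solved from the reported total rather than taken from any source.

```python
# Sketch under stated assumptions; only HOURS and REPORTED_KG come from the card.

def carbon_emitted_kg(power_kw, hours, kg_co2_per_kwh):
    """Power consumption x time x carbon produced per kWh of the local grid."""
    return power_kw * hours * kg_co2_per_kwh


HOURS = 150_000          # "Hours used" from the card
REPORTED_KG = 11_250     # "Carbon Emitted" from the card
ASSUMED_POWER_KW = 0.25  # assumption: nominal 250 W per GPU, not stated in the card

energy_kwh = ASSUMED_POWER_KW * HOURS
# Grid intensity implied by the reported total under the assumed power draw.
implied_kg_per_kwh = REPORTED_KG / energy_kwh

if __name__ == "__main__":
    print(f"energy: {energy_kwh:.0f} kWh")
    print(f"implied intensity: {implied_kg_per_kwh:.2f} kg CO2 eq./kWh")
    print(f"total: {carbon_emitted_kg(ASSUMED_POWER_KW, HOURS, implied_kg_per_kwh):.0f} kg")
```

Under a different power assumption the implied intensity changes proportionally; the reported total is the only fixed quantity.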

## Citation

```bibtex

@InProceedings{Rombach_2022_CVPR,

author = {Rombach, Robin and Blattmann, Andreas and Lorenz, Dominik and Esser, Patrick and Ommer, Bj\"orn},

title = {High-Resolution Image Synthesis With Latent Diffusion Models},

booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},

month = {June},

year = {2022},

pages = {10684-10695}

}

```

*This model card was written by: Robin Rombach and Patrick Esser and is based on the [DALL-E Mini model card](https://huggingface.co/dalle-mini/dalle-mini).* | ff6dc79182f70d5525127385a73ba0ee |

jonatasgrosman/exp_w2v2t_pl_wavlm_s250 | jonatasgrosman | wavlm | 10 | 5 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['pl'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'pl'] | false | true | true | 439 | false | # exp_w2v2t_pl_wavlm_s250

Fine-tuned [microsoft/wavlm-large](https://huggingface.co/microsoft/wavlm-large) for speech recognition using the train split of [Common Voice 7.0 (pl)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.
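One way to verify the 16kHz requirement before transcription is to read the sample rate from the file header. The sketch below uses only Python's standard `wave` module and therefore handles uncompressed WAV files only; the helper name `is_16khz_wav` is hypothetical and not part of HuggingSound.

```python
import wave

REQUIRED_RATE_HZ = 16_000  # the sampling rate this model expects


def is_16khz_wav(path):
    """Return True if the WAV file at `path` is sampled at 16 kHz."""
    with wave.open(path, "rb") as wav_file:
        return wav_file.getframerate() == REQUIRED_RATE_HZ
```

Files that fail the check would need to be resampled with an audio tool of your choice before being passed to the model.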

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 2ae287b7aad1344a15917389c6575372 |

Manishkalra/finetuning-movie-sentiment-model-9000-samples | Manishkalra | distilbert | 13 | 7 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['imdb'] | null | 1 | 1 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,061 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-movie-sentiment-model-9000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4040

- Accuracy: 0.9178

- F1: 0.9155

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

### Framework versions

- Transformers 4.19.2

- Pytorch 1.11.0+cu113

- Datasets 2.2.2

- Tokenizers 0.12.1

| 7ef4c70555b92d4568f030df0ffc5331 |

gokuls/mobilebert_add_GLUE_Experiment_logit_kd_pretrain_rte | gokuls | mobilebert | 17 | 2 | transformers | 0 | text-classification | true | false | false | apache-2.0 | ['en'] | ['glue'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,629 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mobilebert_add_GLUE_Experiment_logit_kd_pretrain_rte

This model is a fine-tuned version of [gokuls/mobilebert_add_pre-training-complete](https://huggingface.co/gokuls/mobilebert_add_pre-training-complete) on the GLUE RTE dataset.

It achieves the following results on the evaluation set:

- Loss: nan

- Accuracy: 0.5271

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 128

- eval_batch_size: 128

- seed: 10

- distributed_type: multi-GPU

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0 | 1.0 | 20 | nan | 0.5271 |

| 0.0 | 2.0 | 40 | nan | 0.5271 |

| 0.0 | 3.0 | 60 | nan | 0.5271 |

| 0.0 | 4.0 | 80 | nan | 0.5271 |

| 0.0 | 5.0 | 100 | nan | 0.5271 |

| 0.0 | 6.0 | 120 | nan | 0.5271 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.14.0a0+410ce96

- Datasets 2.9.0

- Tokenizers 0.13.2

| d0cde83f8c26abb80591ae721ba50e2a |

Sahara/finetuning-sentiment-model-3000-samples | Sahara | distilbert | 13 | 12 | transformers | 0 | text-classification | true | false | false | apache-2.0 | null | ['imdb'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,055 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# finetuning-sentiment-model-3000-samples

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3322

- Accuracy: 0.8533

- F1: 0.8562

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

### Framework versions

- Transformers 4.20.1

- Pytorch 1.11.0+cu113

- Datasets 2.3.2

- Tokenizers 0.12.1

| 900f912994c36c1ea4886ea41a8f8ee4 |

Nadav/camembert-base-squad-fr | Nadav | camembert | 10 | 7 | transformers | 0 | question-answering | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['generated_from_trainer'] | true | true | true | 1,226 | false |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# camembert-base-squad-fr

This model is a fine-tuned version of [camembert-base](https://huggingface.co/camembert-base) on an unspecified dataset.

It achieves the following results on the evaluation set:

- Loss: 1.5182

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.2

- num_epochs: 2
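The interaction between `learning_rate`, `lr_scheduler_type: linear`, and `lr_scheduler_warmup_ratio` above can be sketched as a plain function. This is a minimal approximation, not the Trainer's code: the function name is hypothetical, rounding the warmup length up is an assumption, and the total of 7162 steps is the final step count reported for this run.

```python
import math


def linear_schedule_lr(step, base_lr, total_steps, warmup_ratio):
    """Linear warmup from 0 to base_lr, then linear decay back to 0."""
    # Assumption: the warmup length is the ratio of total steps, rounded up.
    warmup_steps = math.ceil(total_steps * warmup_ratio)
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


if __name__ == "__main__":
    # Hyperparameters from this card: 3e-05 peak, 0.2 warmup ratio, 7162 steps.
    for s in (0, 700, 1433, 3581, 7162):
        print(s, linear_schedule_lr(s, 3e-05, 7162, 0.2))
```

Under these assumptions the rate peaks partway through the first epoch and reaches zero at the last step.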

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.7504 | 1.0 | 3581 | 1.6470 |

| 1.4776 | 2.0 | 7162 | 1.5182 |

### Framework versions

- Transformers 4.24.0

- Pytorch 1.13.0+cu117

- Datasets 2.7.1

- Tokenizers 0.13.2

| 27076f2df421437249dcc32fb253bc30 |

jonatasgrosman/exp_w2v2r_fr_vp-100k_gender_male-10_female-0_s626 | jonatasgrosman | wav2vec2 | 10 | 3 | transformers | 0 | automatic-speech-recognition | true | false | false | apache-2.0 | ['fr'] | ['mozilla-foundation/common_voice_7_0'] | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | ['automatic-speech-recognition', 'fr'] | false | true | true | 499 | false | # exp_w2v2r_fr_vp-100k_gender_male-10_female-0_s626

Fine-tuned [facebook/wav2vec2-large-100k-voxpopuli](https://huggingface.co/facebook/wav2vec2-large-100k-voxpopuli) for speech recognition using the train split of [Common Voice 7.0 (fr)](https://huggingface.co/datasets/mozilla-foundation/common_voice_7_0).

When using this model, make sure that your speech input is sampled at 16kHz.

This model has been fine-tuned by the [HuggingSound](https://github.com/jonatasgrosman/huggingsound) tool.

| 64dffcb720500b36cba63de43180e27a |

karolill/nb-bert-finetuned-on-norec | karolill | bert | 8 | 4 | transformers | 0 | text-classification | true | false | false | mit | null | null | null | 0 | 0 | 0 | 0 | 0 | 0 | 0 | [] | false | true | true | 630 | false | # NB-BERT fine-tuned on NoReC

## Description