license: apache-2.0

size_categories:

- 1K<n<10K

dataset_info:

features:

- name: id

dtype: int32

- name: image

dtype: image

- name: sensor_type

dtype: string

- name: question_type

dtype: string

- name: question

dtype: string

- name: question_query

dtype: string

- name: answer

dtype: string

splits:

- name: train

num_bytes: 1455392605

num_examples: 6248

download_size: 903353168

dataset_size: 1455392605

configs:

- config_name: default

data_files:

- split: train

path: data/train-*

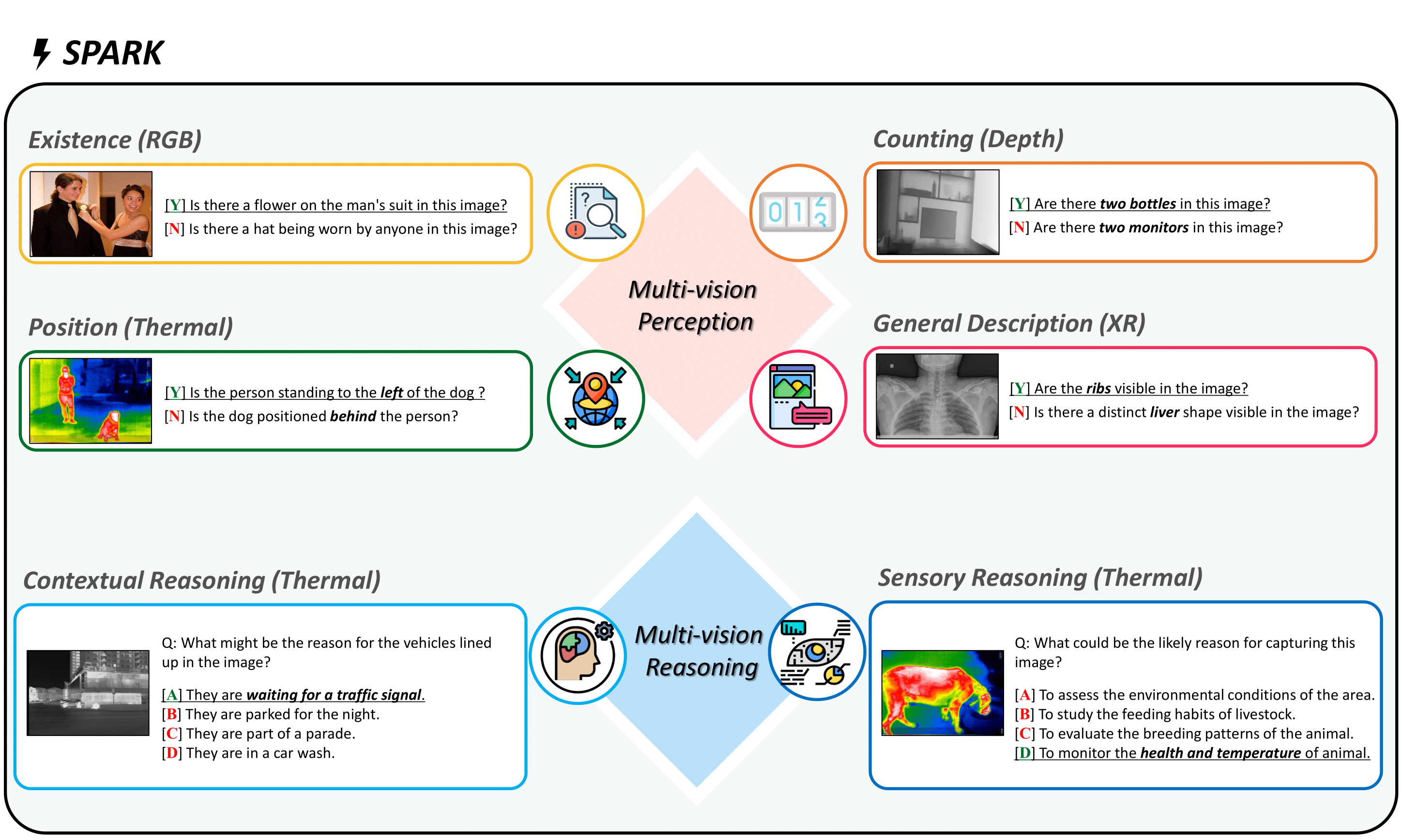

⚡ SPARK (multi-vision Sensor Perception And Reasoning benchmarK)

🌐 github | 🤗 Dataset | 📃 Paper

Dataset Details

SPARK can reduce the fundamental multi-vision sensor information gap between images and multi-vision sensors. We generated 6,248 vision-language test samples automatically to investigate multi-vision sensory perception and multi-vision sensory reasoning on physical sensor knowledge proficiency across different formats, covering different types of sensor-related questions.

Uses

you can easily download the dataset as follows:

from datasets import load_dataset

test_dataset = load_dataset("topyun/SPARK", split="train")

Additionally, we have provided two example codes for evaluation: Open Model(test.py) and Closed Model(test_closed_models.py). You can easily run them as shown below.

If you have 4 GPUs and want to run the experiment with llava-1.5-7b, you can do the following:

accelerate launch --config_file utils/ddp_accel_fp16.yaml \

--num_processes=4 \

test.py \

--batch_size 1 \

--model llava \

When running the closed model, make sure to insert your API KEY into the config.py file. If you have 1 GPU and want to run the experiment with gpt-4o, you can do the following:

accelerate launch --config_file utils/ddp_accel_fp16.yaml \

--num_processes=$n_gpu \

test_closed_models.py \

--batch_size 8 \

--model gpt \

--multiprocess True \

Tips

The evaluation method we've implemented simply checks whether 'A', 'B', 'C', 'D', 'yes', or 'no' appears at the beginning of the sentence. So, if the model you're evaluating provides unexpected answers (e.g., "'B'ased on ..." or "'C'onsidering ..."), you can resolve this by adding "Do not include any additional text." at the end of the prompt.

Source Data

Data Collection and Processing

These instructions are built from five public datasets: MS-COCO, M3FD, Dog&People, RGB-D scene dataset, and UNIFESP X-ray Body Part Classifier Competition dataset.

Citation

BibTeX:

@misc{yu2024sparkmultivisionsensorperception,

title={SPARK: Multi-Vision Sensor Perception and Reasoning Benchmark for Large-scale Vision-Language Models},

author={Youngjoon Yu and Sangyun Chung and Byung-Kwan Lee and Yong Man Ro},

year={2024},

eprint={2408.12114},

archivePrefix={arXiv},

primaryClass={cs.CV},

url={https://arxiv.org/abs/2408.12114},

}