| repo_name (string, 5-114 chars) | repo_url (string, 24-133 chars) | snapshot_id (string, 40 chars) | revision_id (string, 40 chars) | directory_id (string, 40 chars) | branch_name (string, 209 classes) | visit_date (timestamp[ns]) | revision_date (timestamp[ns]) | committer_date (timestamp[ns]) | github_id (int64, 9.83k-683M, nullable) | star_events_count (int64, 0-22.6k) | fork_events_count (int64, 0-4.15k) | gha_license_id (string, 17 classes) | gha_created_at (timestamp[ns]) | gha_updated_at (timestamp[ns]) | gha_pushed_at (timestamp[ns]) | gha_language (string, 115 classes) | files (list, 1-13.2k items) | num_files (int64, 1-13.2k) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

MrAhmedElsayed/python-lover | https://github.com/MrAhmedElsayed/python-lover | c7c60e7cbd55cd9e6f51255041a716fb7a27220c | ad720f80b8ae023109cc196ff525221f7e805571 | 28006d7806d492d088662d542aeb0e21c15b7aea | refs/heads/master | 2020-04-26T12:26:59.392412 | 2019-10-23T10:51:46 | 2019-10-23T10:51:46 | 173,549,625 | 1 | 0 | null | null | null | null | null | [

{
"alpha_fraction": 0.760617733001709,
"alphanum_fraction": 0.760617733001709,
"avg_line_length": 42.16666793823242,
"blob_id": "3c20c32ca2ff0612b79f9f5ab093aa09984f6d84",
"content_id": "8df83afca77a0349b74da3e2bee11a65b14a2455",
"detected_licenses": [],
"is_generated": false,
"is_vendor": false,
"language": "Text",
"length_bytes": 259,
"license_type": "no_license",
"max_line_length": 133,
"num_lines": 6,
"path": "/README.txt",
"repo_name": "MrAhmedElsayed/python-lover",
"src_encoding": "UTF-8",
"text": "Using IMAP (imapclient) to log in and access to your email (Gmail, Hotmail, Yahoo Mail) and select \"Sender\" then Delete all selected.\n\n#Note:\nyou have to unprotect your mail in email setting by using less protection. \n\nYou have idea Send me an email: ahmedsayed551991@gmail.com\n"
},

{
"alpha_fraction": 0.3456658124923706,
"alphanum_fraction": 0.37351107597351074,
"avg_line_length": 52.74787139892578,
"blob_id": "2fb853d69103e57bda450dc92be91916572d87dc",
"content_id": "1bac5a438f1df3f4e5c8ccfe288e59dc22be2118",
"detected_licenses": [],
"is_generated": false,
"is_vendor": false,
"language": "Python",
"length_bytes": 51463,
"license_type": "no_license",
"max_line_length": 218,
"num_lines": 940,
"path": "/stable_version.py",
"repo_name": "MrAhmedElsayed/python-lover",
"src_encoding": "UTF-8",
"text": "# -*- coding: utf-8 -*-\r\nimport time\r\n\r\nimport requests\r\nfrom imapclient import *\r\nfrom PyQt5 import QtCore, QtGui, QtWidgets\r\n\r\n\r\nclass Ui_Main_Window(object):\r\n def setupUi(self, Main_Window):\r\n Main_Window.setObjectName(\"Main_Window\")\r\n Main_Window.resize(840, 600)\r\n Main_Window.setStyleSheet(\"QToolTip\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid black;\\n\"\r\n \" background-color: #ffa02f;\\n\"\r\n \" padding: 1px;\\n\"\r\n \" border-radius: 3px;\\n\"\r\n \" opacity: 100;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QWidget\\n\"\r\n \"{\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" background-color: #323232;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTreeView, QListView\\n\"\r\n \"{\\n\"\r\n \" background-color: silver;\\n\"\r\n \" margin-left: 5px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QWidget:item:hover\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 1 #ca0619);\\n\"\r\n \" color: #000000;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QWidget:item:selected\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenuBar::item\\n\"\r\n \"{\\n\"\r\n \" background: transparent;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenuBar::item:selected\\n\"\r\n \"{\\n\"\r\n \" background: transparent;\\n\"\r\n \" border: 1px solid #ffaa00;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenuBar::item:pressed\\n\"\r\n \"{\\n\"\r\n \" background: #444;\\n\"\r\n \" border: 1px solid #000;\\n\"\r\n \" background-color: QLinearGradient(\\n\"\r\n \" x1:0, y1:0,\\n\"\r\n \" x2:0, y2:1,\\n\"\r\n \" stop:1 #212121,\\n\"\r\n \" stop:0.4 #343434/*,\\n\"\r\n \" stop:0.2 #343434,\\n\"\r\n \" stop:0.1 #ffaa00*/\\n\"\r\n \" );\\n\"\r\n \" margin-bottom:-1px;\\n\"\r\n \" padding-bottom:1px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenu\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid #000;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenu::item\\n\"\r\n 
\"{\\n\"\r\n \" padding: 2px 20px 2px 20px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenu::item:selected\\n\"\r\n \"{\\n\"\r\n \" color: #000000;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QWidget:disabled\\n\"\r\n \"{\\n\"\r\n \" color: #808080;\\n\"\r\n \" background-color: #323232;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QAbstractItemView\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #4d4d4d, stop: 0.1 #646464, stop: 1 #5d5d5d);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QWidget:focus\\n\"\r\n \"{\\n\"\r\n \" /*border: 1px solid darkgray;*/\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QLineEdit\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #4d4d4d, stop: 0 #646464, stop: 1 #5d5d5d);\\n\"\r\n \" padding: 1px;\\n\"\r\n \" border-style: solid;\\n\"\r\n \" border: 1px solid #1e1e1e;\\n\"\r\n \" border-radius: 5;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QPushButton\\n\"\r\n \"{\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #565656, stop: 0.1 #525252, stop: 0.5 #4e4e4e, stop: 0.9 #4a4a4a, stop: 1 #464646);\\n\"\r\n \" border-width: 1px;\\n\"\r\n \" border-color: #1e1e1e;\\n\"\r\n \" border-style: solid;\\n\"\r\n \" border-radius: 6;\\n\"\r\n \" padding: 3px;\\n\"\r\n \" font-size: 12px;\\n\"\r\n \" padding-left: 5px;\\n\"\r\n \" padding-right: 5px;\\n\"\r\n \" min-width: 40px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QPushButton:pressed\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #2d2d2d, stop: 0.1 #2b2b2b, stop: 0.5 #292929, stop: 0.9 #282828, stop: 1 #252525);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QComboBox\\n\"\r\n \"{\\n\"\r\n \" selection-background-color: #ffaa00;\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #565656, stop: 0.1 #525252, stop: 0.5 #4e4e4e, stop: 0.9 #4a4a4a, stop: 1 #464646);\\n\"\r\n \" border-style: solid;\\n\"\r\n \" border: 1px solid 
#1e1e1e;\\n\"\r\n \" border-radius: 5;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QComboBox:hover,QPushButton:hover\\n\"\r\n \"{\\n\"\r\n \" border: 2px solid QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"\\n\"\r\n \"QComboBox:on\\n\"\r\n \"{\\n\"\r\n \" padding-top: 3px;\\n\"\r\n \" padding-left: 4px;\\n\"\r\n \" background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #2d2d2d, stop: 0.1 #2b2b2b, stop: 0.5 #292929, stop: 0.9 #282828, stop: 1 #252525);\\n\"\r\n \" selection-background-color: #ffaa00;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QComboBox QAbstractItemView\\n\"\r\n \"{\\n\"\r\n \" border: 2px solid darkgray;\\n\"\r\n \" selection-background-color: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QComboBox::drop-down\\n\"\r\n \"{\\n\"\r\n \" subcontrol-origin: padding;\\n\"\r\n \" subcontrol-position: top right;\\n\"\r\n \" width: 15px;\\n\"\r\n \"\\n\"\r\n \" border-left-width: 0px;\\n\"\r\n \" border-left-color: darkgray;\\n\"\r\n \" border-left-style: solid; /* just a single line */\\n\"\r\n \" border-top-right-radius: 3px; /* same radius as the QComboBox */\\n\"\r\n \" border-bottom-right-radius: 3px;\\n\"\r\n \" }\\n\"\r\n \"\\n\"\r\n \"QComboBox::down-arrow\\n\"\r\n \"{\\n\"\r\n \" image: url(:/dark_orange/img/down_arrow.png);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QGroupBox\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid darkgray;\\n\"\r\n \" margin-top: 10px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QGroupBox:focus\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid darkgray;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTextEdit:focus\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid darkgray;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar:horizontal {\\n\"\r\n \" border: 1px solid #222222;\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0.0 #121212, stop: 0.2 #282828, stop: 1 #484848);\\n\"\r\n \" height: 7px;\\n\"\r\n 
\" margin: 0px 16px 0 16px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::handle:horizontal\\n\"\r\n \"{\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 1, y2: 0, stop: 0 #ffa02f, stop: 0.5 #d7801a, stop: 1 #ffa02f);\\n\"\r\n \" min-height: 20px;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::add-line:horizontal {\\n\"\r\n \" border: 1px solid #1b1b19;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 1, y2: 0, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \" width: 14px;\\n\"\r\n \" subcontrol-position: right;\\n\"\r\n \" subcontrol-origin: margin;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::sub-line:horizontal {\\n\"\r\n \" border: 1px solid #1b1b19;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 1, y2: 0, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \" width: 14px;\\n\"\r\n \" subcontrol-position: left;\\n\"\r\n \" subcontrol-origin: margin;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::right-arrow:horizontal, QScrollBar::left-arrow:horizontal\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid black;\\n\"\r\n \" width: 1px;\\n\"\r\n \" height: 1px;\\n\"\r\n \" background: white;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::add-page:horizontal, QScrollBar::sub-page:horizontal\\n\"\r\n \"{\\n\"\r\n \" background: none;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar:vertical\\n\"\r\n \"{\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 1, y2: 0, stop: 0.0 #121212, stop: 0.2 #282828, stop: 1 #484848);\\n\"\r\n \" width: 7px;\\n\"\r\n \" margin: 16px 0 16px 0;\\n\"\r\n \" border: 1px solid #222222;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::handle:vertical\\n\"\r\n \"{\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 0.5 #d7801a, stop: 1 #ffa02f);\\n\"\r\n \" min-height: 20px;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::add-line:vertical\\n\"\r\n 
\"{\\n\"\r\n \" border: 1px solid #1b1b19;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #ffa02f, stop: 1 #d7801a);\\n\"\r\n \" height: 14px;\\n\"\r\n \" subcontrol-position: bottom;\\n\"\r\n \" subcontrol-origin: margin;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::sub-line:vertical\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid #1b1b19;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0 #d7801a, stop: 1 #ffa02f);\\n\"\r\n \" height: 14px;\\n\"\r\n \" subcontrol-position: top;\\n\"\r\n \" subcontrol-origin: margin;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QScrollBar::up-arrow:vertical, QScrollBar::down-arrow:vertical\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid black;\\n\"\r\n \" width: 1px;\\n\"\r\n \" height: 1px;\\n\"\r\n \" background: white;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"\\n\"\r\n \"QScrollBar::add-page:vertical, QScrollBar::sub-page:vertical\\n\"\r\n \"{\\n\"\r\n \" background: none;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTextEdit\\n\"\r\n \"{\\n\"\r\n \" background-color: #242424;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QPlainTextEdit\\n\"\r\n \"{\\n\"\r\n \" background-color: #242424;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QHeaderView::section\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #616161, stop: 0.5 #505050, stop: 0.6 #434343, stop:1 #656565);\\n\"\r\n \" color: white;\\n\"\r\n \" padding-left: 4px;\\n\"\r\n \" border: 1px solid #6c6c6c;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QCheckBox:disabled\\n\"\r\n \"{\\n\"\r\n \"color: #414141;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QDockWidget::title\\n\"\r\n \"{\\n\"\r\n \" text-align: center;\\n\"\r\n \" spacing: 3px; /* spacing between items in the tool bar */\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #323232, stop: 0.5 #242424, stop:1 #323232);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QDockWidget::close-button, 
QDockWidget::float-button\\n\"\r\n \"{\\n\"\r\n \" text-align: center;\\n\"\r\n \" spacing: 1px; /* spacing between items in the tool bar */\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #323232, stop: 0.5 #242424, stop:1 #323232);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QDockWidget::close-button:hover, QDockWidget::float-button:hover\\n\"\r\n \"{\\n\"\r\n \" background: #242424;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QDockWidget::close-button:pressed, QDockWidget::float-button:pressed\\n\"\r\n \"{\\n\"\r\n \" padding: 1px -1px -1px 1px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMainWindow::separator\\n\"\r\n \"{\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #161616, stop: 0.5 #151515, stop: 0.6 #212121, stop:1 #343434);\\n\"\r\n \" color: white;\\n\"\r\n \" padding-left: 4px;\\n\"\r\n \" border: 1px solid #4c4c4c;\\n\"\r\n \" spacing: 3px; /* spacing between items in the tool bar */\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMainWindow::separator:hover\\n\"\r\n \"{\\n\"\r\n \"\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #d7801a, stop:0.5 #b56c17 stop:1 #ffa02f);\\n\"\r\n \" color: white;\\n\"\r\n \" padding-left: 4px;\\n\"\r\n \" border: 1px solid #6c6c6c;\\n\"\r\n \" spacing: 3px; /* spacing between items in the tool bar */\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QToolBar::handle\\n\"\r\n \"{\\n\"\r\n \" spacing: 3px; /* spacing between items in the tool bar */\\n\"\r\n \" background: url(:/dark_orange/img/handle.png);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QMenu::separator\\n\"\r\n \"{\\n\"\r\n \" height: 2px;\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:0 #161616, stop: 0.5 #151515, stop: 0.6 #212121, stop:1 #343434);\\n\"\r\n \" color: white;\\n\"\r\n \" padding-left: 4px;\\n\"\r\n \" margin-left: 10px;\\n\"\r\n \" margin-right: 5px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QProgressBar\\n\"\r\n \"{\\n\"\r\n \" border: 2px solid grey;\\n\"\r\n \" border-radius: 
5px;\\n\"\r\n \" text-align: center;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QProgressBar::chunk\\n\"\r\n \"{\\n\"\r\n \" background-color: #d7801a;\\n\"\r\n \" width: 2.15px;\\n\"\r\n \" margin: 0.5px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab {\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" border: 1px solid #444;\\n\"\r\n \" border-bottom-style: none;\\n\"\r\n \" background-color: #323232;\\n\"\r\n \" padding-left: 10px;\\n\"\r\n \" padding-right: 10px;\\n\"\r\n \" padding-top: 3px;\\n\"\r\n \" padding-bottom: 2px;\\n\"\r\n \" margin-right: -1px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabWidget::pane {\\n\"\r\n \" border: 1px solid #444;\\n\"\r\n \" top: 1px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab:last\\n\"\r\n \"{\\n\"\r\n \" margin-right: 0; /* the last selected tab has nothing to overlap with on the right */\\n\"\r\n \" border-top-right-radius: 3px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab:first:!selected\\n\"\r\n \"{\\n\"\r\n \" margin-left: 0px; /* the last selected tab has nothing to overlap with on the right */\\n\"\r\n \"\\n\"\r\n \"\\n\"\r\n \" border-top-left-radius: 3px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab:!selected\\n\"\r\n \"{\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" border-bottom-style: solid;\\n\"\r\n \" margin-top: 3px;\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:1 #212121, stop:.4 #343434);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab:selected\\n\"\r\n \"{\\n\"\r\n \" border-top-left-radius: 3px;\\n\"\r\n \" border-top-right-radius: 3px;\\n\"\r\n \" margin-bottom: 0px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QTabBar::tab:!selected:hover\\n\"\r\n \"{\\n\"\r\n \" /*border-top: 2px solid #ffaa00;\\n\"\r\n \" padding-bottom: 3px;*/\\n\"\r\n \" border-top-left-radius: 3px;\\n\"\r\n \" border-top-right-radius: 3px;\\n\"\r\n \" background-color: QLinearGradient(x1:0, y1:0, x2:0, y2:1, stop:1 #212121, stop:0.4 #343434, stop:0.2 #343434, stop:0.1 #ffaa00);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n 
\"QRadioButton::indicator:checked, QRadioButton::indicator:unchecked{\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" background-color: #323232;\\n\"\r\n \" border: 1px solid #b1b1b1;\\n\"\r\n \" border-radius: 6px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QRadioButton::indicator:checked\\n\"\r\n \"{\\n\"\r\n \" background-color: qradialgradient(\\n\"\r\n \" cx: 0.5, cy: 0.5,\\n\"\r\n \" fx: 0.5, fy: 0.5,\\n\"\r\n \" radius: 1.0,\\n\"\r\n \" stop: 0.25 #ffaa00,\\n\"\r\n \" stop: 0.3 #323232\\n\"\r\n \" );\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QCheckBox::indicator{\\n\"\r\n \" color: #b1b1b1;\\n\"\r\n \" background-color: #323232;\\n\"\r\n \" border: 1px solid #b1b1b1;\\n\"\r\n \" width: 9px;\\n\"\r\n \" height: 9px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QRadioButton::indicator\\n\"\r\n \"{\\n\"\r\n \" border-radius: 6px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QRadioButton::indicator:hover, QCheckBox::indicator:hover\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid #ffaa00;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QCheckBox::indicator:checked\\n\"\r\n \"{\\n\"\r\n \" image:url(:/dark_orange/img/checkbox.png);\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QCheckBox::indicator:disabled, QRadioButton::indicator:disabled\\n\"\r\n \"{\\n\"\r\n \" border: 1px solid #444;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"\\n\"\r\n \"QSlider::groove:horizontal {\\n\"\r\n \" border: 1px solid #3A3939;\\n\"\r\n \" height: 8px;\\n\"\r\n \" background: #201F1F;\\n\"\r\n \" margin: 2px 0;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QSlider::handle:horizontal {\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1,\\n\"\r\n \" stop: 0.0 silver, stop: 0.2 #a8a8a8, stop: 1 #727272);\\n\"\r\n \" border: 1px solid #3A3939;\\n\"\r\n \" width: 14px;\\n\"\r\n \" height: 14px;\\n\"\r\n \" margin: -4px 0;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QSlider::groove:vertical {\\n\"\r\n \" border: 1px solid #3A3939;\\n\"\r\n \" width: 8px;\\n\"\r\n \" background: #201F1F;\\n\"\r\n \" 
margin: 0 0px;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QSlider::handle:vertical {\\n\"\r\n \" background: QLinearGradient( x1: 0, y1: 0, x2: 0, y2: 1, stop: 0.0 silver,\\n\"\r\n \" stop: 0.2 #a8a8a8, stop: 1 #727272);\\n\"\r\n \" border: 1px solid #3A3939;\\n\"\r\n \" width: 14px;\\n\"\r\n \" height: 14px;\\n\"\r\n \" margin: 0 -4px;\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \"}\\n\"\r\n \"\\n\"\r\n \"QAbstractSpinBox {\\n\"\r\n \" padding-top: 2px;\\n\"\r\n \" padding-bottom: 2px;\\n\"\r\n \" border: 1px solid darkgray;\\n\"\r\n \"\\n\"\r\n \" border-radius: 2px;\\n\"\r\n \" min-width: 50px;\\n\"\r\n \"}\")\r\n\r\n self.centralwidget = QtWidgets.QWidget(Main_Window)\r\n self.centralwidget.setObjectName(\"centralwidget\")\r\n\r\n self.frame = QtWidgets.QFrame(self.centralwidget)\r\n self.frame.setGeometry(QtCore.QRect(10, 0, 821, 591))\r\n self.frame.setFrameShape(QtWidgets.QFrame.StyledPanel)\r\n self.frame.setFrameShadow(QtWidgets.QFrame.Raised)\r\n self.frame.setObjectName(\"frame\")\r\n\r\n # ============================================= LABELS ===================================================\r\n self.label_email = QtWidgets.QLabel(self.frame)\r\n self.label_email.setGeometry(QtCore.QRect(50, 20, 61, 21))\r\n font = QtGui.QFont()\r\n font.setPointSize(10)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.label_email.setFont(font)\r\n self.label_email.setObjectName(\"label_email\")\r\n\r\n self.label_password = QtWidgets.QLabel(self.frame)\r\n self.label_password.setGeometry(QtCore.QRect(340, 20, 101, 21))\r\n font = QtGui.QFont()\r\n font.setPointSize(9)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.label_password.setFont(font)\r\n self.label_password.setObjectName(\"label_password\")\r\n\r\n self.label_mailer = QtWidgets.QLabel(self.frame)\r\n self.label_mailer.setGeometry(QtCore.QRect(50, 90, 91, 31))\r\n font = QtGui.QFont()\r\n font.setPointSize(12)\r\n self.label_mailer.setFont(font)\r\n 
self.label_mailer.setObjectName(\"label_mailer\")\r\n\r\n self.label_emailslistname = QtWidgets.QLabel(self.frame)\r\n self.label_emailslistname.setGeometry(QtCore.QRect(60, 170, 171, 31))\r\n font = QtGui.QFont()\r\n font.setPointSize(12)\r\n self.label_emailslistname.setFont(font)\r\n self.label_emailslistname.setObjectName(\"label_emailslistname\")\r\n\r\n self.label_mailinfo = QtWidgets.QLabel(self.frame)\r\n self.label_mailinfo.setGeometry(QtCore.QRect(640, 200, 141, 221))\r\n font = QtGui.QFont()\r\n font.setFamily(\"Times New Roman\")\r\n font.setPointSize(10)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.label_mailinfo.setFont(font)\r\n self.label_mailinfo.setAutoFillBackground(False)\r\n self.label_mailinfo.setFrameShape(QtWidgets.QFrame.NoFrame)\r\n self.label_mailinfo.setScaledContents(False)\r\n self.label_mailinfo.setAlignment(QtCore.Qt.AlignLeading | QtCore.Qt.AlignLeft | QtCore.Qt.AlignTop)\r\n self.label_mailinfo.setWordWrap(True)\r\n self.label_mailinfo.setObjectName(\"label_mailinfo\")\r\n\r\n self.label_emailconfirmed = QtWidgets.QLabel(self.frame)\r\n self.label_emailconfirmed.setGeometry(QtCore.QRect(640, 40, 121, 31))\r\n font = QtGui.QFont()\r\n font.setPointSize(9)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.label_emailconfirmed.setFont(font)\r\n self.label_emailconfirmed.setObjectName(\"label_emailconfirmed\")\r\n\r\n self.label_progress = QtWidgets.QLabel(self.frame)\r\n self.label_progress.setGeometry(QtCore.QRect(60, 460, 71, 21))\r\n font = QtGui.QFont()\r\n font.setPointSize(12)\r\n self.label_progress.setFont(font)\r\n self.label_progress.setObjectName(\"label_progress\")\r\n\r\n # ============================================= LINE EDIT ===================================================\r\n self.lineedit_mailer = QtWidgets.QLineEdit(self.frame)\r\n self.lineedit_mailer.setGeometry(QtCore.QRect(50, 120, 441, 31))\r\n font = QtGui.QFont()\r\n font.setFamily(\"Times New Roman\")\r\n font.setPointSize(10)\r\n 
font.setBold(True)\r\n font.setWeight(75)\r\n self.lineedit_mailer.setFont(font)\r\n self.lineedit_mailer.setObjectName(\"lineedit_mailer\")\r\n\r\n self.lineedit_mailer.setText(\"noreply@medium.com\")\r\n # self.lineedit_mailer.setPlaceholderText(\"Enter email you wish delete it's messages\")\r\n self.lineedit_mailer.setDisabled(True)\r\n\r\n self.lineedit_password = QtWidgets.QLineEdit(self.frame)\r\n self.lineedit_password.setGeometry(QtCore.QRect(340, 40, 201, 31))\r\n font = QtGui.QFont()\r\n font.setFamily(\"Times New Roman\")\r\n font.setPointSize(11)\r\n font.setBold(True)\r\n font.setItalic(False)\r\n font.setWeight(75)\r\n self.lineedit_password.setFont(font)\r\n self.lineedit_password.setObjectName(\"lineedit_password\")\r\n self.lineedit_password.setPlaceholderText(\"Enter your mail password\")\r\n\r\n self.lineedit_yourmail = QtWidgets.QLineEdit(self.frame)\r\n self.lineedit_yourmail.setGeometry(QtCore.QRect(50, 40, 271, 31))\r\n font = QtGui.QFont()\r\n font.setFamily(\"Times New Roman\")\r\n font.setPointSize(11)\r\n font.setBold(True)\r\n font.setItalic(False)\r\n font.setWeight(75)\r\n self.lineedit_yourmail.setFont(font)\r\n self.lineedit_yourmail.setObjectName(\"lineedit_yourmail\")\r\n self.lineedit_yourmail.setPlaceholderText(\"Enter your email\")\r\n\r\n # ============================================= BUTTON ===================================================\r\n self.button_addmail = QtWidgets.QPushButton(self.frame)\r\n self.button_addmail.setGeometry(QtCore.QRect(510, 120, 101, 31))\r\n self.button_addmail.setObjectName(\"button_addmail\")\r\n self.button_addmail.clicked.connect(self.add_mail)\r\n self.button_addmail.setDisabled(True)\r\n\r\n self.button_exit = QtWidgets.QPushButton(self.frame)\r\n self.button_exit.setGeometry(QtCore.QRect(290, 540, 101, 31))\r\n self.button_exit.setObjectName(\"button_exit\")\r\n self.button_exit.clicked.connect(self.close_app_exit)\r\n\r\n self.button_delete = QtWidgets.QPushButton(self.frame)\r\n 
self.button_delete.setGeometry(QtCore.QRect(640, 430, 141, 81))\r\n font = QtGui.QFont()\r\n font.setPointSize(-1)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.button_delete.setFont(font)\r\n self.button_delete.setObjectName(\"button_delete\")\r\n self.button_delete.clicked.connect(self.delete_messages)\r\n self.button_delete.setDisabled(True)\r\n\r\n self.button_login = QtWidgets.QPushButton(self.frame)\r\n self.button_login.setGeometry(QtCore.QRect(550, 40, 61, 31))\r\n self.button_login.setObjectName(\"button_login\")\r\n self.button_login.clicked.connect(self.login)\r\n\r\n # ========================================== PROGRESS BAR =================================================\r\n self.progressbar = QtWidgets.QProgressBar(self.frame)\r\n self.progressbar.setGeometry(QtCore.QRect(60, 490, 561, 23))\r\n self.progressbar.setMinimum(1)\r\n self.progressbar.setProperty(\"value\", 0)\r\n self.progressbar.setObjectName(\"progressbar\")\r\n\r\n # ============================================= TEXT BROWSER =================================================\r\n self.textBrowser = QtWidgets.QTextBrowser(self.frame)\r\n self.textBrowser.setGeometry(QtCore.QRect(55, 201, 561, 221))\r\n font = QtGui.QFont()\r\n font.setFamily(\"Times New Roman\")\r\n font.setPointSize(11)\r\n font.setBold(True)\r\n font.setWeight(75)\r\n self.textBrowser.setFont(font)\r\n self.textBrowser.setObjectName(\"textBrowser\")\r\n\r\n Main_Window.setCentralWidget(self.centralwidget)\r\n\r\n self.retranslateUi(Main_Window)\r\n QtCore.QMetaObject.connectSlotsByName(Main_Window)\r\n\r\n self.deleted_mailes = []\r\n print(\"deleted_mailes list\", self.deleted_mailes)\r\n\r\n imaplib._MAXLINE = 1000000\r\n\r\n # ============================================= FUNCTIONS =================================================\r\n # TODO: ADD CLEAR TEXT BROWSE BUTTON & BUTTONS ICONS\r\n\r\n # --------------------New--------------------\r\n def auto_detect_mail(self):\r\n # auto detect mail type (yahoo, 
Gmail, hotmail)\r\n # while loop\r\n if '@yahoo.com' in self.lineedit_yourmail.text():\r\n # print(\"auto_detect_mail(self): yahoo mail\")\r\n return 'yahoo'\r\n\r\n elif '@gmail.com' in self.lineedit_yourmail.text():\r\n print(\"auto_detect_mail(self): Gmail\")\r\n return 'gmail'\r\n\r\n elif '@hotmail.com' in self.lineedit_yourmail.text():\r\n # print(\"auto_detect_mail(self): hotmail\")\r\n return \"hotmail\"\r\n else:\r\n return False\r\n\r\n # --------------------New--------------------\r\n def internet_connection(self):\r\n # check internet connection\r\n url = 'http://www.google.com/'\r\n timeout = 5\r\n\r\n try:\r\n requests.get(url, timeout=timeout)\r\n return True\r\n\r\n except requests.ConnectionError:\r\n self.label_emailconfirmed.setText(\"No internet\")\r\n return False\r\n\r\n # --------------------New--------------------\r\n def login(self):\r\n try:\r\n self.lineedit_mailer.setDisabled(True)\r\n self.button_addmail.setDisabled(True)\r\n self.button_delete.setDisabled(True)\r\n\r\n # login email using address and password\r\n # yahoo mail\r\n if self.internet_connection() and self.auto_detect_mail() is 'yahoo':\r\n self.label_mailinfo.setText(\"please unprotect your email goto link yahoo imap activate\")\r\n self.yahoo_e_mail = self.lineedit_yourmail.text()\r\n self.yahoo_password = self.lineedit_password.text()\r\n self.yahoo_imap_Object = imapclient.IMAPClient('imap.mail.yahoo.com', ssl=True, port=\"993\")\r\n self.yahoo_imap_Object.login(self.yahoo_e_mail, self.yahoo_password)\r\n self.label_emailconfirmed.setText(\"yahoo connected\")\r\n print(\"you have internet connection and yahoo register\")\r\n\r\n self.lineedit_mailer.setDisabled(False)\r\n self.button_addmail.setDisabled(False)\r\n self.button_delete.setDisabled(False)\r\n return True\r\n\r\n # Gmail\r\n elif self.internet_connection() and self.auto_detect_mail() is 'gmail':\r\n self.label_mailinfo.setText(\"please unprotect your email goto link gmail imap activate\")\r\n 
self.gmail_e_mail = self.lineedit_yourmail.text()\r\n self.gmail_password = self.lineedit_password.text()\r\n self.gmail_imap_Object = imapclient.IMAPClient('imap.gmail.com', ssl=True)\r\n self.gmail_imap_Object.login(self.gmail_e_mail, self.gmail_password)\r\n self.label_emailconfirmed.setText(\"Gmail connected\")\r\n print(\"you have internet connection and 'gmail' register\")\r\n\r\n self.lineedit_mailer.setDisabled(False)\r\n self.button_addmail.setDisabled(False)\r\n self.button_delete.setDisabled(False)\r\n return True\r\n\r\n # Hotmail\r\n elif self.internet_connection() and self.auto_detect_mail() is 'hotmail':\r\n self.label_mailinfo.setText(\"please unprotect your email goto link hotmail imap activate\")\r\n self.hotmail = self.lineedit_yourmail.text()\r\n self.hotmail_password = self.lineedit_password.text()\r\n\r\n Server_name = \"outlook.office365.com\"\r\n self.hotmail_imap_Object = imapclient.IMAPClient(Server_name, ssl=True, port=993)\r\n self.hotmail_imap_Object.login(self.hotmail, self.hotmail_password)\r\n self.label_emailconfirmed.setText(\"hotmail connected\")\r\n print(\"you have internet connection and hotmail register\")\r\n\r\n self.lineedit_mailer.setDisabled(False)\r\n self.button_addmail.setDisabled(False)\r\n self.button_delete.setDisabled(False)\r\n return True\r\n\r\n else:\r\n if self.internet_connection():\r\n print(\"Unknown email Or wrong email\")\r\n return False\r\n\r\n except:\r\n self.label_mailinfo.setText(\"Wrong mail or password\")\r\n self.label_emailconfirmed.setText(\"Error\")\r\n print(\"Unknown email\")\r\n\r\n # --------------------New--------------------\r\n def add_mail(self): # so complicated\r\n # check if the mail have record messages if it haven't don't add\r\n\r\n # take the all messages from sender and store it in dictionary {key(sender): value(count)}.\r\n if self.lineedit_mailer.text() not in self.deleted_mailes and \"@\" in self.lineedit_mailer.text():\r\n 
self.deleted_mailes.append(self.lineedit_mailer.text())\r\n self.lineedit_mailer.clear()\r\n else:\r\n self.label_mailinfo.setText(\"Repeated Mail or wrong mailer\")\r\n return False\r\n try:\r\n # login email using address and password\r\n # yahoo mail\r\n if self.internet_connection() and self.auto_detect_mail() is 'yahoo':\r\n\r\n self.yahoo_imap_Object.select_folder('INBOX', readonly=False)\r\n for link in self.deleted_mailes:\r\n search_address = f\"FROM {link}\"\r\n self.yahoo_UIDs = self.yahoo_imap_Object.search(search_address)\r\n print(self.yahoo_UIDs)\r\n index = len(self.deleted_mailes) - (len(self.deleted_mailes) + 1)\r\n\r\n for i in self.yahoo_UIDs:\r\n self.content = f\"mail from: {self.deleted_mailes[index]}, messages count = {len(self.yahoo_UIDs)}\"\r\n print(self.content)\r\n\r\n self.textBrowser.append(str(self.content))\r\n\r\n # Gmail\r\n elif self.internet_connection() and self.auto_detect_mail() is 'gmail': # CONFIRMED FUNCTION\r\n self.gmail_imap_Object.select_folder('INBOX', readonly=False)\r\n for link in self.deleted_mailes:\r\n search = \"FROM {}\".format(link)\r\n self.gmail_UIDs = self.gmail_imap_Object.search(search)\r\n for i in self.gmail_UIDs:\r\n self.content = f\"FROM: {self.deleted_mailes[-1]}, MESSAGES = {len(self.gmail_UIDs)}\"\r\n\r\n self.textBrowser.append(str(self.content))\r\n\r\n # Hotmail\r\n elif self.internet_connection() and self.auto_detect_mail() is 'hotmail':\r\n\r\n self.hotmail_imap_Object.select_folder('INBOX', readonly=False)\r\n for link in self.deleted_mailes:\r\n search = \"FROM {}\".format(link)\r\n self.hotmail_UIDs = self.hotmail_imap_Object.search(search)\r\n print(self.hotmail_UIDs)\r\n\r\n for i in self.hotmail_UIDs:\r\n self.content = f\"FROM: {self.deleted_mailes[-1]}, MESSAGES = {len(self.hotmail_UIDs)}\"\r\n\r\n print(self.deleted_mailes)\r\n self.textBrowser.append(str(self.content))\r\n\r\n else:\r\n if self.internet_connection():\r\n self.label_mailinfo.setText(\"Write Valid Mail\")\r\n 
print(\"Unknown email Or wrong email\")\r\n\r\n except:\r\n # self.label_mailinfo.setText(\"please select\\nyour email type\")\r\n # self.label_emailconfirmed.setText(\"choose mail\")\r\n print(\"Unknown email\")\r\n return False\r\n\r\n # --------------------New--------------------\r\n def delete_messages(self): # take mails in the list and delete it if it isn't empty\r\n # connect progressbar to delete messages and delete all messages stored in textBrowser\r\n if len(self.deleted_mailes) > 0:\r\n if self.auto_detect_mail() is 'gmail':\r\n for link in self.deleted_mailes:\r\n from_search = \"FROM {}\".format(link)\r\n self.after_gmail_UIDs = self.gmail_imap_Object.search(from_search)\r\n for i in self.after_gmail_UIDs:\r\n self.gmail_imap_Object.delete_messages(i)\r\n self.label_mailinfo.setText(\"Process Finished\")\r\n return True\r\n\r\n elif self.auto_detect_mail() is 'yahoo':\r\n for link in self.deleted_mailes:\r\n from_search = \"FROM {}\".format(link)\r\n self.after_yahoo_UIDs = self.yahoo_imap_Object.search(from_search)\r\n for i in self.after_yahoo_UIDs:\r\n self.yahoo_imap_Object.delete_messages(i)\r\n self.label_mailinfo.setText(\"Process Finished\")\r\n return True\r\n\r\n elif self.auto_detect_mail() is 'hotmail':\r\n for link in self.deleted_mailes:\r\n from_search = \"FROM {}\".format(link)\r\n self.after_hotmail_UIDs = self.hotmail_imap_Object.search(from_search)\r\n for i in self.after_hotmail_UIDs:\r\n self.hotmail_imap_Object.delete_messages(i)\r\n self.label_mailinfo.setText(\"Process Finished\")\r\n return True\r\n\r\n else:\r\n # NOTHING TO DELETE\r\n return False\r\n\r\n # TODO: LOGOUT BEFORE YOY CLOSE THE APP\r\n def close_app_exit(self):\r\n # try to logout Before EXIT\r\n # ---------------\r\n\r\n # EXIT\r\n self.button_exit.clicked.connect(sys.exit(0))\r\n\r\n # TODO: TRY TO ADD ARABIC EDITION , SELECT LANGUAGE\r\n def retranslateUi(self, Main_Window):\r\n _translate = QtCore.QCoreApplication.translate\r\n 
Main_Window.setWindowTitle(_translate(\"Main_Window\", \"Mail Cleaner\"))\r\n self.label_mailer.setText(_translate(\"Main_Window\", \"E-mail From\"))\r\n self.button_addmail.setText(_translate(\"Main_Window\", \"Add mail to list\"))\r\n self.label_emailslistname.setText(_translate(\"Main_Window\", \"Emails You will delete\"))\r\n self.label_progress.setText(_translate(\"Main_Window\", \"Progress\"))\r\n self.button_exit.setText(_translate(\"Main_Window\", \"EXIT\"))\r\n self.button_delete.setText(_translate(\"Main_Window\", \"Delete\"))\r\n self.label_email.setText(_translate(\"Main_Window\", \"Your Mail\"))\r\n self.label_password.setText(_translate(\"Main_Window\", \"Your PassWord\"))\r\n self.label_mailinfo.setText(_translate(\"Main_Window\", \"information about activate mail\"))\r\n self.label_emailconfirmed.setText(_translate(\"Main_Window\", \"Please Login\"))\r\n self.button_login.setText(_translate(\"Main_Window\", \"Login\"))\r\n self.textBrowser.setHtml(_translate(\"Main_Window\",\r\n \"<!DOCTYPE HTML PUBLIC \\\"-//W3C//DTD HTML 4.0//EN\\\" \\\"http://www.w3.org/TR/REC-html40/strict.dtd\\\">\\n\"\r\n \"<html><head><meta name=\\\"qrichtext\\\" content=\\\"1\\\" /><style type=\\\"text/css\\\">\\n\"\r\n \"p, li { white-space: pre-wrap; }\\n\"\r\n \"</style></head><body style=\\\" font-family:\\'Times New Roman\\'; font-size:11pt; font-weight:600; font-style:normal;\\\">\\n\"\r\n \"<p style=\\\"-qt-paragraph-type:empty; margin-top:0px; margin-bottom:0px; margin-left:0px; margin-right:0px; -qt-block-indent:0; text-indent:0px;\\\"><br /></p></body></html>\"))\r\n\r\n\r\nif __name__ == \"__main__\":\r\n import sys\r\n\r\n app = QtWidgets.QApplication(sys.argv)\r\n Main_Window = QtWidgets.QMainWindow()\r\n ui = Ui_Main_Window()\r\n ui.setupUi(Main_Window)\r\n Main_Window.show()\r\n sys.exit(app.exec_())\r\n"

}

] | 2 |

QCH-top/- | https://github.com/QCH-top/- | 04ca8d6e539dd536d5a4f4ff0b1677d9619050d0 | bc5311db993e1bc84bc42e6cb6b401038cb42fb3 | abd770690b2382908d30dbdd92f6eca1b8b43569 | refs/heads/main | 2023-02-20T08:17:46.487404 | 2021-01-20T15:14:26 | 2021-01-20T15:14:26 | 331,338,123 | 9 | 3 | null | 2021-01-20T14:52:26 | 2021-01-20T14:52:31 | 2021-01-20T14:56:14 | null | [

{

"alpha_fraction": 0.45264846086502075,

"alphanum_fraction": 0.5013375878334045,

"avg_line_length": 23.216217041015625,

"blob_id": "f168c0f5aea1318ff57dd7eaf4061e4dbd715877",

"content_id": "9d14f620670fa477a464d643da32dc1e739bbf79",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 4212,

"license_type": "no_license",

"max_line_length": 96,

"num_lines": 148,

"path": "/sonar/k-means-sonar.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "import pandas as pd\r\nimport numpy as np\r\nimport matplotlib.pyplot as plt\r\nfrom sklearn import datasets, decomposition, manifold\r\n\r\nsonar = pd.read_csv(r'sonar.all-data', header=None, sep=',')\r\nsonar1 = sonar.iloc[0:208, 0:61]#取208行,前61列(数据)\r\ndata = np.mat(sonar1)\r\n#print(data)\r\n\r\nfor i in range(208):\r\n if sonar1.iloc[i, 60] == \"R\":\r\n sonar1.iloc[i, 60] = 0\r\n else:\r\n sonar1.iloc[i, 60] = 1#换成0,1来判断类别\r\n\r\ndata = np.mat(sonar1.iloc[:, 0:61])\r\ndata1 = np.mat(sonar1.iloc[:, 0:60])\r\n#print(data)\r\n#print(data1)\r\nk = 2 # k为聚类的类别数\r\nn = 208 # n为样本总个数\r\nd = 60 # d为数据集的特征数\r\n\r\n\r\n# k-means算法\r\ndef k_means():\r\n # 随机选k个初始聚类中心,聚类中心为每一类的均值向量\r\n m = np.zeros((k, d))#2行 60列零矩阵\r\n for i in range(k):\r\n m[i] = data1[np.random.randint(0, n)]\r\n \r\n # k_means聚类\r\n m_new = m.copy()\r\n #print(\"m_new = \",m_new)\r\n #print(m_new == m)\r\n t = 0\r\n while (1):\r\n # 更新聚类中心\r\n m[0] = m_new[0]\r\n m[1] = m_new[1]\r\n w1 = np.zeros((1, d+1))\r\n w2 = np.zeros((1, d+1))\r\n # 将每一个样本按照欧式距离聚类\r\n for i in range(n):#每一个样本跟两个聚类中心求欧氏距离\r\n distance = np.zeros(k)\r\n sample = data[i]#第i个样本\r\n for j in range(k): # 将每一个样本与聚类中心比较\r\n distance[j] = np.linalg.norm(sample[:, 0:60] - m[j])#二范数\r\n category = distance.argmin()#种类 距离小的下标是0则是第一类\r\n if category == 0:\r\n w1 = np.row_stack((w1, sample))# 第一类的数据\r\n if category == 1:\r\n w2 = np.row_stack((w2, sample))\r\n\r\n # 新的聚类中心\r\n w1 = np.delete(w1, 0, axis=0)#数组合并后会多出一个0删除0\r\n w2 = np.delete(w2, 0, axis=0)\r\n m_new[0] = np.mean(w1[:, 0:60], axis=0)\r\n m_new[1] = np.mean(w2[:, 0:60], axis=0)\r\n\r\n # 聚类中心不再改变时,聚类停止\r\n if (m[0] == m_new[0]).all() and (m[1] == m_new[1]).all():\r\n break\r\n\r\n print(t)\r\n t += 1\r\n\r\n w = np.vstack((w1, w2))\r\n label1 = np.zeros((len(w1), 1))\r\n label2 = np.ones((len(w2), 1))\r\n label = np.vstack((label1, label2))\r\n label = np.ravel(label)#合并为一维矩阵\r\n # print(label)\r\n test_PCA(w, label)#pac降维\r\n plot_PCA(w, label)\r\n return w1, 
w2\r\n\r\n\r\ndef test_PCA(*data):\r\n X, Y = data\r\n pca = decomposition.PCA(n_components=None)# keep all components\r\n pca.fit(X)# fit the model\r\n\r\n\r\n# print(\"explained variance ratio:%s\"%str(pca.explained_variance_ratio_))\r\n\r\ndef plot_PCA(*data):\r\n X, Y = data\r\n pca = decomposition.PCA(n_components=2)# reduce to 2 dimensions\r\n pca.fit(X)\r\n X_r = pca.transform(X)# apply the reduction\r\n # print(X_r)\r\n\r\n fig = plt.figure()\r\n ax = fig.add_subplot(1, 1, 1)\r\n colors = ((1, 0, 0), (0.33, 0.33, 0.33),)\r\n for label, color in zip(np.unique(Y), colors):\r\n position = Y == label\r\n ax.scatter(X_r[position, 0], X_r[position, 1], label=\"category=%d\" % label, color=color)\r\n ax.set_xlabel(\"X[0]\")\r\n ax.set_ylabel(\"X[1]\")\r\n ax.legend(loc=\"best\")\r\n ax.set_title(\"PCA\")\r\n # plt.show()\r\n\r\n\r\nif __name__ == '__main__':\r\n w1, w2 = k_means()\r\n accuracy = 0\r\n\r\n l1 = []\r\n l2 = []\r\n for i in range(w1.shape[0]):\r\n l1.append(w1[i, 60])\r\n l2.append(l1.count(0))\r\n l2.append(l1.count(1))\r\n\r\n l3 = np.mat(l2)\r\n count = l3.argmax()\r\n for i in range(w1.shape[0]):\r\n if w1[i, 60] == count:\r\n accuracy += 1\r\n\r\n count2 = 0\r\n if count == 0:\r\n count2=1\r\n else:\r\n count2=0\r\n\r\n for i in range(w2.shape[0]):\r\n if w2[i, 60] == count2:\r\n accuracy += 1\r\n\r\n\r\n # print(w1)\r\n\r\n accuracy /= 208\r\n print(\"Accuracy:\")\r\n print(\"%.2f\" % accuracy)\r\n\r\n print(\"Number of samples in cluster 1:\")\r\n print(w1.shape[0])\r\n # print(w2)\r\n print(\"Number of samples in cluster 2:\")\r\n print(w2.shape[0])\r\n\r\n plt.show()\r\n \r\n"

},

{

"alpha_fraction": 0.5968406796455383,

"alphanum_fraction": 0.6146978139877319,

"avg_line_length": 29.214284896850586,

"blob_id": "bf5d6da74877b3cd49515ed058e3bfd8bd922f9b",

"content_id": "5a0a7af077a704090d07aee80cb358cb1acacb57",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 4752,

"license_type": "no_license",

"max_line_length": 136,

"num_lines": 140,

"path": "/yale/Bagging_yale.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "import numpy as np\r\nimport os\r\nimport skimage.io\r\nimport skimage.color\r\nfrom sklearn import svm,datasets\r\nimport matplotlib.pyplot as plt\r\nimport skimage.io as io\r\nimport matplotlib.pyplot as plt\r\nfrom sklearn import datasets,decomposition,manifold\r\nfrom sklearn.model_selection import GridSearchCV\r\nfrom sklearn.svm import SVC\r\nfrom sklearn.decomposition import PCA\r\nfrom sklearn.model_selection import KFold\r\nfrom sklearn.ensemble import RandomForestClassifier\r\n'''\r\ndata_dir = 'dataset/'#文件地址/名称\r\nclasses = os.listdir(data_dir)\r\ndata = []\r\nall_image = np.zeros(100*100)\r\n#all_image = np.array(all_image)\r\nall_label = []\r\nfor cls in classes:\r\n files = os.listdir(data_dir+cls)\r\n for f in files: \r\n img = skimage.io.imread(data_dir+cls+\"/\"+f)\r\n img = skimage.color.rgb2gray(img)#将图片转为灰度图\r\n img = img.reshape(1,100*100)\r\n #print(img)\r\n all_image = np.vstack((all_image,img))\r\n all_label.append(cls)\r\n \r\n \r\n\r\nall_image = np.array(all_image)\r\nall_label = np.array(all_label)\r\n\r\nall_label = all_label.reshape(len(all_label),1)\r\n\r\nall_image = np.delete(all_image,0,0) #删除第一行\r\nall_data = np.hstack((all_label,all_image))\r\n\r\nprint(type(all_image[0,0]))\r\nprint(all_data)\r\n'''\r\n\r\ndata_dir = 'dataset/'#文件地址/名称\r\nclasses = os.listdir(data_dir)\r\ndata = []\r\nall_image = np.zeros(100*100)\r\n#all_image = np.array(all_image)\r\nall_label = []\r\nfor cls in classes:\r\n files = os.listdir(data_dir+cls)\r\n for f in files: \r\n img = skimage.io.imread(data_dir+cls+\"/\"+f)\r\n img = skimage.color.rgb2gray(img)#将图片转为灰度图\r\n img = img.reshape(1,100*100)\r\n #print(img)\r\n all_image = np.vstack((all_image,img))\r\n all_label.append(int(cls))\r\n \r\n \r\n\r\nall_image = np.array(all_image)\r\nall_label = np.array(all_label)\r\n\r\nall_label = all_label.reshape(len(all_label),1)\r\n\r\nall_image = np.delete(all_image,0,0) #删除第一行\r\nall_image.dtype = 'float32'\r\n\r\npca = 
decomposition.PCA(n_components=10, svd_solver='auto',\r\n whiten=True).fit(all_image)\r\n# PCA降维后的总数据集\r\nall_image = pca.transform(all_image)\r\nall_data = np.hstack((all_label,all_image))\r\n\r\n#print(all_image)\r\n#print(all_label) \r\n#print(all_data)\r\n\r\nall_data_set = [] # 原始总数据集,二维矩阵n*m,n个样例,m个属性\r\nall_data_label = [] # 总数据对应的类标签\r\nall_data_set = all_data[ : ,1: ]\r\nall_data_label = all_data[:,0]\r\n\r\nX = np.array(all_data_set)\r\ny = np.array(all_data_label)\r\n\r\nn_estimators = [10,11]\r\ncriterion_test_names = [\"gini\", \"entropy\"]#测试 系数与熵\r\n #分类树: 基尼系数 最小的准则 在sklearn中可以选择划分的默认原则 \r\n #决策树:criterion:默认是’gini’系数,也可以选择信息增益的熵’entropy\r\n\r\n\r\n\r\ndef RandomForest(n_estimators,criterion):\r\n # 十折交叉验证计算出平均准确率\r\n # 交叉验证,随机取\r\n kf = KFold(n_splits=10, shuffle=True)\r\n precision_average = 0.0\r\n # param_grid = {'C': [1e3, 5e3, 1e4, 5e4, 1e5]} # 自动穷举出最优的C参数\r\n # clf = GridSearchCV(SVC(kernel=kernel_name, class_weight='balanced', gamma=param),\r\n # param_grid)s\r\n\r\n parameter_space = {\r\n \"min_samples_leaf\": [2, 4, 6], }#参数空间 随机森林RandomForest使用了CART决策树作为弱学习器\r\n clf = GridSearchCV(RandomForestClassifier(random_state=14,n_estimators = n_estimators,criterion = criterion), parameter_space, cv=5)\r\n for train, test in kf.split(X):\r\n clf = clf.fit(X[train], y[train].astype('int'))\r\n # print(clf.best_estimator_)\r\n test_pred = clf.predict(X[test])\r\n # print classification_report(y[test], test_pred)\r\n # 计算平均准确率\r\n precision = 0\r\n for i in range(0, len(y[test])):\r\n if (y[test][i] == test_pred[i]):\r\n precision = precision + 1\r\n precision_average = precision_average + float(precision) / len(y[test])\r\n precision_average = precision_average / 10\r\n print (u\"准确率为\" + str(precision_average))\r\n return precision_average\r\n\r\n\r\nfor criterion in criterion_test_names:\r\n print(\"criterion\")\r\n print(criterion)\r\n x_label = []\r\n y_label = []\r\n for i in range(10,15):\r\n print(i)\r\n 
y_label.append(RandomForest(i,criterion))\r\n x_label.append(i)\r\n plt.plot(x_label, y_label, label=criterion)\r\n# print(\"done in %0.3fs\" % (time() - t0))\r\nplt.xlabel(\"n_estimators\")\r\nplt.ylabel(\"Accuracy\")\r\nplt.title('Random forest accuracy vs. number of trees')\r\nplt.legend()\r\nplt.show()"

},

{

"alpha_fraction": 0.428725928068161,

"alphanum_fraction": 0.4795587360858917,

"avg_line_length": 25.205883026123047,

"blob_id": "02f63335e27829683759be464380ea8361032b68",

"content_id": "9c18e1d21a8d288bee671eee4376f339b83278ba",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 4985,

"license_type": "no_license",

"max_line_length": 96,

"num_lines": 170,

"path": "/iris/k-means-iris.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "import pandas as pd\r\nimport numpy as np\r\nimport matplotlib.pyplot as plt\r\nfrom sklearn import datasets, decomposition, manifold\r\n\r\niris = pd.read_csv(r'iris.data', header=None, sep=',')\r\niris1 = iris.iloc[0:150, 0:5]\r\nfor i in range(150):\r\n if iris1.iloc[i, 4] == \"Iris-setosa\":\r\n iris1.iloc[i, 4] = 0\r\n elif iris1.iloc[i, 4] == \"Iris-versicolor\":\r\n iris1.iloc[i, 4] = 1\r\n else:\r\n iris1.iloc[i, 4] = 2\r\ndata = np.mat(iris1.iloc[:, 0:5])\r\ndata1 = np.mat(iris1.iloc[:, 0:4])\r\nk = 3 # k为聚类的类别数\r\nn = 150 # n为样本总个数\r\nd = 4 # t为数据集的特征数\r\n\r\n\r\n# k-means算法\r\ndef k_means():\r\n # 随机选k个初始聚类中心,聚类中心为每一类的均值向量\r\n m = np.zeros((k, d)) # m = (3, 4)\r\n for i in range(k):\r\n m[i] = data1[np.random.randint(0, 10)]\r\n # k_means聚类\r\n m_new = m.copy()\r\n\r\n t = 0\r\n while (1):\r\n # 更新聚类中心\r\n m[0] = m_new[0]\r\n m[1] = m_new[1]\r\n m[2] = m_new[2]\r\n\r\n w1 = np.zeros((1, d+1)) # 引进标签\r\n w2 = np.zeros((1, d+1))\r\n w3 = np.zeros((1, d+1))\r\n\r\n for i in range(n):\r\n distance = np.zeros(3)\r\n sample = data[i]\r\n for j in range(k): # 将每一个样本与聚类中心比较\r\n distance[j] = np.linalg.norm(sample[:, 0:4] - m[j]) # 求范数,默认计算欧式距离\r\n category = distance.argmin()\r\n if category == 0:\r\n w1 = np.row_stack((w1, sample)) # 行添加\r\n if category == 1:\r\n w2 = np.row_stack((w2, sample))\r\n if category == 2:\r\n w3 = np.row_stack((w3, sample))\r\n\r\n # 新的聚类中心\r\n w1 = np.delete(w1, 0, axis=0)\r\n w2 = np.delete(w2, 0, axis=0)\r\n w3 = np.delete(w3, 0, axis=0)\r\n m_new[0] = np.mean(w1[:, 0:4], axis=0)\r\n m_new[1] = np.mean(w2[:, 0:4], axis=0)\r\n m_new[2] = np.mean(w3[:, 0:4], axis=0)\r\n\r\n # 聚类中心不再改变时,聚类停止\r\n if (m[0] == m_new[0]).all() and (m[1] == m_new[1]).all() and (m[2] == m_new[2]).all():\r\n break\r\n # print(t)\r\n t += 1\r\n w = np.vstack((w1, w2))\r\n w = np.vstack((w, w3))\r\n '''\r\n # 画出每一次迭代的聚类效果图\r\n \r\n label1 = np.zeros((len(w1), 1))\r\n label2 = np.ones((len(w2), 1))\r\n label3 = np.zeros((len(w3), 1))\r\n for i in 
range(len(w3)):\r\n label3[i, 0] = 2\r\n label = np.vstack((label1, label2))\r\n label = np.vstack((label, label3))\r\n label = np.ravel(label)\r\n test_PCA(w, label)\r\n plot_PCA(w, label)\r\n'''\r\n return w1, w2, w3, t\r\n\r\n\r\ndef test_PCA(*data):\r\n X, Y = data\r\n pca = decomposition.PCA(n_components=None)\r\n pca.fit(X)\r\n\r\n\r\n# print(\"explained variance ratio:%s\"%str(pca.explained_variance_ratio_))\r\n\r\ndef plot_PCA(*data):\r\n X, Y = data\r\n pca = decomposition.PCA(n_components=2)\r\n pca.fit(X)\r\n X_r = pca.transform(X)\r\n # print(X_r)\r\n\r\n fig = plt.figure()\r\n ax = fig.add_subplot(1, 1, 1)\r\n colors = ((1, 0, 0), (0.3, 0.3, 0.3), (0, 0.3, 0.7),)\r\n for label, color in zip(np.unique(Y), colors):\r\n position = Y == label\r\n # print(position)\r\n ax.scatter(X_r[position, 0], X_r[position, 1], label=\"category=%d\" % label, color=color)\r\n ax.set_xlabel(\"X[0]\")\r\n ax.set_ylabel(\"X[1]\")\r\n ax.legend(loc=\"best\")\r\n ax.set_title(\"PCA\")\r\n # plt.show()\r\n\r\n\r\nif __name__ == '__main__':\r\n w1, w2, w3, t = k_means()\r\n accuracy = 0\r\n\r\n l1 = []\r\n l2 = []\r\n for i in range(w1.shape[0]): # majority vote: the most common true label in the cluster is taken as its class\r\n l1.append(w1[i, 4])\r\n l2.append(l1.count(0))\r\n l2.append(l1.count(1))\r\n l2.append(l1.count(2))\r\n l3 = np.mat(l2)\r\n count = l3.argmax()\r\n for i in range(w1.shape[0]):\r\n if w1[i, 4] == count:\r\n accuracy += 1\r\n\r\n l1 = []\r\n l2 = []\r\n for i in range(w2.shape[0]):\r\n l1.append(w2[i, 4])\r\n l2.append(l1.count(0))\r\n l2.append(l1.count(1))\r\n l2.append(l1.count(2))\r\n l3 = np.mat(l2)\r\n count = l3.argmax()\r\n for i in range(w2.shape[0]):\r\n if w2[i, 4] == count:\r\n accuracy += 1\r\n\r\n l1 = []\r\n l2 = []\r\n for i in range(w3.shape[0]):\r\n l1.append(w3[i, 4])\r\n l2.append(l1.count(0))\r\n l2.append(l1.count(1))\r\n l2.append(l1.count(2))\r\n l3 = np.mat(l2)\r\n count = l3.argmax()\r\n for i in range(w3.shape[0]):\r\n if w3[i, 4] == count:\r\n accuracy += 1\r\n print(accuracy)\r\n 
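accuracy /= 150 # purity: fraction of samples matching their cluster's majority label\r\n print(\"Accuracy (purity):\")\r\n print(\"%.2f\"%accuracy)\r\n print(\"Number of iterations:\")\r\n print(t)\r\n print(\"Number of samples in cluster 1:\")\r\n print(w1.shape[0])\r\n print(\"Number of samples in cluster 2:\")\r\n print(w2.shape[0])\r\n print(\"Number of samples in cluster 3:\")\r\n print(w3.shape[0])\r\n plt.show()"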

},

{

"alpha_fraction": 0.5078148245811462,

"alphanum_fraction": 0.5199056267738342,

"avg_line_length": 27.504348754882812,

"blob_id": "012f44dee5d8c9a79a368cca9ff031056adc735d",

"content_id": "bf490a948b0a98b560b723caf57a59388a2291e0",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 4009,

"license_type": "no_license",

"max_line_length": 104,

"num_lines": 115,

"path": "/sonar/FCM-sonar.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "import numpy as np\r\nimport pandas as pd\r\n\r\n\r\ndef loadData(datapath):\r\n data = pd.read_csv(r'sonar.all-data', sep=',', header=None)\r\n data = data.sample(frac=1.0) # 打乱数据顺序\r\n dataX = data.iloc[:, :-1].values # 特征\r\n labels = data.iloc[:, -1].values # 标签\r\n # 将标签类别用 0, 1表示\r\n labels[np.where(labels == \"R\")] = 0\r\n labels[np.where(labels == \"M\")] = 1\r\n\r\n\r\n return dataX, labels\r\n\r\n#==========================隶属度矩阵=============================#\r\ndef initialize_U(samples, classes):\r\n U = np.random.rand(samples, classes) # 先生成随机矩阵\r\n sumU = 1 / np.sum(U, axis=1) # 求每行的和\r\n U = np.multiply(U.T, sumU) # 使隶属度矩阵每一行和为1\r\n\r\n return U.T\r\n\r\n\r\n# =====================样本和簇中心的距离,欧氏距离==================#\r\ndef distance(X, centroid):\r\n return np.sqrt(np.sum((X - centroid) ** 2, axis=1))\r\n\r\n\r\n#=======================更新隶属度矩阵Uij = 1/sum(dij/dki)**2/(a - 1)================# \r\ndef computeU(X, centroids, m=2):\r\n sampleNumber = X.shape[0] # 样本数(行数)\r\n classes = len(centroids)\r\n U = np.zeros((sampleNumber, classes))\r\n # 更新隶属度矩阵\r\n for i in range(classes):\r\n for k in range(classes):\r\n U[:, i] += (distance(X, centroids[i]) / distance(X, centroids[k])) ** (2 / (m - 1))\r\n U = 1 / U\r\n\r\n return U\r\n\r\n#===============================真实类别=========================#\r\ndef adjustCentroid(centroids, U, labels): # 调整使中心的标签代表类标签\r\n newCentroids = [[], []]\r\n curr = np.argmax(U, axis=1) # 行方向搜索最大值,当前中心顺序得到的标签\r\n for i in range(len(centroids)):\r\n index = np.where(curr == i) # 建立中心和类别的映射\r\n trueLabel = list(labels[index]) # 获取labels[index]出现次数最多的元素,就是真实类别\r\n trueLabel = max(set(trueLabel), key=trueLabel.count)\r\n newCentroids[trueLabel] = centroids[i]\r\n return newCentroids\r\n \r\n#=========================更新聚类中心=====================#\r\ndef cluster(data, labels, m, classes, EPS):\r\n \"\"\"\r\n :param data: 数据集\r\n :param m: 模糊系数(fuzziness coefficient)\r\n :param classes: 类别数\r\n :return: 聚类中心\r\n \"\"\"\r\n 
sampleNumber = data.shape[0] # number of samples\r\n\r\n U = initialize_U(sampleNumber, classes) # initialize the membership matrix\r\n\r\n t = 0\r\n while True:\r\n centroids = []\r\n # update the cluster centers\r\n for i in range(classes):\r\n centroid = np.dot(U[:, i] ** m, data) / (np.sum(U[:, i] ** m))#sum(Uij**m * xj) / sum(Uij**m)\r\n centroids.append(centroid)\r\n\r\n U_old = U.copy()\r\n U = computeU(data, centroids, m) # compute the new membership matrix\r\n t += 1 \r\n if np.max(np.abs(U - U_old)) < EPS: # stop once no membership changes by EPS or more\r\n # cluster indices do not map one-to-one onto the data labels; reorder so that the i-th center represents class i\r\n centroids = adjustCentroid(centroids, U, labels)\r\n return centroids, U, t\r\n\r\n\r\n# predict the class of each sample\r\ndef predict(X, centroids):\r\n labels = np.zeros(X.shape[0])\r\n U = computeU(X, centroids) # compute the membership matrix\r\n labels = np.argmax(U, axis=1) # the column with the highest membership in each row is the sample's most likely class\r\n\r\n return labels\r\n\r\n\r\ndef main():\r\n\r\n dataX, labels = loadData('sonar.all-data') # load the data\r\n\r\n EPS = 1e-6 # stopping tolerance\r\n m = 2 # fuzziness coefficient\r\n classes = 2 # number of classes\r\n # get the center of each class\r\n centroids, U, t = cluster(dataX, labels, m, classes, EPS)\r\n\r\n trainLabels_prediction = predict(dataX, centroids)\r\n\r\n accuracy = 0\r\n for i in range(208):\r\n if trainLabels_prediction[i] == labels[i]:\r\n accuracy += 1\r\n accuracy /= 208\r\n print(\"Accuracy: %.2f\"%accuracy)\r\n print(\"Number of iterations:\", t)\r\n\r\n\r\nif __name__ == \"__main__\":\r\n main()"

},

{

"alpha_fraction": 0.5143943428993225,

"alphanum_fraction": 0.5399239659309387,

"avg_line_length": 25.507463455200195,

"blob_id": "40e8c544053078b2c09cfb30b7075bfb80667489",

"content_id": "fcf8f7e5fe7dcda5e4dee875a12de95e3dd6691d",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 2156,

"license_type": "no_license",

"max_line_length": 72,

"num_lines": 67,

"path": "/iris/k_means.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "import matplotlib.pyplot as plt\r\nfrom random import sample\r\nimport numpy as np\r\nfrom sklearn.cluster import KMeans\r\n#from sklearn import datasets\r\nfrom sklearn.datasets import load_iris\r\n#load the iris dataset\r\n#2-D example: X holds the first two features of the iris dataset (sepal length and sepal width)\r\niris = load_iris()\r\nX = iris.data[:,0:2] ##keep only the first two feature dimensions; X is an np.array\r\nprint(len(X))\r\n#plot the distribution of the data over the first two dimensions\r\nplt.scatter(X[:, 0], X[:, 1], c = \"red\", marker='o', label='data')\r\nplt.xlabel('sepal length (cm)')\r\nplt.ylabel('sepal width (cm)')\r\nplt.legend(loc=2)\r\nplt.show()\r\n \r\n#randomly choose n samples from X as the initial cluster-center vectors: μ(1),μ(2),...,μ(n)\r\nn = 3 #number of clusters\r\nu = sample(X.tolist(),n) #randomly pick n vectors of X as the cluster centers\r\nmax_iter = 0 #iteration counter\r\nu_before = u\r\n \r\nwhile max_iter<5:\r\n #cluster assignment step\r\n c = []\r\n print(u_before,u)\r\n for i in range(len(X)):\r\n min = 1000\r\n index = 0\r\n for j in range(n):\r\n vec1 = X[i]\r\n vec2 = u[j]\r\n dist = np.sqrt(np.sum(np.square(vec1 - vec2)))\r\n if dist<min:\r\n min = dist\r\n index =j\r\n c.append(index)\r\n # print(i,\"------\",c[i])\r\n #move each cluster center to the mean of its assigned samples\r\n for j in range(n):\r\n sum = np.zeros(2) # zero vector with one entry per feature (2 here)\r\n count = 0 # number of samples assigned to cluster j\r\n for i in range(len(X)):\r\n if c[i]==j:\r\n sum = sum+X[i]\r\n count = count+1\r\n u[j] = sum/count\r\n \r\n print(max_iter,\"------------\",u)\r\n #advance the iteration counter\r\n max_iter = max_iter + 1\r\nprint(np.array(c))\r\nlabel_pred = np.array(c)\r\n#plot the k-means result\r\nx0 = X[label_pred == 0]\r\nx1 = X[label_pred == 1]\r\nx2 = X[label_pred == 2]\r\nplt.scatter(x0[:, 0], x0[:, 1], c = \"red\", marker='o', label='label0')\r\nplt.scatter(x1[:, 0], x1[:, 1], c = \"green\", marker='*', label='label1')\r\nplt.scatter(x2[:, 0], x2[:, 1], c = \"blue\", marker='+', label='label2')\r\nplt.xlabel('sepal length (cm)')\r\nplt.ylabel('sepal width (cm)')\r\nplt.legend(loc=2)\r\nplt.show()"

},

{

"alpha_fraction": 0.6285097002983093,

"alphanum_fraction": 0.6491823792457581,

"avg_line_length": 24.0930233001709,

"blob_id": "c1c850fa7ae267e4c8a14bf70fdd423143403bff",

"content_id": "e4ddd41757faa1b9455b3b3350a804b3df5664bd",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 3925,

"license_type": "no_license",

"max_line_length": 136,

"num_lines": 129,

"path": "/mnist/Bagging_minst.py",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

"text": "#!/usr/bin/env python\n# coding: utf-8\n\n# In[8]:\n\n\nimport os\nimport gzip\nimport numpy as np\nimport tensorflow as tf\nimport pandas as pd\nfrom sklearn.svm import SVC\nfrom matplotlib import pyplot as plt\nfrom sklearn import datasets, decomposition, manifold\nfrom sklearn.model_selection import KFold\nfrom sklearn.model_selection import GridSearchCV\nfrom sklearn.ensemble import RandomForestClassifier\n\n\n# In[9]:\n\n\ndata = pd.read_csv(r'mnist_train.csv',header = None)\ndata1 = pd.read_csv(r'mnist_test.csv',header = None)\ndata = np.array(data)\ndata1 = np.array(data1)\ntrain_label = data[:,0] #训练集标签\ntrain_images = data[:,1:784] #训练集图片\ntest_label = data1[:,0] #训练集标签\ntest_images = data1[:,1:784] #训练集图片\n\n\n# In[10]:\n\n\nall_data_set = [] # 原始总数据集,二维矩阵n*m,n个样例,m个属性\nall_data_label = [] # 总数据对应的类标签\nall_data_set = train_images\nall_data_label = train_label\n\nn_components = 16 #降为16维度\npca = decomposition.PCA(n_components=n_components, svd_solver='auto',\n whiten=True).fit(all_data_set)\n# PCA降维后的总数据集\nall_data_pca = pca.transform(all_data_set)\n# X为降维后的数据,y是对应类标签\nX = np.array(all_data_pca)\ny = np.array(all_data_label)\n\nn_estimators = [10,11]\ncriterion_test_names = [\"gini\", \"entropy\"]#测试 系数与熵\n #分类树: 基尼系数 最小的准则 在sklearn中可以选择划分的默认原则 \n #决策树:criterion:默认是’gini’系数,也可以选择信息增益的熵’entropy’\n\n\n# In[11]:\n\n\n#RF使用了CART决策树作为弱学习器,\n#在使用决策树的基础上,\n#RF对决策树的建立做了改进\n#RF通过随机选择节点上的一部分样本特征,\n#这个数字小于n\n#假设为nsub,\n#然后在这些随机选择的nsub个样本特征中,\n#选择一个最优的特征来做决策树的左右子树划分。\n#这样进一步增强了模型的泛化能力。\n#总的来说,随机森林是在将bagging方法的基学习器确定为决策树,并且在决策树的训练过程中引入了随机属性选择\n\n\n# In[12]:\n\n\ndef RandomForest(n_estimators,criterion):\n # 十折交叉验证计算出平均准确率\n # 交叉验证,随机取\n kf = KFold(n_splits=10, shuffle=True)\n precision_average = 0.0\n # param_grid = {'C': [1e3, 5e3, 1e4, 5e4, 1e5]} # 自动穷举出最优的C参数\n # clf = GridSearchCV(SVC(kernel=kernel_name, class_weight='balanced', gamma=param),\n # param_grid)\n\n parameter_space = {\n \"min_samples_leaf\": [2, 4, 6], }#参数空间 \n clf = 
GridSearchCV(RandomForestClassifier(random_state=14,n_estimators = n_estimators,criterion = criterion), parameter_space, cv=5)\n for train, test in kf.split(X):\n clf = clf.fit(X[train], y[train])\n # print(clf.best_estimator_)\n test_pred = clf.predict(X[test])\n # print classification_report(y[test], test_pred)\n # compute this fold's accuracy and accumulate it\n precision = 0\n for i in range(0, len(y[test])):\n if (y[test][i] == test_pred[i]):\n precision = precision + 1\n precision_average = precision_average + float(precision) / len(y[test])\n precision_average = precision_average / 10\n print(u\"Accuracy: \" + str(precision_average))\n return precision_average\n\n\n# In[13]:\n\n\nprint(n_estimators)\n\n\n# In[ ]:\n\n\nfor criterion in criterion_test_names:\n print(\"criterion\",criterion)\n x_label = []\n y_label = []\n for i in range(10,15):\n print(i)\n y_label.append(RandomForest(i,criterion))\n x_label.append(i)\n plt.plot(x_label, y_label, label=criterion)\n# print(\"done in %0.3fs\" % (time() - t0))\nplt.xlabel(\"n_estimators\")\nplt.ylabel(\"Accuracy\")\nplt.title('Random forest accuracy vs. number of trees')\nplt.legend()\nplt.show()\n\n\n# In[ ]:\n\n\n\n\n"

},

{

"alpha_fraction": 0.8133333325386047,

"alphanum_fraction": 0.8133333325386047,

"avg_line_length": 36.5,

"blob_id": "dfafcfd7a7655c2542cb725d5fbff40c91b23407",

"content_id": "60c3aba771d86bb8cb8d47d4454785b698253313",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Markdown",

"length_bytes": 151,

"license_type": "no_license",

"max_line_length": 70,

"num_lines": 2,

"path": "/README.md",

"repo_name": "QCH-top/-",

"src_encoding": "UTF-8",

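"text": "# -\nK-means (k-means clustering) and FCM (fuzzy c-means clustering) algorithms for the iris and sonar datasets, plus SVM and bagging classification for the MNIST and Yale face datasets.\n"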

}

] | 7 |

Jimbomaniak/13_cinemas | https://github.com/Jimbomaniak/13_cinemas | 5395e6c3a6046d72018b065339a3e2d760b91960 | 52aa65321c4d28ff23022de96fc43f0bfa48f217 | 1feab6b2429f2f3a3079e335d952ee4a7452092c | refs/heads/master | 2021-01-10T23:37:58.200422 | 2016-10-17T12:30:40 | 2016-10-17T12:30:40 | 70,414,486 | 0 | 0 | null | 2016-10-09T16:06:18 | 2016-10-09T16:06:19 | 2016-10-01T09:10:39 | Python | [

{

"alpha_fraction": 0.7240143418312073,

"alphanum_fraction": 0.7419354915618896,

"avg_line_length": 26.899999618530273,

"blob_id": "5700e0932e6b73d010d26423cbcb3b23bd32c952",

"content_id": "2c73a8dc0153406509a61c291d73cf27ceb12f49",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Markdown",

"length_bytes": 279,

"license_type": "no_license",

"max_line_length": 78,

"num_lines": 10,

"path": "/README.md",

"repo_name": "Jimbomaniak/13_cinemas",

"src_encoding": "UTF-8",

"text": "# 13_cinemas\n\n### Prerequisites:\nRun `pip install -r requirements.txt` in a console to install the third-party modules.\n\n### This script helps you find the most popular movies currently showing in cinemas.\n\n### How to use:\n\nRun the script to get the top 10 movies, sorted either by rating or by the number of cinemas showing them.\n"

},

{

"alpha_fraction": 0.6338475346565247,

"alphanum_fraction": 0.6397458910942078,

"avg_line_length": 34.54838562011719,

"blob_id": "8cce929ea32306639ed9bc2a3dd289da43688d49",

"content_id": "fad3e04ed3639acbe3a0255472c303fb21c70603",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 2204,

"license_type": "no_license",

"max_line_length": 86,

"num_lines": 62,

"path": "/cinemas.py",

"repo_name": "Jimbomaniak/13_cinemas",

"src_encoding": "UTF-8",

"text": "import requests\nimport re\nfrom bs4 import BeautifulSoup as BS\n\n\nAFISHA_URL = 'http://www.afisha.ru/msk/schedule_cinema/'\nKINOPOISK_URL = 'https://www.kinopoisk.ru/get'\nNUMBER_MOVIES_TO_SHOW = 10\n\n\ndef fetch_afisha_page():\n response = requests.get(AFISHA_URL)\n return response.content\n\n\ndef parse_afisha_list(html):\n soup = BS(html, 'html.parser')\n movies_info = soup.find('div', {\n 'class': 'b-theme-schedule m-schedule-with-collapse',\n 'id': 'schedule'})\n movies = []\n for movie in movies_info.find_all(class_='object s-votes-hover-area collapsed'):\n one_movie = fetch_movie_info(movie.h3.a.text)\n one_movie['title'] = movie.h3.a.text\n one_movie['cinema_number'] = len(movie.find_all('td', {'class': 'b-td-item'}))\n movies.append(one_movie)\n return movies\n\n\ndef fetch_movie_info(movie_title):\n payload = {'kp_query': movie_title, 'first': 'yes'}\n response = requests.get(KINOPOISK_URL, params=payload)\n soup = BS(response.content, 'html.parser')\n try:\n rate = float(soup.find('span', class_='rating_ball').text)\n rating_count_site = soup.find('span', class_='ratingCount').text\n rating_count_digits = re.search(r'\\d+', rating_count_site)\n rating_count = int(rating_count_digits.group())\n except AttributeError:\n rate, rating_count = 0, 0\n return {'rate': rate, 'ratingCount': rating_count}\n\n\ndef sort_movies(movies, how_sort_movies):\n sort_by = how_sort_movies and 'cinema_number' or 'rate'\n movies.sort(key=lambda item: item[sort_by], reverse=True)\n return movies\n\n\ndef output_movies_to_console(movies, number_movies_to_show):\n for num, movie in enumerate(movies[:number_movies_to_show]):\n print('{0}. 
{title}, movie rate - {rate}, '\n 'you can watch it in {cinema_number} cinema(s)'.format(num+1, **movie))\n print('------')\n\n\nif __name__ == '__main__':\n movies = parse_afisha_list(fetch_afisha_page())\n how_sort_movies = int(input('0 - movies by rating\\n'\n '1 - movies by cinema numbers\\nEnter 1 or 0: '))\n sorted_movies = sort_movies(movies, how_sort_movies)\n output_movies_to_console(sorted_movies, NUMBER_MOVIES_TO_SHOW)\n"

}

] | 2 |

ajlangley/ml-training-progress-bar | https://github.com/ajlangley/ml-training-progress-bar | 715f324a935114e00c1f1658b658e26a0c53a990 | e3cb2a8b730636cd153c741572a8837f239c77cb | 3e285a710911e5e1afa0636e252e7e8015c4f7e7 | refs/heads/master | 2018-07-02T04:00:59.763964 | 2018-06-30T16:46:42 | 2018-06-30T16:46:42 | 127,028,694 | 0 | 0 | null | null | null | null | null | [

{

"alpha_fraction": 0.7982456088066101,

"alphanum_fraction": 0.7982456088066101,

"avg_line_length": 56,

"blob_id": "784ac6efa7ab66a9f73cc3ac194ae502d13e425c",

"content_id": "c435579cde13676c42897efde781c1c4ef1b171c",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Markdown",

"length_bytes": 114,

"license_type": "no_license",

"max_line_length": 89,

"num_lines": 2,

"path": "/README.md",

"repo_name": "ajlangley/ml-training-progress-bar",

"src_encoding": "UTF-8",

"text": "# Training Progress Bar\nAdd as a submodule to your ml projects to render a training progress bar in the terminal.\n"

},

{

"alpha_fraction": 0.5357961058616638,

"alphanum_fraction": 0.55756014585495,

"avg_line_length": 32.25714111328125,

"blob_id": "a96313f7f8328f16206c5ad88a6323c48c556dad",

"content_id": "2547884f28c704aed97ea895e530f46c5b5d49d6",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 3492,

"license_type": "no_license",

"max_line_length": 109,

"num_lines": 105,

"path": "/training_progress_bar.py",

"repo_name": "ajlangley/ml-training-progress-bar",

"src_encoding": "UTF-8",

"text": "from colorama import Fore\nfrom colorama import Style\nimport sys\nimport os\n\n\ncolors = {'RED': Fore.RED, 'YELLOW':Fore.YELLOW, 'GREEN': Fore.GREEN, 'BLUE': Fore.BLUE, 'BLACK': Fore.BLACK,\n 'WHITE': Fore.WHITE, 'CYAN': Fore.CYAN}\n\n\nclass TrainingProgressBar:\n def __init__(self, n_examples, bar_color=None, text_color=None, show_epoch_on_newline=True):\n self.epoch_num = 1\n self.n_examples = n_examples\n self.total_examples_seen = 0\n self.epoch_examples_seen = 0\n self.total_loss = 0\n self.epoch_loss = 0\n self.bar_color = None\n self.text_color = None\n self.show_epoch_on_newline = show_epoch_on_newline\n\n # Appearance configurations\n if bar_color:\n self.bar_color = bar_color.upper()\n if text_color:\n self.text_color = text_color.upper()\n\n def update(self, examples_seen, loss):\n self.epoch_examples_seen += examples_seen\n self.total_examples_seen += examples_seen\n self.epoch_loss += loss * examples_seen\n self.total_loss += loss * examples_seen\n\n def show(self):\n percent_complete = self.epoch_examples_seen / self.n_examples * 100\n\n avg_epoch_loss = self.epoch_loss / self.epoch_examples_seen if self.epoch_examples_seen else 0\n avg_total_loss = self.total_loss / self.total_examples_seen if self.total_examples_seen else 0\n\n sys.stdout.flush()\n print('\\r', end='')\n\n self.set_text_color()\n print(f'Epoch {self.epoch_num}: ', end='')\n\n self.print_bar(percent_complete)\n\n self.set_text_color()\n print((50 - int(percent_complete / 2)) * ' ', end='')\n print('|{0:.1f}%'.format(percent_complete), end='')\n print('\\t [Avg Error for Epoch]: {0:.4f}'.format(avg_epoch_loss), end='')\n print('\\t [Total Training Loss]: {0:.4f}'.format(avg_total_loss), end='')\n print(f'{Style.RESET_ALL}', end='')\n\n self._update_epoch()\n\n def print_bar(self, percent_complete):\n self.set_bar_color()\n\n # Print n / 2 blocks, followed by a fraction of a block\n # for added precision\n for i in range(int(percent_complete / 2)):\n print(u'\\u2589', 
end='')\n self.print_fraction(percent_complete / 2 - int(percent_complete / 2))\n\n def set_bar_color(self):\n if self.bar_color:\n print(f'{colors[self.bar_color]}', end='')\n else:\n print(f'{Style.RESET_ALL}', end='')\n\n def set_text_color(self):\n if self.text_color:\n print(f'{colors[self.text_color]}', end='')\n else:\n print(f'{Style.RESET_ALL}', end='')\n\n def _update_epoch(self):\n if self.epoch_examples_seen >= self.n_examples:\n self.epoch_num += 1\n self.epoch_examples_seen = 0\n self.epoch_loss = 0\n\n if self.show_epoch_on_newline:\n print('\\n')\n\n @staticmethod\n def print_fraction(fraction):\n if fraction >= 0.875:\n print(u'\\u2589', end='')\n elif fraction >= 0.75:\n print(u'\\u258A', end='')\n elif fraction >= 0.625:\n print(u'\\u258B', end='')\n elif fraction >= 0.5:\n print(u'\\u258C', end='')\n elif fraction >= 0.375:\n print(u'\\u258D', end='')\n elif fraction >= 0.25:\n print(u'\\u258E', end='')\n elif fraction >= 0.125:\n print(u'\\u258F', end='')\n else:\n print(' ', end='')\n"

}

] | 2 |
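`TrainingProgressBar` draws its bar as whole blocks plus one partial block chosen from the Unicode "left N eighths" characters (`print_fraction`). A sketch of the same eighth-block technique that builds the bar as a single string, which is easier to test and to print in one call. The name `render_bar`, the clamping to 0..100, and the use of U+2588 FULL BLOCK for filled cells (the original prints U+2589, the seven-eighths block) are assumptions of this sketch, not part of the repository.

```python
def render_bar(percent_complete, width=50):
    """Return a fixed-width text bar drawn with Unicode left-eighth blocks."""
    # Clamp so that overshooting the epoch size cannot overflow the bar.
    percent_complete = max(0.0, min(100.0, percent_complete))
    cells = percent_complete / 100 * width   # number of filled cells, may be fractional
    full_cells = int(cells)
    eighths = int((cells - full_cells) * 8)  # 0..7 eighths of the next cell
    bar = '\u2588' * full_cells              # U+2588 FULL BLOCK
    if eighths:
        # U+2589 (7/8) down to U+258F (1/8): the code point is 0x2590 - eighths.
        bar += chr(0x2590 - eighths)
    return bar.ljust(width)                  # pad with spaces to a constant width
```

Because the result always has exactly `width` characters, a caller can redraw in place with something like `print('\r' + render_bar(pct), end='', flush=True)`.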

aashutosh0012/Youtube-Video-Downloader | https://github.com/aashutosh0012/Youtube-Video-Downloader | 8c0da0477dedcc0681d01df611e757f9cf31ce27 | e553f2d356de4f2cf469d0b3323a67a24f319c8a | 8f8c63e4b84d240331da9e2ca3815e0a01b670a3 | refs/heads/main | 2023-05-06T13:38:59.959080 | 2021-05-30T10:33:45 | 2021-05-30T10:33:45 | 372,182,914 | 1 | 0 | null | null | null | null | null | [

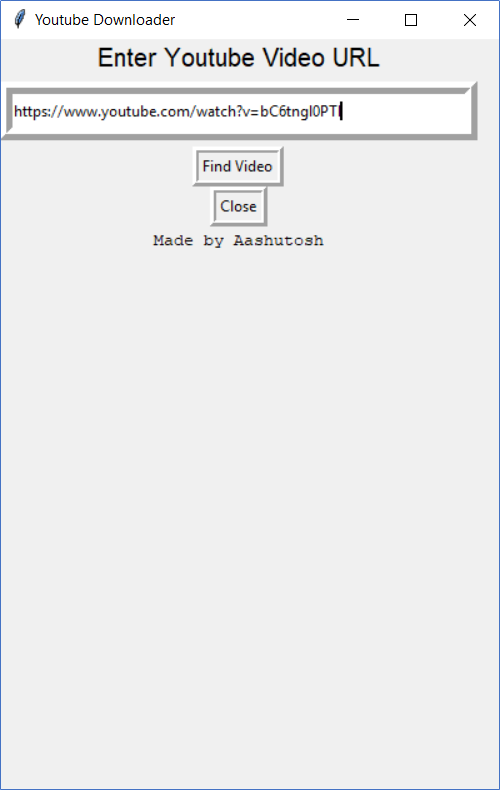

{

"alpha_fraction": 0.6385002732276917,

"alphanum_fraction": 0.6650811433792114,

"avg_line_length": 43.846153259277344,

"blob_id": "54d9c028ae2a9bfe0f2e780f5c96b13b325dedd3",

"content_id": "45336142b33999d7496f3f816e8b5845738467bc",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 3574,

"license_type": "no_license",

"max_line_length": 173,

"num_lines": 78,

"path": "/youtube_downloader.py",

"repo_name": "aashutosh0012/Youtube-Video-Downloader",

"src_encoding": "UTF-8",

"text": "from tkinter import *\r\nfrom tkinter import ttk\r\nfrom pytube import YouTube\r\nimport os,requests\r\nfrom PIL import ImageTk,Image\r\n\r\n\r\n#Get Current Path or Script Path where Video will be Downloaded\r\ntry:\r\n PATH = os.path.dirname(os.path.abspath(__file__))\r\nexcept NameError:\r\n PATH = os.path.dirname(os.path.dirname(os.path.abspath(os.getcwd())))\r\n\r\nwindow = Tk()\r\nwindow.title('Youtube Downloader')\r\nwindow.geometry('400x600+100+100')\r\nurl = StringVar()\r\ndef get_data():\r\n global yt,res,title,video_url,url,resolutions\r\n video_url = url.get() \r\n yt = YouTube(video_url)\r\n title = yt.title\r\n\r\n #Download Thumbnail\r\n v_id = video_url.split('=')[1]+'.jpg'\r\n thumbnail_file = os.path.join(PATH,v_id)\r\n thumbnail = requests.get(yt.thumbnail_url)\r\n with open(thumbnail_file,'wb') as file:\r\n file.write(thumbnail.content)\r\n\r\n #Display Thumbnail\r\n img = Image.open(thumbnail_file)\r\n size = 300, 300\r\n img.thumbnail(size, Image.ANTIALIAS)\r\n img = ImageTk.PhotoImage(img) \r\n thumbnail_label = Label(window, image = img,bd = 5,relief = 'solid')\r\n thumbnail_label.grid(row=3,column=0,columnspan=2)\r\n thumbnail_label.image=img\r\n\r\n #Display Title of Video in Window\r\n title_message = Message(window, text=title, font=(\"Arial Bold\",10),width=300,bd=10).grid(row=4,columnspan=2)\r\n available_res = [stream.resolution for stream in yt.streams.filter(progressive=True,file_extension='mp4').order_by(\"resolution\")]\r\n \r\n #Select Avaialable Resolution of Video to Download\r\n choose_res = Label(window,text='Select Resolution').grid(row=5,column=0)\r\n resolutions = ttk.Combobox(window)\r\n resolutions['values'] = available_res\r\n resolutions.current(0)\r\n resolutions.grid(row = 5,column = 1)\r\n res = resolutions.get()\r\n #print(f\"resolution = {res} title = {title}\") \r\n \r\n #Display Download Button\r\n download_btn = Button(window,text=\"Download Video\",bd=5, relief='ridge', bg = 'violet', fg='Black', 
command = download).grid(row = 6, column = 0,columnspan = 2,pady = 3)\r\n\r\ndef download():\r\n download_label = Label(window,text = 'Downloading...',bd = 5).grid(row = 6,column = 0, columnspan=2,pady = 3)\r\n global yt,res,title,resolutions\r\n res = resolutions.get()\r\n #print(res)\r\n\r\n #Download Video in mp4 Format and Selected Resolution\r\n video = yt.streams.filter(file_extension = 'mp4',progressive = True,res = res)[0]\r\n video.download()\r\n \r\n #Display Video Downlaoded Messaged along with Path\r\n download_label = Label(window,text = 'Download Finished',bd = 5).grid(row = 6,column = 0, columnspan = 2,ipady = 3)\r\n success_message = Text(window,height = 5,width = 50,wrap = CHAR, bd = 0)\r\n success_message.insert(1.0,f'Video Downloaded \\n {os.path.join(PATH,title)}')\r\n success_message.grid(row = 7, column = 0,columnspan = 2)\r\n success_message.configure(state = \"disabled\")\r\n\r\nL1 = Label(window,text='Enter Youtube Video URL', font = ('Arial',15)).grid(row = 0,column = 0, columnspan = 2)\r\nurl_Entry = Entry(window,textvariable = url, width = 60,bd = 10,relief = 'ridge').grid(row = 1, column = 0, pady = 5, ipady = 5,columnspan = 2)\r\nfind_video = Button(window,text = 'Find Video',bd = 5, relief = 'ridge',command = get_data).grid(row = 2, column = 0, columnspan = 2)\r\nquit = Button(window, text = 'Close',bd = 5, relief = 'ridge',command = window.destroy).grid(row = 8, column = 0, columnspan = 2,rowspan = 2)\r\ninfo = Label(window, text = 'Made by Aashutosh', font = (\"Courier New\",10)).grid(row = 15, column = 0, columnspan = 2)\r\nwindow.mainloop()"

},

{

"alpha_fraction": 0.6483103632926941,

"alphanum_fraction": 0.7947434186935425,

"avg_line_length": 48.8125,

"blob_id": "a416f5a204439d18b544b2c2914fe30c5c3f1745",

"content_id": "6887927d8d7139587c55675b415b8443afb69e17",

"detected_licenses": [],

"is_generated": false,

"is_vendor": false,

"language": "Markdown",

"length_bytes": 799,

"license_type": "no_license",

"max_line_length": 155,

"num_lines": 16,

"path": "/README.md",

"repo_name": "aashutosh0012/Youtube-Video-Downloader",

"src_encoding": "UTF-8",

"text": "# Youtube-Video-Downloader\nDownload Videos from Youtube to your local system.\n\nThis Program uses **[Pytube](https://pytube.io/en/latest/index.html)** Library to download videos from Youtube and **tkinter** to diplay Graphical Window..\n\n\n\n\nEnter URL of the Youtube Video & hit Find.\n\n\n\nApp Searches for the Video and provides a Dropdown button to Select available Resolution and a Download Button.\nAfter Video gets downloaded, it displays the File Path and Name.\n\n\n\n\n"

}

] | 2 |
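Deriving a video ID with `video_url.split('=')[1]` assumes the URL contains exactly one `=` followed by the ID. For `https://www.youtube.com/watch?v=ID&t=42` it yields `ID&t`, and for a `https://youtu.be/ID` short link it raises `IndexError`. A hedged sketch of a more tolerant parser that uses only the standard library's `urllib.parse`; the function name `extract_video_id` is hypothetical, and only the two URL shapes shown are handled. pytube's `YouTube` object also exposes a `video_id` attribute, which may be simpler wherever a `YouTube` instance already exists.

```python
from urllib.parse import urlparse, parse_qs


def extract_video_id(video_url):
    """Return the YouTube video ID from a watch or youtu.be URL, or None."""
    parsed = urlparse(video_url)
    host = parsed.hostname or ''  # hostname is lower-cased and has no port
    if host == 'youtu.be':
        # Short links carry the ID as the path: https://youtu.be/<id>
        return parsed.path.lstrip('/') or None
    if host == 'youtube.com' or host.endswith('.youtube.com'):
        # Watch links carry it in the query string: ...watch?v=<id>&t=42
        return parse_qs(parsed.query).get('v', [None])[0]
    return None
```

Returning `None` for unrecognised URLs lets the GUI show an error message instead of crashing inside the button callback.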

portelaoliveira/CSV_e_JSON | https://github.com/portelaoliveira/CSV_e_JSON | 69dad48dd02ec7cd2c6feac72ae7b0ebe6e6bf89 | 0c8c5b93f7a2ed4433d3133c93d004687aa4c497 | 58f936e6c296576d1a5b3c787977a3595b0c0347 | refs/heads/master | 2022-07-29T17:38:53.876534 | 2020-05-16T19:58:26 | 2020-05-16T19:58:26 | 264,503,010 | 2 | 0 | null | null | null | null | null | [

{

"alpha_fraction": 0.7212121486663818,

"alphanum_fraction": 0.7212121486663818,

"avg_line_length": 56.07692337036133,

"blob_id": "13bad409350942bd4db232096ae4de3e069f7ebd",

"content_id": "bb76a1cbe361bc5fa385a5e91aaef420f9285bcd",

"detected_licenses": [

"MIT"

],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 1498,

"license_type": "permissive",

"max_line_length": 160,

"num_lines": 26,

"path": "/APIS/python_repos.py",

"repo_name": "portelaoliveira/CSV_e_JSON",

"src_encoding": "UTF-8",

"text": "import requests\n\n# Faz uma chamada de API e armazena a resposta.\nurl = 'https://api.github.com/search/repositories?q=language:python&sort=stars' # Armazenamos o URL da chamada da API.\nr = requests.get(url) # Usamos requests para fazer a chamada.\nprint('Status Code:', r.status_code)\n''' O objeto com a resposta tem um atributo chamado status_code, que nos informa se a resposta\n foi bem sucedida. '''\n\n# Armazena a resposta da API em uma variável.\nrespose_dict = r.json()\nprint('Total repositories:', respose_dict['total_count']) # Total de repositórios python no GitHub.\n\n# Explora informaçôes sobre os repositórios.\nrepo_dicts = respose_dict['items']\nprint('Repositories returned:', len(repo_dicts))\n\nprint(\"\\nSelected information about each repository:\")\nfor repo_dict in repo_dicts:\n print(f\"Name: {repo_dict['name']}\") # Nome do projeto.\n print(f\"Owner: {repo_dict['owner']['login']}\") # Acessa o dicionário que o representa com owner e então usamos a chave login para obter o seu nome de login.\n print(f\"Stars: {repo_dict['stargazers_count']}\") # Exibe a quantidade de estrelas que o projeto recebeu.\n print(f\"Repository: {repo_dict['html_url']}\") # Extraí o URL do repositório no GitHub.\n print(f\"Created: {repo_dict['created_at']}\") # Mostra a data em que o projeto foi criado.\n print(f\"Updated: {repo_dict['updated_at']}\") # Quando foi atualizado pela última vez.\n print(f\"Description: {repo_dict['description']}\") # Exibe a descrição do repositório.\n\n"

},

{

"alpha_fraction": 0.719462513923645,

"alphanum_fraction": 0.729234516620636,

"avg_line_length": 47.156864166259766,

"blob_id": "c02cda914534ad56cbb4c02da3d24b8a979fe33c",

"content_id": "6bd3f57500716b5f4957c9fe2fcffb72e8028399",

"detected_licenses": [

"MIT"

],

"is_generated": false,

"is_vendor": false,

"language": "Python",

"length_bytes": 2480,

"license_type": "permissive",

"max_line_length": 125,

"num_lines": 51,

"path": "/APIS/bar_descriptions_visula.py",

"repo_name": "portelaoliveira/CSV_e_JSON",

"src_encoding": "UTF-8",