hmBERT: Historical Multilingual Language Models for Named Entity Recognition

More information about our hmBERT model can be found in our new paper: "hmBERT: Historical Multilingual Language Models for Named Entity Recognition".

Languages

Our Historic Language Models Zoo supports the following languages, listed with their training data sources:

| Language | Training data | Size |
|---|---|---|
| German | Europeana | 13-28GB (filtered) |
| French | Europeana | 11-31GB (filtered) |
| English | British Library | 24GB (year filtered) |
| Finnish | Europeana | 1.2GB |
| Swedish | Europeana | 1.1GB |

Corpora Stats

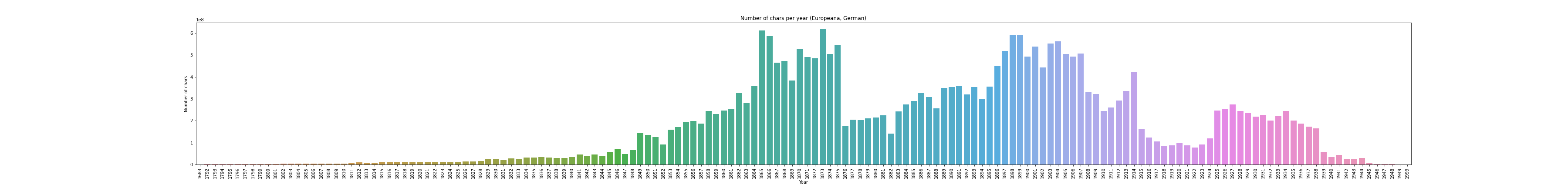

German Europeana Corpus

We provide statistics for different OCR confidence thresholds, which we use to shrink the corpus and keep less noisy data:

| OCR confidence | Size |
|---|---|
| 0.60 | 28GB |
| 0.65 | 18GB |
| 0.70 | 13GB |

For the final corpus we use an OCR confidence threshold of 0.6 (28GB). The following plot shows the distribution of tokens per year:

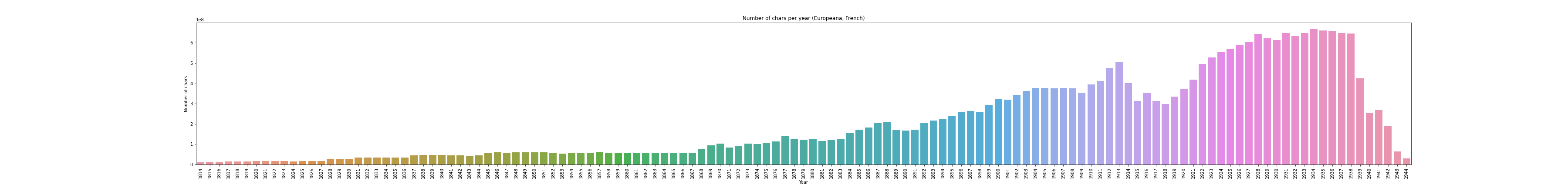
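The threshold-based filtering described above can be sketched in Python as follows. This is a minimal illustration, assuming each Europeana document carries a single OCR confidence score; the `Document` record and all helper names are hypothetical and not part of the hmBERT tooling.

```python
from dataclasses import dataclass


@dataclass
class Document:
    # Hypothetical record layout: one OCR'd text plus its confidence score.
    text: str
    ocr_confidence: float


def filter_by_confidence(docs, threshold):
    """Keep only documents whose OCR confidence is at least `threshold`."""
    return [d for d in docs if d.ocr_confidence >= threshold]


def corpus_size_bytes(docs):
    """Corpus size measured as UTF-8 encoded bytes."""
    return sum(len(d.text.encode("utf-8")) for d in docs)


def size_per_threshold(docs, thresholds):
    """Map each threshold to the size of the corpus that survives it."""
    return {t: corpus_size_bytes(filter_by_confidence(docs, t)) for t in thresholds}


if __name__ == "__main__":
    docs = [
        Document("Die Zeitung von gestern", 0.62),
        Document("Eine stark verrauschte Seite", 0.55),
        Document("Berliner Tageblatt", 0.71),
    ]
    for threshold, size in size_per_threshold(docs, [0.60, 0.65, 0.70]).items():
        print(f"{threshold:.2f}: {size} bytes")
```

Raising the threshold can only remove documents, so the corpus size shrinks monotonically, which is the trade-off between size and noise reflected in the table.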

French Europeana Corpus

As with German, we use different OCR confidence thresholds:

| OCR confidence | Size |
|---|---|
| 0.60 | 31GB |
| 0.65 | 27GB |
| 0.70 | 27GB |
| 0.75 | 23GB |
| 0.80 | 11GB |

For the final corpus we use an OCR confidence threshold of 0.7 (27GB). The following plot shows the distribution of tokens per year:

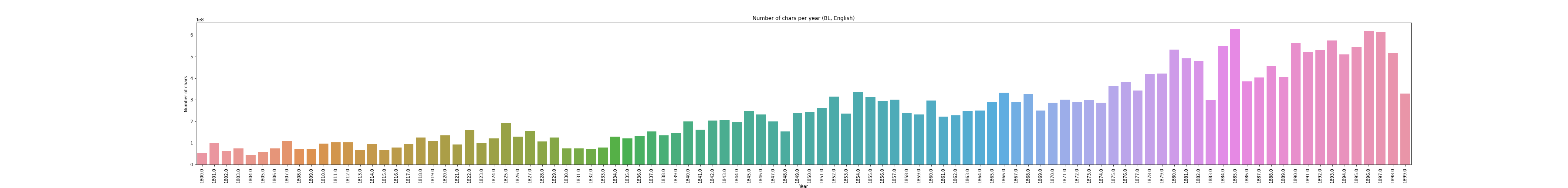

British Library Corpus

Metadata is taken from here. Statistics with and without year filtering:

| Years | Size |
|---|---|
| ALL | 24GB |
| >= 1800 && < 1900 | 24GB |

We use the year-filtered variant. The following plot shows the distribution of tokens per year:

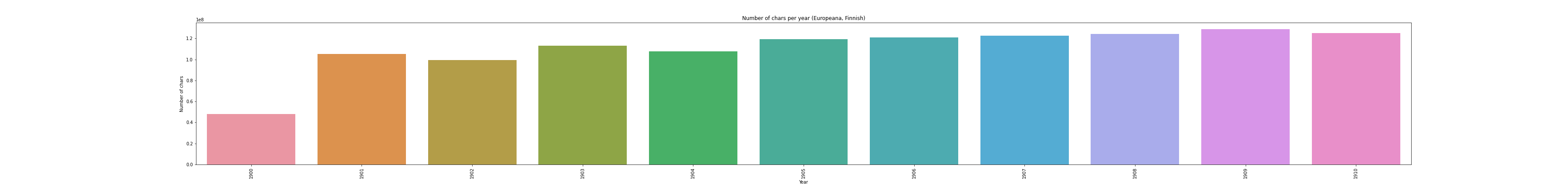
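A minimal sketch of the year filter and of how a tokens-per-year distribution like the ones plotted in this section could be computed. It assumes `(year, text)` records and counts whitespace-separated tokens; both the record layout and the tokenization are assumptions, since the exact procedure behind the plots is not described here.

```python
from collections import Counter


def in_year_range(year, start=1800, end=1900):
    """Mirror the `>= 1800 && < 1900` filter: start inclusive, end exclusive."""
    return start <= year < end


def tokens_per_year(records):
    """Count whitespace-separated tokens per year.

    `records` is an iterable of (year, text) pairs; this layout and the
    whitespace tokenization are assumptions made for illustration.
    """
    counts = Counter()
    for year, text in records:
        counts[year] += len(text.split())
    return counts


if __name__ == "__main__":
    books = [
        (1799, "An early volume"),
        (1851, "The Great Exhibition opened in Hyde Park"),
        (1851, "Another volume"),
        (1899, "A late Victorian novel"),
    ]
    kept = [(year, text) for year, text in books if in_year_range(year)]
    for year, n_tokens in sorted(tokens_per_year(kept).items()):
        print(year, n_tokens)
```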

Finnish Europeana Corpus

| OCR confidence | Size |
|---|---|
| 0.60 | 1.2GB |

The following plot shows the distribution of tokens per year:

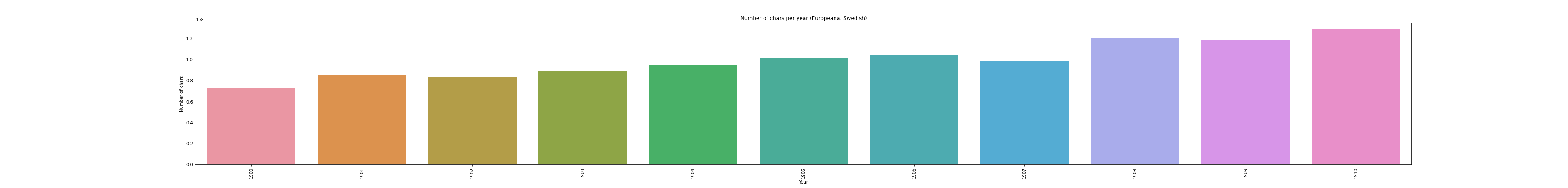

Swedish Europeana Corpus

| OCR confidence | Size |
|---|---|
| 0.60 | 1.1GB |

The following plot shows the distribution of tokens per year:

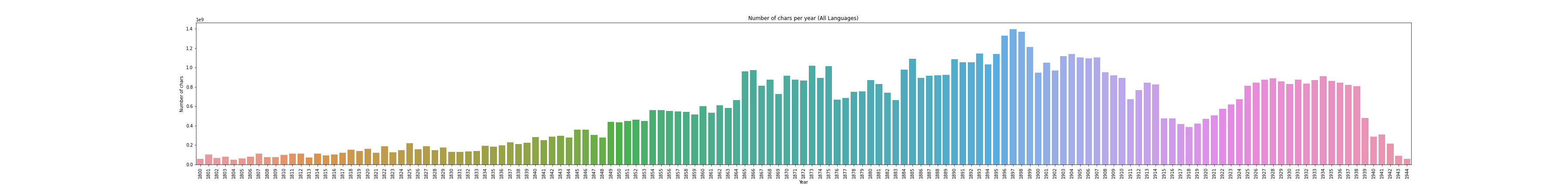

All Corpora

The following plot shows the distribution of tokens per year for the complete training corpus:

Multilingual Vocab generation

For the first attempt, we use the first 10GB of each pretraining corpus and upsample both Finnish and Swedish to ~10GB. The following table shows the exact sizes used for generating the 32k and 64k subword vocabularies:

| Language | Size |
|---|---|
| German | 10GB |
| French | 10GB |
| English | 10GB |
| Finnish | 9.5GB |
| Swedish | 9.7GB |
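The truncation and upsampling steps can be sketched as follows, assuming that upsampling means repeating a small corpus a whole number of times. The helper names are hypothetical, and the exact upsampling procedure is not documented here, so the resulting sizes only roughly match the table above.

```python
import math


def take_first_bytes(lines, limit_bytes):
    """Yield lines from the start of a corpus until `limit_bytes` would be exceeded."""
    used = 0
    for line in lines:
        size = len(line.encode("utf-8"))
        if used + size > limit_bytes:
            break
        used += size
        yield line


def upsample(lines, repeats):
    """Repeat a (small) corpus `repeats` times."""
    return [line for _ in range(repeats) for line in lines]


def plan_sampling(sizes_gb, target_gb):
    """Decide, per language, how much data to take and how often to repeat it.

    Assumed strategy: corpora at least as large as the target are truncated to
    their first `target_gb`; smaller ones are repeated a whole number of times
    so that they stay at or just below the target.
    """
    plan = {}
    for lang, size in sizes_gb.items():
        if size >= target_gb:
            plan[lang] = {"take_gb": target_gb, "repeats": 1}
        else:
            plan[lang] = {"take_gb": size, "repeats": math.floor(target_gb / size)}
    return plan


if __name__ == "__main__":
    sizes = {"German": 28, "French": 27, "English": 24, "Finnish": 1.2, "Swedish": 1.1}
    for lang, p in plan_sampling(sizes, target_gb=10).items():
        total = p["take_gb"] * p["repeats"]
        print(f"{lang}: {p['take_gb']}GB x {p['repeats']} = {total:.1f}GB")
```

With a 10GB target, this repeats Finnish 8 times and Swedish 9 times; passing `target_gb=27` instead sketches the upsampling used for the final pretraining corpora.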

We then calculate the subword fertility rate and the portion of [UNK] tokens over the following NER corpora:

| Language | NER corpora |
|---|---|
| German | CLEF-HIPE, NewsEye |
| French | CLEF-HIPE, NewsEye |
| English | CLEF-HIPE |
| Finnish | NewsEye |
| Swedish | NewsEye |
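Subword fertility is commonly defined as the average number of subwords per word. Here the unknown portion is taken to be the share of [UNK] tokens among all subwords; this denominator is an assumption. The following minimal sketch uses greedy longest-match-first WordPiece, the algorithm of the original BERT tokenizer (without its punctuation pre-splitting or maximum word length). The toy vocabulary is hypothetical and unrelated to the actual hmBERT vocabularies.

```python
def wordpiece_tokenize(word, vocab, unk_token="[UNK]", prefix="##"):
    """Greedy longest-match-first WordPiece.

    Non-initial pieces carry the `##` prefix. If any part of the word
    cannot be matched, the whole word maps to a single [UNK].
    """
    pieces = []
    start = 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:
            candidate = word[start:end]
            if start > 0:
                candidate = prefix + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:
            return [unk_token]
        pieces.append(match)
        start = end
    return pieces


def fertility_and_unk(words, vocab, unk_token="[UNK]"):
    """Return (subword fertility, unknown portion) for a list of words.

    fertility    = number of subwords / number of words
    unk portion  = number of [UNK] subwords / number of subwords
    """
    subwords = [p for w in words for p in wordpiece_tokenize(w, vocab, unk_token)]
    fertility = len(subwords) / len(words)
    unk_portion = subwords.count(unk_token) / len(subwords)
    return fertility, unk_portion


if __name__ == "__main__":
    vocab = {"Berlin", "Ham", "##burg"}  # hypothetical toy vocabulary
    words = ["Berlin", "Hamburg", "Zürich"]
    fertility, unk_portion = fertility_and_unk(words, vocab)
    print(f"fertility={fertility:.2f} unk_portion={unk_portion:.2f}")
```

With this toy vocabulary, "Berlin" stays whole, "Hamburg" splits into `Ham` and `##burg`, and "Zürich" becomes [UNK]. That gives a fertility of 4/3 and an unknown portion of 0.25. A larger vocabulary keeps more words whole, which is consistent with the lower fertility of the 64k vocab in the tables below.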

Breakdown of subword fertility rate and unknown portion per language for the 32k vocab:

| Language | Subword fertility | Unknown portion |
|---|---|---|
| German | 1.43 | 0.0004 |
| French | 1.25 | 0.0001 |
| English | 1.25 | 0.0 |
| Finnish | 1.69 | 0.0007 |
| Swedish | 1.43 | 0.0 |

Breakdown of subword fertility rate and unknown portion per language for the 64k vocab:

| Language | Subword fertility | Unknown portion |
|---|---|---|
| German | 1.31 | 0.0004 |
| French | 1.16 | 0.0001 |
| English | 1.17 | 0.0 |
| Finnish | 1.54 | 0.0007 |
| Swedish | 1.32 | 0.0 |

Final pretraining corpora

We upsample Swedish and Finnish to ~27GB. The final statistics for all pretraining corpora are shown here:

| Language | Size |
|---|---|
| German | 28GB |
| French | 27GB |
| English | 24GB |
| Finnish | 27GB |
| Swedish | 27GB |

Total size is 130GB.

Pretraining

Details about the pretraining are coming soon.

Acknowledgments

Research supported with Cloud TPUs from Google's TPU Research Cloud (TRC) program, previously known as TensorFlow Research Cloud (TFRC). Many thanks for providing access to the TRC ❤️

Thanks to the generous support from the Hugging Face team, it is possible to download both cased and uncased models from their S3 storage 🤗
