djovak/embedic-base

Say hello to Embedić, a group of new text embedding models fine-tuned for the Serbian language!

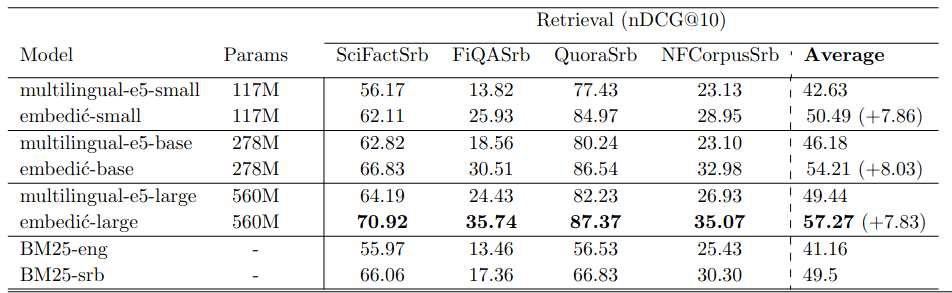

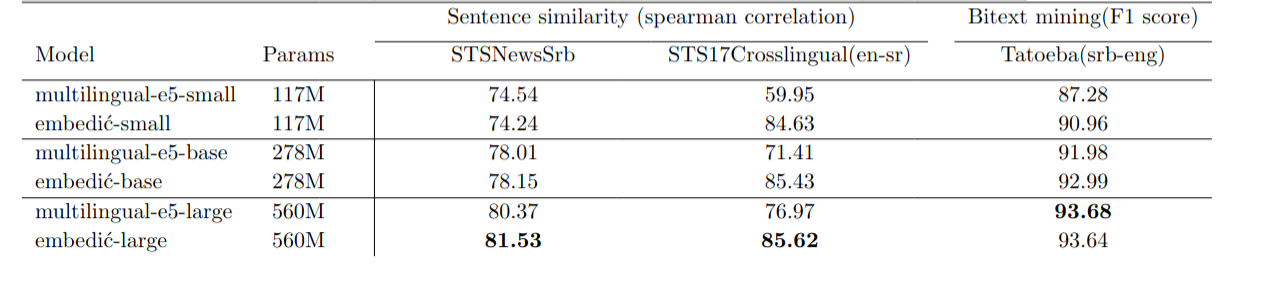

These models are particularly useful for Information Retrieval and RAG. The images above show benchmark performance: you can beat the previous SOTA with 5x fewer parameters!

Although specialized for Serbian (Cyrillic and Latin scripts), Embedić is cross-lingual (it understands English too). So you can embed English docs, Serbian docs, or a combination of the two :)

This is a sentence-transformers model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

Usage (Sentence-Transformers)

Using this model becomes easy when you have sentence-transformers installed:

pip install -U sentence-transformers

Then you can use the model like this:

from sentence_transformers import SentenceTransformer

sentences = ["ko je Nikola Tesla?", "Nikola Tesla je poznati pronalazač", "Nikola Jokić je poznati košarkaš"]

model = SentenceTransformer('djovak/embedic-base')

embeddings = model.encode(sentences)

print(embeddings)
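
To use the embeddings for semantic search, you can rank documents by their similarity to a query. The sketch below assumes the vectors are unit-length (the architecture listed under Full Model Architecture ends with a Normalize() layer), so a dot product is equivalent to cosine similarity. The helper `rank_by_similarity` and the toy 3-dimensional vectors are illustrative stand-ins, not part of the model or the library.

```python
def rank_by_similarity(query_emb, doc_embs):
    """Return (doc_index, score) pairs sorted from most to least similar.

    Assumes every vector is already L2-normalized, so the dot product
    equals the cosine similarity.
    """
    scores = [
        (i, sum(q * d for q, d in zip(query_emb, doc)))
        for i, doc in enumerate(doc_embs)
    ]
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


# Toy unit vectors standing in for real embeddings (hypothetical values).
query = [1.0, 0.0, 0.0]
docs = [
    [0.6, 0.8, 0.0],  # somewhat related to the query
    [0.0, 0.0, 1.0],  # unrelated to the query
    [0.8, 0.6, 0.0],  # most related to the query
]

ranking = rank_by_similarity(query, docs)
print(ranking)
```

With the real model, a call such as `rank_by_similarity(embeddings[0], embeddings[1:])` would rank the two statements against the question "ko je Nikola Tesla?".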

Important usage notes

- "ošišana latinica" (using c instead of ć, etc.) significantly decreases search quality

- The usage of uppercase letters for named entities can significantly improve search quality
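
Because missing diacritics hurt search quality, it can help to flag suspicious inputs before embedding them. The check below is a rough heuristic and an assumption of this sketch, not something shipped with Embedić: it flags Latin-script text that is reasonably long yet contains none of the letters č, ć, š, ž, đ. The function name and the `min_letters` threshold are hypothetical. It will also flag plain English text, which the model handles fine, so treat a positive result as a warning rather than an error.

```python
SERBIAN_DIACRITICS = set("čćšžđČĆŠŽĐ")


def looks_like_osisana_latinica(text, min_letters=40):
    """Heuristically flag Latin-script text with no Serbian diacritics.

    Short texts are never flagged, because many valid short sentences
    simply contain no diacritic letters. `min_letters` is an arbitrary,
    hypothetical threshold.
    """
    letters = [ch for ch in text if ch.isalpha()]
    if len(letters) < min_letters:
        return False
    # Cyrillic text cannot be "ošišana latinica", so skip it.
    if any("\u0400" <= ch <= "\u04FF" for ch in letters):
        return False
    return not any(ch in SERBIAN_DIACRITICS for ch in letters)


stripped = "Nikola Tesla je poznati pronalazac koji je ziveo u Americi"
proper = "Nikola Tesla je poznati pronalazač koji je živeo u Americi"

print(looks_like_osisana_latinica(stripped))
print(looks_like_osisana_latinica(proper))
```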

Training

Embedić models are fine-tuned from multilingual-e5 models and they come in 3 sizes (small, base, large).

Training was done on a single 4070 Ti Super GPU.

Training has 3 steps: distillation, training on (query, text) pairs, and finally fine-tuning with triplets.
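
The loss function used in the triplet step is not stated here. As one common possibility, the sketch below computes a margin-based triplet loss on cosine distance for a single (query, positive, negative) triplet. The function names, the margin value, and the toy vectors are all illustrative assumptions, not the actual training code.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def triplet_margin_loss(anchor, positive, negative, margin=0.5):
    """Loss is zero once the negative is farther from the anchor
    than the positive by at least `margin` (hypothetical value)."""
    gap = cosine_distance(anchor, positive) - cosine_distance(anchor, negative)
    return max(0.0, gap + margin)


# Toy 2-dimensional vectors standing in for real embeddings.
query = [1.0, 0.0]
relevant = [0.8, 0.6]    # cosine distance to query: 0.2
irrelevant = [0.0, 1.0]  # cosine distance to query: 1.0

# Correctly ordered triplet: 0.2 - 1.0 + 0.5 is negative, so the loss is 0.
easy = triplet_margin_loss(query, relevant, irrelevant)
# Swapped triplet: 1.0 - 0.2 + 0.5 = 1.3, so the loss is positive.
hard = triplet_margin_loss(query, irrelevant, relevant)
print(easy, hard)
```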

Evaluation

Model description:

| Model Name | Dimension | Sequence Length | Parameters |
|---|---|---|---|
| intfloat/multilingual-e5-small | 384 | 512 | 117M |
| djovak/embedic-small | 384 | 512 | 117M |
| intfloat/multilingual-e5-base | 768 | 512 | 278M |
| djovak/embedic-base | 768 | 512 | 278M |
| intfloat/multilingual-e5-large | 1024 | 512 | 560M |
| djovak/embedic-large | 1024 | 512 | 560M |

BM25-ENG - Elasticsearch with English analyzer

BM25-SRB - Elasticsearch with Serbian analyzer

evaluation results

Evaluation covers 3 tasks: Information Retrieval, Sentence Similarity, and Bitext Mining. I personally translated the STS17 cross-lingual evaluation dataset and spent $6,000 on the Google Translate API to translate 4 IR evaluation datasets into Serbian.

Evaluation datasets will be published as part of the MTEB benchmark in the near future.

Contact

If you have any questions or suggestions related to this project, you can open an issue or pull request. You can also email me at novakzivanic@gmail.com

Full Model Architecture

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

(2): Normalize()

)
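
The Pooling and Normalize stages listed above can be illustrated in plain Python: average the token vectors that are not padding, then scale the result to unit length. This is a simplified sketch of what `pooling_mode_mean_tokens` and `Normalize()` do conceptually, using toy 2-dimensional token vectors instead of the real 768-dimensional ones. The function names are hypothetical, and the library itself operates on batched tensors rather than Python lists.

```python
import math


def mean_pool(token_vectors, attention_mask):
    """Average the token vectors whose mask value is 1 (padding is ignored)."""
    kept = [vec for vec, m in zip(token_vectors, attention_mask) if m == 1]
    dim = len(token_vectors[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


def l2_normalize(vector):
    """Scale a vector to unit Euclidean length."""
    norm = math.sqrt(sum(x * x for x in vector))
    return [x / norm for x in vector]


# Three toy token vectors; the last one is padding and is masked out.
tokens = [[2.0, 0.0], [4.0, 8.0], [9.0, 9.0]]
mask = [1, 1, 0]

pooled = mean_pool(tokens, mask)   # mean of the first two vectors
embedding = l2_normalize(pooled)   # unit-length sentence embedding
print(pooled, embedding)
```

Because the output is unit-length, the dot product of two such embeddings equals their cosine similarity.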

License

Embedić models are licensed under the MIT License. The released models can be used for commercial purposes free of charge.
